Predicting Student Success in English Tests Using Artificial Intelligence Algorithm

Abstract

1. Introduction

2. Materials and Methods

Research Method

- Step 1: Data collection and cleaning

- Step 2: Prediction model construction

- Step 3: Evaluation

- Step 4: Comparison

- Step 5: Discussion and Conclusions
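
The results compare classifiers on the original data and on an oversampled version of it (Case A and Case B in the performance table). The source does not say which oversampling technique was used, so the following is only a minimal sketch of one common option, random oversampling with replacement. The function name `random_oversample`, the seed, and the toy rows are assumptions for illustration, not the authors' procedure.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen rows of smaller classes until all classes
    have as many rows as the largest class. Original rows are kept first."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, count in counts.items():
        pool = [r for r, y in zip(rows, labels) if y == cls]
        # Draw with replacement; nothing is drawn for the largest class.
        extra = [rng.choice(pool) for _ in range(target - count)]
        out_rows.extend(extra)
        out_labels.extend([cls] * len(extra))
    return out_rows, out_labels


# Hypothetical single-feature rows: four passing and two failing students.
rows = [[6.5], [7.0], [8.0], [9.0], [3.0], [4.0]]
labels = [1, 1, 1, 1, 0, 0]
bal_rows, bal_labels = random_oversample(rows, labels)
print(Counter(bal_labels))
```

A usual precaution with this kind of resampling is to apply it to the training portion only, so that duplicated rows cannot leak into the test set. Whether the study did so is not stated.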

3. Results

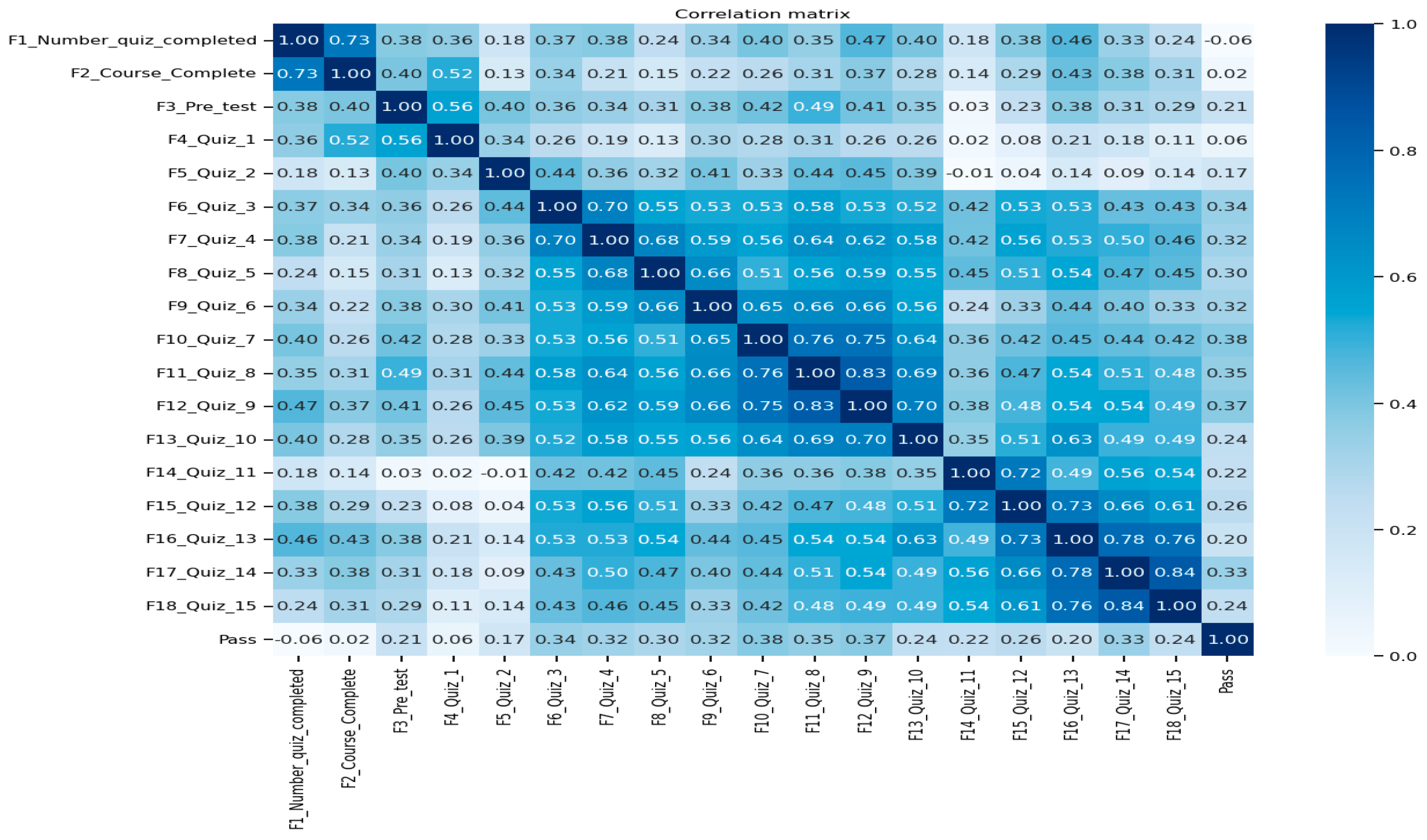

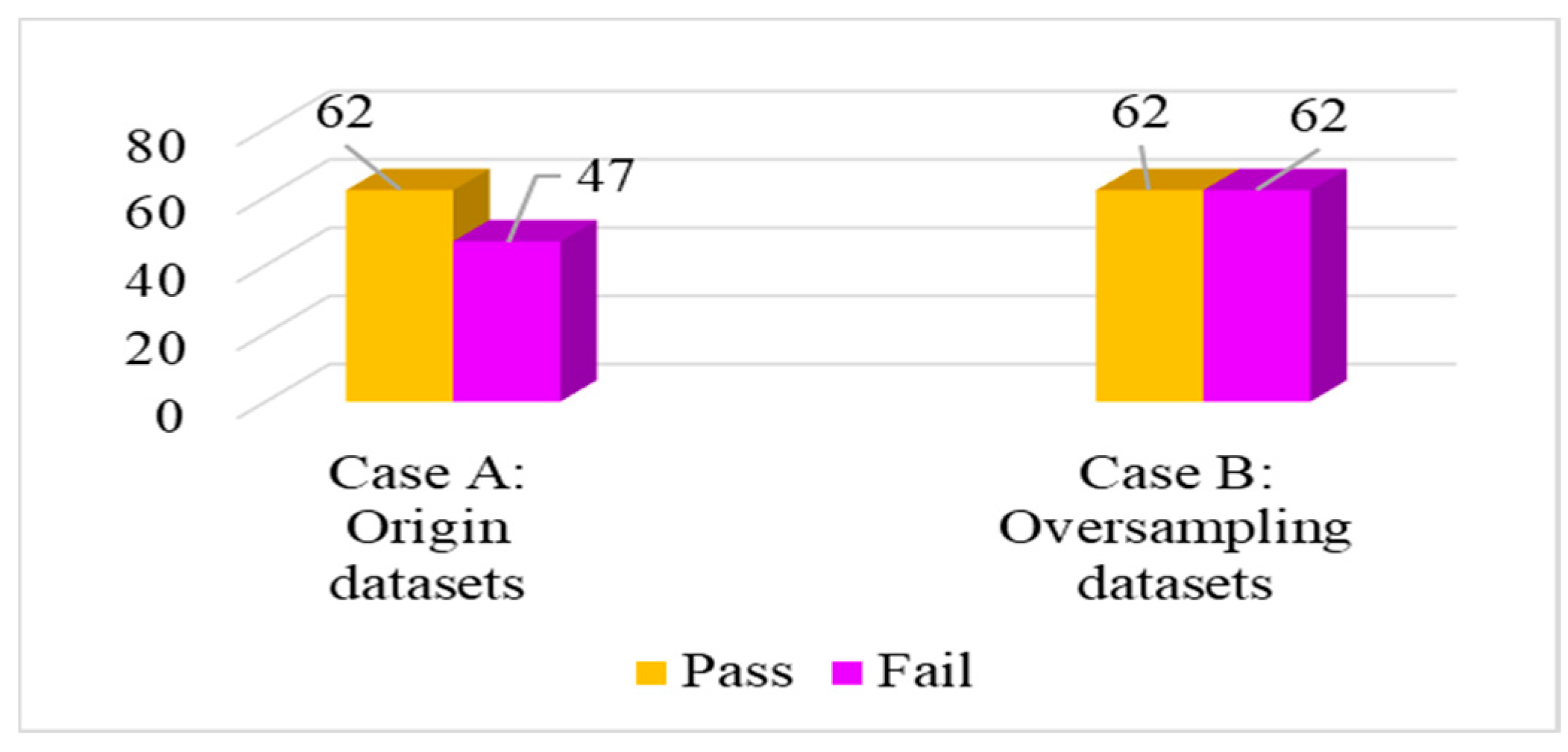

3.1. Classification

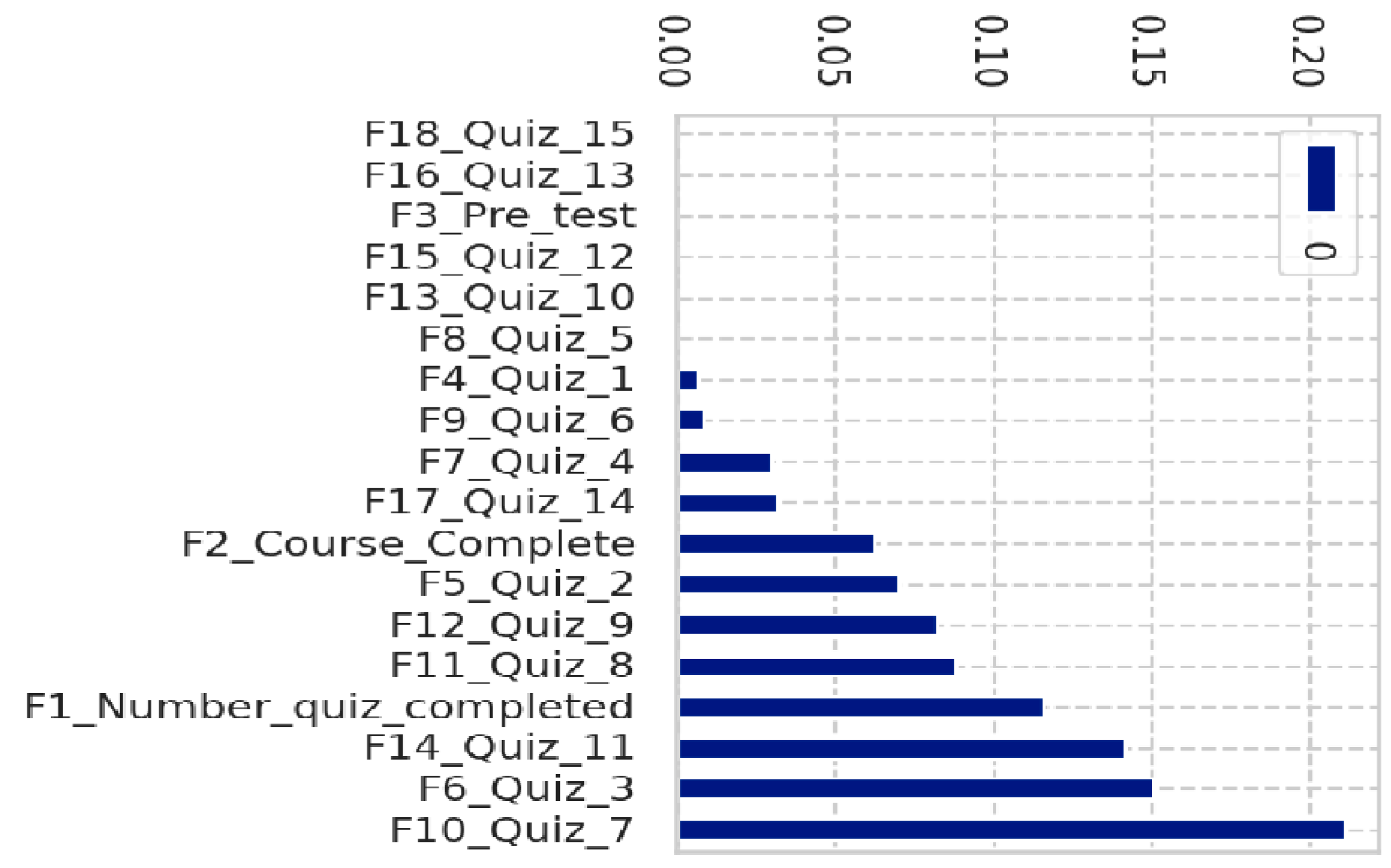

3.2. Feature Selection

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Factor ID | Factor | Description and Transformed Values |
|---|---|---|
| F1 | Number of quizzes students completed in the OSC | 5–15 |
| F2 | Whether the student completed the OSC | 1 = Yes, 0 = No |
| F3 | Pre-test score | 0–10 |
| F4 | Quiz 1 score | 0–7.71 |
| F5 | Quiz 2 score | 0–8.57 |
| F6 | Quiz 3 score | 0–9.71 |
| F7 | Quiz 4 score | 0–9.14 |
| F8 | Quiz 5 score | 0–9.43 |
| F9 | Quiz 6 score | 0–9.43 |
| F10 | Quiz 7 score | 0–9.14 |
| F11 | Quiz 8 score | 0–9.14 |
| F12 | Quiz 9 score | 0–9.44 |
| F13 | Quiz 10 score | 0–10 |
| F14 | Quiz 11 score | 0–9.71 |
| F15 | Quiz 12 score | 0–10 |
| F16 | Quiz 13 score | 0–10 |
| F17 | Quiz 14 score | 0–9.71 |
| F18 | Quiz 15 score | 0–10 |
| Output: Pass | English final exam score | 1 = Pass: 5.0–10; 0 = Fail: <5.0 |
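
The binary encodings in the table (F2 and the output label) can be sketched as follows. The threshold of 5.0 comes from the table. The 0–10 range check, the function names, and the sample scores are assumptions made for illustration.

```python
PASS_THRESHOLD = 5.0  # from the table: Pass if score >= 5, Fail if score < 5.0


def encode_pass(final_score: float) -> int:
    """Return 1 (Pass) for a final exam score of 5.0 or more, else 0 (Fail).

    Assumes a 0-10 grading scale, as implied by the table's upper bound of 10.
    """
    if not 0.0 <= final_score <= 10.0:
        raise ValueError(f"score outside the assumed 0-10 scale: {final_score}")
    return 1 if final_score >= PASS_THRESHOLD else 0


def encode_completion(completed: bool) -> int:
    """F2: 1 = the student completed the OSC, 0 = the student did not."""
    return 1 if completed else 0


# Hypothetical final exam scores, not taken from the study's data.
scores = [4.9, 5.0, 7.25, 10.0]
labels = [encode_pass(s) for s in scores]
print(labels)  # [0, 1, 1, 1]
```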

| Dataset | Number of Students | Percentage |
|---|---|---|
| Training set | 87 | 80% |
| Testing set | 22 | 20% |
| Total | 109 | 100% |
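
The counts in the table follow from an 80/20 holdout split of 109 students: 80% of 109 is 87.2, which truncates to 87 training records and leaves 22 for testing. The sketch below reproduces those counts. The shuffling, the seed, and the function name `holdout_split` are assumptions, since the source does not describe how students were assigned to each set.

```python
import random

N_STUDENTS = 109      # total from the table
TRAIN_FRACTION = 0.8  # 80% training, 20% testing


def holdout_split(n, train_fraction, seed):
    """Shuffle the indices 0..n-1 and cut them into train and test parts."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = int(n * train_fraction)  # int() truncates 87.2 to 87
    return indices[:n_train], indices[n_train:]


train_idx, test_idx = holdout_split(N_STUDENTS, TRAIN_FRACTION, seed=0)
print(len(train_idx), len(test_idx))  # 87 22
```

The exact shares are 87/109 ≈ 79.8% and 22/109 ≈ 20.2%, which the table rounds to 80% and 20%.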

| Classification Case | Classifier | Overall Accuracy (%), Mean | Overall Accuracy (%), SD | F1-Score (%), Mean | F1-Score (%), SD | AUC (%), Mean | AUC (%), SD |
|---|---|---|---|---|---|---|---|
| Case A: Original datasets | CART | 69.50 | 7.45 | 59.17 | 8.59 | 65.67 | 6.65 |
| Case A: Original datasets | LR | 68.33 | 5.85 | 60.17 | 8.64 | 81.50 | 6.38 |
| Case B: Oversampling datasets | CART | 74.67 | 7.87 | 76.50 | 10.99 | 89.33 | 6.02 |
| Case B: Oversampling datasets | LR | 74.67 | 7.87 | 76.50 | 10.99 | 73.67 | 4.93 |
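
The three metrics in the table have standard definitions, sketched below in plain Python. The functions return fractions in [0, 1], whereas the table reports percentages. The toy labels, scores, and per-run accuracies are hypothetical, and the use of the sample standard deviation is an assumption, because the source does not say how the SD values were computed or how many runs were averaged.

```python
from statistics import mean, stdev


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auc(y_true, scores, positive=1):
    """Probability that a random positive outscores a random negative
    (ties count as half), which equals the area under the ROC curve."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def summarize(values):
    """Mean and sample standard deviation over repeated runs."""
    return mean(values), stdev(values)


# Hypothetical test labels and predicted pass probabilities.
y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.3, 0.5, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print(accuracy(y_true, y_pred), f1_score(y_true, y_pred), auc(y_true, scores))
print(summarize([0.70, 0.65, 0.75]))  # hypothetical per-run accuracies
```

Accuracy and F1 depend on the 0.5 decision threshold, while AUC only uses the ranking of the scores. This is why a classifier can rank well on one metric and poorly on another, as the CART and LR rows in the table show.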

| Rank Order | Factor |
|---|---|
| 1 | F10. Quiz 7 score |
| 2 | F6. Quiz 3 score |
| 3 | F14. Quiz 11 score |
| 4 | F1. Number of quizzes completed in the OSC |
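
The source does not state how this ranking was produced. One common approach with tree-based classifiers such as CART is to rank each factor by the largest decrease in Gini impurity that a single threshold split on it achieves. The sketch below illustrates that idea only. The function names and the toy columns (`quiz_a`, `quiz_b`, `quiz_c`) are hypothetical, and this should not be read as the authors' feature selection method.

```python
def gini(labels):
    """Gini impurity of a list of binary (0/1) labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)  # same as 1 - p**2 - (1 - p)**2


def best_split_gain(values, labels):
    """Largest Gini impurity decrease over all thresholds on one feature."""
    parent, n, best = gini(labels), len(labels), 0.0
    for threshold in sorted(set(values))[:-1]:
        # Rows with value <= threshold go left, the rest go right.
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        child = (len(left) * gini(left) + len(right) * gini(right)) / n
        best = max(best, parent - child)
    return best


def rank_features(columns, labels):
    """Feature names sorted from most to least informative."""
    gains = {name: best_split_gain(vals, labels)
             for name, vals in columns.items()}
    return sorted(gains, key=gains.get, reverse=True)


# Hypothetical quiz scores for six students; 1 = pass, 0 = fail.
labels = [0, 0, 0, 1, 1, 1]
columns = {
    "quiz_a": [2, 3, 4, 6, 7, 8],  # separates the classes perfectly
    "quiz_b": [5, 1, 6, 2, 7, 3],  # weakly related to the label
    "quiz_c": [1, 2, 6, 5, 7, 8],  # partially separates the classes
}
print(rank_features(columns, labels))
```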


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huynh-Cam, T.-T.; Truong, D.T.; Chen, L.-S.; Lu, T.-C.; Nalluri, V. Predicting Student Success in English Tests Using Artificial Intelligence Algorithm. Eng. Proc. 2025, 98, 19. https://doi.org/10.3390/engproc2025098019
