Abstract

Cattle identification is important in livestock management, and advanced techniques are required to identify cattle without ear tagging, branding, or any other identification method that harms the animal. This study aims to develop computer vision techniques to identify cattle based on their unique muzzle print features. The developed method employed the YOLOv8 object detection model to detect the cattle’s muzzle. Following detection, the captured muzzle image underwent image processing. Contrast-limited adaptive histogram equalization (CLAHE) was used to enhance the image quality and obtain a prominent and detailed image of the muzzle print. The oriented FAST and rotated BRIEF (ORB) feature extraction algorithm was applied to detect key points and compute the descriptors that are crucial for the cattle identification process. The fast library for approximate nearest neighbors (FLANN) was then employed to identify individual cattle by comparing the descriptors of query images with those stored in the database. To validate the developed method, its performance was evaluated on 25 different cattle. In total, 22 out of 25 were correctly identified, resulting in an overall accuracy of 88%.

1. Introduction

Biometrics are widely used to recognize and verify individuals based on their unique physiological characteristics. Beyond fingerprints, studies have explored other unique features of individuals that cannot be easily duplicated [1,2,3,4]. Like humans, animals possess unique features [5,6]. Like human fingerprints, cattle muzzle patterns remain the same as the animals grow and age [7].

Animal recognition is essential in veterinary medicine and agriculture, aiding in tracking and monitoring large numbers of animals. There are various methods for livestock identification, such as ear tags, tattoos, hot and freeze branding, ID collars, microchips, and visual markers [8]. These methods are labor-intensive and potentially harm the animals involved [9,10]. Moreover, errors, theft, and duplication risks exist, as identical marks can be shared among animals from different herds [11].

Recent studies have proposed methods for identifying individual animals, several of which rely on nose prints [7]. For instance, one system employed the You Only Look Once (YOLO) and scale-invariant feature transform (SIFT) algorithms to identify dogs by analyzing nose print features [5]. Another study developed an ear recognition system [12]. The YOLO algorithm is used for real-time detection [13,14,15], which enables the automation of muzzle detection. The SIFT algorithm has been used to extract features from muzzle prints in multiple studies [16,17]. However, there has not been significant research on improving cattle identification. While automated animal identification has been studied, cattle owners still need a device that can be used in the field. A user-friendly device would address their need for digital record keeping and effective cattle identification.

This research aims to develop a system for cattle identification using the YOLOv8, oriented FAST and rotated BRIEF (ORB), and fast library for approximate nearest neighbors (FLANN) algorithms. We created a system capable of capturing images of cattle muzzles. The system enables users to record and keep track of cattle information. YOLOv8 detects the muzzle so that its pattern is captured reliably, the ORB algorithm extracts features from the captured image, and the FLANN algorithm matches those features to identify the cattle. The system was evaluated using a confusion matrix.

Cattle identification is crucial for ownership verification, animal welfare, and theft prevention. The developed system enhances traceability to track and monitor cattle easily. Using animal biometrics, machine learning algorithms, and computer vision, the system provides a basis for further development of biometrics.

2. Methodology

To develop the system, we conducted a comprehensive literature review of biometrics and cattle identification to gather the necessary knowledge. Then, the potential use of the automated system for cattle identification was identified. Computer vision and algorithms were selected to improve hardware performance. The system was tested for its hardware and software.

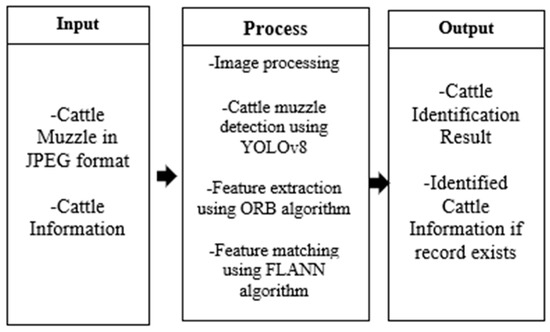

2.1. Conceptual Framework

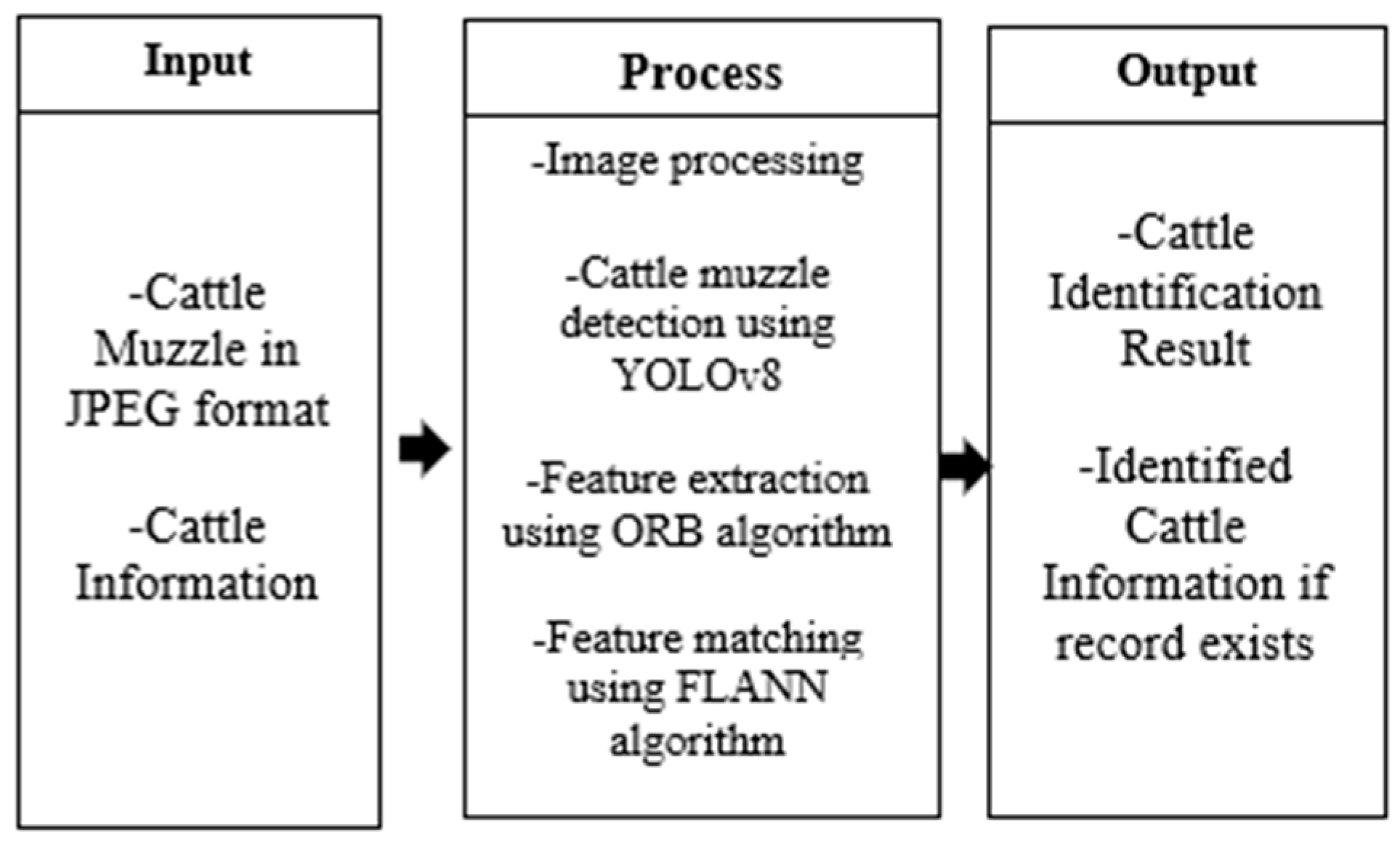

Figure 1 presents the conceptual framework of the development process. Cattle muzzle images are captured in JPEG format using a Raspberry Pi camera. YOLOv8 runs during image capture to confirm that a muzzle is present in the frame. The ID, owner, weight, age, gender, and health information are recorded for each animal. The captured image is preprocessed and then undergoes feature extraction using the ORB algorithm. The FLANN algorithm matches the extracted features with those in the database. If a match is found, the system displays the animal’s information.

Figure 1. Conceptual framework of the development process.

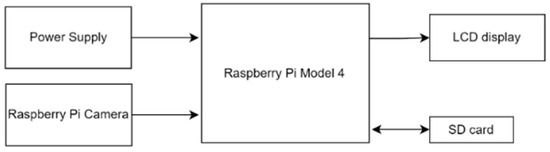

2.2. Hardware

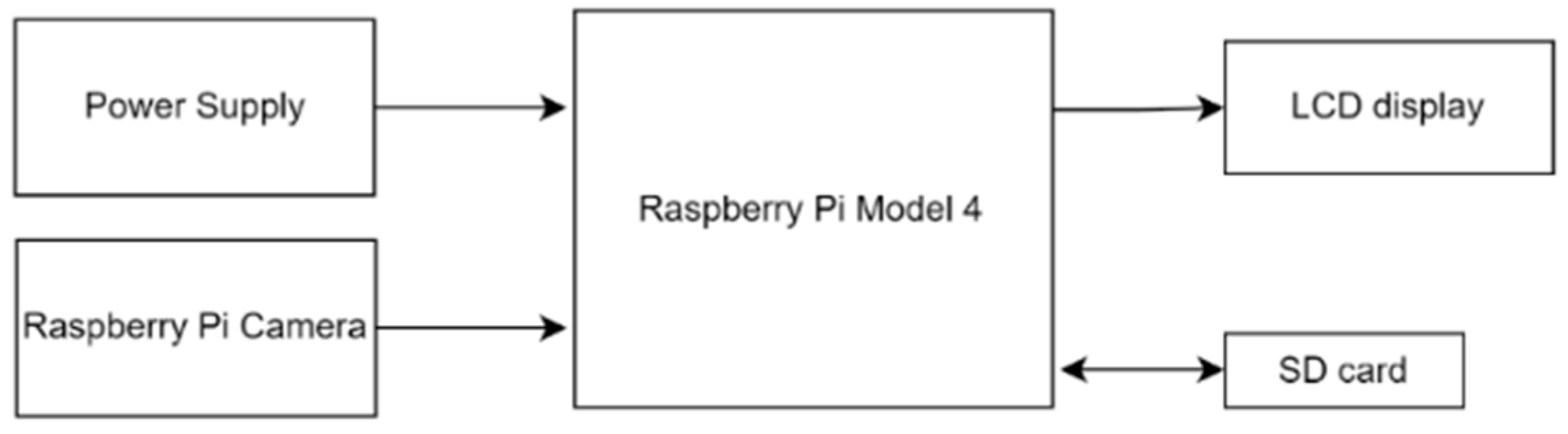

Figure 2 shows the hardware components of the system: a power supply, a Raspberry Pi 4, a Raspberry Pi camera, an SD card, and a Raspberry Pi 7-inch touchscreen. The Raspberry Pi 4 runs the cattle identification software. The Raspberry Pi camera captures the cattle muzzle images. The SD card stores the Raspberry Pi’s operating system, the software, and the dataset for cattle identification. The touchscreen is the user interface, through which users enter cattle information and view results.

Figure 2. Hardware components.

2.3. Software

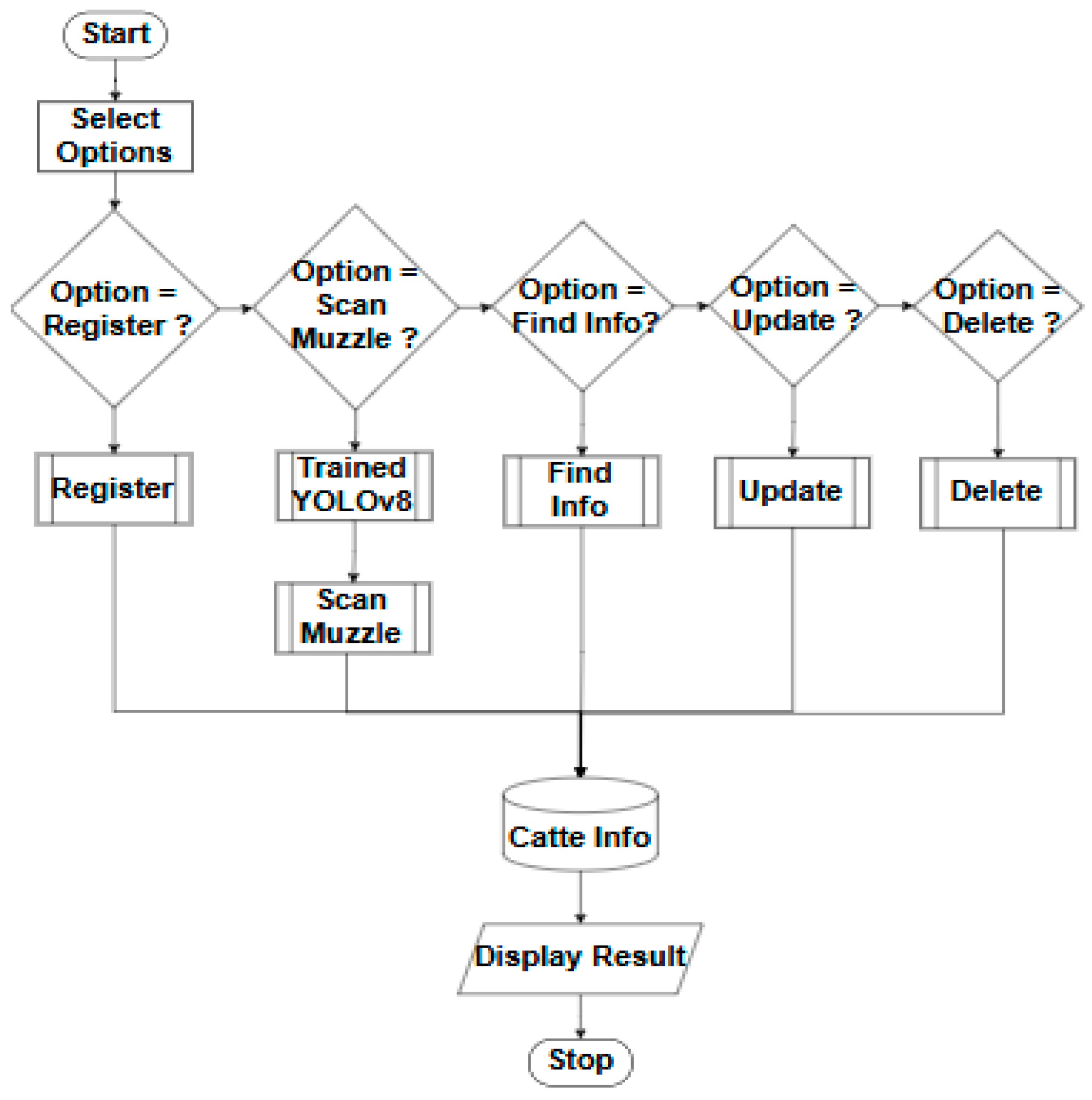

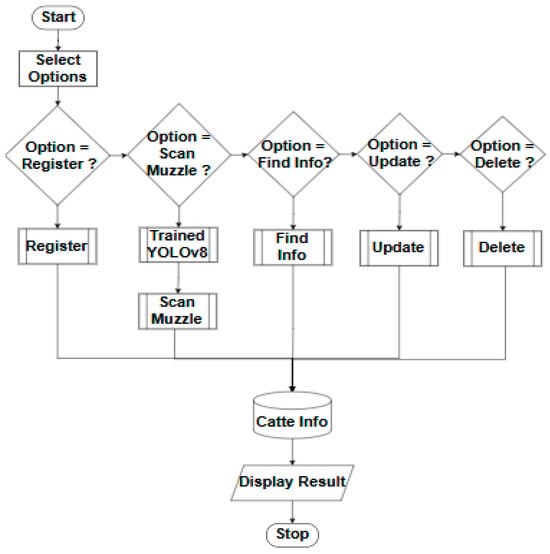

Figure 3 shows the operation of the developed cattle identification system. The system provides five options for the user: Register, Scan Muzzle, Find Information, Update Information, and Delete Information. To register an animal, the user enters its information, such as cattle ID, breed, age, weight, and health information, and the cattle muzzle is detected by YOLOv8. To prevent duplicate records, the system checks whether the cattle ID is already registered in the database. If the cattle ID or the muzzle features are found in the database, the system rejects the registration and notifies the user. Three muzzle images of each animal are registered in the system.

The Scan Muzzle option identifies an already registered animal from an image of its muzzle. Once the YOLOv8 model detects a muzzle, the system prompts the user to capture the muzzle image. After the image is captured, the system proceeds to the Find Match module, where the identification process starts. The ORB algorithm extracts the features, and FLANN compares them with those in the database. If there is a match, the related information appears. The other options, Find Information, Update Information, and Delete Information, are used to manage the cattle records stored in the system.

Figure 3. System operation.
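The registration checks described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `CattleRecord` and `Registry` names, the in-memory dictionary, and the `muzzle_matcher` hook (which stands in for the ORB and FLANN comparison) are all assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class CattleRecord:
    cattle_id: str
    breed: str
    age_years: float
    weight_kg: float
    health: str
    # One descriptor set per registered muzzle image.
    muzzle_descriptors: list = field(default_factory=list)


class Registry:
    """In-memory stand-in for the system's database (hypothetical)."""

    IMAGES_PER_ANIMAL = 3  # the text registers three muzzle images per animal

    def __init__(self, muzzle_matcher):
        # muzzle_matcher(descriptors, record) -> bool is a hypothetical hook
        # standing in for the ORB + FLANN comparison.
        self._records = {}
        self._muzzle_matcher = muzzle_matcher

    def register(self, record):
        if len(record.muzzle_descriptors) != self.IMAGES_PER_ANIMAL:
            raise ValueError("exactly three muzzle images are required")
        # Reject a cattle ID that is already in the database.
        if record.cattle_id in self._records:
            raise ValueError(f"cattle ID {record.cattle_id!r} is already registered")
        # Reject muzzle features that match an existing record.
        for existing in self._records.values():
            if any(self._muzzle_matcher(d, existing) for d in record.muzzle_descriptors):
                raise ValueError(
                    f"muzzle already registered under cattle ID {existing.cattle_id!r}"
                )
        self._records[record.cattle_id] = record

    def find(self, cattle_id):
        return self._records.get(cattle_id)

    def delete(self, cattle_id):
        return self._records.pop(cattle_id, None) is not None
```

Keeping the matcher as an injected function means the duplicate check can be exercised with a trivial comparison before the real feature matcher is available.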

2.4. YOLOv8 Algorithm

YOLOv8 was trained on a dataset of cattle images. Each image in the dataset was annotated with a bounding box around the muzzle, which is the region of interest (ROI). Training continued until satisfactory performance was obtained, after which the trained YOLOv8 model was used to detect cattle muzzles.
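The text does not state which annotation format was used. Assuming the plain-text label format commonly used with YOLO models (one line per box: class index, then the box center and size normalized by the image dimensions), a pixel-coordinate muzzle box could be converted as in the sketch below. The function name and the single class index `0` are illustrative assumptions.

```python
def to_yolo_label(box, image_width, image_height, class_id=0):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= image_width and 0 <= y_min < y_max <= image_height):
        raise ValueError("box must lie inside the image and have positive area")
    # Center and size are expressed as fractions of the image dimensions.
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A muzzle box covering the central half of a 640 x 480 image.
print(to_yolo_label((160, 120, 480, 360), 640, 480))
# -> 0 0.500000 0.500000 0.500000 0.500000
```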

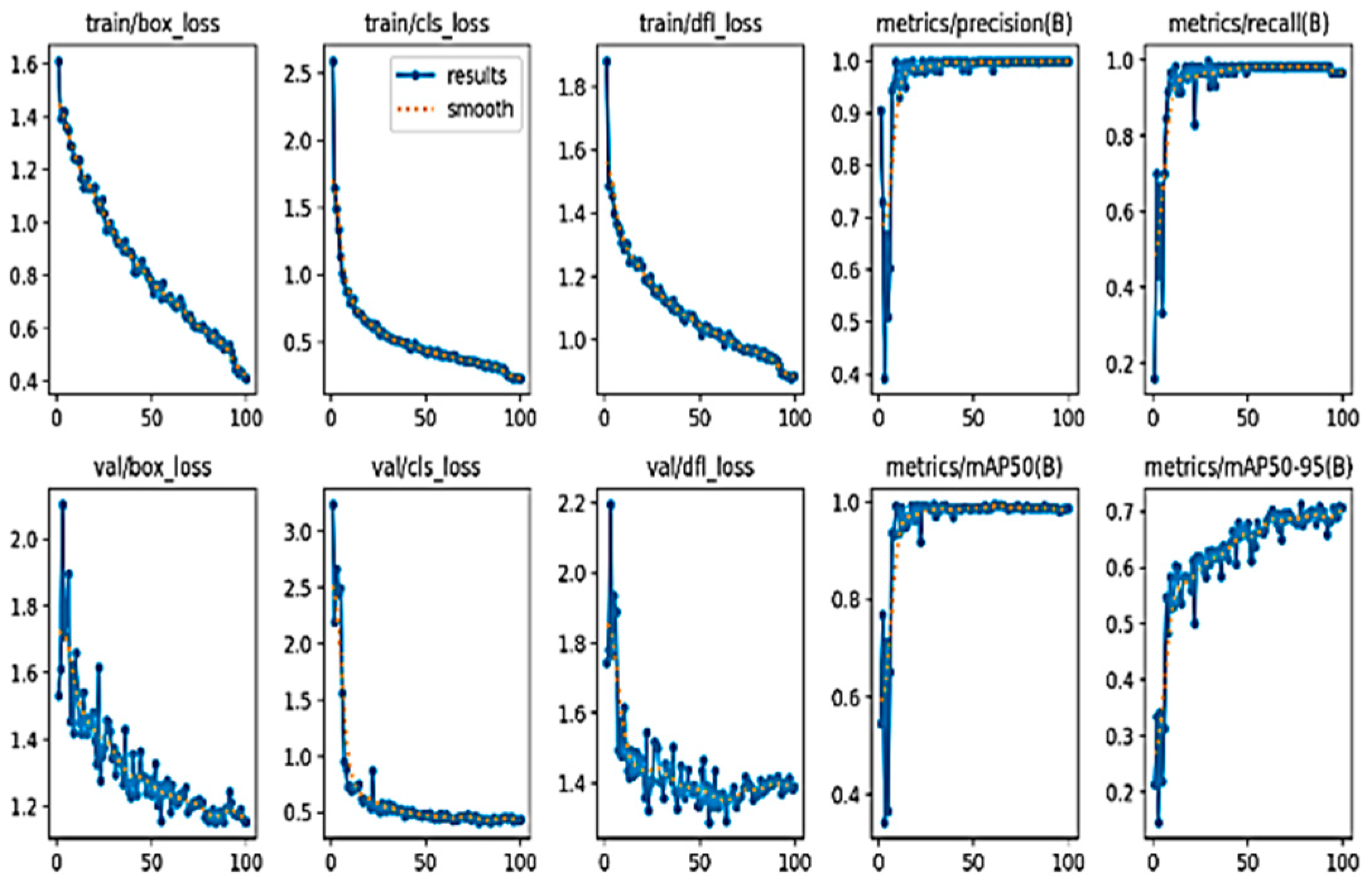

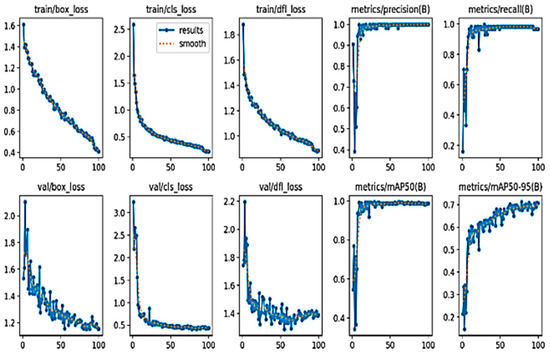

Figure 4 shows the training metrics of the YOLOv8 model. The graphs show the model’s learning progress: the decreasing loss indicates that its predictions became more accurate as training proceeded. On evaluation, the trained model achieved a precision of 0.95, a recall of 0.90, and a mean average precision (mAP) of 0.92 at @0.5 intersection over union (IoU) and 0.67 at @0.95 IoU. These results validate the effectiveness of YOLOv8 for muzzle detection.

Figure 4. YOLOv8 training results shown by the blue line.
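For readers unfamiliar with the detection metrics reported above, the sketch below shows how IoU between a predicted and an annotated box is computed, and how precision and recall follow from counts of true positives, false positives, and false negatives. The boxes and counts are made-up values for illustration and are not taken from the study.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# A prediction shifted by 2 px against a 10 x 10 ground-truth box.
overlap = iou((0, 0, 10, 10), (2, 0, 12, 10))
print(round(overlap, 3), overlap >= 0.5)  # -> 0.667 True
print(precision_recall(tp=9, fp=1, fn=3))  # -> (0.9, 0.75)
```

A detection counts as a true positive at a given threshold only if its IoU with the annotated box reaches that threshold, which is why a stricter threshold lowers the reported mAP.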

2.5. ORB and FLANN Algorithm

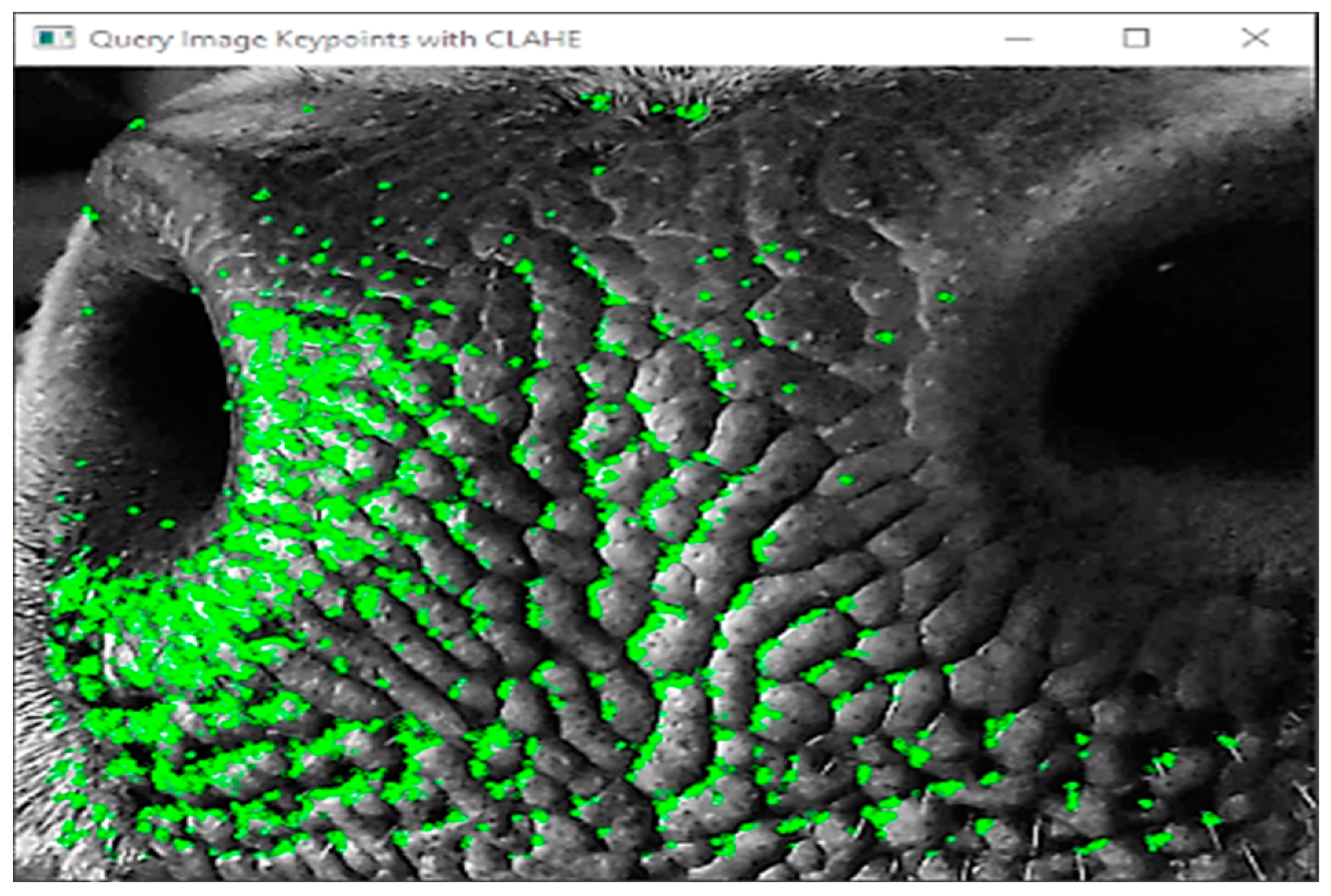

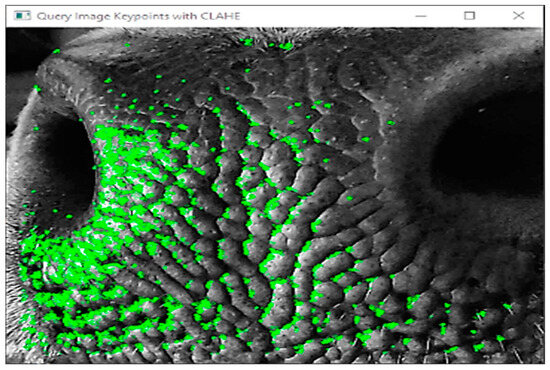

Before feature extraction, the query image was enhanced using CLAHE. The details of the muzzle print were amplified to make unique patterns more distinguishable. Features such as ridges and shapes in the muzzle became more pronounced, so that key points could be detected more reliably. After the image was enhanced, the ORB algorithm detected key points in the query image and computed their descriptors. These features were compared with those registered in the system. A ratio test was applied to discard poor matches. The system computed the similarity and displayed the results if the score exceeded the threshold.

Figure 5 shows the result of feature extraction on a cattle muzzle image after CLAHE was applied. The enhanced contrast made the muzzle patterns more distinct, which improved the effectiveness of ORB in detecting key points. The green dots indicate the detected key points, that is, the locations of distinctive characteristics of the image, such as corners, blobs, or edges of the muzzle. Once the key points were detected, each was encoded as a binary vector by the rotated BRIEF descriptor, which describes the image patch around the key point relative to its orientation.

Figure 5. Query image key points.
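Because ORB descriptors are binary vectors, two descriptors are compared by their Hamming distance (the number of differing bits). The sketch below illustrates this together with the ratio test mentioned earlier. It uses an exact brute-force search in place of FLANN's approximate index, one-byte descriptors instead of ORB's 32 bytes, and a ratio of 0.75; all three are simplifying assumptions, since the text does not give the values used.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return bin(int.from_bytes(a, "big") ^ int.from_bytes(b, "big")).count("1")


def good_matches(query, stored, ratio=0.75):
    """Brute-force 2-nearest-neighbour search followed by the ratio test.

    Returns (query_index, stored_index, distance) for each retained match.
    """
    kept = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, s), si) for si, s in enumerate(stored))
        if len(ranked) < 2:
            continue  # the ratio test needs two neighbours
        (best, best_i), (second, _) = ranked[0], ranked[1]
        # Keep the match only if it is clearly better than the runner-up.
        if best < ratio * second:
            kept.append((qi, best_i, best))
    return kept


query = [b"\x0f", b"\xaa", b"\x3c"]
stored = [b"\x0f", b"\xf0", b"\xab"]
print(good_matches(query, stored))  # -> [(0, 0, 0), (1, 2, 1)]
```

The third query descriptor is equally distant from two stored descriptors, so the ratio test discards it as ambiguous.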

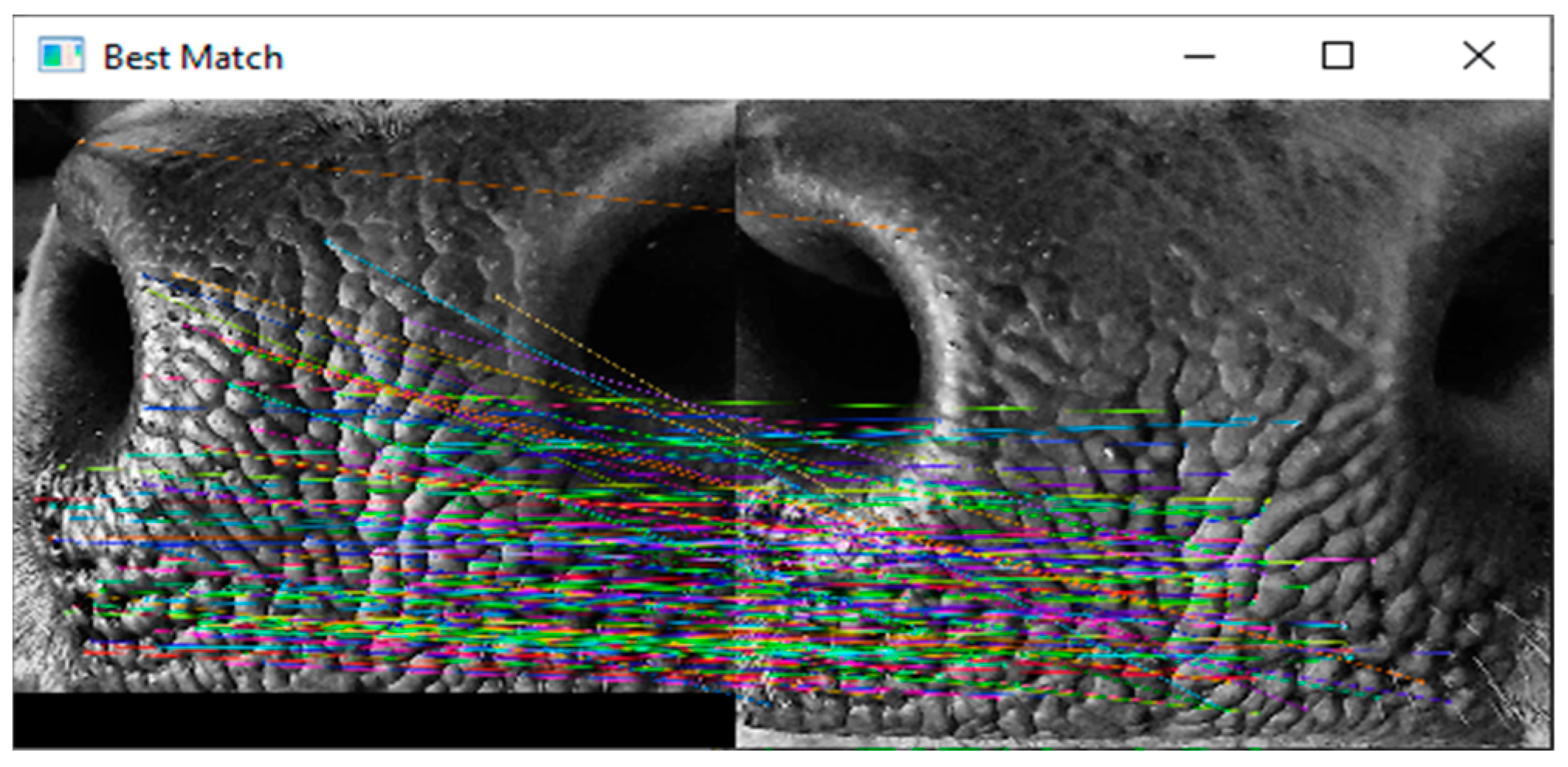

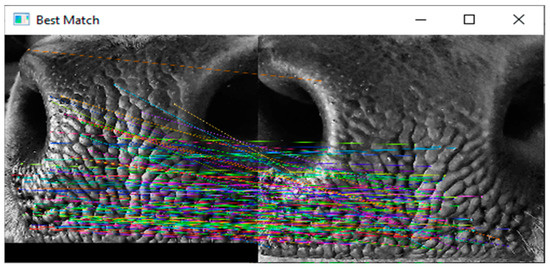

Once ORB generated the descriptors for the query image, FLANN compared them with the descriptors stored in the database (Figure 6). Potential matches were identified by calculating the distance between descriptors and finding the closest ones. The left picture in Figure 6 is the query image, and the right picture is the image stored in the database. The colored lines connecting the two images indicate matched key points: each line links a feature extracted by ORB in one image to the corresponding feature in the other. To find the best match, the average distance of the matches and the number of good matches were calculated for each database image. The database image with the lowest average distance and the highest number of good matches was considered the best match. In addition, thresholds on both the average distance and the number of good matches had to be met for an image to qualify as a match.

Figure 6. Key point matches.
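The selection rule described above can be sketched as follows. The text does not say how the two criteria are combined when they disagree, nor what the thresholds were, so this sketch assumes candidates are ranked by average distance first and by number of good matches second, and the threshold values are placeholders.

```python
def pick_best_match(candidates, max_avg_distance=50.0, min_good_matches=15):
    """Pick the registered animal that best matches the query image.

    candidates maps a cattle ID to the list of good-match distances obtained
    against that animal's stored image. Returns None when no candidate
    satisfies both thresholds, i.e. the animal is treated as unregistered.
    """
    scored = []
    for cattle_id, distances in candidates.items():
        if not distances:
            continue
        avg = sum(distances) / len(distances)
        if avg <= max_avg_distance and len(distances) >= min_good_matches:
            # Lower average distance wins; more good matches breaks ties.
            scored.append((avg, -len(distances), cattle_id))
    return min(scored)[2] if scored else None


candidates = {"C001": [10, 12, 14], "C002": [30, 32], "C003": []}
print(pick_best_match(candidates, max_avg_distance=20, min_good_matches=3))  # -> C001
print(pick_best_match(candidates, max_avg_distance=5, min_good_matches=3))   # -> None
```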

2.6. Experimental Setup

The experiment was conducted using the developed hardware, as shown in Figure 7. The muzzle image was captured first. For safety, a safe distance was kept from the cattle. The cattle were handled by their owners, which reduced both the risk of accidents and the movement of the animals during image capture.

Figure 7. Experiment on the farm.

3. Results and Discussions

Data were gathered for YOLOv8 model training and system testing. Images of cattle muzzles were obtained from a nearby cattle farm and the internet. For YOLO training, 200 images of different cattle were used. The model was tested with 25 different cattle, as shown in Table 1.

Table 1. Testing data.

Based on the data and the identification results, a confusion matrix was created for this system. The confusion matrix illustrated the system’s performance in matching registered cattle based on their muzzle prints. In the matrix, each row corresponds to the actual cattle class, while columns correspond to the predicted class. Correct predictions are presented on the diagonal in green. The system incorrectly identified 2 out of 20 cases. Matching accuracy was calculated using (1).
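Equation (1) is not reproduced in this text. Assuming it is the usual ratio of correct identifications to total trials, it would take the following form:

```latex
\begin{equation}
\text{Accuracy} = \frac{\text{number of correct identifications}}{\text{total number of trials}} \times 100\%
\end{equation}
```

Under that assumption, 2 errors in 20 registered cases correspond to $18/20 \times 100\% = 90\%$.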

The confusion matrix showed how well the system identified the registered cattle. The system correctly identified 90% of the registered cattle (18 of 20). The system distinguished unique muzzle prints, and the feature extraction and matching processes were effective (Table 2).

Table 2. Confusion matrix results for identification of registered cattle.

Table 3 shows the matching results of the system. The trial number, actual cattle, predicted cattle, and remarks are presented in the table. The system was tested on 25 different cattle, of which 20 were registered and 5 were not. The system returned the correct result for 22 of the 25 cattle.

Table 3. System data output.
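A confusion matrix and an overall accuracy can be derived from a trial log of (actual, predicted) pairs like the one summarized in Table 3. The sketch below is illustrative only: the cattle IDs, the `"unregistered"` label, and the short trial list are hypothetical and do not reproduce the study's data.

```python
from collections import Counter


def confusion_matrix(trials):
    """Build a matrix with actual labels as rows and predicted labels as columns."""
    labels = sorted({label for pair in trials for label in pair})
    counts = Counter(trials)
    matrix = [[counts[(actual, predicted)] for predicted in labels] for actual in labels]
    return labels, matrix


def accuracy(trials):
    """Percentage of trials in which the predicted label equals the actual one."""
    correct = sum(1 for actual, predicted in trials if actual == predicted)
    return 100.0 * correct / len(trials)


# Hypothetical log: three registered animals and two unregistered ones.
trials = [
    ("C01", "C01"),
    ("C02", "C02"),
    ("C03", "C05"),                    # registered animal matched to the wrong ID
    ("unregistered", "unregistered"),  # correctly rejected
    ("unregistered", "C02"),           # unregistered animal falsely matched
]
labels, matrix = confusion_matrix(trials)
print(labels)
print(accuracy(trials))  # -> 60.0
```

Applied to the totals reported in the text, 22 correct outcomes in 25 trials give 88%.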

Table 4 presents the confusion matrix for the identification of the system. The matrix shows the system’s ability to identify both categories correctly. The system achieved 88% overall accuracy in distinguishing between registered and unregistered cattle.

Table 4. Confusion matrix for registered and unregistered cattle identification.

4. Conclusions and Recommendations

We developed a cattle identification system utilizing the unique features of cattle muzzle patterns. The system successfully identified the muzzle patterns by integrating YOLOv8 for muzzle detection, ORB for feature extraction, and FLANN for feature matching. Its overall accuracy was 88%. The system was designed to be user friendly so that cattle farmers can use it in the field. The system simplified the cattle identification process, making it less labor-intensive than traditional methods. It showed potential as a reliable method for non-invasive livestock identification.

There is room for improvement in further research. Higher accuracy may be obtained by refining the feature extraction algorithms, improving the image quality, and using a larger dataset. Additionally, advanced techniques are needed to improve performance in diverse environmental conditions. Exploring other biometric features, such as facial recognition or body markings, may also enable more accurate cattle identification. Implementing real-time functionality would further improve the system’s usability.

Author Contributions

Conceptualization, A.J.B., K.M.A.P. and M.V.C.C.; methodology, A.J.B. and K.M.A.P.; software, A.J.B. and K.M.A.P.; validation, A.J.B., K.M.A.P. and M.V.C.C.; writing—original draft preparation, A.J.B. and K.M.A.P.; writing—review and editing, A.J.B., K.M.A.P. and M.V.C.C.; visualization, A.J.B. and K.M.A.P.; supervision, M.V.C.C.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Adcock, S.J.J.; Tucker, C.B.; Weerasinghe, G.; Rajapaksha, E. Branding Practices on Four Dairies in Kantale, Sri Lanka. Animals 2018, 8, 137.

- Bodkhe, J.; Dighe, H.; Gupta, A.; Bopche, L. Animal Identification. In Proceedings of the 2018 International Conference on Advanced Computation and Telecommunication, ICACAT 2018, Bhopal, India, 28–29 December 2018.

- Hayer, J.; Nysar, D.; Schmitz, A.; Leubner, C.; Heinemann, C.; Steinhoff-Wagner, J. Wound lesions caused by ear tagging in unweaned calves: Assessing the prevalence of wound lesions and identifying risk factors. Animal 2022, 16, 100454.

- Awad, A.I. From classical methods to animal biometrics: A review on cattle identification and tracking. Comput. Electron. Agric. 2016, 123, 423–435.

- Caya, M.V.; Arturo, E.D.; Bautista, C.Q. Dog Identification System Using Nose Print Biometrics. In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM 2021, Manila, Philippines, 28–30 November 2021.

- Dulal, R.; Zheng, L.; Kabir, M.A.; McGrath, S.; Medway, J.; Swain, D.; Swain, W. Automatic Cattle Identification using YOLOv5 and Mosaic Augmentation: A Comparative Analysis. In Proceedings of the 2022 International Conference on Digital Image Computing: Techniques and Applications, DICTA 2022, Sydney, Australia, 30 November–2 December 2022.

- Petersen, W. The Identification of the Bovine by Means of Nose-Prints. J. Dairy Sci. 1922, 5, 249–258.

- Das, A.; Sinha, S.S.S.; Chandru, S. Identification of a Zebra Based on Its Stripes through Pattern Recognition. In Proceedings of the 2021 International Conference on Design Innovations for 3Cs Compute Communicate Control, ICDI3C 2021, Bangalore, India, 10–11 June 2021; pp. 120–122.

- Hirsch, M.; Graham, E.F.; Dracy, A.E. A Classification for the Identification of Bovine Noseprints. J. Dairy Sci. 1952, 35, 314–319.

- Aleluia, V.M.T.; Soares, V.N.G.J.; Caldeira, J.M.L.P.; Rodrigues, A.M.; Polytechnic Institute of Castelo Branco. Livestock Monitoring: Approaches, Challenges and Opportunities. Int. J. Eng. Adv. Technol. 2022, 11, 67–76.

- Caya, M.V.C.; Caringal, M.E.C.; Manuel, K.A.C. Tongue Biometrics Extraction Based on YOLO Algorithm and CNN Inception. In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM 2021, Manila, Philippines, 28–30 November 2021.

- Balangue, R.D.; Padilla, C.D.M.; Linsangan, N.B.; Cruz, J.P.T.; Juanatas, R.A.; Juanatas, I.C. Ear Recognition for Ear Biometrics Using Integrated Image Processing Techniques via Raspberry Pi. In Proceedings of the 2022 IEEE 14th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM 2022, Boracay Island, Philippines, 1–4 December 2022.

- Chan, C.J.L.; Reyes, E.J.A.; Linsangan, N.B.; Juanatas, R.A. Real-time Detection of Aquarium Fish Species Using YOLOv4-tiny on Raspberry Pi 4. In Proceedings of the 4th IEEE International Conference on Artificial Intelligence in Engineering and Technology, IICAIET 2022, Kota Kinabalu, Malaysia, 13–15 September 2022.

- Legaspi, K.R.B.; Sison, N.W.S.; Villaverde, J.F. Detection and Classification of Whiteflies and Fruit Flies Using YOLO. In Proceedings of the 2021 13th International Conference on Computer and Automation Engineering, ICCAE 2021, Melbourne, Australia, 20–22 March 2021; pp. 1–4.

- Bresolin, T.; Ferreira, R.; Reyes, F.; Van Os, J.; Dórea, J. Assessing optimal frequency for image acquisition in computer vision systems developed to monitor feeding behavior of group-housed Holstein heifers. J. Dairy Sci. 2023, 106, 664–675.

- Alde, R.B.A.; De Castro, K.D.L.; Caya, M.V.C. Identification of Musaceae Species using YOLO Algorithm. In Proceedings of the 2022 5th International Seminar on Research of Information Technology and Intelligent Systems, ISRITI 2022, Yogyakarta, Indonesia, 8–9 December 2022; pp. 666–671.

- Caya, M.V.C.; Durias, J.P.H.; Linsangan, N.B.; Chung, W.-Y. Recognition of tongue print biometrie using binary robust independent elementary features. In Proceedings of the 2017 IEEE 9th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Manila, Philippines, 1–3 December 2017; pp. 1–4.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).