Abstract

We propose a fast eye-tracking method that takes a depth image and a gray-scale infrared (IR) image as input and relies on traditional image processing algorithms. Given an IR image that contains one face and the corresponding depth image, the method locates the real coordinate of the eyes relative to the camera at high speed (>90 frames per second) and with an acceptable error. The method takes advantage of the depth information to quickly locate the face and shrink the search region, which both decreases the error rate and accelerates the operation. After the face region is found, less complicated computer vision algorithms can be used, which keeps the execution speed high. Refinement mechanisms based on feature extraction and edge distribution are used to locate the eyeballs and to transform the pixel coordinate on the image to the real coordinate.

1. Introduction

Eye tracking is used to find the position of the eyeballs. The eye-tracking method in this study is designed for applications that use the coordinate (x, y, z) of the eyes [1]. The coordinate is determined immediately after the camera captures an image that contains the eyes and is updated for every frame. The frame rate of the camera is therefore the lower bound of the required execution speed. The camera we used captures 90 frames per second, and the developed method processes 270–320 frames per second, which is about three times the capture rate.

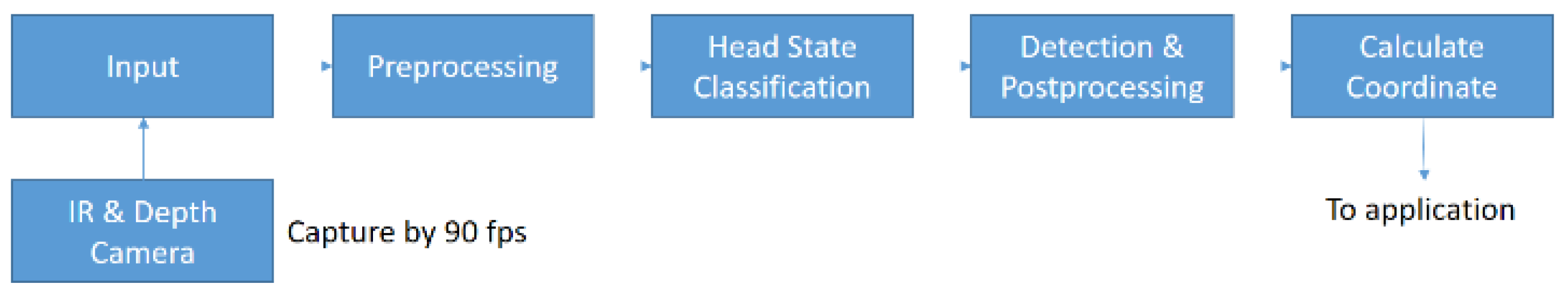

The flow chart and the visualization of the method in this study are shown in Figure 1 and Figure 2. The output three-dimensional coordinate for the sample images was (1.43514, 4.08796, 58.1) cm. The method achieves high execution speed by shrinking the image in the preprocessing step. Because rotation of the face or wearing a mask affects the detection of the eyes, head-state classification was used to check the orientation of the head and whether a mask was worn. Finally, we determined the position of the eyes on the shrunken image through detection and postprocessing. The midpoint of the detected pair of eyes was then transformed into the coordinate system defined by the camera.

Figure 1.

Flow chart of the developed method.

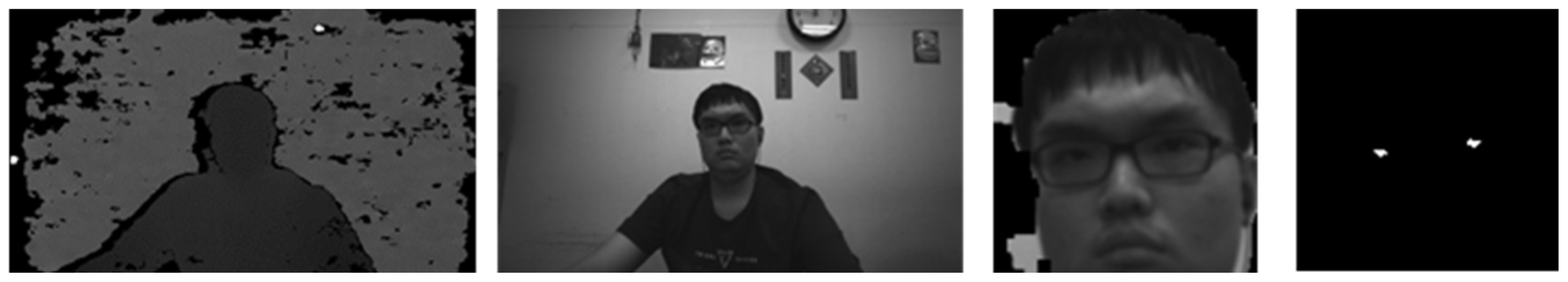

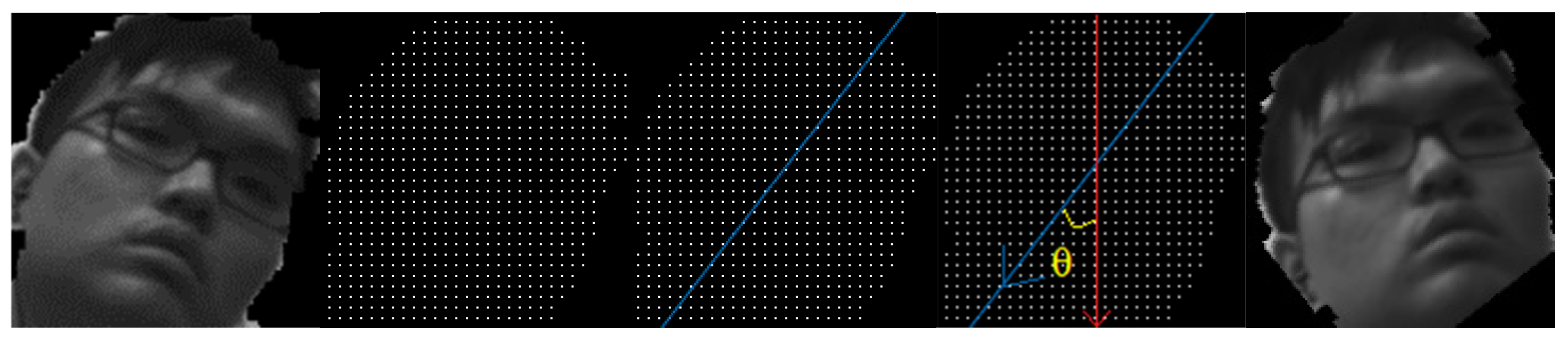

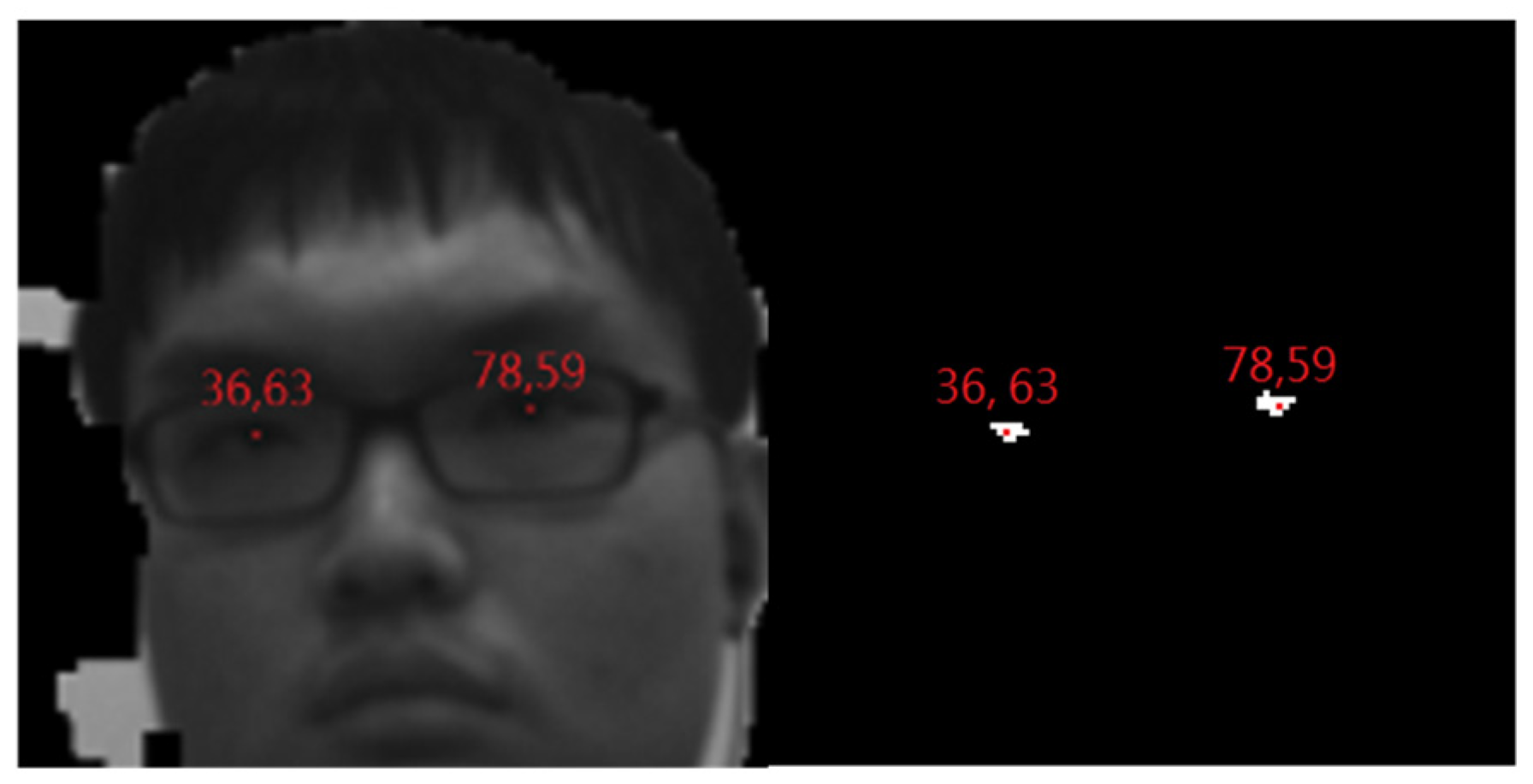

Figure 2.

Depth input and IR input, after preprocessing, after detection, and postprocessing from left to right.

2. Method

2.1. Input Images

In the method, two input images were captured at a rate of 90 frames per second. The pixel values of the depth image ranged from 0 to 65,535 (16 bits), and those of the gray-scale infrared image ranged from 0 to 255. Because the two images were captured at the same resolution and at the same time, the positions and sizes of objects in them were identical. Therefore, if the midpoint of the eyeballs is at (r, c) on the IR image, the depth value at (r, c) on the depth image can be used to compute the 3D coordinate. This property also allows the face to be located from the depth image so that the detection region can be reduced in image preprocessing.

2.2. Preprocessing

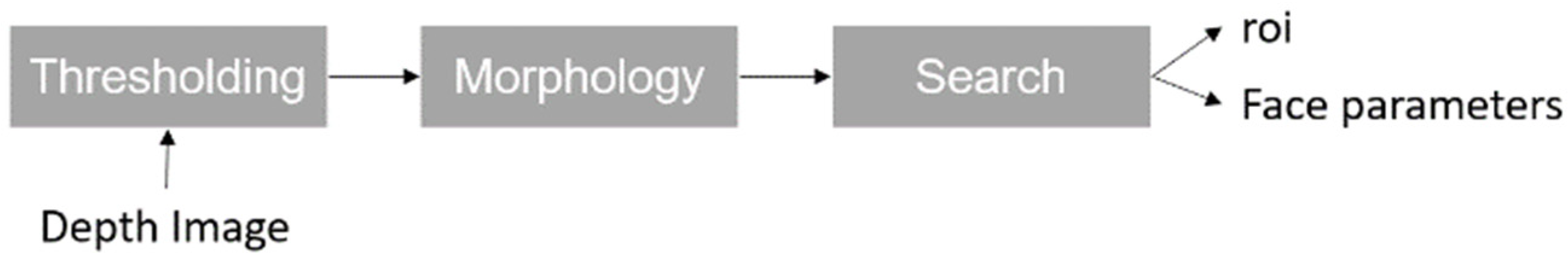

The size of the input image was 848 columns by 480 rows, which was too large for the system to achieve high execution speed. Apart from the speed, the error rate also had to be reduced. By isolating the facial region on the images, confusing objects such as the background or the user’s clothes were excluded. Figure 3 shows the flow chart of image preprocessing. The region of interest (ROI) contained the facial region. Other parameters such as the average intensity were also recorded (Table 1). The eye-tracking method assumes that the distance between the camera and the user is less than 700 mm; thus, the thresholding was defined as (1).
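
The thresholding step can be illustrated with a minimal sketch. Since (1) is not reproduced here, the code assumes that depth values are in millimetres, that a value of 0 marks a pixel without a valid measurement, and that the output is a binary mask. The function name `threshold_depth` and the sample values are hypothetical.

```python
def threshold_depth(depth, max_mm=700):
    """Return a binary mask that keeps pixels closer than max_mm.

    Assumption: depth is in millimetres and 0 means "no measurement".
    """
    return [[1 if 0 < d < max_mm else 0 for d in row] for row in depth]


# Tiny 2 x 4 depth image (values in mm, made up for illustration).
depth = [
    [0, 1200, 650, 640],
    [900, 620, 610, 0],
]
mask = threshold_depth(depth)
```

Only the pixels between 0 and 700 mm survive, so a distant background is dropped before any further processing.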

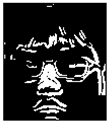

Figure 3.

Flow chart of preprocessing.

Table 1.

Searching step of preprocessing.

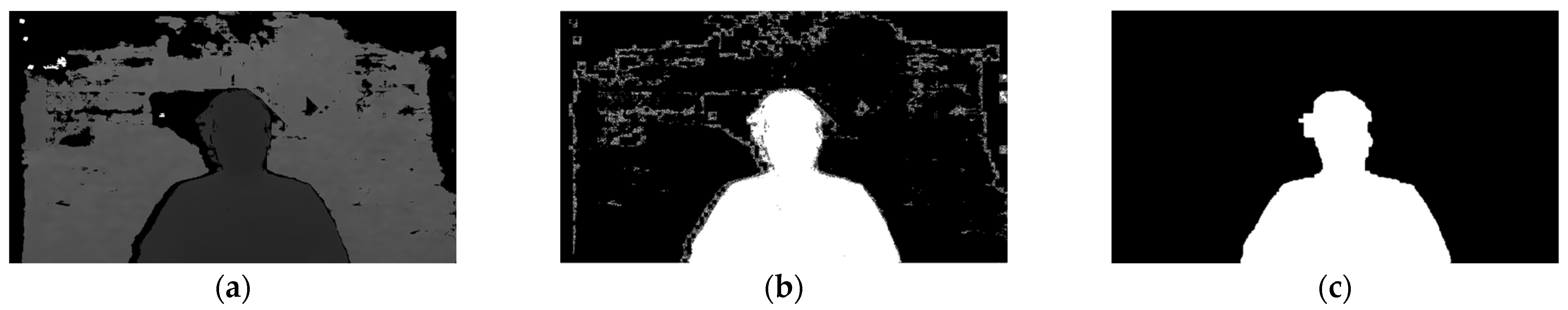

Then, we used binary opening and closing to remove noise and fill holes in the image by using (2) and (3).
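
As an illustration of how opening removes isolated noise and closing fills small holes, the following sketch implements both operations on a binary image stored as nested lists. The 3 × 3 rectangular kernel and the handling of the image border (pixels outside the image are ignored) are assumptions, since (2) and (3) are not reproduced here.

```python
def _morph(img, kh, kw, require_all):
    """Apply a kh x kw rectangular kernel; out-of-image pixels are ignored."""
    rows, cols = len(img), len(img[0])
    rh, rw = kh // 2, kw // 2
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                img[rr][cc]
                for rr in range(max(0, r - rh), min(rows, r + rh + 1))
                for cc in range(max(0, c - rw), min(cols, c + rw + 1))
            ]
            out[r][c] = int(all(window)) if require_all else int(any(window))
    return out


def erode(img, kh=3, kw=3):
    return _morph(img, kh, kw, True)


def dilate(img, kh=3, kw=3):
    return _morph(img, kh, kw, False)


def opening(img, kh=3, kw=3):
    """Erosion followed by dilation: removes blobs smaller than the kernel."""
    return dilate(erode(img, kh, kw), kh, kw)


def closing(img, kh=3, kw=3):
    """Dilation followed by erosion: fills holes smaller than the kernel."""
    return erode(dilate(img, kh, kw), kh, kw)
```

Calling `opening(img, 1, 3)` uses a kernel with one row, which removes structures narrower than three columns while keeping wide horizontal ones.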

We searched the images as shown in Figure 4 to determine the ROI and other outputs. The output image is denoted “F” and has M rows and N columns (Figure 5).
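
The search for the ROI can be sketched in its simplest form as the tightest bounding box around the non-zero pixels of the binary mask, followed by a crop. This is a simplified stand-in under stated assumptions: the actual search steps are those listed in Table 1, which are not reproduced here, and the names `bounding_box` and `crop` are hypothetical.

```python
def bounding_box(mask):
    """Return (top, bottom, left, right) of the non-zero pixels, or None."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], rows[-1], cols[0], cols[-1]


def crop(img, box):
    """Cut the inclusive box out of img; the result has M rows and N columns."""
    top, bottom, left, right = box
    return [row[left:right + 1] for row in img[top:bottom + 1]]


mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = bounding_box(mask)
roi = crop(mask, box)
```

Because the depth and IR images are pixel-aligned, the same box can be used to crop the IR image.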

Figure 4.

(a) Input depth image, (b) after thresholding, and (c) after morphology.

Figure 5.

Sample outputs of the preprocessing step.

2.3. Head State Classification

Whether the face was rotated and whether a mask was worn both changed how the detection process had to be conducted. For example, if the head is tilted, the pair of eyeballs does not lie at the same horizontal position. Therefore, it is necessary to check the state of the face before detecting the eyeballs. We represented each type of state as a state variable. The names and brief descriptions of the state variables are listed in Table 2. The variables pitch, roll, and yaw are represented as “char”, and the variable mask is represented as “bool”. Figure 6 shows examples of four head states.

Table 2.

State variables.

Figure 6.

(a) Roll right, (b) pitch up, (c) yaw left, and (d) mask on.

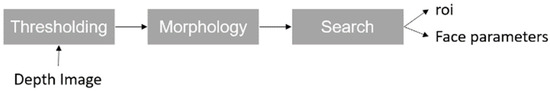

2.3.1. Roll

In step 7 of procedure 1 in Table 1, a tilting angle θ was determined. The head was then rotated by −θ so that it looked as if it were not tilted. Figure 7 shows the result of aligning the orientation of the head with the vertical axis by rotation.
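
One way to estimate a tilting angle and cancel it is sketched below. It assumes that θ is taken as the angle between the vertical axis and the principal axis (in the PCA sense) of the foreground pixel coordinates; step 7 of Table 1 is not reproduced here, so this may differ from the actual procedure. Points are (x, y) pairs with x as the column and y as the row, and the function names are hypothetical.

```python
import math


def roll_angle(points):
    """Angle in radians between the principal axis of the points and the y-axis."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    s_xx = sum((x - mean_x) ** 2 for x, _ in points) / n
    s_yy = sum((y - mean_y) ** 2 for _, y in points) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Closed-form orientation of the major axis of a 2 x 2 covariance matrix,
    # measured from the y-axis towards the x-axis.
    return 0.5 * math.atan2(2.0 * s_xy, s_yy - s_xx)


def cancel_roll(points, theta):
    """Rotate the points about their centroid so the principal axis is vertical."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    return [
        (cx + (x - cx) * cos_t - (y - cy) * sin_t,
         cy + (x - cx) * sin_t + (y - cy) * cos_t)
        for x, y in points
    ]


# Synthetic elongated shape tilted by 20 degrees (made up for illustration).
alpha = math.radians(20)
tilted = [(t * math.sin(alpha), t * math.cos(alpha)) for t in range(-10, 11)]
theta = roll_angle(tilted)
upright = cancel_roll(tilted, theta)
```

After the correction, all points of the synthetic shape share the same x coordinate, which corresponds to the canceled "roll" effect.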

Figure 7.

Canceled effect of “roll”.

2.3.2. Pitch-Up, Yaw, and Mask

We checked whether the head was lifted or turned, or whether a mask was worn, using the edge distribution of the lower part of F. We treated the mask together with the rotations as part of the face state because all of them affected the edge distribution on the bottom part of the face.

To obtain the edges of F, we applied the Sobel operator (4) to F [3], and the edge strength was calculated using (5). I is the gray-scale image whose edges are to be found, and S is the edge distribution, stored as an integer type. In (6), Eg is the edge distribution represented by a binary image, and the threshold is the 80th percentile of S, found by a histogram. The histogram was used because finding the percentile by sorting has a lower bound of O(n log n). Therefore, the 1-pixels of Eg represented the top 20% of pixels most likely to be edges.
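
The edge extraction can be sketched as follows. Because (4) to (6) are not reproduced here, the code makes several assumptions: the standard 3 × 3 Sobel kernels are used, the integer edge strength is |Gx| + |Gy|, border pixels are set to 0, and a pixel is marked as an edge when its strength is strictly above the percentile. The percentile is found with a counting histogram, which avoids sorting.

```python
def sobel_strength(img):
    """Integer edge strength |Gx| + |Gy| (border pixels are left at 0)."""
    rows, cols = len(img), len(img[0])
    s = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = (img[r - 1][c + 1] + 2 * img[r][c + 1] + img[r + 1][c + 1]
                  - img[r - 1][c - 1] - 2 * img[r][c - 1] - img[r + 1][c - 1])
            gy = (img[r + 1][c - 1] + 2 * img[r + 1][c] + img[r + 1][c + 1]
                  - img[r - 1][c - 1] - 2 * img[r - 1][c] - img[r - 1][c + 1])
            s[r][c] = abs(gx) + abs(gy)
    return s


def percentile_by_histogram(s, fraction=0.8):
    """Smallest level such that at least `fraction` of the pixels are <= level."""
    values = [v for row in s for v in row]
    hist = [0] * (max(values) + 1)
    for v in values:
        hist[v] += 1
    target = fraction * len(values)
    cumulative = 0
    for level, count in enumerate(hist):
        cumulative += count
        if cumulative >= target:
            return level
    return len(hist) - 1


def binarize_edges(s, threshold):
    """Binary edge image: 1 where the strength is strictly above the threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in s]
```

With many tied strength values, the strict comparison can mark fewer than 20% of the pixels, so the "top 20%" is only approximate in this sketch.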

The edge distributions of the lower part of different cases are shown in Table 3.

Table 3.

Edge distribution in different cases.

When the mask was not worn and the face was not lifted, there were “thick” edges at the bottom part of the image because the mouth and nose were there. When the mask was not worn and the head was lifted, there were no edges in the bottommost region, but several edges of the mouth or nose were visible below the middle of the image. When the mask was worn and the head was not rotated, a horizontal edge was found across the face, caused by the border between the mask and the face, and the number of edges in the lower part was significantly smaller than that in the upper part. When the mask was worn and the head was turned, a thin edge on the border between the mask and the cheek was visible. When the mask was worn and the head was lifted, no edges were visible except in the side regions. The thick edges of the mouth extended in the x direction, whereas the vertical edge between the mask and the cheek was thinner. We therefore excluded the thin edges by simply opening the image with a kernel of one row.

In the method, we determined the three state variables by Procedure 2 in Table 4 using the above principle.

Table 4.

Procedure 2: state classification part 2.

Steps 1 and 2 were used to keep the edges of the mask or the face and to remove the edge between the background and the user. Steps 3 to 5 were used to check whether the face met the condition. If so, the system judged that the user neither wore a mask nor lifted the head, and then determined whether the head was turned from the position of the thick edges. If there were no or few thick edges, the system judged that the user lifted the head while wearing a mask. Then, steps 6 to 8 were applied to judge the state of the face. Edges close to the center and located in the bottom half of F were determined. The quantity in step 6 was computed to determine whether the number of edges in the upper part was significantly larger than that in the lower part.

In step 8, the standard deviation of the x coordinates of thick edges and y coordinates of all edges was calculated. Standard deviation was used to determine the dispersion of a dataset in x or y directions.
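
The dispersion measure can be illustrated with a short sketch that computes the standard deviation of the column (x) and row (y) coordinates of the 1-pixels in a binary edge image. The function name `edge_spread` is hypothetical, and the decision thresholds that the method compares these values with are not reproduced here.

```python
from statistics import pstdev


def edge_spread(edges):
    """Return (std_x, std_y) of the coordinates of the 1-pixels in `edges`."""
    coords = [(r, c) for r, row in enumerate(edges)
              for c, v in enumerate(row) if v]
    if not coords:
        return 0.0, 0.0
    std_x = pstdev([c for _, c in coords])  # dispersion along the x-axis
    std_y = pstdev([r for r, _ in coords])  # dispersion along the y-axis
    return std_x, std_y


# A horizontal edge across the image, such as the upper border of a mask.
horizontal = [[0] * 9 for _ in range(5)]
horizontal[3] = [1] * 9
std_x, std_y = edge_spread(horizontal)
```

For the horizontal edge, the spread is large along x and zero along y, which matches the case of a mask worn on a face that is not turned.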

Then, state classification was performed according to the criteria in Table 5. The edges of the mouth and the nose were concentrated compared with the horizontal edge of the mask. Therefore, if the standard deviation of the x coordinates was small, the edges were relatively concentrated, and the system judged that the face without a mask was lifted.

Table 5.

Procedure 3: state classification part 3.

When the standard deviation of the x coordinates was large, the dispersion of the y coordinates was examined. The vertical edge of the mask was invisible when the mask was worn and the face was not turned; although the edges spread along the x-axis, they were concentrated on the y-axis. On the other hand, when the face was turned, the vertical edge was visible and the edges spread along the y-axis.

2.3.3. Pitch Down

To detect if the head was pitched down, the edge distribution of the upper part of the face was determined. When a person pitched the head down, the hair covered the upper part of the head. Therefore, the number of edges in the upper part decreased.

2.4. Detection and Postprocessing

After size reduction and determination of the face state, the system detected the eyeballs from F. The first step was to find Mid[i], the mid-column coordinate of the non-zero pixels in the ith row. Then, the ROIs were calculated in four directions using (7) (Table 6).
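
A minimal sketch of the Mid[i] computation is given below. It assumes that the mid-column of a row is the integer midpoint between its leftmost and rightmost non-zero pixels and that rows without non-zero pixels have no midpoint (`None`); both choices are assumptions made for illustration.

```python
def mid_columns(f):
    """Return Mid[i] for every row i of the image f (None for empty rows)."""
    mids = []
    for row in f:
        nonzero = [c for c, v in enumerate(row) if v != 0]
        if nonzero:
            mids.append((nonzero[0] + nonzero[-1]) // 2)
        else:
            mids.append(None)
    return mids


f = [
    [0, 5, 5, 5, 0],
    [0, 0, 0, 0, 0],
    [7, 0, 0, 0, 9],
]
mid = mid_columns(f)
```

The resulting list traces the midline of the face row by row, which is what the left and right candidates are later compared against.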

where F′ means the non-zero region of F.

Table 6.

Procedure 4: find the ROI of eyes.

After thresholding, we viewed each connected component of 1-pixels as a candidate for an eye, and we removed all candidates whose area was larger than 0.015MN or that were not located in the ROI of the eyes.
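
The candidate extraction can be sketched with a breadth-first connected component search followed by the area and ROI filters. The use of 8-connectivity and the test of the ROI membership by the centroid of a component are assumptions; the ROI is passed as a hypothetical inclusive tuple (top, bottom, left, right).

```python
from collections import deque


def connected_components(binary):
    """Return a list of components, each a list of (row, col) pixels."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if not binary[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue = deque([(r, c)])
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy in (-1, 0, 1):          # 8-connected neighbours
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and binary[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            components.append(pixels)
    return components


def filter_candidates(components, m, n, roi, max_area_ratio=0.015):
    """Drop components larger than max_area_ratio * M * N or outside the ROI."""
    top, bottom, left, right = roi
    kept = []
    for pixels in components:
        if len(pixels) > max_area_ratio * m * n:
            continue
        cy = sum(p[0] for p in pixels) / len(pixels)
        cx = sum(p[1] for p in pixels) / len(pixels)
        if top <= cy <= bottom and left <= cx <= right:
            kept.append(pixels)
    return kept
```

For a 20 × 20 image, for example, the area limit is 0.015 × 400 = 6 pixels, so a 3 × 3 blob would be rejected while a 2 × 2 blob inside the ROI would be kept.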

Finally, we selected the pair of candidates that was most likely to be the eyes. Every pair was composed of a candidate to the left of the midline of the face and a candidate to the right of it, and every pair had a score calculated by using (8).

where the two area terms are the areas of the candidates in the pair; the two weight terms are weights that are explained below; and V is the validity of the pair. If the pair cannot be a pair of eyes, V = 0; Table 7 shows the conditions under which V = 1.

Table 7.

Thresholds in different cases.

For each candidate in the pair, the uppermost and bottommost row coordinates were recorded. Two candidates were said to overlap in row by “k” when the two integer intervals built from these coordinates and k overlapped. The two weights are each either 1 or 4 when pitch != ‘D’. The weight of a side was set to 4 when the candidate on that side was the lowest one (with the highest row coordinate) among the candidates forming a pair with a nonzero score, or when the candidate overlapped in row, in a range of 2, with the lowest candidate on its side. Otherwise, the weight was 1. Because eyebrows are dark objects, they also became candidates, and their positions and areas were close to those of the eyeballs, which caused misdetection. Because eyebrows are positioned higher than the eyeballs, the weight was used to prevent eyebrows from being chosen. In the pitch-down cases, the eyeball region shrank compared with that in the non-rotated case; thus, the weight was set higher.

In some cases, a candidate consisted of an eye connected to an eyebrow, and this problem was solved by postprocessing. When the difference in intensity within a candidate was larger than 3, the pixels whose intensity exceeded a threshold were reset to 0. Because this operation could break a candidate into several pieces, the system then selected two candidates again (Table 8).

Table 8.

Requirements for V = 1.

2.5. Coordinate Transformation and Correction

After determining the two candidates, we transformed the midpoint of the centroids of the two candidates to the global coordinate by using the pinhole camera model. Over time, the raw result might contain many non-smooth peaks, that is, oscillations. To avoid this, a correction was made as follows.
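
The pinhole transformation can be sketched as follows. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the sample pixel values are hypothetical and would come from the calibration of the actual camera. The sketch also assumes that the depth value is the distance along the optical axis, that lens distortion is ignored, and that the pixel coordinate has already been mapped back from F to the full 848 × 480 image.

```python
def midpoint(p, q):
    """Midpoint of two (row, col) centroids."""
    return tuple((a + b) / 2 for a, b in zip(p, q))


def pixel_to_camera(r, c, depth_mm, fx, fy, cx, cy):
    """Back-project pixel (r, c) with depth Z to camera coordinates (X, Y, Z).

    Pinhole model: X = (c - cx) * Z / fx and Y = (r - cy) * Z / fy.
    """
    x = (c - cx) * depth_mm / fx
    y = (r - cy) * depth_mm / fy
    return x, y, depth_mm


# Hypothetical intrinsics for an 848 x 480 sensor (not from the study).
FX, FY, CX, CY = 600.0, 600.0, 424.0, 240.0

left_eye, right_eye = (200.0, 400.0), (200.0, 460.0)   # made-up centroids
r_mid, c_mid = midpoint(left_eye, right_eye)
eye_xyz = pixel_to_camera(r_mid, c_mid, 581.0, FX, FY, CX, CY)
```

A pixel at the principal point maps to X = Y = 0, so the returned coordinate is expressed relative to the optical axis of the camera, in the same unit as the depth value.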

A coordinate that is the nth sample, f[n], is an oscillation if (9) is satisfied.

where the difference term is the second-order difference and the variable m is the number of samples after the last reset of the correction step. The correction was reset (m was set to 0) if the number of consecutive corrections was larger than 5. This was necessary because, otherwise, the error presented in Figure 8 occurred.

Figure 8.

Error caused by continuous “corrections”.

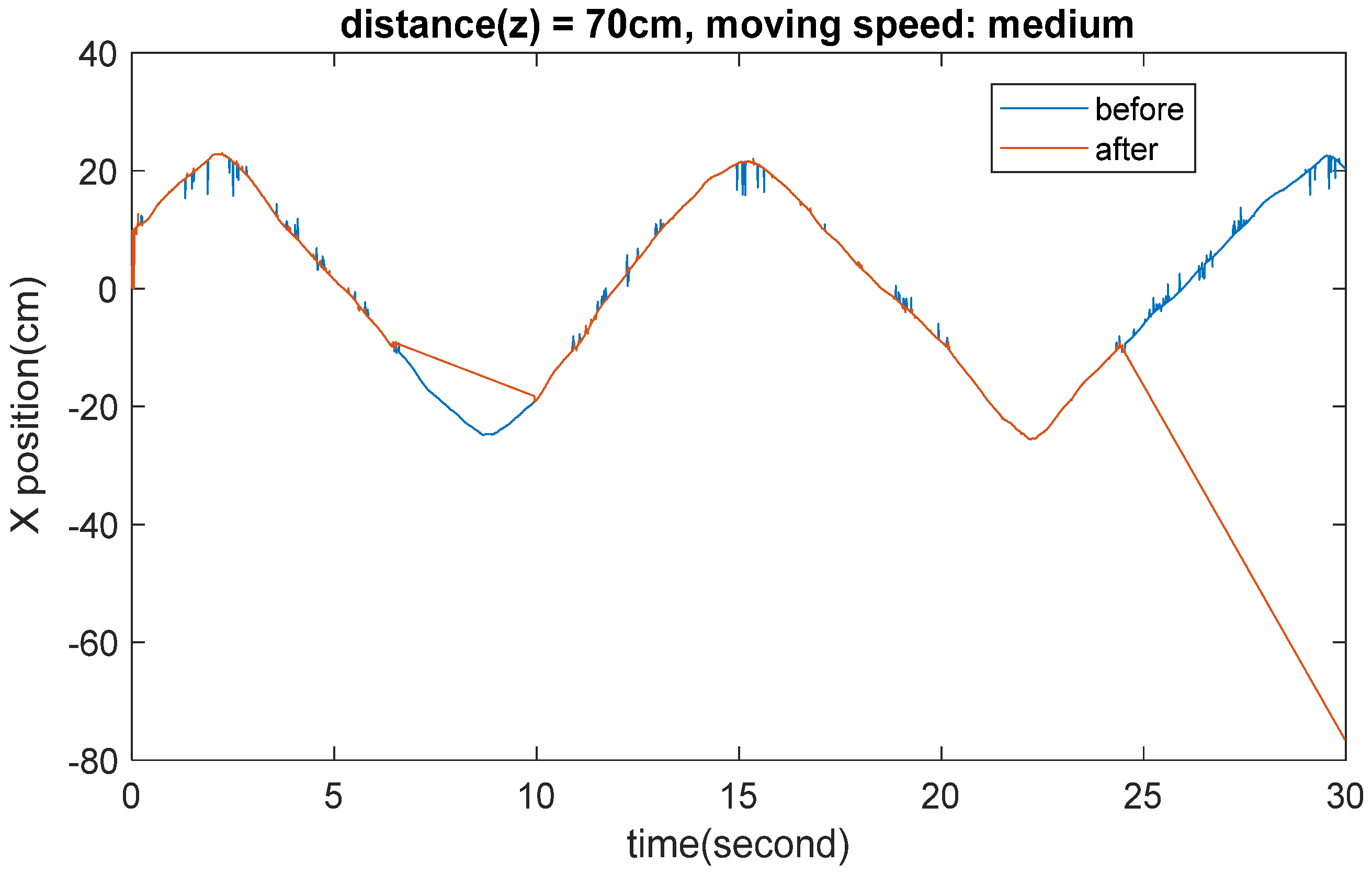

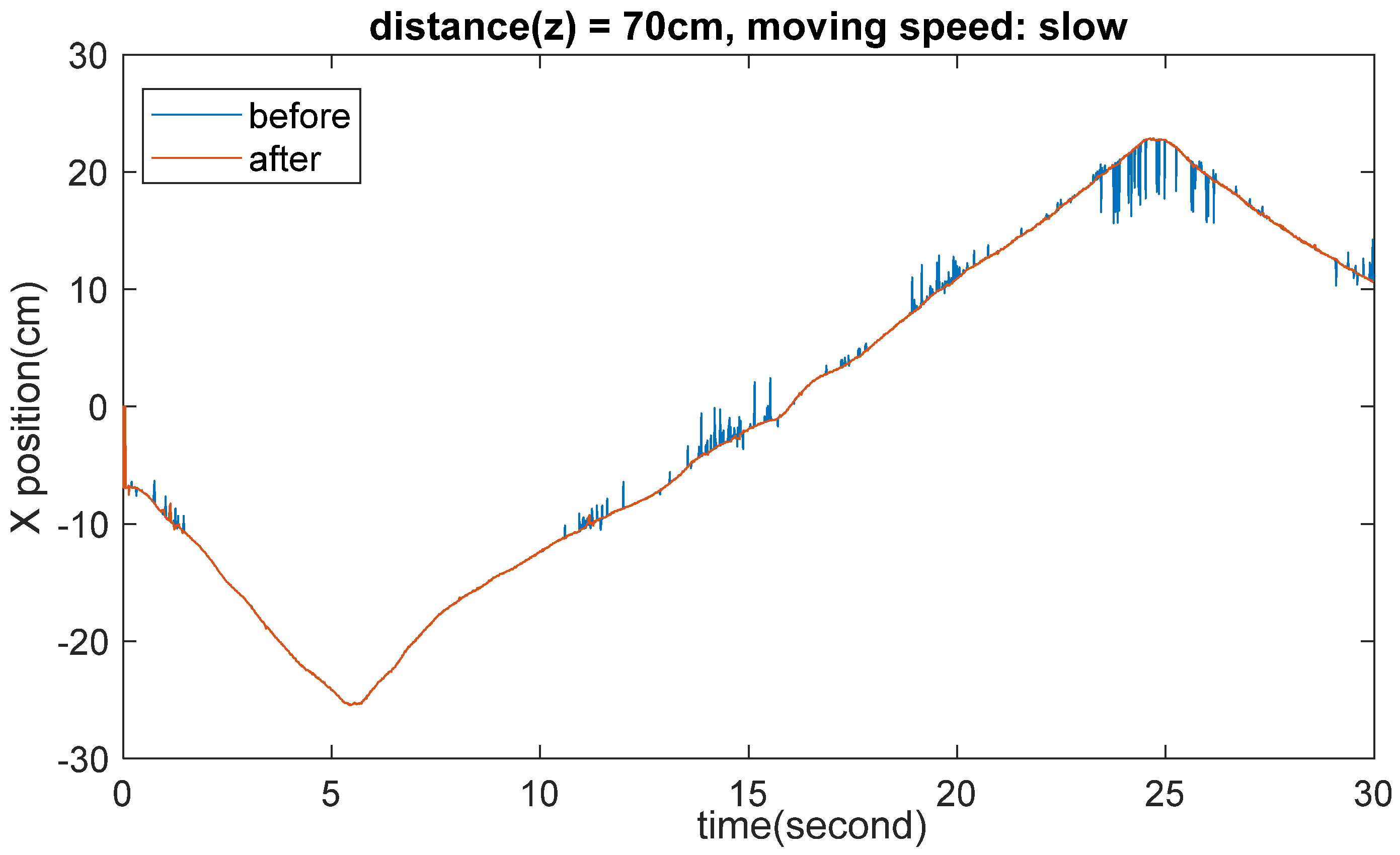

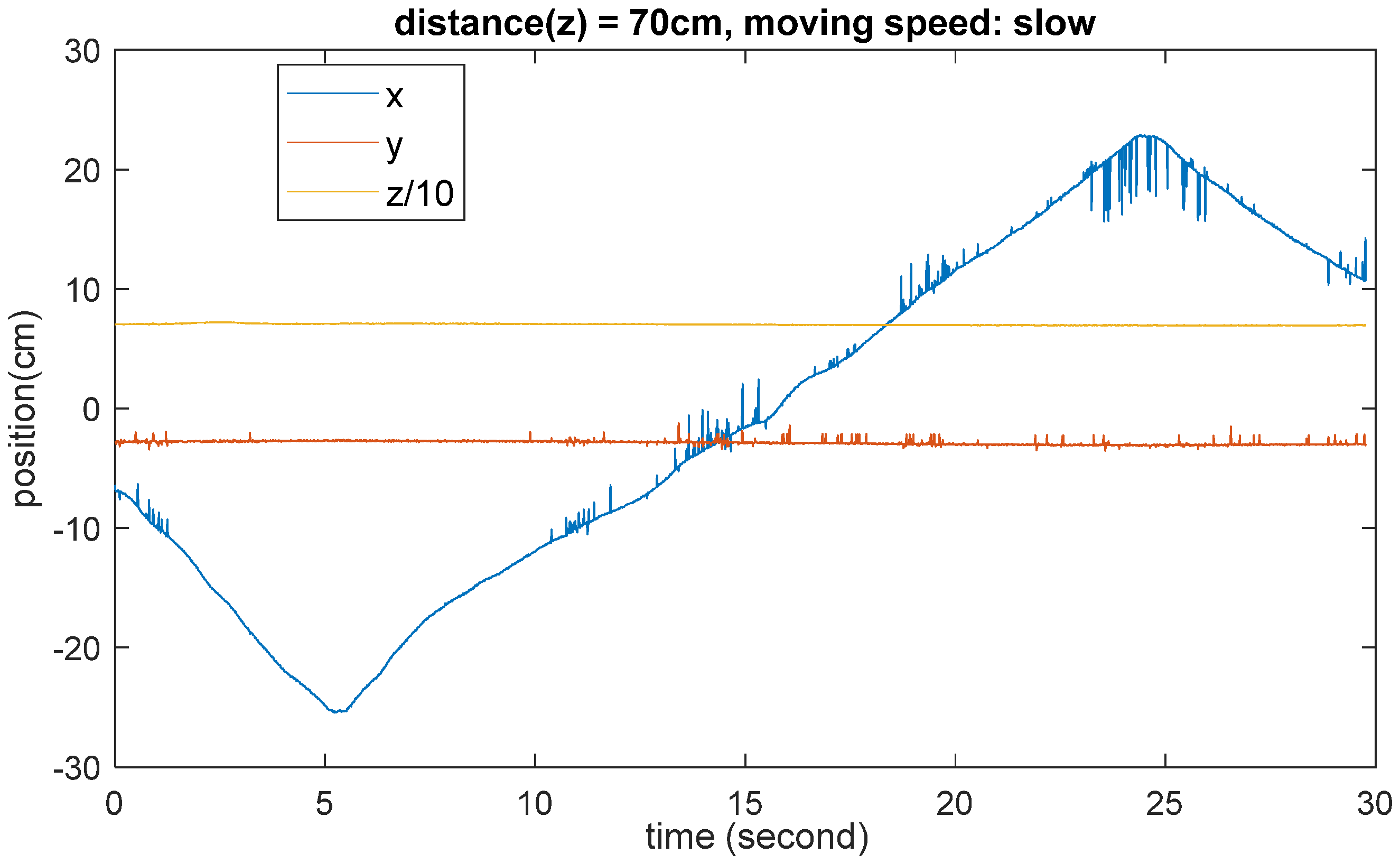

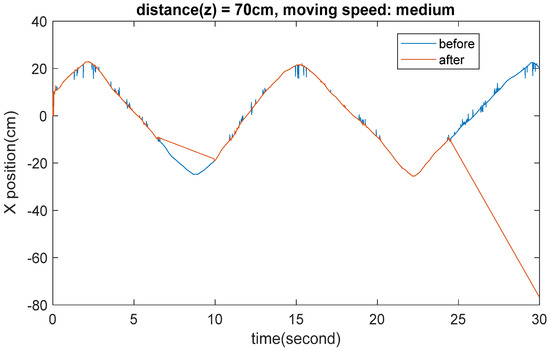

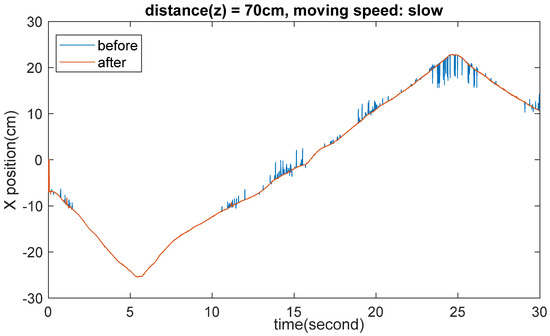

When a coordinate was an oscillation, we corrected it by fitting a linear regression [4] to the nine samples preceding it, treating the coordinate as the tenth sample, and replacing its value with the value predicted by the linear regression model. The result after correction is shown as the orange curve in Figure 9, where the blue curve is the x-curve in Figure 10.
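
The correction can be sketched for one coordinate axis as follows. The least-squares prediction from the nine preceding samples follows the description above. The oscillation test, however, is only a stand-in: since (9) is not reproduced here, the sketch flags a sample when the absolute second-order difference exceeds a hypothetical `threshold`, and it resets after more than five consecutive corrections.

```python
def predict_next(samples):
    """Fit y = a + b*t by least squares at t = 0..n-1 and return y at t = n."""
    n = len(samples)
    mean_t = (n - 1) / 2
    mean_y = sum(samples) / n
    s_tt = sum((t - mean_t) ** 2 for t in range(n))
    s_ty = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(samples))
    slope = s_ty / s_tt
    return mean_y + slope * (n - mean_t)


def correct_stream(raw, threshold, window=9, max_run=5):
    """Replace oscillating samples by the regression prediction."""
    corrected = []
    run = 0   # number of consecutive corrections
    m = 0     # number of samples since the last reset
    for value in raw:
        new_value = value
        if m >= window:
            # Second-order difference against the two previous outputs.
            second_diff = value - 2 * corrected[-1] + corrected[-2]
            if abs(second_diff) > threshold:   # assumed form of the test
                run += 1
                if run > max_run:
                    run, m = 0, 0              # reset: accept the raw sample
                else:
                    new_value = predict_next(corrected[-window:])
            else:
                run = 0
        corrected.append(new_value)
        m += 1
    return corrected
```

With this sketch, a single spike is replaced by the predicted value, whereas a genuine step in the position is suppressed for only five samples before the reset lets the output follow the new level.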

Figure 9.

Corrected x position in Figure 10.

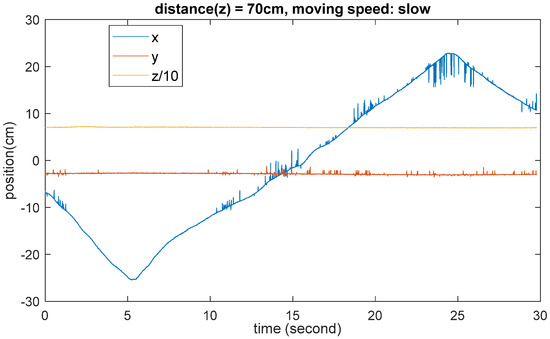

Figure 10.

Sample output of the 3D coordinates.

3. Discussions

The execution time of the method was measured. We used an Intel Core i7-6700K @ 4.00 GHz and ISO C++ 14 on the platform Visual Studio 2022 v143. The developed method processed 270–320 frames per second, which is about three times faster than the camera’s capture rate.

3.1. Detection Rates in Different Cases

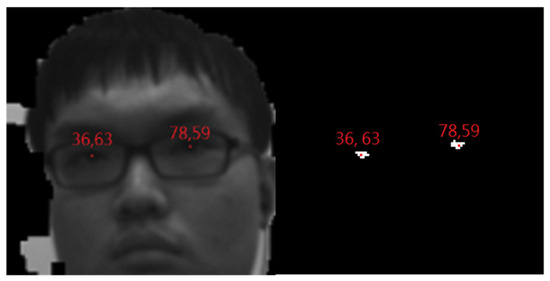

A camera was placed in front of a user who presented different head states, and images were captured at a rate of 90 frames per second. A detection was regarded as successful if the pair of candidates overlapped the eyeballs on F. Figure 11 shows an example of successful detection. The detection rates are listed in Table 9. Because a detection was successful only if the head-state classification was correct, the detection rate was regarded as a lower bound of the success rate of the head-state classification.

Figure 11.

Successful detection.

Table 9.

Detection rates in different cases.

3.2. Accuracy and Stability

We evaluated the method using printed face images. A printed face with a mask and glasses was put in 27 different places in front of the camera, at distances of 50, 60, and 70 cm with 9 angles per distance. Then, 9000 frames (100 s) were captured at each place. Three parameters were defined using (10) to (12), and the results are shown in Table 10, Table 11 and Table 12.
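
The three parameters can be illustrated with a sketch under stated assumptions, because (10) to (12) are not reproduced here. The code assumes that precision is the root-mean-square Euclidean distance of the samples from their mean, that maxshift is the largest such distance, and that accuracy is the mean Euclidean distance between the samples and a known true position. The function name `stability_metrics` and the sample values are hypothetical.

```python
import math


def stability_metrics(samples, truth):
    """Return (precision, maxshift, accuracy) for a list of 3D points."""
    n = len(samples)
    mean = tuple(sum(p[i] for p in samples) / n for i in range(3))
    deviations = [math.dist(p, mean) for p in samples]
    precision = math.sqrt(sum(d * d for d in deviations) / n)
    maxshift = max(deviations)
    accuracy = sum(math.dist(p, truth) for p in samples) / n
    return precision, maxshift, accuracy


# Two made-up measurements (in mm) of a target placed at (1, 0, 3).
samples = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
precision, maxshift, accuracy = stability_metrics(samples, (1.0, 0.0, 3.0))
```

Precision and maxshift describe the scatter of the measurements on a static face, while accuracy also includes any systematic offset from the true position.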

Table 10.

Results at 50 cm.

Table 11.

Results at 60 cm.

Table 12.

Results at 70 cm.

4. Conclusions

We proposed a fast eye-tracking method based on computer vision and image processing algorithms. We shrank the input image with the assistance of depth information to significantly increase the speed of the system and remove interference. To handle eyes on a rotated face, we adopted edge detection and PCA to determine the state of the face. After these two preparatory processes, we detected the eyes by using connected component analysis. Then, we transformed the midpoint of the centers of the eyes to coordinates in the camera model. The system ran stably at 270 to 320 frames per second. The standard deviation (precision) of the method in detecting eyes on a static face was lower than 1 mm, and the maximum deviation (maxshift) was lower than 5 mm. Regarding accuracy, although we did not eliminate the influence of camera distortion, the mean error (accuracy) seldom exceeded 10 mm. The detection rate surpassed 90% when the face was not rotated.

Author Contributions

Conceptualization, M.-C.Y. and J.-J.D.; methodology, M.-C.Y.; software, M.-C.Y.; validation, M.-C.Y.; formal analysis, J.-J.D.; investigation, J.-J.D.; resources, M.-C.Y.; data curation, M.-C.Y.; writing—original draft preparation, M.-C.Y.; writing—review and editing, J.-J.D.; visualization, M.-C.Y.; supervision, J.-J.D.; project administration, J.-J.D.; funding acquisition, J.-J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the AUO Corporation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

No new data were created.

Acknowledgments

The authors thank the AUO Corporation for its support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Satoh, K.; Kitahara, I.; Ohta, Y. 3D Image Display with Motion Parallax by Camera Matrix Stereo. In Proceedings of the Third IEEE International Conference on Multimedia Computing and Systems, Hiroshima, Japan, 17–23 June 1996; pp. 349–357.

2. Wijewickrema, S.N.R.; Papliński, A.P. Principal Component Analysis for The Approximation of an Image as an Ellipse. In Proceedings of the 13th International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision 2005, Plzen, Czech Republic, 31 January–4 February 2005; pp. 69–70.

3. Vincent, O.R.; Folorunso, O. A Descriptive Algorithm for Sobel Image Edge Detection. Informing Sci. IT Educ. Conf. 2009, 40, 97–107.

4. Jiang, B.N. On The Least-Squares Method. Comput. Methods Appl. Mech. Eng. 1998, 152, 239–257.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).