Abaca Blend Fabric Classification Using Yolov8 Architecture

Abstract

1. Introduction

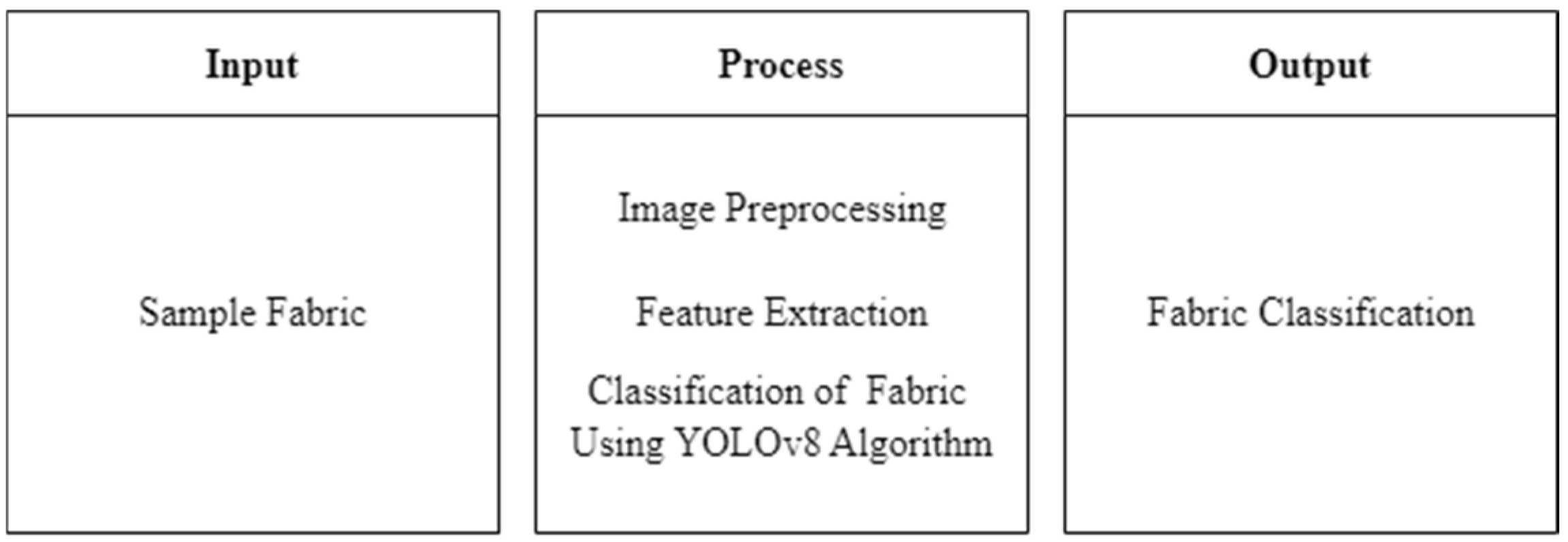

2. Methods

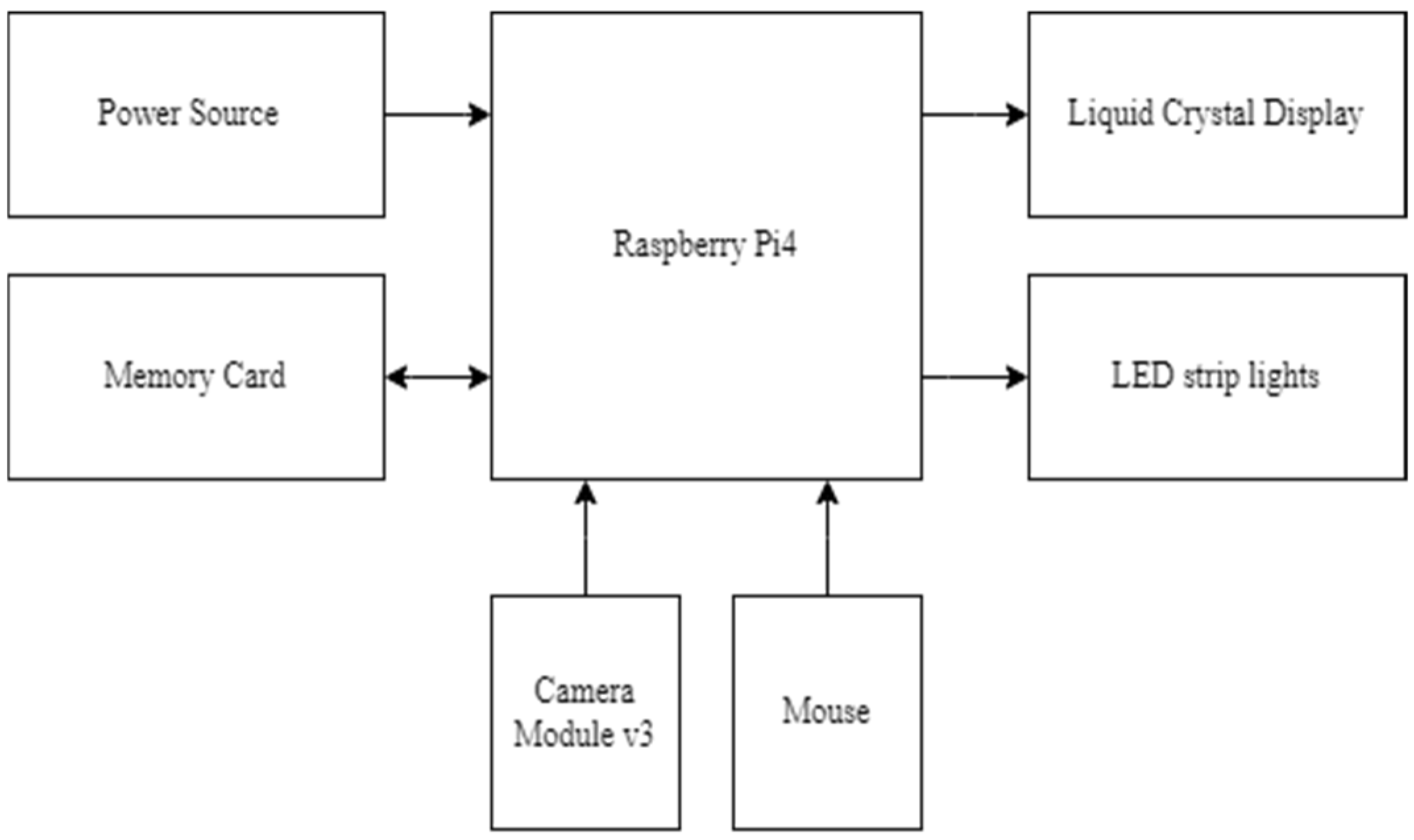

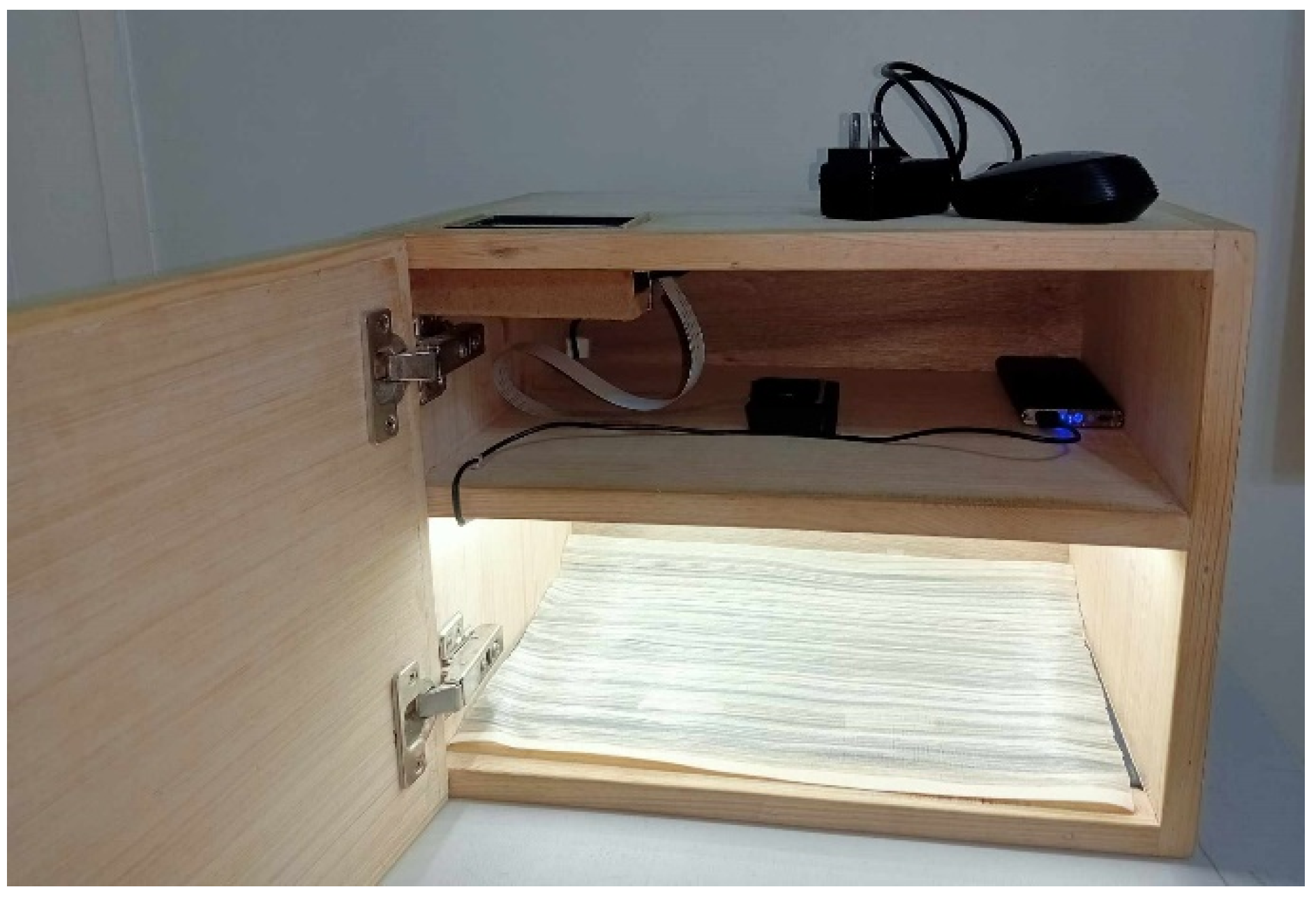

2.1. Hardware Development

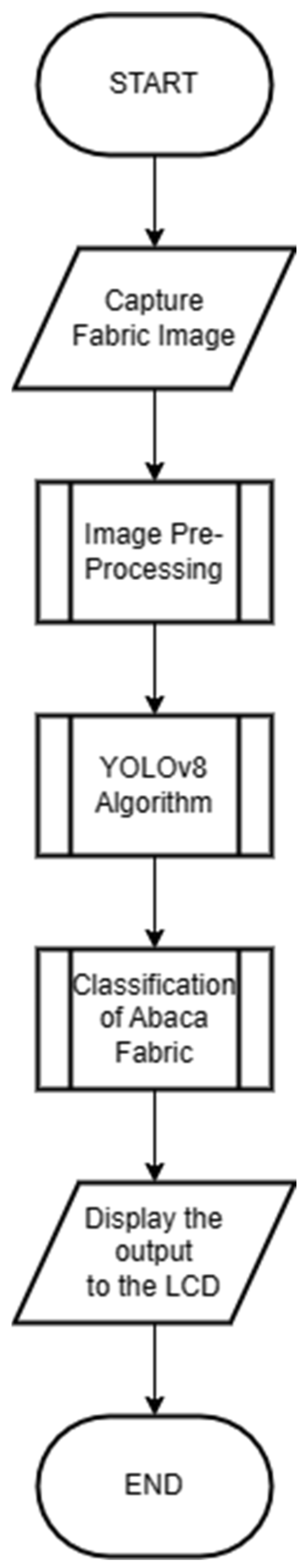

2.2. Software Development

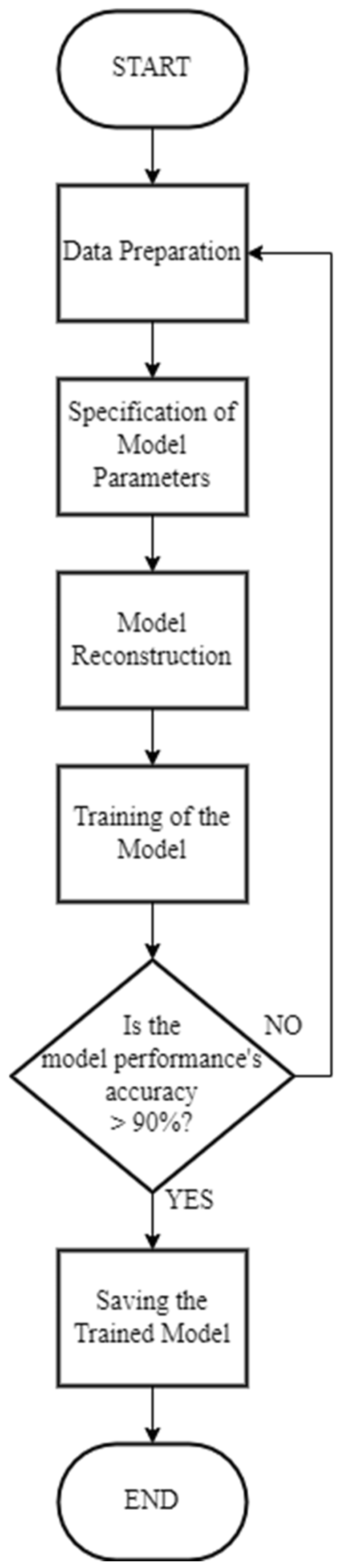

2.3. Training

2.4. Experimental Setup

2.5. GUI

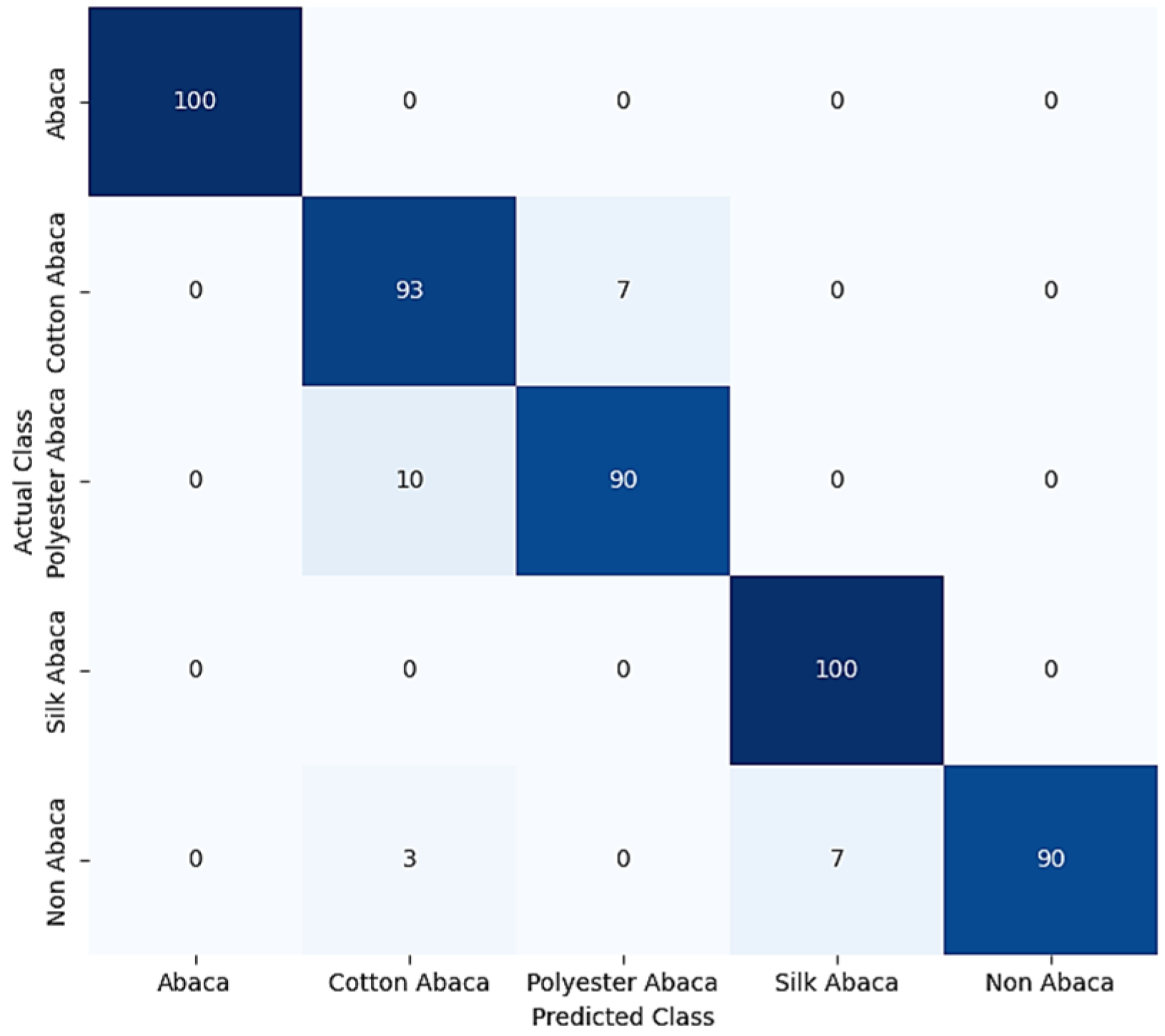

3. Results and Discussion

3.1. Data Gathering

3.2. Statistical Treatment

4. Conclusions and Recommendation

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Blend of Fabric | Training | Testing |
|---|---|---|
| Abaca | 1000 | 100 |
| Cotton Abaca | 1000 | 100 |
| Non-Abaca | N/A | 100 |
| Polyester Abaca | 1000 | 100 |
| Silk Abaca | 1000 | 100 |
| Total | 4000 | 500 |

| Class | Accuracy |
|---|---|
| Abaca | 100% |
| Cotton Abaca | 93% |
| Polyester Abaca | 90% |
| Silk Abaca | 100% |
| Non-Abaca | 90% |
| Model’s Accuracy | 94.6% |
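
The overall figure in the last row can be reproduced from the per-class rows. The sketch below is only an illustration: it assumes that the model's accuracy is the mean of the per-class accuracies weighted by the number of test samples per class (100 each, as listed in the dataset table). The dictionary and function names are hypothetical and do not come from the authors' code.

```python
# Per-class test counts and accuracies copied from the two tables.
# Assumption: overall accuracy = correctly classified samples / all test samples.
TEST_SAMPLES = {
    "Abaca": 100,
    "Cotton Abaca": 100,
    "Polyester Abaca": 100,
    "Silk Abaca": 100,
    "Non-Abaca": 100,
}
ACCURACY_PCT = {
    "Abaca": 100,
    "Cotton Abaca": 93,
    "Polyester Abaca": 90,
    "Silk Abaca": 100,
    "Non-Abaca": 90,
}


def overall_accuracy(test_samples, accuracy_pct):
    """Return the sample-weighted overall accuracy in percent."""
    # Number of correct predictions per class, summed over all classes.
    correct = sum(test_samples[c] * accuracy_pct[c] / 100 for c in test_samples)
    total = sum(test_samples.values())
    return 100 * correct / total


print(round(overall_accuracy(TEST_SAMPLES, ACCURACY_PCT), 1))  # prints 94.6
```

Under this assumption, 473 of the 500 test samples are classified correctly, which matches the reported 94.6%. Because every class has the same number of test samples, the weighted mean equals the plain average of the five per-class accuracies.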

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cinco, C.D.; Dominguez, L.M.R.; Villaverde, J.F. Abaca Blend Fabric Classification Using Yolov8 Architecture. Eng. Proc. 2025, 92, 42. https://doi.org/10.3390/engproc2025092042
