Dog Activity Recognition Using Convolutional Neural Network

Abstract

1. Introduction

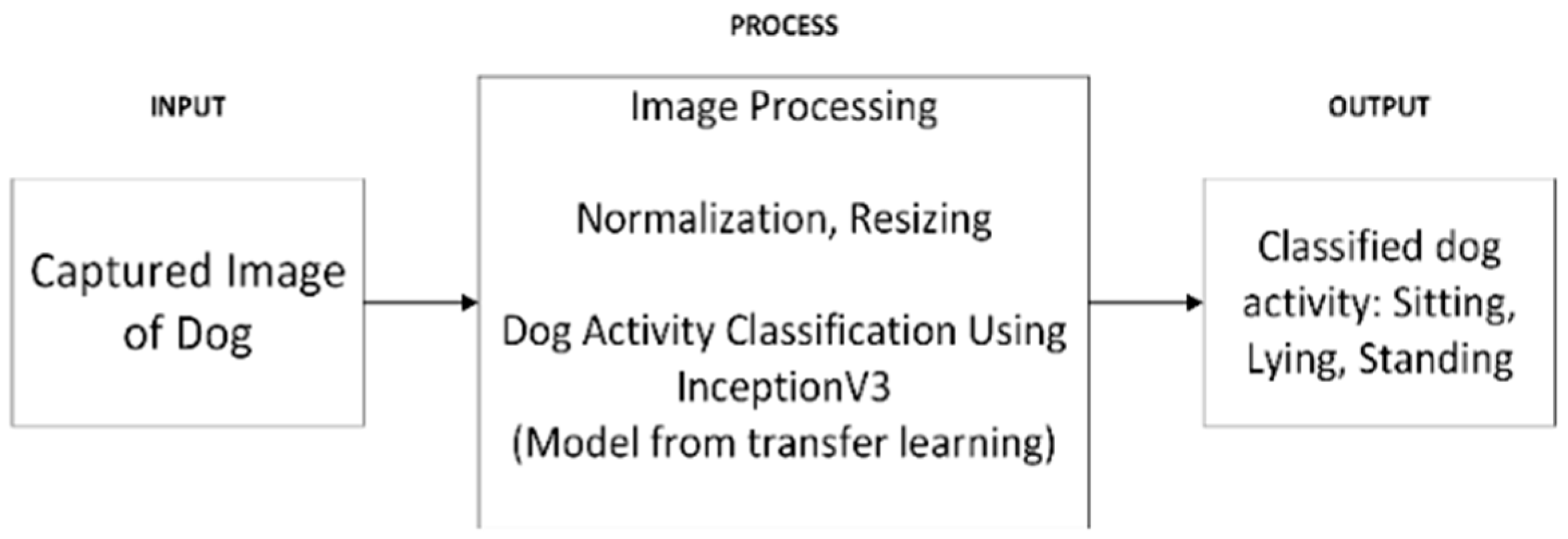

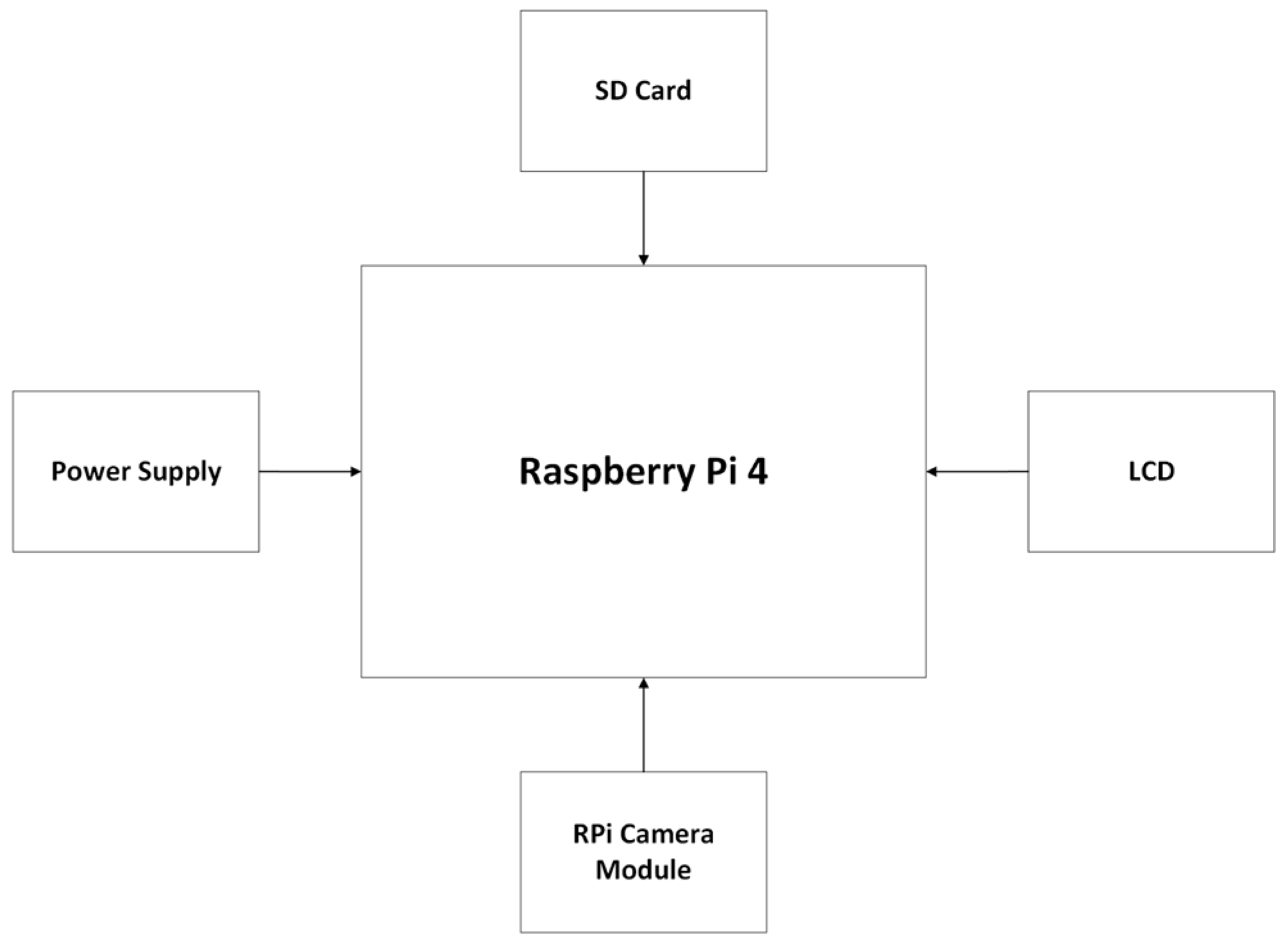

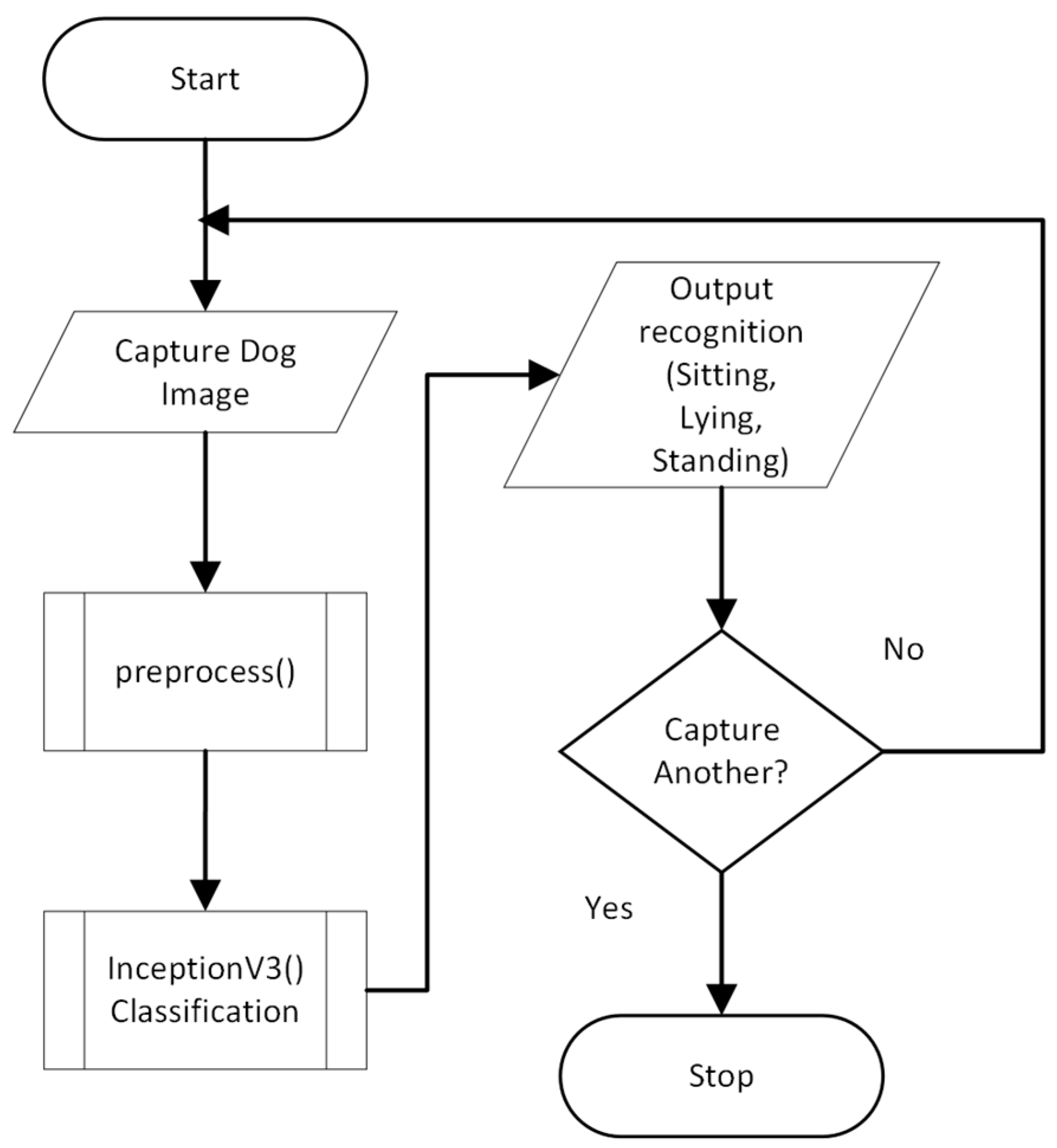

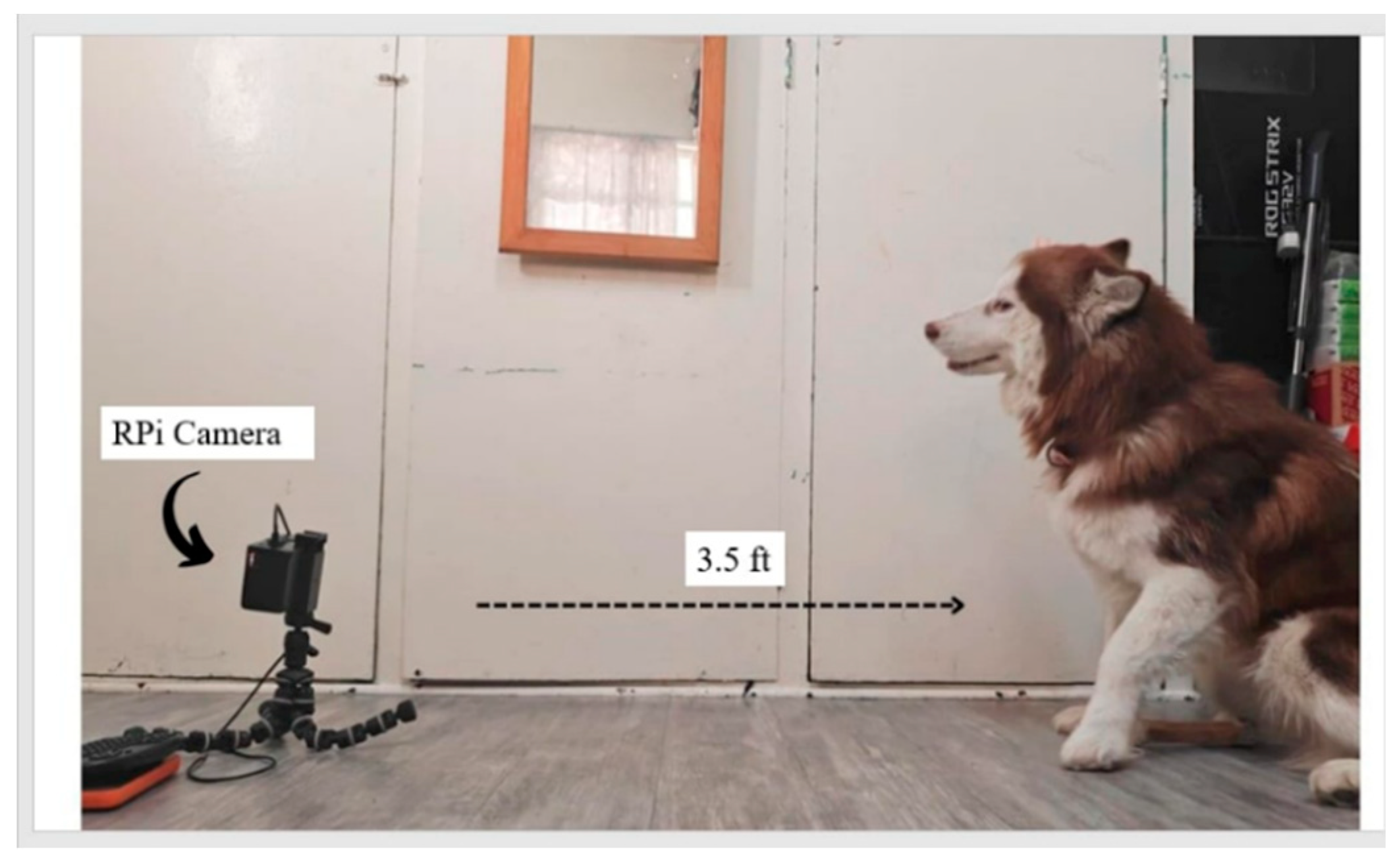

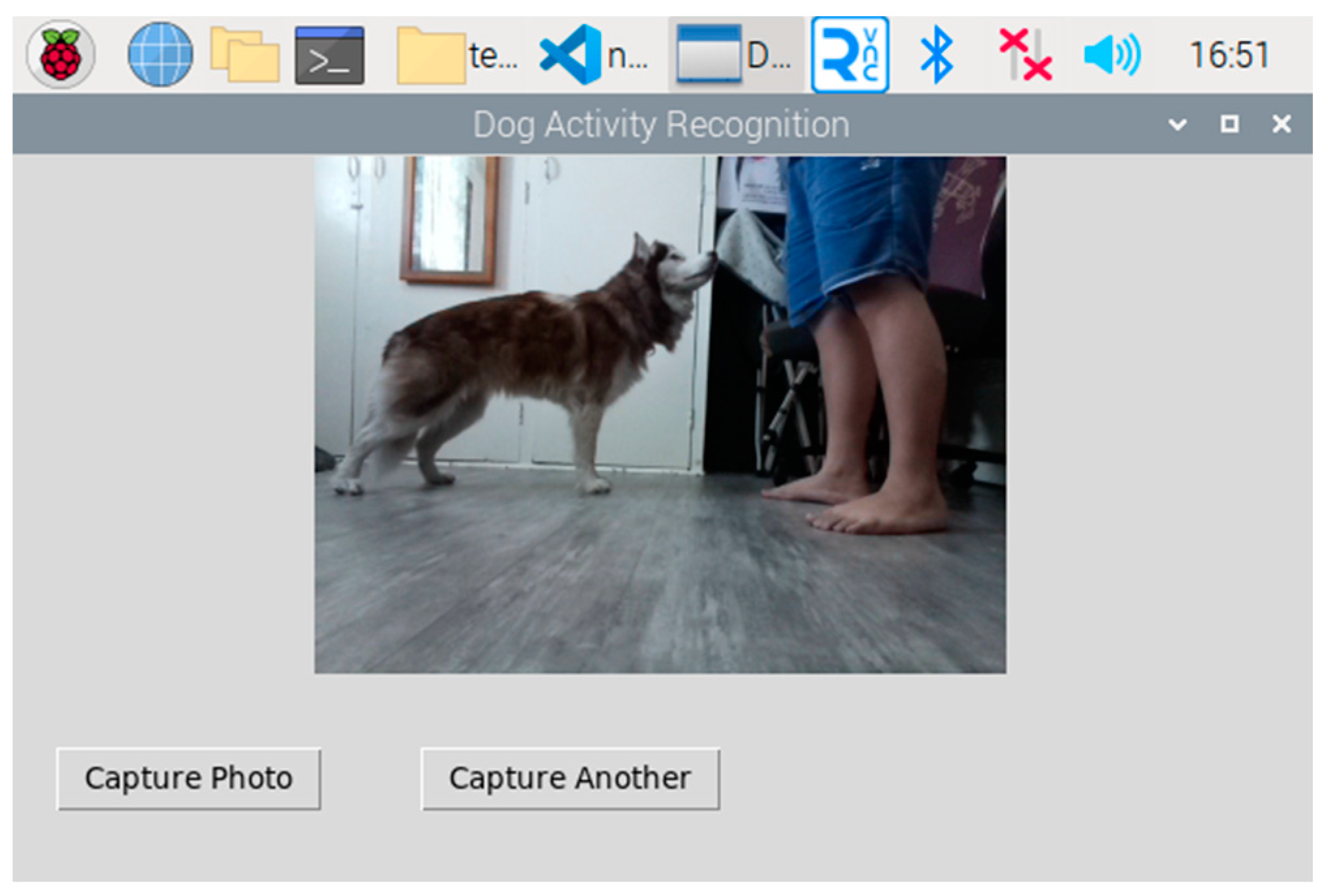

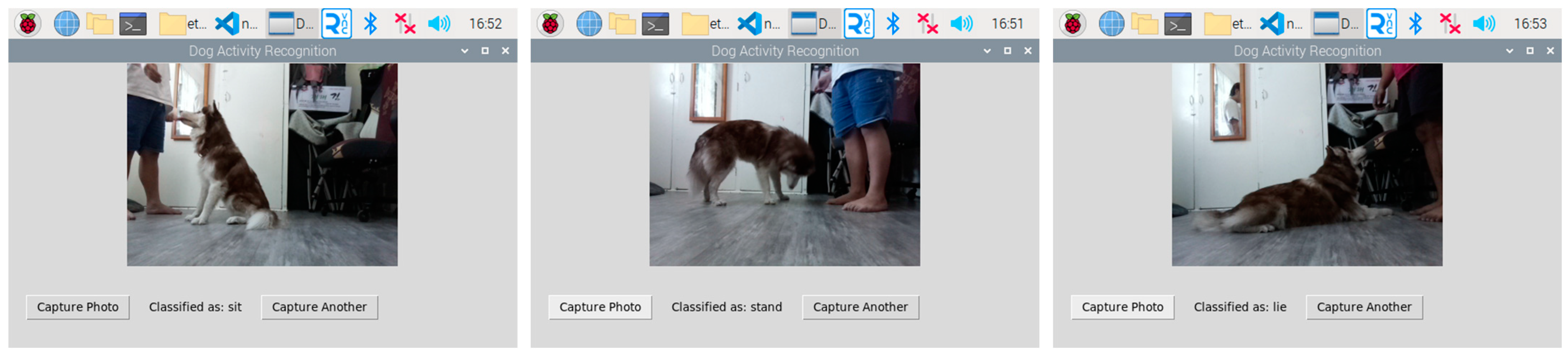

2. Methodology

3. Results and Discussion

4. Conclusions and Recommendations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| N = 50 | Predicted Standing | Predicted Sitting | Predicted Lying |
|---|---|---|---|
| Actual Standing | 15 | 1 | 0 |
| Actual Sitting | 0 | 17 | 0 |
| Actual Lying | 2 | 3 | 12 |
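The confusion-matrix table above places the actual posture on the rows and the predicted posture on the columns, so correct classifications lie on the diagonal: 15 + 17 + 12 = 44 of the 50 samples, or 88%. The short Python sketch below is an illustrative addition, not the authors' evaluation code. It computes overall accuracy and per-class precision and recall from those counts. The names `CONFUSION`, `CLASSES`, and `summarize` are hypothetical.

```python
# Counts copied from the confusion-matrix table (rows = actual, columns = predicted).
# Illustrative only: this is not the authors' evaluation code.
CLASSES = ["Standing", "Sitting", "Lying"]
CONFUSION = [
    [15, 1, 0],   # actual Standing
    [0, 17, 0],   # actual Sitting
    [2, 3, 12],   # actual Lying
]


def summarize(matrix, labels):
    """Return (total, accuracy, {label: (precision, recall)}) for a square confusion matrix."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))  # diagonal = correct predictions
    per_class = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        predicted = sum(matrix[r][i] for r in range(n))  # column sum: samples predicted as this class
        actual = sum(matrix[i])                           # row sum: samples that truly are this class
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        per_class[label] = (precision, recall)
    return total, correct / total, per_class


if __name__ == "__main__":
    total, accuracy, per_class = summarize(CONFUSION, CLASSES)
    print(f"N = {total}, accuracy = {accuracy:.2%}")
    for label, (p, r) in per_class.items():
        print(f"{label:<8} precision = {p:.3f}  recall = {r:.3f}")
```

Precision divides by the column sums, which measure how often a predicted label was correct. Recall divides by the row sums, which measure how many samples of each actual posture were recovered. Under this reading, 5 of the 6 misclassifications are lying samples that were predicted as standing or sitting.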

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nolasco, E., Jr.; Aldea, A.C.; Villaverde, J. Dog Activity Recognition Using Convolutional Neural Network. Eng. Proc. 2025, 92, 41. https://doi.org/10.3390/engproc2025092041

Chicago/Turabian Style: Nolasco, Evenizer, Jr., Anton Caesar Aldea, and Jocelyn Villaverde. 2025. "Dog Activity Recognition Using Convolutional Neural Network." Engineering Proceedings 92, no. 1: 41. https://doi.org/10.3390/engproc2025092041