Abstract

This article proposes a systematic methodology for developing a certification standard for AI-based safety-critical systems in military aviation that combines military and civil airworthiness references. The references are analyzed to identify overlaps, contradictions, and needs specific to AI certification in this field. The methodology incrementally updates a foundational certification framework as new references are integrated. An illustrative application to an ISO reference demonstrates how AI certification requirements are extracted, and requirements systematically derived from ISO references exemplify the methodology’s efficacy. The aim is to consolidate pertinent information into robust certification standards that cover the relevant criteria comprehensively.

1. Introduction

In today’s tech-driven world, Artificial Intelligence (AI) is revolutionizing various industries, significantly enhancing decision-making and expanding into new areas [1,2]. However, the rapid advancement of AI raises concerns about its reliability, ethics, and security [3]. To address these issues objectively and based on evidence, a methodology for analyzing state-of-the-art AI certification references is proposed herein [4].

Previous methodologies, such as those outlined in DO-178C for airborne software or ARP4754A for system development, have set foundational approaches for certification. However, these frameworks are primarily designed for traditional systems and lack considerations specific to AI’s dynamic and adaptive nature. Integrating elements from these methodologies into a tailored approach for AI systems ensures that critical gaps in current certification processes are addressed.

The aim of this methodology is to establish a robust standard for implementing and deploying AI-based safety-critical systems in military aviation. While previous research has focused on one-on-one air combat scenarios with precise situational information [5,6], modern air combat involves formations and multiple sensors [7].

The proposed methodology identifies specific components within the AI certification framework to meet the unique requirements of safety-critical systems in military aviation, ensuring the certification process aligns with AI advancements and adapts to the complexities of air combat [8].

The proposed methodology addresses significant gaps observed in past attempts to certify AI-based systems. For instance, failures in AI reliability have historically led to inconsistencies in safety-critical applications, such as unforeseen biases in decision-making algorithms or insufficient validation of autonomous behaviors in complex scenarios. A structured and systematic certification approach, like the one proposed here, could have mitigated such issues by providing clear criteria for verification and validation tailored to AI’s unique challenges.

By considering current aviation certification processes and AI standards, this approach facilitates the evaluation of references and the development of a new certification standard for AI-based safety-critical systems [9].

For example, the lack of standard methodologies has been highlighted in studies involving autonomous aerial combat systems, where inconsistencies in the certification process led to delays and operational limitations [6,7]. By adopting a systematic methodology, these challenges could have been addressed through better-defined objectives and compliance measures.

2. Standardization Domains Relevant to AI-Based Safety-Critical Systems in Military Aviation

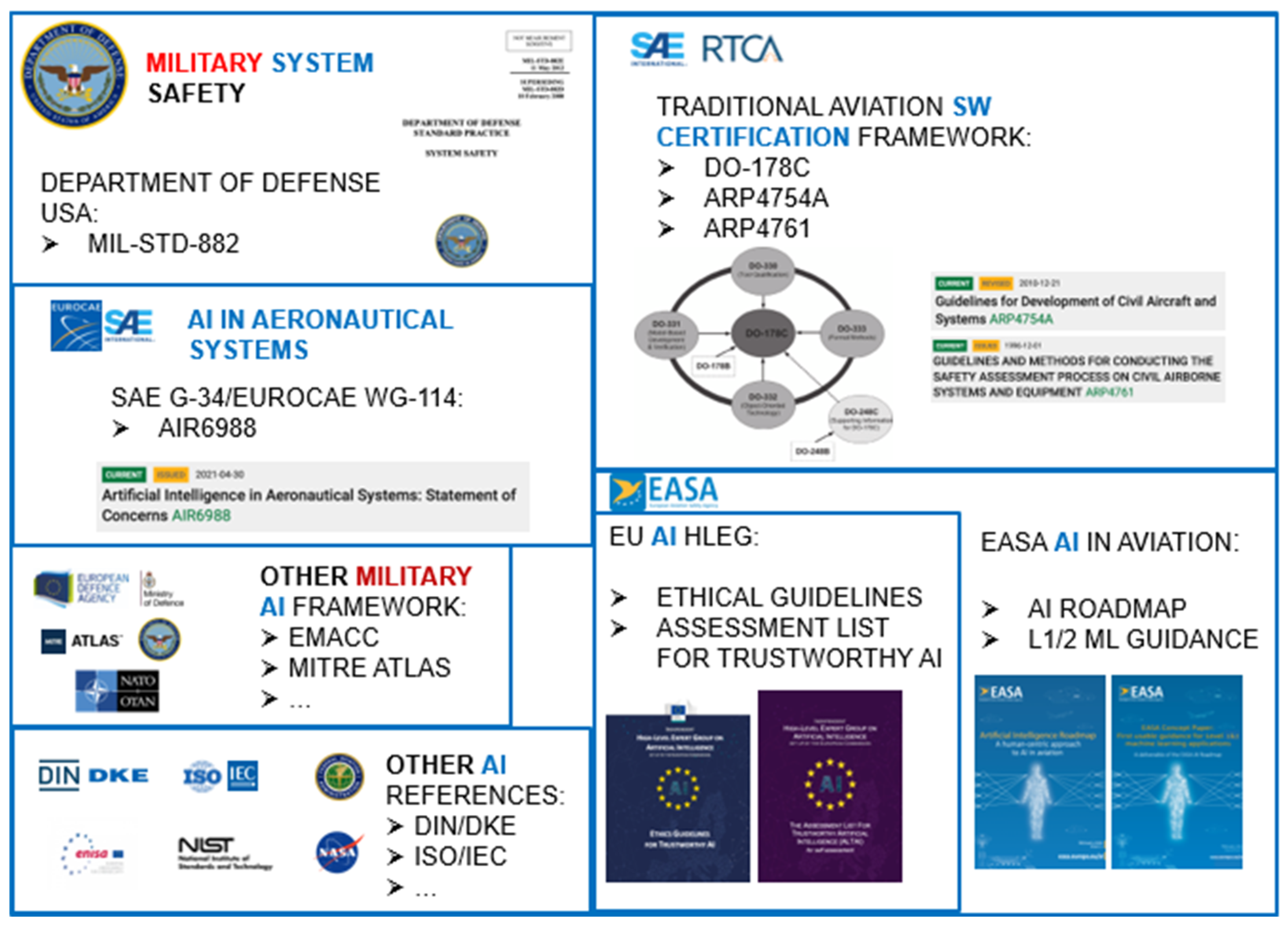

The military aviation system is conceptualized as a network of systems [10], where Artificial Intelligence is anticipated to play a crucial role in highly automated and safety-critical components [11]. This system is subject to both civilian and military aviation regulations and certification processes [12]. Consequently, the following areas of regulation and standardization are deemed highly pertinent in establishing the standardization framework for the development, validation, and certification of AI-based safety-critical components. This information is also crucial for comprehending and distinguishing the current requirements in constructing such a framework.

Military Aviation Airworthiness Regulations: These regulations govern the military aviation system, encompassing policies, rules, directives, standards, processes, and associated guidance. They assess air safety within military aviation activities. Generally, Military Aviation Authorities have regulatory sets underpinned by ICAO principles, but they are not expected to comply with these principles [13].

Civil Aviation Regulations: These regulations are relevant to the aviation domains involved in air combat systems, focusing on the standardization of safety-critical systems, safety-critical software, and AI applications in civil aviation domains. Comparing civil rules across nations may allow future harmonization of the criteria [14].

General-AI Standardization Processes: These processes relate to the standardization of general AI, considering the objectives, requirements, and means of compliance with AI to be horizontal and applicable across various domains. Standards can affect the broader context in which AI is researched, developed, and implemented through process specifications [15].

Autonomous Systems Standards: These standards pertain to autonomous systems, especially those incorporating AI applications. Autonomous systems should be evaluated using an iterative, stepwise, normative approach [16].

Given the extensive work undertaken in these domains, a primary goal of this task is to conduct a comprehensive examination with the following aims:

Identify Certification Basis: Determine the applicable certification basis for a military air combat system, distinguishing specific provisions relevant for AI-based safety-critical components and identifying areas requiring adaptation or extension for certifying AI safety-critical components [17].

Standardization Framework Elements: Identify elements applicable and reusable in a specific standardization framework for air-combat-AI safety-critical components [18], avoiding duplication of efforts already undertaken by other projects and organizations.

Examine Objectives and Requirements: Examine and discuss, where applicable, the objectives, requirements, or Acceptable Means of Compliance (AMC) from these areas that require further evaluation of their applicability, sufficiency, duplication, overlap, or potential contradictions in the context of military aviation.

Identify Needs: Identify specific and distinctive needs of the military air combat system that are not covered or planned to be addressed by ongoing or short-term standardization efforts [19].

This review encompasses current and ongoing standards in the aforementioned four areas as well as other types of documents and results judged to contain pertinent and applicable information, such as outcomes of research projects and publications.

3. Motivation

The examination of documents comprising the standardization framework for AI has allowed us to identify various dimensions essential for any future military aviation AI frameworks. Recognizing these dimensions aids in organizing and structuring the assessment of requirements. This set of dimensions provides a detailed breakdown of AI standardization into smaller, well-structured components rather than a hierarchical representation of a standardization framework. It aims to identify additional needs related to objectives, applicability, coverage, sufficiency, potential overlaps, or contradictions as well as needs requiring further attention.

These dimensions can be seen as puzzle pieces that different stakeholders must assemble to address the complete requirements of an AI-based safety-critical components standardization framework for military aviation. This breakdown into dimensions is a dynamic structure that can be expanded and enhanced as more references are analyzed. The dimensions may be developed or partially developed in the above standards, but there may also be other dimensions that are less developed or not as broad in scope, representing needs to be identified in the standards to be analyzed.

The current standards framework has extensively developed some dimensions, but mainly for supervised ML-based systems with lower levels of criticality and autonomy. Consequently, there is a need to consider other branches of AI, such as statistical AI methods, symbolic AI (logical or knowledge-based), algorithmic approaches, hybrid AI, and online learning applications.

4. Methodology

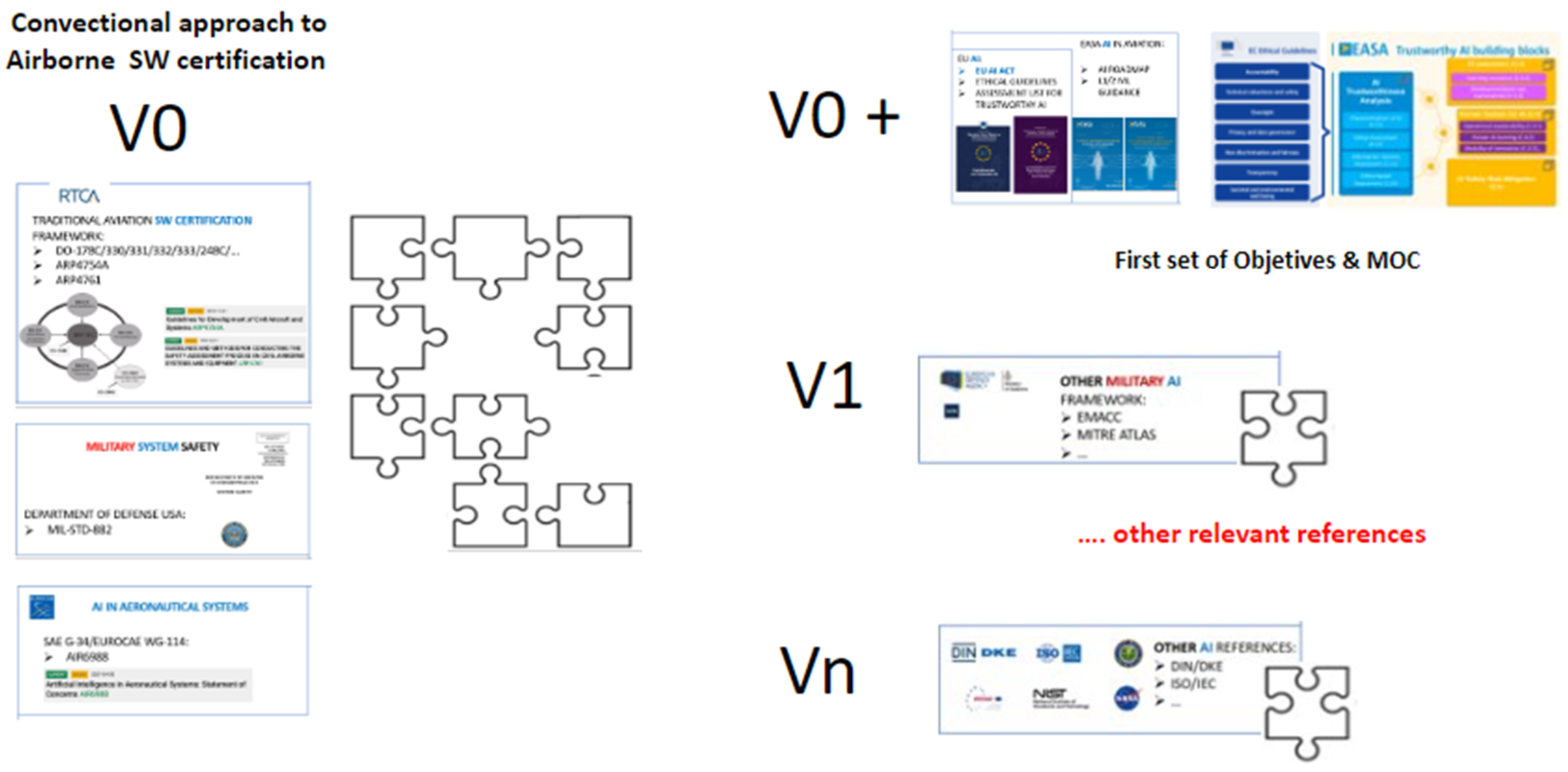

The aim of this assessment is to identify the foundational components of the forthcoming certification framework: objectives, requirements, Acceptable Means of Compliance (AMC), anticipated Means of Compliance (aMoC), and Guidance Material (GM). The analysis is conducted incrementally, starting with an initial framework version derived from key reference documents. This initial version involves reviewing current standards pertinent to the verification, validation, and certification of safety-critical software in a military context, such as the European Military Airworthiness Requirements (EMARs), and the certification basis for a military air combat system. The goal is to identify which parts of these standards remain relevant to AI-based safety-critical functions and components in military aviation, which require modification, and which are no longer applicable.

Additionally, the first version of the framework includes an assessment of existing aerospace software development standards used in the certification of safety-critical airborne and ground-based systems in civil aviation. Key references include DO-178C [20], MIL-HDBK-516C [21], ARP4761 [22], ARP4754A [23], CS-25 [24], and AMC-20 [25]. This initial framework also reviews the current EASA AI Roadmap [26] and Concept Paper [27]. Combining these documents completes the first draft of a new certification standard.

The selection of these references was driven by their established credibility and relevance in certifying safety-critical systems [28]. For instance, MIL-HDBK-516C sets out the airworthiness certification criteria of the U.S. Department of Defense, making it essential for aligning the new standard with existing military protocols. Meanwhile, EASA’s AMC-20 provides general acceptable means of compliance for the airworthiness of products, parts, and appliances, offering a complementary perspective for bridging civil and military certification processes.

In addition, DO-178C provides a foundational framework for verifying and validating software in airborne systems, emphasizing traceability and robustness. Its structured approach is instrumental in addressing the verification challenges inherent in AI-based systems. Similarly, ARP4761 focuses on safety assessment processes for airborne systems, offering a detailed methodology for hazard analysis that is directly applicable to AI components requiring high safety integrity levels. These references serve as a starting point, ensuring that the new certification framework inherits well-established principles from aviation safety while adapting to the unique demands of AI.

Future work will extend this initial version by incorporating other general AI standards and applicable references, generating new versions of the standard incrementally. A template is proposed to streamline the progressive construction of the framework by distinguishing elements already included, those partially covered, and those presenting contradictions or incompatibilities. The template is also designed to identify new building blocks, needs, objectives, and aMoC not identified in the initial version.

This approach ensures the progressive integration of different references into the new standard through a comprehensive and incremental revision process. Figure 1 illustrates the references to be included in this initial certification framework and in subsequent versions. Further research will be necessary to ensure all relevant certification standards are considered.

Figure 1. References to consider in the revision of a certification framework applicable to AI-based safety-critical components.

To clarify the methodology, a workflow representation for the incremental review process is provided.

The references were selected based on their applicability to the certification of safety-critical systems, focusing on areas where AI introduces unique challenges. Each document was evaluated for its potential to contribute to specific dimensions of the new standard, such as regulatory compliance, verification and validation procedures, and human factors considerations. This systematic approach ensures that the framework builds on proven methodologies while addressing gaps specific to AI. The resulting workflow is shown in Figure 2.

Figure 2. Workflow for the incremental revision process.
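The incremental revision process can be pictured as a function that takes the current framework version and the findings from one reviewed reference, and returns the next version. The following Python sketch is illustrative only and is not the authors' tooling: the names `Coverage`, `Finding`, `FrameworkVersion`, and `integrate`, as well as the placeholder reference and dimension labels, are assumptions introduced here. The four coverage categories mirror the distinctions the methodology draws (already included, partially covered, contradictory, new).

```python
from dataclasses import dataclass, field
from enum import Enum


class Coverage(Enum):
    """How an element of a reference relates to the current framework."""
    INCLUDED = "already included"
    PARTIAL = "partially covered"
    CONTRADICTION = "contradiction or incompatibility"
    NEW = "new element"


@dataclass(frozen=True)
class Finding:
    reference: str
    dimension: str
    coverage: Coverage
    note: str = ""


@dataclass
class FrameworkVersion:
    number: int
    references: list = field(default_factory=list)
    open_items: list = field(default_factory=list)


def integrate(current, reference, findings):
    """Return the next framework version after reviewing one reference.

    Findings marked INCLUDED need no action; all others are carried
    forward as open items. The current version is left unmodified.
    """
    carried = [f for f in findings if f.coverage is not Coverage.INCLUDED]
    return FrameworkVersion(
        number=current.number + 1,
        references=current.references + [reference],
        open_items=current.open_items + carried,
    )


# Hypothetical usage: placeholder reference and dimension names.
v1 = FrameworkVersion(number=1, references=["DO-178C", "MIL-HDBK-516C", "ARP4761"])
findings = [
    Finding("New reference", "Dimension A", Coverage.INCLUDED),
    Finding("New reference", "Dimension B", Coverage.PARTIAL, "needs more detail"),
    Finding("New reference", "Dimension C", Coverage.NEW),
]
v2 = integrate(v1, "New reference", findings)
print(v2.number, len(v2.open_items))  # 2 2
```

Returning a new object rather than mutating the old one keeps every earlier version available for comparison, which suits a process that is meant to produce successive, traceable versions.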

5. Model Developed: Template for the Analysis of the Certification Framework

To conduct a structured analysis of references and standards, a template was devised to facilitate the thorough review and extraction of pertinent information. The template aims to achieve the following from each reference:

- Provide a concise summary of the key concepts within the standard.

- Conduct a structured analysis across dimensions, comparing these concepts with the initial framework version.

- Identify any gaps or needs revealed during the reference analysis.

This template is essential for identifying foundational components of the framework and distinguishing elements already included, partially covered, or presenting contradictions to or incompatibilities with the initial framework version. The template divides the review into distinct sections.

- Summary of Main Concepts: This section offers a brief overview of the central ideas presented in the reference, outlining key conclusions or concepts discussed.

- Analysis by Dimensions Compared with the Initial Framework: This section analyzes how the reference addresses dimensions established in the initial certification framework for AI safety-critical systems. This comparison highlights overlaps or discrepancies and determines which dimensions the reference covers and from what perspective.

- Identified Needs: This section identifies areas addressed by the reference that are not covered in the initial framework, aiming to identify potential needs for the new standard and harmonize concepts where common criteria are required.

This structured approach ensures a comprehensive evaluation of references and promotes the systematic development of the new certification standard.
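The three sections of the template map naturally onto a small record type. The following is a minimal sketch in Python, not the authors' implementation; the class name `ReferenceReview`, its field names, and the helper `missing_dimensions` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ReferenceReview:
    """One filled-in template for a single reference (hypothetical structure)."""

    reference: str
    # Section 1: Summary of Main Concepts
    summary: str = ""
    # Section 2: Analysis by Dimensions (dimension name -> comparison note)
    analysis_by_dimension: dict = field(default_factory=dict)
    # Section 3: Identified Needs
    identified_needs: list = field(default_factory=list)

    def missing_dimensions(self, dimensions):
        """Return the framework dimensions that have no analysis entry yet."""
        return [d for d in dimensions if d not in self.analysis_by_dimension]


# Illustrative usage with three of the framework's dimensions.
review = ReferenceReview(reference="ISO/IEC TR 24028:2020")
review.summary = "Overview of trustworthiness in AI systems."
review.analysis_by_dimension["Tool Qualification"] = "No references are made."
review.identified_needs.append("Testing approaches")

pending = review.missing_dimensions(
    ["Tool Qualification", "Frugality", "Adaptive AI"]
)
print(pending)  # ['Frugality', 'Adaptive AI']
```

Checking for missing dimensions before a review is accepted is one simple way to make sure every reference is compared against the same set of dimensions.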

6. Model Implementation: Example of the Assessment of References

To validate the effectiveness and applicability of this template, a comprehensive analysis of an ISO standard was conducted that may inform future iterations of the new standard. This normative exploration is intended to validate the suitability of the template for enhancing the presented certification structure; it does not imply that more suitable references do not exist.

Due to space limitations, only one representative example of the analyzed references is provided. This example is not exhaustive and does not limit the range of standards that could contribute to the continual improvement and robustness of the foundational document. It was selected to illustrate the validation of the template, and other pertinent references could be considered in future revisions.

6.1. Reference: ISO/IEC TR 24028:2020—Information Technology—Artificial Intelligence—Overview of Trustworthiness in Artificial Intelligence

6.1.1. Summary of the Main Concepts

(a) Scope

This reference document addresses trustworthiness in AI systems, covering essential properties, typical threats, and potential mitigation measures. It provides a comprehensive overview without specifying a trustworthiness-level scheme.

(b) Purpose

The document aims to analyze factors impacting AI systems’ trustworthiness, including characteristics, threats, vulnerabilities, and approaches to enhance trustworthiness through mitigation measures, assessment, and evaluation methods.

(c) Description

ISO/IEC TR 24028:2020 establishes foundational High-Level Properties for trustworthy AI systems, addressing threats, vulnerabilities, and challenges in AI production and application. It suggests mitigation measures already integrated into the initial version of the new standard, with future considerations for Means of Compliance in verification and validation. Trustworthiness encompasses non-functional requirements like reliability, security, privacy, and transparency, essential across physical, cyber, and social trust layers. Stakeholder identification, asset management, and governance roles are crucial for establishing trust. Specific vulnerabilities and threats include security issues, privacy concerns, biases, unpredictability, and system faults, all mitigated through various testing and evaluation approaches already detailed in the new standard’s initial version.

6.1.2. Analysis by Dimensions Covered by the Reference in Comparison with the First Version of the New Standard

- Regulatory Coverage: ISO/IEC TR 24028:2020 provides mitigation measures for vulnerabilities and threats in AI systems broadly, aligning fully with the initial version of the new standard. While the new standard offers a more detailed version, this document lays the theoretical groundwork for future developments. Model update considerations are mentioned but not included in the first version.

- Technical Objectives: This document’s ideas are well integrated into the initial version’s technical objectives. However, considerations for model updates are absent. Approaches such as incremental learning for modifying learned model behavior should be explored when defining continuing airworthiness requirements.

- Technical Requirements: No references to technical requirements are made in the document.

- Organizational Provisions: The document complies with organizational provisions in the first version of the new standard.

- Anticipated Means of Compliance (aMoC): The general testing approaches presented, such as formal methods, empirical testing, intelligence comparison, testing in simulated environments, field trials, and comparison to human intelligence, could inform further definitions of aMoC for verification and validation. However, the procedures are not described in enough detail to be applied directly.

- Acceptable Means of Compliance (AMC): No references to AMC are made in the document.

- Guidance Material: The document could contribute to Guidance Material overviewing trustworthiness in AI systems, particularly in terms of mitigation measures, although more detailed adjustments would be necessary.

- AI Trustworthiness Analysis: No additional applicable information is provided beyond what is covered in the first version of the framework.

- AI Assurance: Additional considerations for assurance during model updates should be included in AI assurance for continuing airworthiness.

- Human Factors for AI: Considerations about AI system misuse due to overreliance are not addressed in the first version of the new standard. Systems that inspire trust can lead to systemic failures when they are relied upon in situations beyond their intended capabilities.

- AI Safety Risk Mitigation: ISO/IEC TR 24028:2020’s mitigation measures could serve as Guidance Material for aMoC SRM-01, though measures related to system in-service experience are not explicitly covered in the first version of the framework. These include managing expectations, product labeling, and cognitive science relating to humans and automation.

- Organizations and AI Management: No additional information is applicable to the first version of the framework.

- Timeframe: The document was published in 2020, and most of its concepts are already covered in the initial version of the framework.

- Competence: Apart from mentioning training needs, no references to competencies are made.

- Modulation of Objectives (LoR): No references to modulation of objectives are made.

- Tool Qualification: No references to tool qualification are made.

- Scaling AI: No references to scaling AI are made.

- Embeddability: The document refers to IEC 61508 for methods and processes to implement fail-safe hardware in embedded ML, aligning with certifiable functional safety levels.

- Frugality: No references to frugality are made.

- Distributed AI: No references to distributed AI are made.

- Adaptive AI: No references to adaptive AI are made.
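A per-dimension analysis like the one above can be tallied to show at a glance where a reference is silent. The sketch below is an illustrative reading of the list, not part of the published methodology: the function `split_by_coverage` is a hypothetical helper, and "addressed" here means only that the analysis says something other than "no references are made". Dimensions noted as adding no new information, or as only touching on a topic (such as Competence), therefore still count as addressed.

```python
# The 21 dimensions used in the analysis of ISO/IEC TR 24028:2020.
DIMENSIONS = [
    "Regulatory Coverage", "Technical Objectives", "Technical Requirements",
    "Organizational Provisions", "Anticipated Means of Compliance",
    "Acceptable Means of Compliance", "Guidance Material",
    "AI Trustworthiness Analysis", "AI Assurance", "Human Factors for AI",
    "AI Safety Risk Mitigation", "Organizations and AI Management",
    "Timeframe", "Competence", "Modulation of Objectives",
    "Tool Qualification", "Scaling AI", "Embeddability", "Frugality",
    "Distributed AI", "Adaptive AI",
]

# Dimensions for which the analysis states that no references are made.
NOT_REFERENCED = {
    "Technical Requirements", "Acceptable Means of Compliance",
    "Modulation of Objectives", "Tool Qualification", "Scaling AI",
    "Frugality", "Distributed AI", "Adaptive AI",
}


def split_by_coverage(dimensions, not_referenced):
    """Split dimensions into (addressed, silent), preserving list order."""
    unknown = set(not_referenced) - set(dimensions)
    if unknown:
        raise ValueError(f"Unknown dimensions: {sorted(unknown)}")
    addressed = [d for d in dimensions if d not in not_referenced]
    silent = [d for d in dimensions if d in not_referenced]
    return addressed, silent


addressed, silent = split_by_coverage(DIMENSIONS, NOT_REFERENCED)
print(len(addressed), len(silent))  # 13 8
```

Repeating this tally for each reviewed reference would give a simple view of which dimensions remain uncovered across all references, which is where additional sources are most needed.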

6.1.3. List of Identified Needs

- ISO/IEC TR 24028:2020 Updates for Continuing Airworthiness: The document includes considerations on AI system updates that are relevant to the continuing airworthiness certification process, advocating for methods like incremental learning. These aspects are not currently integrated into the first version of the new standard framework.

- ISO/IEC TR 24028:2020 Testing Approaches: The document provides a brief overview of testing approaches applied to AI systems. The project should evaluate the suitability of each testing approach across different testing phases and properties (e.g., data completeness and inference model robustness).

- ISO/IEC TR 24028:2020 Hardware (HW) Vulnerabilities: The document highlights how HW vulnerabilities can significantly impact AI system performance and safety. The project should review and assess HW certification processes and standards to ensure their adequacy for AI methods.

- ISO/IEC TR 24028:2020 In-Service Safety Measures: The document addresses in-service safety measures for AI systems, such as expectations management, product labeling, and the impact of automation on human factors (e.g., overreliance). The project should ensure these measures are considered by the applicant.

7. Conclusions

Upon applying the proposed methodology for reference analysis to develop a new standard, several conclusions emerge. Firstly, the selected documents were chosen to validate the efficacy of the methodology, although there may be other more pertinent documents that can help address additional needs and comprehensively cover all the dimensions being compared.

Secondly, this framework enables the creation of successive versions aimed at refining the foundational certification framework in an incremental process. This iterative approach ensures alignment with established standards and facilitates agile adaptation to evolving regulatory landscapes.

In the application presented here, the methodology proved efficient and provided a robust structure for enhancing various aspects of the certification process, accommodating adjustments and improvements as required.

The systematic analysis method has proven valuable for identifying overlaps, contradictions, and omissions within the initial framework, thereby informing future iterations towards establishing a solid base for AI safety-critical systems certification in military aviation.

Author Contributions

Conceptualization, C.G.A.; methodology, R.D.-A.J.; software, F.P.M.; validation, M.Z.S.; formal analysis, V.O.P.; investigation, X.Y.; resources, R.D.-A.J.; data curation, F.P.M.; writing—original draft preparation, V.O.P.; writing—review and editing, V.O.P.; visualization, F.P.M.; supervision, R.D.-A.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Any further inquiries can be directed toward the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Dwivedi, Y.; Hughes, L.; Ismagilova, E.; Aarts, G.; Coombs, C.; Crick, T.; Williams, M.D. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2021, 57, 101994.

2. Duan, Y.; Edwards, J.S.; Dwivedi, Y.K. Artificial intelligence for decision making in the era of Big Data-evolution, challenges and research agenda. Int. J. Inf. Manag. 2019, 48, 63–71.

3. Shneiderman, B. Bridging the gap between ethics and practice: Guidelines for reliable, safe, and trustworthy human-centered AI systems. ACM Trans. Interact. Intell. Syst. (TIIS) 2020, 10, 1–31.

4. Khanna, S.; Srivastava, S. Patient-Centric Ethical Frameworks for Privacy, Transparency, and Bias Awareness in Deep Learning-Based Medical Systems. Appl. Res. Artif. Intell. Cloud Comput. 2020, 3, 16–35.

5. Morgan, F.E.; Boudreaux, B.; Lohn, A.J.; Ashby, M.; Curriden, C.; Klima, K.; Grossman, D. Military Applications of Artificial Intelligence; RAND Corporation: Santa Monica, CA, USA, 2020.

6. Shin, H.; Lee, J.; Kim, H.; Shim, D.H. An autonomous aerial combat framework for two-on-two engagements based on basic fighter maneuvers. Aerosp. Sci. Technol. 2018, 72, 305–315.

7. Kong, W.; Zhou, D.; Du, Y.; Zhou, Y.; Zhao, Y. Reinforcement learning for multi-aircraft autonomous air combat in multi-sensor UCAV platform. IEEE Sens. J. 2022, 23, 20596–20606.

8. Tutty, M.G.; White, T. Unlocking the future: Decision making in complex military and safety critical systems. In Proceedings of the Systems Engineering Test and Evaluation Conference, Sydney, Australia, 30 April–2 May 2018; Volume 30.

9. Torens, C.; Durak, U.; Dauer, J.C. Guidelines and regulatory framework for machine learning in aviation. In Proceedings of the AIAA Scitech 2022 Forum, San Diego, CA, USA, 3–7 January 2022.

10. Shu, Z.; Jia, Q.; Li, X.; Wang, W. An OODA loop-based function network modeling and simulation evaluation method for combat system-of-systems. In Proceedings of the Asian Simulation Conference, Beijing, China, 8–11 October 2016; Springer: Singapore, 2016; pp. 393–402.

11. Layton, P. Fighting Artificial Intelligence Battles: Operational Concepts for Future AI-Enabled Wars. Network 2021, 4, 100.

12. Guldal, M.R.; Andersson, J. Integrating Process Standards for System Safety Analysis to Enhance Efficiency in Initial Airworthiness Certification of Military Aircraft: A Systems Engineering Perspective. INCOSE Int. Symp. 2020, 30, 589–603.

13. Purton, L.; Kourousis, K. Military airworthiness management frameworks: A critical review. Procedia Eng. 2014, 80, 545–564.

14. Missoni, E.; Nikolić, N.; Missoni, I. Civil aviation rules on crew flight time, flight duty, and rest: Comparison of 10 ICAO member states. Aviat. Space Environ. Med. 2009, 80, 135–138.

15. Cihon, P. Standards for AI Governance: International Standards to Enable Global Coordination in AI Research & Development; Future of Humanity Institute, University of Oxford: Oxford, UK, 2019.

16. Danks, D.; London, A.J. Regulating autonomous systems: Beyond standards. IEEE Intell. Syst. 2017, 32, 88–91.

17. Wang, Y.; Chung, S.H. Artificial intelligence in safety-critical systems: A systematic review. Ind. Manag. Data Syst. 2022, 122, 442–470.

18. Kläs, M.; Adler, R.; Jöckel, L.; Groß, J.; Reich, J. Using Complementary Risk Acceptance Criteria to Structure Assurance Cases for Safety-Critical AI Components. In AISafety@IJCAI; Fraunhofer IESE: Kaiserslautern, Germany, 2021; Available online: https://ceur-ws.org/Vol-2916/paper_9.pdf (accessed on 1 March 2025).

19. Piao, H.; Han, Y.; Chen, H.; Peng, X.; Fan, S.; Sun, Y.; Zhou, D. Complex relationship graph abstraction for autonomous air combat collaboration: A learning and expert knowledge hybrid approach. Expert Syst. Appl. 2023, 215, 119285.

20. RTCA DO-178C; Software Considerations in Airborne Systems and Equipment Certification. RTCA: Washington, DC, USA, 5 January 2012. Available online: https://www.rtca.org/do-178/ (accessed on 1 March 2025).

21. MIL-HDBK-516C; Department of Defense Handbook: Airworthiness Certification Criteria. Department of Defense: United States of America, 12 December 2014. Available online: http://everyspec.com/MIL-HDBK/MIL-HDBK-0500-0599/MIL-HDBK-516C_52120/ (accessed on 1 March 2025).

22. SAE International. Guidelines and Methods for Conducting the Safety Assessment Process on Civil Airborne Systems and Equipment ARP4761; SAE International: Warrendale, PA, USA, 1996.

23. SAE International. Guidelines for Development of Civil Aircraft and Systems ARP4754A; SAE International: Warrendale, PA, USA, 2010.

24. EASA. Certification Specifications for Large Aeroplanes (CS-25); EASA: Cologne, Germany, 2007.

25. EASA. Easy Access Rules for Acceptable Means of Compliance for Airworthiness of Products, Parts and Appliances (AMC-20); EASA: Cologne, Germany, 2023.

26. EASA. Artificial Intelligence Roadmap 2.0. Human-Centric Approach to AI in Aviation; EASA: Cologne, Germany, 2023.

27. EASA. EASA Concept Paper: First Usable Guidance for Level 1&2 Machine Learning Applications; EASA: Cologne, Germany, 2023.

28. The Open Group. The TOGAF® Standard, Version 9.2. San Francisco, CA, USA. 16 April 2018. Available online: https://publications.opengroup.org/ (accessed on 1 March 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).