Recommender System for Apparel Products Based on Image Recognition Using Convolutional Neural Networks

Abstract

1. Introduction

2. Related Work

2.1. Deep Learning and CNN

2.2. Recommender Systems

2.3. Recommenders Based on Deep Learning

3. Research Methodology

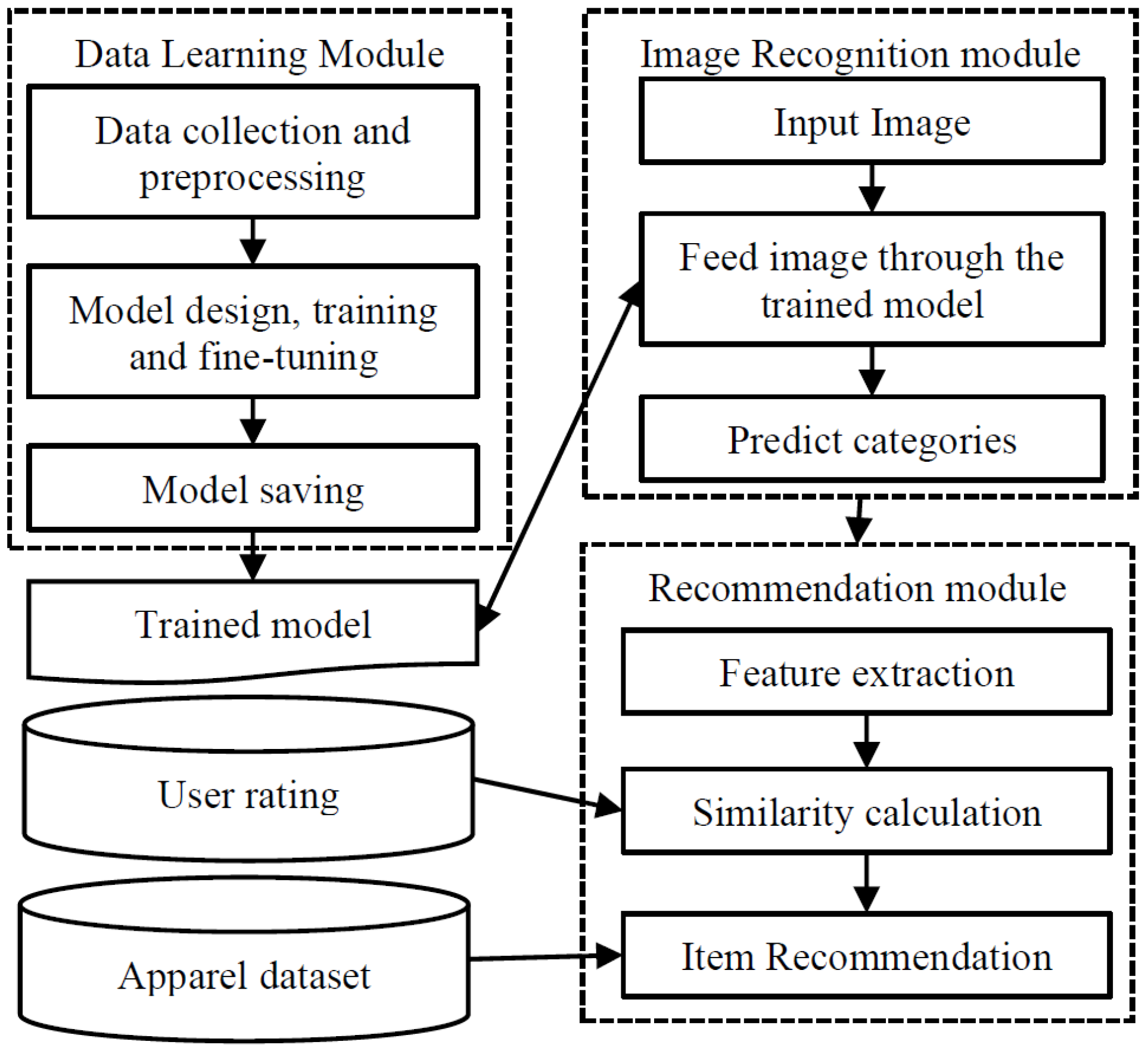

3.1. System Design

- The data learning module collects and preprocesses the data, trains the models, and stores the trained models and their weights for later use.

- The image recognition module takes an input image and uses a trained model to predict its category.

- The image recommendation module extracts image features, combines feature similarity with user ratings, and returns personalized recommendations.
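The recommendation module can be sketched as follows. The sketch assumes that each image is represented by a CNN feature vector and that similarity is measured with cosine similarity. Several details are hypothetical illustrations, because the paper does not say how similarity and ratings are combined into a "comprehensive score":

- the linear blend weight `alpha`;
- the 1 to 5 rating scale;
- the helper names `cosine_similarity`, `comprehensive_score`, and `rank_rated_items`.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def comprehensive_score(similarity: float, rating: float,
                        alpha: float = 0.7, max_rating: float = 5.0) -> float:
    """Hypothetical linear blend of visual similarity and a normalized rating."""
    return alpha * similarity + (1.0 - alpha) * (rating / max_rating)


def rank_rated_items(query: Sequence[float],
                     catalog: Dict[str, Sequence[float]],
                     ratings: Dict[str, float],
                     alpha: float = 0.7) -> List[Tuple[str, float]]:
    """Rank rated catalog items by comprehensive score, highest first.

    Items without a rating are skipped here; Section 3.4 describes how
    such items are mixed into the final recommendation list.
    """
    scored = [
        (item_id, comprehensive_score(cosine_similarity(query, feats),
                                      ratings[item_id], alpha))
        for item_id, feats in catalog.items()
        if item_id in ratings
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Usage: "a" matches the query exactly, "c" is similar, "b" is orthogonal.
catalog = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
ratings = {"a": 2.0, "b": 5.0, "c": 4.0}
print([item for item, _ in rank_rated_items([1.0, 0.0], catalog, ratings)])
# prints ['a', 'c', 'b']
```

With `alpha = 0.7`, visual similarity dominates. Item "b" has the highest rating but still ranks last because it looks nothing like the query.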

3.2. Data Collection and Preprocessing

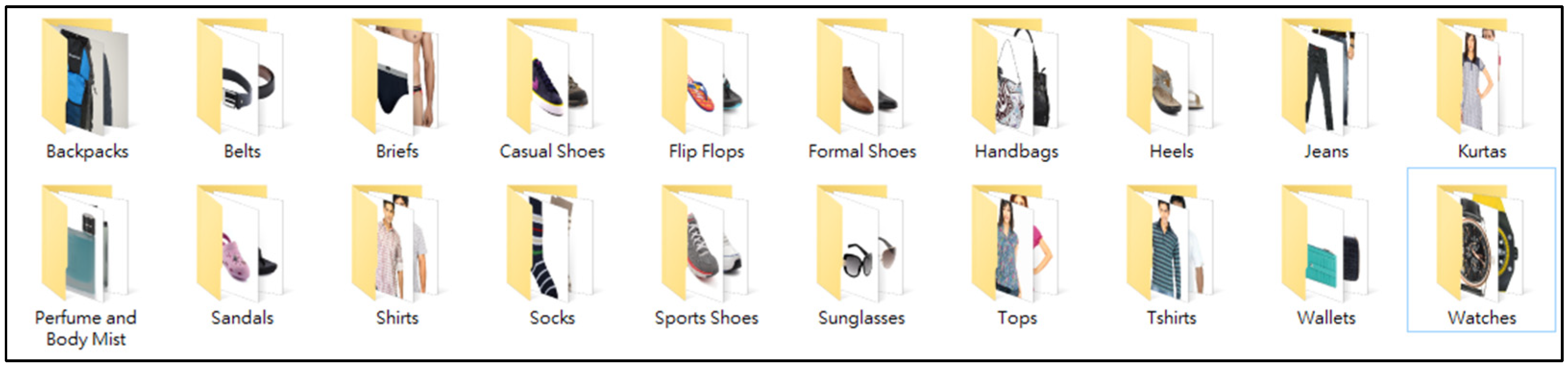

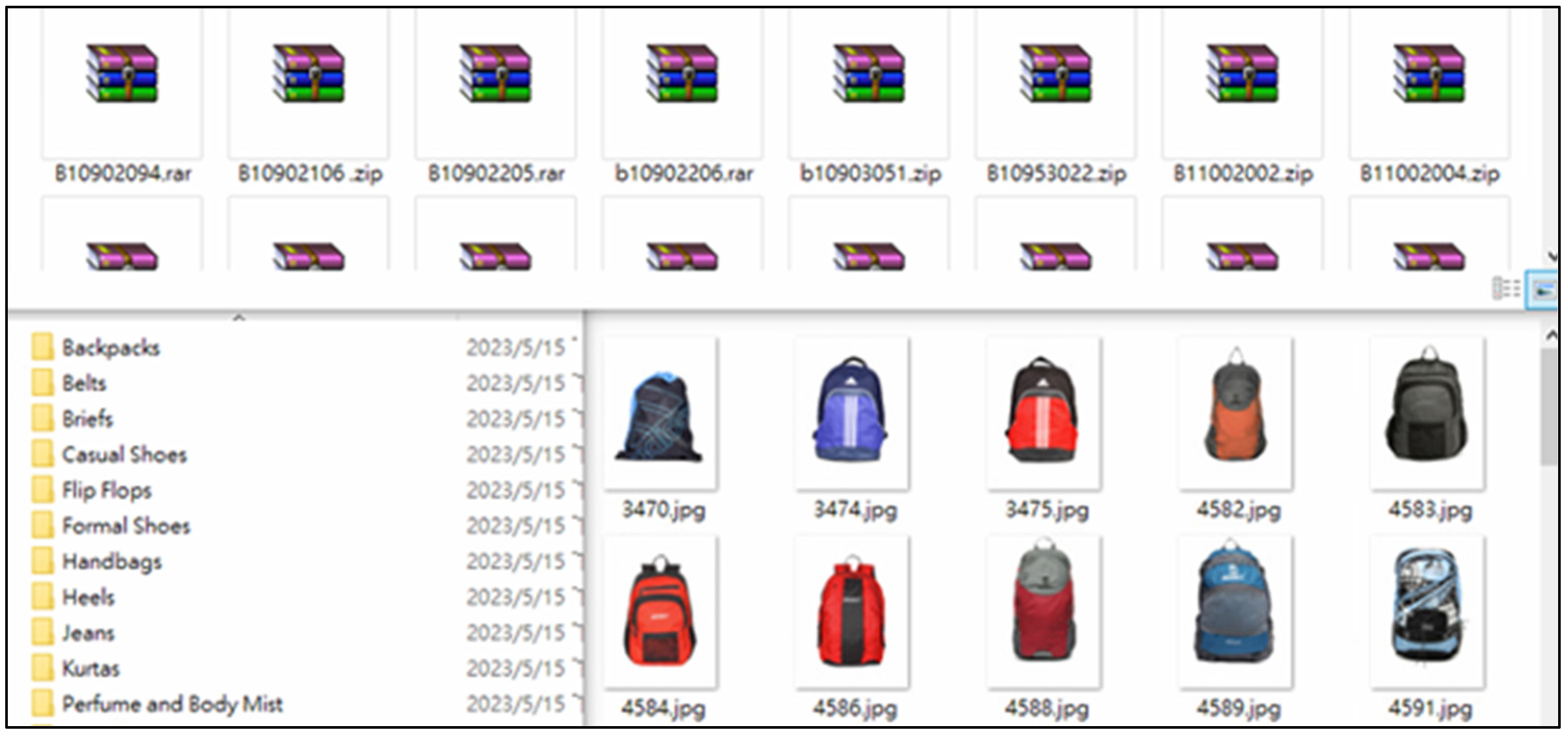

- The dataset comprises 20 categories of apparel specifically curated for this study.

- It is drawn from the “Fashion Product Images Dataset”, specifically the 108-category dataset it contains.

- The dataset is owned by Param Aggarwal.

- The data originate from Myntra (myntra.com), an Indian fashion e-commerce website.

- The dataset is available at https://www.kaggle.com/datasets/paramaggarwal/fashion-product-images-dataset (accessed on 20 March 2024).
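Selecting a fixed number of apparel categories from the dataset's metadata could look like the sketch below. It makes three assumptions:

- the metadata is a CSV with `id` and `articleType` columns, which we believe is the layout of the dataset's `styles.csv`;
- categories are kept by frequency, purely for illustration, whereas the paper only says its 20 categories were curated and does not say how;
- `select_top_categories` is a hypothetical helper name.

```python
import csv
import io
from collections import Counter
from typing import Dict, List, Tuple


def select_top_categories(rows: List[Dict[str, str]], n: int,
                          label_key: str = "articleType"
                          ) -> Tuple[List[str], List[Dict[str, str]]]:
    """Keep only rows whose label is among the n most frequent labels.

    Frequency-based selection is an illustrative assumption, not the
    paper's curation procedure.
    """
    counts = Counter(row[label_key] for row in rows)
    keep = [label for label, _ in counts.most_common(n)]
    keep_set = set(keep)
    return keep, [row for row in rows if row[label_key] in keep_set]


# Usage with a tiny in-memory stand-in for the metadata file.
sample = io.StringIO(
    "id,articleType\n"
    "1,Tshirts\n2,Tshirts\n3,Shirts\n4,Jeans\n5,Tshirts\n6,Shirts\n"
)
rows = list(csv.DictReader(sample))
categories, subset = select_top_categories(rows, n=2)
print(categories, len(subset))
# prints ['Tshirts', 'Shirts'] 5
```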

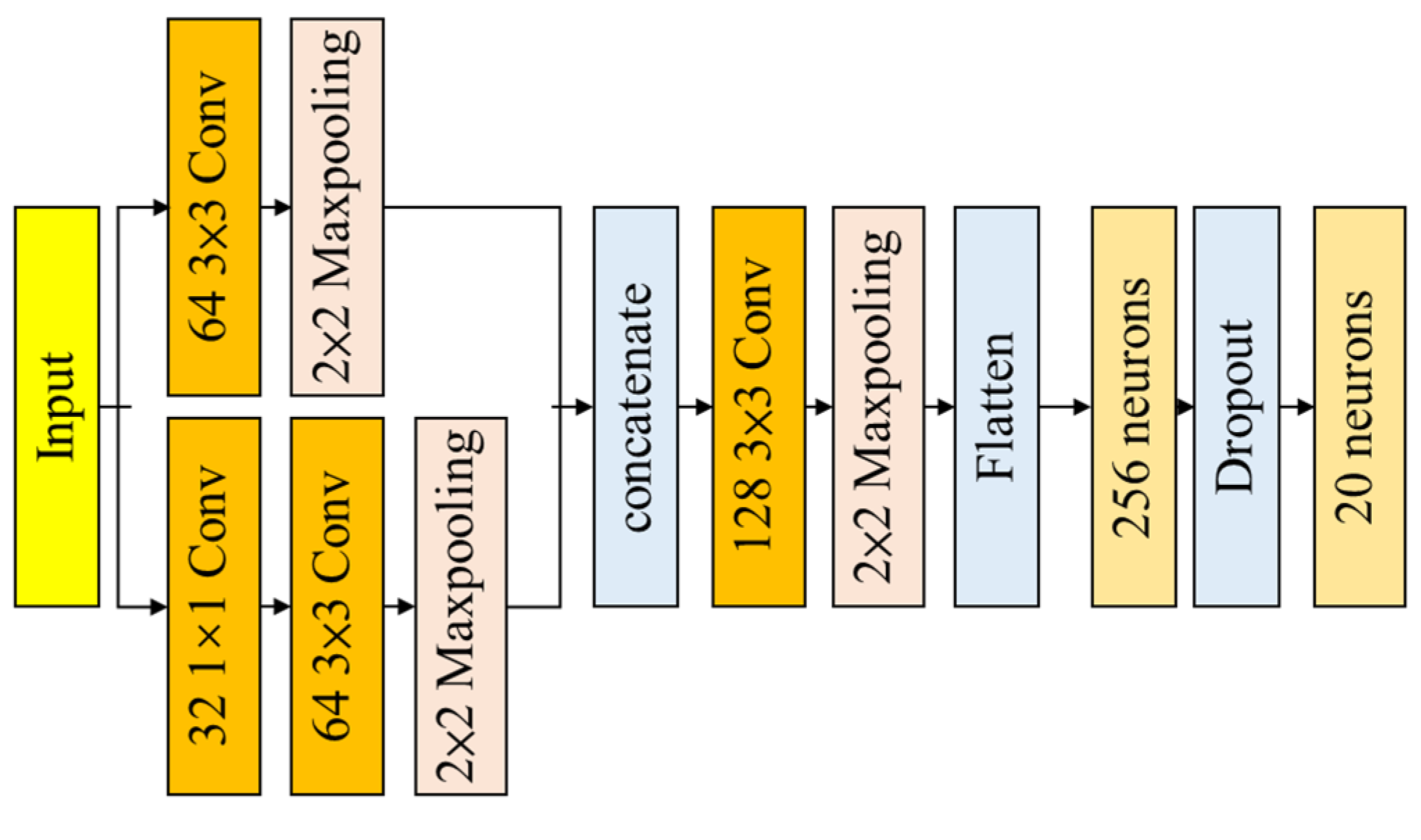

3.3. Model Design, Training, and Fine-Tuning

- Dataset construction: A dataset of 20 apparel categories is built and split 60:20:20 into training, validation, and test sets.

- Training of ResNet50, VGG19, YOLOv8n, and the self-built CNN: Each model is trained for 100 iterations with a batch size of 64, and validation is likewise run for 100 iterations with the same batch size.

- Testing: The test set is processed in batches of 64 images, and the final output is the accuracy.
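The 60:20:20 split could be done separately within each category, so that every class keeps the same proportions in each subset. This sketch makes several assumptions that the paper does not confirm:

- the split is stratified by category (the paper does not say whether it was);
- the seed is fixed;
- `stratified_split` is a hypothetical helper name.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split(labels: Sequence[Hashable],
                     ratios: Tuple[float, float, float] = (0.6, 0.2, 0.2),
                     seed: int = 42) -> Dict[str, List[int]]:
    """Return index lists for train/val/test, split separately per label."""
    by_label: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    splits: Dict[str, List[int]] = {"train": [], "val": [], "test": []}
    for indices in by_label.values():
        rng.shuffle(indices)
        n_train = int(len(indices) * ratios[0])
        n_val = int(len(indices) * ratios[1])
        splits["train"] += indices[:n_train]
        splits["val"] += indices[n_train:n_train + n_val]
        splits["test"] += indices[n_train + n_val:]  # remainder goes to test
    return splits


# Usage: two categories with 100 images each.
labels = ["shirt"] * 100 + ["jeans"] * 100
parts = stratified_split(labels)
print({name: len(idx) for name, idx in parts.items()})
# prints {'train': 120, 'val': 40, 'test': 40}
```

Any rounding remainder goes to the test set, so no image is dropped. The three subsets never overlap.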

3.4. Recommendation Engine

- For k = 1, an item with a high similarity score but no ratings is recommended with a 3% probability; otherwise (97%), the recommendation is based on the comprehensive score.

- For k = 5, items with high similarity scores but no ratings are prioritized with a 20% probability; the remaining 80% of recommendations are based on comprehensive scores.

- For k = 10, there is a 5% probability of including one item with a high similarity score but no rating, and a 90% probability of including two such items. In the remaining 5% of cases, all recommendations are based on comprehensive scores.
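One reading of these rules is a per-k probability distribution over how many unrated, high-similarity items are injected into the top-k list. The sketch below encodes that reading. The following are assumptions, not details confirmed by the paper:

- the table `EXPLORATION`;
- the helper names `sample_num_unrated` and `recommend`;
- placing injected items first;
- reading the 20% at k = 5 as exactly one injected item.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Assumed encoding of the rules: k -> [(number of unrated items, probability)].
EXPLORATION: Dict[int, List[Tuple[int, float]]] = {
    1: [(0, 0.97), (1, 0.03)],
    5: [(0, 0.80), (1, 0.20)],
    10: [(0, 0.05), (1, 0.05), (2, 0.90)],
}


def sample_num_unrated(k: int, rng: random.Random) -> int:
    """Draw how many unrated items to inject into a top-k list."""
    counts, weights = zip(*EXPLORATION[k])
    return rng.choices(counts, weights=weights)[0]


def recommend(k: int, rated_ranked: Sequence[str], unrated_ranked: Sequence[str],
              rng: random.Random) -> List[str]:
    """Mix top unrated-but-similar items with top items by comprehensive score.

    rated_ranked: rated items sorted by comprehensive score (best first).
    unrated_ranked: unrated items sorted by similarity score (best first).
    """
    n_unrated = min(sample_num_unrated(k, rng), len(unrated_ranked))
    picks = list(unrated_ranked[:n_unrated])
    remaining = [item for item in rated_ranked if item not in picks]
    return picks + remaining[:k - len(picks)]


# Usage: a seeded generator makes the random draw reproducible.
rng = random.Random(7)
rated = [f"r{i}" for i in range(20)]
unrated = [f"u{i}" for i in range(5)]
print(recommend(10, rated, unrated, rng))
```

This is a simple exploration mechanism. Unrated items never earn a comprehensive score unless they are shown occasionally, so the small injection probability gives them a chance to collect ratings.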

4. Experiments and Results

4.1. Experimental Device

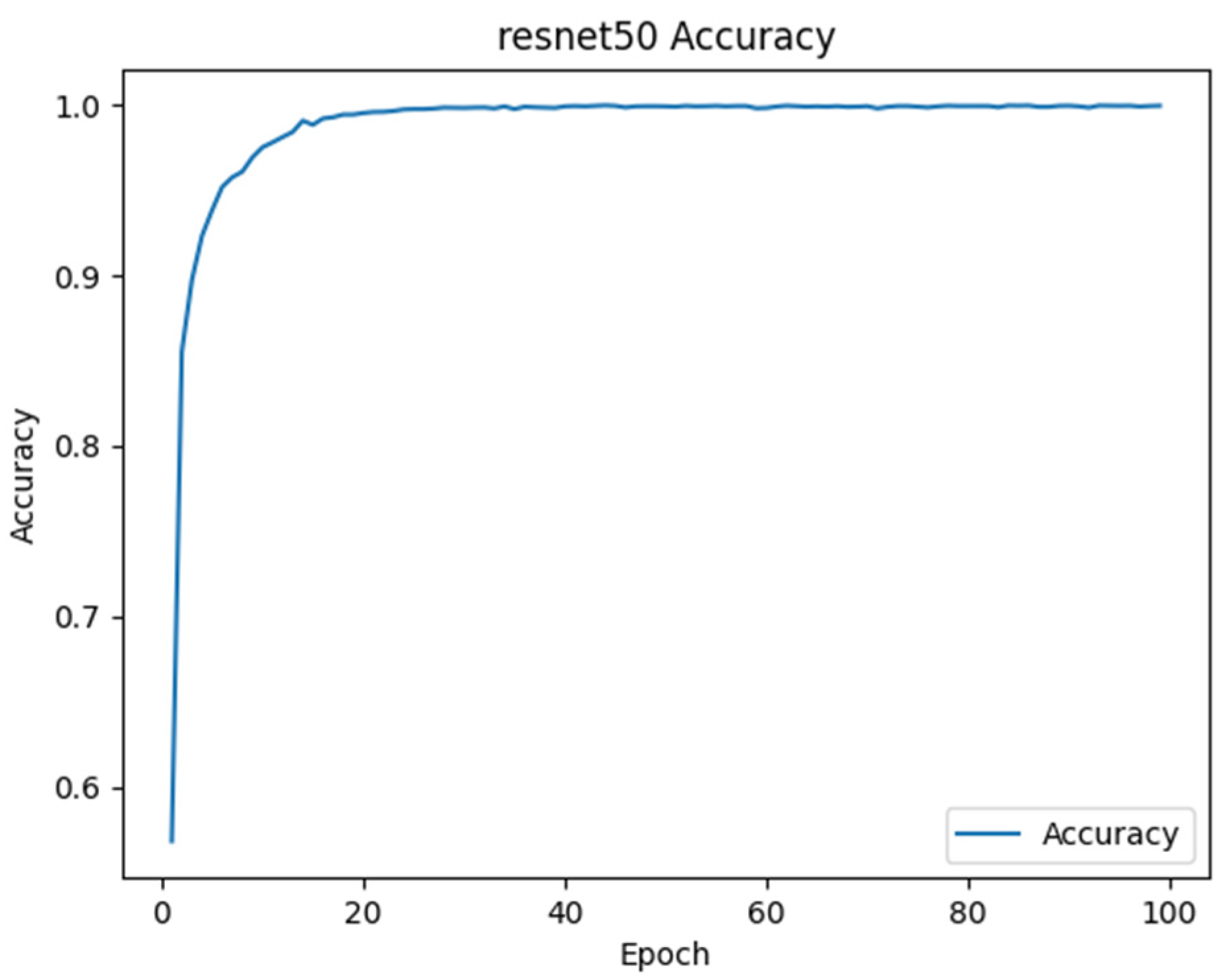

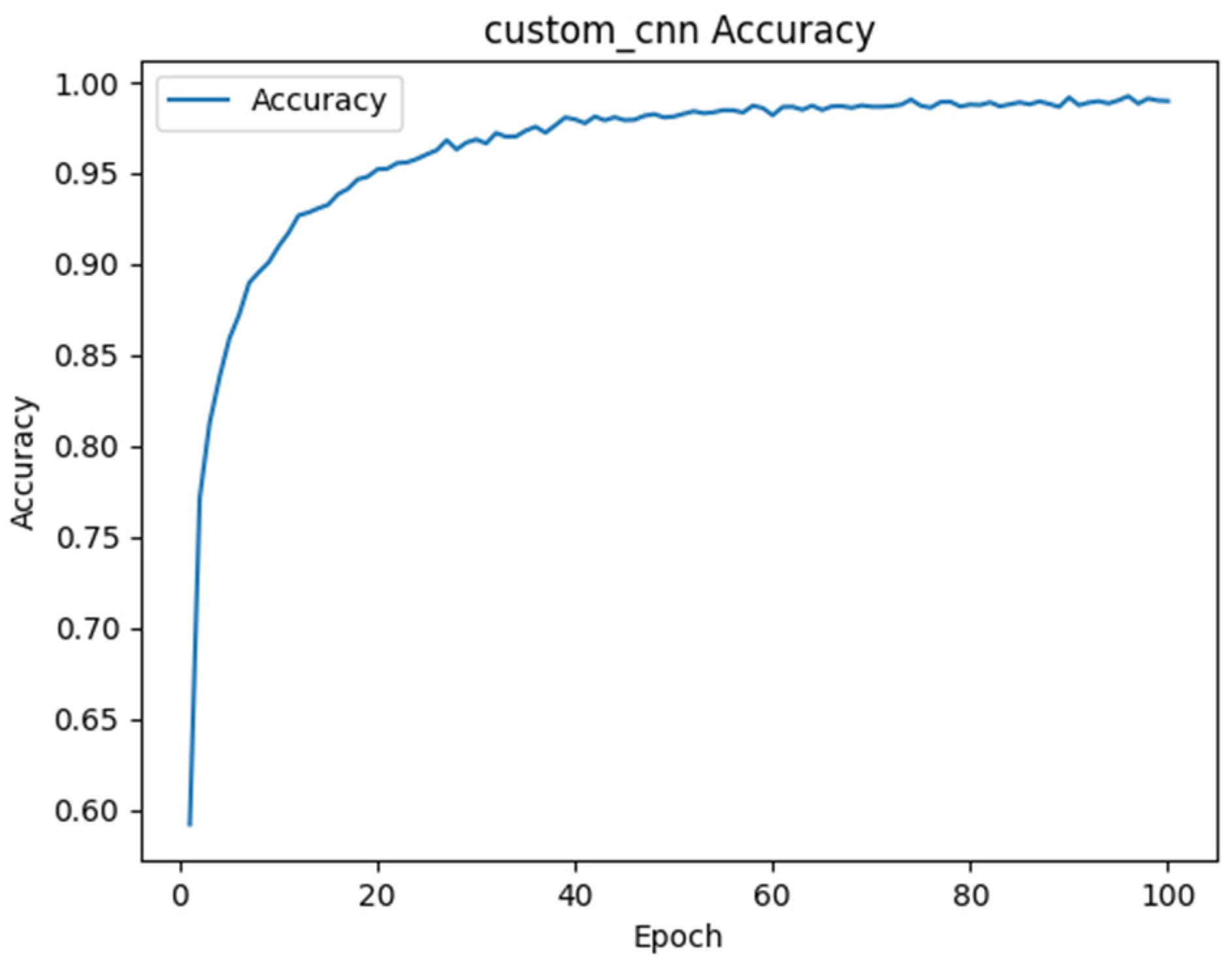

4.2. Model Training and Evaluation

4.3. Recommendation Evaluation

- Precision at k (P@k) is the proportion of the k recommended items that are relevant, where k is the total number of recommended items.

- Recall at k (R@k) is the proportion of all relevant items that appear among the k recommended items.

- F1-score is the harmonic mean of precision and recall: F1 = 2PR/(P + R).
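These definitions can be written directly as functions. The sketch assumes binary relevance, meaning an item is either relevant to the user or not. The names `precision_at_k`, `recall_at_k`, and `f1_score` are illustrative.

```python
from typing import Iterable, Sequence


def precision_at_k(recommended: Sequence[str], relevant: Iterable[str], k: int) -> float:
    """Fraction of the top-k recommendations that are relevant."""
    relevant_set = set(relevant)
    hits = sum(1 for item in recommended[:k] if item in relevant_set)
    return hits / k


def recall_at_k(recommended: Sequence[str], relevant: Iterable[str], k: int) -> float:
    """Fraction of all relevant items that appear in the top-k recommendations."""
    relevant_set = set(relevant)
    if not relevant_set:
        return 0.0
    hits = sum(1 for item in recommended[:k] if item in relevant_set)
    return hits / len(relevant_set)


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


recommended = ["a", "b", "c", "d"]
relevant = {"a", "c", "x"}
print(precision_at_k(recommended, relevant, 2), recall_at_k(recommended, relevant, 2))
# prints 0.5 0.3333333333333333

# Consistency check with the reported ResNet50 top-1 values (0.96541 and 0.09943).
print(round(f1_score(0.96541, 0.09943), 5))
# prints 0.18029
```

The last line reproduces the reported ResNet50 top-1 F1 of 0.18029 from the two corresponding table values. Precision falls and recall rises as k grows, which is the trade-off the F1-score balances.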

4.4. Computation Time

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | ResNet50 | VGG19 | YOLOv8n |
|---|---|---|---|
| Architecture | 50 layers | 19 layers | 225–230 layers |
| Convolutional filters | 3 × 3 | 3 × 3 | Varying sizes |
| Max pooling | 2 × 2 | 2 × 2 | Various sizes |
| Fully connected layers | Minimal (often 1) | Large number | Minimal (might have none) |
| Top-5 accuracy on ImageNet | 97.33% | 97.07% | Not available |
| Data requirements | High (tens of thousands of images) | High (tens of thousands of images) | High (tens of thousands of images) |
| Computational complexity | Medium | High | Low |
| Interpretability | Moderate | Limited | Moderate |

| Hardware | Specification |
|---|---|
| Processor | Intel® Core™ i7-8086K |
| Memory | 32 GB DDR4 |
| Graphics Card | NVIDIA GeForce GTX 1080 Ti (11 GB) |

| Software | Specification |
|---|---|
| Operating System | Windows 10 |
| Development Tools | Anaconda3 |
| Python Version | 3.10 |

| Training Model | Training Set Accuracy | Validation Set Accuracy | Test Set Accuracy |
|---|---|---|---|
| ResNet50 | 0.9996 | 0.921 | 0.9288 |
| VGG19 | 0.9987 | 0.9297 | 0.9258 |
| Self-built CNN | 0.9897 | 0.8792 | 0.8842 |
| YOLOv8n | 0.9517 | 0.922 | 0.9233 |

| Training Model | Precision@1 | Precision@5 | Precision@10 |
|---|---|---|---|
| ResNet50 | 0.96541 | 0.95925 | 0.81454 |
| VGG19 | 0.97 | 0.96175 | 0.81567 |
| Self-built CNN | 0.96833 | 0.96308 | 0.81625 |
| YOLOv8n | 0.97083 | 0.96242 | 0.81333 |

| Training Model | Recall@1 | Recall@5 | Recall@10 |
|---|---|---|---|
| ResNet50 | 0.09943 | 0.18272 | 0.61035 |
| VGG19 | 0.11136 | 0.21986 | 0.76828 |
| Self-built CNN | 0.12432 | 0.33533 | 0.99172 |
| YOLOv8n | 0.14396 | 0.50067 | 0.98776 |

| Training Model | F1@1 | F1@5 | F1@10 |
|---|---|---|---|
| ResNet50 | 0.18029 | 0.307 | 0.69782 |
| VGG19 | 0.19975 | 0.35791 | 0.79127 |
| Self-built CNN | 0.22035 | 0.49747 | 0.89547 |
| YOLOv8n | 0.25074 | 0.65869 | 0.8921 |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, C.-C.; Wei, C.-H.; Wang, Y.-H.; Yang, C.-H.T.; Hsiao, S. Recommender System for Apparel Products Based on Image Recognition Using Convolutional Neural Networks. Eng. Proc. 2025, 89, 38. https://doi.org/10.3390/engproc2025089038

Chang C-C, Wei C-H, Wang Y-H, Yang C-HT, Hsiao S. Recommender System for Apparel Products Based on Image Recognition Using Convolutional Neural Networks. Engineering Proceedings. 2025; 89(1):38. https://doi.org/10.3390/engproc2025089038

Chicago/Turabian Style: Chang, Chin-Chih, Chi-Hung Wei, Yen-Hsiang Wang, Chyuan-Huei Thomas Yang, and Sean Hsiao. 2025. "Recommender System for Apparel Products Based on Image Recognition Using Convolutional Neural Networks" Engineering Proceedings 89, no. 1: 38. https://doi.org/10.3390/engproc2025089038

APA Style: Chang, C.-C., Wei, C.-H., Wang, Y.-H., Yang, C.-H. T., & Hsiao, S. (2025). Recommender System for Apparel Products Based on Image Recognition Using Convolutional Neural Networks. Engineering Proceedings, 89(1), 38. https://doi.org/10.3390/engproc2025089038