Enhancing Fabric Detection and Classification Using YOLOv5 Models

Abstract

1. Introduction

2. Related Works

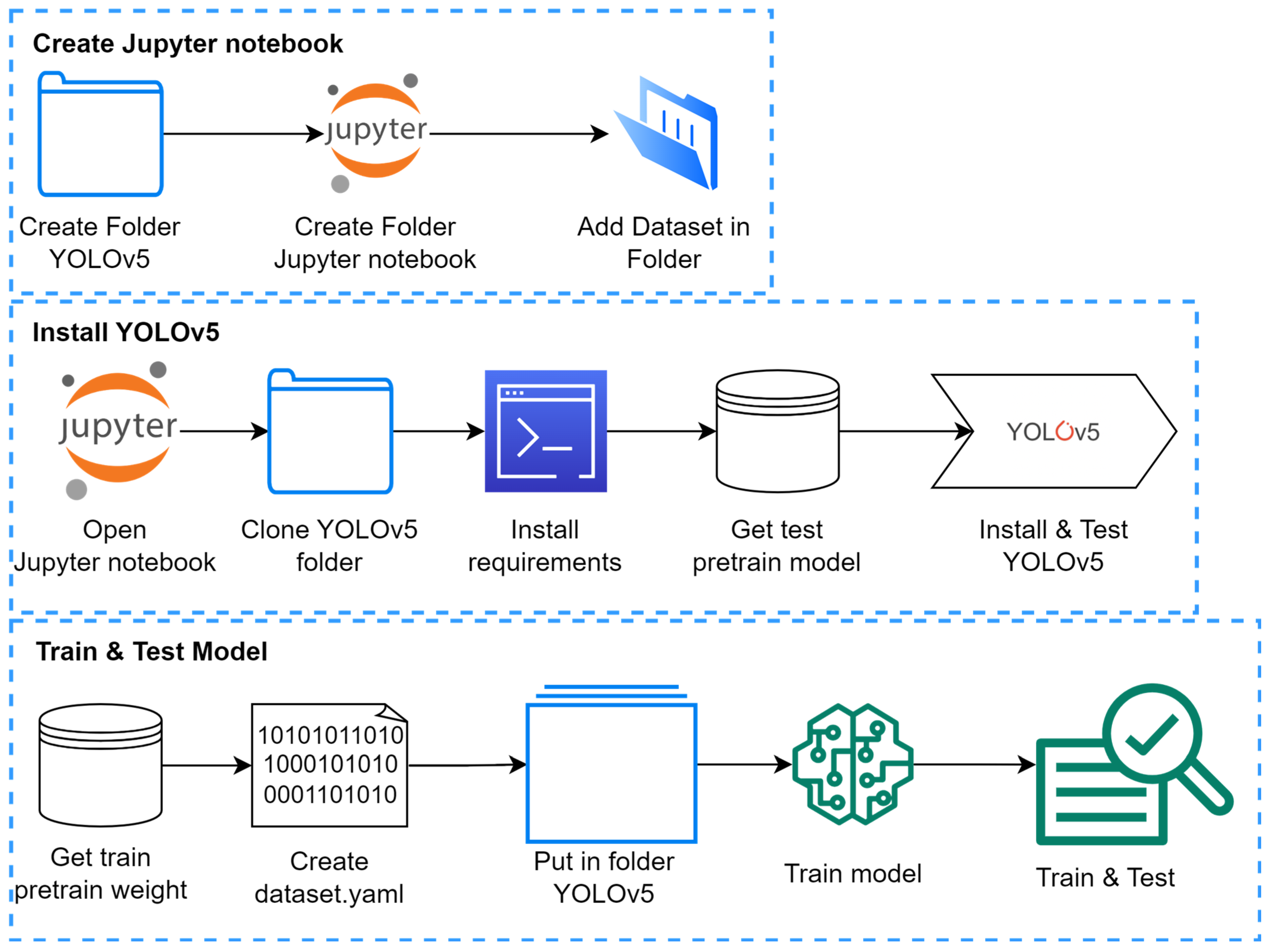

3. Methodology

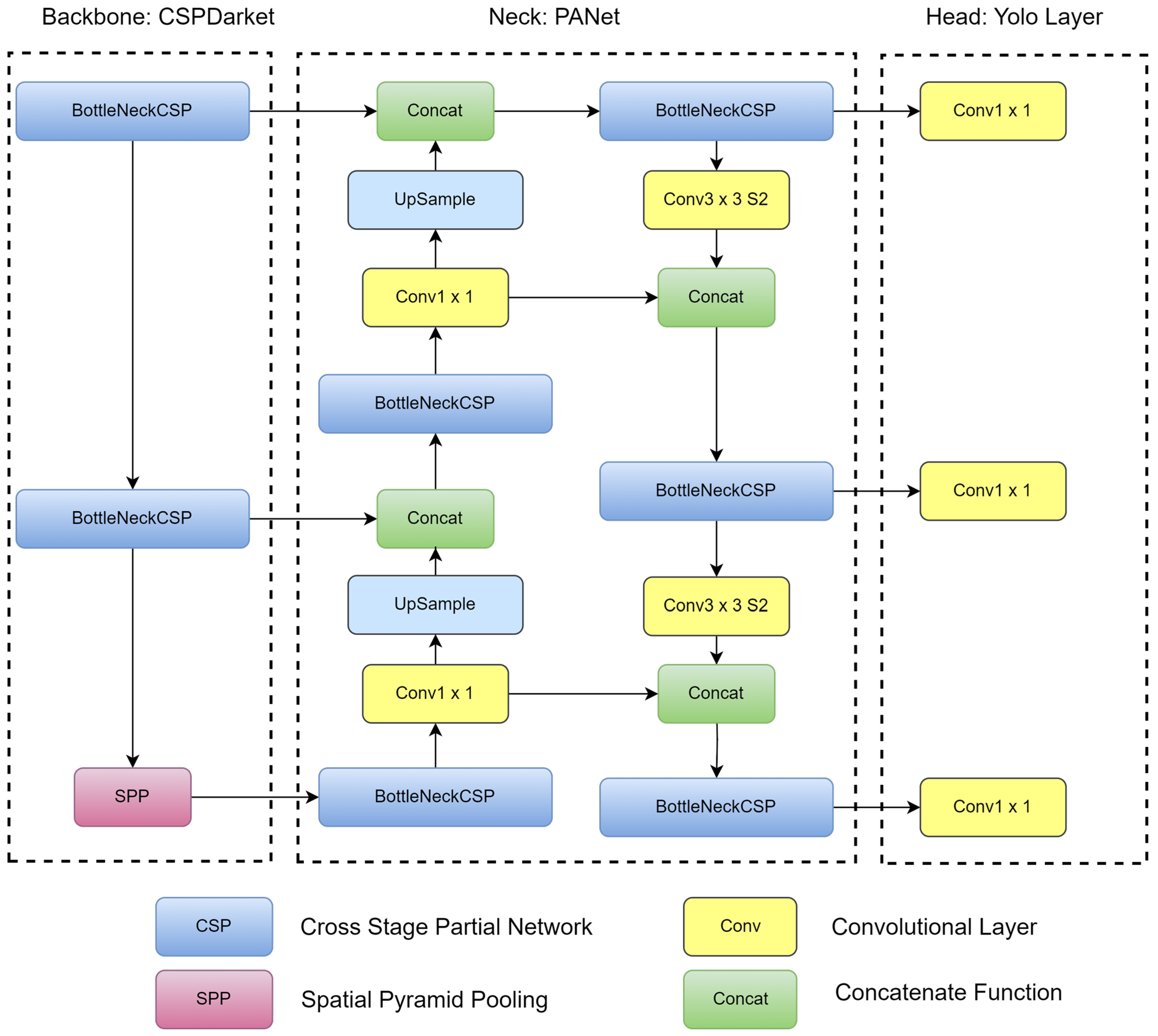

3.1. YOLO Algorithms

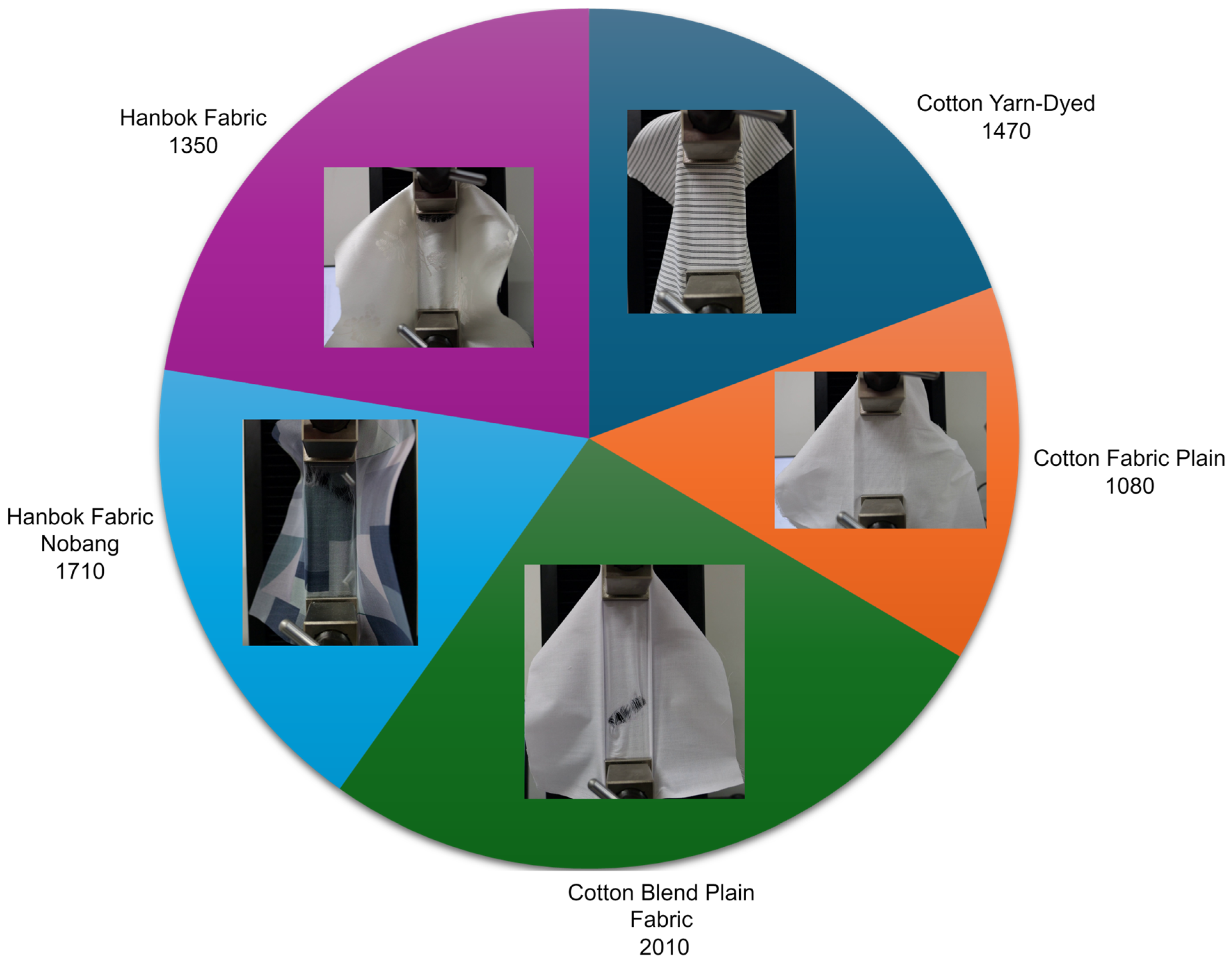

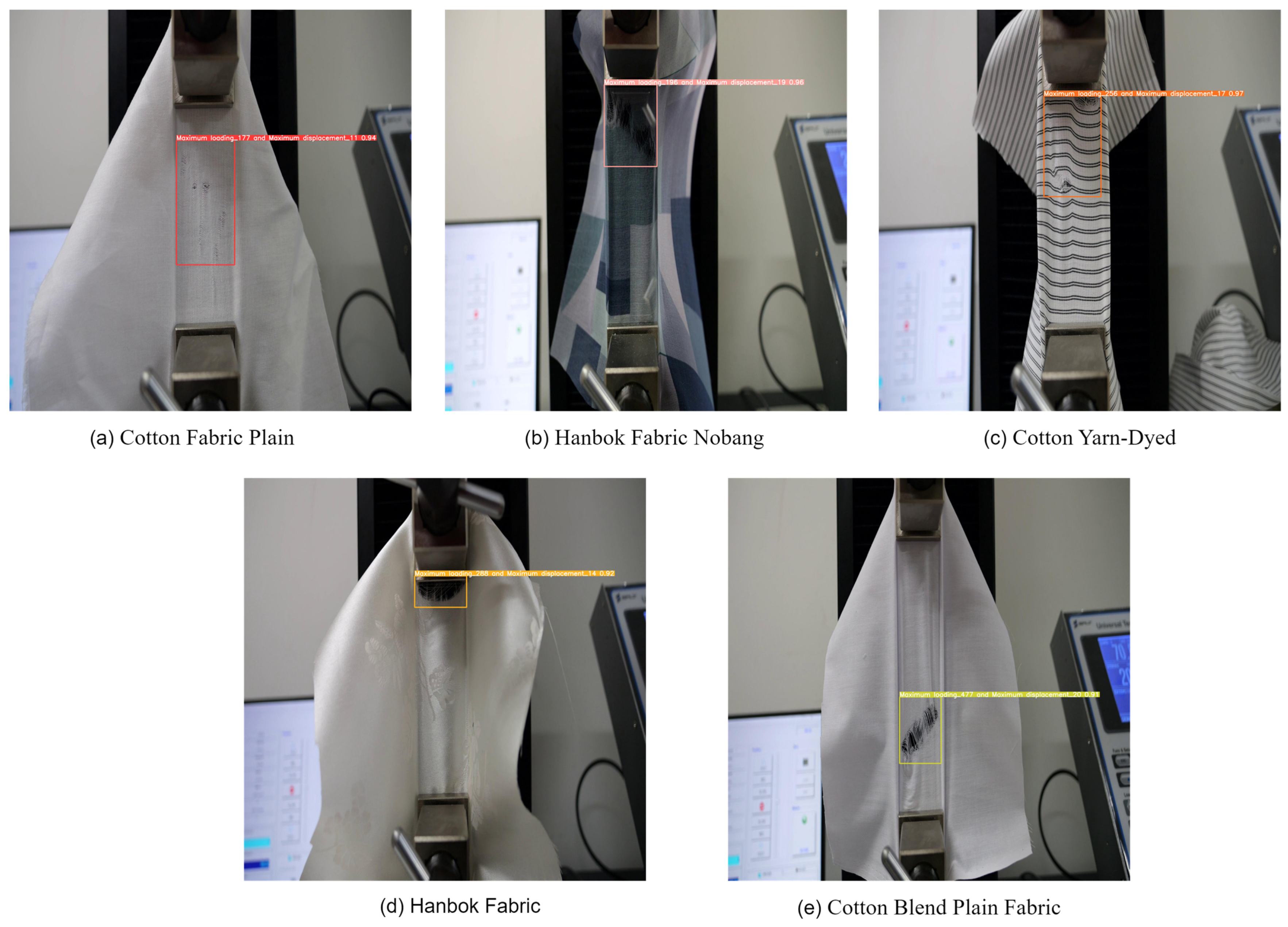

3.2. Dataset

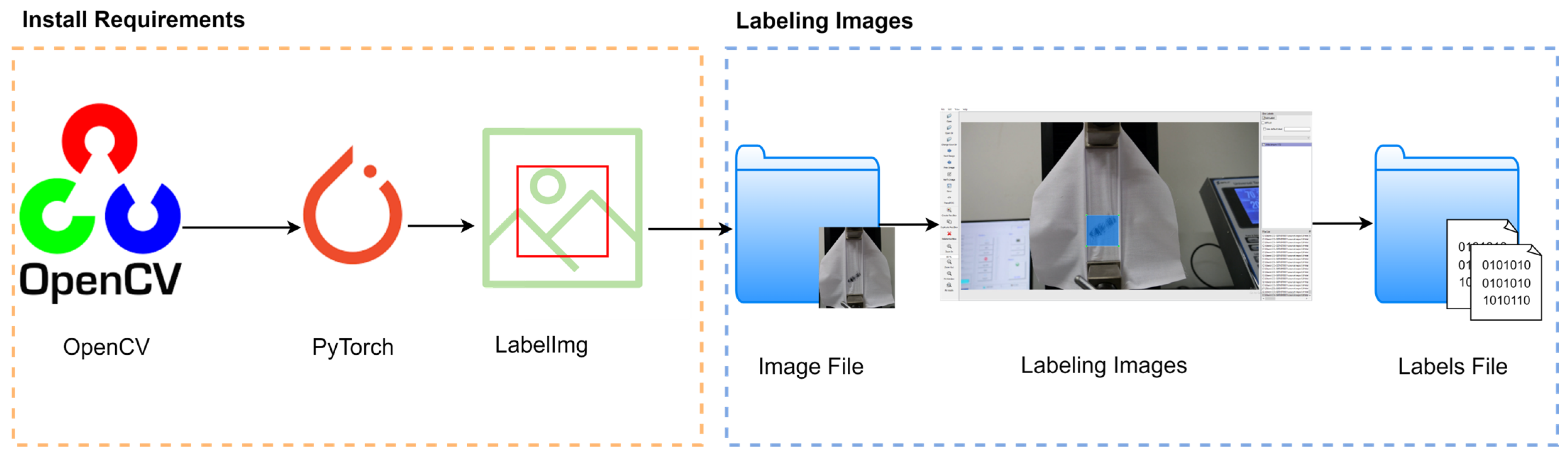

3.3. Image Labeling

4. Result and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cheng, Z.Q.; Wu, X.; Liu, Y.; Hua, X.S. Video2shop: Exact matching clothes in videos to online shopping images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4048–4056.
- Latha, R.S.; Sreekanth, G.R.; Rajadevi, R.; Nivetha, S.K.; Kumar, K.A.; Akash, V.; Bhuvanesh, S.; Anbarasu, P. Fruits and vegetables recognition using YOLO. In Proceedings of the International Conference on Computer Communication and Informatics (ICCCI), Hammamet, Tunisia, 25–27 January 2022; pp. 1–6.
- Zhou, M.; Chen, G.H.; Ferreira, P.; Smith, M.D. Consumer behavior in the online classroom: Using video analytics and machine learning to understand the consumption of video courseware. J. Mark. Res. 2021, 58, 1079–1100.
- Yin, W.; Xu, B. Effect of online shopping experience on customer loyalty in apparel business-to-consumer ecommerce. Text. Res. J. 2021, 91, 2882–2895.
- Soegoto, E.S.; Marbun, M.A.S.; Dicky, F. Building the Design of E-Commerce. IOP Conf. Ser. Mater. Sci. Eng. 2018, 407, 012021.
- Boardman, R.; Chrimes, C. E-commerce is king: Creating effective fashion retail website designs. In Pioneering New Perspectives in the Fashion Industry: Disruption, Diversity and Sustainable Innovation; Emerald Publishing Limited: Bingley, UK, 2023; pp. 245–254.
- Mao, M.; Va, H.; Lee, A.; Hong, M. Supervised Video Cloth Simulation: Exploring Softness and Stiffness Variations on Fabric Types Using Deep Learning. Appl. Sci. 2023, 13, 9505.
- Song, Q.; Li, S.; Bai, Q.; Yang, J.; Zhang, X.; Li, Z.; Duan, Z. Object detection method for grasping robot based on improved YOLOv5. Micromachines 2021, 12, 1273.
- Jung, H.K.; Choi, G.S. Improved YOLOv5: Efficient object detection using drone images under various conditions. Appl. Sci. 2022, 12, 7255.
- Benjumea, A.; Teeti, I.; Cuzzolin, F.; Bradley, A. YOLO-Z: Improving small object detection in YOLOv5 for autonomous vehicles. arXiv 2021, arXiv:2112.11798.
- Gong, H.; Mu, T.; Li, Q.; Dai, H.; Li, C.; He, Z.; Wang, W.; Han, F.; Tuniyazi, A.; Li, H.; et al. Swin-transformer-enabled YOLOv5 with attention mechanism for small object detection on satellite images. Remote Sens. 2022, 14, 2861.

| Fabric Dataset | Training | Validation | Testing |
|---|---|---|---|
| Plain Cotton Fabric | 756 | 216 | 108 |
| Hanbok Fabric | 945 | 270 | 135 |
| Dyed Cotton Yarn | 1029 | 294 | 147 |
| Hanbok Fabric, Nobang | 1197 | 342 | 171 |
| Plain Cotton Blend Fabric | 1470 | 402 | 201 |
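
The per-class split proportions implied by the dataset table can be checked with a few lines of arithmetic. The sketch below is only an illustration: the counts are copied from the table, while the names `splits` and `split_ratios` are hypothetical helpers introduced here, not part of the authors' pipeline.

```python
# Image counts per class, copied from the dataset table: (training, validation, testing).
splits = {
    "Plain Cotton Fabric": (756, 216, 108),
    "Hanbok Fabric": (945, 270, 135),
    "Dyed Cotton Yarn": (1029, 294, 147),
    "Hanbok Fabric, Nobang": (1197, 342, 171),
    "Plain Cotton Blend Fabric": (1470, 402, 201),
}


def split_ratios(train, val, test):
    """Return the (train, val, test) shares of one class as percentages, rounded to 0.1."""
    total = train + val + test
    return tuple(round(100 * n / total, 1) for n in (train, val, test))


# Hypothetical summary structures for illustration only.
ratios = {name: split_ratios(*counts) for name, counts in splits.items()}
total_images = sum(sum(counts) for counts in splits.values())

for name, (tr, va, te) in ratios.items():
    print(f"{name}: {tr}% / {va}% / {te}%")
print(f"Total images: {total_images}")
```

With the counts as listed, the first four classes work out to exactly 70% / 20% / 10%, while Plain Cotton Blend Fabric comes to roughly 70.9% / 19.4% / 9.7%.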

| Model Name | mAP@0.5 (%) | mAP@0.95 (%) | CPU Time (s) | GPU Time (s) |
|---|---|---|---|---|
| YOLOv5s | 60.07 | 81.08 | 1805 | 665 |
| YOLOv5n | 45.07 | 81.02 | 3364 | 665 |
| YOLOv5m | 50.07 | 81.02 | 1.1169 | 784 |
| YOLOv5l | 50.08 | 81.02 | 1.8475 | 906 |
| YOLOv5x | 60.33 | 81.02 | 3.8888 | 1200 |
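
One way to read the accuracy and GPU-time columns of the results table together is to look for models that no other model beats on both axes. The sketch below is a minimal illustration under stated assumptions: the values are copied from the table, the `results` dictionary and the `pareto_front` helper are hypothetical names introduced here, and the comparison is not taken from the authors' analysis. The CPU column is left out because its entries are not directly comparable as listed.

```python
# mAP@0.5 (%) and GPU time (s) per model, copied from the results table.
results = {
    "YOLOv5s": {"map50": 60.07, "gpu_s": 665},
    "YOLOv5n": {"map50": 45.07, "gpu_s": 665},
    "YOLOv5m": {"map50": 50.07, "gpu_s": 784},
    "YOLOv5l": {"map50": 50.08, "gpu_s": 906},
    "YOLOv5x": {"map50": 60.33, "gpu_s": 1200},
}


def pareto_front(table):
    """Return models that no other model matches or beats on both mAP@0.5 and GPU time."""
    front = []
    for name, row in table.items():
        dominated = any(
            other["map50"] >= row["map50"]
            and other["gpu_s"] <= row["gpu_s"]
            and (other["map50"] > row["map50"] or other["gpu_s"] < row["gpu_s"])
            for other_name, other in table.items()
            if other_name != name
        )
        if not dominated:
            front.append(name)
    return front


best_map = max(results, key=lambda m: results[m]["map50"])
front = pareto_front(results)
print("Highest mAP@0.5:", best_map)
print("Not dominated on (mAP@0.5, GPU time):", front)
```

On the listed values, YOLOv5x has the highest mAP@0.5, and only YOLOv5s and YOLOv5x remain on the front: YOLOv5s reaches 60.07% at 665 s, while YOLOv5x gains 0.26 percentage points at 1200 s.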

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mao, M.; Ma, J.; Lee, A.; Hong, M. Enhancing Fabric Detection and Classification Using YOLOv5 Models. Eng. Proc. 2025, 89, 33. https://doi.org/10.3390/engproc2025089033
