Comparative Evaluation of Images of Alveolar Bone Loss Using Panoramic Images and Artificial Intelligence

Abstract

1. Introduction

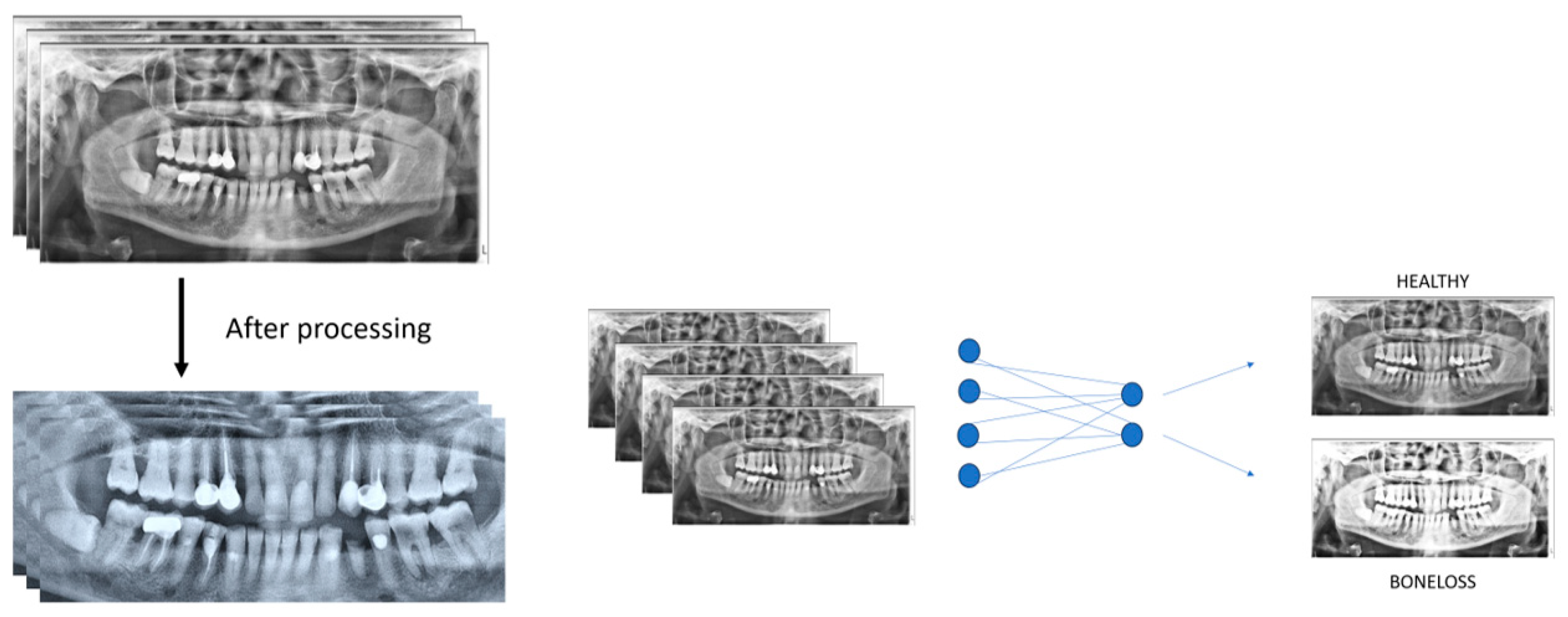

2. Materials and Methods

2.1. Patient Selection and Imaging

2.2. Evaluation of Panoramic Radiography Images

2.3. Statistical Analyses

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | 92 | 11 |
| Predicted Negative | 8 | 89 |

| Parameter | Value |
|---|---|
| Sensitivity | 0.8327 |
| Specificity | 0.8683 |
| Precision | 0.8918 |
| Accuracy | 0.8927 |
| F1 score | 0.8615 |
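The parameters in the table above follow the standard definitions for a binary classifier. As a minimal sketch, the code below applies those definitions to the four counts in the confusion matrix. It assumes that the matrix columns are the ground-truth classes, so that 92 are true positives, 11 false positives, 8 false negatives, and 89 true negatives. The class and method names are illustrative and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts for a binary classifier (illustrative helper, not from the study)."""
    tp: int  # predicted positive, actually positive
    fp: int  # predicted positive, actually negative
    fn: int  # predicted negative, actually positive
    tn: int  # predicted negative, actually negative

    def sensitivity(self) -> float:
        # Share of actual positives that were detected (recall).
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Share of actual negatives that were correctly rejected.
        return self.tn / (self.tn + self.fp)

    def precision(self) -> float:
        # Share of positive predictions that were correct.
        return self.tp / (self.tp + self.fp)

    def accuracy(self) -> float:
        # Share of all predictions that were correct.
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.tn) / total

    def f1(self) -> float:
        # Harmonic mean of precision and sensitivity.
        p, r = self.precision(), self.sensitivity()
        return 2 * p * r / (p + r)


# Assumption: the table columns are ground-truth classes.
cm = ConfusionMatrix(tp=92, fp=11, fn=8, tn=89)

metrics = {
    "Sensitivity": cm.sensitivity(),
    "Specificity": cm.specificity(),
    "Precision": cm.precision(),
    "Accuracy": cm.accuracy(),
    "F1 score": cm.f1(),
}
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Under that assumption the counts give a sensitivity of 0.9200, specificity of 0.8900, precision of 0.8932, accuracy of 0.9050, and F1 score of 0.9064. These differ from the reported values (for example, sensitivity 0.8327), and the text available here does not state how the reported values were derived. The sketch therefore illustrates the definitions and does not reproduce the reported results.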

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mathur, A.; Pawar, S.; Kamma, P.K.G.; Obulareddy, V.T.; Dash, K.S.; Meto, A.; Mehta, V. Comparative Evaluation of Images of Alveolar Bone Loss Using Panoramic Images and Artificial Intelligence. Eng. Proc. 2025, 87, 80. https://doi.org/10.3390/engproc2025087080
