Abstract

This study explores the application of advanced Recurrent Neural Network (RNN) architectures, specifically Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), for classifying chicken egg fertility based on embryonic development detected in egg images. Traditional methods, such as candling, are labor-intensive and often inaccurate, making them unsuitable for large-scale poultry operations. By leveraging LSTM and GRU models, this research aims to automate egg fertility classification and improve its accuracy, thereby contributing to agricultural automation. A dataset of 240 high-resolution egg images was used, with each image resized to 256 × 256 pixels for processing efficiency. The LSTM and GRU models were trained to distinguish fertile from infertile eggs by treating the pixel rows of each image as sequential data. The LSTM model achieved a validation accuracy of 89.58%, substantially higher than the GRU model (66.67%). Compared to Decision Tree (85%), Logistic Regression (88.3%), SVM (84.57%), K-means (82.9%), and R-CNN (70%), the LSTM model achieved the highest classification accuracy. Unlike classical machine learning approaches that rely on handcrafted features and predefined decision rules, LSTM learns complex sequential dependencies within images, improving fertility classification accuracy in real-world poultry farming applications. In contrast, GRU models, while more computationally efficient, may struggle with generalization under constrained data conditions.
This study underscores the potential of advanced RNNs in enhancing the efficiency and accuracy of automated farming systems, paving the way for future research to further optimize these models for real-world agricultural applications.

1. Introduction

Classifying chicken egg fertility is crucial for the poultry industry [1] as it directly impacts production efficiency and profitability [2]. Traditional methods like candling, which illuminates eggs to visualize embryos, require skilled personnel, are not always accurate, and are hard to scale to large operations because they are manual [3,4]. Recent advancements in machine learning [5,6], particularly image classification with Recurrent Neural Networks (RNNs) [7], offer promising solutions for automating and enhancing this process. Classical machine learning approaches [8] such as Decision Tree and Logistic Regression rely on manually extracted features, limiting their adaptability to variations in image quality and lighting conditions. While models like SVM and K-means clustering improve upon purely statistical classifiers, they still struggle to capture complex spatial relationships. LSTM and GRU, by contrast, learn long-range dependencies within images, making them more practical for real-time, automated fertility classification in poultry farming. RNNs, especially Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs), are adept at processing the sequential and spatial data [9] critical to identifying embryonic development in egg images [10,11].

This study employs LSTM and GRU to address the shortcomings of traditional and manual egg fertility assessments [12,13]. It leverages their capability to handle complex data dependencies without the issues of simpler RNNs, such as vanishing gradients. By treating each row of pixels as one step in a sequence, these models aim to distinguish fertile from infertile eggs.

The innovation of this research lies in applying LSTM and GRU to the field of embryology within poultry science—a novel approach given the limited exploration of these advanced neural networks in such a context. The objectives are to develop, fine-tune, and compare these models on a dataset of 240 resized egg images to determine which model best balances accuracy with computational efficiency. Ultimately, this study seeks to enhance poultry farming technology and contribute to agricultural automation by showcasing the practical application of sophisticated neural networks.

2. Background and Related Work

2.1. Technological Background

Recurrent Neural Networks (RNNs) are a class of neural networks designed to handle sequential data [9] by introducing loops that allow information to persist within the network [14]. This characteristic makes RNNs well suited to tasks involving time series or other sequential data, such as language modeling, speech recognition, and sequential image analysis, which is the basis of this study on egg fertility classification [15]. A specific type of RNN, Long Short-Term Memory (LSTM), was developed to mitigate the vanishing gradient problem that can limit the ability of traditional RNNs to learn long-range dependencies within sequences [16]. An LSTM unit has three gates: an input gate, a forget gate, and an output gate [17]. These gates collectively decide what information to discard and what to retain, which helps maintain the flow of relevant information through the network [18].
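To make the gating described above concrete, the following is a minimal NumPy sketch of a single LSTM time step in its standard textbook form. It is not the implementation used in this study; the function name `lstm_step`, the stacked weight layout, and the toy dimensions are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step (standard formulation, illustrative only).

    x: input (d,), h_prev / c_prev: previous hidden and cell state (n,),
    W: (4n, d), U: (4n, n), b: (4n,) hold the four blocks stacked row-wise.
    """
    n = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:n])            # input gate: how much new content to write
    f = sigmoid(z[n:2 * n])        # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * n:3 * n])    # output gate: how much of the cell to expose
    g = np.tanh(z[3 * n:4 * n])    # candidate cell content
    c = f * c_prev + i * g         # updated cell state
    h = o * np.tanh(c)             # updated hidden state
    return h, c

# Toy run: 5 time steps, 6 input features, 4 hidden units (hypothetical sizes).
rng = np.random.default_rng(0)
d, n = 6, 4
W = rng.normal(scale=0.1, size=(4 * n, d))
U = rng.normal(scale=0.1, size=(4 * n, n))
b = np.zeros(4 * n)
h, c = np.zeros(n), np.zeros(n)
for x in rng.normal(size=(5, d)):
    h, c = lstm_step(x, h, c, W, U, b)
```

The additive update of the cell state `c` is what lets gradients flow across many time steps, which is the mechanism behind the mitigation of vanishing gradients mentioned above.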

Gated Recurrent Units (GRUs) simplify the LSTM architecture by combining the input and forget gates into a single “update gate” and merging the cell and hidden states [19]. This simplified structure reduces computational complexity while achieving performance comparable to LSTMs in many applications. In situations where computational efficiency is paramount, GRUs offer a compelling alternative to LSTM, particularly in cases with limited data availability or when real-time performance is needed [20].
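For comparison, a single GRU time step can be sketched as follows. This is one common textbook convention (some references swap the roles of `z` and `1 - z`), and the function name `gru_step`, the weight layout, and the toy sizes are illustrative assumptions rather than details from this study.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, W, U, b):
    """One GRU time step (illustrative only).

    x: input (d,), h_prev: previous hidden state (n,),
    W: (3n, d), U: (3n, n), b: (3n,) hold update, reset, candidate blocks.
    """
    n = h_prev.shape[0]
    z = sigmoid(W[:n] @ x + U[:n] @ h_prev + b[:n])                   # update gate
    r = sigmoid(W[n:2 * n] @ x + U[n:2 * n] @ h_prev + b[n:2 * n])    # reset gate
    h_cand = np.tanh(W[2 * n:] @ x + U[2 * n:] @ (r * h_prev) + b[2 * n:])
    # A single state vector is interpolated between old and candidate values;
    # there is no separate cell state as in the LSTM.
    return (1.0 - z) * h_prev + z * h_cand

# Toy run with hypothetical sizes.
rng = np.random.default_rng(0)
d, n = 6, 4
W = rng.normal(scale=0.1, size=(3 * n, d))
U = rng.normal(scale=0.1, size=(3 * n, n))
b = np.zeros(3 * n)
h = np.zeros(n)
for x in rng.normal(size=(5, d)):
    h = gru_step(x, h, W, U, b)
```

The GRU carries three weight blocks instead of the LSTM's four and keeps one state vector instead of two, which is where its lower computational cost comes from.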

This study extends the application of LSTM and GRU to embryology within poultry science, a relatively unexplored domain for advanced RNN architectures. Unlike conventional egg fertility assessment methods, such as candling, which are labor-intensive and prone to inaccuracies, this research investigates the potential of LSTM and GRU for automating the classification of chicken egg fertility based on sequential image data analysis.

2.2. Review of Related Work

Previous studies have explored various image processing techniques for egg fertility assessment [2], with methods ranging from traditional image thresholding to more complex machine learning algorithms [3,21,22]. However, these studies primarily focus on external characteristics and rarely analyze internal structural patterns critical for embryonic development detection. Techniques like support vector machines (SVMs) and Decision Trees have been employed [3,23]. However, they often require hand-crafted features and may not capture the deeper patterns within the data that deep learning models can efficiently identify.

Deep learning, particularly Convolutional Neural Networks (CNNs), has shown promise in many domains of agricultural image processing. However, the use of RNNs in agriculture remains limited, particularly for tasks like egg fertility detection. Some studies have explored RNNs in crop yield prediction and plant disease detection using sensor data or temporal satellite images [24]. Studies utilizing RNNs to monitor animal behavior patterns [25] or predict livestock outcomes [26] further validate the applicability of RNNs in varied contexts within agriculture [27]. These applications demonstrate the suitability of RNNs for agricultural problems in which sequential patterns in data play a critical role. However, few applications address sequential image data for poultry fertility classification [28].

This gap in the literature signifies an opportunity for innovative research in applying LSTM and GRU models for egg fertility classification, a crucial task in the poultry industry. This research aims to address this gap by comparing the effectiveness of these advanced RNN architectures in classifying chicken egg fertility based on embryonic development detected in egg images.

3. Method

3.1. Dataset and Image Preprocessing

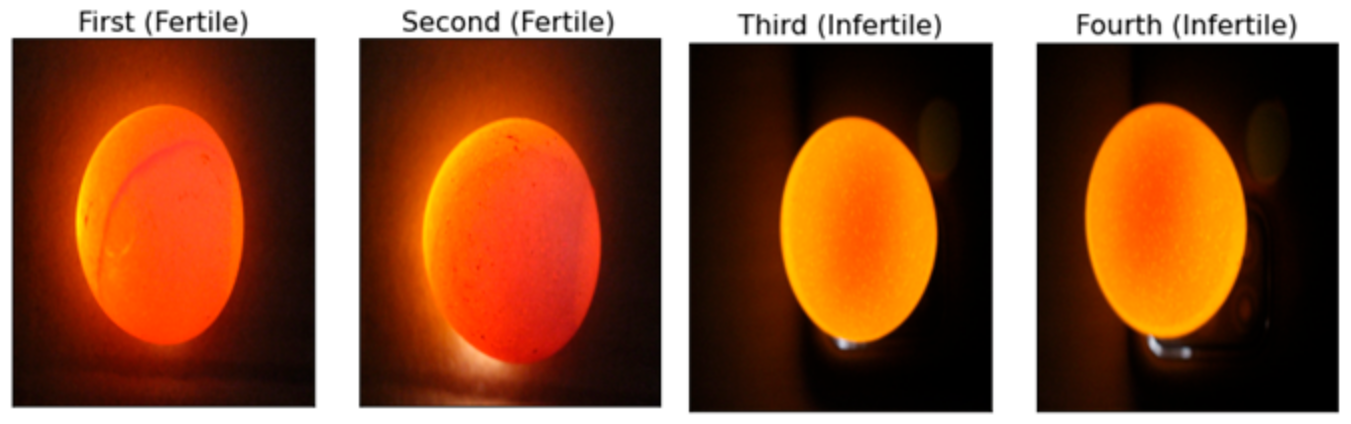

The research uses a dataset of 240 high-resolution images of chicken eggs, divided into two classes: fertile and infertile [3] (samples are shown in Figure 1). The labels were assigned through expert assessment to ensure accuracy and reliability for model training. The images were captured using the candling process [3], with a 5000-lumen LED flashlight and a 5 MP smartphone camera in a dark room to control lighting. The camera was positioned 20 cm above the egg, with automatic settings (focus, exposure, resolution) to ensure consistency. Further details on the camera setup could clarify potential biases in data collection. The dataset is balanced between the two classes, which helps limit bias in model training and performance evaluation.

Figure 1.

Samples from the chicken egg dataset, illustrating fertile and infertile eggs.

All images in the dataset were resized from their original dimensions of 665 × 545 pixels [1] to a uniform size of 256 × 256 pixels. This resizing is essential for standardizing input data and enhancing the neural networks’ processing efficiency. It also helps reduce computational demand, thus speeding up the training process. The selected dimension (256 × 256) strikes a balance between maintaining sufficient image detail for detecting fertility features and optimizing computational resources.
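The resizing step can be sketched as below. The interpolation method is not stated in the text, so nearest-neighbour sampling is used here purely as a simple stand-in; the function name `resize_nearest` and the reading of 665 × 545 as width × height are likewise assumptions.

```python
import numpy as np

def resize_nearest(img, out_h=256, out_w=256):
    """Nearest-neighbour resize of an (H, W, C) array (illustrative stand-in)."""
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h   # source row for each output row
    cols = np.arange(out_w) * in_w // out_w   # source column for each output column
    return img[rows[:, None], cols]

# Placeholder image with the original dimensions (assumed 665 wide, 545 high).
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(545, 665, 3), dtype=np.uint8)
resized = resize_nearest(original)
```

Because the source images are not square, resizing directly to 256 × 256 changes the aspect ratio slightly; whether the original pipeline cropped or padded first is not stated.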

3.2. Model Architectures

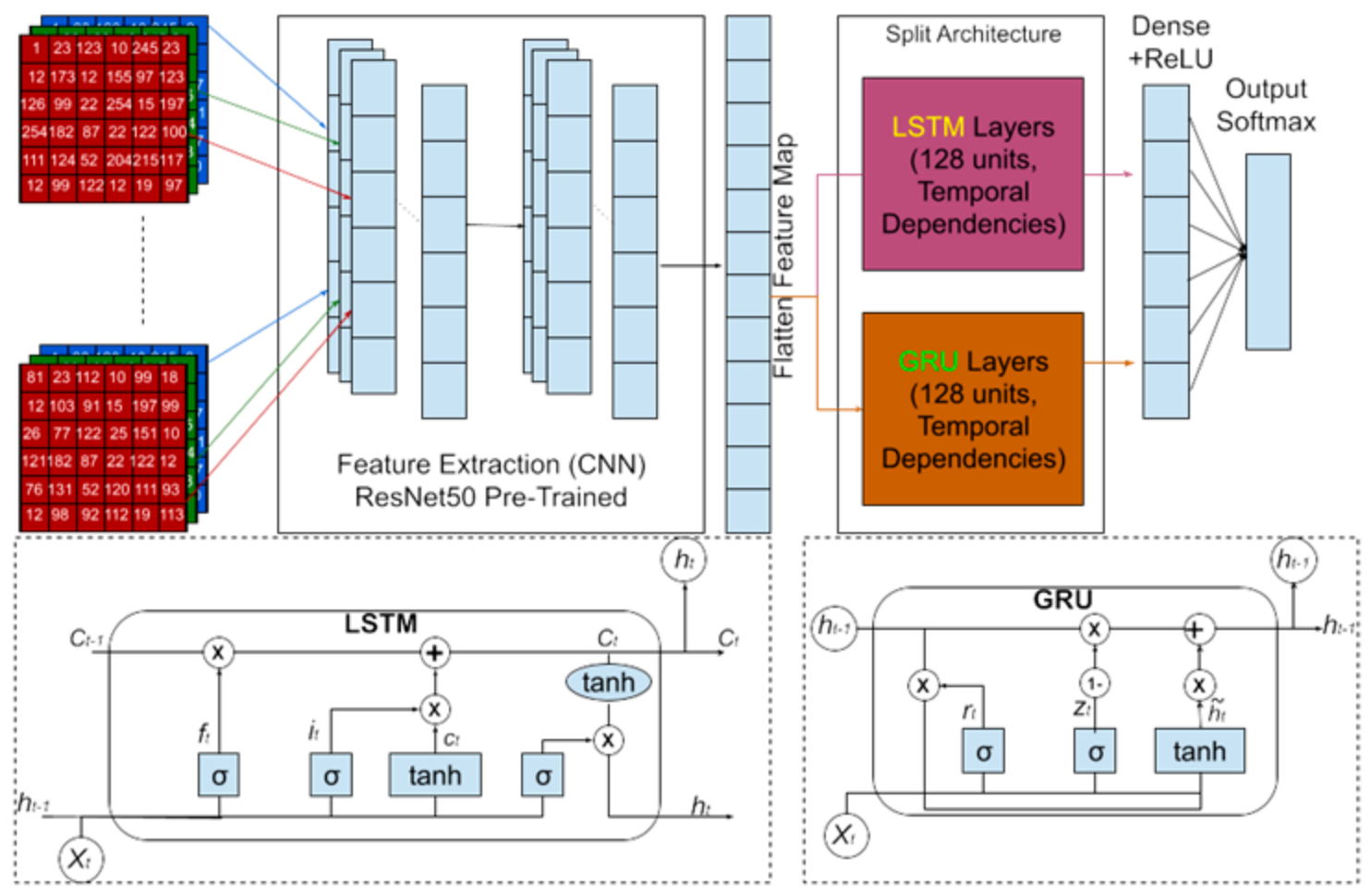

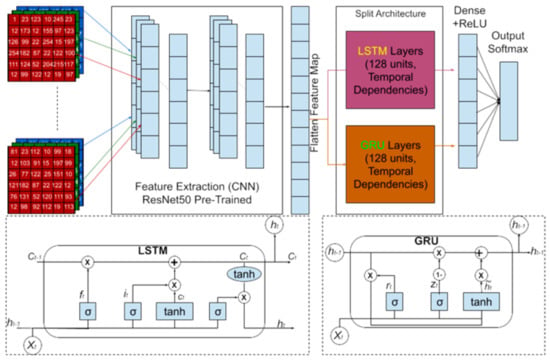

LSTM and GRU models (as shown in Figure 2) are designed to process the spatial information in the images sequentially [29]. Each image is reshaped so that each row of 256 pixels becomes one timestep, giving a sequence of 256 timesteps. With all three RGB channels retained, a row flattens to 256 × 3 = 768 feature values (256 if a single channel is used), providing the network with enough spatial information to detect relevant patterns and dependencies for egg fertility classification.
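A minimal sketch of the row-as-timestep reshaping follows. It assumes that the three RGB values of every pixel in a row are flattened into one feature vector and that pixel values are scaled to [0, 1]; both choices, along with the single-channel alternative, are illustrative assumptions rather than confirmed details of the pipeline.

```python
import numpy as np

# Placeholder 256 x 256 RGB image standing in for a resized egg image.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)

# Each row becomes one timestep; its 256 pixels x 3 channels become 768 features.
sequence = image.reshape(256, 256 * 3).astype(np.float32) / 255.0

# Alternative (assumption): average the channels first, giving 256 features per row.
gray_sequence = image.mean(axis=-1).astype(np.float32) / 255.0
```

Under the first layout a recurrent layer sees an input of shape (timesteps, features) = (256, 768); under the second, (256, 256).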

Figure 2.

Architecture of the LSTM and GRU models within the CNN framework, detailing the configuration of each layer.

Figure 2 details the configuration of each layer in both the LSTM and GRU models. The models utilize feature extraction via CNN (Convolutional Neural Networks) to pre-process the input image, followed by LSTM and GRU layers for sequential processing. The key components include:

LSTM model [30]: The LSTM layer, with 128 units [31,32], captures long-range temporal dependencies in the sequential data, helping to recognize patterns in embryonic development. The model concludes with a single output layer of binary classification (fertile vs. infertile eggs).

GRU model: The GRU layer, also with 128 units [33,34], simplifies the architecture by combining the forget and input gates into a single update gate, maintaining efficiency while still learning complex temporal dependencies. Like the LSTM, it uses an output layer for binary classification.

Both models aim to capture temporal dependencies in sequential data, with the LSTM excelling in handling long-range dependencies and the GRU offering a more computationally efficient alternative.
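The efficiency difference between the two layers can be illustrated by counting trainable parameters in the textbook formulations. The input width of 768 is an assumption (raw RGB rows fed directly to the recurrent layer); the actual width depends on the CNN front end shown in Figure 2, and some framework implementations add extra bias terms.

```python
def recurrent_params(input_dim, units, n_blocks):
    """Parameters of a gated recurrent layer in the textbook formulation.

    Each block has an input matrix (units x input_dim), a recurrent matrix
    (units x units), and a bias vector (units).
    """
    return n_blocks * (units * (input_dim + units) + units)

input_dim, units = 768, 128                             # input_dim is an assumption
lstm_params = recurrent_params(input_dim, units, 4)     # 3 gates + candidate
gru_params = recurrent_params(input_dim, units, 3)      # 2 gates + candidate
print(lstm_params, gru_params, gru_params / lstm_params)
```

Under these assumptions the GRU layer has three quarters of the LSTM layer's parameters, whatever the input width.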

3.3. Training Procedures and Evaluation Metrics

Both models are trained with the Adam optimizer, known for its adaptive learning rate, which helps manage sparse gradients effectively [35,36]. Adam adjusts the learning rate of each parameter using first- and second-moment estimates of the gradients. The update rules for each parameter θ in the model are given by Equations (1)–(4).

$$m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \tag{1}$$

$$v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \tag{2}$$

$$\hat{m}_t = \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \tag{3}$$

$$\theta_t = \theta_{t-1} - \eta\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon} \tag{4}$$

where $g_t$ is the gradient of the loss function with respect to θ at time step t, $m_t$ and $v_t$ are the first- and second-moment estimates with exponential decay rates $\beta_1$ and $\beta_2$, and $\hat{m}_t$ and $\hat{v}_t$ are the bias-corrected versions of these estimates. η is the learning rate, and ϵ is a small constant that prevents division by zero. This update rule scales each parameter's step by the recent magnitude of its gradient, which supports efficient parameter adjustments during training.
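The Adam update of Equations (1)–(4) can be sketched in a few lines for a single scalar parameter. The decay rates `beta1 = 0.9` and `beta2 = 0.999`, the value of `eps`, and the learning rates are commonly used defaults and are assumptions here, since the text does not report the values used; the quadratic objective is a toy example.

```python
import math

def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter (standard formulation)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad ** 2     # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v

# First step: the update size is close to lr regardless of gradient scale.
first_theta, _, _ = adam_step(0.0, grad=-6.0, m=0.0, v=0.0, t=1, lr=0.05)

# Toy objective f(theta) = (theta - 3)^2 with gradient 2 * (theta - 3).
theta, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    grad = 2.0 * (theta - 3.0)
    theta, m, v = adam_step(theta, grad, m, v, t, lr=0.05)
```

The first-step behaviour shows why the bias correction matters: without it, the zero-initialised moment estimates would make the earliest updates much smaller than intended.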

The binary cross-entropy loss function is used for training, as it is well suited to binary classification tasks [37]. It measures the difference between the true labels $y$ and the predicted probabilities $\hat{y}$ produced by the model. The binary cross-entropy loss is computed as in Equation (5).

$$L = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \right] \tag{5}$$

where $L$ represents the loss, N is the batch size, $y_i$ is the true label for sample i, and $\hat{y}_i$ is the predicted probability for the same sample. The binary cross-entropy loss penalizes confident incorrect predictions most heavily, guiding the model to output probabilities closer to the true labels as training progresses.
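As a small worked example of the binary cross-entropy loss, the sketch below evaluates it on two hand-picked toy batches. The clipping constant `eps` and the example probabilities are illustrative assumptions, not values from this study.

```python
import math

def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy over a batch of labels and probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)   # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)

confident_right = binary_cross_entropy([1, 0], [0.9, 0.1])   # about 0.105
confident_wrong = binary_cross_entropy([1, 0], [0.1, 0.9])   # about 2.303
uninformative = binary_cross_entropy([1, 0], [0.5, 0.5])     # ln 2, about 0.693
```

A model that always outputs 0.5 scores ln 2 ≈ 0.693, so mean losses well above that level indicate predictions that are both wrong and confident.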

The models are trained for 100 epochs with a batch size of 32, giving them ample opportunity to learn from the dataset. The training process includes a 20% validation split, which is used to evaluate the model periodically on held-out data, helping to detect overfitting and to check that the models generalize. Model performance is assessed using accuracy (Equation (6)), which measures classification effectiveness, and loss (binary cross-entropy), which indicates the models’ predictive confidence and how well the probability outputs align with the correct labels during training and validation.

$$\text{Accuracy} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}(\hat{y}_i = y_i) \tag{6}$$

where $\mathbb{1}(\cdot)$ is an indicator function that equals 1 when the predicted label $\hat{y}_i$ matches the true label $y_i$, and 0 otherwise. Accuracy is therefore the fraction of samples that the model classifies correctly.
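The accuracy metric and the bookkeeping implied by the training setup can be sketched as follows. The split sizes follow arithmetically from 240 images, a 20% validation split, and a batch size of 32; the toy labels are hypothetical.

```python
import math

def accuracy(y_true, y_pred):
    """Fraction of predicted labels that match the true labels."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return matches / len(y_true)

n_images, val_fraction, batch_size = 240, 0.20, 32
n_val = round(n_images * val_fraction)               # validation images
n_train = n_images - n_val                           # training images
steps_per_epoch = math.ceil(n_train / batch_size)    # weight updates per epoch

toy_accuracy = accuracy([1, 0, 1, 1], [1, 0, 0, 1])  # 3 of 4 correct
print(n_train, n_val, steps_per_epoch, toy_accuracy)
```

With only 48 validation images, each misclassified image moves the validation accuracy by roughly two percentage points.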

4. Results and Discussion

4.1. Performance of Each Model

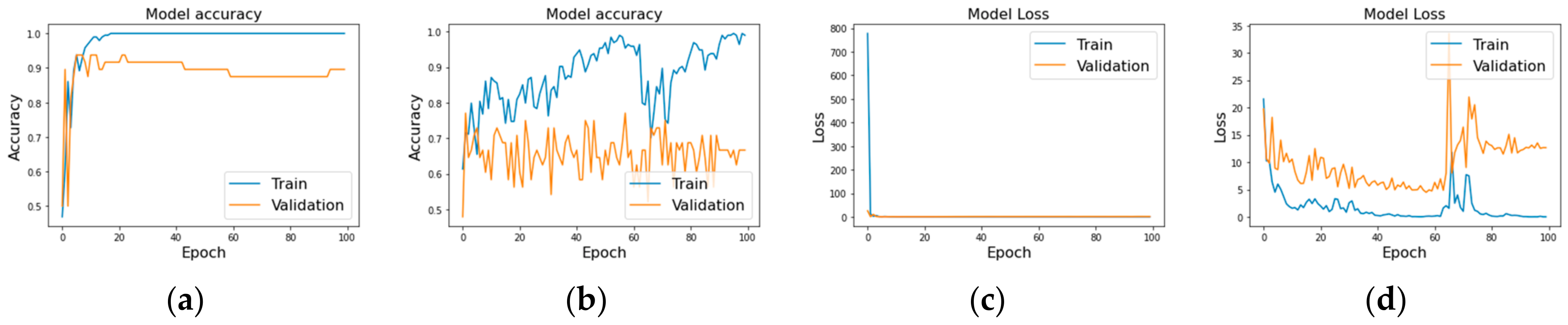

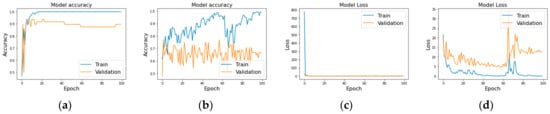

The LSTM model showed promising results, with a peak training accuracy of approximately 95%. However, the validation accuracy plateaued at around 70%, suggesting that the model might be overfitting the training data (Figure 3a,b). The training loss declined significantly and stabilized in later epochs, but the validation loss did not follow this trend, hinting at possible generalization issues.

Figure 3.

Model performance metrics: accuracy and loss for LSTM (a,c) and GRU (b,d) models over 100 epochs, showcasing training dynamics and overfitting tendencies.

Similarly, the GRU model demonstrated high training accuracy, nearing 98%, but its validation accuracy hovered between 65% and 67% (Figure 3c,d). Like the LSTM, the GRU model’s training loss decreased initially but leveled off, while the validation loss remained higher and more volatile. This pattern further supports the occurrence of overfitting, with the model learning the training data well but failing to generalize effectively to unseen data.

4.2. Comparative Results of LSTM and GRU

This section evaluates and compares the performance of the LSTM and GRU models based on their validation results, focusing on accuracy, loss, and a detailed examination of their confusion matrices.

The LSTM model achieved a validation accuracy of 89.58% with a loss of 1.1691. This accuracy indicates that the model generalizes well from the training data to unseen data and has learned features relevant to classifying egg fertility. It exceeds the accuracies reported for earlier methods: SVM [23] at 84.57%, K-means [38] at 82.9%, and R-CNN [21] at 70%. These results underscore the effectiveness of the LSTM in leveraging sequential data for this task.

In contrast, the GRU model recorded a lower accuracy of 66.67% and a much higher loss of 12.6634. The increased loss and reduced accuracy point to problems with the model’s convergence or to overfitting on less representative features of the dataset, either of which could impair its generalization. While the GRU’s simpler architecture provides computational efficiency, it does not capture the necessary temporal dynamics as effectively as the LSTM, resulting in poorer performance than both the LSTM and the previously reported methods.
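The two reported accuracies are consistent with a validation set of 48 images (20% of 240), assuming they were computed on that split, which the text implies but does not state outright. The short check below recovers the implied number of correct predictions under that assumption.

```python
n_val = 48  # assumption: 20% validation split of the 240-image dataset
reported = {"LSTM": 89.58, "GRU": 66.67}  # validation accuracies in percent

implied = {}
for name, pct in reported.items():
    correct = round(pct / 100 * n_val)                 # nearest whole count
    implied[name] = (correct, round(100 * correct / n_val, 2))

print(implied)
```

Under this assumption the gap between the two models corresponds to 11 validation images (43 versus 32 correct).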

The performance nuances of each model can be further revealed through their confusion matrices in Table 1. The LSTM model shows a balanced ability to identify both fertile and infertile eggs, with some room for reducing false positives and false negatives. This indicates that while the model performs well, slight improvements in tuning could further optimize its performance in distinguishing between the two classes. The GRU model shows more true positives (19) for fertile eggs and more false positives (17). This suggests that the GRU model is more aggressive in predicting fertility, leading to a higher misclassification rate, where infertile eggs are incorrectly classified as fertile.

Table 1.

Confusion matrix of LSTM and GRU: shows the number of true and false predictions for fertile and infertile eggs by each model.
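For readers who want to derive per-class metrics from a confusion matrix such as Table 1, the sketch below counts the four outcomes and computes precision and recall for the fertile class. The labels are hypothetical toy data, not the study's predictions, and the function name `confusion_counts` is an assumption.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, TN, FN) treating `positive` as the fertile class."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1      # infertile egg predicted as fertile
        elif t == positive:
            fn += 1      # fertile egg predicted as infertile
        else:
            tn += 1
    return tp, fp, tn, fn

# Hypothetical labels: 1 = fertile, 0 = infertile.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 1, 1, 0, 0, 1]
tp, fp, tn, fn = confusion_counts(y_true, y_pred)
precision = tp / (tp + fp)   # share of predicted-fertile eggs that are fertile
recall = tp / (tp + fn)      # share of fertile eggs that were found
```

A model that over-predicts fertility, as described for the GRU, shows up as a high false-positive count and therefore low precision even when recall is high.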

The superior performance of the LSTM model in both accuracy and loss is attributed to its more elaborate internal gating mechanisms, which manage long-range dependencies efficiently and limit overfitting more effectively than the GRU model. The simpler architectural design of the GRU, while beneficial in terms of computational efficiency, might not have captured the necessary temporal dynamics as effectively as the LSTM, resulting in its poorer performance. This analysis suggests that while the GRU model may suit scenarios requiring rapid processing with acceptable accuracy, the LSTM is preferable for tasks where precision and reliability are paramount.

Furthermore, the LSTM model exceeds the accuracy reported for every other method considered: Decision Tree (85%), Logistic Regression (88.3%), SVM [23] (84.57%), K-means [38] (82.9%), and R-CNN [21] (70%). The margin ranges from about 1.3 percentage points over Logistic Regression to about 19.6 percentage points over R-CNN, supporting its suitability for this specific application.

5. Conclusions

This study compared LSTM and GRU models for classifying chicken egg fertility based on embryonic development in egg images. The LSTM outperforms the GRU in accuracy and generalization, effectively capturing complex temporal dependencies. While the GRU is more computationally efficient, it struggles with overfitting and generalization due to the limited dataset. Achieving 89.58% accuracy, the LSTM model shows strong potential for automating egg fertility classification in poultry farming. Unlike traditional methods such as Decision Tree (85%), Logistic Regression (88.3%), SVM (84.57%), K-means (82.9%), and R-CNN (70%), which rely on manual feature extraction and predefined statistical assumptions, LSTM can automatically learn sequential dependencies within images. This makes it more adaptable to different imaging conditions and more suitable for precision agricultural applications where accuracy and scalability are essential. Future work should focus on improving LSTM’s generalization through data augmentation, diverse datasets, and hybrid models. Expanding these models to other agricultural domains could further enhance deep learning’s role in agricultural efficiency.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available on request from the author.

Acknowledgments

We thank AGH University of Krakow and UPN Veteran Yogyakarta for their essential support in this research.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Saifullah, S.; Dreżewski, R.; Yudhana, A.; Pranolo, A.; Kaswijanti, W.; Suryotomo, A.P.; Putra, S.A.; Khaliduzzaman, A.; Prabuwono, A.S.; Japkowicz, N. Nondestructive chicken egg fertility detection using CNN-transfer learning algorithms. J. Ilm. Tek. Elektro Komput. Dan Inform. 2023, 9, 854–871.

- Saifullah, S.; Khaliduzzaman, A. Imaging Technology in Egg and Poultry Research. In Informatics in Poultry Production; Springer: Singapore, 2022; pp. 127–142.

- Saifullah, S.; Dreżewski, R. Non-Destructive Egg Fertility Detection in Incubation Using SVM Classifier Based on GLCM Parameters. Procedia Comput. Sci. 2022, 207, 3254–3263.

- Tolentino, L.K.S.; Justine, G.; Enrico, E.; Listanco, R.L.M.; Anthony, M.; Ramirez, M.; Renon, T.L.U.; Rikko, B.; Samson, M. Development of Fertile Egg Detection and Incubation System Using Image Processing and Automatic Candling. In Proceedings of the TENCON 2018—2018 IEEE Region 10 Conference, Jeju, Republic of Korea, 28–31 October 2018; pp. 701–706.

- Adegbenjo, A.O.; Liu, L.; Ngadi, M.O. An Adaptive Partial Least-Squares Regression Approach for Classifying Chicken Egg Fertility by Hyperspectral Imaging. Sensors 2024, 24, 1485.

- Nakaguchi, V.M.; Abeyrathna, R.M.R.D.; Ahamed, T. Development of a new grading system for quail eggs using a deep learning-based machine vision system. Comput. Electron. Agric. 2024, 226, 109433.

- Vandana; Yogi, K.K.; Yadav, S.P. Chicken Diseases Detection and Classification Based on Fecal Images Using EfficientNetB7 Model. Evergreen 2024, 11, 314–330.

- Dioses, J.L.; Medina, R.P.; Fajardo, A.C.; Hernandez, A.A.; Dioses, I.A.M. Performance of Egg Sexing Classification Models in Philippine Native Duck. In Proceedings of the 2021 IEEE 12th Control and System Graduate Research Colloquium (ICSGRC), Shah Alam, Malaysia, 7 August 2021; pp. 248–253.

- Yang, C.; Yue, P.; Gong, J.; Li, J.; Yan, K. Detecting road network errors from trajectory data with partial map matching and bidirectional recurrent neural network model. Int. J. Geogr. Inf. Sci. 2024, 38, 478–502.

- Dhruv, P.; Naskar, S. Image Classification Using Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN): A Review. Adv. Intell. Syst. Comput. 2020, 1101, 367–381.

- Gaafar, A.S.; Dahr, J.M.; Hamoud, A.K. Comparative Analysis of Performance of Deep Learning Classification Approach based on LSTM-RNN for Textual and Image Datasets. Informatica 2022, 46, 21–28.

- Geng, L.; Wang, H.; Xiao, Z.; Zhang, F.; Wu, J.; Liu, Y. Fully Convolutional Network With Gated Recurrent Unit for Hatching Egg Activity Classification. IEEE Access 2019, 7, 92378–92387.

- Geng, L.; Peng, Z.; Xiao, Z.; Xi, J. End-to-End Multimodal 16-Day Hatching Eggs Classification. Symmetry 2019, 11, 759.

- Das, S.; Tariq, A.; Santos, T.; Kantareddy, S.S.; Banerjee, I. Recurrent Neural Networks (RNNs): Architectures, Training Tricks, and Introduction to Influential Research. Neuromethods 2023, 197, 117–138.

- Seif, A.; Loos, S.A.M.; Tucci, G.; Roldán, É.; Goldt, S. The impact of memory on learning sequence-to-sequence tasks. Mach. Learn. Sci. Technol. 2024, 5, 015053.

- Al-Selwi, S.M.; Hassan, M.F.; Abdulkadir, S.J.; Muneer, A.; Sumiea, E.H.; Alqushaibi, A.; Ragab, M.G. RNN-LSTM: From applications to modeling techniques and beyond—Systematic review. J. King Saud Univ. Comput. Inf. Sci. 2024, 36, 102068.

- DiPietro, R.; Hager, G.D. Deep learning: RNNs and LSTM. In Handbook of Medical Image Computing and Computer Assisted Intervention; Elsevier: Amsterdam, The Netherlands, 2020; pp. 503–519.

- Moustafa, A.N.; Gomaa, W. Gate and common pathway detection in crowd scenes and anomaly detection using motion units and LSTM predictive models. Multimed. Tools Appl. 2020, 79, 20689–20728.

- Dua, N.; Singh, S.N.; Semwal, V.B.; Challa, S.K. Inception inspired CNN-GRU hybrid network for human activity recognition. Multimed. Tools Appl. 2023, 82, 5369–5403.

- Mateus, B.C.; Mendes, M.; Farinha, J.T.; Assis, R.; Cardoso, A.M. Comparing LSTM and GRU Models to Predict the Condition of a Pulp Paper Press. Energies 2021, 14, 6958.

- Çevik, K.K.; Koçer, H.E.; Boğa, M. Deep Learning Based Egg Fertility Detection. Vet. Sci. 2022, 9, 574.

- Saifullah, S.; Permadi, V.A. Comparison of Egg Fertility Identification based on GLCM Feature Extraction using Backpropagation and K-means Clustering Algorithms. In Proceedings of the 2019 5th International Conference on Science in Information Technology (ICSITech), Yogyakarta, Indonesia, 23–24 October 2019; pp. 140–145.

- Saifullah, S.; Suryotomo, A.P. Identification of chicken egg fertility using SVM classifier based on first-order statistical feature extraction. Ilk. J. Ilm. 2021, 13, 285–293.

- Teixeira, I.; Morais, R.; Sousa, J.J.; Cunha, A. Deep Learning Models for the Classification of Crops in Aerial Imagery: A Review. Agriculture 2023, 13, 965.

- Moutaouakil, K.E.; Falih, N. A New Approach to Animal Behavior Classification using Recurrent Neural Networks. In Proceedings of the 2024 4th International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET), Fez, Morocco, 16–17 May 2024; pp. 1–5.

- Rohan, A.; Rafaq, M.S.; Hasan, M.J.; Asghar, F.; Bashir, A.K.; Dottorini, T. Application of deep learning for livestock behaviour recognition: A systematic literature review. Comput. Electron. Agric. 2024, 224, 109115.

- Mao, A.; Huang, E.; Wang, X.; Liu, K. Deep learning-based animal activity recognition with wearable sensors: Overview, challenges, and future directions. Comput. Electron. Agric. 2023, 211, 108043.

- Shrinivasan, L.; Verma, R.; Ramaswamy, M.; Goudar, V.B.T.R. Prediction of Quality of Eggs in Poultry Farming. In Proceedings of the 2024 5th International Conference on Circuits, Control, Communication and Computing (I4C), Bangalore, India, 4–5 October 2024; pp. 202–206.

- Pan, E.; Mei, X.; Wang, Q.; Ma, Y.; Ma, J. Spectral-spatial classification for hyperspectral image based on a single GRU. Neurocomputing 2020, 387, 150–160.

- Van Houdt, G.; Mosquera, C.; Nápoles, G. A review on the long short-term memory model. Artif. Intell. Rev. 2020, 53, 5929–5955.

- Zhou, F.; Hang, R.; Liu, Q.; Yuan, X. Hyperspectral image classification using spectral-spatial LSTMs. Neurocomputing 2019, 328, 39–47.

- Salehin, I.; Islam, M.S.; Amin, N.; Baten, M.A.; Noman, S.M.; Saifuzzaman, M.; Yazmyradov, S. Real-Time Medical Image Classification with ML Framework and Dedicated CNN–LSTM Architecture. J. Sens. 2023, 2023, 3717035.

- Suvarnam, B.; Ch, V.S. Combination of CNN-GRU Model to Recognize Characters of a License Plate number without Segmentation. In Proceedings of the 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India, 15–16 March 2019; pp. 317–322.

- Kumar, S. Deep learning based affective computing. J. Enterp. Inf. Manag. 2021, 34, 1551–1575.

- Liu, D.; Wang, B.; Peng, L.; Wang, H.; Wang, Y.; Pan, Y. HSDNet: A poultry farming model based on few-shot semantic segmentation addressing non-smooth and unbalanced convergence. PeerJ Comput. Sci. 2024, 10, e2080.

- Wang, D.; Wang, Q.; Chen, Z.; Guo, J.; Li, S. CVAE-DF: A hybrid deep learning framework for fertilization status detection of pre-incubation duck eggs based on VIS/NIR spectroscopy. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2024, 320, 124569.

- Iswanto, B.H.; Putri, D.S.D. Automated Classification of Egg Freshness Using Acoustic Signals and Convolutional Neural Networks. J. Phys. Conf. Ser. 2024, 2866, 012052.

- Liu, L.; Ngadi, M.O. Detecting Fertility and Early Embryo Development of Chicken Eggs Using Near-Infrared Hyperspectral Imaging. Food Bioprocess Technol. 2013, 6, 2503–2513.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).