Abstract

With rapid technological advancement, health can now be tracked and monitored in many ways. Tracking and monitoring health makes precise interventions possible, helping people adopt healthier lifestyles and minimising the health issues associated with long screen time. Artificial Intelligence (AI) techniques such as Large Language Models (LLMs) enable intelligent assistants to run on mobile devices and other platforms. The proposed system uses IoT and LLMs to create a virtual personal assistant for long-time screen users. Sensors monitor seating posture, heartbeat, stress levels, and eye movements in real time, so that the system can track the users’ vitals continuously, give advice when needed, and check that the vitals stay within safe limits. The system combines AI and Natural Language Processing (NLP) to build a virtual assistant embedded into the screens of mobile devices, laptops, desktops, and other screen devices that employees across various workspaces use. The intelligent screen, integrated with multiple sensors, tracks the users’ vitals and other health parameters and alerts them to take breaks, drink water, and refresh, helping them stay healthy while they work. The system also suggests exercises for the eyes, head, and other body parts. User recognition identifies the current user so that advice can be tailored accordingly. The system adapts to each user to support relaxation and focus, providing a flexible and personalised experience.
The intelligent screen system monitors and improves the health of employees who work at screens for long periods, thereby enhancing their productivity and concentration.

1. Introduction

The Internet of Things (IoT) is transforming people’s lives in today’s technology-driven world, where people spend most of their time in front of screens. Spending long hours in front of screens has adverse health effects [1]. Technological advancements have facilitated the interconnection of various devices and sensors, enhancing personal care and enabling the design of effective health management systems that support innovative treatment plans and improve quality of life. People spend much of their day in their workspace, so helping them monitor and focus on their health there is essential for a stronger workforce [2,3]. As technology has advanced, so have its benefits and the ways in which it can support people’s daily lives. With users’ health as the primary goal, smart screens are needed that monitor people at their screens and advise them to take breaks and other precautions to care for their health.

As people’s lifestyles have come to depend heavily on screens, their health has been affected; eye strain, back pain, and even cardiovascular problems are now common. People whose work involves long screen time need regular relaxing movements. Many health risks are associated with long-time screen use [4,5]. The health of these people depends on various factors, including parameters that can be measured and monitored [6]. This research explores the feasibility and stability of a system that monitors users’ health and recommends appropriate remedial activities for their wellbeing.

The evolution of AI, particularly Large Language Models (LLMs), has opened up exciting possibilities for addressing various challenges and improving Human–Computer Interaction (HCI). With their ability to understand and generate human-like language, LLMs can power intelligent virtual assistants that support users in their daily lives [7,8]. Combined with Natural Language Processing (NLP), LLMs aid the creation of conversational virtual assistants that generate personally tailored advice and guidance [9]. Such advice can include reminders to take breaks, stretch, or drink water, or suggestions to reduce eye strain and improve posture, making these assistants interactive systems for proactive health management.

2. Methods

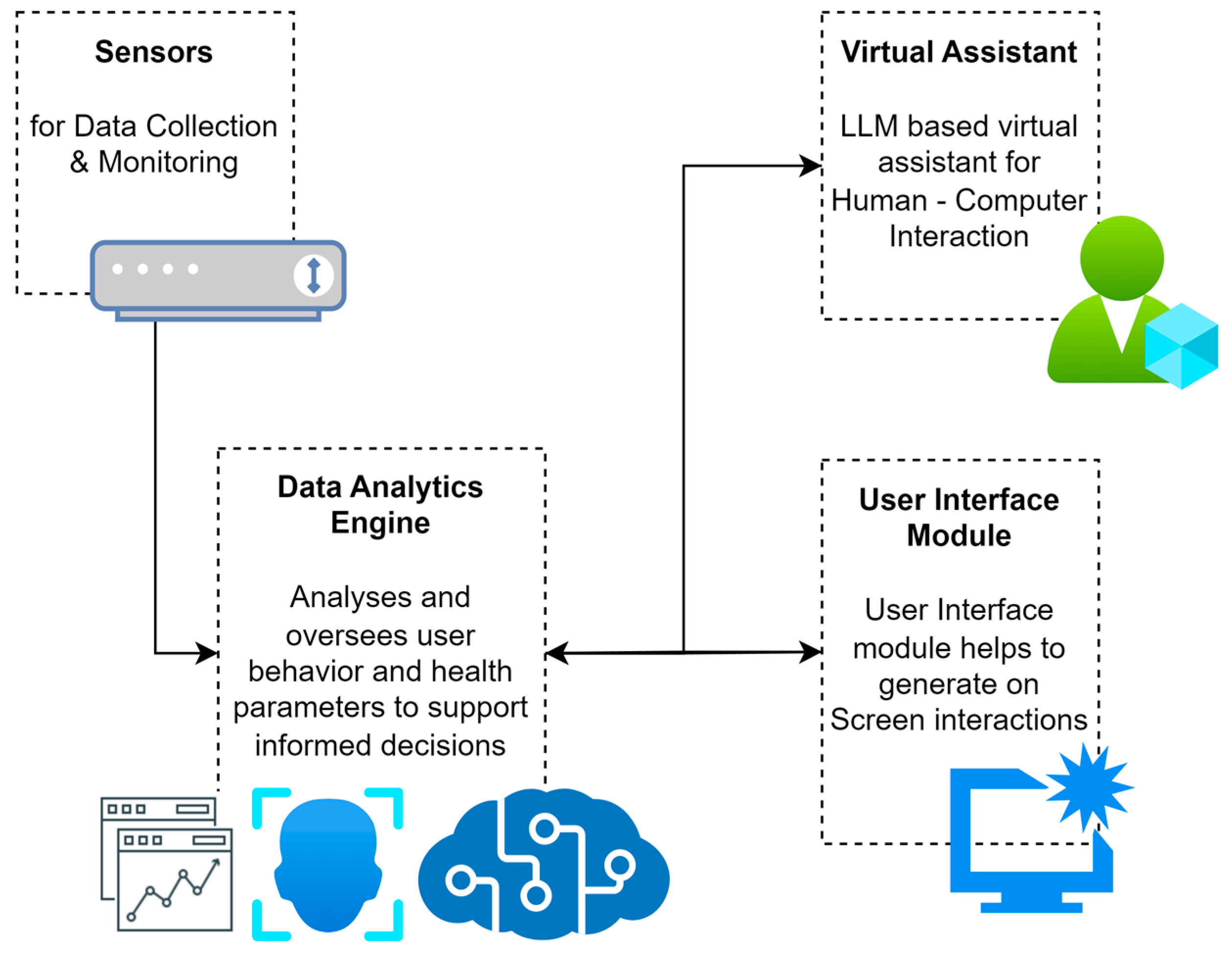

The intelligent screen system is designed to capture user behaviour from various sensors in the workspace and workstation. The screen can also be integrated with wearable devices, such as smart watches, which capture the users’ data and pass them to the data analytics engine. The data analytics engine processes, stores, and analyses the data from the sensors and oversees user behaviour. The user’s health parameters and emotional changes are also captured by the sensory devices, which are interconnected over a private network set up as part of the smart screen system. Figure 1 shows the overall architecture of the smart screen system, which is designed to monitor the health of long-time screen users and to give them recommended actions through regular interventions, using a voice-based interactive assistant on their workspace screen.

Figure 1. The architecture of the smart screen system for long-time screen users.

The data analytics engine tracks and identifies the person using the workspace, identifies their preferences, and presets the virtual assistant and user interface modules with appropriate settings tailored to each user. The virtual assistant is designed and customised with NLP and uses the power of LLMs to converse with the users. The users receive tailored messages that are fine-tuned for their wellbeing. Self-supervised learning algorithms employed in the analytics engine track user behaviour in real-time with the support of various sensors to identify the effects of suggestions given, in order to streamline user expectations [10].

2.1. Sensor Data Collection and Processing

Various sensors track and extract the required data from the user and their behaviour and send it for processing. The sensor and sensor control unit perform the following major tasks:

- Interfacing with the sensors (heart rate monitor, posture sensor, eye tracker, etc.);

- Acquiring and aggregating data in real time;

- Data filtering and noise reduction;

- User behaviour tracking and detection.

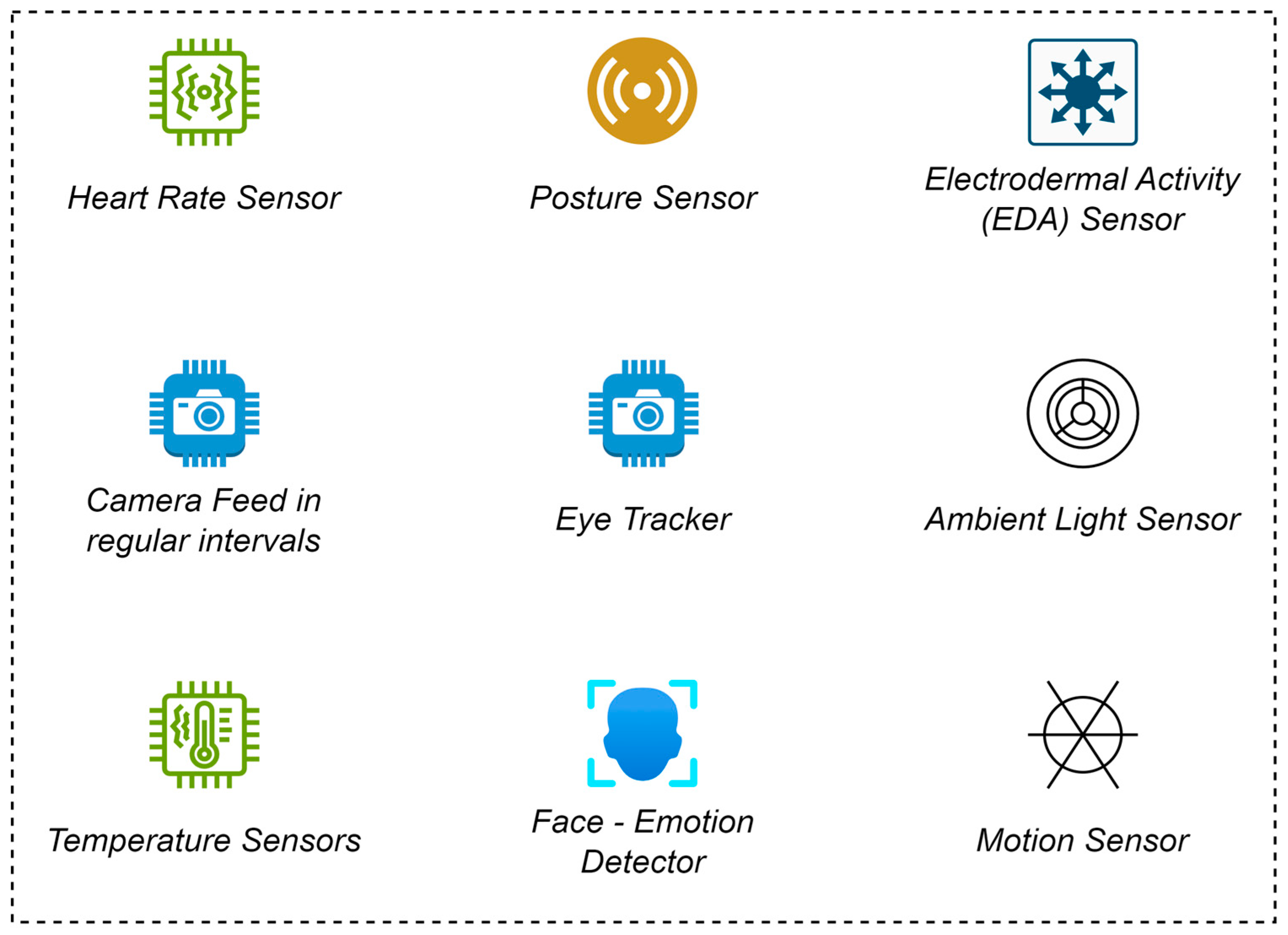

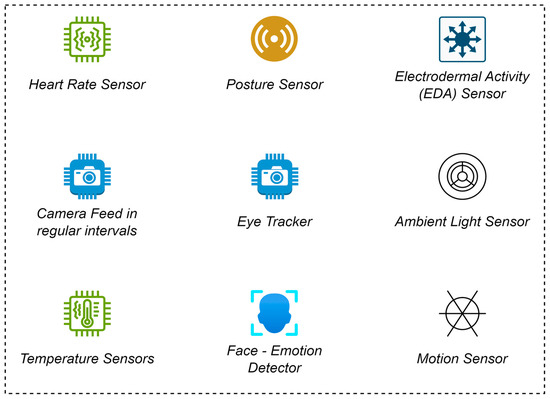

The rich set of sensors and their integration is required to ensure that the system works effectively and gives tailored messages and recommendations to the users. Figure 2 represents the collective list of sensors in the system architecture, which supports gathering various parameters related to the user’s work environment. The sensor data collection unit collects and aggregates the data for the system and passes them to the analytics layer.

Figure 2. Sensors used in the smart screen system.
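The aggregation step performed by the sensor data collection unit can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `Reading` record, its field names, and the `aggregate` function are hypothetical, and the 10 s window is an assumption chosen to match the storage interval reported in the results section.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Reading:
    """One raw sample from a sensor (all field names are illustrative)."""
    sensor: str       # e.g. "heart_rate", "ambient_light"
    timestamp: float  # seconds since the session started
    value: float


def aggregate(readings, window_s=10.0):
    """Average each sensor's readings inside fixed, non-overlapping windows.

    Returns {window_index: {sensor_name: mean_value}}.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for r in readings:
        buckets[int(r.timestamp // window_s)][r.sensor].append(r.value)
    return {
        window: {sensor: mean(values) for sensor, values in per_sensor.items()}
        for window, per_sensor in sorted(buckets.items())
    }


# Made-up readings, used only to show the shape of the output.
readings = [
    Reading("heart_rate", 1.0, 72.0),
    Reading("heart_rate", 6.0, 76.0),
    Reading("ambient_light", 3.0, 310.0),
    Reading("heart_rate", 12.0, 80.0),
]
summary = aggregate(readings)
print(summary)
```

Averaging per window is only one possible reduction; a median or a last-value policy would fit the same structure.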

The smart screen system integrates advanced sensors specifically selected for monitoring the different health parameters of long-time screen users. Various sensors that will be part of the system include the following:

- Heart Rate Sensor (MAX30100, Shenzhen Electronics, Shenzhen, China)—Tracks heart rate and its variability, which helps to assess stress levels and cardiovascular health. The sensor also measures blood oxygen levels, which the system uses as an indicator of air freshness in the workspace.

- Posture Sensor—The posture sensor is a combination of multiple pressure sensors (FlexiForce A401, Sri Electronics & Embedded Solutions, Coimbatore, Tamil Nadu, India) and an Inertial Measurement Unit (IMU) (MPU-6050, RS Components Ltd., Corby, UK) to monitor head and shoulder angles. These sensors track the user’s sitting posture and identify the potential problems and risks associated with ergonomics. Posture sensors will combine pressure sensors and camera-based pose estimation techniques.

- Camera Feed—The live camera feed tracks the user’s emotional status, estimates their seating posture, and tracks their eye movement and the time spent staring at the screen. All these estimations and validations are based on the images and video footage obtained from the camera and processed by CNN models to extract features.

- Eye Tracker (Tobii Eye Tracker 4C, Tobii, Stockholm, Sweden)—Tracks eye movements and detects signs of eye strain. It also monitors the user’s blinking rate, fixation duration, and pupil dilation, etc. This is also conducted with the support of the camera and ML models associated with the process.

- Temperature Sensor (DHT11, Bombay Electronics, Mumbai, India)—Measures workspace temperature, body temperature, and seat temperature to analyse the comfort level of the posture and the workspace balance.

- Face and Emotion Detector—The user’s face and emotions are tracked while they use the application or screen. Face presence, the time the face spends in front of the screen, and the emotional status during that time are derived from the video feed using ML and DL algorithms, with CNN models extracting the relevant features.

- Electrodermal Activity Sensor (Shimmer3 GSR, Shimmer, Dublin, Ireland)—This sensor is used to measure skin conductance as an additional indicator of the stress and emotional status of the user.

- Ambient Light Sensor (TSL2561, Bytesware Electronics, Bangalore, India)—This sensor measures the surrounding light levels so that screen brightness can be adjusted to reduce eye strain. Its readings also help relate changes in eye movements and facial expressions to the light intensity in the workspace.

- Motion Sensor (HC-SR501, Sunrom Electronics, Gujarat, India)—This sensor detects and tracks the user’s movements and identifies inactive periods, so that the system can remind users to take breaks and change position, improving comfort and productivity.

The data from these sensors are processed through a multi-stage pipeline that involves noise reduction, feature extraction, and data fusion. Real-time filtering with Kalman filters and moving average filters removes artefacts caused by motion or sudden environmental changes. Data fusion techniques are applied across the sensors so that the data they generate are reliable and lead to correct interpretations. Together, these sensors and sensor groups are designed to provide insight into the working conditions of employees who work with screens for long periods.
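The two filters named above can be sketched in a few lines of plain Python. This is an illustrative sketch rather than the system's actual filter design: the window length, the noise variances, and the constant-value state model of the Kalman filter are all assumptions, and the heart-rate values are made up.

```python
def moving_average(samples, window=5):
    """Trailing moving average; early outputs average the samples seen so far."""
    out = []
    running = 0.0
    for i, x in enumerate(samples):
        running += x
        if i >= window:
            running -= samples[i - window]  # drop the sample that left the window
        out.append(running / min(i + 1, window))
    return out


def kalman_1d(samples, process_var=1e-3, measurement_var=4.0):
    """Scalar Kalman filter assuming a slowly drifting constant signal.

    process_var and measurement_var are assumed noise variances.
    """
    estimate = samples[0]
    error = 1.0
    out = [estimate]
    for z in samples[1:]:
        error += process_var                   # predict: uncertainty grows
        gain = error / (error + measurement_var)
        estimate += gain * (z - estimate)      # update: move toward the measurement
        error *= 1.0 - gain
        out.append(estimate)
    return out


# Made-up heart-rate trace with one motion artefact (the 110 bpm spike).
heart_rate = [72, 73, 72, 110, 73, 72]
print(moving_average(heart_rate, window=3))
print(kalman_1d(heart_rate))
```

A larger `measurement_var` makes the Kalman filter trust individual readings less, which suppresses spikes more strongly at the cost of a slower response to genuine changes.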

The placement and integration of sensors play a critical role in ensuring accurate data capture and minimising interference from environmental factors. The sensors are embedded in the laptop or other user systems, or in the seating arrangement of the workspace, so that they do not hinder the employee’s regular movement. The placement of some of the sensors is described below:

- Heart Rate Sensor—Mounted on a wristband or another wearable that maintains direct skin contact, and positioned to avoid disruption from wrist movements.

- Posture Sensors—Integrated into ergonomic seating arrangements, with pressure sensors distributed across the seat and backrest to detect weight shifts and slouching.

- Eye Tracker—Attached to the top bezel of the screen to ensure an unobstructed view of the user’s eyes. Positioned at a fixed distance to maintain accuracy across different users.

- Temperature Sensor—Embedded within the screen frame to measure ambient temperature and identify user comfort levels.

- EDA Sensor—Placed on the non-dominant wrist to reduce motion artefacts and interference during typing activities.

Along with these sensors, various other data points are also combined for the analysis, which include:

- Internet usage;

- Time spent on mobiles;

- The tone of communication;

- Login–logout time;

- Typing speed;

- Scrolling speed.

Various factors are measured with the help of single sensors, a combination of sensors, and other AI-based software solutions, as well as by monitoring the usage statistics of interconnected devices. The efficiency and productivity of the people can also be monitored and improved with the support of this personal assistant, because the personal assistant can understand the surroundings and what the users are going through before making informed suggestions to them.

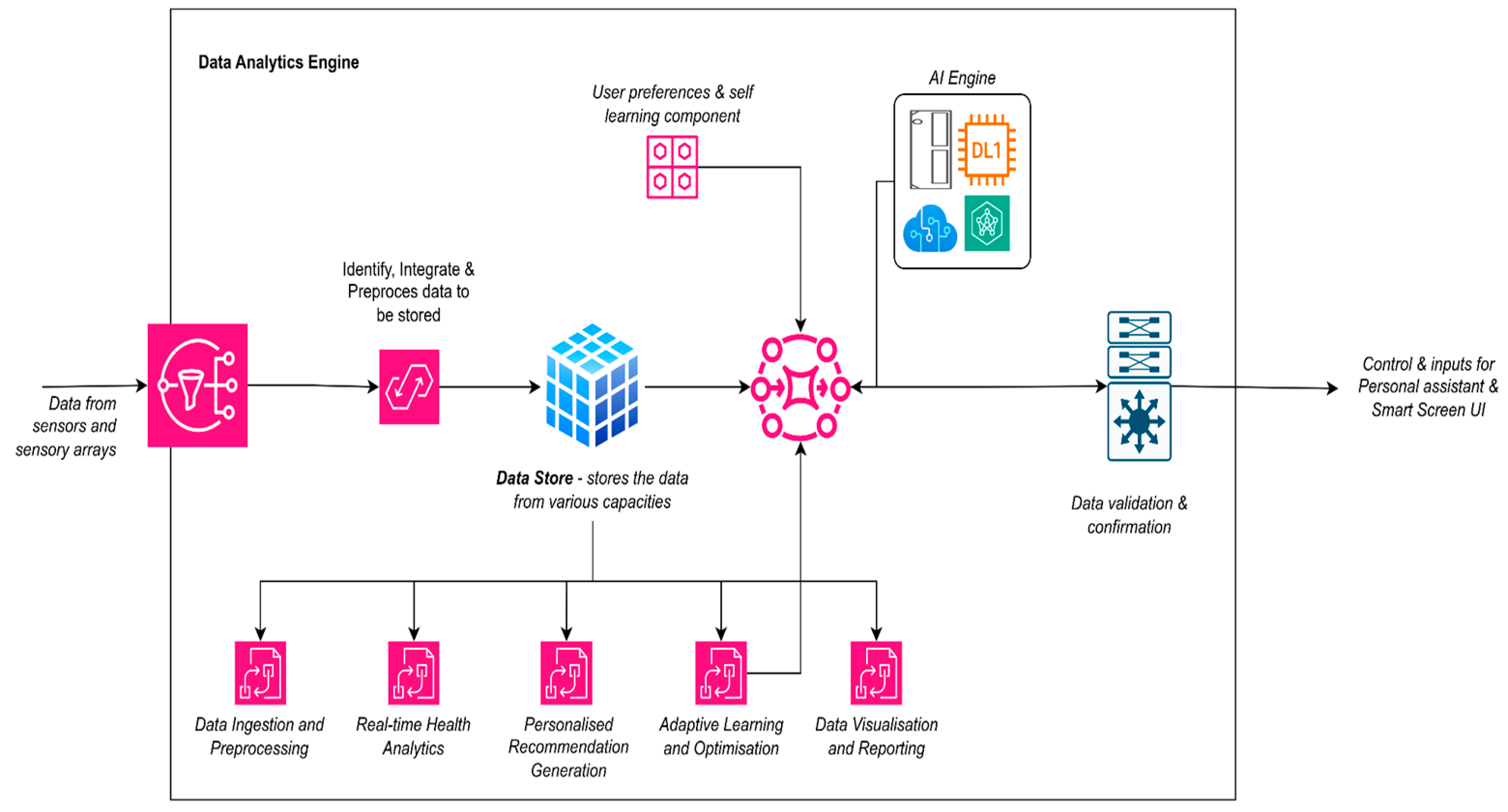

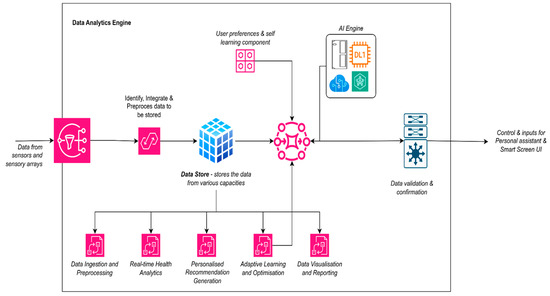

2.2. Data Analytics Engine

The data from the sensors, sensory arrays, and associated devices are sent to the analytics engine, where they are first processed and fine-tuned for effective storage in a local centralised data store. The stored data are then processed and analysed with various Machine Learning (ML) and Deep Learning (DL) algorithms. Deep learning algorithms are used for pattern mining and feature extraction, whereas machine learning algorithms are used to support informed decision-making. The engine is designed so that it can distinguish between users and identify their behaviours. Figure 3 shows the components of the data analytics engine, together with the data flow and communication between them.

Figure 3. Components of the data analytics engine.

The data analytics engine takes the live data from various sensors and sensory arrays and places it in the data store. The significant steps that these data undergo as part of the analysis and decision-making engine are as follows:

- Data ingestion and pre-processing—Analyses, verifies, and streamlines the data from the various sensors and sensory arrays stored in the data store. This step also checks the data against the user’s health database and medical history to identify and record abnormalities. Filtering, cleaning, and related processes are part of this step, so that only valid data are stored in the database.

- Real-time health analytics—Keeps track of health-related parameters from the various sensors, detects anomalies, and reports to users the steps they should take. DL algorithms extract the relevant parameters, and ML algorithms then predict the user’s health status.

- Personalised recommendation generation—Handles personalised health tracking and reports. A rule-based system triggers actions and recommendations, which the other parts of the system deliver to prompt users to care for their health.

- Adaptive learning and optimisation—Manages the user’s feedback on the recommendations made to them and learns how the user responds to each recommendation. Adaptive learning is essential for recommending actions for the identified stages.

- Data visualisation and reporting—Generates reports for the user, based on which the user can fine-tune the system settings to their individual needs.
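The rule-based trigger mentioned under personalised recommendation generation could look roughly like the following Python sketch. The `Snapshot` fields, the rule names, the messages, and every threshold are hypothetical placeholders; they are not the predefined thresholds used in the study and carry no clinical meaning.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Latest aggregated values for one user (field names are illustrative)."""
    heart_rate_bpm: float
    blinks_per_min: float
    minutes_since_break: float
    ambient_lux: float


# Each rule is (name, predicate, message). All thresholds are placeholders.
RULES = [
    ("break", lambda s: s.minutes_since_break >= 60,
     "Time for a short break and some water."),
    ("eyes", lambda s: s.blinks_per_min < 10,
     "Look away from the screen and blink for a few seconds."),
    ("heart", lambda s: s.heart_rate_bpm > 100,
     "Your heart rate is elevated; try a minute of slow breathing."),
    ("light", lambda s: s.ambient_lux < 150,
     "The room is dim; consider turning on a light."),
]


def recommend(snapshot):
    """Return (rule_name, message) for every rule the snapshot triggers."""
    return [(name, message) for name, predicate, message in RULES
            if predicate(snapshot)]


for name, message in recommend(Snapshot(105, 8, 75, 300)):
    print(f"[{name}] {message}")
```

Keeping the rules in a plain list makes it straightforward to load per-user thresholds from settings, which is one way the personalisation described above might be realised.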

The data analytics engine employs supervised and unsupervised ML algorithms for processing data, pattern recognition, and decision-making. The ML algorithms used for implementing the data analytics engine and their role in the engine are described below:

- Random Forest (RF) Classifier—The RF classifier classifies the users’ seating posture from the sensor data, which include head and shoulder angles. RF uses an ensemble of decision trees to capture the non-linear relationships between different ergonomic features, and it was chosen for its ability to handle noisy sensor data.

- Support Vector Machine (SVM)—The SVM detects the user’s stress. The SVM classifies stress based on electrodermal activity and heart rate variability data.

- Convolutional Neural Networks (CNN)—The CNN model is applied to extract data from the live camera feed, in order to track the user’s facial emotions, eye movements, and posture. The CNN model extracts spatial features from video frames and predicts eye strain and emotional status.

- K-Means Clustering—The k-means clustering algorithm groups the users based on behaviour patterns and identifies recommendations based on screen time, typing speed, and activity breaks.

- Long Short-Term Memory (LSTM) Networks—LSTM networks are implemented to track the dependencies in user behaviour, while continuously monitoring health trends over time. LSTM networks could detect abnormalities over time and help recommend breaks and other activities based on user interactions.

Each of these algorithms was selected for its strengths in this application, based on the literature and on experiments carried out during the design and development of the prototype. The models’ performance and accuracy were validated using standard evaluation metrics such as precision, recall, and F1-score, to ensure reliability and effectiveness in diverse work environments. The results and recommendations generated by the ML algorithms in the analytics engine are converted to signals that are passed to the virtual assistant and the user interface modules, which communicate them to the users so that they benefit from the recommendations. The system makes tailored recommendations based on user history, as well as on preferences that users set or that are inferred from their actions.
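For reference, the three evaluation metrics named above can be computed for a binary classifier as in the short Python sketch below. The labels are made up for illustration (1 could stand for "stressed", 0 for "not stressed") and are not taken from the study's data.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1-score for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Made-up labels: three true positives, one false positive, one false negative.
y_true = [1, 1, 1, 0, 0, 1]
y_pred = [1, 0, 1, 1, 0, 1]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

For multi-class tasks such as posture classification, the same function can be applied once per class and the results averaged.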

2.3. Virtual Assistant

A virtual assistant is designed to interact with the users and communicate the suggestions and feedback that the data analytics engine produces. The virtual assistant is powered by NLP, which tries to understand the user’s emotional and personal responses and provides feedback to the users. Its major components and their roles include the following:

- Natural Language Understanding (NLU)—Accurately identifies the user’s intent from their spoken queries and responses [11], including queries about health information, break reminders, and exercise suggestions. It also extracts information from the associated entities and maintains the appropriate context for conversations.

- Natural Language Generation (NLG)—The NLG engine formulates clear, concise, and contextually relevant responses based on the user’s intent and the system recommendations. It generates conversation responses according to the users’ preferences and communication styles [12], enhances and tracks user engagement, and makes changes to the style of interventions.

- Dialogue Management—Responsible for maintaining natural, engaging conversations, tracking the conversation flow, and adapting when interruptions occur [13]. It retains relevant information from previous interactions to provide contextually appropriate responses, so that the user is not offended by the system’s responses.

The virtual assistant integrates and interacts with the data analytics engine to engage with the user and provide appropriate recommendations. This includes extracting relevant information from the data store, including health parameters and relevant previous history. The system will be customised to assist the users with empathetic responses and emotional awareness, to promote a supportive user experience. The virtual assistant uses a combination of NLP models and reinforcement learning to optimise user interactions. The core NLP model is a transformer-based architecture similar to Bidirectional Encoder Representations from Transformers (BERT), for intent detection and context understanding. Reinforcement learning algorithms like Q-learning are applied to personalise responses based on user preferences and engagement patterns, ensuring that the assistant evolves to provide more relevant suggestions. The virtual assistant constantly works with the data analytics engine and the user interface module to provide an immersive experience for the users.
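The Q-learning step used for personalisation can be illustrated with a minimal tabular sketch in Python. The states, the response styles in `ACTIONS`, the reward signal, and the hyperparameters are all hypothetical; the source does not specify how the assistant encodes them.

```python
import random
from collections import defaultdict

# Hypothetical response styles the assistant could choose between.
ACTIONS = ["gentle_reminder", "direct_prompt", "humorous_nudge"]


class QLearner:
    """Tabular Q-learning with epsilon-greedy action selection."""

    def __init__(self, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.alpha = alpha           # learning rate
        self.gamma = gamma           # discount factor
        self.epsilon = epsilon       # exploration probability
        self.rng = random.Random(seed)

    def choose(self, state):
        """Explore with probability epsilon, otherwise pick the best-known action."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        """Q(s,a) += alpha * (reward + gamma * max_a' Q(s',a') - Q(s,a))."""
        best_next = max(self.q[(next_state, a)] for a in ACTIONS)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


# Assumed reward: 1.0 when the user follows the suggestion, 0.0 otherwise.
agent = QLearner(epsilon=0.0)
agent.update("stressed", "gentle_reminder", reward=1.0, next_state="calm")
print(agent.choose("stressed"))
```

In practice the reward would have to come from an observable signal, such as whether the user actually took the suggested break.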

2.4. User Interface Module

The user interface module is another component of the smart screen system, which acts as an interface between the users and the system. The user interface module supports the design and development of UI components that pop up on the screens, communicate with the user by giving recommendations, and allow the user to customise the system settings according to their comfort. The user interface module will have a dashboard as a central hub for displaying real-time health metrics, personalised recommendations, and system notifications. The virtual assistant interface facilitates interaction with the LLM-powered virtual agent [14,15]. The users will have access to the tracked data and be able to see the abnormalities that the system flags. The UI components are designed to include gamification elements and a reward system that motivates the user to adhere to the health recommendations. Great care has been taken to minimise the impact on the storage and performance of the workstation, since an intelligent system adds computation and memory overhead; customisation options let users decide how much local storage and computation they allocate to it.

3. Results and Discussion

The system was tested with 30 participants of diverse ages and ethnicities, with the aim of understanding its effectiveness in their workspaces. The participants were equipped with the full sensor suite, and data were collected during 8 h work sessions and stored at 10 s intervals. Table 1 shows the groups of participants in the study and their gender diversity.

Table 1. Count of participants in various groups.

Each stored data sample contains detected, computed, and processed attributes, including the following: heart rate, eye movements (blink rate and pupil dilation), posture metrics (head angle, shoulder angle, and back angle), emotional state (stress levels and facial expression detection), environmental conditions (ambient temperature and ambient light), and user-specific characteristics (gender, age, job role, distance travelled from home to office, and calories consumed). Internet usage, time spent on mobiles, tone of communication, typing speed, and scrolling speed are also captured regularly. The heart rate sensor and EDA sensor were calibrated at the beginning of each session to ensure accurate baseline measurements. Posture data were captured using pressure sensors embedded in an ergonomic office chair, and eye movement data were tracked using the Tobii Eye Tracker 4C, mounted on a 24-inch monitor. Data pre-processing involved a two-step noise reduction process using a Butterworth filter and a Savitzky–Golay smoothing algorithm. Anomalies were detected using Isolation Forest models, and health recommendations were generated based on predefined threshold values for each health metric.
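To make the second noise-reduction step concrete, the following Python sketch applies Savitzky–Golay smoothing with the standard 5-point quadratic coefficients. The window length and polynomial order are assumptions, since the source does not state them, and the Butterworth stage is not shown. The function name `savgol5` is illustrative.

```python
# Standard 5-point quadratic Savitzky-Golay smoothing coefficients.
SG5_COEFFS = (-3, 12, 17, 12, -3)
SG5_NORM = 35


def savgol5(samples):
    """Smooth with a 5-point quadratic Savitzky-Golay window.

    The first and last two samples are copied through unchanged,
    because a full window is not available at the edges.
    """
    out = list(samples)
    for i in range(2, len(samples) - 2):
        window = samples[i - 2:i + 3]
        out[i] = sum(c * x for c, x in zip(SG5_COEFFS, window)) / SG5_NORM
    return out


# Made-up blink-rate trace with a single outlier at index 3.
blink_rate = [14, 15, 14, 30, 15, 14, 15]
print(savgol5(blink_rate))
```

Unlike a plain moving average, this filter fits a local polynomial, so smooth trends up to quadratic shape pass through unchanged while isolated spikes are attenuated.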

The posture score of the user is calculated via Equation (1):

$$\text{Posture Score} = w_1 \times \text{Head Angle} + w_2 \times \text{Shoulder Angle} + w_3 \times \text{Back Angle} \tag{1}$$

where

- $w_1$, $w_2$, and $w_3$ are weights assigned to each postural parameter based on relative importance;

- Head Angle, Shoulder Angle, and Back Angle are measured in degrees and compared to ideal ergonomic ranges.
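Equation (1) translates directly into code. In the Python sketch below, the default weights and the sample angles are hypothetical, because the source does not report the values used; the comparison against ideal ergonomic ranges is also left out, as its exact form is not specified.

```python
def posture_score(head_deg, shoulder_deg, back_deg, weights=(0.4, 0.3, 0.3)):
    """Weighted sum of the three posture angles, as in Equation (1).

    The default weights are placeholders, not values from the study.
    """
    w1, w2, w3 = weights
    return w1 * head_deg + w2 * shoulder_deg + w3 * back_deg


# Made-up angles in degrees, used only to show the call.
score = posture_score(10, 20, 30, weights=(0.5, 0.25, 0.25))
print(score)
```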

Table 2 (Table 2a,b) shows a snapshot of the dataset captured for the participants across the data points considered in the study. The data points are extracted and computed from the readings of multiple combinations of sensors, followed by the data pre-processing steps, and assembled into a dataset using the above-mentioned parameters. The system computed and stored the data for participants from different age and gender groups. The participants tended to spend extended time on their screens. A detailed analysis of each parameter and its impact is still needed to adjust and prepare the intelligent engine for the designed system.

Table 2. Dataset snapshot. (a) Sample dataset snapshot part 1; (b) sample dataset snapshot part 2.

However, the scope of the current study and its implementation was limited to a few attributes: heart rate, eye blink rate, posture score, and stress levels. Table 3 shows the trend observed in the gathered data, which helped us to infer how the system was used, how the user statistics were tracked, and which interventions were provided to the participants while they used the system. The table shows the levels before and after the recommendations were given to the participants and the percentage improvement in each metric for a subject. The interventions included recommendations such as taking breaks, listening to music, speaking with a colleague, turning lights on or off, drawing curtains, or opening windows. These interventions were based on the preliminary study conducted to implement the project.

Table 3. Impact on health metrics.

The results indicate that users benefitted significantly from an intelligent system that recommended actions to manage stress and enhance their workspace experience. For the parameters studied (heart rate, eye blink rate, posture score, and stress levels), the suggestions given to users helped them improve their overall scores and satisfaction, thereby improving their time spent in the workspace.

A user satisfaction survey was conducted to evaluate and validate the findings and implementation of the proposed system. Table 4 summarises the survey results, which show that an intelligent assistant that helps users care for their health during work time improved their overall experience.

Table 4. User satisfaction survey results.

The results demonstrate the efficacy of the intelligent screen system in promoting healthier habits and mitigating the adverse effects of prolonged screen time. The significant improvements in key health metrics and high user satisfaction ratings validate the system’s potential to enhance user wellbeing. The AI-powered virtual assistant delivered personalised recommendations and fostered user engagement. Its ability to understand natural language queries and provide contextually relevant responses contributed to a positive user experience. Integrating multiple sensors allowed for a comprehensive assessment of user health and wellbeing. Real-time monitoring and analysis enabled timely interventions and proactive recommendations, promoting a more mindful approach to screen time.

4. Conclusions

The proposed smart screen system demonstrates the potential of integrating AI, IoT, and NLP technologies to promote healthier screen time practices for long-term users. The system provides personalised interventions that encourage breaks, posture adjustments, and relaxation techniques by capturing user behaviour and monitoring key health metrics through sensors. The comprehensive data analytics engine, powered by ML and DL algorithms, offers tailored recommendations that improve user wellbeing and productivity. Integrating a virtual assistant enhances user interaction by delivering context-aware and empathetic guidance, thus creating a supportive digital workspace.

The results from our study indicate that the system effectively reduces stress levels, improves posture, and mitigates eye strain, as evidenced by improvements in health metrics and user satisfaction scores. By focusing on real-time monitoring and adaptive feedback, this intelligent system fosters healthier work habits, which can positively impact users’ physical and mental health.

The study also acknowledges the ethical considerations of data privacy by implementing local data storage and user-centric data controls, ensuring that the system respects user autonomy and confidentiality. Future enhancements could explore expanding the system’s capabilities through more advanced emotional detection and adaptive learning algorithms. The proposed smart screen system provides a promising solution to mitigating prolonged screen exposure’s adverse effects and enhancing users’ health and productivity in increasingly digital work environments.

Author Contributions

Conceptualization and methodology, S.V.; software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, J.A.; writing—review and editing, S.V.; visualization, supervision, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study titled ‘IoT-Enabled Intelligent Health Care Screen System for Long-Time Screen Users’ was reviewed by the Institute Ethics Committee, CHRIST (Deemed to be University), Pune, Lavasa Campus. The committee determined that the study qualifies for exemption from full ethics review as it involves only the technical development and validation of an IoT-enabled system, without direct human interaction, collection of personally identifiable information, or any intervention posing risks to participants. Any data collected during the study remains fully anonymized and protected in compliance with institutional ethical guidelines. The exemption was granted with the condition that any changes in the study methodology involving human subjects would require a new ethics review.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to ethical restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Liao, Z.; Luo, Z.; Huang, Q.; Zhang, L.; Wu, F.; Zhang, Q.; Wang, Y. SMART: Screen-based gesture recognition on commodity mobile devices. In Proceedings of the 27th Annual International Conference on Mobile Computing and Networking, New Orleans, LA, USA, 25–29 October 2021; pp. 283–295.

2. Dalton, G.; McDonna, A.; Bowskill, J.; Gower, A.; Smith, M. The design of smartspace: A personal working environment. Pers. Technol. 1998, 2, 37–42.

3. Androutsou, T.; Angelopoulos, S.; Hristoforou, E.; Matsopoulos, G.K.; Koutsouris, D.D. Automated Multimodal Stress Detection in Computer Office Workspace. Electronics 2023, 12, 2528.

4. LeBlanc, A.G.; Gunnell, K.E.; Prince, S.A.; Saunders, T.J.; Barnes, J.D.; Chaput, J.P. The ubiquity of the screen: An overview of the risks and benefits of screen time in our modern world. Transl. J. Am. Coll. Sports Med. 2017, 2, 104–113.

5. Montagni, I.; Guichard, E.; Carpenet, C.; Tzourio, C.; Kurth, T. Screen time exposure and reporting of headaches in young adults: A cross-sectional study. Cephalalgia 2016, 36, 1020–1027.

6. Neophytou, E.; Manwell, L.A.; Eikelboom, R. Effects of excessive screen time on neurodevelopment, learning, memory, mental health, and neurodegeneration: A scoping review. Int. J. Ment. Health Addict. 2021, 19, 724–744.

7. Yi, Z.; Ouyang, J.; Liu, Y.; Liao, T.; Xu, Z.; Shen, Y. A Survey on Recent Advances in LLM-Based Multi-turn Dialogue Systems. arXiv 2024, arXiv:2402.18013.

8. Mahmood, A.; Wang, J.; Yao, B.; Wang, D.; Huang, C.M. LLM-Powered Conversational Voice Assistants: Interaction Patterns, Opportunities, Challenges, and Design Guidelines. arXiv 2023, arXiv:2309.13879.

9. Lv, Z.; Poiesi, F.; Dong, Q.; Lloret, J.; Song, H. Deep learning for intelligent human–computer interaction. Appl. Sci. 2022, 12, 11457.

10. Zheng, Y.; Jin, M.; Liu, Y.; Chi, L.; Phan, K.T.; Chen, Y.P.P. Generative and contrastive self-supervised learning for graph anomaly detection. IEEE Trans. Knowl. Data Eng. 2021, 35, 12220–12233.

11. McShane, M. Natural language understanding (NLU, not NLP) in cognitive systems. AI Mag. 2017, 38, 43–56.

12. Reiter, E.; Dale, R. Building applied natural language generation systems. Nat. Lang. Eng. 1997, 3, 57–87.

13. Çekiç, T.; Manav, Y.; Dündar, E.B.; Kılıç, O.F.; Deniz, O. Natural language processing-based dialog system generation and management platform. In Proceedings of the 2020 5th International Conference on Computer Science and Engineering (UBMK), Diyarbakir, Turkey, 9–11 September 2020; pp. 99–104.

14. Guan, Y.; Wang, D.; Chu, Z.; Wang, S.; Ni, F.; Song, R.; Zhuang, C. Intelligent Agents with LLM-based Process Automation. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 5018–5027.

15. Qiu, H.; Lan, Z. Interactive Agents: Simulating Counselor-Client Psychological Counseling via Role-Playing LLM-to-LLM Interactions. arXiv 2024, arXiv:2408.15787.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).