Abstract

This paper introduces a methodology for precise object orientation determination using principal component analysis (PCA) that remains robust under significant image noise. It demonstrates the potential to mitigate the challenges associated with axis-aligned bounding boxes in smart manufacturing environments. The proposed approach supports improved gripper alignment in robotic grasping tasks and is positioned as a computationally efficient alternative to machine learning (ML) methods that employ oriented bounding boxes. In tests with bolts at orientations from 0 to 180 degrees, the methodology showed a maximum average angle deviation of 3.5 degrees across all noise levels, including severe noise.

1. Introduction

Both academia and industry aspire to enhance production lines with vision systems capable of processing real-time data [1]. Such systems aid the accurate identification of objects, which facilitates robotic grasping operations with dynamic or moving infeed [2]. This, in turn, gives production lines substantial flexibility and fosters an adaptable manufacturing environment [3]. The current literature shows growing interest in employing machine learning (ML) techniques, particularly convolutional neural networks (CNNs), to enhance object recognition for robotic grasping operations [4]. ML-driven object detection algorithms follow one of two distinct methodologies, distinguished by the nature of their bounding boxes (bboxes).

The first methodology, seen in models such as Faster R-CNN, YOLO, and SSD, uses axis-aligned bounding boxes (AABBs) [1]. The edges of an AABB are parallel to the horizontal and vertical axes of the image. AABBs provide a simple and computationally efficient way to outline the objects in a scene, making them a preferred choice for many real-time applications [1]. In contrast, certain models such as R-FCN and rotated region proposal networks (RRPN) employ oriented bounding boxes (OBBs) [5]. An OBB rotates to follow the object’s orientation within the image and therefore fits the object more tightly. This is particularly advantageous in applications where the object’s orientation matters, such as detecting objects to be grasped by robot arms from moving platforms or conveyors [2].
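To make the difference in fit concrete, the following minimal sketch computes how much of an AABB is actually covered by a rotated rectangle. The function name `aabb_fill_ratio` and the 40 × 12 rectangle are illustrative assumptions; a plain rectangle is used as a stand-in and is not a model of a real bolt silhouette.

```python
import math


def aabb_fill_ratio(width: float, height: float, angle_deg: float) -> float:
    """Fraction of the axis-aligned box covered by a rotated width x height rectangle."""
    theta = math.radians(angle_deg)
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    # Extent of the rotated rectangle along the image x and y axes.
    box_w = width * c + height * s
    box_h = width * s + height * c
    return (width * height) / (box_w * box_h)


if __name__ == "__main__":
    # Illustrative 40 x 12 rectangle (hypothetical dimensions) at several angles.
    for angle in (0, 15, 30, 45):
        print(angle, round(aabb_fill_ratio(40.0, 12.0, angle), 3))
```

For an elongated rectangle the ratio falls quickly as the angle moves away from 0 or 90 degrees, so most of the AABB is background. An OBB of the same rectangle would have a ratio of 1 at every angle.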

AABBs, while less computationally demanding than OBBs, may enclose a significant area of background, particularly for rotated or irregularly shaped objects. This background can introduce noise into a model’s predictions and reduce overall accuracy. Conversely, OBBs fit the object more precisely and minimise background noise, potentially enhancing accuracy for certain tasks. However, this improvement comes with increased computational requirements and complexity. For these reasons, Nguyen et al. [4] highlight that YOLO is considered the optimal choice for real-time object detection applications requiring high frames per second and moderate precision. Nevertheless, because YOLO uses AABBs, it is insufficient for autonomous robotic grasping of objects that are asymmetrical along multiple axes, such as bolts. Hence, we present a computationally efficient approach for determining the orientation of such components. The objective of this paper is to introduce a methodology for deriving the orientation of an object after it has been detected by ML techniques that employ AABBs. In doing so, it seeks to mitigate the challenges associated with AABBs and thereby improve the alignment of robotic grippers with the grasped parts. The proposed methodology also aims to provide a more computationally efficient alternative to methods utilising OBBs.

2. Methods

The proposed method loads each test image as grayscale and applies a Gaussian blur (kernel size of 5 × 5) to reduce noise. The image is then segmented using an adaptive binarisation method to separate bolt pixels from the background. This is accomplished using the mean adaptive thresholding technique in OpenCV 4.2.0, cv2.ADAPTIVE_THRESH_MEAN_C [6], in which the threshold for each pixel is the average intensity of its neighbourhood minus a constant C. The neighbourhood size is determined by the blockSize parameter, set at 251, and C is set to 15, a value optimised through systematic trial and error. Applying the computed threshold yields a binary output image in which bolt pixels are marked as 1’s (black) and the remainder as 0’s (white). To ensure a more continuous bolt shape, a morphological ‘closing’ operation with a rectangular kernel of 21 × 21 pixels is performed on the binary image, which fills small gaps within the bolt shape.

The bolt’s pixel locations are identified by examining the non-zero intensity values in the binary image, and their mean is subtracted to yield a set of centralised points with zero mean. Principal component analysis (PCA) [7] is then applied to determine the orientation of the bolt. The first step is to calculate the covariance matrix, Σ, of the centralised points using Equation (1).

$$\Sigma = \frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)\left(x_i - \bar{x}\right)^{T} \qquad (1)$$

where $x_i$ represents the centralised points, $n$ is the number of points, and $\bar{x}$ is the mean of the points. Eigendecomposition is performed on the covariance matrix, resulting in eigenvalues λ and their corresponding eigenvectors v. The eigenvalues are sorted in descending order, and the eigenvectors are ordered to match. The eigenvectors represent the principal components, which are the directions in which the data vary the most. To determine the orientation, the dot product between the second eigenvector (representing the minor axis of variation) and the centralised points is computed. The angle of the principal eigenvector relative to the image’s vertical axis, together with the position of each point relative to the minor axis line, establishes the bolt’s orientation and shape. These calculations support subsequent image analyses that further characterise the bolt.

3. Results and Discussion

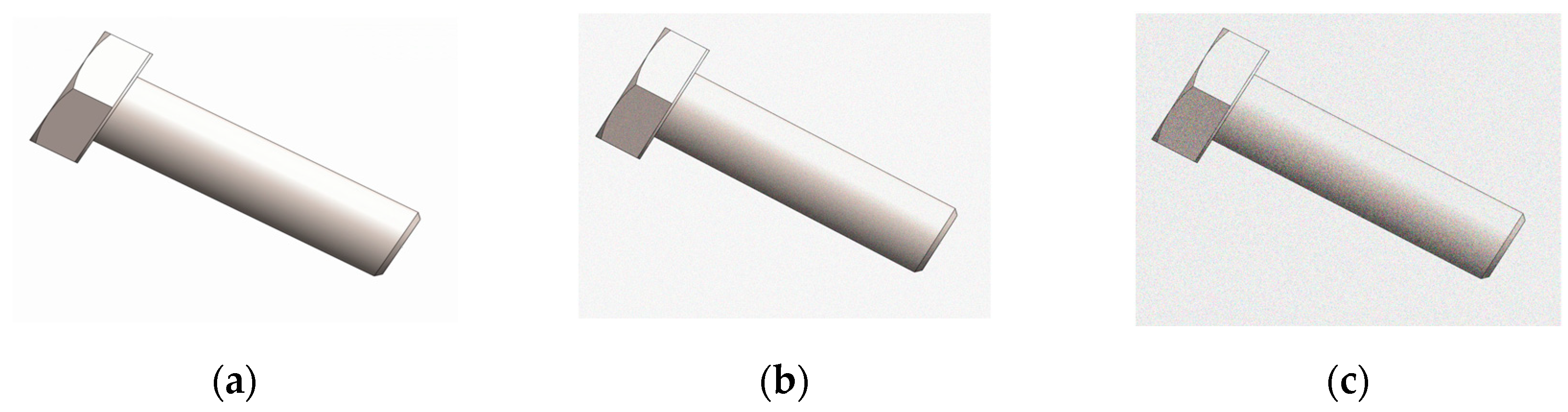

The methodology was evaluated using colour images of M12 × 40 hex bolts. To assess performance across a range of bolt orientations, 19 synthetic images were generated with the bolt’s angle varied at 10-degree intervals from 0 to 180 degrees. To examine the method’s robustness, these images were augmented with Gaussian noise, following the approach outlined by da Costa et al. [8]. The augmentation produced six distinct test sets, each comprising 19 images, by applying different levels of Gaussian noise with standard deviations (σ) ranging from 0 to 50. This experimental setup enabled the methodology’s performance to be analysed across bolt orientations and levels of image noise. An example of the image noise range can be seen in Figure 1.

Figure 1. Gaussian noise levels in test images: (a) σ = 0; (b) σ = 20; (c) σ = 50.
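The noise augmentation can be sketched as below. This is a minimal illustration that assumes zero-mean additive noise applied to 8-bit images and clipped to the valid range; the function name `add_gaussian_noise` and the fixed seed are hypothetical, and the exact procedure of [8] may differ.

```python
import numpy as np


def add_gaussian_noise(image: np.ndarray, sigma: float, seed: int = 0) -> np.ndarray:
    """Add zero-mean Gaussian noise with standard deviation sigma to an 8-bit image."""
    rng = np.random.default_rng(seed)
    noisy = image.astype(np.float64) + rng.normal(0.0, sigma, image.shape)
    # Round and clip so that the result is again a valid 8-bit image.
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    flat = np.full((100, 100), 128, np.uint8)
    # Noise levels shown in Figure 1; the other levels are not listed in the text.
    for sigma in (0, 20, 50):
        print(sigma, round(float(add_gaussian_noise(flat, sigma).std()), 1))
```

With σ = 0 the image is returned unchanged, which corresponds to the noise-free test set.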

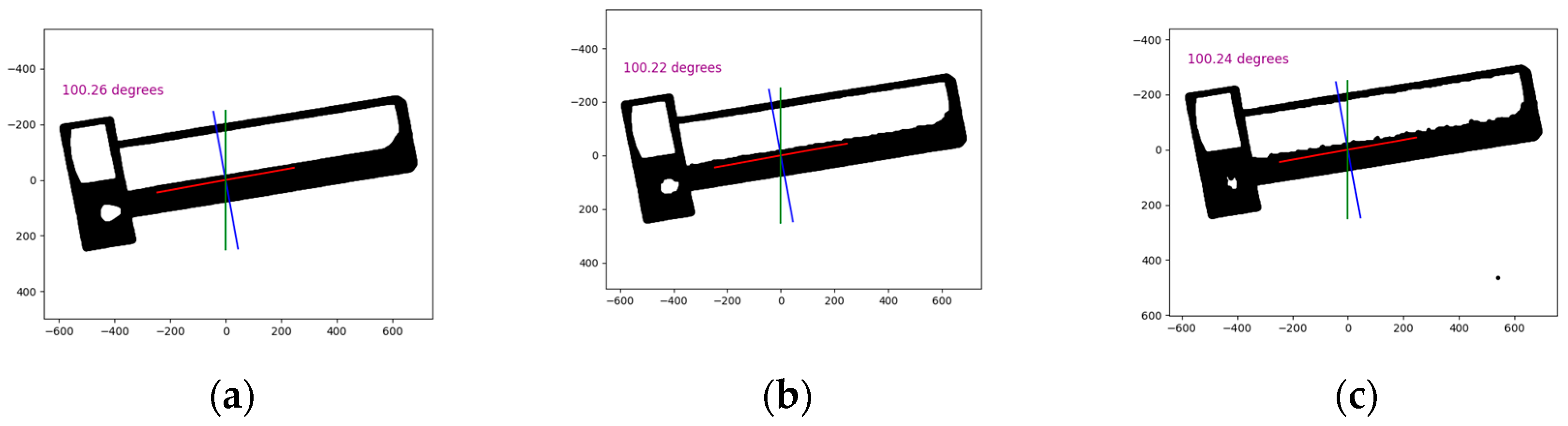

Orientation detection was deemed successful if the observed angle was within 10 degrees of the known angle, a tolerance that reflects the robot gripper’s ability to handle minor misalignments. The proposed method identified the bolt’s angle within this tolerance for all test images; example outputs are shown in Figure 2. Although no measured angle matched the known angle exactly across all rotations and noise levels, a high degree of accuracy was achieved. The largest deviations were observed at angles from 0 to 80 degrees, with the maximum average deviation of 3.5 degrees (across all noise levels) recorded for the bolt at 40 degrees. In contrast, precision improved for bolts oriented past 90 degrees, where the highest average deviation (across all noise levels) was 2.2 degrees, for bolts at 140 and 150 degrees.

Figure 2. Output images (100 degrees), with the horizontal axis in green and the eigenvectors in blue and red: (a) σ = 0; (b) σ = 20; (c) σ = 50.
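The success criterion described above can be expressed as a short check. This sketch assumes that the tolerance is applied as an absolute difference of at most 10 degrees and that axis angles repeat every 180 degrees, so 0 and 180 describe the same orientation. Both function names are hypothetical.

```python
def angular_deviation(measured_deg: float, known_deg: float) -> float:
    """Smallest difference between two axis angles that repeat every 180 degrees."""
    diff = abs(measured_deg - known_deg) % 180.0
    return min(diff, 180.0 - diff)


def is_successful(measured_deg: float, known_deg: float,
                  tolerance_deg: float = 10.0) -> bool:
    """True if the measured angle lies within the tolerance of the known angle."""
    return angular_deviation(measured_deg, known_deg) <= tolerance_deg


if __name__ == "__main__":
    # Example values only; they are not measurements from the paper.
    print(angular_deviation(43.5, 40.0), is_successful(43.5, 40.0))
    print(angular_deviation(178.0, 0.0), is_successful(178.0, 0.0))
```

The wrap-around matters at the ends of the tested range: without it, a measurement of 178 degrees for a bolt at 0 degrees would be counted as a 178-degree error rather than a 2-degree one.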

Testing suggests that lighting conditions cause this change in accuracy once the bolt image is rotated beyond 90 degrees. Rotation past this point reduces the bright pixels caused by light reflecting off the bolt’s surface, so more pixels are converted to black during binarisation. Consequently, a larger number of pixels is available for PCA, improving the accuracy of the bolt orientation determination past 90 degrees.

Author Contributions

S.C. led the conceptualization, providing the initial idea for the research. A.G. was responsible for the methodology, formal analysis, investigation, data curation, original draft preparation, visualization, project administration, and funding acquisition. E.K., as the lead supervisor, along with D.K. and S.C., offered valuable guidance on computer vision techniques and supervised the project. All three also contributed to reviewing and editing the manuscript, enhancing its academic quality. This collaborative effort ensured the research was thorough and adhered to high standards. Each author’s contribution was substantial, reflecting their expertise and commitment to the project’s success. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Department for the Economy (DfE). The APC was funded by the Department for the Economy (DfE).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data supporting the findings of this study are not yet available as the research is ongoing. Data will be made available upon completion of the study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Pham, D.L.; Chang, T.W. A YOLO-based real-time packaging defect detection system. Procedia Comput. Sci. 2023, 217, 886–894.

2. Wong, C.C.; Chien, M.Y.; Chen, R.J.; Aoyama, H.; Wong, K.Y. Moving object prediction and grasping system of robot manipulator. IEEE Access 2022, 10, 20159–20172.

3. Giberti, H.; Abbattista, T.; Carnevale, M.; Giagu, L.; Cristini, F. A methodology for flexible implementation of collaborative robots in smart manufacturing systems. Robotics 2022, 11, 9.

4. Nguyen, K.; Huynh, N.T.; Nguyen, P.C.; Nguyen, K.D.; Vo, N.D.; Nguyen, T.V. Detecting objects from space: An evaluation of deep-learning modern approaches. Electronics 2020, 9, 583.

5. Zand, M.; Etemad, A.; Greenspan, M. Oriented Bounding Boxes for Small and Freely Rotated Objects. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4701715.

6. Image Thresholding. OpenCV. Available online: https://docs.opencv.org/4.x/d7/d4d/tutorial_py_thresholding.html (accessed on 14 July 2023).

7. Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.

8. da Costa, G.B.P.; Contato, W.A.; Nazare, T.S.; Neto, J.E.; Ponti, M. An empirical study on the effects of different types of noise in image classification tasks. arXiv 2016, arXiv:1609.02781.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).