Comparing Different Machine Learning Techniques in Predicting Diabetes on Early Stage

Abstract

1. Introduction

2. Related Work

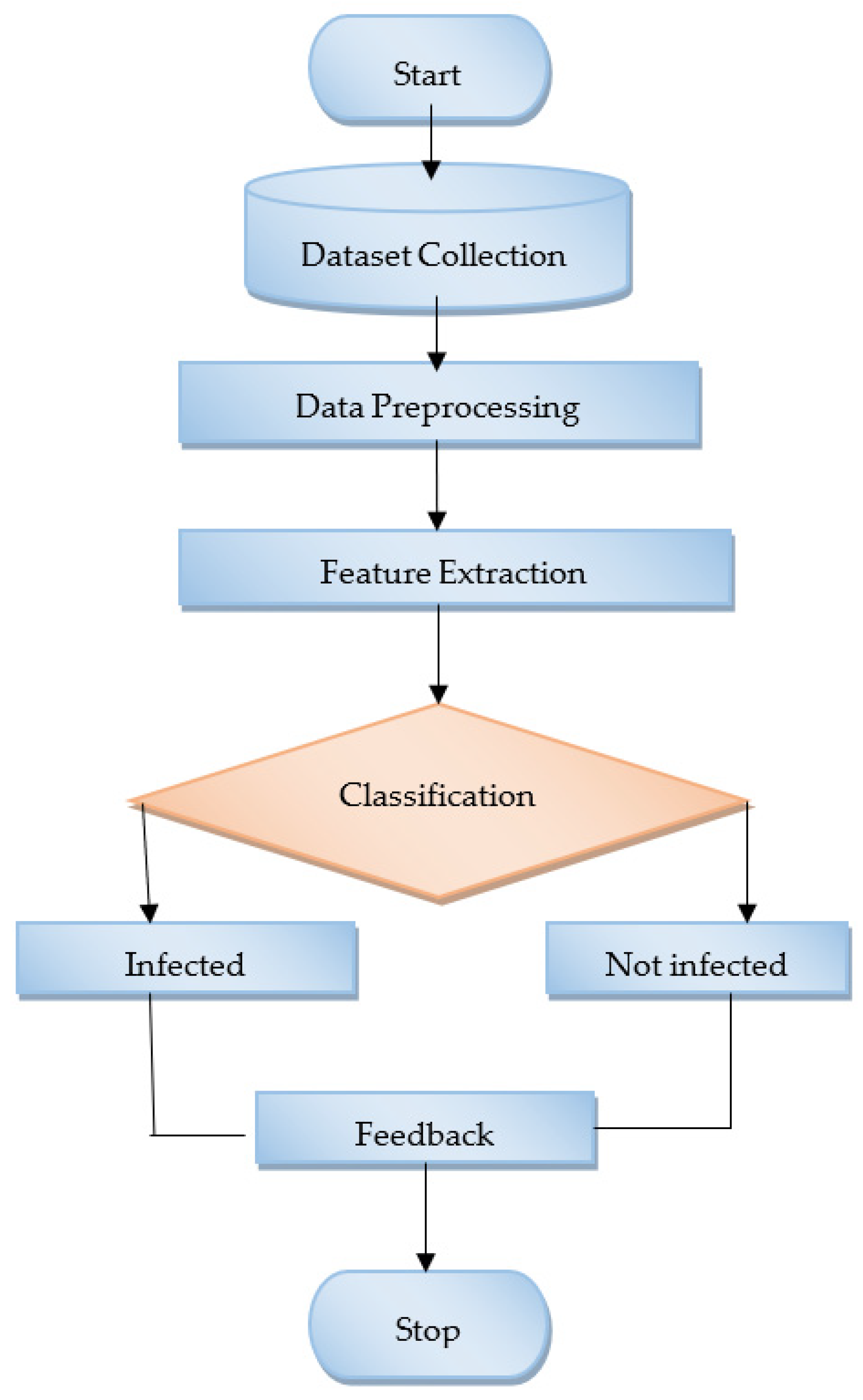

3. Methodology

- A. Data Collection

- B. Data Preprocessing

- Importance Graph Analysis: Feature importance graphs were drawn and analyzed to determine which features play an important role in prediction; they show the importance of each feature graphically. Feature importance is a technique that scores input features according to how useful they are for predicting the target label. Feature importance scores play a significant role in predictive modeling projects. They provide insight into the data and into the model, and they form a basis for feature selection, which can improve the reliability and effectiveness of a predictive model in practice.

- Filter Method for Feature Selection: A filter method was applied to the dataset to remove redundant features. Instead of relying on a classification algorithm, filter approaches evaluate features based on intrinsic properties of the data. Filter measures can be based on information theory, correlation, distance, consistency, fuzzy sets, or rough sets. In the first stage, features are scored and ranked, either individually (the univariate case) or in batches (the multivariate case, which handles redundancy naturally). In the second stage, the highest-ranked features are selected using a performance criterion [17].

- a. Training Dataset: The dataset was split into training and testing data in an 8:2 ratio, i.e., 80% of the instances were used for training and 20% for testing.

- b. Applied Models: Machine learning algorithms have shown great success in diabetes prediction. This study explains how a machine learning system can be used to identify diabetic patients. The system uses the 16 attributes of a diabetes dataset that is openly available in the UCI Machine Learning Repository. Four classifiers, namely Random Forest, AdaBoost, KNN, and Bagging, are examined as the core of our research. Each model is explained in detail below.

- Random Forest: The Random Forest algorithm, first proposed by Breiman [18,19], consists of a number of independent tree-structured classifiers, each of which makes its own classification prediction. The final output is the class that receives the most votes across all trees. Adding trees generally improves accuracy until it levels off, and, provided the forest contains enough trees, adding more of them does not cause the model to overfit [20]. This simple machine learning technique typically yields excellent results without hyperparameter tuning. The Random Forest classifier can also cope with missing data and works well with categorical values [21].

- KNN: K Nearest Neighbors (KNN) refers to the K closest neighbors of a sample. The idea behind the approach is that the category of an unknown instance can be determined by looking at the categories of the K known instances closest to it [22]. The category that accounts for the largest share of those K instances is taken as the category of the unknown instance. The choice of K has a significant impact on classification. To classify an instance, the distance between it and every known sample point must be computed. The implementation consists of three steps. First, calculate the distance between the unknown sample and each training instance. Second, locate the sample's K closest neighbors. Third, calculate the proportion of each category among those neighbors and choose the category with the highest proportion as the classification result [23]. KNN algorithms therefore classify new data points entirely on the basis of similarity metrics: a new point is assigned to the class that is most common among its nearest neighbors [24].

- AdaBoost: AdaBoost (Adaptive Boosting), a member of the Boosting family of algorithms [25], was created by Yoav Freund and Robert Schapire in 1995 to build a strong classifier out of a collection of weak classifiers. During training, this kind of learner focuses more on incorrectly classified samples. It modifies the sample distribution and repeats this process until a predetermined number of weak classifiers have been trained, at which point learning is complete [26]. The AdaBoost algorithm maintains a set of weights over the training data and adaptively adjusts them after each weak learning cycle, producing a series of weak learners. The weights of the training samples misclassified by the current weak learner are increased, while the weights of the samples it classifies correctly are decreased [27].

- Bagging: Bagging (bootstrap aggregating) is another ensemble technique in which a group of weak learners is combined to produce a strong learner that performs better than any single one of them. It is a meta-estimator that fits base classifiers on random subsets of the original dataset, drawn with replacement, and then aggregates their individual predictions (by voting or averaging) into a final prediction [20].
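The Importance Graph Analysis step does not state how the importance scores behind the graphs were computed. One common, model-agnostic option is permutation importance: shuffle a single feature column and measure how much accuracy drops. The following is a minimal sketch of that technique under stated assumptions. The two-feature toy data and the `stand_in_model` function are hypothetical, and this is not the authors' code. In practice, `sklearn.inspection.permutation_importance` or a fitted forest's `feature_importances_` attribute would more typically be used.

```python
import random
from typing import Callable, List, Sequence

Row = List[int]


def accuracy(model: Callable[[Row], int], X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows whose predicted label matches the true label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when a single feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy every row, replacing feature j with its shuffled value.
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy data: columns are [Polyuria, Itching], label 1 = diabetic.
X = [[1, 0], [1, 1], [0, 0], [0, 1], [1, 0], [0, 1], [1, 1], [0, 0]]
y = [1, 1, 0, 0, 1, 0, 1, 0]


def stand_in_model(row: Row) -> int:
    """Hypothetical already-trained model that only looks at Polyuria."""
    return row[0]


scores = permutation_importance(stand_in_model, X, y)
# Itching scores exactly 0.0 because the stand-in model ignores it.
print(dict(zip(["Polyuria", "Itching"], scores)))
```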
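The filter-method step can be illustrated with mutual information, one of the information-theoretic measures listed above. The sketch below is a hypothetical univariate filter. It scores each binary feature against the class label and keeps the top `k`. The toy rows and `k=2` are assumptions. Because it is univariate, it measures relevance only and does not detect redundancy between features, which is what the multivariate variants mentioned above address.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Mutual information (in bits) between two discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((px[a] / n) * (py[b] / n)))
    return mi


def filter_select(X, y, names, k):
    """Univariate filter: score each feature against the class, keep the top k."""
    scores = {name: mutual_information([row[j] for row in X], y)
              for j, name in enumerate(names)}
    ranked = sorted(names, key=lambda name: scores[name], reverse=True)
    return ranked[:k], scores


# Hypothetical toy rows: [Polyuria, Itching, Polydipsia]; label 1 = diabetic.
names = ["Polyuria", "Itching", "Polydipsia"]
X = [[1, 1, 1], [1, 0, 1], [1, 1, 1], [1, 0, 0],
     [0, 1, 0], [0, 0, 0], [0, 1, 0], [0, 0, 1]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
selected, scores = filter_select(X, y, names, k=2)
print(selected)  # ['Polyuria', 'Polydipsia']
```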
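The 8:2 split described under Training Dataset can be sketched as one shuffle followed by a cut. With 520 instances, this leaves 416 rows for training and 104 for testing. The paper does not say whether the split was stratified or which random seed was used. The plain random split and `seed=42` below are therefore assumptions, and the placeholder rows only mimic the dataset's size.

```python
import random


def train_test_split(X, y, test_fraction=0.2, seed=42):
    """Shuffle row indices once, then cut them into train and test parts."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = round(len(X) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return ([X[i] for i in train_idx], [X[i] for i in test_idx],
            [y[i] for i in train_idx], [y[i] for i in test_idx])


# Placeholder data with the same number of instances as the dataset (520).
X = [[i] for i in range(520)]
y = [i % 2 for i in range(520)]
X_train, X_test, y_train, y_test = train_test_split(X, y)
print(len(X_train), len(X_test))  # 416 104
```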
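The Random Forest description above combines three ingredients: trees trained on bootstrap samples, a random choice of features, and a majority vote. These can be illustrated with a deliberately small pure-Python sketch. It assumes binary (0/1) features, so a numeric attribute such as Age would first need thresholding. The values `n_trees=25` and `max_depth=3`, and the square-root rule for the number of candidate features per split, are illustrative assumptions, since the paper does not report its hyperparameters. A real experiment would more likely use scikit-learn's `RandomForestClassifier`.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, depth, max_depth, n_features, rng):
    """Grow a tree on binary features. A leaf is a class label; an internal
    node is a tuple (feature_index, left_subtree, right_subtree)."""
    if depth == max_depth or len(set(y)) == 1:
        return majority(y)
    best = None
    # Random-forest step: only a random subset of features is tried at each split.
    for j in rng.sample(range(len(X[0])), n_features):
        left = [i for i, row in enumerate(X) if row[j] == 0]
        right = [i for i, row in enumerate(X) if row[j] == 1]
        if not left or not right:
            continue
        score = (len(left) * gini([y[i] for i in left])
                 + len(right) * gini([y[i] for i in right])) / len(y)
        if best is None or score < best[0]:
            best = (score, j, left, right)
    if best is None:
        return majority(y)
    _, j, left, right = best
    left_tree = build_tree([X[i] for i in left], [y[i] for i in left],
                           depth + 1, max_depth, n_features, rng)
    right_tree = build_tree([X[i] for i in right], [y[i] for i in right],
                            depth + 1, max_depth, n_features, rng)
    return (j, left_tree, right_tree)


def predict_tree(node, row):
    while isinstance(node, tuple):
        j, left, right = node
        node = right if row[j] == 1 else left
    return node


def fit_forest(X, y, n_trees=25, max_depth=3, seed=0):
    rng = random.Random(seed)
    n_features = max(1, int(len(X[0]) ** 0.5))  # common sqrt(d) heuristic
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: len(X) rows drawn with replacement.
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 0, max_depth, n_features, rng))
    return forest


def predict_forest(forest, row):
    """Majority vote over all trees."""
    return majority([predict_tree(tree, row) for tree in forest])


# Hypothetical toy data in which every binary feature separates the two classes.
y = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
X = [[v, v, 1 - v, v] for v in y]
forest = fit_forest(X, y)
print([predict_forest(forest, row) for row in X])
```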
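The three KNN steps described above map directly onto a short function. The sketch assumes Euclidean distance and `k=3`; the paper specifies neither. If a numeric attribute such as Age is in the feature set, the features would also need scaling so that Age does not dominate the distance. The toy rows are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(X_train, y_train, query, k=3):
    """Return (predicted_label, share of the k neighbours carrying that label)."""
    # Step 1: distance from the query to every training instance.
    distances = [(euclidean(row, query), label) for row, label in zip(X_train, y_train)]
    # Step 2: keep the k closest neighbours (the sort is stable on ties).
    neighbours = sorted(distances, key=lambda pair: pair[0])[:k]
    # Step 3: proportion of each category among the neighbours; take the largest.
    label, count = Counter(lbl for _, lbl in neighbours).most_common(1)[0]
    return label, count / k


# Hypothetical binary rows: [Polyuria, Polydipsia, Weakness]; label 1 = diabetic.
X_train = [[1, 1, 1], [1, 1, 0], [1, 0, 1], [0, 0, 0], [0, 0, 1], [0, 1, 0]]
y_train = [1, 1, 1, 0, 0, 0]
print(knn_predict(X_train, y_train, [1, 0, 0]))  # (1, 0.6666666666666666)
```

For the query `[1, 0, 0]`, the three nearest rows all lie at distance 1 and two of them are diabetic. The predicted class is therefore 1, with a proportion of 2/3.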
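The AdaBoost weight-update loop described above can be sketched as discrete AdaBoost whose weak learners are one-feature threshold rules (decision stumps). The choice of stumps, the toy data, and `n_rounds=3` are assumptions for illustration; the paper does not state which weak learner its AdaBoost model used. Labels are encoded as +1/-1, so the update `exp(-alpha * y * h(x))` raises the weight of misclassified samples and lowers the rest.

```python
import math


def stump(row, j, threshold, polarity):
    """Weak learner: +polarity if feature j >= threshold, otherwise -polarity."""
    return polarity if row[j] >= threshold else -polarity


def fit_stump(X, y, w):
    """Return (weighted_error, j, threshold, polarity) of the best stump under weights w."""
    best = None
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                err = sum(wi for wi, row, yi in zip(w, X, y)
                          if stump(row, j, threshold, polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, threshold, polarity)
    return best


def fit_adaboost(X, y, n_rounds=10):
    """Discrete AdaBoost with decision stumps; labels in y must be +1 or -1."""
    w = [1.0 / len(X)] * len(X)  # start from uniform sample weights
    model = []
    for _ in range(n_rounds):
        err, j, threshold, polarity = fit_stump(X, y, w)
        if err >= 0.5:
            break  # the weak learner is no better than chance
        err = max(err, 1e-10)  # avoid log(0) when a stump is perfect
        alpha = 0.5 * math.log((1 - err) / err)  # this stump's vote weight
        model.append((alpha, j, threshold, polarity))
        # Misclassified samples (y * h = -1) grow by e^alpha; correct ones shrink by e^-alpha.
        w = [wi * math.exp(-alpha * yi * stump(row, j, threshold, polarity))
             for wi, row, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]  # renormalise to a distribution
    return model


def predict_adaboost(model, row):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump(row, j, t, p) for alpha, j, t, p in model)
    return 1 if score >= 0 else -1


# Hypothetical 1-D toy data that no single threshold can separate.
X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]
model = fit_adaboost(X, y, n_rounds=3)
print([predict_adaboost(model, row) for row in X])  # [1, 1, -1, -1, 1, 1]
```

The best single stump misclassifies two of the six points, but three boosted stumps classify all of them correctly.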
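Because bagging is a meta-estimator, it can be sketched as a function that accepts any base learner, fits it on bootstrap samples, and combines the fitted models by majority vote. The one-rule base learner (`fit_one_rule`), the toy rows, and `n_estimators=11` are hypothetical choices for illustration, not the configuration used in the paper.

```python
import random
from collections import Counter


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_one_rule(X, y):
    """Hypothetical base learner (OneR): pick the single feature whose
    value -> majority-label table makes the fewest training errors.
    Unseen feature values fall back to the overall majority label."""
    default = majority(y)
    best = None
    for j in range(len(X[0])):
        groups = {}
        for row, label in zip(X, y):
            groups.setdefault(row[j], []).append(label)
        rule = {value: majority(labels) for value, labels in groups.items()}
        errors = sum(rule[row[j]] != label for row, label in zip(X, y))
        if best is None or errors < best[0]:
            best = (errors, j, rule)
    _, j, rule = best
    return lambda row: rule.get(row[j], default)


def fit_bagging(fit_base, X, y, n_estimators=11, seed=0):
    """Meta-estimator: fit one base model per bootstrap sample of the data."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # draw with replacement
        models.append(fit_base([X[i] for i in idx], [y[i] for i in idx]))
    return models


def predict_bagging(models, row):
    """Aggregate the base models' predictions by majority vote."""
    return majority([model(row) for model in models])


# Hypothetical toy rows: [Polyuria, Itching]; label 1 = diabetic.
X = [[1, 0], [1, 1], [1, 0], [1, 1], [1, 0], [0, 1], [0, 0], [0, 1], [0, 0], [0, 1]]
y = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
models = fit_bagging(fit_one_rule, X, y)
print([predict_bagging(models, row) for row in X])
```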

4. Result

- A. 10-Fold Cross-Validation Analysis

- B. Confusion Matrix Analysis

- True Positive (TP): Diabetic patients correctly identified as diabetic.

- False Positive (FP): Healthy (non-diabetic) patients incorrectly classified as diabetic.

- True Negative (TN): Healthy patients correctly identified as healthy.

- False Negative (FN): Diabetic patients incorrectly classified as non-diabetic.
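The four confusion-matrix outcomes can be counted as sketched below, treating diabetic as the positive class in line with the definitions above. The labels are made up for illustration. Sensitivity, specificity, and precision are standard rates derived from the same four counts; they are included only to show how they relate to accuracy.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, TN, FN), treating `positive` (diabetic) as the positive class."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1  # diabetic, predicted diabetic
            else:
                fp += 1  # healthy, predicted diabetic
        elif t == positive:
            fn += 1      # diabetic, predicted healthy
        else:
            tn += 1      # healthy, predicted healthy
    return tp, fp, tn, fn


def rates(tp, fp, tn, fn):
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,  # recall on diabetic patients
        "specificity": tn / (tn + fp) if tn + fp else 0.0,  # recall on healthy patients
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


# Hypothetical labels: 1 = diabetic, 0 = healthy.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
counts = confusion_counts(y_true, y_pred)
print(counts)  # (3, 1, 3, 1)
print(rates(*counts))  # every rate is 0.75 for this toy example
```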

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fitriyani, N.L.; Syafrudin, M.; Alfian, G.; Rhee, J. Development of Disease Prediction Model Based on Ensemble Learning Approach for Diabetes and Hypertension. IEEE Access 2019, 7, 144777–144789.

- Banchhor, M.; Singh, P. Comparative study of ensemble learning algorithms on early stage diabetes risk prediction. In Proceedings of the 2021 2nd International Conference for Emerging Technology (INCET), Belagavi, India, 21–23 May 2021.

- Warsi, G.G.; Saini, S.; Khatri, K. Ensemble Learning on Diabetes Data Set and Early Diabetes Prediction. In Proceedings of the 2019 International Conference on Computing, Power and Communication Technologies (GUCON), New Delhi, India, 27–28 September 2019; pp. 182–187.

- Ahmed, T.M. Developing a predicted model for diabetes type 2 treatment plans by using data mining. J. Theor. Appl. Inf. Technol. 2016, 90, 181–187.

- Lohani, B.P.; Thirunavukkarasan, M. A Review: Application of Machine Learning Algorithm in Medical Diagnosis. In Proceedings of the 2021 International Conference on Technological Advancements and Innovations (ICTAI), Tashkent, Uzbekistan, 10–12 November 2021; pp. 378–381.

- Malini, M.; Gopalakrishnan, B.; Dhivya, K.; Naveena, S. Diabetic Patient Prediction using Machine Learning Algorithm. In Proceedings of the 2021 Smart Technologies, Communication and Robotics (STCR), Sathyamangalam, India, 9–10 October 2021.

- Huda, S.M.A.; Ila, I.J.; Sarder, S.; Shamsujjoha, M.; Ali, M.N.Y. An Improved Approach for Detection of Diabetic Retinopathy Using Feature Importance and Machine Learning Algorithms. In Proceedings of the 2019 7th International Conference on Smart Computing & Communications (ICSCC), Sarawak, Malaysia, 28–30 June 2019.

- Prem, S.S.; Umesh, A.C. Classification of Exudates for Diabetic Retinopathy Prediction using Machine Learning. In Proceedings of the 2020 IEEE 5th International Conference on Computing Communication and Automation (ICCCA), Greater Noida, India, 30–31 October 2020; pp. 357–362.

- Bhat, S.S.; Ansari, G.A. Predictions of diabetes and diet recommendation system for diabetic patients using machine learning techniques. In Proceedings of the 2021 2nd International Conference for Emerging Technology (INCET), Belagavi, India, 21–23 May 2021; pp. 1–5.

- Ahmed, U.; Issa, G.F.; Khan, M.A.; Aftab, S.; Khan, M.F.; Said, R.A.; Ghazal, T.M.; Ahmad, M. Prediction of Diabetes Empowered with Fused Machine Learning. IEEE Access 2022, 10, 8529–8538.

- Samet, S.; Laouar, M.R.; Bendib, I. Diabetes mellitus early stage risk prediction using machine learning algorithms. In Proceedings of the 2021 International Conference on Networking and Advanced Systems (ICNAS), Annaba, Algeria, 27–28 October 2021.

- Emon, M.U.; Zannat, R.; Khatun, T.; Rahman, M.; Keya, M.S.; Ohidujjaman. Performance Analysis of Diabetic Retinopathy Prediction using Machine Learning Models. In Proceedings of the 2021 6th International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 20–22 January 2021; pp. 1048–1052.

- Jyotheeswar, S.; Kanimozhi, K.V. Prediction of Diabetic Retinopathy using Novel Decision Tree Method in Comparison with Support Vector Machine Model to Improve Accuracy. In Proceedings of the 2022 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), Erode, India, 7–9 April 2022; pp. 44–47.

- Paliwal, M.; Saraswat, P. Research on Diabetes Prediction Method Based on Machine Learning. In Proceedings of the 2022 2nd International Conference on Technological Advancements in Computational Sciences (ICTACS), Tashkent, Uzbekistan, 10–12 October 2022; pp. 415–419.

- Islam, M.M.F.; Ferdousi, R.; Rahman, S.; Bushra, H.Y. Likelihood Prediction of Diabetes at Early Stage Using Data Mining Techniques. Adv. Intell. Syst. Comput. 2020, 992, 113–125.

- Cherrington, M.; Thabtah, F.; Lu, J.; Xu, Q. Feature selection: Filter methods performance challenges. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi Arabia, 3–4 April 2019; pp. 1–4.

- Jin, Z.; Shang, J.; Zhu, Q.; Ling, C.; Xie, W.; Qiang, B. RFRSF: Employee Turnover Prediction Based on Random Forests and Survival Analysis. In Proceedings of the 21st International Conference on Web Information Systems Engineering, WISE 2020, Amsterdam, The Netherlands, 20–24 October 2020.

- Zhang, L.; Cui, H.; Welsch, R.E. A Study on Multidimensional Medical Data Processing Based on Random Forest. In Proceedings of the 2020 5th International Conference on Universal Village (UV), Boston, MA, USA, 24–27 October 2020; pp. 1–5.

- Sapra, V.; Sapra, L.; Vishnoi, A.; Srivastava, P. Identification of Brain Stroke using Boosted Random Forest. In Proceedings of the 2022 International Conference on Advances in Computing, Communication and Materials (ICACCM), Dehradun, India, 10–11 November 2022; pp. 1–5.

- Swarupa, A.N.V.K.; Sree, V.H.; Nookambika, S.; Kishore, Y.K.S.; Teja, U.R. Disease Prediction: Smart Disease Prediction System using Random Forest Algorithm. In Proceedings of the 2021 IEEE International Conference on Intelligent Systems, Smart and Green Technologies (ICISSGT), Visakhapatnam, India, 13–14 November 2021; pp. 48–51.

- Abu-Aisheh, Z.; Raveaux, R.; Ramel, J.Y. Efficient k-nearest neighbors search in graph space. Pattern Recognit. Lett. 2020, 134, 77–86.

- Zhu, Y.; Wang, J.; Li, X. Research on GA-KNN Image Classification Algorithm. In Proceedings of the 2022 4th International Conference on Artificial Intelligence and Advanced Manufacturing (AIAM), Hamburg, Germany, 7–9 October 2022; pp. 278–282.

- Chethana, C. Prediction of heart disease using different KNN classifier. In Proceedings of the 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021; pp. 1186–1194.

- Harvey, P.K.; Brewer, T.S. On the neutron absorption properties of basic and ultrabasic rocks: The significance of minor and trace elements. Geol. Soc. Spec. Publ. 2005, 240, 207–217.

- Zhang, Y.; Ni, M.; Zhang, C.; Liang, S.; Fang, S.; Li, R.; Tan, Z. Research and application of adaboost algorithm based on SVM. In Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 24–26 May 2019; pp. 662–666.

- Wang, R. AdaBoost for Feature Selection, Classification and Its Relation with SVM, A Review. Phys. Procedia 2012, 25, 800–807.

- Ariza-López, F.J.; Rodríguez-Avi, J.; Alba-Fernández, M.V. Complete control of an observed confusion matrix. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium 2018, Valencia, Spain, 22–27 July 2018; Volume 2018, pp. 1222–1225.

- Li, X.; Rai, L. Apple Leaf Disease Identification and Classification using ResNet Models. In Proceedings of the 2020 IEEE 3rd International Conference on Electronic Information and Communication Technology (ICEICT), Shenzhen, China, 13–15 November 2020; pp. 738–742.

| Dataset | Number of Attributes | Number of Instances |
|---|---|---|
| Diabetic Patients Data | 16 | 520 |

| S_No. | Feature Name | Values | Missing | Non-Numeric |
|---|---|---|---|---|
| 1. | Age | 1–100 | No | No |
| 2. | Sex | Male and Female | No | Yes |
| 3. | Polydipsia | Yes/No | No | Yes |
| 4. | Polyuria | Yes/No | No | Yes |
| 5. | Sudden weight loss | Yes/No | No | Yes |
| 6. | Polyphagia | Yes/No | No | Yes |
| 7. | Weakness | Yes/No | No | Yes |
| 8. | Genital thrush | Yes/No | No | Yes |
| 9. | Visual blurring | Yes/No | No | Yes |
| 10. | Irritability | Yes/No | No | Yes |
| 11. | Itching | Yes/No | No | Yes |
| 12. | Partial paresis | Yes/No | No | Yes |
| 13. | Delayed healing | Yes/No | No | Yes |
| 14. | Muscle stiffness | Yes/No | No | Yes |
| 15. | Obesity | Yes/No | No | Yes |
| 16. | Alopecia | Yes/No | No | Yes |
| 17. | Class | Yes/No | No | Yes |

| Training Models | Random Forest | AdaBoost | KNN | Bagging |
|---|---|---|---|---|
| Obtained Accuracy | 97.03% | 91.11% | 92.19% | 94.03% |

| Training Models | Random Forest | AdaBoost | KNN | Bagging |
|---|---|---|---|---|
| Accuracy attained through 10-fold cross-validation | 95.03% | 90.88% | 91.39% | 92.94% |
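The 10-fold cross-validation behind the table above can be sketched in three steps. Shuffle the rows once, deal them into ten folds, and let each fold serve once as the test set while the other nine train the model. The plain (non-stratified) folding, the seed, the placeholder data, and the `fit_table`/`predict_table` stand-in classifier are all assumptions. Any of the four models could be plugged into `cross_val_accuracy` instead.

```python
import random
from collections import Counter


def k_fold_indices(n, k=10, seed=0):
    """Shuffle 0..n-1 once and deal the indices into k nearly equal folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_val_accuracy(fit, predict, X, y, k=10, seed=0):
    """Each fold is the test set exactly once; the other k-1 folds train the model."""
    scores = []
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        correct = sum(predict(model, X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / k, scores


def fit_table(X, y):
    """Hypothetical stand-in classifier: majority label for each value of feature 0."""
    table = {}
    for row, label in zip(X, y):
        table.setdefault(row[0], Counter())[label] += 1
    return {value: counts.most_common(1)[0][0] for value, counts in table.items()}


def predict_table(model, row):
    return model.get(row[0], 0)


# Placeholder data with 520 rows, in which feature 0 equals the label.
X = [[i % 2, i % 3] for i in range(520)]
y = [i % 2 for i in range(520)]
mean_acc, fold_scores = cross_val_accuracy(fit_table, predict_table, X, y)
print(len(fold_scores), mean_acc)  # 10 1.0
```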

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yadu, S.; Chandra, R.; Sinha, V.K. Comparing Different Machine Learning Techniques in Predicting Diabetes on Early Stage. Eng. Proc. 2024, 62, 20. https://doi.org/10.3390/engproc2024062020


Chicago/Turabian Style: Yadu, Shweta, Rashmi Chandra, and Vivek Kumar Sinha. 2024. "Comparing Different Machine Learning Techniques in Predicting Diabetes on Early Stage" Engineering Proceedings 62, no. 1: 20. https://doi.org/10.3390/engproc2024062020

APA Style: Yadu, S., Chandra, R., & Sinha, V. K. (2024). Comparing Different Machine Learning Techniques in Predicting Diabetes on Early Stage. Engineering Proceedings, 62(1), 20. https://doi.org/10.3390/engproc2024062020