Text Summarization Using Deep Learning Techniques: A Review

Abstract

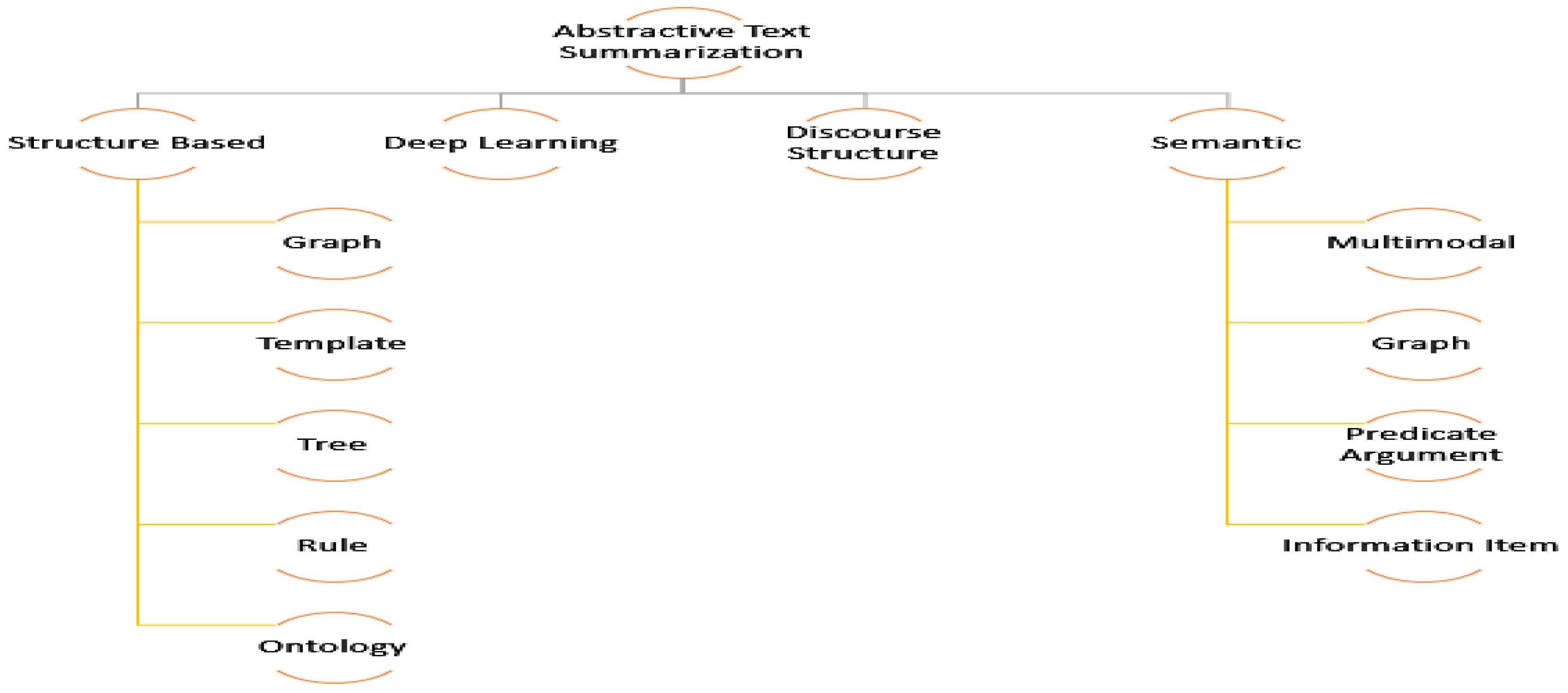

1. Introduction

2. Literature Survey

3. Competitive Analysis

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gambhir, M.; Gupta, V. Recent automatic text summarization techniques: A survey. Artif. Intell. Rev. 2017, 47, 1–66.

2. Gupta, S.; Gupta, S.K. Abstractive summarization: An overview of the state of the art. Expert Syst. Appl. 2019, 121, 49–65.

3. Thu, H.N.T.; Huu, Q.N.; Ngoc, T.N.T. A supervised learning method combine with dimensionality reduction in Vietnamese text summarization. In Proceedings of the 2013 Computing, Communications and IT Applications Conference (ComComAp), Hong Kong, China, 1–4 April 2013; pp. 69–73.

4. Abuobieda, A.; Salim, N.; Kumar, Y.J.; Osman, A.H. Opposition differential evolution based method for text summarization. In Proceedings of the Asian Conference on Intelligent Information and Database Systems, Kuala Lumpur, Malaysia, 18–20 March 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 487–496.

5. Kabeer, R.; Idicula, S.M. Text summarization for Malayalam documents: An experience. In Proceedings of the 2014 International Conference on Data Science & Engineering (ICDSE), Chicago, IL, USA, 31 March–4 April 2014; pp. 145–150.

6. Hong, K.; Nenkova, A. Improving the estimation of word importance for news multi-document summarization. In Proceedings of the 14th Conference of the European Chapter of the Association for Computational Linguistics, Gothenburg, Sweden, 26–30 April 2014; pp. 712–721.

7. Fattah, M.A. A hybrid machine learning model for multi-document summarization. Appl. Intell. 2014, 40, 592–600.

8. Zhong, S.; Liu, Y.; Li, B.; Long, J. Query-oriented unsupervised multi-document summarization via deep learning model. Expert Syst. Appl. 2015, 42, 8146–8155.

9. Yao, C.; Shen, J.; Chen, G. Automatic document summarization via deep neural networks. In Proceedings of the 2015 8th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 12–13 December 2015; Volume 1, pp. 291–296.

10. Singh, S.P.; Kumar, A.; Mangal, A.; Singhal, S. Bilingual automatic text summarization using unsupervised deep learning. In Proceedings of the 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), Chennai, India, 3–5 March 2016; pp. 1195–1200.

11. Yousefi-Azar, M.; Hamey, L. Text summarization using unsupervised deep learning. Expert Syst. Appl. 2017, 68, 93–105.

12. Chopade, H.A.; Narvekar, M. Hybrid auto text summarization using deep neural network and fuzzy logic system. In Proceedings of the 2017 International Conference on Inventive Computing and Informatics (ICICI), Coimbatore, India, 23–24 November 2017; pp. 52–56.

13. Shirwandkar, N.S.; Kulkarni, S. Extractive text summarization using deep learning. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–5.

14. Sahba, R.; Ebadi, N.; Jamshidi, M.; Rad, P. Automatic text summarization using customizable fuzzy features and attention on the context and vocabulary. In Proceedings of the 2018 World Automation Congress (WAC), Stevenson, WA, USA, 3–6 June 2018; pp. 1–5.

15. Song, S.; Huang, H.; Ruan, T. Abstractive text summarization using LSTM-CNN based deep learning. Multimed. Tools Appl. 2019, 78, 857–875.

16. Abujar, S.; Hasan, M.; Hossain, S.A. Sentence similarity estimation for text summarization using deep learning. In Proceedings of the 2nd International Conference on Data Engineering and Communication Technology, Pune, India, 15–16 December 2019; Springer: Singapore, 2019; pp. 155–164.

17. Al-Maleh, M.; Desouki, S. Arabic text summarization using deep learning approach. J. Big Data 2020, 7, 109.

18. Wazery, Y.M.; Saleh, M.E.; Alharbi, A.; Ali, A.A. Abstractive Arabic Text Summarization Based on Deep Learning. Comput. Intell. Neurosci. 2022, 2022, 1566890.

| Sr. No. | Summary Types | Criterion |
|---|---|---|
| 1 | Single or multiple input documents | Input documents (various types of text data) |
| 2 | Same words (extractive) or new words (abstractive) | Semantics of the output |
| 3 | Query-focused or generic | Purpose |
| 4 | Unsupervised, supervised, or semi-supervised | Data availability |
| 5 | Monolingual, multilingual, or cross-lingual | Language |
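Row 2 of the table above contrasts summaries that reuse the source's own wording (extractive) with summaries that generate new words (abstractive). To make the extractive case concrete, the sketch below selects whole sentences by the average document frequency of their content words. This is a classic word-frequency heuristic chosen only for illustration, not a method from any of the surveyed papers; the stop-word list, the naive sentence splitter, and the function names (`split_sentences`, `content_words`, `extractive_summary`) are hypothetical assumptions. It is also generic (not query-focused), single-document, monolingual, and unsupervised, so it sits at one corner of each criterion in the table.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real systems use larger lists).
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "and", "to",
              "in", "for", "on", "with", "it", "this", "that"}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def content_words(sentence: str) -> list[str]:
    """Lower-cased alphabetic tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOP_WORDS]


def extractive_summary(text: str, k: int = 2) -> list[str]:
    """Pick the k sentences whose content words are most frequent in the document.

    The output reuses source sentences verbatim (extractive) and keeps them
    in their original document order.
    """
    sentences = split_sentences(text)
    # Document-level frequency of every content word.
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        # Average frequency, so long sentences are not favoured just for length.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    # sorted() is stable even with reverse=True, so ties keep document order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


if __name__ == "__main__":
    doc = ("Deep learning models summarize text. "
           "Extractive models select sentences from the text. "
           "Abstractive models generate new sentences. "
           "The weather was pleasant.")
    for sentence in extractive_summary(doc, k=2):
        print(sentence)
```

On the sample document this keeps the first two sentences and drops the off-topic weather sentence. An abstractive system, by contrast, would be free to emit wording that appears nowhere in the input, which is why the surveyed abstractive methods rely on sequence-to-sequence decoders rather than sentence selection.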

| Ref. No. | Technique Used | Pros | Cons | Corpus Used | Reported Performance |
|---|---|---|---|---|---|
| [3] | Three-layer feed-forward network | Applied to Vietnamese text; no prior work on Vietnamese text summarization | Requires human-generated summaries for comparison and evaluation | Manually created corpus of 300 documents (16,117 sentences) for training and testing | Precision 0.875 for 80% of the text |
| [4] | Opposition-based learning | Enhances the differential evolution (DE) search algorithm for better text clustering | RNN outperforms the method in ROUGE-1 score | DUC 2002 dataset | ROUGE-2 score 0.22356 |
| [5] | Statistical and semantic methods | Applied to Malayalam text summarization; syntactic structure is used for effective summary generation | Time complexity is the key limitation when summarizing large texts | Manually created corpus of 25 Malayalam documents | ROUGE-L precision: 0.637 (statistical method), 0.466 (semantic method) |
| [6] | REGSUM model | Identifies word weights effectively, using a novel model to cluster high-weight words | DPP outperforms the method in ROUGE-2 score | DUC 2003 dataset | ROUGE-2 score 9.75 |
| [7] | Hybrid model | Effective feature extraction using a hybrid approach | A multi-layer CNN model outperforms the method | DUC 2002 dataset | ROUGE-1 score 0.3862 |
| [8] | Deep learning architecture | Query-oriented multi-document summarization for English | Ranking SVM outperforms the method in ROUGE-1 and ROUGE-2 scores | DUC 2005, 2006, and 2007 datasets | ROUGE-SU4 score 0.1685 |
| [9] | Deep neural network | Outperforms random, LEAD, and LSA algorithms in ROUGE-L score | DSDR-nonlinear outperforms the method in ROUGE-1, ROUGE-2, and ROUGE-L scores | DUC 2006 and 2007 datasets for training and testing | ROUGE-L scores 0.31008 and 0.36881 |
| [10] | Restricted Boltzmann machine | Used for bilingual text summarization | RNN outperforms the method in ROUGE-1 score | Manually created corpus for testing | ROUGE-1 score 0.85233 |
| [11] | Ensemble noisy autoencoder | Summaries are generated using autoencoders | Time complexity increases with the number of connected layers | SKE and BC3 datasets for training and testing | ROUGE-2 score 0.5031 |
| [12] | Hybrid model | Performs 31% better than the individual fuzzy-system and ANN models | BNN outperforms the method in ROUGE-1 score | Manually created corpus for training and testing | ROUGE-1, ROUGE-2, and ROUGE-L score 0.75 |
| [13] | Hybrid model | Outperforms the RBM method in ROUGE-1 score | CNN outperforms the method in ROUGE-2 score | Manually created corpus for training and testing | ROUGE-1 score 0.84 |
| [14] | Combined model | Outperforms the sequence-to-sequence model in ROUGE-1 score | Feature customization increases vocabulary complexity | CNN/Daily Mail dataset | ROUGE-1 score 0.3619 |
| [15] | LSTM-CNN model | Improved semantic and syntactic structure for word formation | Time complexity increases with additional layers | CNN/Daily Mail corpus | ROUGE-2 score 0.178 |
| [16] | Sentence similarity measuring model | Bengali text summarization using a vocabulary-focused model | Backtracking methods outperform the method in ROUGE-L score | Manually created corpus for training and testing | Wu and Palmer (WP) similarity 1 |
| [17] | Sequence-to-sequence deep learning model | Arabic text summarization using a structure-based approach | Transformer outperforms the method in ROUGE-2 score | Arabic dataset created for training and testing | ROUGE-1 score 0.4423 |
| [18] | BiLSTM model | Arabic text summarization using syntax | Time complexity | Arabic dataset created for training and testing | ROUGE-L score 0.3437 |
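Most results in the comparison table are ROUGE scores. ROUGE-N counts the n-grams shared by a candidate summary and a reference summary, and ROUGE-L uses the length of their longest common subsequence (LCS). The sketch below computes both as precision, recall, and F1 for a single reference. It is a simplified illustration under stated assumptions: lower-cased whitespace tokenization, no stemming or stop-word removal, one reference, and a balanced F1 for ROUGE-L; the official ROUGE toolkit used in the surveyed papers offers more options, so its numbers can differ. The function names are hypothetical.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def prf(overlap: int, cand_total: int, ref_total: int) -> tuple[float, float, float]:
    """Precision, recall, and balanced F1 from an overlap count."""
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def rouge_n(candidate: str, reference: str, n: int = 1) -> tuple[float, float, float]:
    """ROUGE-N with clipped n-gram matches (Counter '&' takes the minimum count)."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())
    return prf(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a: list[str], b: list[str]) -> int:
    """Longest common subsequence length by dynamic programming, O(len(a) * len(b))."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> tuple[float, float, float]:
    """ROUGE-L: LCS length divided by candidate length (P) and reference length (R)."""
    c = candidate.lower().split()
    r = reference.lower().split()
    return prf(lcs_length(c, r), len(c), len(r))


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    candidate = "the cat lay on the mat"
    print("ROUGE-1", rouge_n(candidate, reference, 1))
    print("ROUGE-2", rouge_n(candidate, reference, 2))
    print("ROUGE-L", rouge_l(candidate, reference))
```

For this pair, 5 of 6 unigrams match (ROUGE-1 F1 of about 0.83), 3 of 5 bigrams match (ROUGE-2 F1 of 0.6), and the LCS "the cat on the mat" has length 5 (ROUGE-L F1 of about 0.83). The gap between ROUGE-1 and ROUGE-2 illustrates why the table's ROUGE-2 figures are typically lower than ROUGE-1 figures for the same system.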

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Saiyyad, M.M.; Patil, N.N. Text Summarization Using Deep Learning Techniques: A Review. Eng. Proc. 2023, 59, 194. https://doi.org/10.3390/engproc2023059194


Chicago/Turabian Style: Saiyyad, Mohmmadali Muzffarali, and Nitin N. Patil. 2023. "Text Summarization Using Deep Learning Techniques: A Review" Engineering Proceedings 59, no. 1: 194. https://doi.org/10.3390/engproc2023059194
