Abstract

Brain tumour detection and classification are potentially life-saving steps in patient care. Many medical imaging techniques can reveal abnormalities in the brain, including nuclear magnetic resonance, ultrasound, X-rays, radionuclides, lasers, electrons, and light. Because of its excellent image quality and lack of ionising radiation, magnetic resonance imaging (MRI) is widely used in medical imaging. Interpreting MRI scans manually is tedious and time-consuming, and artificial intelligence offers a way to automate this task. Deep learning, and convolutional neural networks (CNNs) in particular, have performed well in detecting brain tumours. In this work, the authors apply deep transfer learning to brain tumour classification, using the pre-trained CNN models VGG-16, ResNet-50, and Inception v3 to predict and categorise brain tumours automatically. On a dataset of 7023 brain MRI images divided into four classes, the pre-trained models are shown to be effective. The performance of the three models is compared, and the experimental evaluation shows that ResNet-50 outperforms VGG-16 and Inception v3. The results therefore support the use of ResNet-50 for brain tumour classification.

1. Introduction

A brain tumour is an abnormal growth of cells in or close to the brain. Such tumours can arise in the brain tissue itself or in nearby anatomical structures, including neural pathways, the pituitary and pineal glands, and the membranes covering the surface of the brain [1]. Tumours that originate in the brain are called primary brain tumours; tumours that have spread to the brain from another part of the body are called secondary or metastatic brain tumours. Brain tumours can also be classified as benign (non-cancerous) or malignant (cancerous). Benign brain tumours can be surgically removed, whereas malignant brain tumours, which are among the deadliest types of cancer, may be lethal [2].

Early detection is important for the effective treatment of brain tumours [3]. Primary central nervous system lymphomas and gliomas are the two most common primary brain tumours in adults and together account for more than 80% of malignant brain tumours. Symptoms, which include headache, nausea, deteriorating vision, seizures, and disorientation, vary depending on where the lesion is located. For a more detailed categorisation, brain tumours are assigned to one of four grades: the higher the grade, the more aggressive the tumour. The Global Cancer Statistics 2020 report estimated approximately 308,000 new cases of brain cancer in 2020 (1.6% of all new cancer cases) and approximately 251,000 brain cancer-related deaths (2.5% of all cancer-related deaths) [2].

Importance of MRI Biomedical Image Processing

Classifying images of brain tumours is one of the most important tasks in medical image processing, as it helps medical professionals make accurate diagnoses and plan treatment. Magnetic resonance imaging (MRI) is one of the primary imaging methods used to examine brain tissue [4].

A skilled radiologist can analyse a brain MRI scan and, depending on the type of tumour, determine the best course of therapy [5]. For large amounts of data and diverse types of brain tumours, however, this procedure is laborious and prone to human error [6]. MRI produces images of internal organs using a very strong magnet, magnetic field gradients, and radio waves, with the measured signals reconstructed into images by a computer. The resulting high-resolution images can be used to find and identify tumours in the human brain, and compared with CT scans, MRI produces more detailed images of soft tissue without ionising radiation. Examining brain tumours on MRI scans is therefore central to treating patients effectively.

2. Materials and Methods

The dataset used in this study was obtained from Kaggle. It is a collection of 7023 raw brain MRI images in JPEG format, divided into four classes: glioma, pituitary, meningioma, and no tumour. The image dimensions (width × height, in pixels) vary from image to image.

2.1. Literature Review

In this section, the authors discuss notable work by other researchers in the related domain, focusing on state-of-the-art methods, which are primarily CNN-based. For instance, the authors in [7] applied several classification algorithms based on CNN architectures, including VGGNets, GoogleNet, and ResNets, each in several configurations. ResNet-50 performed best in [7], with an accuracy of 96.50%, followed by GoogleNet and VGGNets with accuracies of 93.45% and 89.33%, respectively.

Further, the authors in [8] used leave-one-out cross-validation and achieved accuracies of 85% and 75% with a support vector machine and linear discriminant analysis, respectively. That study argues that a set of key metabolites helps differentiate paediatric brain tumours. In another work [9], several models, including VGG-16, ResNet-50, and EfficientNet-B0, were compared, and EfficientNet-B0 performed best, with an accuracy of 98.8%.

The authors in [10] proposed a five-step methodology. In the first step, the source image is enhanced using linear contrast stretching. In the second step, a custom 17-layer deep neural network is designed specifically for brain tumour segmentation. In the third step, a modified MobileNetV2 architecture, trained using transfer learning, is used for feature extraction. In the fourth step, the best features are selected using an entropy-based controlled technique in conjunction with a multiclass support vector machine (M-SVM). In the fifth step, the M-SVM classifies the images as meningioma, glioma, or pituitary tumours. According to the experimental investigation, this five-step process outperforms other methods both visually and quantitatively, achieving accuracies of 97.47% and 98.92%.

Further, in a study based on a hybrid deep learning model [11], a fine-tuned GoogLeNet model achieved an accuracy of 93.1%, and GoogLeNet combined with an SVM achieved an accuracy of 98.1%. The authors in [12] used three pre-trained CNN models, MobileNetV2, VGG19, and Inception v3, to classify brain X-ray images, applying transfer learning from ImageNet to cope with the small amount of data. For MobileNetV2, the F1 scores for the brain tumour and healthy classes were 93% and 91%, respectively.

The authors in [13] applied a U-Net model to two standard datasets, the Brain Tumour Segmentation (BraTS) Challenge 2017 and 2018 datasets, achieving segmentation accuracies of 93.40% and 92.20%, respectively. The images were segmented into three tumour sub-regions: tumour core (TC), enhancing core (EC), and whole tumour (WT), and the comparative evaluation established the efficacy of U-Net. Similarly, the authors in [14] approached tumour identification through hyperparameter tuning, choosing the parameters that gave the best results; that work also advocated the CNN-based U-Net model, which achieved an accuracy of 92%.

The authors in [15] also used transfer learning to compare the VGG-16, ResNet-50, and Inception v3 models, which achieved accuracies of 96%, 89%, and 75%, respectively; in that study, VGG-16 outperformed the other two models. The authors in [16] likewise used a CNN-based approach: training accuracy was 47.02% in the first epoch and rose to 96% by the 15th epoch, and the model achieved an accuracy of 96% when tested on 25 new MRI images. The authors in [2] explored VGG-16 for brain tumour classification and achieved an accuracy of 91%, which increased to 94% after hyperparameter tuning.

The literature surveyed above thus shows that deep learning has considerable potential for building efficient models for automated brain tumour classification.

2.2. Proposed Methodology

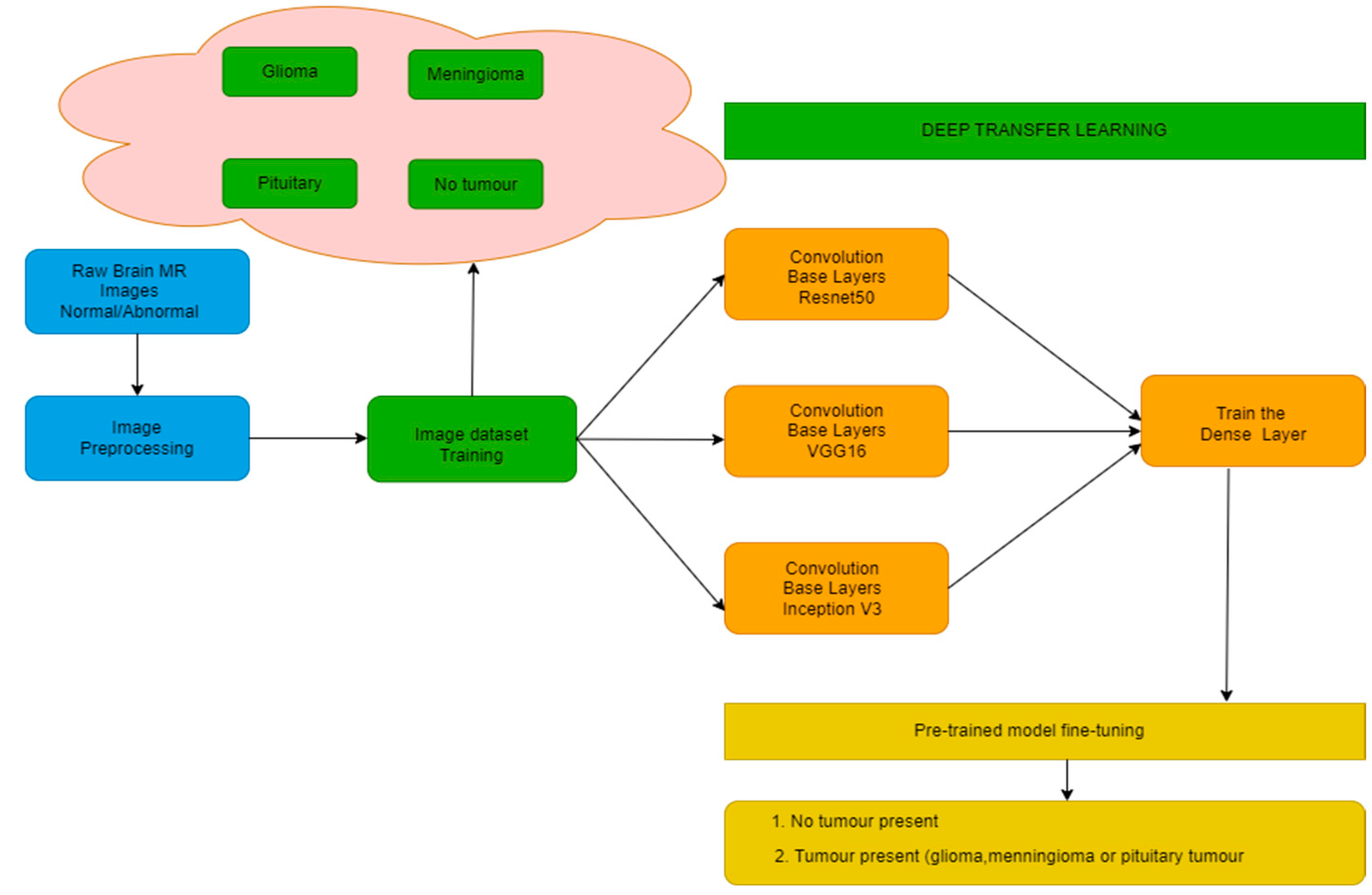

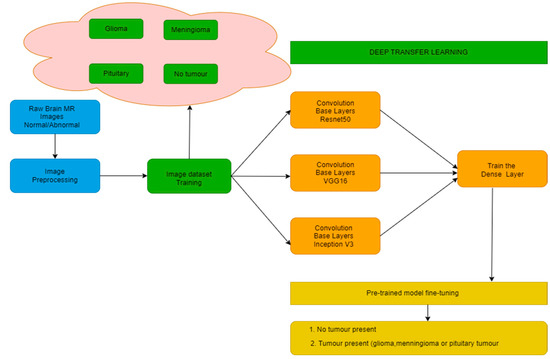

The proposed methodology, shown in Figure 1, consists of image preprocessing followed by training three models: VGG-16, ResNet-50, and Inception v3. Training is carried out with both balanced (equal) and imbalanced (unequal) numbers of images per target class.

Figure 1.

Block Diagram for Proposed Methodology.

2.3. Data Collection and Preprocessing

Once collected, the data must be preprocessed to remove noise and outliers and to make the images uniform in size. Intensity normalisation is strongly recommended for brain MRI analysis, because images acquired with different scanners at different times may vary greatly in pixel intensity [17]. MRI brain images may also contain anomalies caused by limitations of the MRI equipment, such as inadequate image resolution, distortion, inhomogeneity, misinterpretation, and motion heterogeneity. If not handled properly, these anomalies may lead to an incorrect diagnosis and hence influence treatment choices for patients. To address this, each image in the dataset is first converted to grayscale, and a Gaussian blur is applied to reduce image noise and fine detail. Each image then undergoes thresholding, which partitions it into foreground and background so that the main region of interest stands out [18], followed by several iterations of erosion and dilation to remove remaining noise. Contours (outlines of the foreground regions) are then identified in the thresholded image, and the extreme points of these contours are used to crop the image. Finally, because the dataset contains images of different sizes, each cropped image is resized to 256 × 256 pixels.

2.4. Transfer Learning Methods

The authors used the following transfer learning methods.

2.4.1. VGG-16 Transfer Learning Approach

The first model tested by the authors for the classification of brain tumours was the VGG-16 CNN [19]. The pre-trained layers were frozen so that the features they had already learned would not be lost during subsequent training, and further layers were added after them; on the new dataset, these added layers learn to make predictions from the pre-trained features. The model takes 224 × 224 × 3 brain MRI images as input. The input passes through convolutional layers with 3 × 3 filters interleaved with 5 max-pooling layers, and the original network produces its output with a SoftMax activation; in total, VGG-16 has nearly 138 million trainable parameters. Our model incorporates these layers together with a dense layer of 128 units with ReLU activation and another dense layer of 4 units with SoftMax activation. The model is fine-tuned with validation accuracy monitored at each epoch, and the best results were obtained with 20 epochs. The optimiser, loss, number of epochs, and metrics were tuned to obtain the best results.
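A minimal Keras sketch of this VGG-16 setup follows, assuming TensorFlow 2.x with its bundled Keras. The frozen base and the 128-unit ReLU and 4-unit SoftMax layers come from the text; the `Flatten` layer, the Adam optimiser and its learning rate, and the class order in `CLASS_NAMES` are assumptions. `weights="imagenet"` downloads the pre-trained weights on first use; `weights=None` builds the same architecture untrained.

```python
from typing import Optional

import numpy as np
from tensorflow import keras

# Assumed class order: alphabetical folder names of the Kaggle dataset.
CLASS_NAMES = ["glioma", "meningioma", "notumor", "pituitary"]


def build_vgg16_classifier(num_classes: int = 4, weights: Optional[str] = "imagenet") -> keras.Model:
    """Frozen VGG-16 convolutional base plus a new 128-unit ReLU and SoftMax head."""
    # include_top=False drops VGG-16's original fully connected 1000-class head.
    base = keras.applications.VGG16(include_top=False, weights=weights, input_shape=(224, 224, 3))
    base.trainable = False  # freeze the pre-trained layers so their learned features are kept

    inputs = keras.Input(shape=(224, 224, 3))
    x = base(inputs, training=False)
    x = keras.layers.Flatten()(x)  # assumption: how the 7x7x512 output is reduced is not stated
    x = keras.layers.Dense(128, activation="relu")(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    # Optimiser and learning rate are illustrative assumptions; labels must be one-hot encoded.
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    return model


def predict_label(model: keras.Model, image: np.ndarray) -> str:
    """Classify one 224x224x3 RGB image with pixel values in 0-255."""
    # VGG-16's ImageNet weights expect this preprocessing; training batches need the same.
    batch = keras.applications.vgg16.preprocess_input(image[np.newaxis].astype("float32"))
    probs = model.predict(batch, verbose=0)[0]
    return CLASS_NAMES[int(np.argmax(probs))]
```

With `include_top=False`, the convolutional base holds 14,714,688 parameters and the assumed `Flatten` head adds 3,211,908 trainable ones; the remaining 123.6 million of VGG-16's roughly 138 million parameters belong to the original fully connected layers that this setup discards. Training would then call `model.fit(...)` for 20 epochs on batches preprocessed as in `predict_label`.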

2.4.2. ResNet-50 Approach

ResNet-50, a 50-layer residual network with about 26 million parameters, was developed in 2015 by Kaiming He and colleagues at Microsoft Research for image recognition and classification [20]. In a residual network, the stacked layers do not learn the desired mapping directly; instead, they learn the residual, that is, the difference between the desired output and the layer's input, and a shortcut connection adds the input back to their output [21]. Stacking more layers produces a deeper neural network, and ResNet-50 uses these shortcut connections to link the input of the nth layer directly to some (n + x)th layer. Compared with the VGG-16 and VGG-19 models, this residual network has lower time complexity. In our experiment, we adopted a pre-trained ResNet-50 model with 23 million parameters and modified it to accept inputs of size 150 × 150 × 3. Two dense layers were added on top: one with 256 units and ReLU activation, followed by one with 4 units and SoftMax activation. The model is fine-tuned using a very low initial learning rate of 2 × 10⁻⁶ while monitoring the loss.
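The residual idea can be illustrated in a few lines of NumPy. This is a simplified sketch rather than ResNet-50 itself: two dense weight matrices stand in for the convolutions of a real ResNet bottleneck block, batch normalisation is omitted, and `residual_block` is a hypothetical helper name.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Identity-shortcut residual block on feature vectors of width d.

    w1 has shape (d, h) and w2 has shape (h, d), so the residual branch F(x)
    has the same width as x and the shortcut can add x back unchanged.
    """
    f = relu(x @ w1) @ w2   # residual branch F(x)
    return relu(f + x)      # shortcut connection: output is relu(F(x) + x)


x = np.array([[1.0, -2.0, 3.0]])
# With all-zero weights, F(x) = 0 and the block simply passes relu(x) through.
print(residual_block(x, np.zeros((3, 3)), np.zeros((3, 3))))
```

Because a block with a near-zero residual branch reduces to (almost) an identity mapping, adding more blocks need not make the network harder to optimise, which is the motivation He et al. give for residual learning.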

2.4.3. Inception v3 Approach

Inception v3 is a 48-layer convolutional neural network that has been pre-trained on more than a million images from the ImageNet database [22]. The pre-trained network can classify images into 1000 object categories, including a variety of animals, a keyboard, a mouse, and a pencil, and has consequently learned rich feature representations for a wide range of images. In this work, the network is configured to accept input images of 150 × 150 pixels. The first part of the model extracts features from the input images, and the second part uses those features to classify the images [23]. Our Inception v3 approach adds two dense layers with a dropout layer between them. The model is fine-tuned with the learning rate kept at 1 × 10⁻⁴, monitoring the validation accuracy at each epoch.
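The Inception v3 head described above (dense, dropout, dense) can be sketched in Keras as follows, assuming TensorFlow 2.x. The 150 × 150 × 3 input, the 1 × 10⁻⁴ learning rate, and monitoring validation accuracy come from the text; freezing the whole base, global average pooling, the Adam optimiser, the 128-unit hidden layer, the 0.5 dropout rate, and the checkpoint file name are assumptions.

```python
from typing import List, Optional

from tensorflow import keras


def build_inception_classifier(num_classes: int = 4, weights: Optional[str] = "imagenet",
                               hidden_units: int = 128, dropout_rate: float = 0.5) -> keras.Model:
    """Inception v3 base for 150x150x3 inputs, then Dense -> Dropout -> Dense (SoftMax)."""
    base = keras.applications.InceptionV3(include_top=False, weights=weights, input_shape=(150, 150, 3))
    base.trainable = False  # assumption: the paper does not say which layers were frozen

    inputs = keras.Input(shape=(150, 150, 3))
    x = base(inputs, training=False)
    x = keras.layers.GlobalAveragePooling2D()(x)  # assumed reduction of the 3x3x2048 feature map
    x = keras.layers.Dense(hidden_units, activation="relu")(x)  # first dense layer (width assumed)
    x = keras.layers.Dropout(dropout_rate)(x)                   # dropout between the two dense layers
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    # Adam is an assumption; the 1e-4 learning rate is taken from the text.
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    return model


def best_epoch_checkpoint(path: str = "inception_v3_best.keras") -> List[keras.callbacks.Callback]:
    """Save the model whenever validation accuracy reaches a new maximum."""
    return [keras.callbacks.ModelCheckpoint(path, monitor="val_accuracy", mode="max",
                                            save_best_only=True)]
```

Passing `callbacks=best_epoch_checkpoint()` to `model.fit(..., validation_data=...)` keeps the weights from the epoch with the highest validation accuracy, which is one way to implement the per-epoch monitoring described above.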

2.5. Performance Metrics

To compare the learning methods, the authors use widely adopted performance metrics: accuracy, precision, recall, F1 score, and support (the number of true instances of each class). With TP, TN, FP, and FN denoting the numbers of true positives, true negatives, false positives, and false negatives, respectively, the first four metrics are defined by Equations (1)–(4):

Accuracy = (TP + TN)/(TP + FN + FP + TN), (1)

Precision = TP/(TP + FP), (2)

Recall = TP/(TP + FN), (3)

F1 = 2 × (Precision × Recall)/(Precision + Recall). (4)

The efficacy of various methods is evaluated with respect to these performance metrics in the subsequent section.
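For the four-class problem, TP, FP, and FN are counted per class in a one-vs-rest fashion from the confusion matrix. The NumPy sketch below computes the per-class precision, recall, F1 score, and support, plus overall accuracy (correct predictions divided by all predictions). It assumes rows are true classes and columns are predicted classes, as in scikit-learn's `confusion_matrix`; `per_class_metrics` is a hypothetical helper, and the toy matrix in the usage line is made up rather than taken from this paper's results.

```python
import numpy as np


def per_class_metrics(cm) -> dict:
    """Precision, recall, F1 and support per class, plus overall accuracy.

    cm[i, j] counts images whose true class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as this class but belonging to another
    fn = cm.sum(axis=1) - tp   # belonging to this class but predicted as another
    support = cm.sum(axis=1)   # number of true instances of each class
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    accuracy = tp.sum() / cm.sum()  # multi-class accuracy: fraction classified correctly
    return {"precision": precision, "recall": recall, "f1": f1,
            "support": support, "accuracy": accuracy}


# Toy 3-class example (illustrative numbers only).
print(per_class_metrics([[8, 2, 0], [1, 9, 0], [0, 0, 10]]))
```

scikit-learn's `classification_report` reports the same per-class precision, recall, F1 score, and support and can serve as a cross-check.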

3. Results

To simulate the proposed methodology, the authors used the Kaggle dataset available at https://www.kaggle.com/datasets/masoudnickparvar/brain-tumor-mri-dataset (accessed on 26 February 2023). As discussed earlier, the dataset has four classes: glioma, pituitary, meningioma, and no tumour. The split of the 7023 images into training and testing data is shown in Table 1. Because the dataset is imbalanced, the authors also repeated the experiments on a balanced dataset, and the results held, indicating that the class imbalance does not affect the results.
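The paper does not state how the balanced dataset was built. One common option, assumed here, is random undersampling: every class is reduced to the size of the smallest class by sampling without replacement. The sketch below works on lists of file paths and labels; `undersample` is a hypothetical helper, and the fixed seed only makes the subset reproducible.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def undersample(paths: Sequence[str], labels: Sequence[str], seed: int = 0) -> List[Tuple[str, str]]:
    """Randomly undersample every class down to the size of the smallest class."""
    by_class: Dict[str, List[str]] = defaultdict(list)
    for path, label in zip(paths, labels):
        by_class[label].append(path)

    n_min = min(len(items) for items in by_class.values())
    rng = random.Random(seed)  # fixed seed so the balanced subset is reproducible

    balanced: List[Tuple[str, str]] = []
    for label in sorted(by_class):
        # Sample without replacement, keeping n_min images of this class.
        balanced.extend((path, label) for path in rng.sample(by_class[label], n_min))
    return balanced
```

Undersampling discards data from the larger classes; oversampling or class-weighted loss are alternatives that keep all images, and the text does not say which approach was used.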

Table 1.

Split of Training and Testing Data.

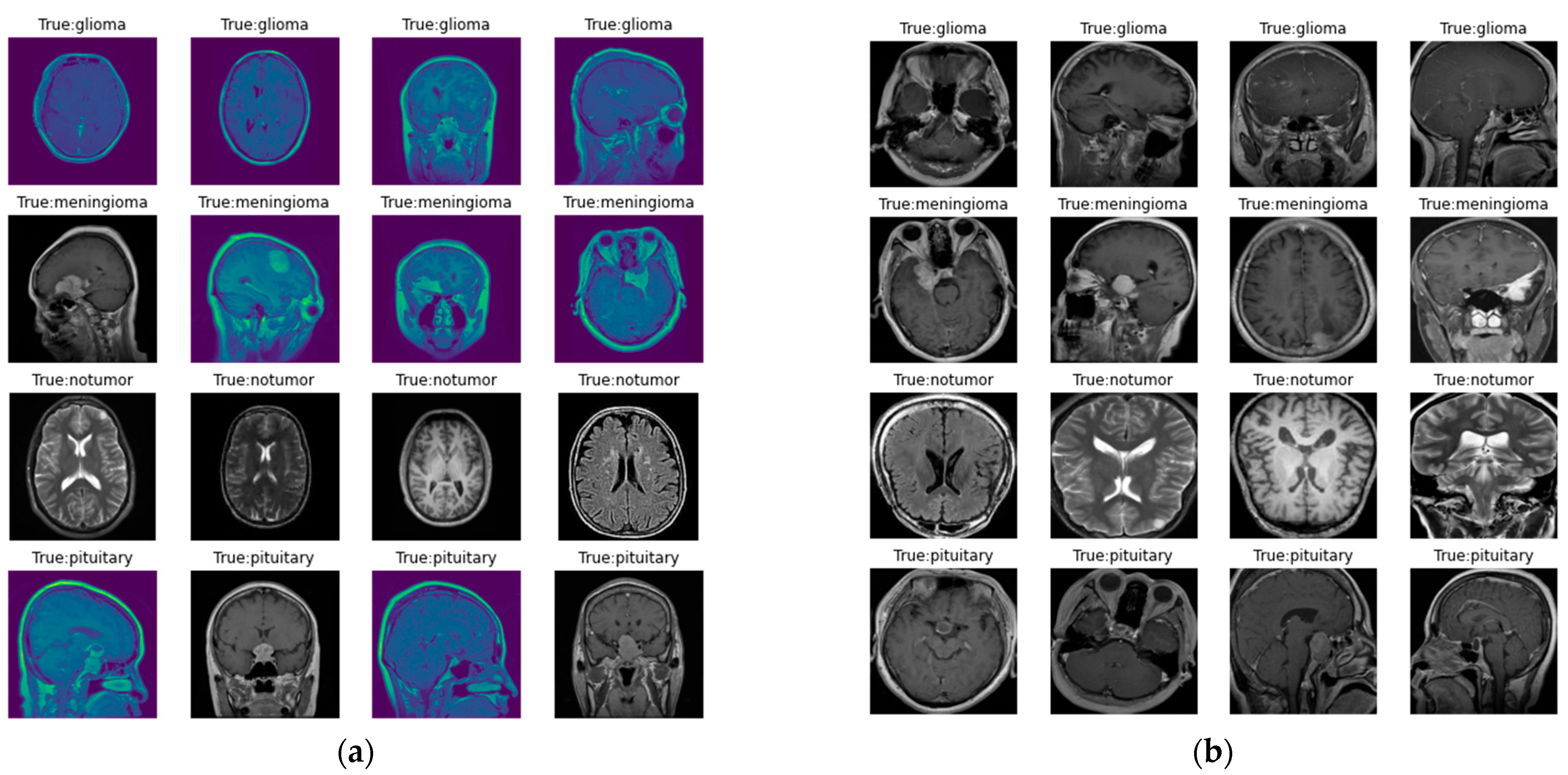

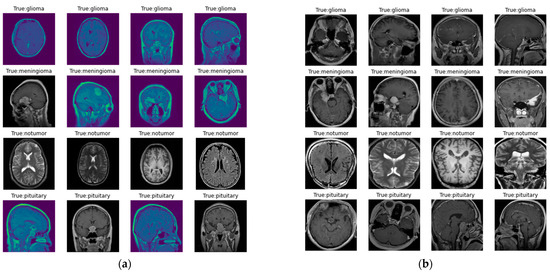

Sample images from the dataset are shown in Figure 2, where Figure 2a shows original images and Figure 2b shows images after preprocessing. As discussed earlier, preprocessing involves contour detection and resizing the images to 256 × 256 pixels so that they are uniform in size.

Figure 2.

Images in the dataset: (a) original images of the dataset; (b) images in the dataset after preprocessing.

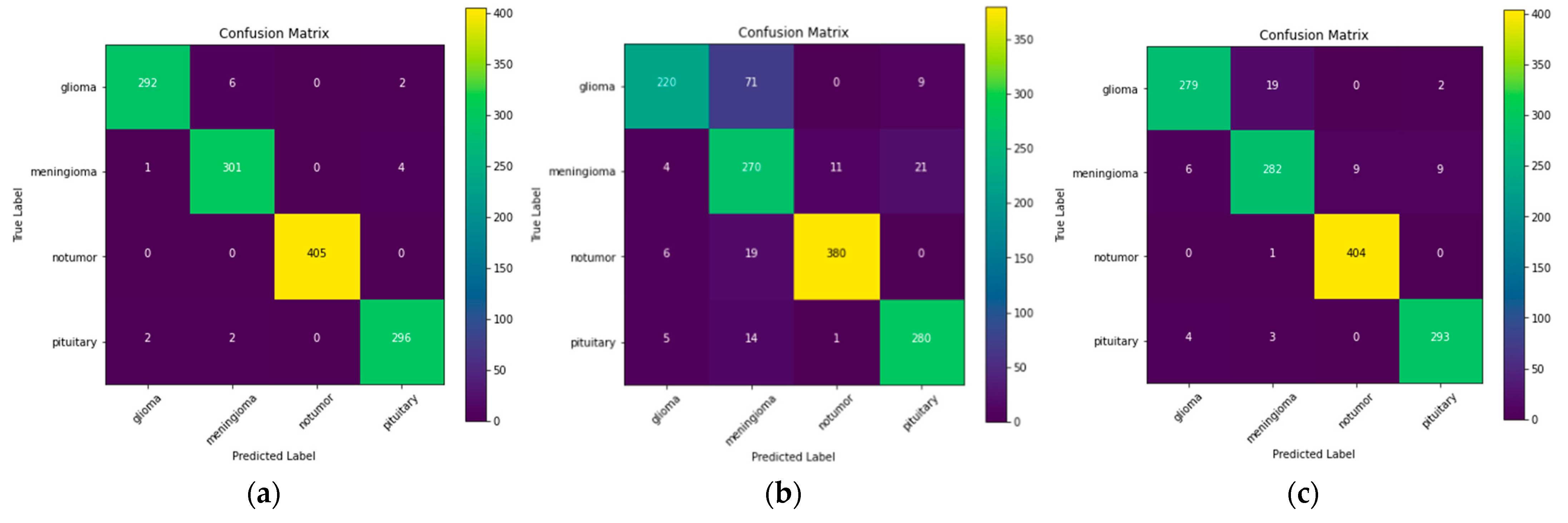

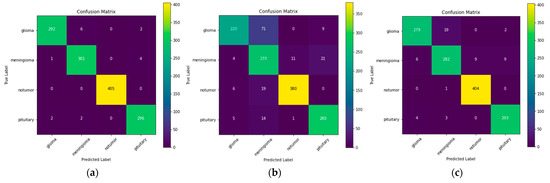

The authors trained three models: ResNet-50, VGG-16, and Inception v3. The confusion matrices for these three models are shown in Figure 3.

Figure 3.

Confusion matrix for: (a) ResNet-50; (b) VGG-16; (c) Inception v3.

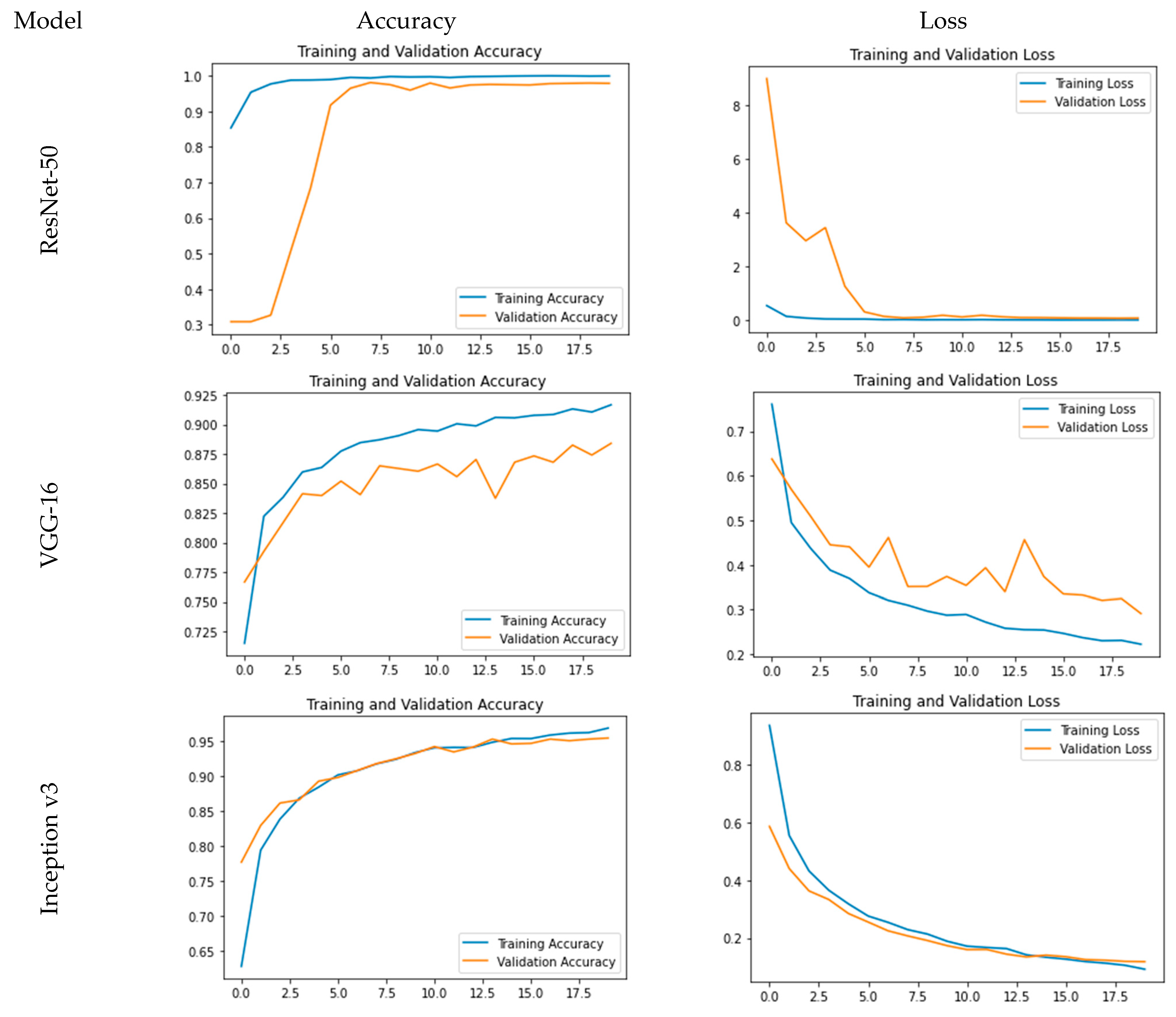

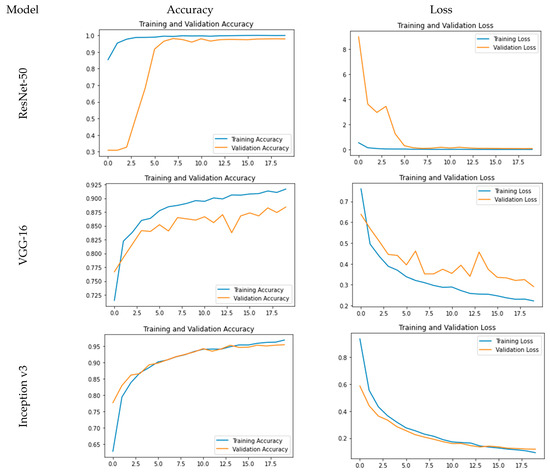

The training and validation accuracy and loss curves for the three models are shown in Figure 4. For ResNet-50, both training and validation accuracy rise steeply; the loss decreases to a minimum of 0.24%, while training accuracy reaches a maximum of 99.93% and validation accuracy reaches 97.86%. For VGG-16, the loss decreases to a minimum of 22.23%, training accuracy reaches a maximum of 91.67%, and validation accuracy reaches 88.41%. For Inception v3, the loss decreases to a minimum of 7.81%, training accuracy reaches a maximum of 97.36%, and validation accuracy reaches 95.80%.

Figure 4.

Comparative analysis for training and validation accuracy and loss.

Table 2 compares the precision, recall, and F1 score of the three models for each class. Precision measures the proportion of a model's positive predictions that are correct (true positives among all predicted positives), and recall measures the proportion of actual positives that the model correctly identifies (true positives among all real positives); both are highest for ResNet-50. The F1 score, the harmonic mean of precision and recall, is also used as a metric. The comparative analysis shows that ResNet-50 outperforms VGG-16 and Inception v3 on all performance metrics, and this consistent improvement supports the efficacy of ResNet-50 over the other models.

Table 2.

Comparison of Precision, Recall, and F1 Score.

4. Discussion

This research aims to improve on existing pre-trained deep convolutional neural networks with transfer learning for identifying brain tumours in MRI images. The study considers four classes: pituitary tumours, meningiomas, gliomas, and no tumour. The dataset, taken from Kaggle, consists of 7023 images. The authors used the pre-trained CNN architectures VGG-16, Inception v3, and ResNet-50 with transfer learning for classification. In the comparative experiments, ResNet-50 achieved a training accuracy of 99.93% and a validation accuracy of 97.86%, outperforming VGG-16 and Inception v3. This work can be extended by applying other pre-trained CNN models, such as VGG-19, ResNet-101, and EfficientNet-based models, to the prediction of brain tumours.

Author Contributions

The authors participated in this work as follows: conceptualisation, J.J. and M.K.; methodology, M.K. and P.T.; software, J.J. and M.M.; validation, J.J. and M.K.; formal analysis, M.M. and P.T.; investigation, M.M. and P.T.; resources, J.J. and M.K.; writing—original draft preparation, J.J. and M.K.; writing—review and editing, M.M. and P.T.; visualisation, J.J. and M.K.; supervision, M.M. and P.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

The dataset acquired in this study is taken from Kaggle, which is available at https://www.kaggle.com/datasets/masoudnickparvar/brain-tumor-mri-dataset (accessed on 26 February 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- DeAngelis, L.M. Brain tumors. New Engl. J. Med. 2001, 344, 114–123.

- Gayathri, P.; Dhavileswarapu, A.; Ibrahim, S.; Paul, R.; Gupta, R. Exploring the Potential of VGG-16 Architecture for Accurate Brain Tumor Detection Using Deep Learning. J. Comput. Mech. Manag. 2023, 2, 13–22.

- Gumaei, A.; Hassan, M.M.; Hassan, M.R.; Alelaiwi, A.; Fortino, G. A hybrid feature extraction method with regularized extreme learning machine for Brain Tumor Classification. IEEE Access 2019, 7, 36266–36273.

- Gu, X.; Shen, Z.; Xue, J.; Fan, Y.; Ni, T. Brain tumor MR image classification using convolutional dictionary learning with local constraint. Front. Neurosci. 2021, 15, 679847.

- Wasule, V.; Sonar, P. Classification of brain MRI using SVM and KNN classifier. In Proceedings of the 2017 Third International Conference on Sensing, Signal Processing and Security (ICSSS), Chennai, India, 4–5 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 218–223.

- Abiwinanda, N.; Hanif, M.; Hesaputra, S.T.; Handayani, A.; Mengko, T.R. Brain Tumor Classification Using Convolutional Neural Network; IFMBE Proceedings; Springer: Berlin/Heidelberg, Germany, 2018; pp. 183–189.

- Sangeetha, R.; Mohanarathinam, A.; Aravindh, G.; Jayachitra, S.; Bhuvaneswari, M. Automatic detection of brain tumor using deep learning algorithms. In Proceedings of the 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 5–7 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–4.

- Zhao, D.; Grist, J.T.; Rose, H.E.L.; Davies, N.P.; Wilson, M.; MacPherson, L.; Abernethy, L.J.; Avula, S.; Pizer, B.; Gutierrez, D.R.; et al. Metabolite selection for machine learning in childhood brain tumour classification. NMR Biomed. 2022, 35, e4673.

- Agrawal, V. ECG-Based User Authentication Using Deep Learning Architectures. Ph.D. Thesis, University of Victoria, Victoria, BC, Canada, 2023.

- Maqsood, S.; Damaševičius, R.; Maskeliūnas, R. Multi-modal Brain tumor detection using deep neural network and multiclass SVM. Medicina 2022, 58, 1090.

- Rasool, M.; Ismail, N.A.; Boulila, W.; Ammar, A.; Samma, H.; Yafooz, W.M.; Emara, A.H.M. A Hybrid Deep Learning Model for Brain Tumour Classification. Entropy 2022, 24, 799.

- Tazin, T.; Sarker, S.; Gupta, P.; Ayaz, F.I.; Islam, S.; Monirujjaman Khan, M.; Bourouis, S.; Idris, S.A.; Alshazly, H. A robust and novel approach for brain tumor classification using Convolutional Neural Network. Comput. Intell. Neurosci. 2021, 2021, 2392395.

- Pedada, K.R.; Rao, B.; Patro, K.K.; Allam, J.P.; Jamjoom, M.M.; Samee, N.A. A novel approach for brain tumour detection using deep learning based technique. Biomed. Signal Process. Control. 2023, 82, 104549.

- Kokila, B.; Devadharshini, M.S.; Anitha, A.; Abisheak Sankar, S. Brain tumor detection and classification using deep learning techniques based on MRI images. J. Phys. Conf. Ser. 2021, 1916, 012226.

- Khan, H.A.; Jue, W.; Mushtaq, M.; Mushtaq, M.U. Brain tumor classification in MRI image using convolutional neural network. Math. Biosci. Eng. 2021, 17, 6203–6216.

- Ahmed Mohammed, B.; Shaban Al-Ani, M. An efficient approach to diagnose brain tumors through deep CNN. Math. Biosci. Eng. 2021, 18, 851–867.

- Deepak, S.; Ameer, P.M. Brain Tumor Classification using Deep CNN features via transfer learning. Comput. Biol. Med. 2019, 111, 103345.

- Senthilkumaran, N.; Vaithegi, S. Image segmentation by using thresholding techniques for medical images. Comput. Sci. Eng. Int. J. 2016, 6, 1–13.

- Belaid, O.N.; Loudini, M. Classification of brain tumor by combination of pre-trained vgg16 cnn. J. Inf. Technol. Manag. 2020, 12, 13–25.

- Chato, L.; Latifi, S. Machine learning and deep learning techniques to predict overall survival of brain tumor patients using MRI images. In Proceedings of the 2017 IEEE 17th International Conference on Bioinformatics and Bioengineering (BIBE), Washington, DC, USA, 23–25 October 2017.

- Srinivas, C.; KS, N.P.; Zakariah, M.; Alothaibi, Y.A.; Shaukat, K.; Partibane, B.; Awal, H. Deep transfer learning approaches in performance analysis of brain tumor classification using MRI images. J. Healthc. Eng. 2022, 2022, 3264367.

- Ganesan, M.; Sivakumar, N.; Thirumaran, M.; Vengattaraman, T. Internet of medical things and cloud enabled brain tumour diagnosis model using Deep Learning with Kernel Extreme Learning Machine. Int. J. Electron. Healthc. 2022, 12, 203.

- Xenya, M.C.; Wang, Z. Brain Tumour Detection and Classification using Multi-level Ensemble Transfer Learning in MRI Dataset. In Proceedings of the 2021 International Conference on Artificial Intelligence, Big Data, Computing and Data Communication Systems (icABCD), Durban, South Africa, 5–6 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–7.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).