Abstract

Coronavirus disease (COVID-19) is a fast-spreading viral disease. On 28 March 2022, Worldometer (COVID-19 live update) reported about 482,338,923 COVID-19 cases and 6,149,387 deaths worldwide, as well as about 416,884,712 recovered patients. The primary clinical method currently used to identify COVID-19 is Reverse Transcription–Polymerase Chain Reaction (RT-PCR). Because cases increase daily, hospitals have only small quantities of COVID-19 test kits available. As an alternative diagnostic option, an automatic detection system was implemented. Deep learning is a powerful approach to automatic COVID-19 identification. Chest X-ray (CXR) imaging is a simple tool that can serve as an alternative for diagnosing COVID-19-infected patients. With deep learning, deep-layer features in CXR images that are hidden from human sight can be observed. The “COVID-19 Radiography Database”, one of the largest public databases, comprises 21,164 CXR images and was obtained from Kaggle. To achieve the best accuracy in this work, data cleansing and a balanced dataset were applied. The primary goal of data cleansing is to remove duplicate CXR images from the database. The accuracies of three pre-trained Convolutional Neural Networks (CNNs), Xception, InceptionV3, and MobileNetV2, were compared and analyzed. Xception achieved the best testing accuracy, 94.13%, with plain lung CXR images. Gabor filtering was also employed as an image enhancement approach for identifying COVID-19. Enhanced CXR images performed significantly better for classification than plain lung CXR images only for the MobileNetV2 model. Future work aims to raise the system’s accuracy toward 100%, outperforming previous studies.

Keywords:

COVID-19; Xception; InceptionV3; MobileNetV2; deep learning; CXR images; CNN; image enhancement

1. Introduction

COVID-19 is a highly transmissible disease [1]. A CXR image-based COVID-19 detection system is fast and widely available and can analyze multiple cases simultaneously. In this work, a CNN-based method is proposed to classify CXR images into four classes: COVID-19, viral pneumonia, lung opacity, and normal. The approach builds on various previous works that formulated deep learning techniques to recognize COVID-19-infected individuals; Table 1 summarizes these related studies. Section 2 presents the methodology, Section 3 presents the experimental results and discussion, and Section 4 concludes the paper.

Table 1.

Summary of the related work.

2. Methodology

2.1. Dataset

In this experimental analysis, four classes of the “COVID-19 Radiography Database” [2] were used: normal, viral pneumonia, lung opacity, and COVID-19. With 21,164 CXR images, it is among the largest public CXR datasets. All images are in PNG format with a resolution of 299 × 299 pixels. Table 2 displays the number of images in the dataset.

Table 2.

Statistics of the database [2] and the dataset used in this study.

2.2. Pre-Processing

Before the CXR images were fed to the models, they were resized, since each CNN has its own input specifications; resizing is integrated into the CAD (computer-aided diagnosis) pipeline. Most CNNs were trained on images with a resolution of 224 × 224 pixels [14], so the images were resized from 299 × 299 to 224 × 224 pixels. An image data generator was used for data augmentation, with rescaling by 1/255 and a rotation range of 40. The height shift, width shift, shear, and zoom ranges were all set to 0.2, and horizontal flipping was enabled (set to ‘True’).
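The augmentation and resizing settings above can be collected into a short configuration sketch. It assumes Keras/TensorFlow 2.x with the legacy `tf.keras.preprocessing.image.ImageDataGenerator` API (the text only says "an image data generator"); the function name `make_train_iterator`, the class-per-folder directory layout, and its path are hypothetical.

```python
# Augmentation settings reported in the text, collected as keyword arguments
# for Keras' ImageDataGenerator (the framework is an assumption).
AUGMENTATION_KWARGS = {
    "rescale": 1.0 / 255,        # scale pixel values from [0, 255] to [0, 1]
    "rotation_range": 40,        # random rotations of up to 40 degrees
    "width_shift_range": 0.2,    # horizontal shift, as a fraction of width
    "height_shift_range": 0.2,   # vertical shift, as a fraction of height
    "shear_range": 0.2,          # shear (Keras interprets this as an angle in degrees)
    "zoom_range": 0.2,           # random zoom factor in [0.8, 1.2]
    "horizontal_flip": True,     # random left-right flips
}

TARGET_SIZE = (224, 224)  # images are resized from 299 x 299 to 224 x 224


def make_train_iterator(train_dir, batch_size=40):
    """Build an augmented, resized batch iterator over a class-per-folder directory.

    `train_dir` is a hypothetical path with one sub-folder per class.
    TensorFlow is imported lazily so the settings above can be inspected without it.
    """
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    datagen = ImageDataGenerator(**AUGMENTATION_KWARGS)
    return datagen.flow_from_directory(
        train_dir,
        target_size=TARGET_SIZE,    # resizing happens while images are loaded
        batch_size=batch_size,      # 40, as reported for the experiments
        class_mode="categorical",   # one-hot labels for the four classes
    )
```

Resizing through `target_size` keeps the 299 × 299 source files untouched on disk; only the tensors passed to the network are 224 × 224.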

The classes in the database contain different numbers of images. Because class imbalance affects accuracy [10], the dataset was balanced by using, for every class, the same number of images as in the viral pneumonia class, which has the fewest images.
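Balancing every class down to the size of the smallest class (random undersampling) can be sketched in plain Python. This assumes the per-class file lists are already available and that images are drawn at random without replacement; the text does not say how the subset was chosen, and the function name, fixed seed, and toy file names are illustrative.

```python
import random


def balance_by_undersampling(files_per_class, seed=42):
    """Return a dict with the same number of files for every class.

    The target size is the size of the smallest class (viral pneumonia in
    this dataset); larger classes are randomly subsampled without replacement.
    """
    target = min(len(files) for files in files_per_class.values())
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    return {
        label: sorted(rng.sample(files, target))
        for label, files in files_per_class.items()
    }


if __name__ == "__main__":
    # Hypothetical toy file lists with unequal class sizes.
    toy = {
        "COVID": [f"COVID-{i}.png" for i in range(1, 9)],
        "Normal": [f"Normal-{i}.png" for i in range(1, 13)],
        "Lung_Opacity": [f"Lung_Opacity-{i}.png" for i in range(1, 11)],
        "Viral Pneumonia": [f"Viral Pneumonia-{i}.png" for i in range(1, 5)],
    }
    balanced = balance_by_undersampling(toy)
    print({label: len(files) for label, files in balanced.items()})
```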

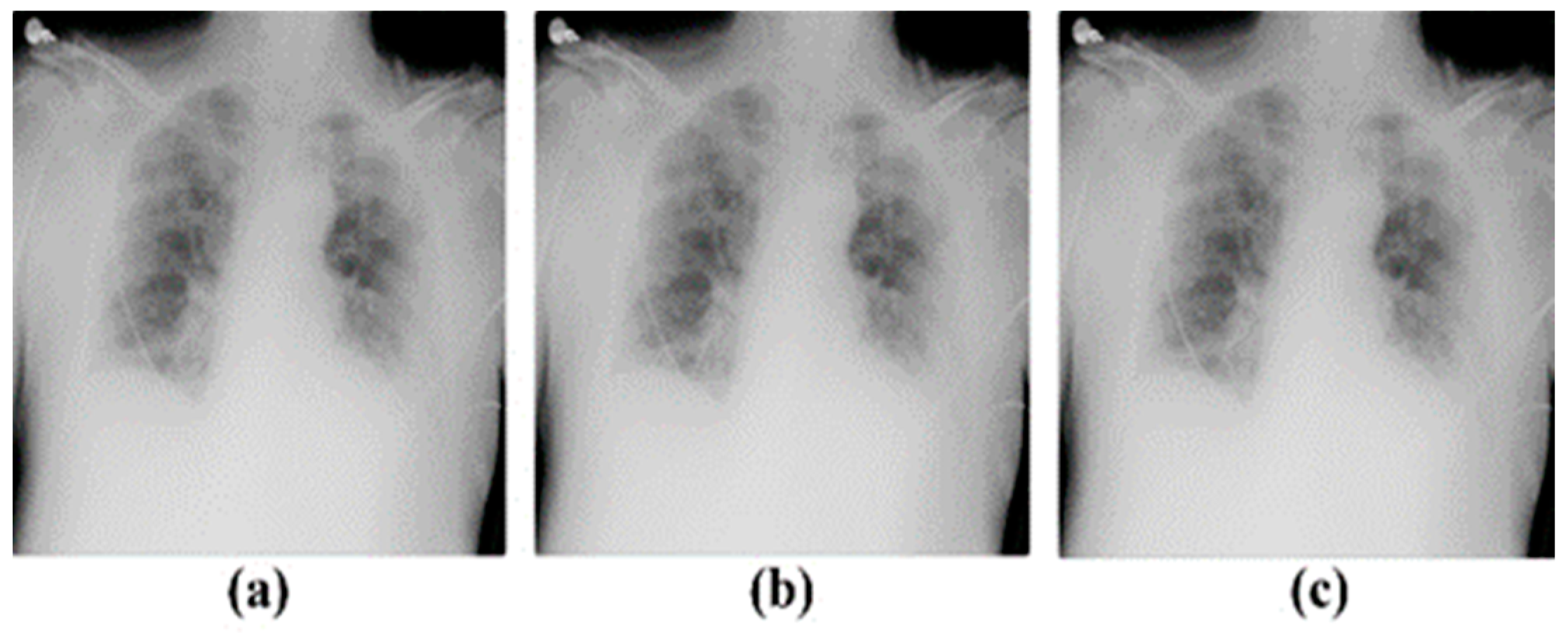

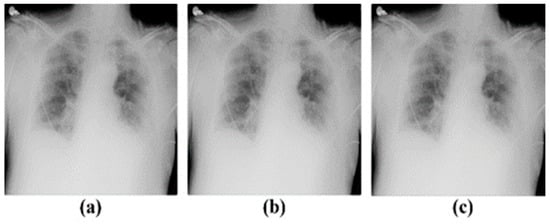

The dataset contained many duplicate CXR images, and the “dupeGuru” tool was used to identify them [15]. Some images had exact (100% similar) copies, and others had copies with more than 70% similarity. Figure 1 shows a sample set of duplicate images from the database. CXR images with less than 70% similarity show visible differences, so only images below this threshold were kept: a new dataset was created by removing duplicates with more than 70% similarity. After this cleansing process, the “dupeGuru” tool was run several more times while creating the balanced dataset.

Figure 1.

A sample of duplicate CXR images: (a) COVID-1, (b) COVID-140, and (c) COVID-591.
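The thresholding logic of the cleansing step can be sketched with NumPy. This is a swapped-in technique, not dupeGuru’s own matching algorithm: it assumes a simple average-hash fingerprint, where similarity is the fraction of matching hash bits, and it keeps an image only if its similarity to every image already kept is at most 70%. The function names and the 8 × 8 hash size are hypothetical.

```python
import numpy as np


def average_hash(image, hash_size=8):
    """Binary fingerprint: block-average the image to hash_size x hash_size,
    then mark each block as brighter or darker than the mean."""
    img = np.asarray(image, dtype=np.float64)
    rows = np.array_split(np.arange(img.shape[0]), hash_size)
    cols = np.array_split(np.arange(img.shape[1]), hash_size)
    small = np.array([[img[np.ix_(r, c)].mean() for c in cols] for r in rows])
    return (small > small.mean()).ravel()


def similarity(hash_a, hash_b):
    """Fraction of matching bits, in [0, 1]."""
    return float(np.mean(hash_a == hash_b))


def remove_near_duplicates(images, threshold=0.70):
    """Keep an image only if its similarity to every kept image is <= threshold.

    `images` maps a name to a 2-D grayscale array; returns the kept names
    in insertion order.
    """
    kept, kept_hashes = [], []
    for name, image in images.items():
        h = average_hash(image)
        if all(similarity(h, k) <= threshold for k in kept_hashes):
            kept.append(name)
            kept_hashes.append(h)
    return kept
```

Hash-bit similarity is only a proxy, and its percentages are not directly comparable to the match percentages dupeGuru reports.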

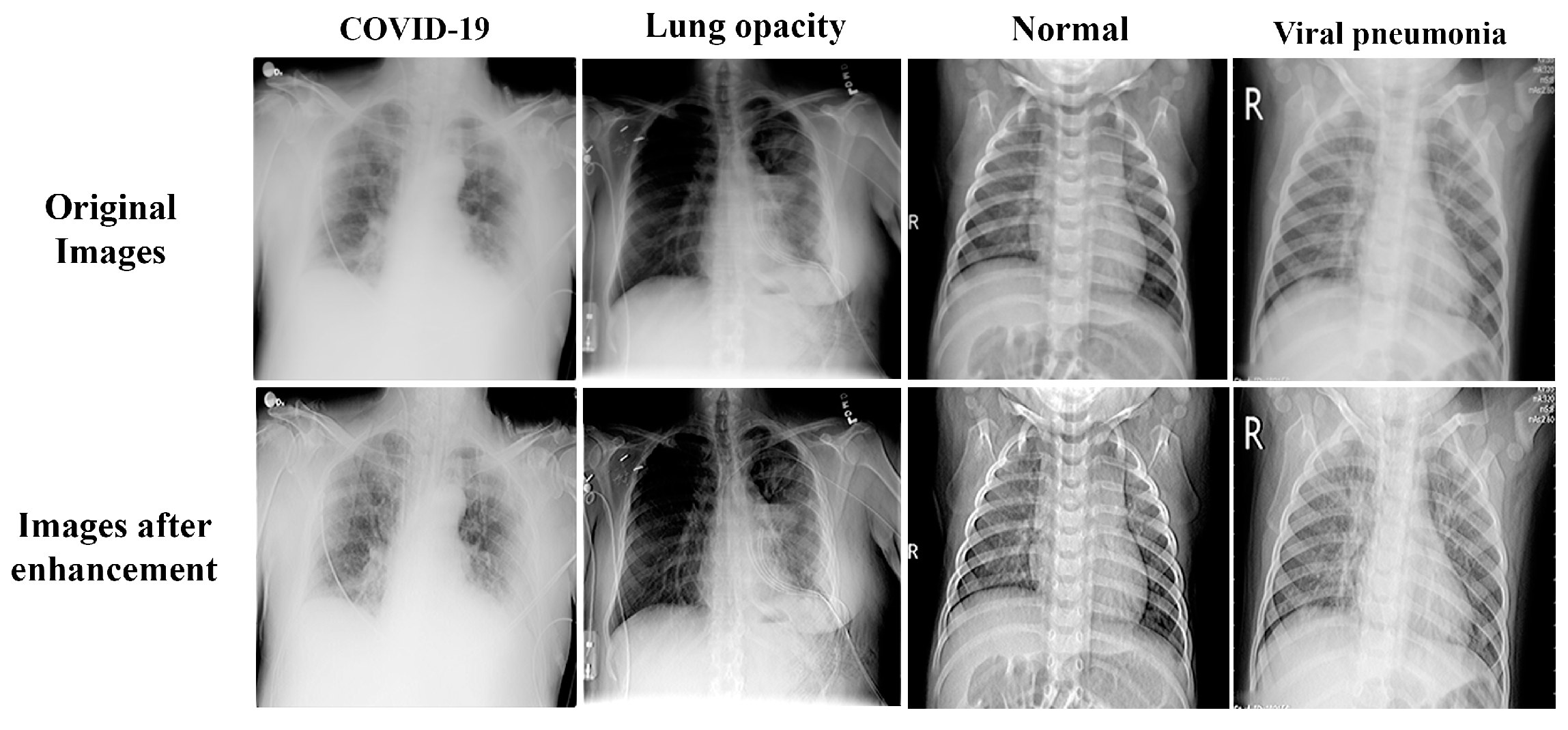

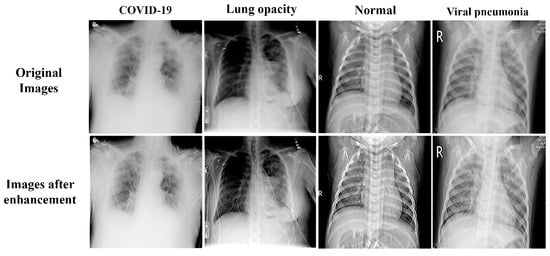

Gabor filtering was applied to the CXR images as an image enhancement technique. A Gabor filter is a convolution filter that combines a sinusoid with a Gaussian [16]: the sinusoidal component provides directionality, whereas the Gaussian component provides the weights. The optimal Gabor filter parameters were lambda (λ) = 0.785, theta (θ) = 1.571, gamma (γ) = 0.9, sigma (σ) = 1, phi (φ) = 0.8, and ksize = 15. Figure 2 shows the differences between the original and enhanced images in the four classes.

Figure 2.

The input CXR images and the output CXR images after passing through the Gabor filter bank.
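The real Gabor kernel in its common parameterization (the one used by OpenCV’s `cv2.getGaborKernel(ksize, sigma, theta, lambd, gamma, psi)`, whose argument names match the parameters listed above, with phi passed as `psi`) is

$$
g(x, y) = \exp\!\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right)\cos\!\left(2\pi\frac{x'}{\lambda} + \phi\right),\qquad
x' = x\cos\theta + y\sin\theta,\quad y' = -x\sin\theta + y\cos\theta .
$$

The NumPy sketch below builds this kernel with the reported values and applies it to a grayscale image. It is an assumption that the paper used this exact form; it also applies a single kernel, and how the outputs of the filter bank in Figure 2 were combined is not stated.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gabor_kernel(ksize=15, sigma=1.0, theta=1.571, lambd=0.785, gamma=0.9, psi=0.8):
    """Real Gabor kernel: Gaussian envelope times an oriented cosine carrier."""
    half = ksize // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    x_rot = x * np.cos(theta) + y * np.sin(theta)   # rotated coordinates
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot**2 + gamma**2 * y_rot**2) / (2 * sigma**2))  # Gaussian: weights
    carrier = np.cos(2 * np.pi * x_rot / lambd + psi)                       # sinusoid: direction
    return envelope * carrier


def apply_gabor(image, kernel):
    """Filter a 2-D grayscale image with `kernel` (correlation, reflect padding)."""
    img = np.asarray(image, dtype=np.float64)
    pad = kernel.shape[0] // 2
    padded = np.pad(img, pad, mode="reflect")
    windows = sliding_window_view(padded, kernel.shape)  # shape (H, W, k, k)
    return np.einsum("ijkl,kl->ij", windows, kernel)
```

Note that the reported values θ = 1.571 and λ = 0.785 are approximately π/2 and π/4.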

2.3. Proposed Method

Xception: It is a modification of the Inception network in which the Inception modules are replaced by depthwise separable convolutions. Its number of parameters is close to that of the Inception network, and its performance is moderately better. The Xception model has 22.9 million parameters and is 88 MB in size [17].

InceptionV3: It is a CNN architecture with 48 layers, available in a version pre-trained on the ImageNet database. This model has 23.9 million parameters and is 92 MB in size [17].

MobileNetV2: It is a CNN with 53 layers. Its advantages include a compact network, fewer parameters, fewer operations, high efficiency, and low power consumption. This model has 3.5 million parameters and is 14 MB in size [17].

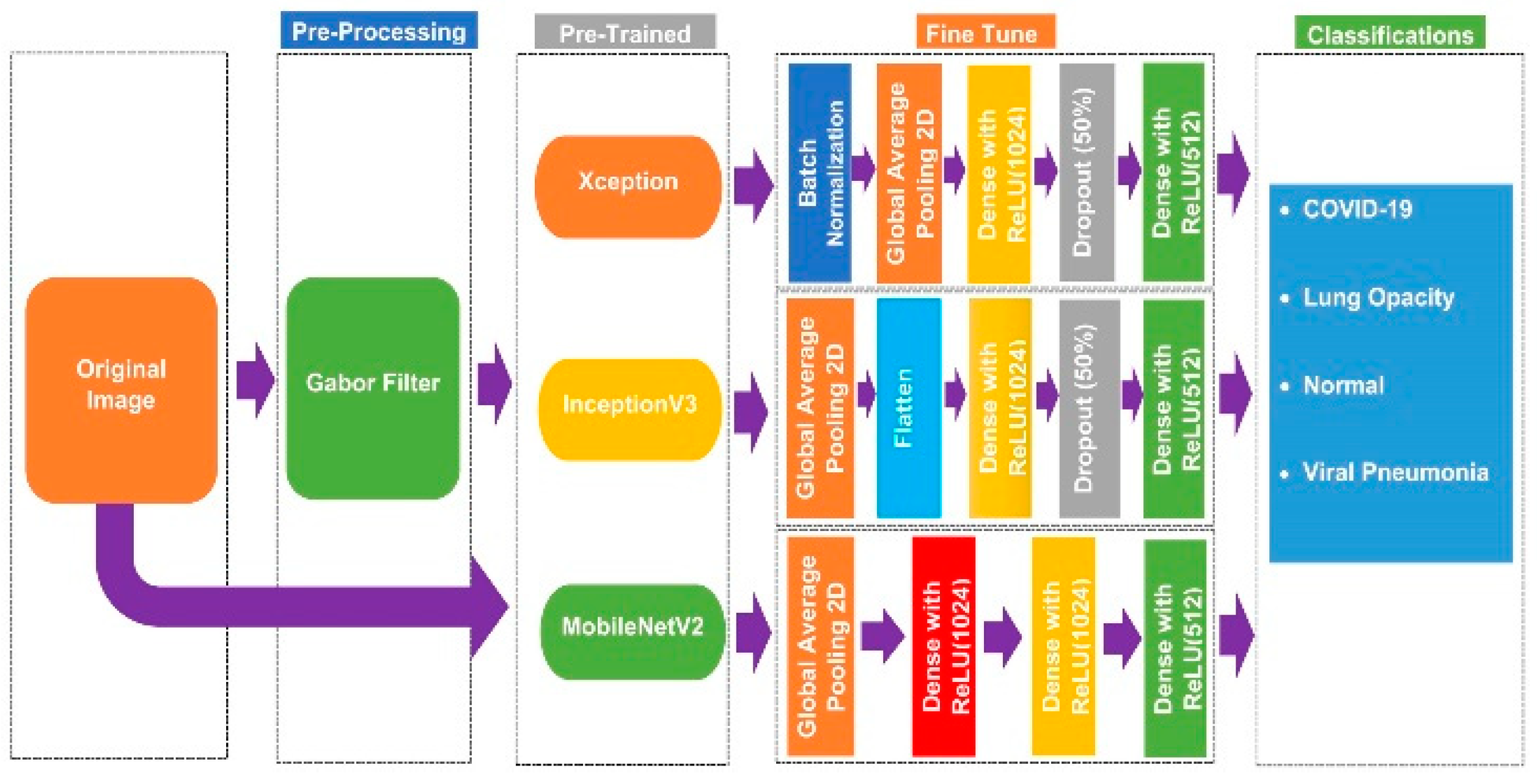

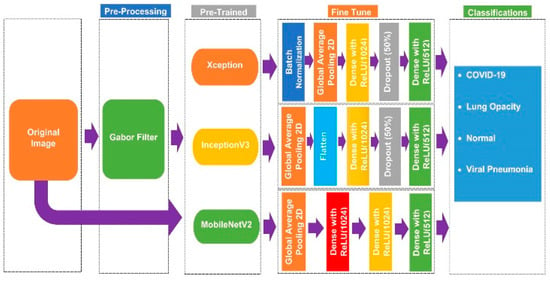

Fine-tuning is a common technique for transfer learning [18]. It takes a model that has already been trained for a specific task and adapts it to a second, similar task [19]. Here, fine-tuning was used to adapt the pre-trained models to the created dataset. The proposed architecture is shown in Figure 3.

Figure 3.

Proposed method architecture for multiclass classification.
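A minimal Keras sketch of this transfer-learning setup follows. It assumes the `tf.keras.applications` versions of the three backbones with ImageNet weights and a hypothetical classification head (global average pooling, dropout, and a four-way softmax layer). The actual head is the one shown in Figure 3 and may differ, and because the text does not state which layers were unfrozen, `trainable_backbone` is also an assumption.

```python
# Backbones available in tf.keras.applications, with the sizes listed in [17].
BACKBONES = {
    "Xception": {"params_millions": 22.9, "size_mb": 88},
    "InceptionV3": {"params_millions": 23.9, "size_mb": 92},
    "MobileNetV2": {"params_millions": 3.5, "size_mb": 14},
}
NUM_CLASSES = 4                  # COVID-19, normal, lung opacity, viral pneumonia
INPUT_SHAPE = (224, 224, 3)      # resized CXR images, 3 channels


def build_model(name, trainable_backbone=True, dropout=0.2):
    """Pre-trained backbone plus a new classification head (hypothetical head)."""
    if name not in BACKBONES:
        raise ValueError(f"unknown backbone: {name}")
    import tensorflow as tf  # imported lazily; only needed when building

    constructor = getattr(tf.keras.applications, name)  # e.g. tf.keras.applications.Xception
    base = constructor(weights="imagenet", include_top=False, input_shape=INPUT_SHAPE)
    base.trainable = trainable_backbone  # True: fine-tune all layers; False: freeze backbone

    inputs = tf.keras.Input(shape=INPUT_SHAPE)
    x = base(inputs)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(dropout)(x)
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)
```

Keras’ own `preprocess_input` for these three backbones scales inputs to [-1, 1], whereas the text rescales by 1/255; the sketch leaves preprocessing to the data pipeline.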

2.4. Specification of Model Training

Several pre-trained CNN models were processed in this study. They were run in Jupyter Notebook on a Windows 10 (64-bit) machine with an Intel Core i7 2.8 GHz CPU, 16 GB of RAM, and an NVIDIA GeForce GTX 1650 Graphics Processing Unit (GPU) with 4 GB of memory. Google Colab [20] was also used, connected to a Python 3 Google Compute Engine backend (TPU) with 35.25 GB of RAM and 107.72 GB of disk.

3. Results and Discussion

The experiments were performed to detect and classify COVID-19, normal, lung opacity, and viral pneumonia CXR images. All experiments used the cleansed, balanced dataset.

3.1. Without the Enhancement Technique

As the initial step, the three pre-trained CNN models were trained and evaluated to obtain training, validation, and testing accuracies and a confusion matrix. The models were trained with the adaptive moment estimation (Adam) optimizer. All experiments used a batch size of 40, a learning rate of 0.0001, 100 steps per epoch, and 20 epochs in total. The activation function of the last layer was softmax, and the loss function was categorical cross-entropy. All models took 224 × 224 pixel resized images as input.
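The output layer and loss described above can be written out numerically. For a logit vector z over the four classes and a one-hot label y, softmax gives p_i = exp(z_i) / Σ_j exp(z_j), and categorical cross-entropy is L = −Σ_i y_i log p_i. The NumPy sketch below computes both; the logits are made-up values, and the constants restate the reported settings, which imply roughly 40 × 100 = 4,000 augmented images drawn per epoch.

```python
import numpy as np

BATCH_SIZE = 40
LEARNING_RATE = 1e-4
STEPS_PER_EPOCH = 100
EPOCHS = 20


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - np.max(logits, axis=-1, keepdims=True)  # shift avoids overflow
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)


def categorical_cross_entropy(y_true, probs, eps=1e-7):
    """Batch mean of -sum(y * log p); y_true is one-hot, probs are clipped to avoid log(0)."""
    probs = np.clip(probs, eps, 1.0)
    return float(np.mean(-np.sum(y_true * np.log(probs), axis=-1)))


if __name__ == "__main__":
    logits = np.array([[2.0, 0.5, 0.1, -1.0]])  # made-up scores for 4 classes
    y_true = np.array([[1.0, 0.0, 0.0, 0.0]])   # true class: index 0
    p = softmax(logits)
    print(p.round(3), categorical_cross_entropy(y_true, p))
    print("images per epoch:", BATCH_SIZE * STEPS_PER_EPOCH)
```

In Keras, these settings would correspond to `model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4), loss="categorical_crossentropy", metrics=["accuracy"])` followed by `model.fit(train_iter, steps_per_epoch=100, epochs=20, validation_data=val_iter)`, where `train_iter` and `val_iter` are hypothetical data iterators.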

3.2. With the Enhancement Technique

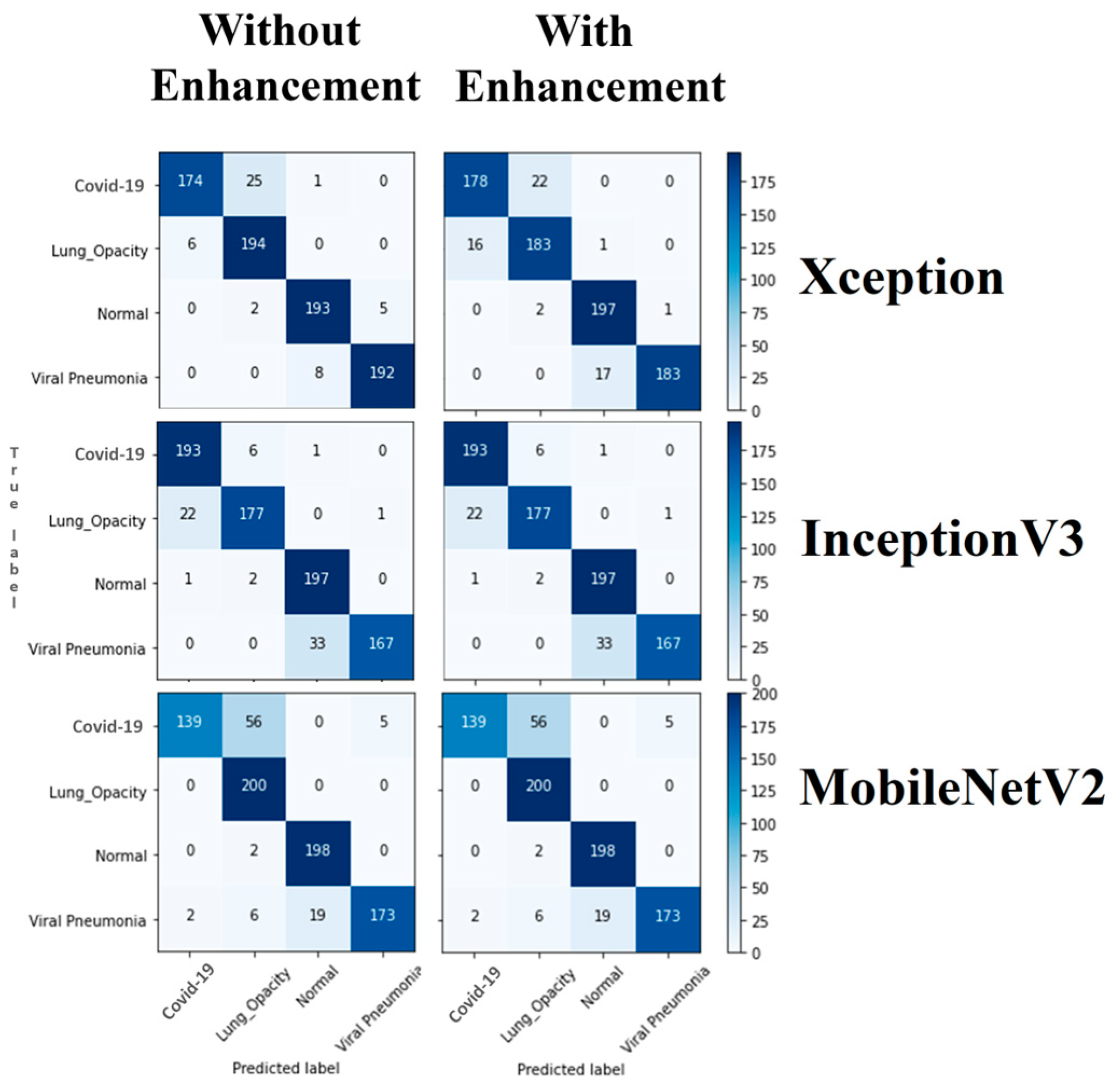

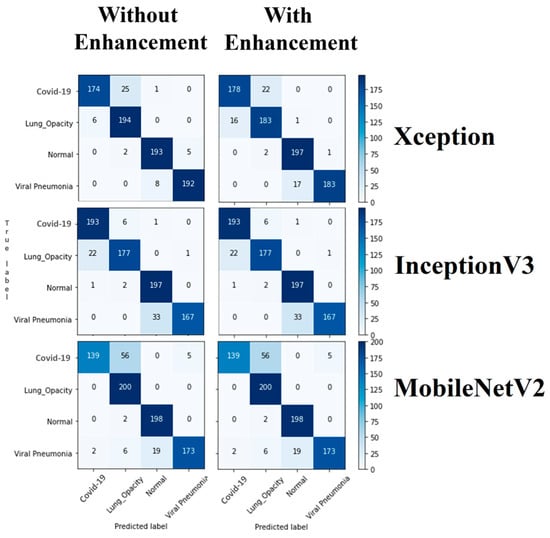

Next, the same three pre-trained CNN models (Xception, InceptionV3, and MobileNetV2) were trained again on CXR images enhanced with Gabor filtering. The hyperparameters were the same as in the previous experiment. In a classification problem, a confusion matrix summarizes the prediction results for each class. Figure 4 compares the confusion matrices of the pre-trained CNN models with and without enhancement.

Figure 4.

Confusion matrix for pre-trained models with (without) enhancement.
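A confusion matrix for the four classes can be computed from integer labels with NumPy. Here rows are true classes and columns are predicted classes; the label order and the toy labels are illustrative.

```python
import numpy as np

# Illustrative class order; it must match the label indices used by the model.
CLASSES = ["COVID-19", "Lung opacity", "Normal", "Viral pneumonia"]


def confusion_matrix(y_true, y_pred, num_classes=len(CLASSES)):
    """cm[i, j] = number of samples with true class i predicted as class j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)  # unbuffered counting
    return cm


if __name__ == "__main__":
    print(confusion_matrix([0, 0, 1, 2, 3, 3], [0, 1, 1, 2, 3, 0]))
```

With a Keras model, `y_pred` would typically be `probs.argmax(axis=1)`. The test iterator should be created with `shuffle=False` so its labels stay aligned with the predictions.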

Table 3 compares the training, validation, and testing accuracies of the Xception, InceptionV3, and MobileNetV2 models with and without the enhancement technique. Table 4 compares the performance evaluation metrics of the pre-trained models with and without enhancement for the four-class classification.

Table 3.

Accuracy of pre-trained models with and without enhancement.

Table 4.

Comparison of performance evaluation metrics with and without the enhancement technique.
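The text does not list which metrics appear in Table 4. Assuming the usual accuracy, per-class precision, recall, and F1-score, they can all be derived from a confusion matrix (rows are true classes, columns are predicted classes), as in the NumPy sketch below. Classes that are never predicted or never present get a score of 0 rather than a division error.

```python
import numpy as np


def per_class_metrics(cm):
    """Accuracy plus per-class precision, recall and F1 from a confusion matrix."""
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)                 # correctly classified samples per class
    predicted = cm.sum(axis=0)       # column sums: how often each class was predicted
    actual = cm.sum(axis=1)          # row sums: how many samples of each class exist
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return {"accuracy": float(accuracy), "precision": precision, "recall": recall, "f1": f1}
```

Macro-averaged scores, a common single-number summary for balanced multiclass data, are the plain means of the per-class arrays, for example `m["f1"].mean()`.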

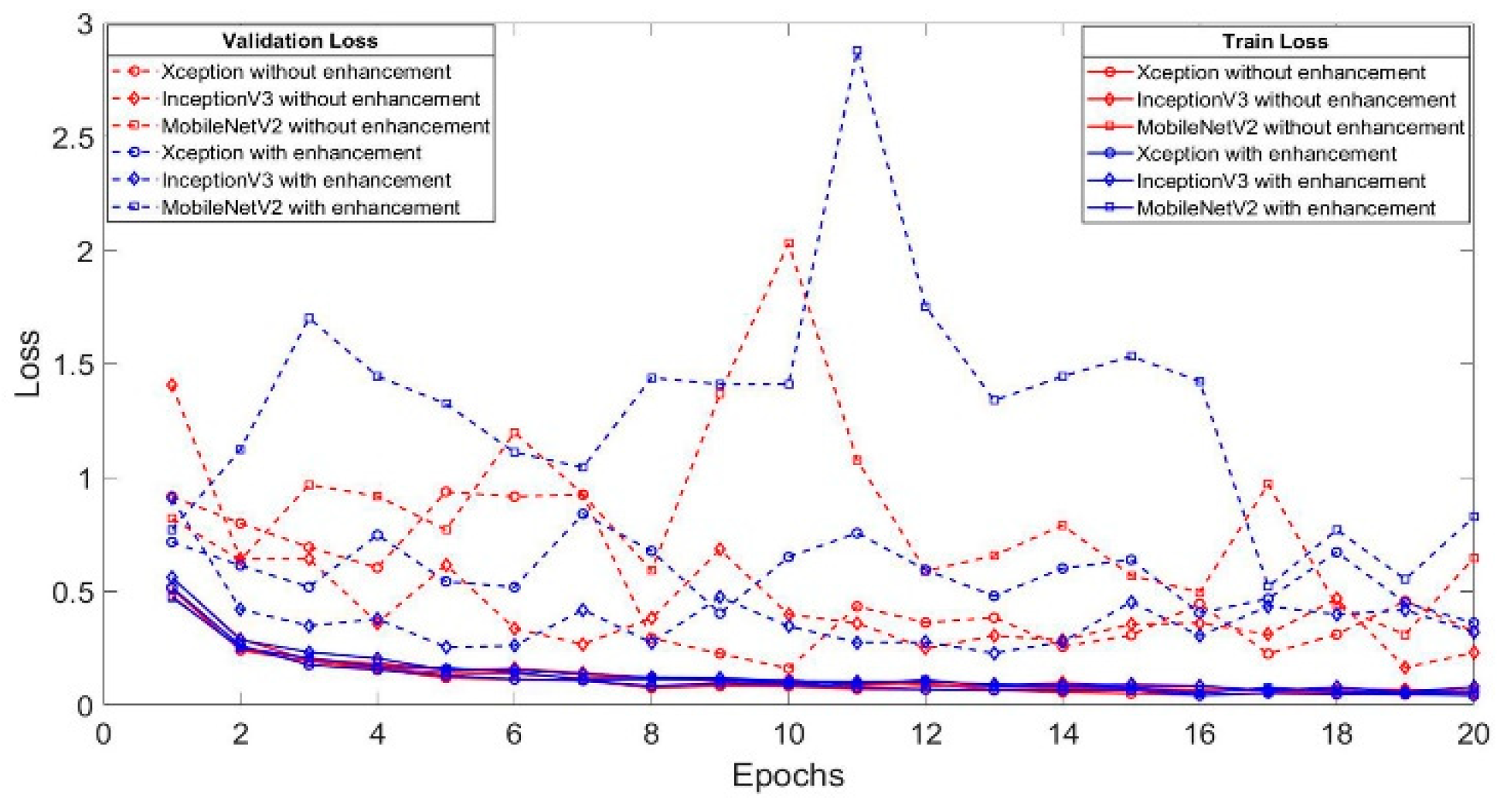

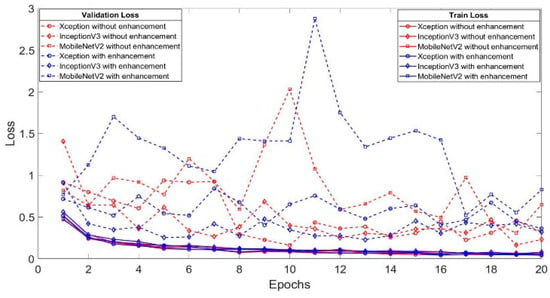

Training and validation losses decreased over consecutive epochs. Figure 5 compares the training and validation losses of the pre-trained models with and without the enhancement technique.

Figure 5.

Comparison of training and validation losses of three pre-trained models with and without the enhancement technique.

4. Conclusions

In this work, a deep learning-based method that uses CXR images to detect COVID-19 patients automatically was proposed. Without enhancement, the Xception model achieved the best testing accuracy, 94.13%. Among the models trained with Gabor filtering enhancement, Xception again had the highest testing accuracy, although it was lower than its accuracy without enhancement. Gabor filtering enhancement improved results only for MobileNetV2, whose training, validation, and testing accuracies all increased when the enhancement was used. In future work, the proposed methodology will be tested on other CNN models to raise the system’s accuracy toward 100% and exceed previous studies.

Author Contributions

Conceptualization, M.A.; methodology, C.T.K. and S.C.P.; software, C.T.K. and S.C.P.; validation, C.T.K., S.C.P., A.J.U., M.A., H.R., and C.W.K.; formal analysis, C.T.K. and S.C.P.; investigation, C.T.K. and S.C.P.; resources, M.A., H.R., and C.W.K.; data curation, C.T.K., S.C.P., and M.A.; writing—original draft preparation, C.T.K., S.C.P., and A.J.U.; writing—review and editing, M.A., H.R., and C.W.K.; visualization, C.T.K. and S.C.P.; supervision, M.A., H.R., and C.W.K.; project administration, M.A., H.R., and C.W.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used for this research are from Kaggle and available at https://www.kaggle.com/tawsifurrahman/covid19-radiography-database/activity (accessed on 25 May 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- COVID Live Update: 222,276,536 Cases and 4,593,898 Deaths from the Coronavirus—Worldometer. Available online: https://www.worldometers.info/coronavirus/?utmcampaign=homeAdvegas1? (accessed on 8 September 2021).

- Kaggle. COVID-19 Radiography Dataset. Available online: https://www.kaggle.com/tawsifurrahman/covid19-radiography-database/activity (accessed on 25 May 2021).

- Kadir, M.A.; Mahbub, Z.B.; Islam, K.R.; Khan, M.S. Can AI Help in Screening Viral and COVID-19 Pneumonia? IEEE Access 2020, 8, 132665–132676.

- Narin, A.; Kaya, C.; Pamuk, Z. Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks. Pattern Anal. Appl. 2021, 24, 1207–1220.

- Jain, R.; Gupta, M.; Taneja, S.; Hemanth, D.J. Deep learning based detection and analysis of COVID-19 on chest X-ray images. Appl. Intell. 2021, 51, 1690–1700.

- Rahman, T.; Khandakar, A.; Qiblawey, Y.; Tahir, A.; Kiranyaz, S.; Kashem, S.B.A.; Islam, M.T.; Al Maadeed, S.; Zughaier, S.M.; Khan, M.S.; et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 2021, 132, 104319.

- Toğaçar, M.; Ergen, B.; Cömert, Z. COVID-19 detection using deep learning models to exploit Social Mimic Optimization and structured chest X-ray images using fuzzy color and stacking approaches. Comput. Biol. Med. 2020, 121, 103805.

- Ozturk, T.; Talo, M.; Yildirim, E.A.; Baloglu, U.B.; Yildirim, O.; Acharya, U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020, 121, 103792.

- Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 19549.

- Khan, A.I.; Shah, J.L.; Bhat, M.M. CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest x-ray images. Comput. Methods Programs Biomed. 2020, 196, 105581.

- Muralidharan, N.; Gupta, S.; Prusty, M.R.; Tripathy, R.K. Detection of COVID19 from X-ray images using multiscale Deep Convolutional Neural Network. Appl. Soft Comput. 2022, 119, 108610.

- Asghar, U.; Arif, M.; Ejaz, K.; Vicoveanu, D.; Izdrui, D.; Geman, O. An Improved COVID-19 Detection using GAN-Based Data Augmentation and Novel QuNet-Based Classification. BioMed Res. Int. 2022, 2022, 8925930.

- Sanida, T.; Sideris, A.; Tsiktsiris, D.; Dasygenis, M. Lightweight Neural Network for COVID-19 Detection from Chest X-ray Images Implemented on an Embedded System. Technologies 2022, 10, 37.

- SIIM-ISIC Melanoma Classification. Available online: https://kaggle.com/c/siim-isic-melanoma-classification (accessed on 15 March 2022).

- Results—dupeGuru 4.0.3 Documentation. Available online: https://dupeguru.voltaicideas.net/help/en/results.html (accessed on 26 March 2022).

- Shah, A. (Exploring Neurons) Through the Eyes of Gabor Filter. Medium, 17 June 2018. Available online: https://medium.com/@anuj-shah/through-the-eyes-of-gabor-filter-17d1fdb3ac97 (accessed on 21 March 2022).

- Team, K. Keras Documentation: Keras Applications. Available online: https://keras.io/api/applications/ (accessed on 21 March 2022).

- Marcelino, P. Transfer Learning from Pre-Trained Models. Medium, 23 October 2018. Available online: https://towardsdatascience.com/transfer-learning-from-pre-trained-models-f2393f124751 (accessed on 15 September 2021).

- Team, K. Keras Documentation: Transfer Learning & Fine-Tuning. Available online: https://keras.io/guides/transfer-learning/ (accessed on 26 March 2022).

- Google Colaboratory. Available online: https://colab.research.google.com/?utm-source=scs-index (accessed on 26 March 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).