Analysing the Contributing Factors to Activity Recognition with Loose Clothing

Abstract

1. Introduction

2. Materials and Methods

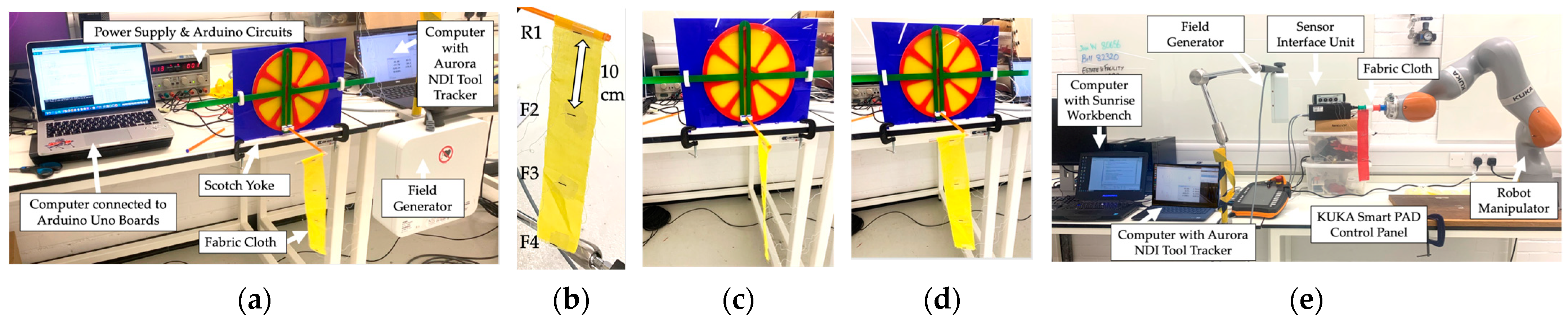

2.1. Experimental Setup

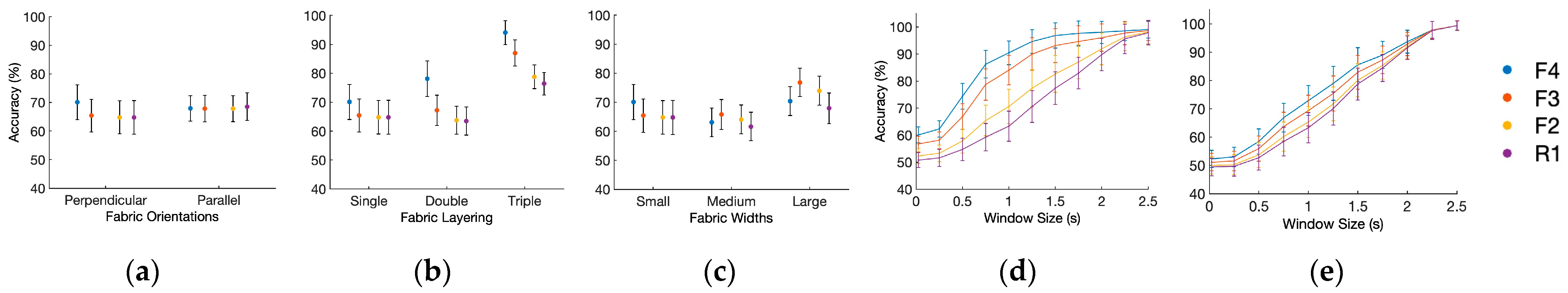

2.2. Experiments

2.3. Data Collection

2.4. Frequency Classification

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Independent Variable | Dependent Variable | Controlled Variables |
|---|---|---|---|
| Experiment 1 | Fabric Orientation (perpendicular, parallel) | Frequency Classification | Single layer, small width |
| Experiment 2 | Fabric Layering (single, double, triple) | Frequency Classification | Perpendicular orientation, small width |
| Experiment 3 | Fabric Width (small, medium, large) | Frequency Classification | Perpendicular orientation, single layer |
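The table describes a one-factor-at-a-time design: each experiment varies a single fabric property while the other two are held fixed, and frequency classification is the measured outcome. The Python sketch below enumerates the fabric configurations implied by the table, assuming that the controlled values (perpendicular orientation, single layer, small width) act as a shared baseline. The names `FabricCondition`, `BASELINE` and `conditions_for` are hypothetical illustrations, not taken from the paper, and the sketch does not model data collection or the classifier.

```python
from typing import NamedTuple


class FabricCondition(NamedTuple):
    """Hypothetical record for one fabric configuration."""
    orientation: str
    layers: str
    width: str


# Levels of each factor, as listed in the table.
LEVELS = {
    "orientation": ["perpendicular", "parallel"],
    "layers": ["single", "double", "triple"],
    "width": ["small", "medium", "large"],
}

# Assumption: the controlled variables in the table define one common baseline.
BASELINE = FabricCondition(orientation="perpendicular", layers="single", width="small")

# Experiment number -> the factor it varies (its independent variable).
EXPERIMENTS = {1: "orientation", 2: "layers", 3: "width"}


def conditions_for(experiment: int) -> list[FabricCondition]:
    """Vary one factor over its levels while holding the others at the baseline."""
    factor = EXPERIMENTS[experiment]
    return [BASELINE._replace(**{factor: level}) for level in LEVELS[factor]]


all_slots = [cond for exp in EXPERIMENTS for cond in conditions_for(exp)]
for exp in EXPERIMENTS:
    print(f"Experiment {exp}: {[tuple(c) for c in conditions_for(exp)]}")
print(f"{len(all_slots)} condition slots, {len(set(all_slots))} unique configurations")
```

Under this reading, the three experiments cover eight condition slots but only six distinct configurations, because the baseline appears in every experiment; whether the baseline was recorded once or separately for each experiment is not stated here.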

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Allagani, R.; Shen, T.; Howard, M. Analysing the Contributing Factors to Activity Recognition with Loose Clothing. Eng. Proc. 2023, 52, 10. https://doi.org/10.3390/engproc2023052010

Allagani R, Shen T, Howard M. Analysing the Contributing Factors to Activity Recognition with Loose Clothing. Engineering Proceedings. 2023; 52(1):10. https://doi.org/10.3390/engproc2023052010

Chicago/Turabian Style: Allagani, Renad, Tianchen Shen, and Matthew Howard. 2023. "Analysing the Contributing Factors to Activity Recognition with Loose Clothing" Engineering Proceedings 52, no. 1: 10. https://doi.org/10.3390/engproc2023052010

APA Style: Allagani, R., Shen, T., & Howard, M. (2023). Analysing the Contributing Factors to Activity Recognition with Loose Clothing. Engineering Proceedings, 52(1), 10. https://doi.org/10.3390/engproc2023052010