1. Introduction

Bitcoin price forecasting has been the focus of many studies over the years. Nevertheless, unexpected price movements, bubbles of varying size, and differing short- and long-term trends keep this task an open research topic. A recent study in this area [1] verifies the performance of different machine learning algorithms and summarizes the current state of knowledge in Bitcoin forecasting. One aspect, reported in [2], is that the price of Bitcoin is driven mainly by the spot market rather than the futures market. Another aspect is the division of forecasting approaches into the “Blockchain approach” and the “Financial markets approach”. The former is based on technical variables such as hash rate or mining difficulty, while the latter uses standard econometric variables such as stock, bond, or gold prices. To these we can add a third approach based on variables related to investor sentiment, such as Google Trends data [3], uncertainty indices (VIX, UCRY; see [4]), or the Fear and Greed Index, which has not been explored much in the literature. In our study, we used a mix of features from these three approaches, together with the open, high, low, and close prices, to predict the Bitcoin price using a novel approach. This allowed us to verify which variables contribute most to forecast accuracy. In fact, the features with the largest contribution are the price-related ones only. The other variables, whether from the “Blockchain approach”, the “Financial markets approach”, or the “Sentiment approach”, have a negligible impact on the accuracy of Bitcoin price forecasts.

We used a method that explores features and machine learning algorithms for predicting Bitcoin’s closing price. In recent forecasting competitions, machine learning models and hybrid approaches demonstrated superiority over alternative methods [5]. Therefore, in this study, we evaluated the following machine learning algorithms: Long Short-Term Memory (LSTM), Bidirectional Long Short-Term Memory (BiLSTM), Gated Recurrent Unit (GRU), Bidirectional Gated Recurrent Unit (BiGRU), and Light Gradient Boosting Machine (LightGBM). In addition, we used ensembling. The results were evaluated by comparing the predicted and actual Bitcoin closing prices using Root Mean Squared Error (RMSE), Mean Squared Error (MSE), Mean Absolute Error (MAE), and Directional Accuracy (DA).

Although previous studies show that the financial time series closing price, either in the case of stocks [6,7], commodities [8], or cryptocurrencies [9], is not enough for prediction when training deep learning models, we found that ensembling does help to improve the forecasting accuracy. In this work, we demonstrate that the Bitcoin closing price is not sufficient for forecasting and additional features are necessary when using machine learning algorithms. Evaluation on return shows that the method developed in this work presents one of the highest scores, with a directional accuracy score equal to 0.7645, exceeding a baseline by 58.24 percent.

We compare our work to related studies on Bitcoin prediction. In [10], LSTM was used in combination with the Empirical Wavelet Transform (EWT) decomposition technique. The authors used the Intrinsic Mode Function (IMF) to optimize and estimate outputs with Cuckoo Search (CS) [10]. In [11], Linear Regression (LR) techniques and particle swarm optimization were used to train and forecast data from the beginning of 2012 to the end of March 2020. The best setup for the model was obtained with 42 days plus 1 standard deviation [11]. In [12], Autoregressive Integrated Moving Average (ARIMA) was used for data from 1 May 2013 to 7 June 2019. This model works best for short-term predictions and can be used to predict the Bitcoin price one to seven days ahead [12]. Finally, in [13], a BiLSTM with Low-Middle-High features (LMH-BiLSTM) was tested with two primary steps: data decomposition and bidirectional deep learning. The results demonstrate that the proposed model outperforms other benchmark models and achieved high investment returns in the buy-and-hold strategy in a trading simulation [13].

In this work, we tested the previously mentioned machine learning algorithms one by one and also as an ensemble. We fed the algorithms with a new set of 13 time series (see Section 2.1). In addition, we included 11 signals obtained from Variational Mode Decomposition (VMD), as proposed in [13]. However, in our experiments, data decomposition did not provide significant improvements. Next, we explain our proposed method.

2. Materials and Methods

An overview of the method is presented in Figure 1. The input data is prepared in three steps. First, it is normalized between 0 and 1. Then, the train and test set partitions are created, where the train set is used for training several machine learning algorithms. The next phase considers algorithm training. The details regarding hyperparameter selection are described in Section 2.4. Next, the evaluation phase is performed as a rolling forecast for 1-step ahead prediction over the test set.
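The rolling 1-step-ahead evaluation can be illustrated with a minimal sketch. The function names and the `mean_of_last_three` placeholder model are hypothetical and stand in for any trained model; the sketch only assumes that each prediction uses the data observed up to the previous step, and it does not retrain the model between steps.

```python
from typing import Callable, List, Sequence


def rolling_forecast(
    train: Sequence[float],
    test: Sequence[float],
    predict_next: Callable[[Sequence[float]], float],
) -> List[float]:
    """Produce one 1-step-ahead prediction per test observation."""
    history = list(train)
    predictions = []
    for actual in test:
        # Forecast the next value using only data observed so far.
        predictions.append(predict_next(history))
        # Reveal the true value before moving on to the next step.
        history.append(actual)
    return predictions


def mean_of_last_three(history: Sequence[float]) -> float:
    """Hypothetical placeholder model: average of the last three values."""
    recent = history[-3:]
    return sum(recent) / len(recent)


predictions = rolling_forecast([1.0, 2.0, 3.0], [4.0, 5.0], mean_of_last_three)
print(predictions)  # [2.0, 3.0]
```

Passing the model as a callable keeps the evaluation loop identical for every algorithm being compared.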

2.1. Data Collection

We collected the daily Bitcoin closing price from 7 October 2013 to 6 November 2022. We used a public API from the Kraken page [14]; the data collected were the values of close, open, high, low, volume, and date. With the Nasdaq-Data-Link library for Python [15], the following values were obtained: transaction fee, estimated Bitcoin USD transaction volume, Bitcoin USD exchange trade volume, and Bitcoin hash rate. Bitcoin Google Trends data were obtained with the pytrends library [16]. The gold to USD exchange rate was obtained from the Investing.com page. The Fear and Greed Index was obtained from the Kaggle page [17]. The moving average of the closing value over the last 30 days was added as a further feature.
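As an illustration of the moving-average feature, the following is a minimal sketch of a trailing mean. It assumes that the window includes the current day and that days without a full window are left empty; the text does not specify either choice, and the function name is hypothetical.

```python
from typing import List, Optional, Sequence


def moving_average(values: Sequence[float], window: int = 30) -> List[Optional[float]]:
    """Trailing mean over `window` values; None until enough history exists."""
    averages: List[Optional[float]] = []
    running_sum = 0.0
    for i, value in enumerate(values):
        running_sum += value
        if i >= window:
            # Drop the value that just left the window.
            running_sum -= values[i - window]
        averages.append(running_sum / window if i >= window - 1 else None)
    return averages


print(moving_average([1.0, 2.0, 3.0, 4.0], window=2))  # [None, 1.5, 2.5, 3.5]
```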

Table 1 presents all features used in this study. A similar set of features was used in [13]: Bitcoin price, Bitcoin transaction fees as Bitcoin miner’s revenue divided by transactions, USD trade volume from the top Bitcoin exchanges, Bitcoin transaction volume, USD exchange or trade volume from the top Bitcoin exchanges, gold exchange rate to US dollar, hash rate, and Google trends of Bitcoin.

2.2. Variational Mode Decomposition (VMD)

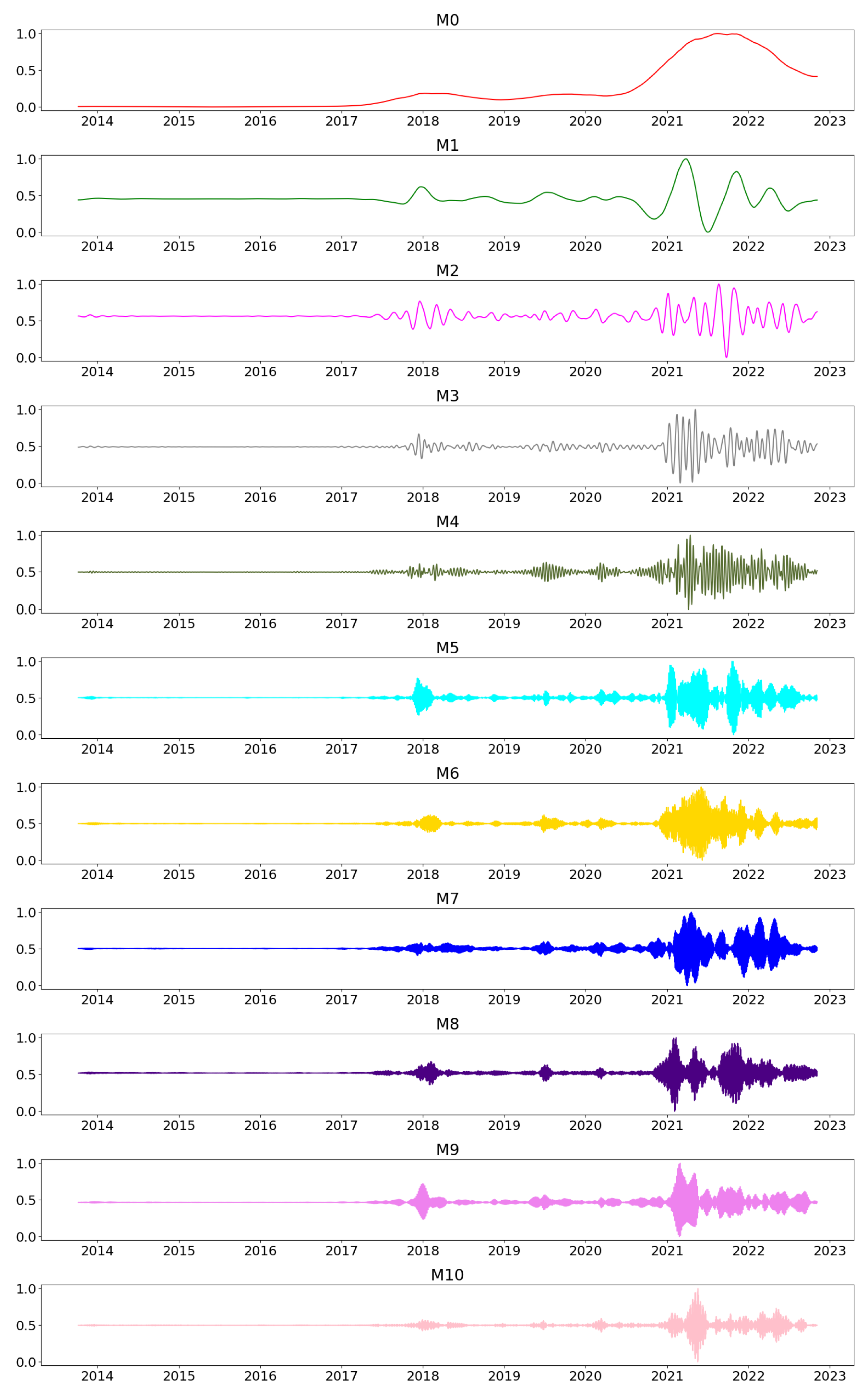

Bitcoin’s closing price was decomposed using the VMD method as proposed in [13]. Each decomposed mode was labeled M0 through M10, where M0 has the lowest frequency and M10 has the highest. The decomposition is plotted in Figure 2.

Variational Mode Decomposition (VMD) is a completely nonrecursive signal decomposition technique proposed by [18]. VMD is formulated as a variational optimization problem that aims to minimize the total bandwidth of the modes. This work used the vmdpy Python library [19] with its default parameters, using Bitcoin’s closing price as the input and a bandwidth of 5000.

2.3. Data Preparation

For the LSTM, BiLSTM, GRU, and BiGRU models, the data were scaled between 0 and 1 before training; for the LightGBM model, data scaling was performed for evaluation only. Next, the data were divided into 25-day windows, converting the data table into 3D lists (arrays), where the first dimension corresponds to the batch size, the second to the number of time steps, and the third to the number of units of one input sequence [20]. Next to each list, the expected future value was saved. This last value is taken from the closing values of the previous day. For the LightGBM model, the complete data in Table 1 were used without modifications. Data partitioning was performed as follows: the training set spans 7 October 2013 to 8 August 2022, and the test set spans 9 August 2022 to 6 November 2022; that is, 90 days were used for testing and the rest of the data were used for training.
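The preparation steps for the recurrent models can be sketched as follows. This is an illustrative outline rather than the code used in the study: the helper names are hypothetical, the target is assumed to be the closing value of the day immediately after each 25-day window, and the scaler is fitted on whatever column it receives, since the text does not state whether scaling used the training partition only.

```python
from typing import List, Sequence, Tuple


def min_max_scale(column: Sequence[float]) -> List[float]:
    """Scale one feature column to the range [0, 1]."""
    low, high = min(column), max(column)
    span = high - low
    return [(v - low) / span if span else 0.0 for v in column]


def make_windows(
    rows: Sequence[Sequence[float]], close_idx: int, window: int = 25
) -> Tuple[List[List[List[float]]], List[float]]:
    """Build (samples, time steps, features) inputs and next-day close targets."""
    inputs, targets = [], []
    for end in range(window, len(rows)):
        inputs.append([list(row) for row in rows[end - window:end]])
        # Assumption: the target is the close of the day after the window.
        targets.append(rows[end][close_idx])
    return inputs, targets


def split_last(items: Sequence, test_size: int = 90) -> Tuple[list, list]:
    """Hold out the final `test_size` items for testing."""
    return list(items[:-test_size]), list(items[-test_size:])


rows = [[float(i), 2.0 * i] for i in range(6)]  # toy data: 6 days, 2 features
X, y = make_windows(rows, close_idx=0, window=3)
print(len(X), len(X[0]), len(X[0][0]))  # 3 3 2
print(y)  # [3.0, 4.0, 5.0]
```

The nested lists follow the (batch, time steps, features) layout described above and can be converted to an array before being passed to a recurrent network.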

2.4. Model Training

We tested five deep learning architectures and one tree boosting method. All deep learning networks use an input layer of 90 units, 500 training epochs, and a batch size of 64, without early stopping.

Long Short-Term Memory (LSTM): The network trains with five layers: an input layer with the activation function seen in [6], the glorot uniform bias initializer, the l1, l2 kernel regularizer, the unit norm kernel constraint, and time_major enabled, followed by a dense layer of 90 units with linear activation. Then, an output layer and another dense layer are used. The model uses the Adam optimizer with a learning rate of 0.002;

Gated Recurrent Unit (GRU): The network trains with five layers, an input layer followed by a dropout layer set to 0.3, an output layer followed by another dropout layer, and a dense layer of one unit. The model uses the Adam optimizer with a learning rate of 0.0001. A similar network was used in [21];

Bidirectional Long Short-Term Memory (BiLSTM): The network consists of an input layer with the tanh activation function, followed by a backward learning layer and a dense layer. The model uses the Adam optimizer with a learning rate of 0.01. A similar network was used in [13];

Bidirectional Long Short-Term Memory with dropout (BiLSTM_d): The network consists of an input layer with tanh activation function, followed by a dropout layer, followed by a backward learning layer, followed by a dropout layer and a dense layer. The model uses the Adam optimizer with a learning rate of 0.01 and the dropout set to 0.3;

Bidirectional Gated Recurrent Unit (BiGRU): The network trains with five layers, an input bidirectional layer followed by a dropout layer set to 0.3, an output bidirectional layer followed by another dropout layer and a dense layer. The model uses the Adam optimizer with a learning rate of 0.0001;

Light Gradient Boosting Machine (LightGBM): This model uses early stopping rounds set to 50 and verbose evaluation set to 30, with 3600 boosting rounds. The model trains with a gradient boosting decision tree, with the objective set to tweedie and a variance power of 1.1, and uses the RMSE metric with n_jobs set to −1. In addition, it uses a seed of 42 with a learning rate of 0.2; the bagging fraction is set to 0.85 and the bagging frequency is set to 7. Moreover, colsample by tree and colsample by node are set to 0.85 with a min data per leaf of 30, and the number of leaves is 200, with lambda l1 and l2 set to 0.5. A similar model was used in [5].
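For reference, the LightGBM settings listed above can be collected in a parameter dictionary. This is a sketch, not the configuration file used in the study: the keys are LightGBM parameter names or documented aliases chosen to match the wording in the text, and the variable names `lgb_params` and `train_settings` are hypothetical.

```python
# Model parameters, written with LightGBM parameter names or documented aliases.
lgb_params = {
    "boosting_type": "gbdt",          # gradient boosting decision tree
    "objective": "tweedie",
    "tweedie_variance_power": 1.1,
    "metric": "rmse",
    "n_jobs": -1,                     # use all available cores
    "seed": 42,
    "learning_rate": 0.2,
    "bagging_fraction": 0.85,
    "bagging_freq": 7,
    "colsample_bytree": 0.85,
    "colsample_bynode": 0.85,
    "min_data_per_leaf": 30,
    "num_leaves": 200,
    "lambda_l1": 0.5,
    "lambda_l2": 0.5,
}

# Training-loop settings. How these are passed depends on the LightGBM
# version: older releases accept them as keyword arguments of the training
# function, while newer releases expect early stopping and logging callbacks.
train_settings = {
    "num_boost_round": 3600,
    "early_stopping_rounds": 50,
    "verbose_eval": 30,
}
```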

2.5. Evaluation

The predicted results were normalized between 0 and 1 before evaluation measurements were made.

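We measure the Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and Directional Accuracy (DA) between the predicted and actual closing price as described in [22], such that $n$ is the number of samples, and $\hat{y}_t$ and $y_t$ are the predicted and actual closing price at time $t$:

$$\mathrm{MSE} = \frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{t=1}^{n}\left|y_t - \hat{y}_t\right|$$

$$\mathrm{DA} = \frac{1}{n-1}\sum_{t=2}^{n} d_t$$

where

$$d_t = \begin{cases} 1, & \text{if } \left(y_t - y_{t-1}\right)\left(\hat{y}_t - \hat{y}_{t-1}\right) > 0 \\ 0, & \text{otherwise} \end{cases}$$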
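The four measures can be computed with a short sketch. MSE, RMSE, and MAE follow their standard definitions. For DA, the sketch assumes that a step counts as correct when the predicted series and the actual series move in the same direction between consecutive days, averaged over the n − 1 available steps; this convention is an assumption, and the function names are hypothetical.

```python
import math
from typing import Sequence


def mse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(mse(actual, predicted))


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def directional_accuracy(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Share of steps where both series move in the same direction."""
    hits = 0
    for t in range(1, len(actual)):
        actual_move = actual[t] - actual[t - 1]
        predicted_move = predicted[t] - predicted[t - 1]
        # Assumption: a flat move in either series counts as a miss.
        if actual_move * predicted_move > 0:
            hits += 1
    return hits / (len(actual) - 1)


actual = [1.0, 2.0, 3.0, 2.0]
predicted = [1.0, 3.0, 2.0, 1.0]
print(mse(actual, predicted), mae(actual, predicted))  # 0.75 0.75
```

In this toy example, two of the three day-to-day moves agree in direction, so `directional_accuracy(actual, predicted)` is 2/3.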

2.6. Return

The return is a financial measure used to assess the efficiency of an asset investment. It is a growth indicator of the value of an investment during a certain period of time. Return On Investment (ROI) is one of the main financial measures used both in the traditional stock market and in the world of cryptocurrencies [23]. The formula can be expressed in terms of the Final Value of Investment (FVI) and the Initial Value of Investment (IVI):

$$\mathrm{ROI} = \frac{\mathrm{FVI} - \mathrm{IVI}}{\mathrm{IVI}}$$
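As a small worked example, ROI can be computed from the two values with the standard definition, the gain divided by the initial value. The sketch returns a fraction rather than a percentage, which is an assumption about the reporting convention, and the function name is hypothetical.

```python
def roi(initial_value: float, final_value: float) -> float:
    """Return on investment as a fraction of the initial value."""
    if initial_value == 0:
        raise ValueError("initial_value must be non-zero")
    return (final_value - initial_value) / initial_value


# An investment that grows from 100 to 125 returns 25 percent.
print(roi(100.0, 125.0))  # 0.25
```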

3. Results

Experiments were conducted to see how different time series influence Bitcoin closing price prediction and how different models perform. The experiments were carried out analyzing the results with different factors.

Table 2,

Table 3,

Table 4,

Table 5,

Table 6,

Table 7,

Table 8,

Table 9,

Table 10 and

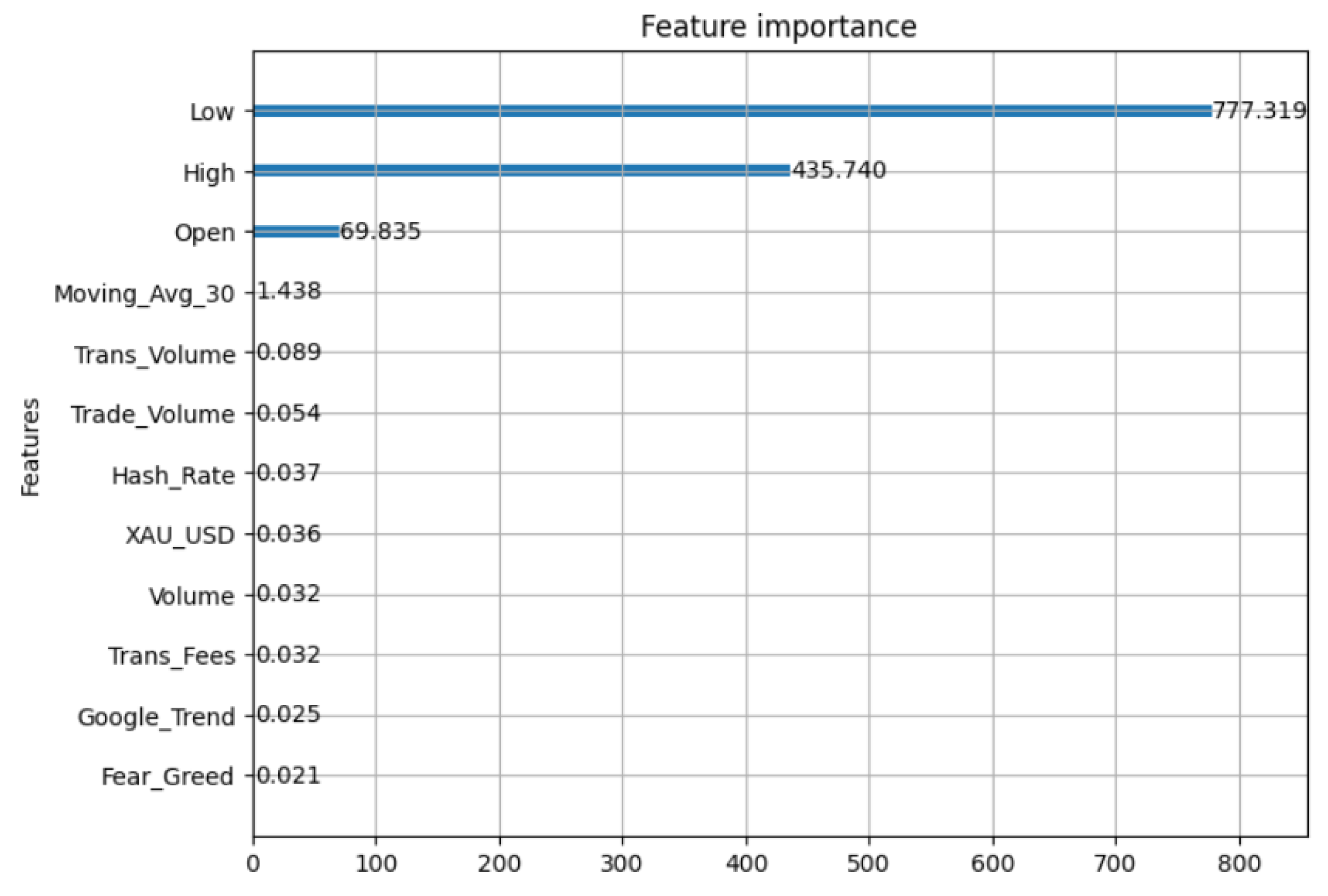

Table 11 show the results obtained in the different experiments. An RMSE, MSE, MAE close to zero and a DA close to one are preferred. The best results are highlighted. The values were compared with values obtained with a Baseline prediction. Baseline prediction means that the predicted value is the last observed value. Series importance for the prediction was obtained according to the LightGBM model in order to classify them later and continue with the experiments, as can be seen in

Figure 3.
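The baseline can be written in a few lines. The sketch below assumes that each test value is predicted by the value observed one day earlier, as described above, and that an improvement over the baseline is measured relative to the baseline score; the second point is an assumption about how such percentages are computed, and both function names are hypothetical.

```python
from typing import List, Sequence


def baseline_predictions(last_train_value: float, test: Sequence[float]) -> List[float]:
    """Predict each test value as the value observed one step earlier."""
    return [last_train_value] + list(test[:-1])


def relative_improvement(score: float, baseline_score: float) -> float:
    """Fractional gain over the baseline for a higher-is-better metric."""
    return (score - baseline_score) / baseline_score


print(baseline_predictions(3.0, [4.0, 5.0, 6.0]))  # [3.0, 4.0, 5.0]
print(relative_improvement(0.75, 0.5))  # 0.5
```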

We performed the following experiments:

Experiment 1. The first experiment used a subset of all tested time series, as we assumed that these features represent price action over a set period of time and, in combination, could be used to predict price movements. The time series used were the close, open, high, low, and volume of Bitcoin, obtained from the Kraken API [14]. See Table 2 for the results of the prediction. Table 2 shows that BiLSTM achieved the best DA (0.5056), while BiGRU produced the lowest MAE (0.0467);

Experiment 2. In the second experiment, we tested all time series presented in Table 1: close, open, high, low, volume, transaction fee, estimated Bitcoin USD transaction volume, Bitcoin USD exchange trade volume, Bitcoin hash rate, Bitcoin Google Trends, gold to USD exchange rate, Fear and Greed Index, and the moving average of the closing value. The main idea was to enrich the input data to help improve the prediction. The results can be seen in Table 3. LightGBM had the best performance, and there was an improvement in comparison with Experiment 1, in which less input data were used;

Experiment 3. For the following experiments, series importance for the prediction was analyzed according to the LightGBM model. The order of importance can be seen in Figure 3. The experiments were performed using different combinations of the four most important series: open, high, low, and close values. These time series exhibited a significant correlation with the closing price of Bitcoin and exerted an influence over its behavior. For this experiment, open, high, and low values were used. Table 4 shows that BiLSTM had the best DA (0.4832), but GRU had the best MAE (0.0496). The results show a decrease in performance;

Experiment 4. For this experiment, high and low values were used. Table 5 shows that BiLSTM had the best performance with DA = 0.5169. There was an improvement in comparison with Experiment 1, but it did not reach the performance of Experiment 2;

Experiment 5. For this experiment, low values were used. Table 6 shows that LSTM had the best performance with DA = 0.5169. The results show a similar performance in comparison with Experiment 4;

Experiment 6. For this experiment, open and low values were used. Table 7 shows that LSTM had the best DA = 0.5393 and BiLSTM delivered the lowest errors. The results show an improvement in performance compared with previous experiments, but it was not better than Experiment 2;

Experiment 7. Since Experiment 2 had the best performance up to this point, we tested all time series used in Experiment 2 and added the 11 VMD modes to assess their impact on prediction. That is, the input data included the values of close, open, high, low, volume, transaction fee, estimated Bitcoin USD transaction volume, Bitcoin USD exchange trade volume, Bitcoin hash rate, Bitcoin Google Trends, gold to USD exchange rate, Fear and Greed Index, the moving average of the closing value, and the 11 VMD modes. The results are presented in Table 8. This time, BiGRU had the best DA (0.5730), but LSTM had the lowest errors. The results show a large improvement in performance compared with the previous experiments;

Experiment 8. This experiment used only the 11 VMD modes as input data, in order to assess the impact of the modes alone on the prediction (see Table 9). BiGRU had the best DA (0.5618), BiLSTM the best MAE, and LSTM the best MSE and RMSE. The results show an improvement in performance compared with previous experiments, but it was not better than Experiment 7.

In all the experiments, we observed a noticeable improvement in the BiLSTM model when it did not include the dropout layer.