A Deep Learning Model Based on Multi-Head Attention for Long-Term Forecasting of Solar Activity

Abstract

1. Introduction

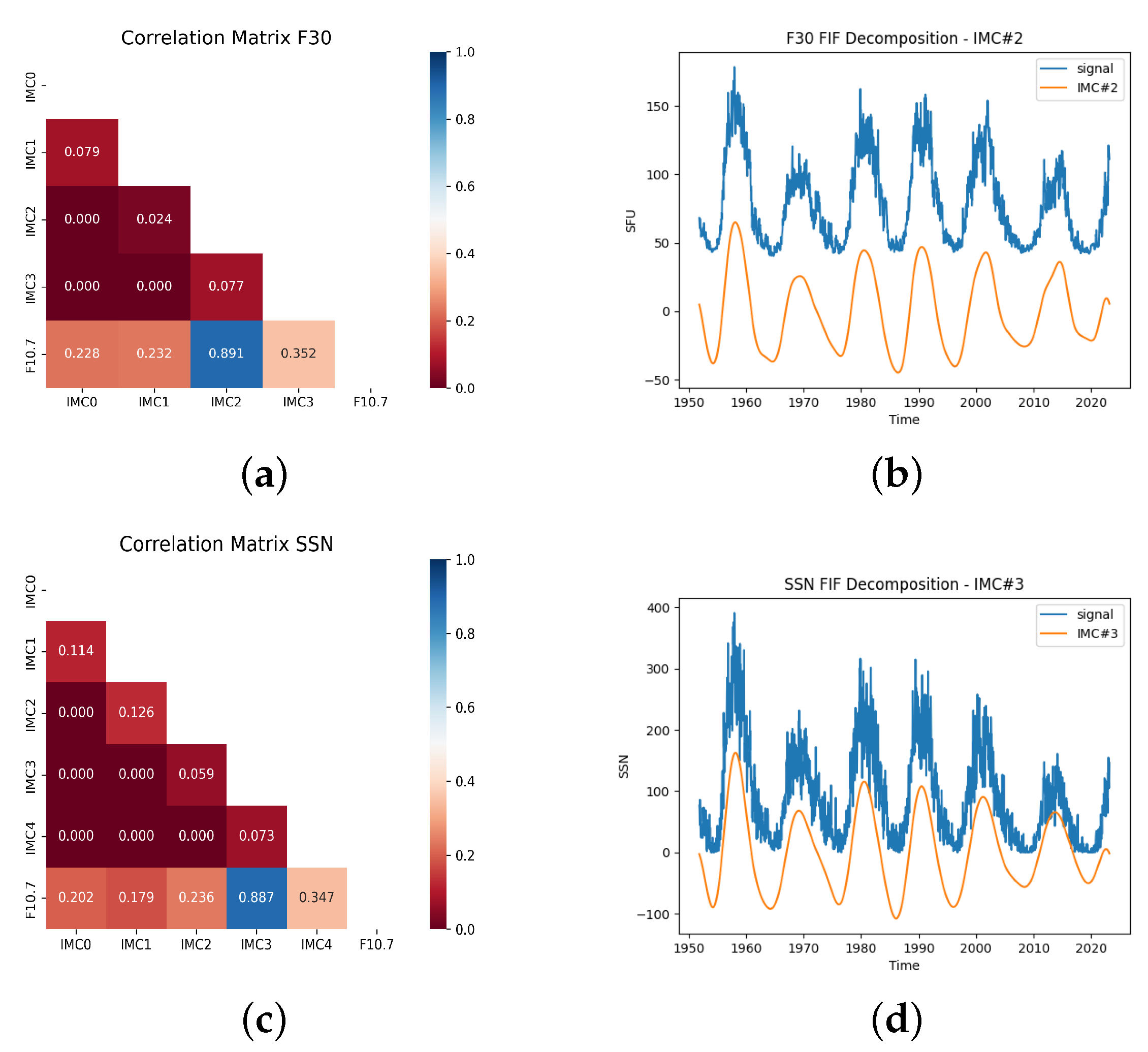

2. Deep Learning LSTM-Based Method for Long-Term F10.7 Time Series Forecasting

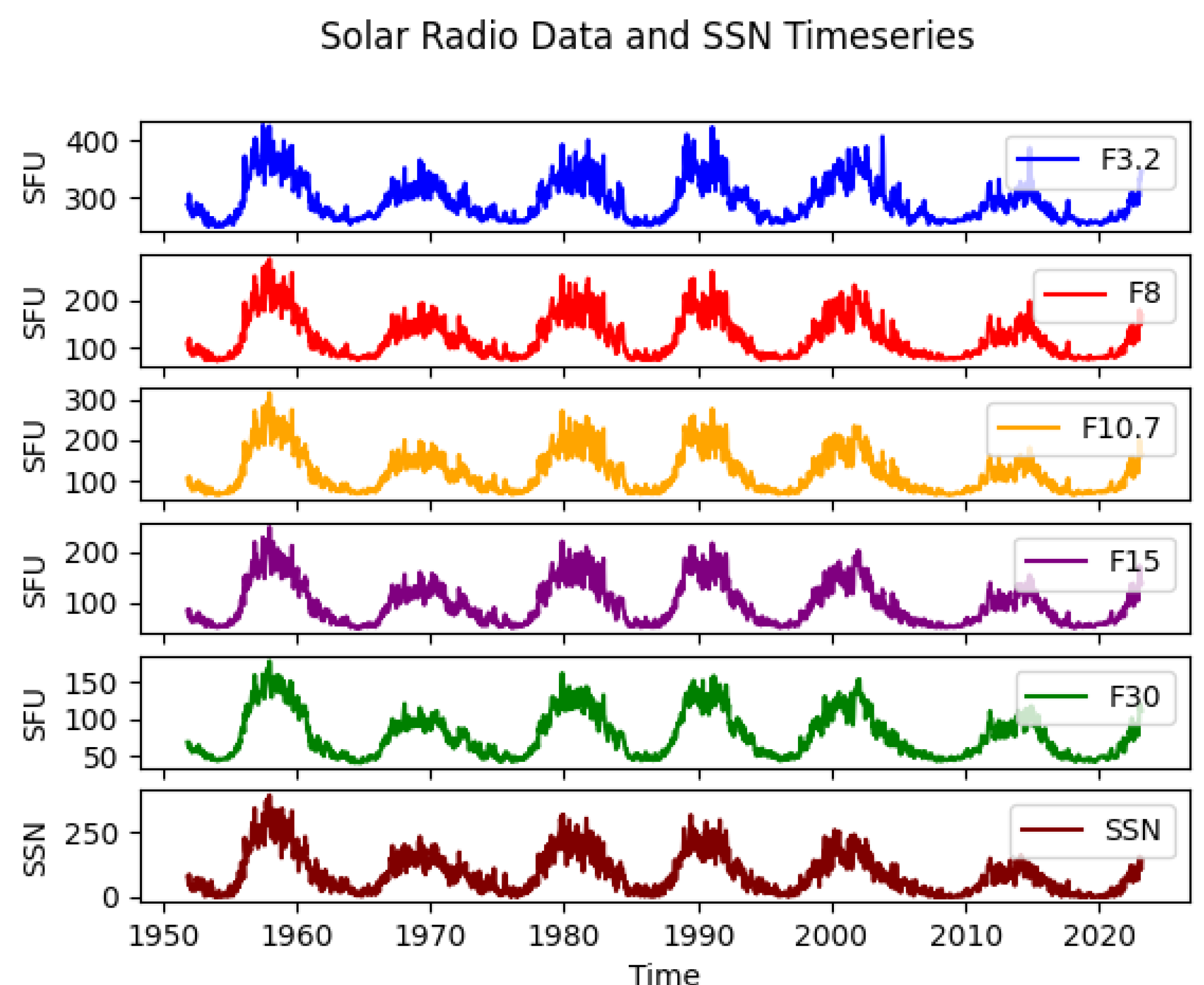

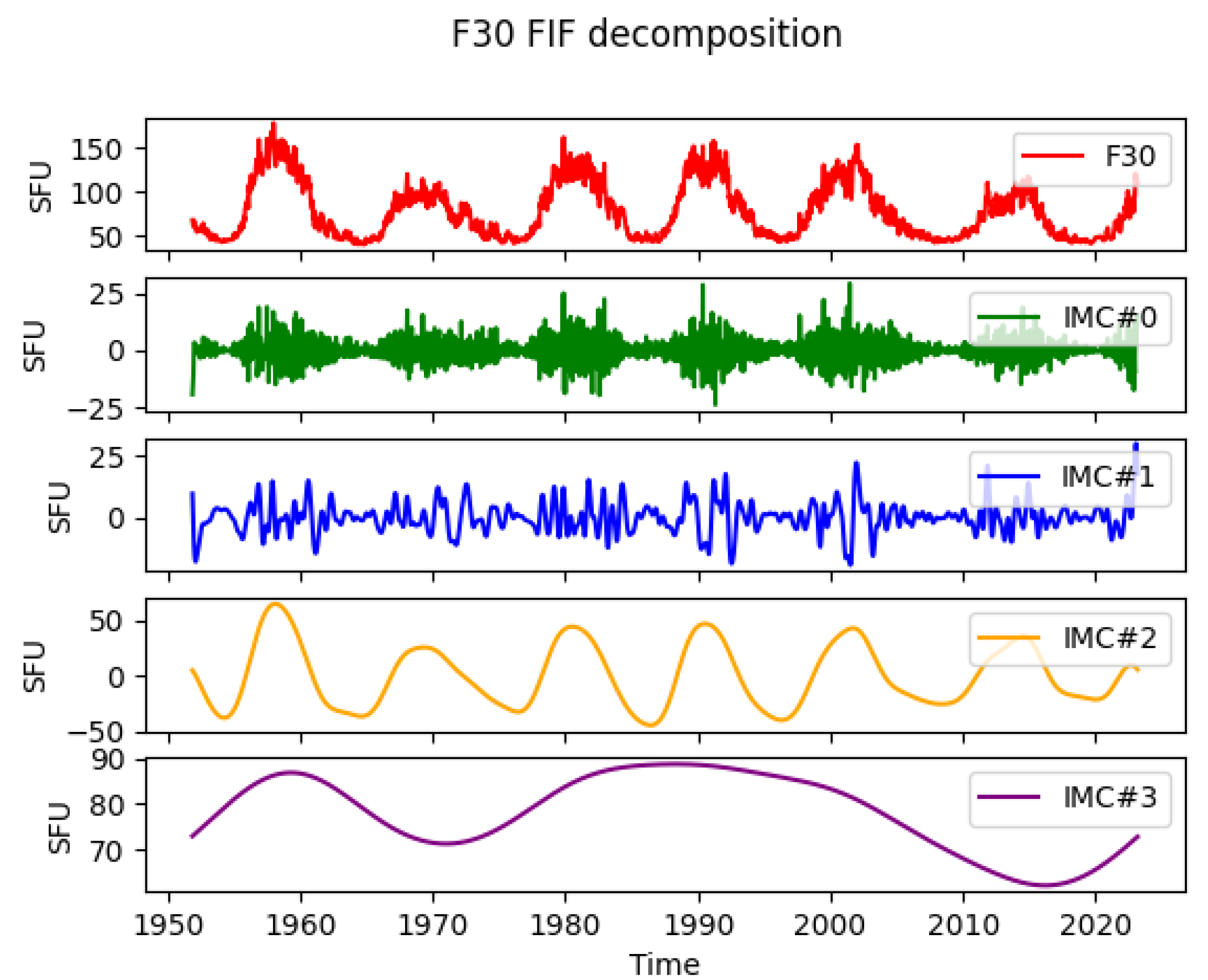

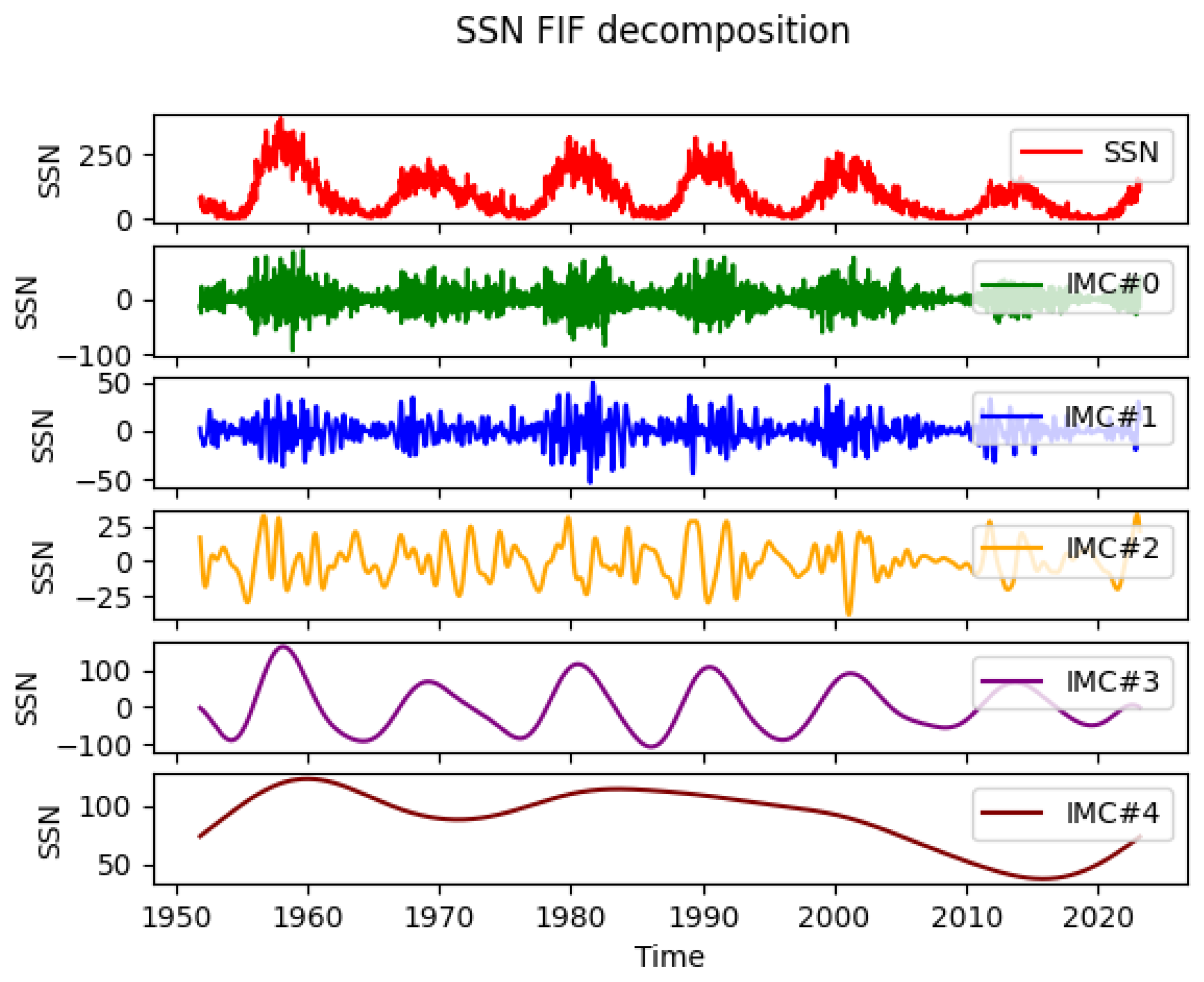

2.1. Data Description and Preparation

- F30: 30 cm radio flux from Toyokawa (historical data) and Nobeyama (recent data)

- F15: 15 cm radio flux from Toyokawa (historical data) and Nobeyama (recent data)

- F10.7: 10.7 cm radio flux from Ottawa (historical data) and Penticton (recent data)

- F8: 8 cm radio flux from Toyokawa (historical data) and Nobeyama (recent data)

- F3.2: 3.2 cm radio flux from Toyokawa (historical data) and Nobeyama (recent data)
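As a minimal sketch of how the five radio flux series listed above might be organized for a multivariate forecasting model, the snippet below records each band's wavelength and observatories, stacks per-band series into a (time, channel) array, and slices that array into input/target windows. The names `RADIO_BANDS`, `stack_bands`, and `make_windows`, the window lengths, and the synthetic data are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

# Band catalog transcribed from the list above: wavelength in cm and the
# observatories providing historical and recent measurements.
RADIO_BANDS = {
    "F30":   {"wavelength_cm": 30.0, "historical": "Toyokawa", "recent": "Nobeyama"},
    "F15":   {"wavelength_cm": 15.0, "historical": "Toyokawa", "recent": "Nobeyama"},
    "F10.7": {"wavelength_cm": 10.7, "historical": "Ottawa",   "recent": "Penticton"},
    "F8":    {"wavelength_cm": 8.0,  "historical": "Toyokawa", "recent": "Nobeyama"},
    "F3.2":  {"wavelength_cm": 3.2,  "historical": "Toyokawa", "recent": "Nobeyama"},
}


def stack_bands(series_by_band):
    """Stack equally long 1-D series into a (time, channel) array.

    Channels follow the key order of RADIO_BANDS.
    """
    columns = [np.asarray(series_by_band[name], dtype=float) for name in RADIO_BANDS]
    lengths = {len(c) for c in columns}
    if len(lengths) != 1:
        raise ValueError("all bands must cover the same time steps")
    return np.stack(columns, axis=1)


def make_windows(data, n_in, n_out, target_channel):
    """Slice a (time, channel) array into supervised input/target pairs.

    Hypothetical helper: X has shape (samples, n_in, channels) and
    y has shape (samples, n_out), taken from one target channel.
    """
    n_samples = data.shape[0] - n_in - n_out + 1
    if n_samples <= 0:
        raise ValueError("series too short for the requested windows")
    X = np.stack([data[i:i + n_in] for i in range(n_samples)])
    y = np.stack(
        [data[i + n_in:i + n_in + n_out, target_channel] for i in range(n_samples)]
    )
    return X, y


# Synthetic placeholder data (NOT real flux measurements).
rng = np.random.default_rng(0)
fake = {name: rng.uniform(50.0, 250.0, size=100) for name in RADIO_BANDS}
data = stack_bands(fake)
target = list(RADIO_BANDS).index("F10.7")
X, y = make_windows(data, n_in=24, n_out=6, target_channel=target)
print(data.shape, X.shape, y.shape)  # (100, 5) (71, 24, 5) (71, 6)
```

Keeping the band metadata in one dictionary means the channel order of the stacked array is defined in a single place, so the index of the F10.7 target channel can be looked up rather than hard-coded.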

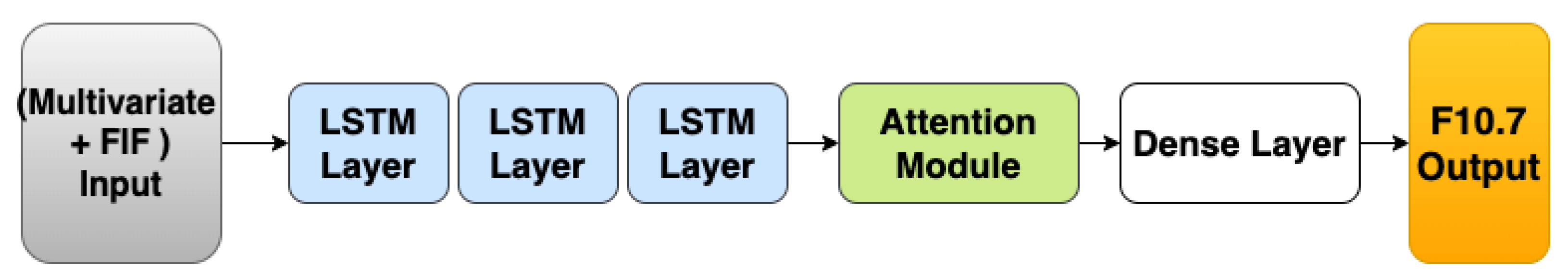

2.2. LSTM Model

2.3. Multi-Attention Module

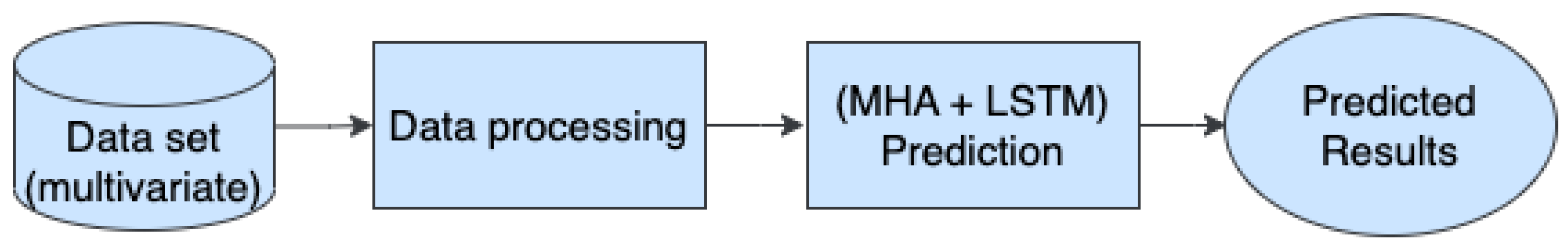

2.4. Proposed Multi-Head Attention LSTM Model Architecture

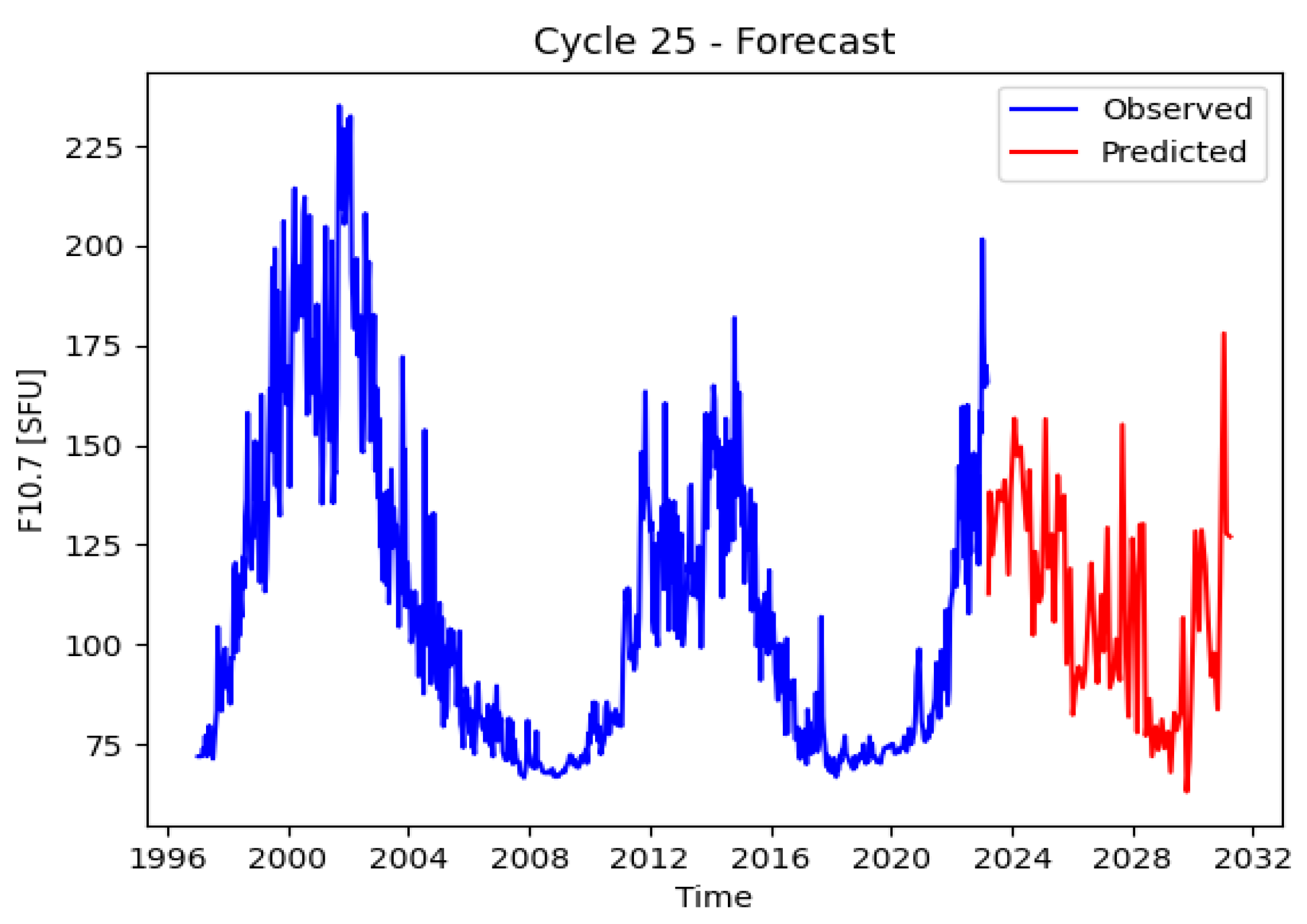

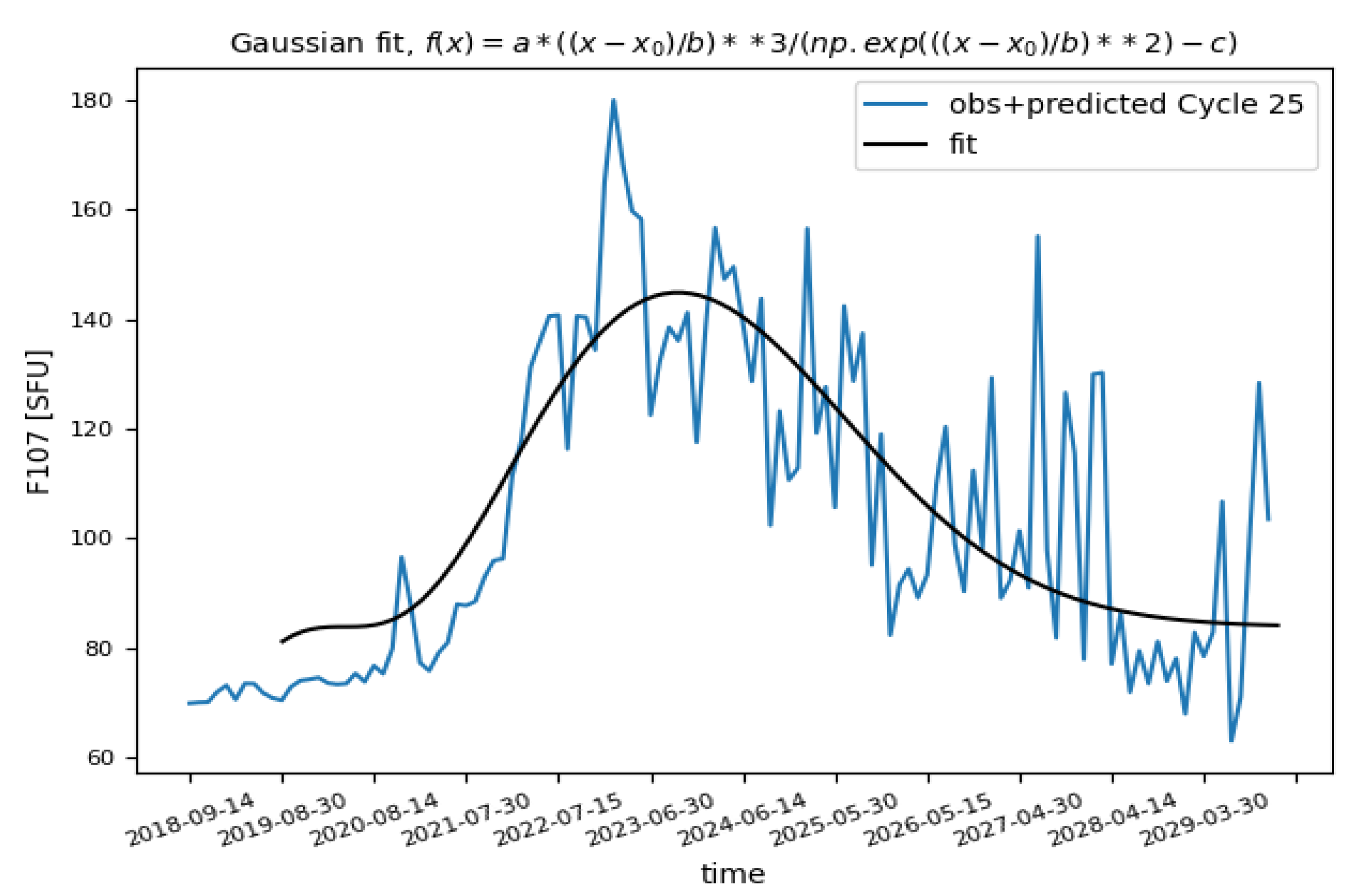

3. Experimental Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Marcucci, A.; Jerse, G.; Alberti, V.; Messerotti, M. A Deep Learning Model Based on Multi-Head Attention for Long-Term Forecasting of Solar Activity. Eng. Proc. 2023, 39, 16. https://doi.org/10.3390/engproc2023039016
