Abstract

A construction site is an open and dynamic space. Construction accidents rank highest among occupational accidents across industries worldwide. Because occupational safety supervisors on construction sites are limited in both number and quality, it is difficult to control or prevent risks in real time. Therefore, a real-time safety warning system based on a deep learning (DL) technique, called a portable lightweight lifting safety control station (PLSCS), is developed for lifting operations in building construction. The supervisor can switch manually between two modes, the worker operation mode and the lifting control mode. In the lifting control mode, the PLSCS helps to ensure that nobody is in the hazardous area during lifting operations. The advantages and features of this system are as follows: (1) it automatically warns of potential safety hazards during operations; (2) it reduces the workload of occupational safety supervisors; (3) it is self-powered and easy to carry and deploy. The system was tested and verified at an actual construction site. The results show that the system is useful for improving the safety of lifting operations.

1. Introduction

According to the data released by the Ministry of Labor in Taiwan [1], the “construction industry” accounted for the highest proportion of deaths from major occupational disasters in the workplace in 2019. A total of 168 people died, an increase of 35% compared with the previous year, accounting for 53.2% of the 316 people who died that year. The primary reason for the high accident rate is that construction sites are highly open and dangerous. With the large-scale and high-rise building projects of the past decades, the use of heavy cranes has become common. Moreover, uncertain factors, e.g., tight schedules, temporary work stations, and the movement of lifting tools, create a high risk of falling objects and injuries to construction workers. More attention needs to be paid to the safety control of construction lifting operations.

The rapid development of artificial intelligence (AI) technology, such as deep neural networks (DNNs) and convolutional neural networks (CNNs) [2], offers an opportunity to address on-site occupational safety management problems that have traditionally been difficult to improve on construction sites.

In this research, a “Real-time Safety Warning System for Lifting Operations in Construction Sites” based on YOLO [3] was developed. YOLO is a CNN-based image detection and recognition technique that is widely used in real-time applications. The YOLO technique is adopted to localize personnel, and each detected person is then checked to determine whether they are inside the lifting control zone. If so, a warning is triggered to prevent potential safety hazards.

The remaining sections of this paper are organized as follows. Section 2 collects and analyzes the relevant literature. Section 3 presents the proposed method and its framework. Section 4 describes the implementation of the system. Finally, Section 5 concludes the findings.

2. Related Works

A “hazard” is defined as a potential factor that causes harm or damage to human health, and “hazard recognition” is the process of identifying the existence of hazards and defining their characteristics [4]. Generally, the techniques of hazard recognition and risk assessment include methods such as checklists [5]. However, hazard recognition methods and techniques suited to on-site implementation are lacking [6]. Recently, many scholars have applied advanced information technology to hazard recognition to enhance the effectiveness of automatic safety management [7,8,9].

Since 2012, deep learning (DL) technology has taken advantage of the graphics processing unit (GPU), and computer recognition capabilities have improved rapidly as a result. Industry and academia have also paid attention to applying DL to the tracking of laborers, machines, and materials and to the management of construction sites [10,11].

We have reviewed the applications of AI and image recognition technology in the safety management of construction projects. The related literature included workers’ safety equipment recognition [4], worker unsafe behavior recognition [10], fall prevention from heights on construction sites [11], and construction site fall risk monitoring [12].

With the development of AI, the technology is now applied to real-time image recognition. The technologies involved include the deep convolutional neural network (DCNN) [13], region-based convolutional neural network (R-CNN) [14], fast region-based convolutional neural network (Fast R-CNN) [15], faster region-based convolutional neural network (Faster R-CNN) [16], and YOLO image recognition technology (You Only Look Once: Unified, Real-Time Object Detection) [3,17].

Among these technologies, YOLO achieves a higher recognition speed than the others and can identify object categories quickly. However, its disadvantage is its weaker performance in localizing objects [5]. Because this research requires real-time identification, the latest version of YOLO was used for the recognition of workers.

Regarding the relevant research on crane safety management and control, the important studies are as follows. Price et al. [18,19] and Fang et al. [20] proposed establishing a 3D working model of the crane lifting environment from a variety of sensors, simulating the crane’s movement and posture, and providing the operator with real-time lifting assistance through a graphical interface. This research addressed the operator’s limited visibility during lifting operations, which causes collision hazards. Peng et al. [21] proposed the automatic monitoring and early warning of external power failures. Liu et al. [22] proposed a fatigue warning system for crane operators, focusing on the automatic monitoring of worker safety in lifting operations using artificial intelligence technology.

As the literature reviewed above shows, the safety management of construction sites has evolved from traditional techniques to automatic recognition with AI. Our research uses AI image recognition techniques and network communication to construct a safety control system for workers during lifting operations on construction sites, as part of the automation of construction management.

3. Proposed Method

3.1. Application Scenario

During the construction phase of a building project (including concrete pouring, formwork, and rebar assembling), cranes must frequently be employed for material lifting operations. If an object falls from the crane during a lifting operation, it can easily cause an accident. Therefore, our research focuses on the safety control of lifting operations.

In the traditional practice of occupational safety management, the operation supervisor checks visually whether the construction worker has left the lifting area or the worker is approaching or breaking into the control zone, according to the construction regulations. In recent years, closed-circuit television (CCTV) has been widely installed on construction sites to facilitate safety management. However, due to uncertain factors such as short lifting operations, temporary placement, location changes, and so on, CCTV cannot be relocated efficiently. Moreover, CCTV usually does not have dedicated monitoring personnel when it is online, which makes it impossible to monitor effectively.

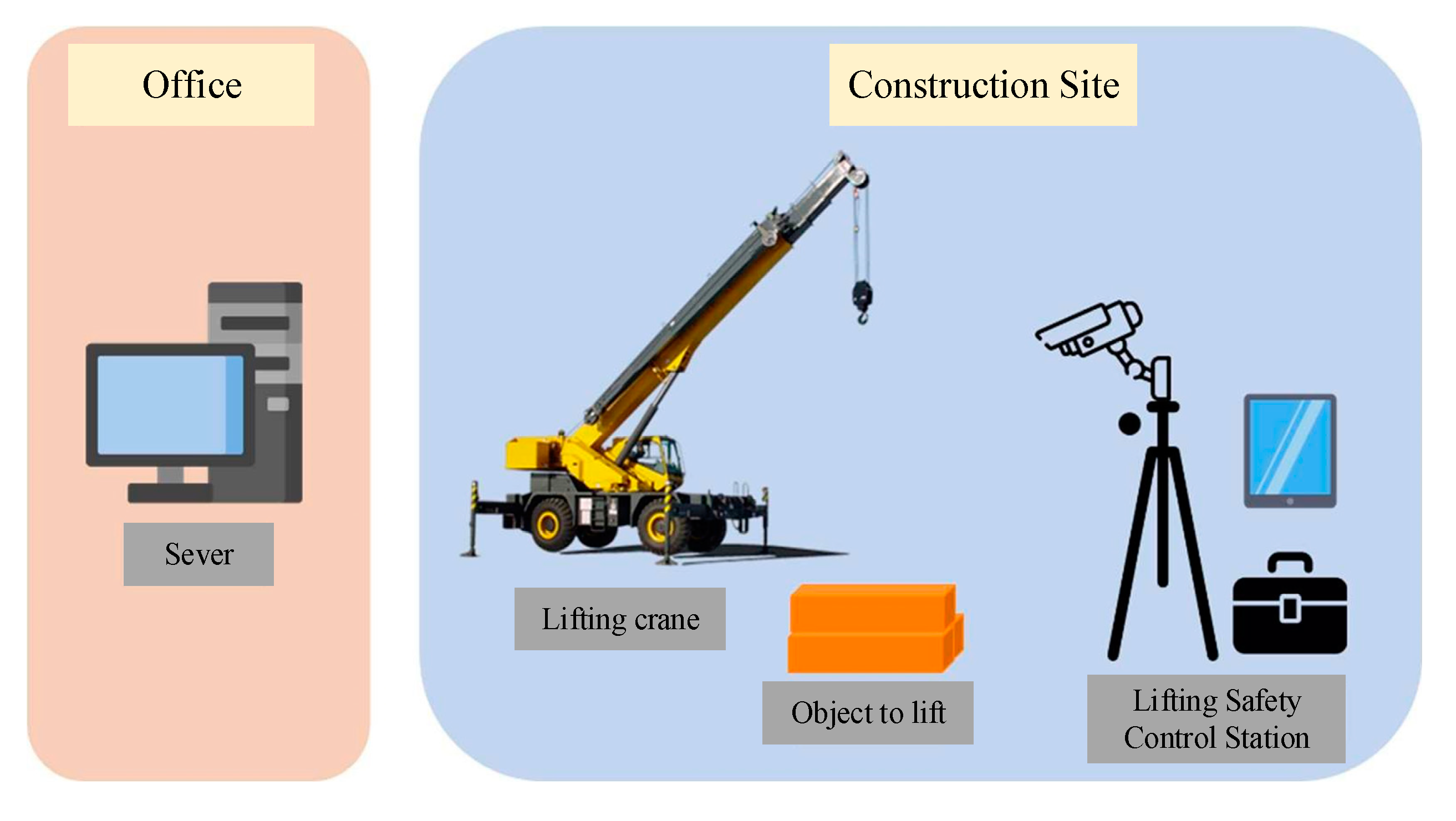

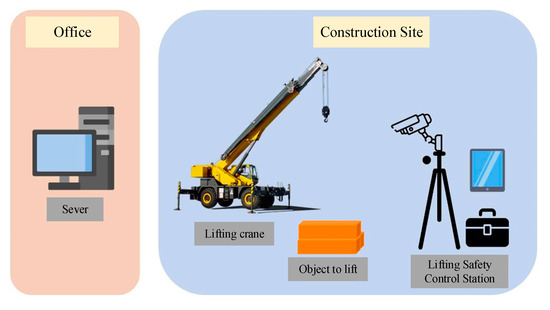

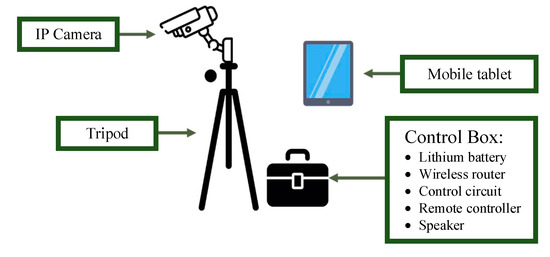

To solve the above problems, we propose a portable lightweight lifting safety control station (PLSCS) to address the safety problems encountered in traditional lifting operations. The application scenario of the proposed method is shown in Figure 1. The framework of the PLSCS is shown in Figure 2.

Figure 1. Application scenario.

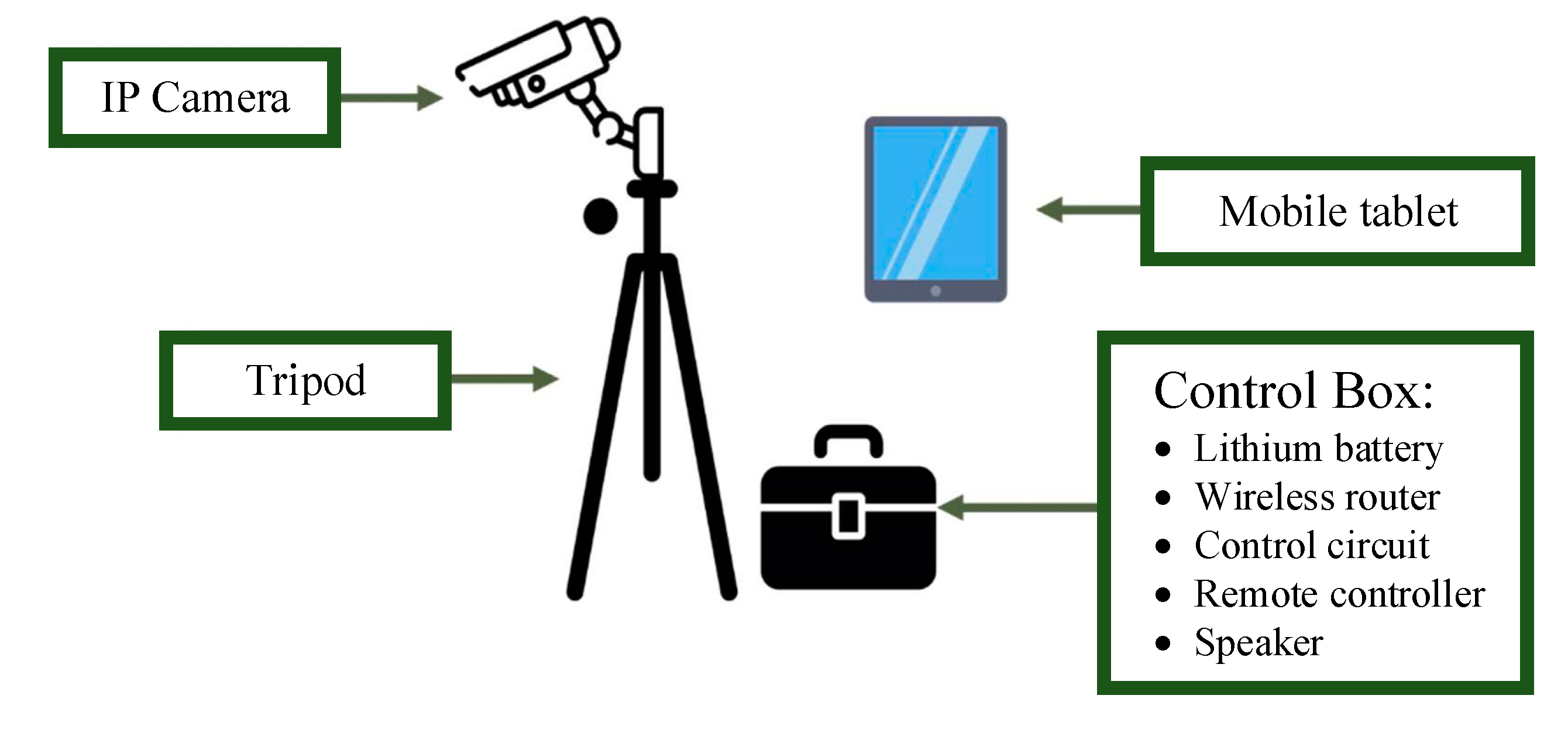

Figure 2. Parts of lightweight lifting safety control station.

3.2. Framework and Flow

In this system, we use YOLO to recognize workers. The technique is applicable in complex construction environments and enables effective early warning for safety control.

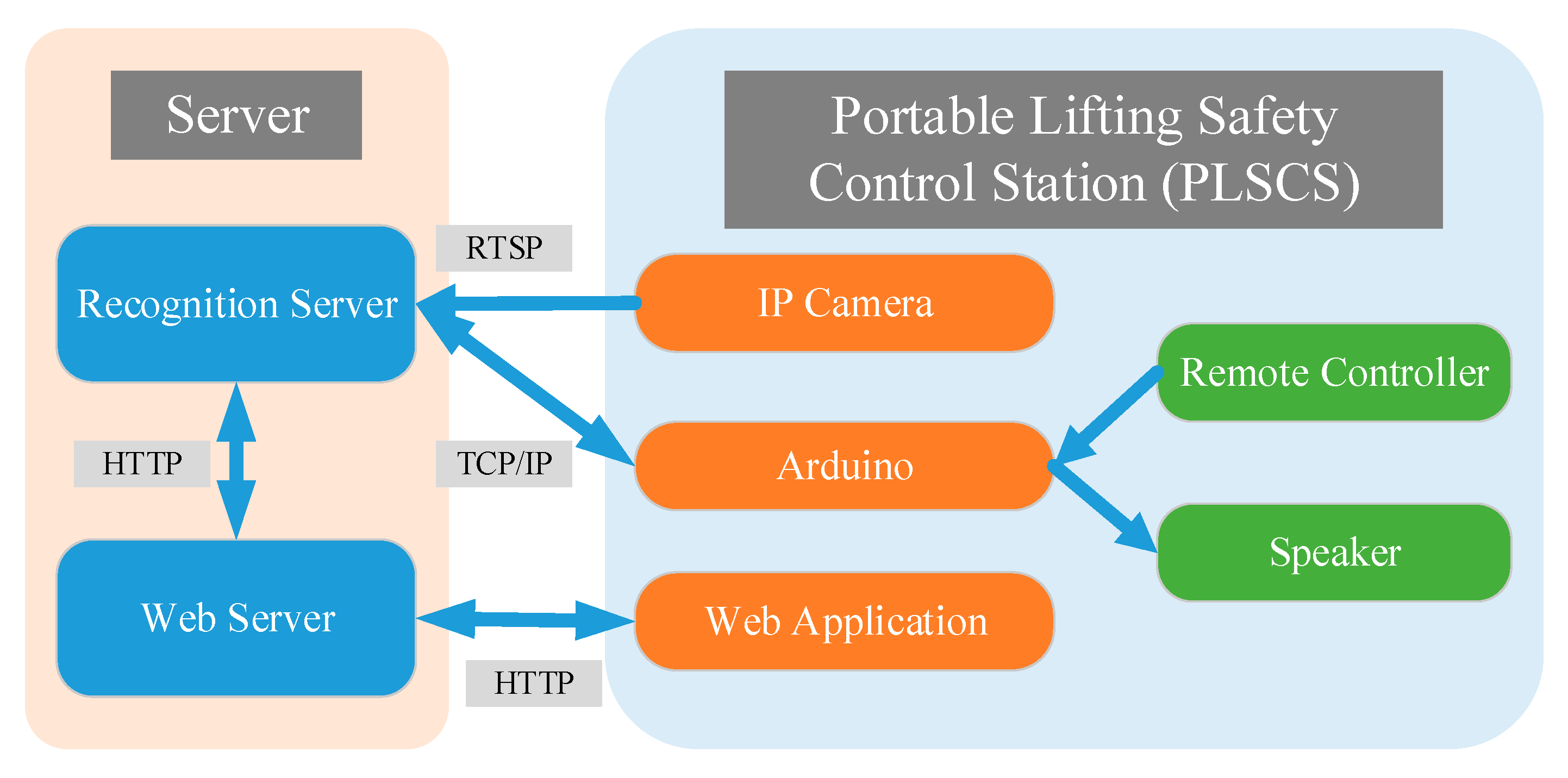

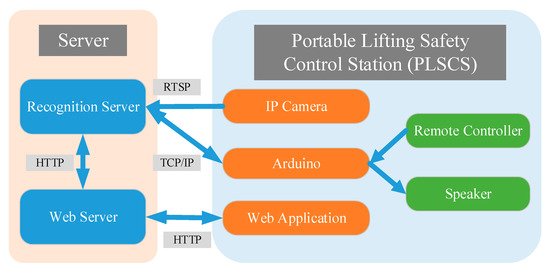

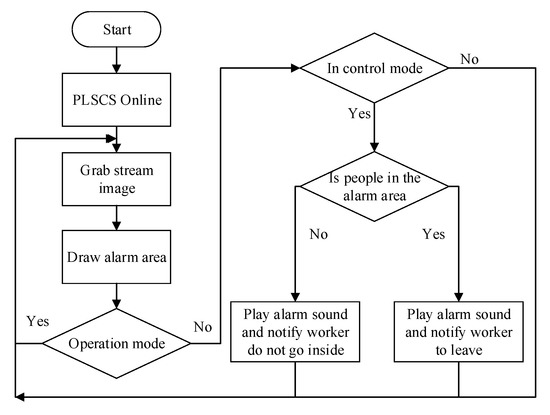

The proposed PLSCS can be deployed quickly and on a large scale. After the PLSCS is deployed, the supervisor only needs to set up the safety control zone (digital fence) according to the environment of the construction site, which addresses the difficulty of supervision during lifting operations. The system provides full-time, real-time control of the safety zone to help ensure the safety of the workers in lifting operations. The proposed system’s software architecture is shown in Figure 3. The flowchart is shown in Figure 4 and is described as follows.

Figure 3. System architecture.

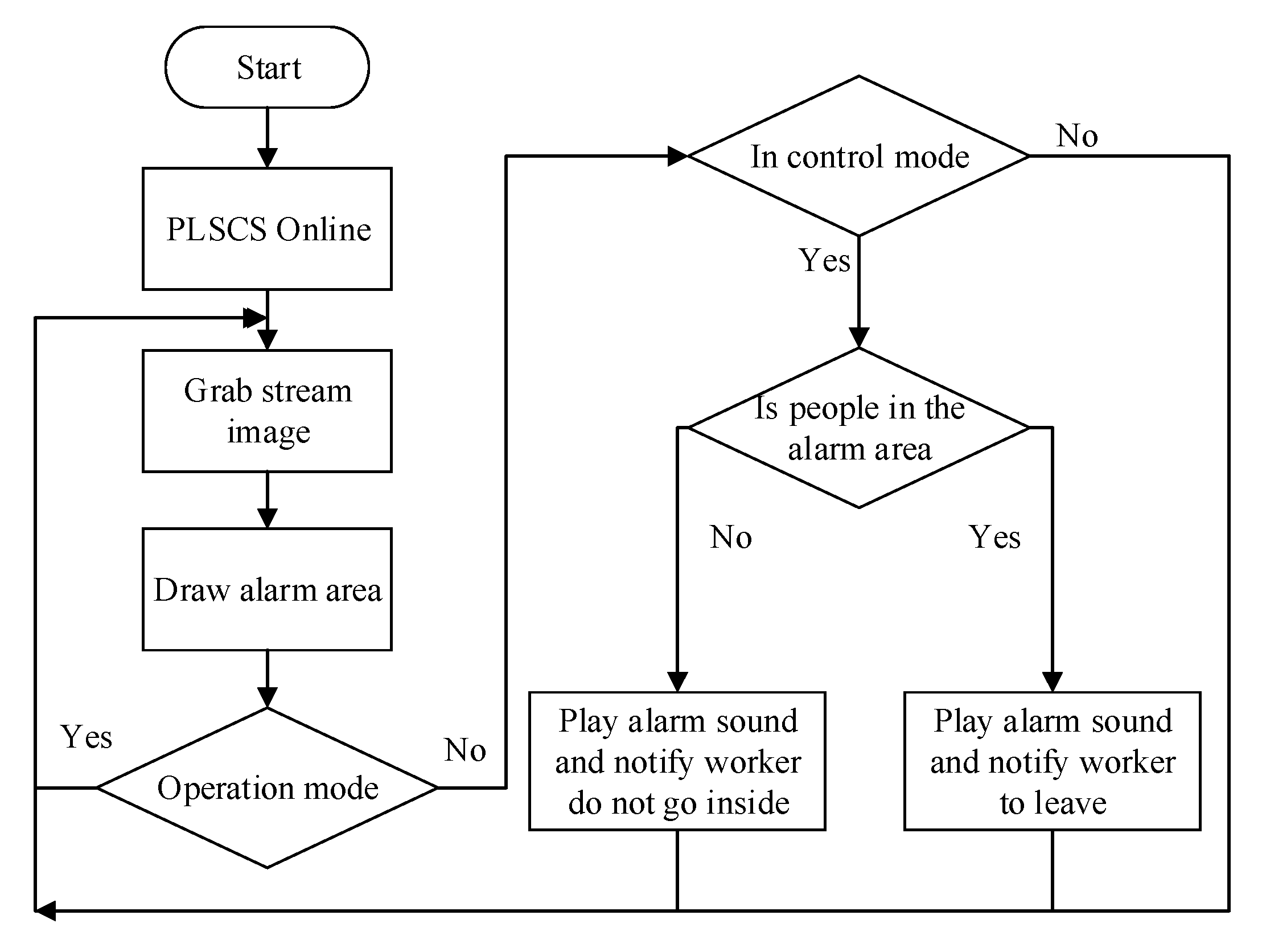

Figure 4. System flowchart.

Firstly, when the PLSCS is powered on, it automatically notifies the server via TCP/IP that it is online: the microcontroller sends the ID of the PLSCS and the online message to the recognition server. Once the PLSCS is online, the server obtains the real-time image from the IP camera. The microcontroller and the IP camera must connect to the same 4G router and use the same network to connect to the outside world. Because both devices sit behind the same router, the recognition server can take the IP address from which the microcontroller’s message arrived and connect to the IP camera via a specified port to obtain real-time images.
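The online notification described above can be sketched as follows. This is only an illustration: the paper does not give the message format or the port number, so the JSON layout, the `CAMERA_PORT` value, and the function names `build_online_message` and `handle_online_message` are all hypothetical.

```python
import json

# Hypothetical value: the paper only says "a specified port".
CAMERA_PORT = 8554


def build_online_message(station_id: str) -> bytes:
    """Encode the 'station online' notice the microcontroller would send."""
    payload = {"station_id": station_id, "event": "online"}
    return (json.dumps(payload) + "\n").encode("utf-8")


def handle_online_message(raw: bytes, peer_ip: str) -> dict:
    """Server side: parse the notice and derive where to reach the camera.

    peer_ip is the source address of the incoming TCP connection, i.e. the
    address of the 4G router shared by the microcontroller and the camera.
    """
    message = json.loads(raw.decode("utf-8"))
    if message.get("event") != "online":
        raise ValueError("unexpected event type")
    return {
        "station_id": message["station_id"],
        "camera_endpoint": (peer_ip, CAMERA_PORT),
    }
```

In a real deployment the bytes would travel over a TCP socket and `peer_ip` would come from the accepted connection; both are left out here so the sketch stays self-contained.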

Afterward, a safety control zone needs to be defined. The on-site operation supervisor uses the real-time image provided by the tablet computer and the server to define the safety control zone. The supervisor draws the area through the web application as a safety control zone for lifting operations.

Then, one of two modes can be set in the system, namely the worker operation mode and the lifting control mode. In the worker operation mode, it is reasonable for workers to enter the safety control zone before the lifting operation. In this mode, the image recognition function is turned off, and the relevant personnel can watch real-time operation images through the Internet. In the lifting control mode, workers are not allowed to enter the safety control zone. The image recognition function is turned on and the automatic safety warning function is started. The alarm sound “Do not enter the lifting area!” plays through the speaker.

When the recognition system finds that a person has intruded into the safety control zone, an alarm is activated, and the information is provided to the safety personnel through the speaker. The on-site supervisor can choose to reset the status to the worker operation mode or the lifting control mode after the alarm is triggered.
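The mode switch and alarm behavior described above could be modeled along the following lines. This is a minimal sketch under stated assumptions: the class and method names are hypothetical, and the choice to keep the alarm latched until the supervisor resets it is an assumption, since the paper only says the supervisor can reset the status after the alarm is triggered.

```python
from enum import Enum


class Mode(Enum):
    WORKER_OPERATION = "worker_operation"  # recognition off, entry allowed
    LIFTING_CONTROL = "lifting_control"    # recognition on, entry forbidden


class StationController:
    """Tracks the current mode and whether the alarm is sounding."""

    def __init__(self) -> None:
        self.mode = Mode.WORKER_OPERATION
        self.alarm_active = False

    def recognition_enabled(self) -> bool:
        return self.mode is Mode.LIFTING_CONTROL

    def on_frame(self, persons_in_zone: int) -> bool:
        """Update the alarm from one frame's intrusion count; return alarm state."""
        if self.recognition_enabled() and persons_in_zone > 0:
            self.alarm_active = True  # assumed to stay on until reset()
        return self.alarm_active

    def reset(self, mode: Mode) -> None:
        """Supervisor reset after an alarm: choose either mode, clear the alarm."""
        self.mode = mode
        self.alarm_active = False
```

In the worker operation mode the intrusion count is ignored, which mirrors the recognition function being turned off in that mode.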

4. System Implementation

The main server’s hardware consists of an Intel Core i5-9400 CPU (2.90 GHz), 8 GB of memory, and an Nvidia GeForce GTX 1650 GPU. The recognition server and the web server of the web application in this system are both hosted on the same machine to reduce the cost.

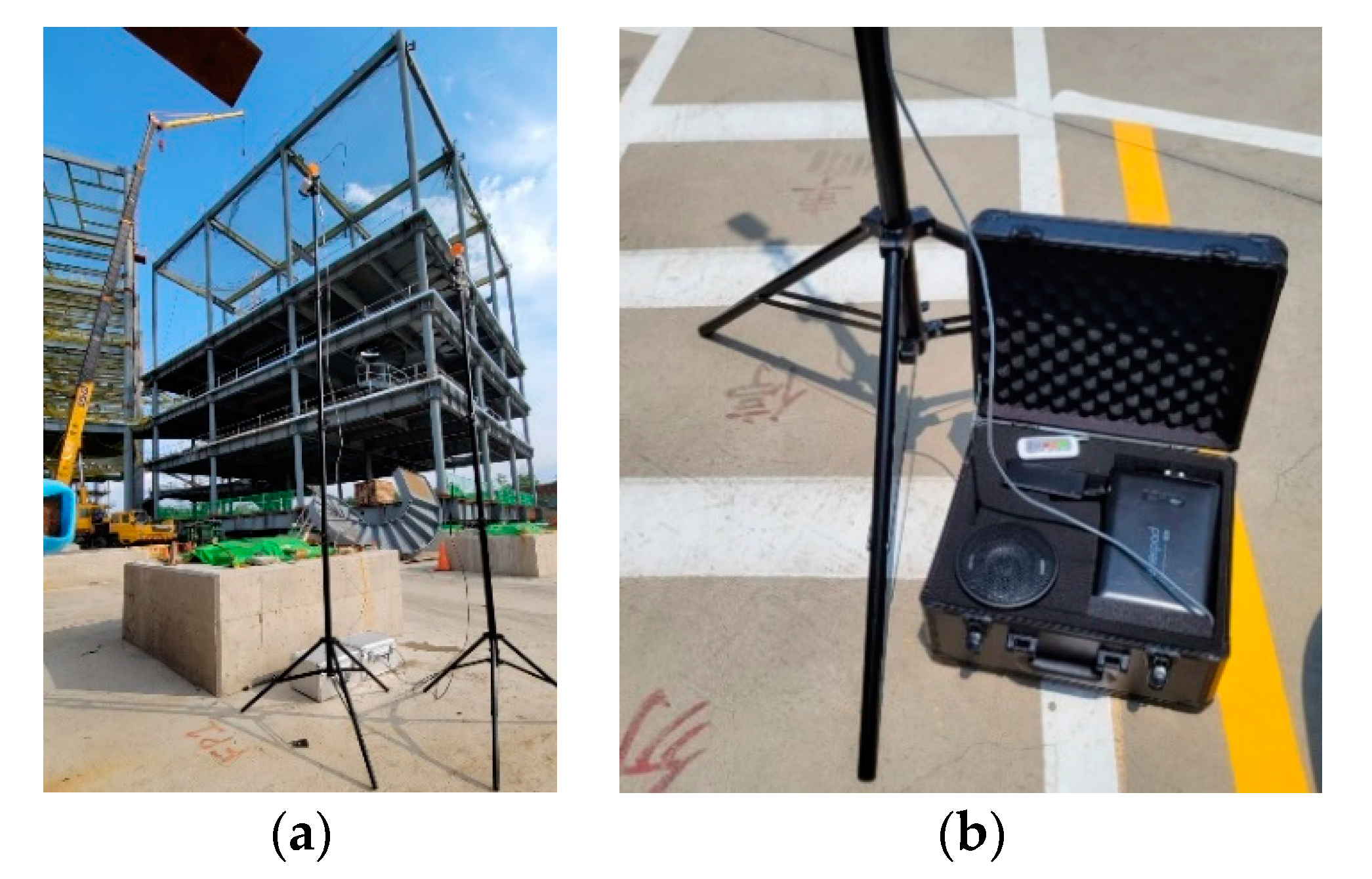

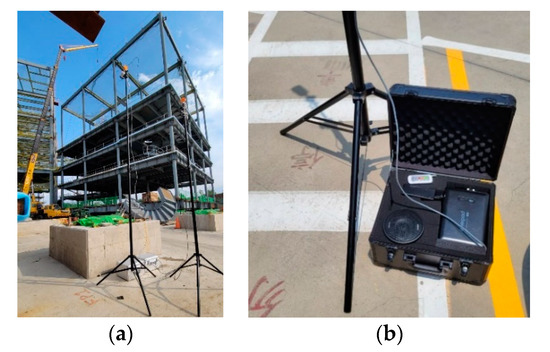

The system was tested and verified at the actual construction site. The on-site implementation of the proposed system is shown in Figure 5. The set of PLSCS hardware equipment included the following.

Figure 5. PLSCS at the construction site: (a) two monitoring cameras; (b) control box.

- An IP camera to obtain real-time images of the lifting worksite.

- A tripod mounted with the IP camera, which can adjust the shooting angle, height, and worksite position.

- A 4G router placed in the PLSCS control box, providing internet access to the IP camera, tablet, and microcontroller.

- A microcontroller responsible for receiving the signal sent by the wireless remote control, sending messages to the recognition server, and providing an MP3 (warning audio) playback function.

- A speaker playing alarm audio to inform workers of the current control status of the site.

- An AC power bank placed in the control box of the PLSCS to supply power to the IP camera, 4G router, and microcontroller.

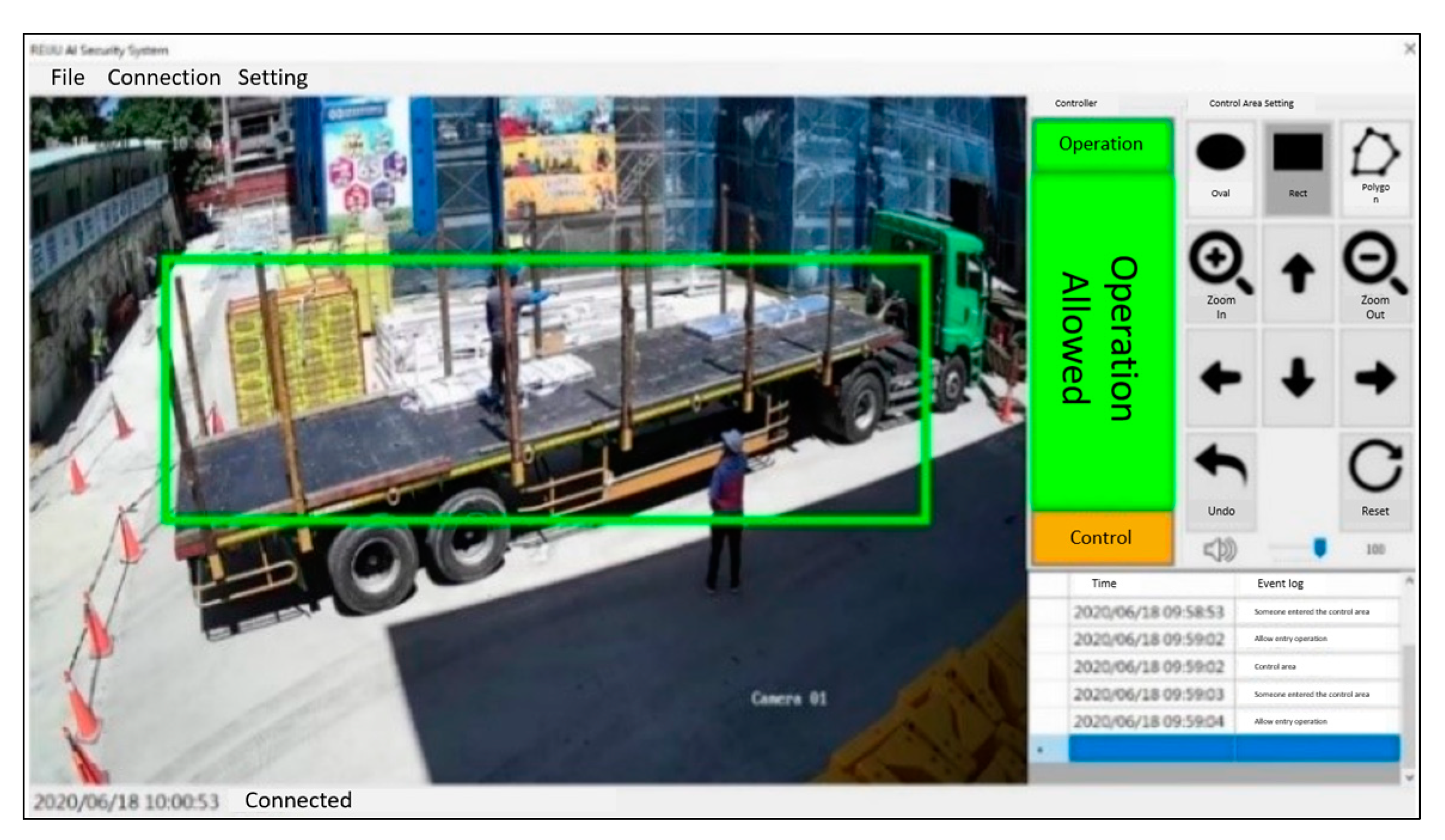

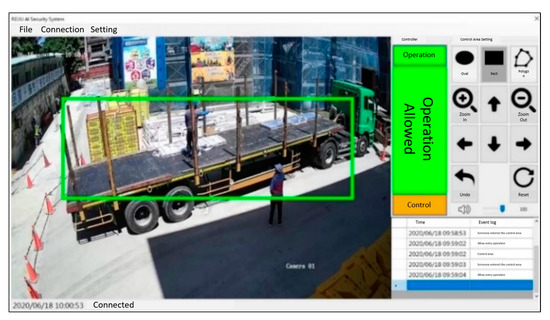

The system application user interface is shown in Figure 6, which includes the following.

Figure 6. User interface of the proposed system.

- An image display area that shows real-time images from the IP cameras, safety control zone, and human recognition results.

- A warning status area that changes and has four phases: an initialize phase, operation phase, monitoring phase, and hazard warning phase.

- A safety control zone setting that includes the area type, size adjustment, and area movement.

- An event log that records hazardous events for the management supervisor to review.
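The four status phases and the event log listed above might be represented as in the sketch below. The paper does not state the conditions under which each phase is shown, so the mapping in `current_phase` (no zone defined yet means initialize, worker operation mode means operation, and so on) is an assumption, as are the field names of the log entries.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import List, Optional


class Phase(Enum):
    INITIALIZE = "initialize"
    OPERATION = "operation"
    MONITORING = "monitoring"
    HAZARD_WARNING = "hazard warning"


def current_phase(zone_defined: bool, lifting_control: bool, intrusion: bool) -> Phase:
    """Assumed mapping from system state to the displayed warning status."""
    if not zone_defined:
        return Phase.INITIALIZE
    if not lifting_control:
        return Phase.OPERATION
    return Phase.HAZARD_WARNING if intrusion else Phase.MONITORING


@dataclass
class EventLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, station_id: str, persons_in_zone: int,
               when: Optional[datetime] = None) -> dict:
        """Append one hazardous event for the supervisor to review later."""
        entry = {
            "time": (when or datetime.now(timezone.utc)).isoformat(),
            "station_id": station_id,
            "persons_in_zone": persons_in_zone,
        }
        self.entries.append(entry)
        return entry
```

A caller would typically record an entry whenever the phase changes to `Phase.HAZARD_WARNING`.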

The proposed system applies YOLO v3 for human recognition and adopts the latest pretrained model provided by YOLO [17]. The model can detect 80 types of objects, but we use only the “Person” detections from the YOLO output. Since the lifting operation site is a large area, the camera needs to be elevated and angled slightly downward to capture the whole operation area. However, increasing the height and viewing angle reduces the recognition accuracy, since human features appear less clearly in the image. Therefore, it is recommended to set up the camera at a height of less than 4 m and a view angle of less than 60° to balance the recognition results against the shooting range. In addition, when the confidence threshold was set to 0.6 in the preliminary tests, we found that traffic cones and canvas were misjudged as humans. The confidence threshold was therefore set to 0.7 to achieve a balance.
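The filtering step described above, keeping only “Person” detections at or above the 0.7 confidence threshold, can be illustrated with a short sketch. The `Detection` record and the `keep_persons` function are hypothetical names, the detector itself is not included, and the scores in the usage note are invented for illustration.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    label: str                      # class name reported by the detector
    confidence: float               # detector score in [0, 1]
    box: Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def keep_persons(detections: List[Detection], threshold: float = 0.7) -> List[Detection]:
    """Keep only 'person' detections at or above the confidence threshold."""
    return [d for d in detections
            if d.label == "person" and d.confidence >= threshold]
```

For example, if a cone were reported as a person with a score of 0.64, it would pass a threshold of 0.6 but be discarded at 0.7, while a worker detected at 0.91 would be kept in both cases.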

Regarding the setting of the safety control zone, the virtual digital fence marks the safety control zone for lifting operations: while the crane is operating, people are prohibited from going under or passing by the crane and the lifted objects. The safety control zone settings consist of drawing, moving, and zooming operations. The user must first select the drawing shape (rectangle, ellipse, or irregular shape) before drawing the safety control zone. Next, the user clicks on the real-time image in the web application to set the coordinates of the shape. After the shape is set, the user can move or zoom it to fine-tune the drawn area. A simple example of the safety control zone setting and human recognition results is shown in Figure 7; a person is marked in red when inside the safety control zone and in green when outside it.

Figure 7. Schematic diagram of human safety recognition.
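The zone check behind the red and green markings can be sketched as a set of point-in-shape tests. The paper does not say which point of a detected person is compared with the zone; the sketch assumes the bottom-center of the bounding box (roughly the feet), treats an irregular shape as a polygon, and uses hypothetical function names throughout.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def in_rectangle(p: Point, left: float, top: float, width: float, height: float) -> bool:
    return left <= p[0] <= left + width and top <= p[1] <= top + height


def in_ellipse(p: Point, cx: float, cy: float, rx: float, ry: float) -> bool:
    return ((p[0] - cx) / rx) ** 2 + ((p[1] - cy) / ry) ** 2 <= 1.0


def in_polygon(p: Point, vertices: List[Point]) -> bool:
    """Ray casting: count crossings of a horizontal ray from p to the right."""
    x, y = p
    inside = False
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def foot_point(box: Tuple[float, float, float, float]) -> Point:
    """Bottom-center of an (x, y, width, height) box; an assumed reference point."""
    x, y, w, h = box
    return (x + w / 2, y + h)


def marker_color(is_inside: bool) -> str:
    return "red" if is_inside else "green"


def move(vertices: List[Point], dx: float, dy: float) -> List[Point]:
    """Shift a polygon zone by (dx, dy)."""
    return [(x + dx, y + dy) for x, y in vertices]


def zoom(vertices: List[Point], factor: float) -> List[Point]:
    """Scale a polygon zone about the mean of its vertices."""
    cx = sum(x for x, _ in vertices) / len(vertices)
    cy = sum(y for _, y in vertices) / len(vertices)
    return [(cx + (x - cx) * factor, cy + (y - cy) * factor) for x, y in vertices]
```

Using the foot point rather than the box center is a design choice for a camera looking down at an angle, where a worker's head can overlap the zone in the image while the worker stands outside it on the ground.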

5. Conclusions

We present the results of collaborative research between the construction industry and the university, proposing a portable lightweight lifting safety control station (PLSCS). The proposed PLSCS integrates servers, tablets, cameras, and edge-computing equipment into a real-time safety warning system for lifting operations on construction sites. The system uses YOLO v3 for human detection, and the alert area is set with the concept of a virtual digital fence to detect whether any worker breaks into the safety control zone during lifting operations. The system is triggered by a hazardous situation, i.e., when a person enters the safety control zone, and plays an alarm to warn the on-site laborers. Moreover, the system also sends a message through the communication application to inform the relevant management supervisor. Therefore, the field operation and occupational safety management supervisors can monitor multiple construction sites remotely, which reduces the on-site personnel requirements and helps to decrease the occurrence of accidents. As a result, the proposed system helps to ensure the safety of workers in the construction project environment.

Author Contributions

Conceptualization, H.-C.L. and H.-K.C.; methodology, software, validation, L.-M.L. and Z.-Y.L.; investigation, H.-K.C.; writing—original draft preparation, L.-M.L. and H.-K.C.; writing—review and editing, Z.-Y.L.; supervision, H.-C.L.; project administration, W.-D.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the National Science and Technology Council under No. MOST 111-2221-E-324-011-MY.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are unavailable due to privacy or ethical restrictions.

Acknowledgments

We acknowledge the actual testing site provided by Reiju Construction Co., Ltd. During the testing, the company’s Occupational Safety and Health Center and Taichung Green Museumbrary Public Works Office assisted us so that this research could be completed successfully.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Occupational Safety and Health Administration of Ministry of Labor (Taiwan). 2019 Labor Annual Report. Available online: https://www.osha.gov.tw/1106/1164/1165/1168/29804/ (accessed on 5 November 2021).

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach; Prentice Hall: New York, NY, USA, 2010.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Yu, W.-D.; Liao, H.-C.; Hsiao, W.-T.; Chang, H.-K.; Wu, T.-Y.; Lin, C.-C. Application of The Hybrid Machine Learning Techniques for Real-Time Identification of Worker’s Personal Safety Protection Equipment. J. Technol. 2020, 35, 155–165.

- Ye, Y.-G. Application of Event Tree Analysis in Occupational Safety Risk Assessment. Master’s Thesis, Graduate Institute of Environmental Engineering, National Central University, Taoyuan City, Taiwan, 2009.

- Chang, R.-N. Training of Potential Hazard Identification on Construction Site Using Virtual Reality. Master’s Thesis, Department of Civil Engineering, National Yang Ming Chiao Tung University, Taipei, Taiwan, 2011.

- Brilakis, I.; Park, M.-W.; Jog, G. Automated Vision Tracking of Project Related Entities. Adv. Eng. Inform. 2011, 25, 713–724.

- Park, M.-W.; Brilakis, I. Construction Worker Detection in Video Frames for Initializing Vision Trackers. Autom. Constr. 2012, 28, 15–25.

- Gong, J.; Caldas, C.H. Data Processing for Real-Time Construction Site Spatial Modeling. Autom. Constr. 2008, 17, 526–535.

- Ding, L.; Fang, W.; Luo, H.; Love, P.E.D.; Zhong, B.; Ouyang, X. A Deep Hybrid Learning Model to Detect Unsafe Behavior: Integrating Convolution Neural Networks and Long Short-Term Memory. Autom. Constr. 2018, 86, 118–124.

- Fang, W.; Ding, L.; Luo, H.; Love, P.E.D. Falls from Heights: A Computer Vision-Based Approach for Safety Harness Detection. Autom. Constr. 2018, 91, 53–61.

- Liao, H.-C.; Yu, W.-D.; Hsiao, W.-T.; Chang, H.-K.; Tsai, C.-K.; Lin, C.-C. Application of Image Semantic Segmentation Using the Deep Learning Technique in Monitoring the Fall Risk of Construction Workers in A Building Elevator Shaft. J. Technol. 2021, 36, 1–12.

- Szegedy, C.; Toshev, A.; Erhan, D. Deep Neural Networks for Object Detection. In Proceedings of the 26th Advances in Neural Information Processing Systems (NIPS 2013), Lake Tahoe, Nevada, 5–10 December 2013.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-Based Convolutional Networks for Accurate Object Detection and Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Price, L.C.; Chen, J.; Park, J.; Cho, Y.K. Multisensor-Driven Real-Time Crane Monitoring System for Blind Lift Operations: Lessons Learned from a Case Study. Autom. Constr. 2021, 124, 103552.

- Price, L.C.; Chen, J.; Cho, Y.K. Dynamic Crane Workspace Update for Collision Avoidance During Blind Lift Operations. In Proceedings of the 18th International Conference on Computing in Civil and Building Engineering, São Paulo, Brazil, 18–20 August 2020; pp. 959–970.

- Fang, Y.; Cho, Y.K.; Chen, J. A Framework for Real-Time Pro-active Safety Assistance for Mobile Crane Lifting Operations. Autom. Constr. 2016, 72, 367–379.

- Weifu, P.; Shu, D.; Shaolei, C.; Qing, Z.; Na, T. Research on The Application of Target Detection Based on Deep Learning Technology in Power Grid Operation Inspection. E3S Web Conf. 2021, 236, 01035.

- Liu, P.; Chi, H.-L.; Li, X.; Li, D. Development of a Fatigue Detection and Early Warning System for Crane Operators: A Preliminary Study. In Proceedings of the Construction Research Congress, Tempe, Arizona, 8–10 March 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).