Abstract

The use of reinforcement learning for solving the optimal control problem is considered. To solve the optimal control problem, an evolutionary algorithm is used that finds controls moving a control object along different trajectories with approximately the same values of the quality criterion. Additional conditions requiring the trajectory to pass through the neighbourhoods of given areas of the state space are included in the quality criterion. To build a system that stabilizes the motion of the object along a given trajectory, machine learning control by symbolic regression is used. An example of solving the optimal control problem for a quadcopter is given.

1. Introduction

The optimal control problem with phase constraints often has a multimodal functional. Therefore, when it is solved numerically by a direct approach, one can obtain several control functions that move the object along different trajectories in the state space with approximately the same value of the control quality criterion, close to the optimal one.

Solving the optimal control problem numerically involves several difficulties. In most optimal control problems, at least two criteria must be minimized rather than one: the control goal must be reached, i.e., the error of reaching the terminal state must be minimized, and the given quality criterion must be minimized as well. Combining the criteria with weight coefficients does not significantly simplify the problem, since the weights themselves must then be chosen.

Another difficulty for the search is that the functional loses unimodality on the space of parameters of the approximating function. Even a piecewise linear approximation of the control function, in which only one parameter per interval has to be found for each control component, does not guarantee that the goal functional has a single minimum on the parameter space.

The problem becomes even more complicated in the presence of phase constraints, which describe areas of the state space forbidden for the optimal trajectory. Most likely for these reasons, and despite numerous attempts [1,2], no universal computational method for the optimal control problem has yet been created.

Further studies have shown that if a strictly optimal solution is not required and solutions close to the optimal one are satisfactory, evolutionary algorithms can be successfully applied to optimal control problems [3].

In practice, the researcher sometimes knows how the object should move along the optimal trajectory, i.e., approximately knows the areas of the state space through which the optimal trajectory should pass. If requirements to pass through these areas are added to the quality criterion, the evolutionary algorithm shifts its search region and looks for a solution that satisfies the additional requirements. This approach is particularly effective with evolutionary algorithms. Owing to the inheritance property, the criterion value improves from generation to generation through small evolutionary transformations of the possible solutions of the previous generation. Therefore, if at some generation one of the possible solutions passes through the areas specified by the researcher, the evolutionary algorithm will, with high probability, continue to search for an optimal solution that preserves this property. A similar technique is used in reinforcement learning [4,5], where the researcher rewards the object for correct actions by changing the value of the target functional. Reinforcement learning is currently widely used to solve practical control problems [6]. This paper presents a formal description of reinforcement learning for the optimal control problem and its practical application.

2. The Optimal Control Problem and Reinforcement Learning

Consider a formal statement of the optimal control problem.

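The mathematical model of a control object is given in the Cauchy form of an ordinary differential equation system

$$\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}, \mathbf{u}), \tag{1}$$

where $\mathbf{x}$ is a state space vector, $\mathbf{u}$ is a control vector, $\mathbf{x} \in \mathbb{R}^n$, $\mathbf{u} \in U \subseteq \mathbb{R}^m$, and $U$ is a compact set.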

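The initial state is given by

$$\mathbf{x}(0) = \mathbf{x}^0. \tag{2}$$

The terminal state is given by

$$\mathbf{x}(t_f) = \mathbf{x}^f, \tag{3}$$

where $t_f$ is the time to reach the terminal state (3). The time $t_f$ is not given, but it is limited: $t_f \le t^+$, where $t^+$ is a given positive value.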
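In a direct approach, every candidate control has to be evaluated by integrating the model from the initial state until the terminal state is reached or the time limit expires. A minimal sketch, assuming a fixed-step fourth-order Runge-Kutta integrator, a tolerance `eps` for reaching the terminal state, and an integral-type running cost `f0` approximated by the rectangle rule; the names `rk4_step`, `simulate`, `dt` and `eps` are hypothetical and not taken from the paper.

```python
import math
from typing import Callable, List, Sequence, Tuple

State = List[float]
Dynamics = Callable[[Sequence[float], Sequence[float]], List[float]]


def rk4_step(f: Dynamics, x: Sequence[float], u: Sequence[float], dt: float) -> State:
    """One classical Runge-Kutta step for dx/dt = f(x, u), u held constant over the step."""
    k1 = f(x, u)
    k2 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k1)], u)
    k3 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k2)], u)
    k4 = f([xi + dt * ki for xi, ki in zip(x, k3)], u)
    return [xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]


def simulate(f: Dynamics,
             f0: Callable[[Sequence[float], Sequence[float]], float],
             control: Callable[[float, Sequence[float]], List[float]],
             x0: Sequence[float], xf: Sequence[float], t_plus: float,
             dt: float = 0.01, eps: float = 1e-2) -> Tuple[float, State, float, List[State]]:
    """Integrate from x0 until ||x - xf|| <= eps or t >= t_plus (hypothetical helper).

    Returns (t_f, x(t_f), accumulated running cost J0, sampled trajectory)."""
    t, x, j0 = 0.0, list(x0), 0.0
    traj = [list(x)]
    while t < t_plus and math.dist(x, xf) > eps:
        u = control(t, x)
        j0 += f0(x, u) * dt  # rectangle rule for the integral of the running cost
        x = rk4_step(f, x, u, dt)
        t += dt
        traj.append(list(x))
    return t, x, j0, traj


# Toy check: x' = u with u = 1 and f0 = 1, so J0 approximates the reaching time t_f
t_f, x_end, j0, traj = simulate(lambda x, u: [u[0]], lambda x, u: 1.0,
                                lambda t, x: [1.0], [0.0], [1.0], t_plus=5.0)
```

Stopping on a tolerance rather than on exact equality reflects that a numerical trajectory only approaches the terminal state; the residual error is what the direct approach later adds to the criterion.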

The quality criterion is given by

$$J_0 = \int_0^{t_f} f_0(\mathbf{x}(t), \mathbf{u}(t))\,dt \to \min_{\mathbf{u} \in U}. \tag{4}$$

Assume that the researcher knows the areas of the state space through which the optimal trajectory should pass. Then additional conditions are included in the quality criterion

where

p is a penalty coefficient, and is a Heaviside step function

, are given small positive values, and , are the centres of known areas.

According to these additional conditions, if a trajectory does not pass near one of the given centres, the value of criterion (5) increases.
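The reward mechanism can be sketched on a sampled trajectory. This is a minimal sketch assuming the added term has the form $p\sum_i \vartheta\left(\min_t \lVert \mathbf{x}(t) - \mathbf{y}^i \rVert - \varepsilon_i\right)$, i.e., a penalty $p$ for every area the trajectory never enters; this exact form, the symbols and the `coords` parameter (which state components the areas live in) are assumptions.

```python
import math
from typing import Sequence


def heaviside(a: float) -> float:
    """Heaviside step function: 1 for a > 0, otherwise 0."""
    return 1.0 if a > 0.0 else 0.0


def area_penalty(traj: Sequence[Sequence[float]],
                 centres: Sequence[Sequence[float]],
                 eps: Sequence[float],
                 p: float,
                 coords: Sequence[int]) -> float:
    """Hypothetical penalty: p for every desired area whose centre the trajectory
    never approaches closer than eps_i. `coords` selects the state components in
    which the areas are defined (e.g. horizontal-plane coordinates)."""
    total = 0.0
    for y, e in zip(centres, eps):
        # smallest distance from the sampled trajectory to the centre of this area
        d_min = min(math.dist([x[k] for k in coords], y) for x in traj)
        total += heaviside(d_min - e)
    return p * total


traj = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]]
print(area_penalty(traj, [[1.0, 0.05], [1.0, 1.0]], [0.1, 0.1], p=10.0, coords=(0, 1)))  # prints 10.0
```

The trajectory passes within 0.05 of the first centre, so only the second, unvisited area is penalized; a candidate that visits every area is "rewarded" by losing the whole penalty.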

3. Computation Experiment

Consider the optimal control problem for the spatial movement of a quadcopter. The mathematical model of the control object is

where .

The control is constrained by

where , , , , , , and .

The initial state is given by

The terminal state is given by

The phase constraints are given by

where , , , , , .
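Since the phase constraints appear as circles in the horizontal-plane projections, their violation can be measured along a sampled trajectory. A minimal sketch, assuming each constraint has the form $\varphi_i(\mathbf{x}) = r_i - \sqrt{(x_a - c_{x,i})^2 + (x_b - c_{y,i})^2} \le 0$ and that its violation is penalized through the time spent inside the circle, $\int_0^{t_f}\sum_i \vartheta(\varphi_i(\mathbf{x}))\,dt$; the function name, the obstacle tuple layout and the horizontal-coordinate indices `coords` are hypothetical.

```python
import math
from typing import Sequence, Tuple


def phase_violation_time(traj: Sequence[Sequence[float]],
                         obstacles: Sequence[Tuple[float, float, float]],
                         dt: float,
                         coords: Tuple[int, int] = (0, 1)) -> float:
    """Approximate the time integral of sum_i theta(phi_i(x(t))) over a trajectory
    sampled with step dt; phi_i = r_i - horizontal distance to the centre of circle i."""
    total = 0.0
    for x in traj:
        point = (x[coords[0]], x[coords[1]])  # projection onto the horizontal plane
        for cx, cy, r in obstacles:
            if r - math.dist(point, (cx, cy)) > 0.0:  # strictly inside circle i
                total += dt
    return total


# Three samples (third component ignored), one circular obstacle of radius 1 at the origin
violation = phase_violation_time([[0.0, 0.0, 5.0], [0.5, 0.0, 5.0], [3.0, 0.0, 5.0]],
                                 [(0.0, 0.0, 1.0)], dt=0.1)
```

Measuring time inside the forbidden area, rather than a single yes/no flag, gives the evolutionary search a graded signal that decreases as a candidate trajectory is pushed out of the obstacle.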

It is necessary to find a control function that satisfies constraints (9) and minimizes the following criterion

where , .

To solve the problem numerically, a piecewise linear approximation of the control is used. The time axis is divided into equal intervals , and constant parameters are searched for at the interval boundaries for each control component. The control is thus a piecewise linear function consisting of segments that connect the points at the interval boundaries. Taking the control constraints into account, the desired control function is as follows

where

and K is the number of time interval boundaries.
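The approximation described above can be sketched as follows, assuming the usual construction: linear interpolation between the boundary parameters of each control component, followed by saturation to the admissible bounds. The parameter layout `q[i][j]` (component i, boundary j), the name `delta_t` for the interval length and the choice to hold the last value after the final boundary are assumptions.

```python
from typing import List, Sequence


def piecewise_linear_control(t: float,
                             q: Sequence[Sequence[float]],
                             delta_t: float,
                             u_min: Sequence[float],
                             u_max: Sequence[float]) -> List[float]:
    """Control at time t from boundary parameters (hypothetical layout).

    q[i][j] is the value of control component i at time j * delta_t, j = 0..K-1.
    Values are interpolated linearly between boundaries and then clipped to
    [u_min[i], u_max[i]]; after the last boundary the last value is held."""
    u = []
    for qi, lo, hi in zip(q, u_min, u_max):
        K = len(qi)  # number of interval boundaries (K >= 2)
        j = min(int(t // delta_t), K - 2)  # index of the interval containing t
        s = min((t - j * delta_t) / delta_t, 1.0)  # relative position inside it, capped at 1
        raw = qi[j] + (qi[j + 1] - qi[j]) * s
        u.append(min(max(raw, lo), hi))  # enforce the control constraints
    return u


# One control component, three boundaries, bounds [-5, 5]
q = [[0.0, 10.0, -10.0]]
samples = [piecewise_linear_control(t, q, 1.0, [-5.0], [5.0]) for t in (0.25, 0.9, 1.5, 5.0)]
```

With m control components and K boundaries, the search space for the evolutionary algorithm is the flat vector of m·K values in `q`; the clipping keeps every candidate admissible, so the constraints never have to be penalized separately.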

When the problem is solved by a direct approach, the condition of reaching the terminal state is included in the quality criterion

where ,
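The difficulty of choosing weights, noted in the Introduction, shows up as soon as the terminal condition is folded into the criterion. A toy sketch, assuming the terminal error enters as a weighted Euclidean norm $a\lVert\mathbf{x}(t_f)-\mathbf{x}^f\rVert$; the weight values and the two candidate solutions are hypothetical numbers chosen only to illustrate the effect.

```python
import math
from typing import Dict, List, Sequence, Tuple


def direct_criterion(j0: float, x_end: Sequence[float], xf: Sequence[float], a: float) -> float:
    """Quality criterion plus weighted error of reaching the terminal state (assumed form)."""
    return j0 + a * math.dist(x_end, xf)


xf = [0.0, 0.0]
# Hypothetical candidates: (value of the quality criterion, state actually reached)
candidates: Dict[str, Tuple[float, List[float]]] = {
    "fast_inaccurate": (5.0, [0.5, 0.0]),
    "slow_accurate": (7.0, [0.05, 0.0]),
}


def best_candidate(a: float) -> str:
    """Name of the candidate with the smallest scalarized criterion for weight a."""
    scores = {name: direct_criterion(j0, x_end, xf, a) for name, (j0, x_end) in candidates.items()}
    return min(scores, key=scores.get)


print(best_candidate(1.0))    # prints fast_inaccurate
print(best_candidate(100.0))  # prints slow_accurate
```

The ranking flips with the weight: a small weight favours a cheap but inaccurate solution, a large one favours reaching the terminal state, so the weight itself becomes a design decision.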

To solve the problem, a hybrid evolutionary algorithm [7] is used.
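The hybrid evolutionary algorithm of [7] is not specified in this text. As a generic stand-in only, the sketch below uses classical differential evolution (DE/rand/1/bin), which shows the inheritance mechanism described in the Introduction: each trial vector is built from members of the current population, and greedy selection keeps it only if the criterion does not get worse. In the quadcopter problem the fitness would be the criterion evaluated for the parameter vector of the piecewise linear control; here a sphere function stands in for it. All names and settings (`pop_size`, `F`, `CR`, `seed`) are assumptions.

```python
import random
from typing import Callable, List, Sequence, Tuple


def differential_evolution(fitness: Callable[[List[float]], float],
                           lower: Sequence[float],
                           upper: Sequence[float],
                           pop_size: int = 30,
                           generations: int = 200,
                           F: float = 0.7,   # differential weight (mutation scale)
                           CR: float = 0.9,  # crossover probability
                           seed: int = 0) -> Tuple[List[float], float]:
    """Minimize `fitness` over the box [lower, upper] with DE/rand/1/bin."""
    rng = random.Random(seed)
    dim = len(lower)
    pop = [[rng.uniform(lower[k], upper[k]) for k in range(dim)] for _ in range(pop_size)]
    scores = [fitness(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # three distinct donors, all different from the target vector i
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # at least one component comes from the mutant
            trial = []
            for k in range(dim):
                if rng.random() < CR or k == j_rand:
                    v = pop[a][k] + F * (pop[b][k] - pop[c][k])
                    v = min(max(v, lower[k]), upper[k])  # keep parameters inside the bounds
                else:
                    v = pop[i][k]  # inherited unchanged from the target vector
                trial.append(v)
            s = fitness(trial)
            if s <= scores[i]:  # greedy selection: the child replaces its parent only if not worse
                pop[i], scores[i] = trial, s
    best = min(range(pop_size), key=lambda j: scores[j])
    return pop[best], scores[best]


# Stand-in criterion (sphere function) instead of the quadcopter criterion
q_best, j_best = differential_evolution(lambda q: sum(v * v for v in q), [-5.0] * 3, [5.0] * 3)
```

Because a child survives only if it is at least as good as its parent, a property such as "passes through the desired areas", once it lowers the criterion, tends to be carried into later generations.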

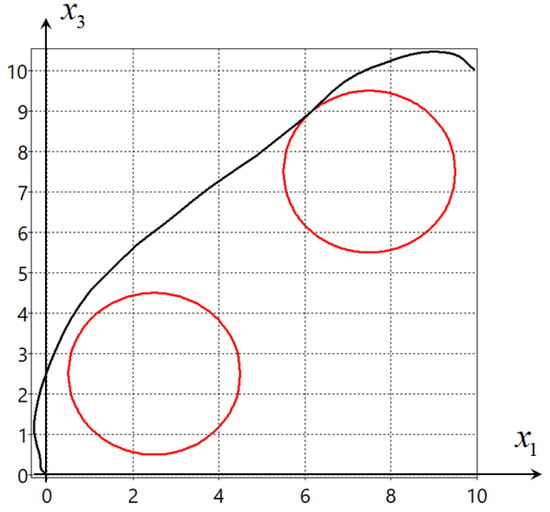

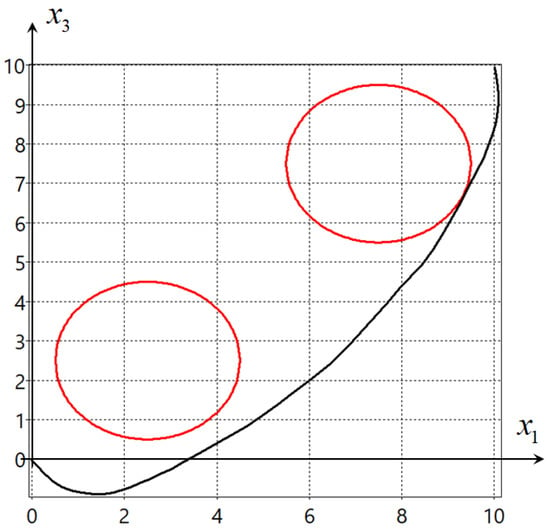

Figures 1 and 2 show the projections onto the horizontal plane of the two optimal trajectories found. The large circles represent the phase constraints (12).

Figure 1.

Projection of optimal trajectory 1 on the horizontal plane.

Figure 2.

Projection of optimal trajectory 2 on the horizontal plane.

The criterion for the solutions found had the following values: for the solution in Figure 1 , for the solution in Figure 2 .

As the experiment shows, the criterion values practically coincide, and both solutions move the object from the given initial state (10) to the given terminal state (11) without violating the phase constraints. In a series of experiments, the hybrid evolutionary algorithm found solutions that bypass the phase constraints either from above, as in Figure 1, or from below, as in Figure 2.

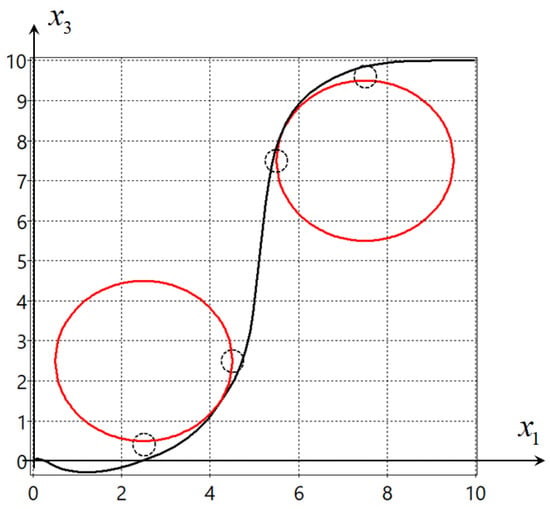

Suppose that the control object must move between the obstacles. For this purpose, desired areas are defined on the horizontal plane. It is known that, in the presence of obstructing phase constraints, the optimal trajectory should lie close to the boundaries of these constraints. For the given problem, four desired areas are defined as

The conditions for passing through the desired areas (19) are included in the quality criterion

where .

The hybrid evolutionary algorithm found the following optimal solution , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , .

Figure 3 shows the projection of the optimal trajectory found for the solution with quality criterion value . The small dashed circles are the desired areas, and the large circles are the constraints.

Figure 3.

Projection on the horizontal plane of the optimal trajectory found by reinforcement learning.

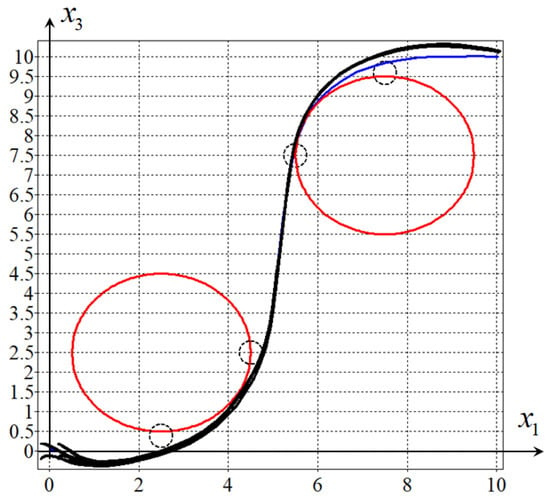

To implement the obtained solution in accordance with the extended statement of the optimal control problem, a system that stabilizes the motion of the object along the optimal trajectory must be built [8]. For this purpose, machine learning control is used [9], and the structure of the control function is searched for by symbolic regression [10].

The obtained solution is

where

where , , , , , and is a state vector of the reference model.
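The structure of such a stabilization system can be illustrated with a toy sketch: a reference model driven by the program control produces the reference state, and the object is steered by a feedback on the deviation between the reference state and its own state. Here a two-state double integrator replaces the quadcopter model, and a hypothetical linear law with feedforward, $u = \mathrm{sat}(v(t) + k_1 e_1 + k_2 e_2)$, replaces the function found by symbolic regression; the model, the gains `k1`, `k2`, the bound `u_max` and the program control `v(t) = sin t` are all assumptions. Eight initial states are used only to echo the count in Figure 4.

```python
import math
from typing import Callable, List, Sequence


def euler_step(x: Sequence[float], u: float, dt: float) -> List[float]:
    """Toy model x1' = x2, x2' = u (double integrator), explicit Euler step."""
    return [x[0] + dt * x[1], x[1] + dt * u]


def final_tracking_error(x0: Sequence[float],
                         v: Callable[[float], float],
                         t_end: float = 10.0,
                         dt: float = 1e-3,
                         k1: float = 4.0,
                         k2: float = 4.0,
                         u_max: float = 20.0) -> float:
    """Simulate the reference model driven by the program control v(t) and the
    object driven by a hypothetical feedback on the deviation; return the
    deviation norm at t_end."""
    x_ref = [0.0, 0.0]  # reference model state
    x = list(x0)        # object state, starting away from the reference
    t = 0.0
    while t < t_end:
        e1, e2 = x_ref[0] - x[0], x_ref[1] - x[1]
        # hypothetical stabilizing law with feedforward, saturated to |u| <= u_max
        u = max(-u_max, min(u_max, v(t) + k1 * e1 + k2 * e2))
        x = euler_step(x, u, dt)
        x_ref = euler_step(x_ref, v(t), dt)
        t += dt
    return math.hypot(x_ref[0] - x[0], x_ref[1] - x[1])


# Eight initial states on the unit circle around the reference start point
errors = [final_tracking_error([math.cos(2 * math.pi * i / 8), math.sin(2 * math.pi * i / 8)], math.sin)
          for i in range(8)]
```

With these gains the deviation obeys $\ddot e + 4\dot e + 4e = 0$ (a double pole at $-2$) while the control stays unsaturated, so all eight trajectories converge to the reference; symbolic regression would instead search for the structure of the feedback function itself.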

Figure 4 shows the projections onto the horizontal plane of the trajectories from eight initial states.

Figure 4.

Projection on the horizontal plane of the optimal trajectories from eight initial states.

4. Results

The paper presents the use of reinforcement learning to solve the optimal control problem with phase constraints by an evolutionary algorithm. To implement reinforcement learning, additional conditions defining the shape of the optimal trajectory are introduced into the quality criterion. The optimal trajectory must pass through specified areas whose positions depend on the phase constraints. An example of solving the optimal control problem for a quadcopter by reinforcement learning is given.

5. Discussion

The use of reinforcement learning to solve optimal control problems for robotic devices is advisable, since in most cases the developer approximately knows the shape of the optimal trajectory for the problem being solved.

Author Contributions

Conceptualization, A.D. and E.S.; methodology, A.D. and S.K.; software, A.D., S.K. and E.S.; validation, A.D. and V.M.; formal analysis, E.S.; investigation, S.K.; data curation, A.D., S.K. and E.S.; writing (original draft preparation), A.D., E.S. and V.M.; writing (review and editing), E.S.; visualization, V.M.; supervision, A.D. All authors have read and agreed to the published version of the manuscript.

Funding

The work was performed with partial support from the Russian Science Foundation, Project No. 23-29-00339.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are openly available at https://cloud.mail.ru/public/pKW4/fmrfqjkv3 (optimal trajectory 1) and https://cloud.mail.ru/public/mVZ5/Kr2yb9jJn (optimal trajectory 2).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rao, A.V. A Survey of Numerical Methods for Optimal Control. Adv. Astronaut. Sci. 2010, 135, 497–528.

- Grachev, N.I.; Evtushenko, Y.G. A Library of Programs for Solving Optimal Control Problems. USSR Comput. Math. Math. Phys. 1980, 2, 99–119.

- Diveev, A.I.; Konstantinov, S.V. Study of the Practical Convergence of Evolutionary Algorithms for the Optimal Program Control of a Wheeled Robot. J. Comput. Syst. Sci. Int. 2018, 4, 561–580.

- Brown, B.; Zai, A. Deep Reinforcement Learning in Action; Manning Publications Co.: Shelter Island, NY, USA, 2019; 475p.

- Morales, M. Grokking Deep Reinforcement Learning; Manning Publications Co.: Shelter Island, NY, USA, 2020; 472p.

- Duriez, T.; Brunton, S.; Noack, B.R. Machine Learning Control–Taming Nonlinear Dynamics and Turbulence; Springer: Cham, Switzerland, 2017; 229p.

- Diveev, A. Hybrid Evolutionary Algorithm for Optimal Control Problem. In Lecture Notes in Networks and Systems; Springer: Berlin/Heidelberg, Germany, 2023; Volume 543, pp. 726–738.

- Diveev, A.; Sofronova, E. Synthesized Control for Optimal Control Problem of Motion Along the Program Trajectory. In Proceedings of the 2022 8th International Conference on Control, Decision and Information Technologies (CoDIT), Istanbul, Turkey, 17–20 May 2022; pp. 475–480.

- Diveev, A.I.; Shmalko, E.Y. Machine Learning Control by Symbolic Regression; Springer: Cham, Switzerland, 2021; 155p.

- Koza, J.R.; Keane, M.A.; Streeter, M.J.; Mydlowec, W.; Yu, J.; Lanza, G. Genetic Programming IV. Routine Human-Competitive Machine Intelligence; Springer: Boston, MA, USA, 2003; 590p.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).