Abstract

Recently, gesture recognition technology has attracted increasing attention because it provides an alternative means of information exchange in certain situations, especially for hearing-impaired individuals. At present, the fusion of sensor signals with artificial intelligence algorithms is the mainstream trend in gesture recognition. This article therefore presents a machine-learning-empowered gesture recognition glove. We fabricate a flexible strain sensor with a sandwich structure that offers high sensitivity and good cycling stability. Once the sensors are mounted on knitted gloves, the smart gloves respond to different gestures. Additionally, based on the characteristics of the sampled signal data and the recognition targets, we explore a segmented processing method for dynamic gesture recognition based on the Logit AdaBoost algorithm. After classification training, the recognition accuracy of the smart gloves reaches 97%.

1. Introduction

As a form of nonverbal communication [1], standardized gestures play an important role in conveying messages on specific occasions, such as communication among hearing-impaired individuals [2] and information interaction in AR/VR environments [3] or military settings [4]. However, without long-term systematic learning, most people find it difficult to understand the meaning of gestures [5]. Gesture recognition technology, which can identify specific hand movements, offers a feasible solution to this communication barrier.

Machine-vision-based image capture and processing has received extensive attention as a means of gesture recognition because of its simple equipment requirements [6]. However, its practical application is hampered by a series of problems, including complex operating mechanisms and variable lighting conditions [7]. Accelerometers and gyroscopes, which can track the direction of hand movement in three-dimensional space, are widely used in commercial gesture recognition devices [8]. However, their rigid materials and bulk limit the application scenarios of these devices and make them inconvenient for users. Benefiting from rapid advances in flexible electronics and artificial intelligence, wearable data gloves based on flexible sensors have been developed. Common flexible sensors for gesture recognition include piezoresistive sensors [9], capacitive sensors [10], piezoelectric sensors [11], triboelectric sensors [12] and EMG sensor arrays [13]. Through the electromechanical response of these sensors, the degree of hand deformation can be captured.

Furthermore, the classification of sensor signals and the extraction of gesture features are key factors determining the accuracy of gesture recognition. This generally requires intelligent algorithms that learn from sensor signals to generate appropriate classifiers [14]. However, because gesture behavior differs between individuals, the generalization ability of the trained classifiers is poor when the sample size is limited [15]. A common remedy is to increase the number of participants in algorithm training, which is time-consuming and labor-intensive [16]. An alternative idea is therefore to remove as many irrelevant factors from the sensor signals as possible through dedicated designs.

In this study, we report a machine-learning-empowered gesture recognition glove. Flexible piezoresistive strain sensors with high sensitivity and cycling stability were mounted on knitted gloves. Then, to enhance the generalization ability of the gesture recognition model, a multi-segment classification model for dynamic gesture recognition based on the Logit AdaBoost algorithm was explored. Static signals collected in the intervals between gesture changes are usually considered invalid because their characteristics are not distinctive and their duration is uncertain. The model therefore first distinguished these static signals from the effective dynamic signals produced by finger movements, and subsequent training and classification used only the dynamic signals, avoiding misjudgments caused by static signals. After sufficient training on the defined gestures, the recognition accuracy reached 97%, demonstrating good classification performance.

2. Materials and Methods

2.1. Materials

Reduced graphene oxide (r-GO) was supplied by Qingdao University. Polydimethylsiloxane (PDMS) was purchased from Microflu Co., Ltd. (Changzhou, China). N,N-Dimethylformamide (DMF, 99.5%) was purchased from Aladdin Co., Ltd. (Shanghai, China).

2.2. Fabrication of the Flexible Strain Sensor

The r-GO dispersion (6 wt%) was prepared in two steps. First, the reduced graphene oxide powder was dispersed in N,N-dimethylformamide by mechanical shearing for 1 h at 3500 r/min; second, air bubbles were removed from the dispersion by ultrasonication for 5 min. The dispersion was then poured onto the PDMS substrate (prepared with a 10:1 volume ratio of PDMS base to curing agent and cured at 70 °C for 1 h) and heated at 50 °C for 3 h on a hot plate. After the solvent had evaporated completely, a composite film consisting of the r-GO layer and the PDMS substrate was obtained. Two copper wires were then attached to both sides of the r-GO layer with silver paste to serve as the electrodes of the strain sensor. Additionally, PDMS was poured onto and cured on top of the composite film, resulting in a sandwich structure. The final sample measures about 35 mm × 4 mm × 1 mm.

2.3. Characterization

A scanning electron microscope (SEM, S-4800, Hitachi, Japan) was used to characterize the surface morphology of the r-GO film. To test the sensing performance, the flexible strain sensors were fixed between the metal clamps of a tensile testing machine (ZQ-CI701G, 1000 N, ZHIQU, Dongguan, China), and a digital source meter (Keithley 2400, USA) was used to measure the sensor resistance continuously during stretching. The stretching speed was maintained at 30 mm/min.

2.4. The Preparation of Data Gloves and Data Acquisition

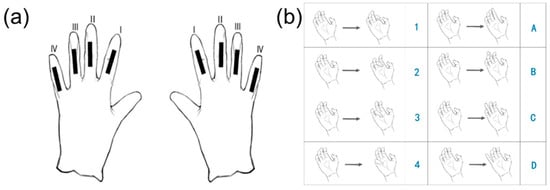

The configuration of the strain sensors in the data glove is shown in Figure 1a. To monitor the bending of the fingers, the sensors were attached to the knitted glove at the positions of the corresponding finger joints. Figure 1b shows the gesture library used in this article. While these gestures were performed, the sensor signals were acquired with a data acquisition card (NI-6211). In the detection circuit, the sensor branches were connected in parallel, and on each branch a divider resistor was connected in series with the sensor. Using the differential input mode of the data acquisition card, the voltage across each sensor was recorded and displayed in a laptop program developed in LabVIEW. The sensor resistance can then be calculated from this voltage using Ohm's law.
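The Ohm's-law conversion can be sketched as below, assuming each branch is a simple series divider in which the supply voltage drops across a known divider resistor and the sensor, and the card measures the voltage across the sensor. The supply voltage, the divider resistance and the function name `sensor_resistance` are hypothetical; the article does not report them.

```python
def sensor_resistance(v_sensor, v_supply, r_divider):
    """Sensor resistance from the voltage measured across it (hypothetical circuit values).

    In a series divider the same current I flows through both resistors:
    I = (v_supply - v_sensor) / r_divider, so R_sensor = v_sensor / I
      = v_sensor * r_divider / (v_supply - v_sensor).
    """
    if not 0.0 <= v_sensor < v_supply:
        raise ValueError("v_sensor must lie in [0, v_supply)")
    return v_sensor * r_divider / (v_supply - v_sensor)


# Hypothetical values: 5 V supply, 10 kOhm divider resistor, 2.5 V measured across the sensor.
print(sensor_resistance(2.5, 5.0, 10_000.0))  # 10000.0
```

Because the sensor and divider share one current, a rise in sensor resistance during finger bending shows up directly as a rise in the measured sensor voltage.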

Figure 1.

(a) Configuration of the flexible sensors in the data glove. (b) Gesture library.

2.5. The Design of Machine-Learning Algorithm

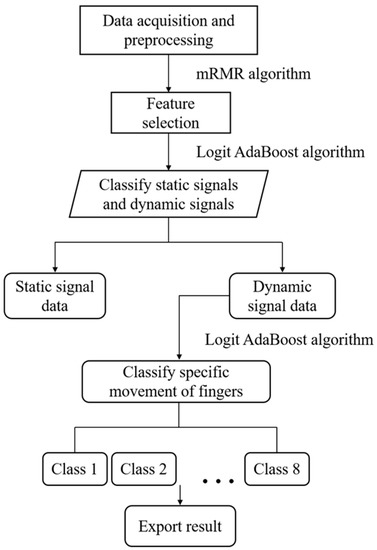

A flow diagram of the machine-learning process is shown in Figure 2. During training, the voltage changes produced by the predetermined gestures were first assembled into a matrix that served as the input to the minimum-redundancy maximum-relevance (mRMR) algorithm, which discards uninformative features and retains relevant ones; this improves the generalization ability of the model and reduces the complexity of the algorithm. The multi-segment classification model then classified gestures from the selected features using the Logit AdaBoost algorithm. Based on the training results of the previous weak classifier, Logit AdaBoost re-evaluates the sample weights and updates the classifier accordingly. In addition, Logit AdaBoost is relatively insensitive to noise and outliers, which helps prevent the model from overfitting. The classification process comprised two steps. First, static signals and dynamic signals were distinguished. Then, based on the sample characteristics and distribution, the finger motion trajectories were further subdivided. Finally, the model was evaluated by 10-fold cross-validation, which makes full use of the available samples and helps detect overfitting.
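The two-stage scheme described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that "Logit AdaBoost" denotes binary LogitBoost (Friedman, Hastie and Tibshirani, 2000) with regression stumps as weak learners, and the label coding (stage 1: 0 = static, 1 = dynamic; stage 2: 0 = straightening, 1 = bending) and the helper names `fit_stump`, `predict_stump`, `LogitBoost` and `two_stage_predict` are hypothetical. Each round reweights the samples by p(1 − p), which is how every new weak learner adapts to the errors of the current ensemble.

```python
import numpy as np


def fit_stump(X, z, w):
    """Weighted least-squares regression stump fitted to the working response z.

    Returns (feature index, threshold, value if x <= threshold, value otherwise).
    """
    best_err, best = np.inf, None
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j])[:-1]:  # skip the maximum so both sides are non-empty
            left = X[:, j] <= t
            cl = np.average(z[left], weights=w[left])
            cr = np.average(z[~left], weights=w[~left])
            err = np.sum(w[left] * (z[left] - cl) ** 2) + np.sum(w[~left] * (z[~left] - cr) ** 2)
            if err < best_err:
                best_err, best = err, (j, t, cl, cr)
    if best is None:  # all features constant: fall back to a constant predictor
        c = np.average(z, weights=w)
        best = (0, np.inf, c, c)
    return best


def predict_stump(stump, X):
    j, t, cl, cr = stump
    return np.where(X[:, j] <= t, cl, cr)


class LogitBoost:
    """Binary LogitBoost with regression stumps; labels must be 0/1."""

    def __init__(self, n_rounds=50):
        self.n_rounds = n_rounds
        self.stumps = []

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        F = np.zeros(len(y))
        self.stumps = []
        for _ in range(self.n_rounds):
            p = 1.0 / (1.0 + np.exp(-2.0 * F))         # current class-1 probability
            w = np.clip(p * (1.0 - p), 1e-6, None)     # sample weights (clipped for stability)
            z = np.clip((y - p) / w, -4.0, 4.0)        # working response (Newton step)
            stump = fit_stump(X, z, w)
            self.stumps.append(stump)
            F += 0.5 * predict_stump(stump, X)
        return self

    def decision_function(self, X):
        X = np.asarray(X, dtype=float)
        return sum(0.5 * predict_stump(s, X) for s in self.stumps)

    def predict(self, X):
        return (self.decision_function(X) > 0).astype(int)


def two_stage_predict(stage1, stage2, X):
    """Stage 1 separates static (0) from dynamic (1) samples; stage 2 runs only on
    predicted-dynamic samples. Static samples are returned as -1."""
    X = np.asarray(X, dtype=float)
    out = np.full(X.shape[0], -1)
    dynamic = stage1.predict(X) == 1
    if dynamic.any():
        out[dynamic] = stage2.predict(X[dynamic])
    return out
```

Training the second model only on dynamic samples mirrors the idea in the text that static intervals should not influence the trajectory classifier.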

Figure 2.

The process of a multi-segment classification model.

3. Results and Discussions

3.1. Morphology Characterization

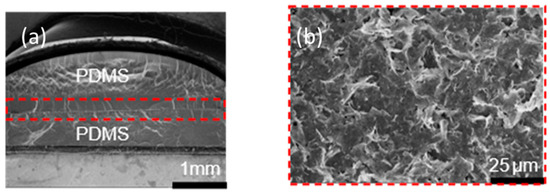

As shown in Figure 3a, the sensor has a sandwich structure composed of an r-GO layer between two PDMS layers. When the sensor deforms, the overlap between graphene sheets changes, and it recovers under the constraint of the elastic PDMS, which produces the electromechanical response of the sensor.

Figure 3.

Topography characterization of the sensor. (a) Cross-section of the sensor. (b) SEM images of the r-GO film.

Figure 3b shows that the reduced graphene oxide is uniformly distributed in the r-GO layer, which is the basis for the stable electrical performance of the sensor.

3.2. Piezoresistive Sensing Response

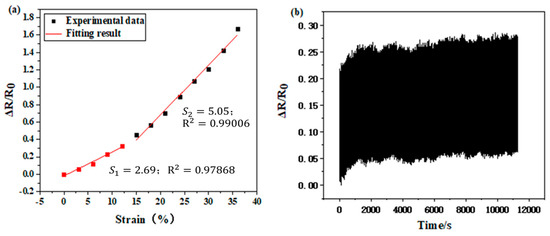

Figure 4a shows the relationship between the sensor resistance and the applied strain, from which the sensitivity of the strain sensor, expressed as the gauge factor (GF), can be obtained using the following equation:

$$GF = \frac{\Delta R / R_0}{\varepsilon} = \frac{R - R_0}{R_0\,\varepsilon}$$

where ε is the strain of the strain sensor, R is the resistance of the sensor after stretching and R0 is the resistance of the sensor at ε = 0%. As Figure 4a shows, the strain sensor provides high sensitivity for detecting hand movements (GF ≈ 2.69 for strains below 12% and GF ≈ 5.05 for strains from 12% to 36%). Furthermore, the strain sensor shows a stable response over a large number of stretch–release cycles (more than 1500 cycles), as shown in Figure 4b.
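Because the GF is reported separately for two strain ranges, it is naturally read as the slope of ΔR/R0 against ε over each range. The sketch below fits that slope by least squares with `numpy.polyfit`; the function name `gauge_factor` is hypothetical, and the data are a synthetic, noise-free piecewise-linear curve built only to check the fit, not measurements from Figure 4a.

```python
import numpy as np


def gauge_factor(strain, resistance, r0, lo, hi):
    """Gauge factor over lo <= strain <= hi (strain as a fraction, e.g. 0.12 for 12%).

    GF is the least-squares slope of the relative resistance change (R - R0) / R0
    against strain, i.e. the GF equation applied to a whole strain interval.
    """
    strain = np.asarray(strain, dtype=float)
    rel_change = (np.asarray(resistance, dtype=float) - r0) / r0
    mask = (strain >= lo) & (strain <= hi)
    slope, _intercept = np.polyfit(strain[mask], rel_change[mask], 1)
    return slope


# Synthetic, noise-free piecewise-linear response (R0 = 1 kOhm), used only to check the fit.
strain = np.linspace(0.0, 0.36, 37)
rel = np.where(strain <= 0.12, 2.69 * strain, 2.69 * 0.12 + 5.05 * (strain - 0.12))
resistance = 1000.0 * (1.0 + rel)
print(round(gauge_factor(strain, resistance, 1000.0, 0.0, 0.12), 2))   # 2.69
print(round(gauge_factor(strain, resistance, 1000.0, 0.12, 0.36), 2))  # 5.05
```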

Figure 4.

(a) The relationship between the sensor resistance and the applied strain. (b) Sensor stability under 1500 stretch–release cycles at 10% strain.

3.3. The Selection of Classification Algorithm and Feature

To identify correlations in the data, we extracted 10 signal features from time-domain, frequency-domain and entropy indicators. We then used the mRMR algorithm to evaluate the importance of these features in each of the two stages. As shown in Table 1, the waveform factor and skewness are most strongly correlated with the classification results of the first stage, whereas skewness and waviness are most important for classification in the second stage.
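Greedy mRMR ranking can be sketched as follows. The article does not specify the variant, so this sketch assumes the common mutual-information-difference criterion (relevance to the label minus mean redundancy with already selected features) and equal-width discretization of continuous features; the bin count and the function names `mutual_information`, `discretize` and `mrmr` are hypothetical.

```python
import numpy as np


def mutual_information(a, b):
    """Mutual information (in nats) between two discrete label arrays."""
    a_vals, a_idx = np.unique(a, return_inverse=True)
    b_vals, b_idx = np.unique(b, return_inverse=True)
    joint = np.zeros((len(a_vals), len(b_vals)))
    np.add.at(joint, (a_idx, b_idx), 1)          # joint histogram
    joint /= joint.sum()
    pa = joint.sum(axis=1, keepdims=True)        # marginal of a, shape (na, 1)
    pb = joint.sum(axis=0, keepdims=True)        # marginal of b, shape (1, nb)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (pa @ pb)[nz])))


def discretize(x, n_bins=10):
    """Equal-width binning so continuous features can be used with discrete MI."""
    edges = np.linspace(x.min(), x.max(), n_bins + 1)[1:-1]
    return np.digitize(x, edges)


def mrmr(X, y, k, n_bins=10):
    """Greedily pick k feature indices maximizing relevance minus mean redundancy."""
    Xd = np.column_stack([discretize(X[:, j], n_bins) for j in range(X.shape[1])])
    relevance = [mutual_information(Xd[:, j], y) for j in range(Xd.shape[1])]
    selected = [int(np.argmax(relevance))]
    remaining = [j for j in range(Xd.shape[1]) if j not in selected]
    while remaining and len(selected) < k:
        scores = [relevance[j] - np.mean([mutual_information(Xd[:, j], Xd[:, s]) for s in selected])
                  for j in remaining]
        best = remaining[int(np.argmax(scores))]
        selected.append(best)
        remaining.remove(best)
    return selected
```

The order in which features are selected gives an importance ranking of the kind summarized in Table 1; a feature that is nearly a copy of one already chosen is penalized by the redundancy term even if it is individually relevant.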

Table 1.

Feature importance evaluation based on mRMR algorithm.

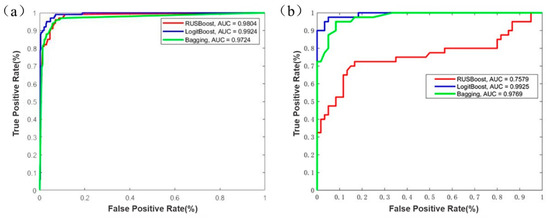

RUSBoost, Logit AdaBoost and Bagging are common machine learning algorithms for data classification. Using the features selected by the mRMR algorithm, the classification results of the three algorithms on the gesture data are shown in Figure 5. The area under the receiver operating characteristic (ROC) curve (AUC) equals the probability that a randomly chosen positive sample receives a higher predicted score than a randomly chosen negative sample. In both the first and the second stage, the Logit AdaBoost algorithm achieves the highest AUC, indicating that the model it generates is the most effective for gesture classification.
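The probabilistic reading of the AUC given above can be computed directly by comparing every positive score with every negative score, counting ties as one half. This is a minimal sketch with a hypothetical function name and made-up scores; its cost is O(n·m), so a rank-based implementation would be preferable for large sample sets.

```python
def auc(scores_pos, scores_neg):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Made-up scores: 5 of the 6 positive/negative pairs are ordered correctly.
print(auc([0.9, 0.8, 0.4], [0.7, 0.3]))  # about 0.833 (= 5/6)
```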

Figure 5.

Comparison of model performance by AUC for RUSBoost, Logit AdaBoost, Bagging. (a) Performance of different algorithm models in the first stage. (b) Performance of different algorithm models in the second stage.

3.4. The Gesture Recognition Modeling

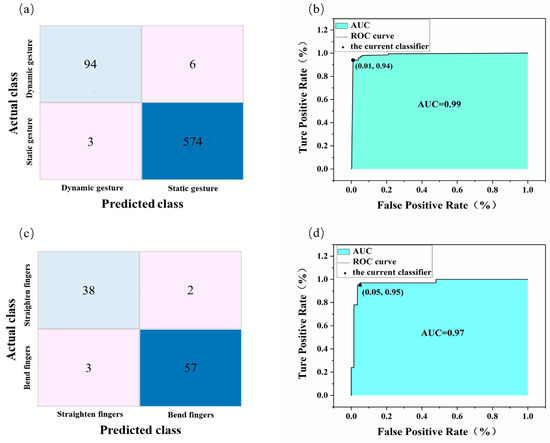

In the first stage, dynamic signals are separated from all samples for subsequent training. As shown in Figure 6a, the classification model correctly classifies most of the static and dynamic gesture data. Table 2 further details the classification results for static and dynamic signals. The positive predictive value (PPV) is the fraction of samples assigned to a class that truly belong to it, and the false discovery rate (FDR = 1 − PPV) is the fraction that are misclassified. Misclassification in the first stage either removes valid dynamic samples from the second stage, leaving incomplete gesture features, or passes static interference into it. The high PPV (96.9%) and low FDR (3.1%) indicate that the effective gesture features are well preserved.
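PPV and FDR are computed column-wise from a confusion matrix whose columns are the predicted classes. The sketch below uses a hypothetical function name and hypothetical counts (0 = static, 1 = dynamic), not the data in Table 2.

```python
def ppv_fdr_per_class(cm):
    """Column-wise PPV and FDR from a confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j, so
    PPV_j = cm[j][j] / sum_i cm[i][j] and FDR_j = 1 - PPV_j.
    """
    n = len(cm)
    result = {}
    for j in range(n):
        predicted_as_j = sum(cm[i][j] for i in range(n))
        ppv = cm[j][j] / predicted_as_j
        result[j] = (ppv, 1.0 - ppv)
    return result


# Hypothetical counts: rows are true classes, columns are predicted classes.
cm = [[45, 5],
      [2, 48]]
for cls, (ppv, fdr) in ppv_fdr_per_class(cm).items():
    print(f"class {cls}: PPV = {ppv:.3f}, FDR = {fdr:.3f}")
```

Since FDR is defined as 1 − PPV, the two indices always sum to 100%, as the reported values (96.9% and 3.1%) do.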

Figure 6.

Test results for classification model of two phases. (a) Confusion matrix of dynamic gesture and static gesture. (b) ROC curve of classification model assuming dynamic gesture is positive. (c) Confusion matrix of straightening fingers and bending fingers. (d) ROC curve of classification model assuming straightening fingers is positive.

Table 2.

Evaluation of classification model to distinguish static signals and dynamic signals.

The ROC curve obtained after 10-fold cross-validation is shown in Figure 6b. Taking the dynamic signal as the positive class, the first-stage model achieves an AUC of 0.99 and an accuracy of 98.4%, indicating that it can effectively eliminate invalid static signals.
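A cross-validated ROC curve needs one score per sample from a model that never saw that sample. The sketch below assumes stratified folds (the article does not say whether its folds were stratified) and pools the out-of-fold scores, which is one common way to draw a single ROC curve after k-fold cross-validation. The `fit` and `score` callables stand in for any classifier; they and the other function names are hypothetical, and the toy data are made up.

```python
import random


def stratified_kfold(labels, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs; every class is dealt round-robin over the k folds."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(labels):
        by_class.setdefault(label, []).append(i)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, i in enumerate(indices):
            folds[pos % k].append(i)
    for f in range(k):
        test = sorted(folds[f])
        held_out = set(test)
        train = [i for i in range(len(labels)) if i not in held_out]
        yield train, test


def out_of_fold_scores(X, y, fit, score, k=10, seed=0):
    """Score every sample with a model trained on the other k - 1 folds."""
    oof = [None] * len(y)
    for train, test in stratified_kfold(y, k, seed):
        model = fit([X[i] for i in train], [y[i] for i in train])
        for i, s in zip(test, score(model, [X[i] for i in test])):
            oof[i] = s
    return oof


# Toy usage with a hypothetical 1-D "classifier": threshold at the midpoint of the class means.
def fit(xs, ys):
    pos = [x for x, t in zip(xs, ys) if t == 1]
    neg = [x for x, t in zip(xs, ys) if t == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def score(threshold, xs):
    return [x - threshold for x in xs]


X = [0.1 * i for i in range(40)]
y = [0] * 20 + [1] * 20
oof = out_of_fold_scores(X, y, fit, score)
```

The pooled `oof` scores, together with the true labels, are what an ROC curve such as Figure 6b would be drawn from under this assumption.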

Based on the defined gestures, hand movements are divided into bending and straightening of the fingers. Figure 6c and Table 3 show that the classification accuracy remains high. In addition, Figure 6d shows that the AUC of the second-stage model is 0.97, only slightly lower than that of the first stage. The good classification results in both stages indicate that the multi-segment classification model generalizes well for gesture recognition.

Table 3.

Evaluation of classification model to judge finger motion trajectory.

4. Conclusions

This article reported a machine-learning-empowered gesture recognition glove. By combining the electrical characteristics of flexible strain sensors with the signal processing capability of machine learning algorithms, the data gloves accurately identified specific hand actions and offered several desirable features, such as low weight and good comfort. We also addressed the interference of invalid static signals in the training and classification process: through multi-segment classification based on the Logit AdaBoost algorithm, the model successfully extracted the effective dynamic signals from all signals. This helps enhance the generalization ability of the recognition model and increase the accuracy of gesture recognition. However, the model does not yet exclude all irrelevant factors, which requires further research.

Author Contributions

Conceptualization, K.Z.; methodology, J.L., Y.Q. and Z.G.; writing—original draft preparation, J.L., Y.Q. and Z.G.; writing—review and editing, J.L., Y.Q., Z.G., L.Z., Q.Z. and K.Z.; supervision, L.Z., Q.Z. and K.Z.; funding acquisition, K.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities (2232022G-01 and 19D110106) and the National Natural Science Foundation of China (No. 51973034).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Galván-Ruiz, J.; Travieso-González, C.M.; Tejera-Fettmilch, A.; Pinan-Roescher, A.; Esteban-Hernández, L.; Domínguez-Quintana, L. Perspective and Evolution of Gesture Recognition for Sign Language: A Review. Sensors 2020, 20, 3571.

- Pan, W.; Zhang, X.; Ye, Z. Attention-based sign language recognition network utilizing keyframe sampling and skeletal features. IEEE Access 2020, 8, 215592–215602.

- Tsironi, E.; Barros, P.; Weber, C.; Wermter, S. An analysis of convolutional long short-term memory recurrent neural networks for gesture recognition. Neurocomputing 2017, 268, 76–86.

- Saggio, G.; Cavrini, F.; Pinto, C.A. Recognition of arm-and-hand visual signals by means of SVM to increase aircraft security. Stud. Comput. Intell. 2017, 669, 444–461.

- Xue, Q.; Li, X.; Wang, D.; Zhang, W. Deep forest-based monocular visual sign language recognition. Appl. Sci. 2019, 9, 1945.

- Weichert, F.; Bachmann, D.; Rudak, B.; Fisseler, D. Analysis of the Accuracy and Robustness of the Leap Motion Controller. Sensors 2013, 13, 6380–6393.

- Ameen, S.; Vadera, S. A convolutional neural network to classify American Sign Language fingerspelling from depth and colour images. Expert Syst. 2017, 34, e12197.

- Shukor, A.Z.; Miskon, M.F.; Jamaluddin, M.H.; bin Ali, F.; Asyraf, M.F.; bin Bahar, M.B. A new data glove approach for Malaysian sign language detection. Procedia Comput. Sci. 2015, 76, 60–67.

- Lee, B.G.; Lee, S.M. Smart wearable hand device for sign language interpretation system with sensors fusion. IEEE Sens. J. 2018, 18, 1224–1232.

- Abhishek, K.S.; Qubeley, L.C.F.; Ho, D. Glove-based hand gesture recognition sign language translator using capacitive touch sensor. In Proceedings of the 2016 IEEE International Conference on Electron Devices and Solid-State Circuits (EDSSC), Hong Kong, China, 3–5 August 2016; pp. 334–337.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Zhou, Z.; Chen, K.; Li, X.; Zhang, S.; Wu, Y.; Zhou, Y.; Meng, K.; Sun, C.; He, Q.; Fan, W.; et al. Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays. Nat. Electron. 2020, 3, 571–578.

- Wu, J.; Sun, L.; Jafari, R. A wearable system for recognizing American sign language in real-time using IMU and surface EMG sensors. IEEE J. Biomed. Health Inform. 2016, 20, 1281–1290.

- Rivera-Acosta, M.; Ortega-Cisneros, S.; Rivera, J.; Sandoval-Ibarra, F. American sign language alphabet recognition using a neuromorphic sensor and an artificial neural network. Sensors 2017, 17, 2176.

- Cai, Y.D.; Feng, K.Y.; Lu, W.C.; Chou, K.C. Using LogitBoost classifier to predict protein structural classes. J. Theor. Biol. 2006, 238, 172–176.

- Ho, C.Y.F.; Ling, B.W.K.; Deng, D.X.; Liu, Y. Tachycardias Classification via the Generalized Mean Frequency and Generalized Frequency Variance of Electrocardiograms. Circuits Syst. Signal Process. 2022, 41, 1207–1222.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).