1. Introduction

Space exploration of celestial bodies such as the Moon, Mars, and other planets and natural satellites is relevant to establishing human colonies or utilizing resources from those bodies. The exploration that precedes all these initiatives must be carried out with a variety of remote sensing methods, and it can be achieved using CubeSats or nanosatellites equipped to acquire local knowledge of those bodies without the cost of operating vehicles on the surface. Studying those bodies before an exploration mission is important because it provides the fundamental knowledge needed to execute such a mission. This knowledge comes from many data sources that can be used before the actual exploration to anticipate what the mission will find. Data from images, the atmosphere, the environment, etc. will be integrated once these multimodal data fusion techniques are developed.

Environmental survey data must currently be segmented, organized, and analyzed to be useful. The task is becoming increasingly complex because of the growth of near-continuous imaging platforms (e.g., satellite constellations), improved imaging resolution, and a greater number of satellites in all environments. In recent years, the satellite imagery industry has expanded considerably, making it increasingly challenging to deliver processed data to users with different requirements. Space exploration will require applying all these tools to study and characterize different celestial bodies: the Moon and Mars with some urgency, and others farther away.

Together, these trends have created a demand for increasingly efficient data analysis methods. The possibility of fusing multispectral imaging, other remote sensing capabilities, and in situ data represents the cutting edge of environmental data processing and analysis.

We propose leveraging the fusion of multimodal data sets with Machine Learning (ML) methods for feature recognition. The resulting digested data can be used for classification, anomaly detection, and, with appropriate parameter selection, the prediction of features of interest such as chemical composition and atmospheric properties. This yields useful data for decision making, and feedback will allow the selection of appropriate processing methods. This approach is loosely based on multimodal processing techniques applied to cardiovascular risk identification, see [1,2].

In Section 2, we introduce and discuss the most representative ML methods with potential for this complex task of multimodal data fusion. We provide brief insight into the features of interest and the fusion of the data, introducing a vertical/horizontal method that allows multimodal data to be used with only those features of interest. In Section 3, we present the conclusions and a brief view of the next steps on this problem of multimodal data fusion.

2. Methods for Multimodal Data Fusion

There is an abundance of heterogeneous data that is hard to reconcile into all-encompassing environmental models of different space environments, see [3]. This multimodal data on space objects includes information from visual sources such as cameras, infrared and ultraviolet images, images at other wavelengths, synthetic aperture radar scans, material composition profiles, and data from other sensing sources. Automating the processing of these parallel data streams will lend a degree of predictability to their results.

The multimodal data fusion system will integrate information from many different sources and of many different kinds, as just described. As part of the fusion process, the information must be organized so that such volumes can be handled. We propose, first, to reduce the data sets so that they contain only the features of interest for the purpose of the analysis. For space exploration, this includes repeated remote observations made by many satellites and a large number of ground stations. The variability in observation frequency and in the parameters studied (e.g., vector fields, scalar fields, feedback from robotic systems) requires that the pre- and post-processing techniques be deliberately selected for each data set.
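Variability in observation frequency is one place where per-data-set preprocessing matters. As a minimal sketch, assuming each stream is a one-dimensional time series whose timestamps share a common unit, the streams can be linearly interpolated onto one time grid covering the interval they all span. Linear interpolation and the function names `resample_to_grid` and `align_streams` are illustrative assumptions, not a technique the proposed method prescribes.

```python
import numpy as np

def resample_to_grid(t, values, grid):
    """Linearly interpolate one irregularly sampled stream onto a shared time grid.

    Grid points outside the stream's own time span are set to NaN rather than
    extrapolated, so they can be filtered out downstream.
    """
    t = np.asarray(t, dtype=float)
    values = np.asarray(values, dtype=float)
    order = np.argsort(t)  # np.interp expects increasing sample times
    t, values = t[order], values[order]
    out = np.interp(grid, t, values)
    out[(grid < t[0]) | (grid > t[-1])] = np.nan
    return out

def align_streams(streams, step):
    """Resample every (times, values) stream onto one grid spanning their common interval."""
    streams = {k: (np.asarray(t, dtype=float), v) for k, (t, v) in streams.items()}
    start = max(t.min() for t, _ in streams.values())
    stop = min(t.max() for t, _ in streams.values())
    if stop < start:
        raise ValueError("streams do not overlap in time")
    n = int(np.floor((stop - start) / step + 1e-9)) + 1
    grid = np.minimum(start + step * np.arange(n), stop)  # guard against float overshoot
    aligned = {k: resample_to_grid(t, v, grid) for k, (t, v) in streams.items()}
    return grid, aligned
```

Restricting the grid to the common interval avoids extrapolating any stream; other choices (nearest-neighbour resampling, or keeping the union of intervals with NaN padding) may suit specific sensors better.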

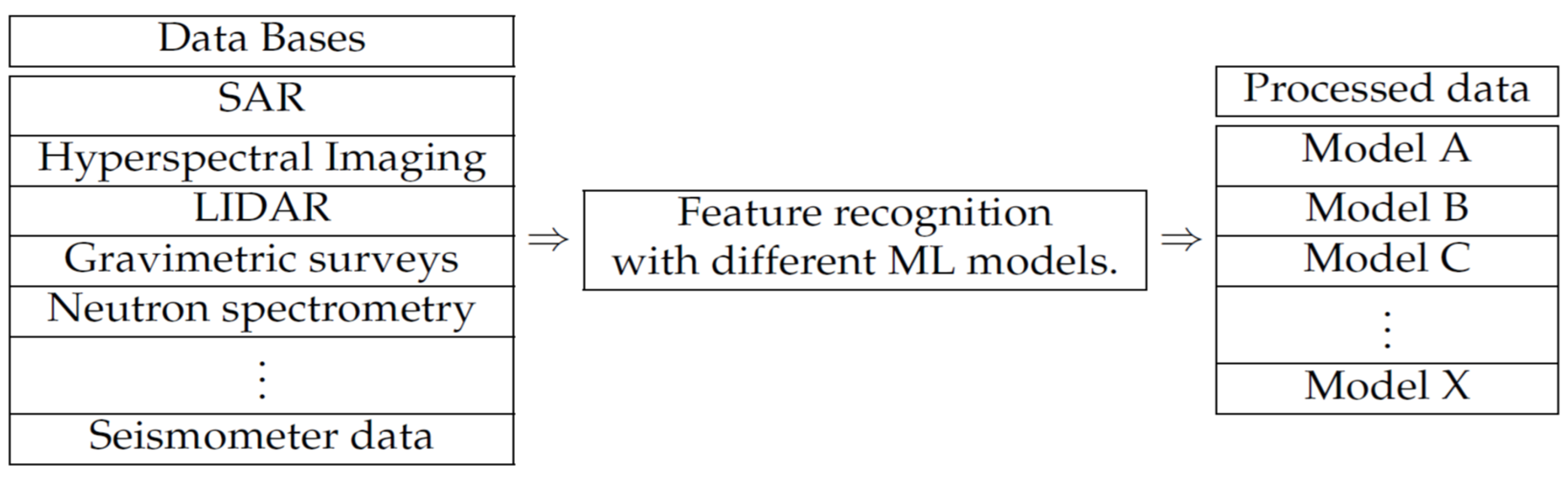

The block diagram in Figure 1 presents the main idea of the proposed data collection and analysis scheme. The diagram includes the successive application of algorithms intended to improve the efficiency and effectiveness of information processing and transmission.

The method we propose consists of a horizontal analysis of information coming from different sources as the left block in the diagram (Data Bases) of

Figure 1 shows. With this horizontal analysis one can obtain the necessary and interesting features from each of the data sources through the application of ML algorithms and models as needed. Once the features are obtained, we proceed to execute a data fusion of such relevant features, but this fusion is to extract first features that is inter-related in the different relevant features extracted in the previous horizontal method. We call this inter-relation data fusion, the vertical analysis. The scheme in

Figure 1 proposes, firstly, the recognition and extraction of relevant features carried out by using different ML algorithms for each type of data (a horizontal process). Afterwards, those features are merged into classes (a vertical process), and would make the process of multimodal data fusion, see [

4,

5]. In

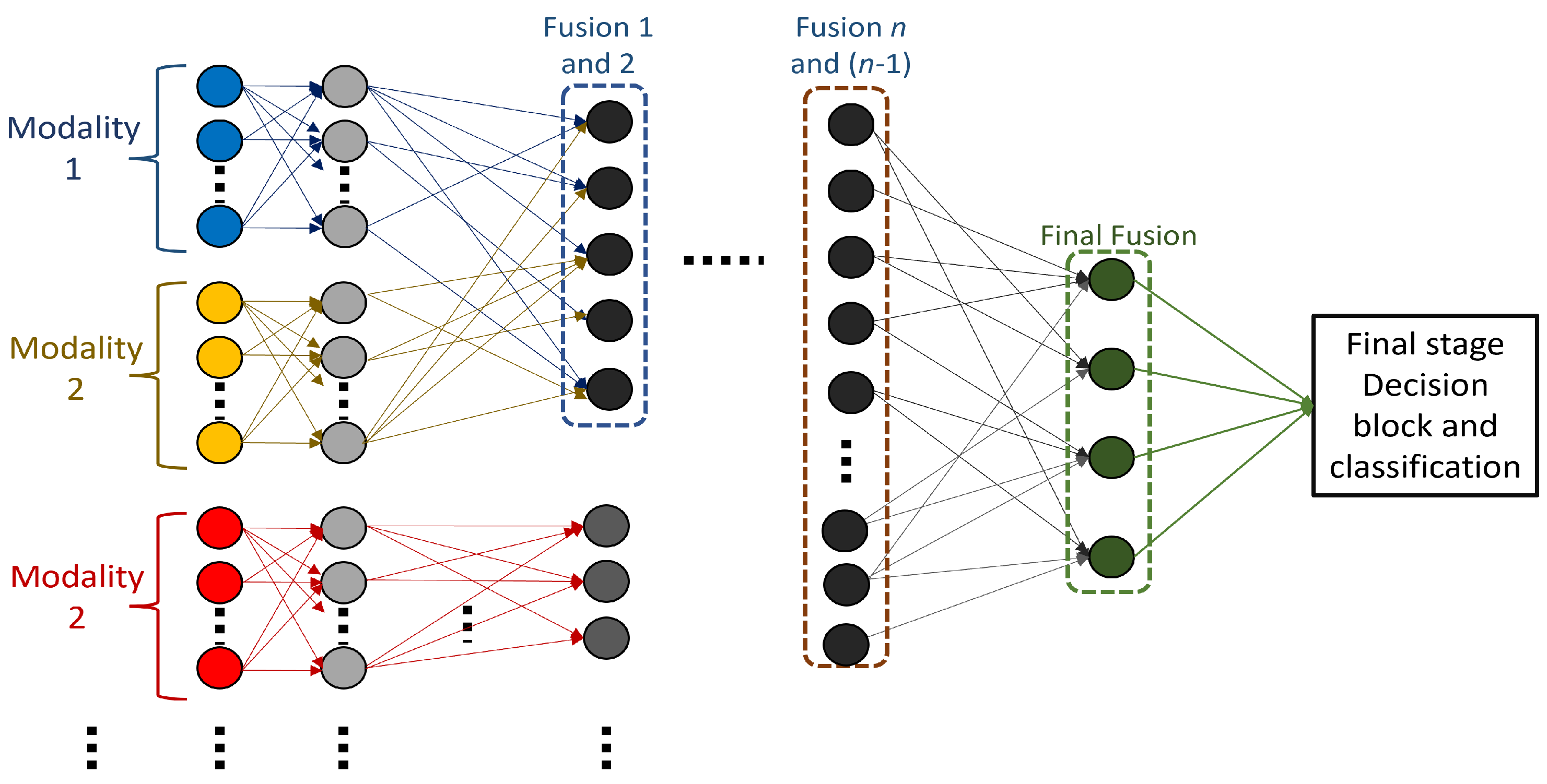

Figure 2, we show that the relevant features of each of the models being used are to be used through, for example, a neural network or deep learning system for the fusion. The following subsections describe the methods and algorithms used for this processing.
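The horizontal/vertical split described above maps naturally onto a modular pipeline. The Python sketch below assumes a hypothetical registry (`EXTRACTORS`) with one feature extractor per modality; simple statistics stand in for the trained ML models the text calls for, and the modality names `camera` and `spectrometer` are invented for illustration. The vertical stage here only concatenates the per-modality features in a fixed order, producing the input that a fusion model would receive.

```python
import numpy as np
from typing import Callable, Dict

# Hypothetical per-modality extractors for the "horizontal" stage.
# In practice each would be a trained ML model; simple statistics stand in here.
def image_features(img: np.ndarray) -> np.ndarray:
    gy, gx = np.gradient(img.astype(float))  # intensity gradients along rows and columns
    return np.array([img.mean(), img.std(), np.hypot(gx, gy).mean()])

def spectrum_features(spec: np.ndarray, n_bands: int = 4) -> np.ndarray:
    # Average the spectrum over n_bands contiguous bands.
    return np.array([band.mean() for band in np.array_split(spec.astype(float), n_bands)])

EXTRACTORS: Dict[str, Callable[[np.ndarray], np.ndarray]] = {
    "camera": image_features,
    "spectrometer": spectrum_features,
}

def horizontal_stage(sample: Dict[str, np.ndarray]) -> Dict[str, np.ndarray]:
    """Apply the extractor registered for each modality present in the sample."""
    return {name: EXTRACTORS[name](data) for name, data in sample.items()}

def vertical_stage(features: Dict[str, np.ndarray]) -> np.ndarray:
    """Concatenate per-modality features in a fixed (sorted) order into one vector
    that a fusion model, such as a neural network, can consume."""
    return np.concatenate([features[name] for name in sorted(features)])
```

Under this design, adding a data source amounts to registering one more extractor; the vertical stage does not change.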

2.1. Data Encoding in Machine Learning

We first apply data encoding to compile the information into one convenient structure. Here, data encoding means encoding the data attributes and storing the information efficiently. This step prepares the data for better classification and prediction.

For example, in the proposed method, data classification for lunar environments involves aspects such as altitude, illumination, and depth of the different areas of the Moon observed in images. The method merges this information to create a map of the lunar surface and, at the same time, attaches to it the information acquired simultaneously by the mission's different sensors, enabling better analysis and classification. Taking into account the requirements of the different missions, we plan to synthesize and filter the information to discard useless data. This implies fusing the parallel data describing the same phenomena into one final classification.
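A minimal sketch of this encoding step, assuming the attribute layers (e.g., altitude, illumination, depth) have already been co-registered on the same 2-D grid: each surface location becomes one row and each attribute one column. The rule used to discard "trash data" (dropping any location with a missing or non-finite value) and the function name `encode_layers` are assumptions for illustration.

```python
import numpy as np

def encode_layers(layers):
    """Stack co-registered 2-D layers into an (n_locations, n_attributes) matrix.

    Returns the filtered matrix, the attribute (column) order, and a boolean mask
    over all grid locations; keep.reshape(H, W) maps rows back onto the map.
    """
    names = list(layers)
    shapes = {layers[n].shape for n in names}
    if len(shapes) != 1:
        raise ValueError("all layers must be co-registered on the same grid")
    stack = np.stack([layers[n].astype(float) for n in names], axis=-1)  # (H, W, n_attr)
    X = stack.reshape(-1, len(names))  # one row per location, row-major order
    # Assumed "trash data" rule: drop locations where any sensor value is missing.
    keep = np.all(np.isfinite(X), axis=1)
    return X[keep], names, keep
```

Keeping the mask alongside the matrix lets classification results be written back onto the lunar surface map.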

2.2. Stages of Data Fusion

Our methodology divides the data fusion process into stages so that it can be replicated in future related projects. We therefore define "data fusion" as one of the main tasks of this paper, along with the final classification process. The staging of the data fusion process reflects the modularity of our system's architecture. It allows us to process multimodal data by combining the relevant features of an arbitrary number of parallel data streams in a common neural network for classification.

2.2.1. ‘Horizontal’ Processing Models

To classify the data sets, we perform feature recognition using convolutions and other methods that highlight useful features. After features are extracted from the different data sources, a dimensionality reduction algorithm (such as PCA or Gram–Schmidt orthogonalization) will be used to create a unified representation for classification. Once the databases have been selected, the horizontal process consists of choosing an ML model for each type of data, which is responsible for reducing and compacting the data to facilitate their processing.
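PCA, one of the two reduction options named above, can be sketched in a few lines with NumPy's SVD. This assumes the per-source features have already been concatenated into one matrix with samples as rows; the function name `pca_reduce` and the choice of `k` are illustrative.

```python
import numpy as np

def pca_reduce(X, k):
    """Project the rows of X (n_samples, n_features) onto their first k principal components.

    Uses the SVD of the mean-centred data; returns the reduced data (n_samples, k),
    the components (k, n_features), and the fraction of variance each component retains.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)  # PCA assumes centred data
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]
    explained = (S**2 / np.sum(S**2))[:k]
    return Xc @ components.T, components, explained
```

Inspecting `explained` is a common way to choose `k`: keep enough components to retain most of the variance.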

We use convolution filters to detect spatial patterns in two-dimensional image data before the data are fed into a deep neural network. This allows basic discrimination of objects that cast shadows, that present preferential emissivity at certain frequencies, or that appear as anomalies in subsurface structures imaged with ground-penetrating radar.
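To illustrate what a single convolution filter does, the sketch below applies a fixed Sobel kernel to a synthetic image with a shadow boundary. In a deep network the kernels would be learned (and most frameworks actually compute cross-correlation); the fixed kernel, the "valid" border handling, and the function name `convolve2d_valid` are assumptions made for clarity.

```python
import numpy as np

def convolve2d_valid(image, kernel):
    """2-D convolution (kernel flipped, as in the mathematical definition), 'valid' mode."""
    image = np.asarray(image, dtype=float)
    k = np.flipud(np.fliplr(np.asarray(kernel, dtype=float)))
    kh, kw = k.shape
    H, W = image.shape
    out = np.empty((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * k)
    return out

# Sobel kernel responding to horizontal intensity changes (vertical edges),
# e.g., the boundary between a shadowed and a sunlit region.
SOBEL_X = np.array([[1, 0, -1],
                    [2, 0, -2],
                    [1, 0, -1]])
```

For an image that is dark on the left and bright on the right, the response is non-zero only in the columns straddling the boundary, which is the kind of spatial cue later layers can build on.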

2.2.2. ‘Vertical’ Fusion Techniques

For the classification step, all processed data are taken as input to a deep neural network that can be expanded to have an arbitrary number of input neurons (computational complexity notwithstanding). This allows each data set first to be abstracted by independent neuronal layers, after which the network learns associations between the different data sets. An example of a network that could be considered for this vertical fusion is shown in Figure 3, based on an architecture in [6]. The input at the bottom comes from the data extracted as in Figure 2.
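The "independent layers first, shared layers afterwards" structure can be sketched as the forward pass of a toy network in NumPy. This is an illustrative assumption, not the architecture of Figure 3 or [6]: one hidden layer per modality, a single shared output layer, random (untrained) weights, and the class name `BranchFusionNet` are all hypothetical. Training, for example by backpropagation, is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(x, 0.0)

def init_layer(n_in, n_out):
    # He-style random initialisation; real weights would be learned by training.
    return rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out)

class BranchFusionNet:
    """Forward pass of a toy fusion network: one independent hidden layer per
    modality (abstraction), then a shared layer over the concatenated branch
    outputs (association), ending in class probabilities."""

    def __init__(self, input_dims, branch_width, n_classes):
        self.order = sorted(input_dims)
        self.branches = {name: init_layer(input_dims[name], branch_width) for name in self.order}
        self.head = init_layer(branch_width * len(self.order), n_classes)

    def forward(self, inputs):
        hidden = [relu(inputs[n] @ self.branches[n][0] + self.branches[n][1]) for n in self.order]
        logits = np.concatenate(hidden, axis=-1) @ self.head[0] + self.head[1]
        logits -= logits.max(axis=-1, keepdims=True)  # numerical stability for softmax
        exp = np.exp(logits)
        return exp / exp.sum(axis=-1, keepdims=True)
```

Adding a modality only adds one entry to `input_dims` and one branch; the shared layer grows accordingly, which mirrors the "arbitrary number of input neurons" property described above.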

2.2.3. Dimensionality Reduction

The numerous data sources (e.g., rovers, satellites, and other similar missions) will generate high-dimensional data that must be correlated. Dimensionality reduction will allow a more comprehensible display of the results and reduce computational complexity.

We propose using orthogonalization methods such as the Gram–Schmidt process for dimensionality reduction of time series. This will combine features from different imaging modalities and generate more digestible and complete terrain data. The Gram–Schmidt process is an algorithm that obtains an orthogonal basis of vectors spanning a vector space in which the data can be seen as point clouds that can then be modeled and classified. Orthogonalization of this kind is a common building block in dimensionality reduction. In our process, we will apply it after determining the amount of data generated by applying ML models to each data type.
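As a minimal sketch, assuming each column of a matrix is one feature time series (possibly from a different modality), modified Gram–Schmidt can orthonormalize the columns and drop those that are numerically linear combinations of earlier ones. The relative tolerance and the function name `gram_schmidt_columns` are illustrative assumptions.

```python
import numpy as np

def gram_schmidt_columns(X, tol=1e-10):
    """Modified Gram-Schmidt on the columns of X (n_samples, n_features).

    Returns Q with orthonormal columns spanning the same space as X, and the
    indices of the original columns that contributed a new direction.
    Columns that are (numerically) linear combinations of earlier ones are dropped.
    """
    X = np.asarray(X, dtype=float)
    basis, kept = [], []
    for j in range(X.shape[1]):
        w = X[:, j].copy()
        for q in basis:
            w -= (q @ w) * q  # remove the component along each existing basis vector
        norm = np.linalg.norm(w)
        if norm > tol * max(1.0, np.linalg.norm(X[:, j])):  # relative tolerance
            basis.append(w / norm)
            kept.append(j)
    Q = np.column_stack(basis) if basis else np.zeros((X.shape[0], 0))
    return Q, kept
```

On its own this removes only redundant directions; reducing further requires keeping a subset of the orthogonal columns, and the result depends on column order, so informative features should be placed first.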

There are many different ML algorithms that could be considered for multimodal data fusion, and performance metrics of interest are needed to evaluate these algorithms in multimodal data fusion tasks. Such metrics may measure performance (error, accuracy, false positives, etc.) or complexity (number of operations, FLOPs, processing time needed to generate results, etc.). The metrics should be chosen according to the objectives of each analysis.
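A few of the metrics listed above can be computed as follows for a binary labelling (e.g., feature present or absent). The label encoding, the helper names `classification_metrics` and `timed`, and the use of wall-clock time as the processing-time measure are assumptions for illustration.

```python
import time
import numpy as np

def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, error rate, and false positives for a binary labelling."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    accuracy = float(np.mean(y_true == y_pred))
    negatives = y_true != positive
    false_pos = int(np.sum(negatives & (y_pred == positive)))
    fpr = false_pos / int(np.sum(negatives)) if np.any(negatives) else 0.0
    return {"accuracy": accuracy, "error": 1.0 - accuracy,
            "false_positives": false_pos, "false_positive_rate": fpr}

def timed(fn, *args, **kwargs):
    """Run fn and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start
```

Wall-clock time depends on hardware and load, so operation or FLOP counts are usually preferred when comparing algorithms across platforms.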

3. Conclusions

The modularity of the classification model allows the inclusion of different data modalities, irrespective of the parameters measured. It also makes it possible to compare different data processing techniques and quantify their impact on the quality of the analysis. Beyond the main application to lunar environments, this strategy can be used in other environmental awareness applications with multisensory data from other celestial bodies, such as Mars.

The automated recognition of features of interest will, in turn, generate vast amounts of data to support decision making. This can both reduce risk in exploration and optimize the selection of sites for further data acquisition. The degree to which this can be achieved with a reduced neural network size is an open question for future research.

Author Contributions

Conceptualization, J.A.M.-E. and S.G.-I.; methodology, A.C.C., M.M.-S. and S.G.-I.; investigation, A.C.C. and J.A.M.-E.; resources, M.M.-S., R.V.-H. and C.V.-R.; data curation, S.G.-I.; writing—original draft preparation, all authors; writing—review and editing, C.V.-R.; supervision, C.V.-R. and R.V.-H. All authors have read and agreed to the current version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Administrative and technical support was provided by Cesar Vargas-Rosales.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Amal, S.; Safarnejad, L.; Omiye, J.A.; Ghanzouri, I.; Cabot, J.H.; Ross, E.G. Use of Multi-Modal Data and Machine Learning to Improve Cardiovascular Disease Care. Front. Cardiovasc. Med. 2022, 9, 840262.

2. Zhang, Y.; Sheng, M.; Liu, X.; Wang, R.; Lin, W.; Ren, P.; Song, W. An heterogeneous multi-modal medical data fusion framework supporting hybrid data exploration. Health Inf. Sci. Syst. 2022, 10, 22.

3. Gao, J.; Li, P.; Chen, Z.; Zhang, J. A Survey on Deep Learning for Multimodal Data Fusion. Neural Comput. 2020, 32, 829–864.

4. Li, J.; Li, T.; Xie, P.; Du, S.; Teng, F.; Yang, X. Urban big data fusion based on deep learning: An overview. Inf. Fusion 2020, 53, 123–133.

5. Li, J.; Hong, D.; Gao, L.; Yao, J.; Zheng, K.; Zhang, B.; Chanussot, J. Deep learning in multimodal remote sensing data fusion: A comprehensive review. Comput. Vis. Pattern Recognit. 2022, 32, 829–864.

6. Stahlschmidt, S.R.; Ulfenborg, B.; Synnergren, J. Multimodal deep learning for biomedical data fusion: A review. Briefings Bioinform. 2022, 23, bbab569.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).