A Self-Learning and Adaptive Control Scheme for Phantom Prosthesis Control Using Combined Neuromuscular and Brain-Wave Bio-Signals †

Abstract

1. Introduction

2. Materials and Methods

2.1. Biosensors

2.1.1. Electromyography (EMG)

- EMG Sensors

2.1.2. Electroencephalography (EEG)

- EEG Sensors

2.2. Data Collection and Demographic Information

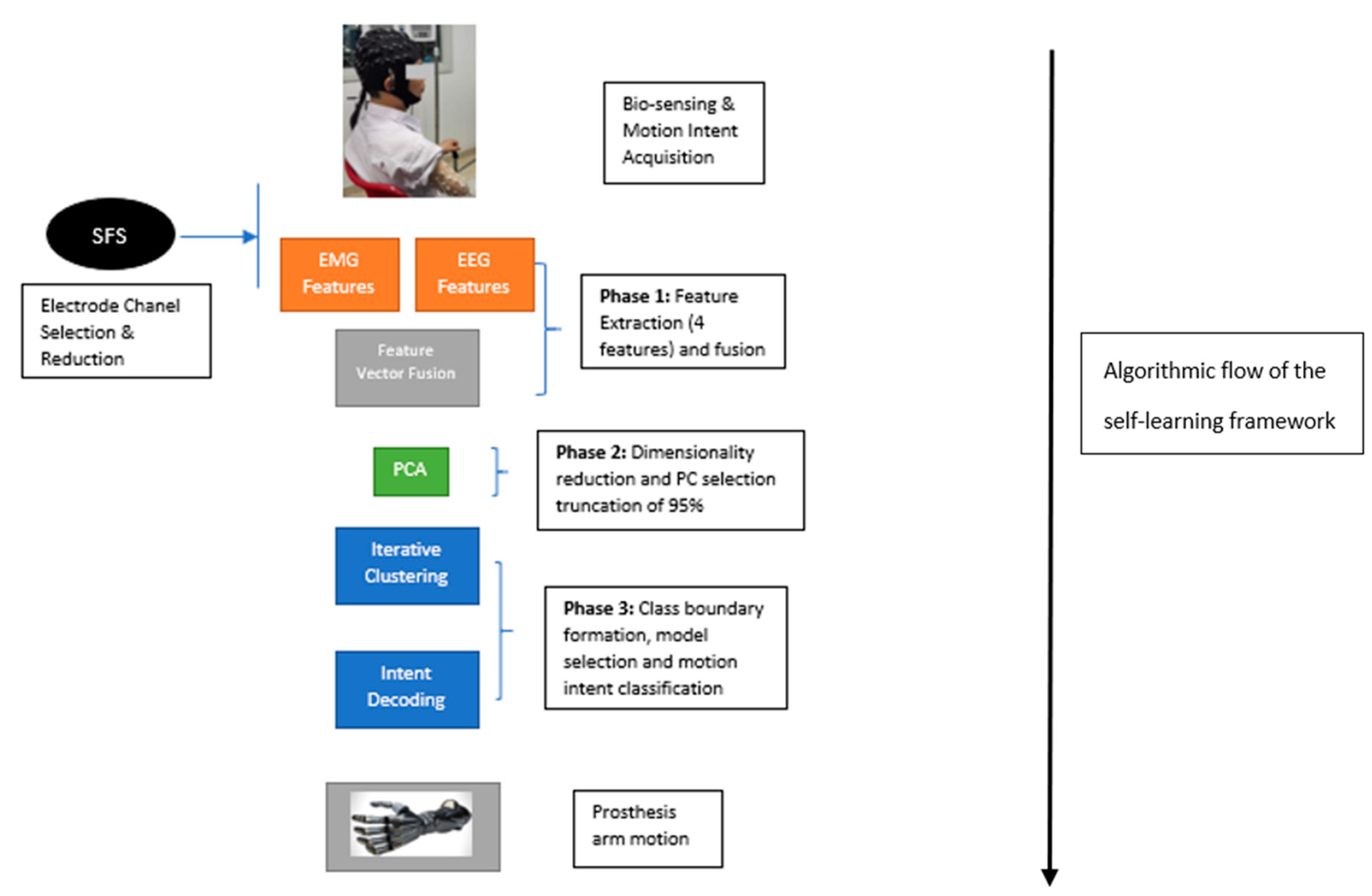

2.3. Self-Learning Controller Framework

- Electrode Channel Selection: The electrode channel selection was carried out separately as part of the framework described in this paper; it was performed by Li et al. [5]. It used the Sequential Forward Selection (SFS) algorithm, a variant of greedy search (Equation (3)): at each step, the candidate electrode channel (the jth element of the set k) that gives the highest classification accuracy, Acc, is added to the selected electrodes. Using this approach, Li et al. [5] identified the 10 electrodes from both the EMG and EEG biosensors. These electrodes formed the signal channels used for the signal processing stage and represent a first-stage dimensionality reduction process.
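The greedy selection described above can be sketched in a few lines of Python. This is an illustration only, not the implementation used by Li et al. [5]: the function name `sequential_forward_selection`, the channel names, and the `toy_accuracy` scoring function are all hypothetical. In practice, the accuracy would come from training and validating a classifier on each candidate subset.

```python
from typing import Callable, FrozenSet, List, Sequence


def sequential_forward_selection(
    channels: Sequence[str],
    accuracy: Callable[[FrozenSet[str]], float],
    n_select: int,
) -> List[str]:
    """Greedy SFS: repeatedly add the channel that maximises accuracy."""
    selected: List[str] = []
    remaining = list(channels)
    while remaining and len(selected) < n_select:
        # Score each candidate channel when added to the current subset.
        best = max(remaining, key=lambda c: accuracy(frozenset(selected + [c])))
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical stand-in for a validated classifier accuracy:
# each channel contributes a fixed, made-up amount.
TOY_GAIN = {"emg1": 0.30, "emg2": 0.25, "eeg1": 0.20, "eeg2": 0.05}


def toy_accuracy(subset: FrozenSet[str]) -> float:
    return sum(TOY_GAIN[c] for c in subset)


chosen = sequential_forward_selection(list(TOY_GAIN), toy_accuracy, n_select=3)
print(chosen)
```

Because the search is greedy, a channel is never removed once selected, so the result is not guaranteed to be the globally best subset.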

2.3.1. Feature Extraction and Feature Vector Fusion

2.3.2. Dimensionality Reduction

- Mean centring of the data to produce a standardised vector B

- Calculation of the covariance matrix $C = \frac{1}{n-1} B^{*} B$, where $C$ is the covariance matrix, $B$ is the mean-centred data, $*$ is the conjugate transpose operator, $n$ is the number of samples, and $n-1$ is used in place of $n$ owing to Bessel's correction, which negates the effect of bias on the sample variance.

- Calculation of the eigenvalues and eigenvectors of the covariance matrix, which diagonalises the covariance matrix $C$ and can be formulated as $V^{-1} C V = D$, where $D$ is the diagonal matrix of the eigenvalues of the covariance matrix and $V$ is the matrix of the right-side eigenvectors.

- Arrange eigenvalues and eigenvectors in descending order and calculate the energy for all columns in the feature vector.

- Truncate the eigenvectors whilst ensuring that 90% of the cumulative energy is preserved, and project the feature vector onto a new basis $G$, where the columns of $G$ represent the principal components (PCs).
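The steps above can be sketched with NumPy. This is a minimal illustration, assuming a real-valued feature matrix with one row per sample. The function name `pca_reduce`, the variable names, and the synthetic data are hypothetical and are not taken from the study.

```python
import numpy as np


def pca_reduce(X: np.ndarray, energy: float = 0.90):
    """Project X (n samples x d features) onto its leading principal components."""
    n = X.shape[0]
    B = X - X.mean(axis=0)                  # mean-centred data
    C = (B.conj().T @ B) / (n - 1)          # covariance with Bessel's correction
    eigvals, V = np.linalg.eigh(C)          # C is Hermitian, so eigh applies
    order = np.argsort(eigvals)[::-1]       # descending eigenvalue order
    eigvals, V = eigvals[order], V[:, order]
    cumulative = np.cumsum(eigvals) / eigvals.sum()
    # Smallest number of components whose cumulative energy reaches the threshold.
    m = min(int(np.searchsorted(cumulative, energy)) + 1, len(eigvals))
    W = V[:, :m]                            # truncated eigenvector basis
    return B @ W, W, cumulative[:m]


rng = np.random.default_rng(0)
# Synthetic stand-in for a fused feature matrix: 200 windows, 6 features,
# built from 2 latent factors plus small noise (illustrative only).
latent = rng.normal(size=(200, 2))
X = latent @ rng.normal(size=(2, 6)) + 0.01 * rng.normal(size=(200, 6))
projected, W, cum = pca_reduce(X)
print(projected.shape, cum)
```

`np.linalg.eigh` is used rather than `np.linalg.eig` because the covariance matrix is symmetric, which keeps the eigenvalues real and the eigenvectors orthonormal.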

2.3.3. Iterative Clustering and Motion Intent Classification

- K-means: An iterative clustering algorithm in which the data are segmented into K different classes using a centroid mean and a Euclidean distance metric. During iterations, the algorithm aims to maximise the distance between classes, and sorts data points into their respective classes by their proximity to the assigned cluster centroids within Euclidean space [10]. This approach uses the expectation-maximisation (E-M) framework, assuming a random initialisation. The E step involves the assignment of clusters using $\arg\min_{k} \lVert x_i - \mu_k \rVert^2$, where $x_i$ is a data point and $\mu_k$ is the centroid mean; the M step involves the recalculation of the class centroid using the expression $\mu_k = \sum_i w_{ik} x_i / \sum_i w_{ik}$, where $w_{ik}$ is a binary metric indicating whether or not a data point belongs to a certain class [10]. Because of the random cluster centroid initialisation, running the K-means algorithm at different times could yield different results. A model selection phase was therefore included, in which the selected model was the one that produced the lowest value of the performance index $J$, defined in Equation (8), across five separate runs of the algorithm. The number of clusters was defined a priori from the number of gesture motions performed.

  $$J = \sum_{i} \sum_{k} w_{ik} \lVert x_i - \mu_k \rVert^2 \qquad (8)$$

  where $w_{ik} = 1$ if the data point $x_i$ is assigned to the specific class $k$, and 0 otherwise.

- Gaussian mixture model (GMM): Working under a Gaussian assumption, the GMM is a probabilistic framework that extends the K-means algorithm. It offers flexibility between hard clustering, which sorts each data point into a single class, and soft clustering, which allows data to belong to more than one class [11]. The GMM is parametrised by a mixture proportion, mean and covariance. A multidimensional model of the GMM framework is given in Equations (9) and (10):

  $$p(x) = \sum_{i=1}^{k} \phi_i \, \mathcal{N}(x \mid \mu_i, \Sigma_i) \qquad (9)$$

  $$\mathcal{N}(x \mid \mu_i, \Sigma_i) = \frac{1}{\sqrt{(2\pi)^{d} \lvert \Sigma_i \rvert}} \exp\left(-\frac{1}{2} (x - \mu_i)^{T} \Sigma_i^{-1} (x - \mu_i)\right) \qquad (10)$$

  where $x$ is a data point of dimension $d$, $\mu_i$ is the mean, $\Sigma_i$ is the covariance, $\mathcal{N}$ symbolises a Gaussian distribution, $k$ is the number of mixture components, and $\phi_i$ are the various component weights, subject to the normalisation constraint in Equation (11), which ensures that the total probability sums to 1:

  $$\sum_{i=1}^{k} \phi_i = 1 \qquad (11)$$

  The learning model for the GMM is also based on the E-M framework, in which the model parameters are estimated iteratively using maximum likelihood estimation [11]. The hard clustering option was used in this work. As with the K-means algorithm, the number of clusters and mixture components were defined a priori, and a model selection phase was included, with the performance index defined in Equation (8) used for the selection process. The algorithm was run with a full covariance option and converged within 15 iterations on average.
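The K-means E and M steps, together with the model selection over five randomly initialised runs, can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the code used in the study: the function names `kmeans` and `best_of_runs`, the seeds, and the synthetic three-cluster data are hypothetical. The same selection loop could in principle wrap a GMM fit in place of `kmeans`.

```python
import numpy as np


def kmeans(X, k, rng, n_iter=100):
    """One K-means run from a random initialisation; returns labels, centroids, J."""
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # E step: assign each point to its nearest centroid (squared Euclidean).
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # M step: recompute each centroid as the mean of its assigned points;
        # an empty cluster keeps its previous centroid.
        new = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new, centroids):
            break
        centroids = new
    # Final assignment and performance index J (within-cluster squared distance).
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = d2.argmin(axis=1)
    J = d2[np.arange(len(X)), labels].sum()
    return labels, centroids, J


def best_of_runs(X, k, n_runs=5, seed=0):
    """Run K-means n_runs times and keep the run with the lowest J."""
    rng = np.random.default_rng(seed)
    runs = [kmeans(X, k, rng) for _ in range(n_runs)]
    return min(runs, key=lambda r: r[2]), [r[2] for r in runs]


# Synthetic stand-in data: three clusters of 30 points each (illustrative only).
rng_data = np.random.default_rng(1)
centres = np.array([[0.0, 0.0], [10.0, 10.0], [-10.0, 10.0]])
X = np.vstack([c + 0.5 * rng_data.normal(size=(30, 2)) for c in centres])
(labels, centroids, J), all_J = best_of_runs(X, k=3)
print(J, all_J)
```

Keeping the lowest-J run guards against a poor random initialisation, although it does not guarantee that the global optimum is found.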

3. Results

3.1. Intent Decoding

Extension towards an Adaptive Control Framework

4. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Fumero, R.; Costantino, M.L. Organi artificiali. In Storia della Bioingegneria; Patron: Bologna, Italy, 2001; pp. 341–365.

- Fougner, A.; Stavdahl, O.; Kyberd, P.J.; Losier, Y.G.; Parker, P.A. Control of upper limb prostheses: Terminology and proportional myoelectric control—A review. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 663–677.

- Nsugbe, E.; Phillips, C.; Fraser, M.; McIntosh, J. Gesture Recognition for Trans-humeral Prosthesis Control Using EMG and NIR. IET Cyber-Syst. Robot. 2020, 2, 122–131.

- Huang, Q.; Yang, D.; Jiang, L.; Zhang, H.; Liu, H.; Kotani, K. A Novel Unsupervised Adaptive Learning Method for Long-Term Electromyography (EMG) Pattern Recognition. Sensors (Basel) 2017, 17, 1370.

- Li, X.; Samuel, O.W.; Zhang, X.; Wang, H.; Fang, P.; Li, G. A motion-classification strategy based on sEMG-EEG signal combination for upper-limb amputees. J. NeuroEng. Rehabil. 2017, 14, 2.

- Rodriguez-Falces, J.; Navallas, J.; Malanda, A. EMG Modeling. In Computational Intelligence in Electromyography Analysis-A Perspective on Current Applications and Future Challenges; Naik, G.R., Ed.; InTech: London, UK, 2012.

- Darbas, M.; Lohrengel, S. Review on Mathematical Modelling of Electroencephalography (EEG). Jahresber. Dtsch. Math. Ver. 2019, 121, 3–39.

- Towards Data Science. Available online: https://towardsdatascience.com/dimensionality-reduction-for-machine-learning-80a46c2ebb7e (accessed on 12 September 2020).

- Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. T. R. Soc. A 2016, 374.

- Towards Data Science. Available online: https://towardsdatascience.com/k-means-clustering-algorithm-applications-evaluation-methods-and-drawbacks-aa03e644b48a (accessed on 12 September 2020).

- Towards Data Science. Available online: https://towardsdatascience.com/gaussian-mixture-models-explained-6986aaf5a95 (accessed on 12 September 2020).

- Samuel, O.W.; Zhou, H.; Li, X.; Wang, H.; Zhang, H.; Sangaiah, A.K.; Li, G. Pattern recognition of electromyography signals based on novel time domain features for amputees’ limb motion classification. Comput. Electr. Eng. 2018, 67, 646–655.

- Chen, X.; Zhang, D.; Zhu, X. Application of a self-enhancing classification method to electromyography pattern recognition for multifunctional prosthesis control. J. Neuroeng. Rehabil. 2013, 10, 1–13.

- Asogbon, M.G.; Samuel, O.W.; Geng, Y.; Oluwagbemi, O.; Ning, J.; Chen, S.; Ganesh, N.; Feng, P.; Li, G. Towards resolving the co-existing impacts of multiple dynamic factors on the performance of EMG-pattern recognition based prostheses. Comp. Meth. Prog. Biol. 2020, 184, 105278.

| | GMM-EMG Only | K-Means-EMG Only | GMM-EEG Only | K-Means-EEG Only | GMM-EMG-EEG | K-Means-EMG-EEG |
|---|---|---|---|---|---|---|
| Cluster Model 1 Accuracy | 83% | 81% | 64% | 63% | 68% | 83% |
| Cluster Model 2 Accuracy | 99% | 81% | 64% | 58% | 98% | 83% |
| Cluster Model 3 Accuracy | 99% | 81% | 64% | 58% | 98% | 83% |
| Cluster Model 4 Accuracy | 99% | 81% | 64% | 58% | 98% | 83% |
| Cluster Model 5 Accuracy | 99% | 81% | 64% | 58% | 70% | 83% |
| Hold-Out Test Accuracy | 100% | 80% | 90% | 60% | 100% | 80% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nsugbe, E.; Samuel, O.W.; Asogbon, M.G.; Li, G. A Self-Learning and Adaptive Control Scheme for Phantom Prosthesis Control Using Combined Neuromuscular and Brain-Wave Bio-Signals. Eng. Proc. 2020, 2, 59. https://doi.org/10.3390/ecsa-7-08169
