Abstract

In this paper, we study autoregressive (AR) models with Cauchy-distributed innovations. In an AR model, the response variable depends on its previous values and a stochastic term (the innovation). In the classical version, AR models are based on the normal distribution, which cannot capture extreme values or asymmetric behavior in the data. In this work, we consider the AR model with innovations drawn from the Cauchy distribution, which is heavy-tailed. We derive closed forms for the estimates of the model parameters using the expectation-maximization (EM) algorithm. The efficacy of the estimation procedure is demonstrated on simulated data, and the proposed EM algorithm is compared with the maximum likelihood (ML) estimation method. Moreover, we discuss the joint characteristic function of the AR(1) model with Cauchy innovations, which can also be used to estimate the model parameters via the empirical characteristic function.

1. Introduction

Autoregressive (AR) models with stable and heavy-tailed innovations are of great interest in time series modeling. These distributions can readily accommodate the asymmetry, skewness, and outliers present in time series data. The Cauchy distribution is a special case of the stable distribution with undefined expected value, variance, and higher-order moments. The Cauchy distribution and its mixtures have many applications in economics [1], seismology [2], biology [3], and various other fields, but only a few studies have been conducted on time series models with Cauchy errors. In [4], the maximum likelihood (ML) estimation of the AR(1) model with Cauchy errors is studied.

The standard estimation techniques for the AR(p) model, particularly the Yule–Walker method and the conditional least squares method, cannot be used with Cauchy innovations because the second-order moments of the Cauchy distribution are infinite. Therefore, it is worthwhile to study and assess alternative estimation techniques for the AR(p) model with Cauchy innovations. In the literature, several estimation techniques have been proposed to estimate the parameters of AR models with infinite variance errors (see, e.g., [5,6,7]).

In this paper, we propose to use the EM algorithm to estimate the parameters of the innovation distribution and of the model simultaneously. The EM algorithm is a general iterative algorithm for parameter estimation which alternates between two steps, namely the expectation step (E-step) and the maximization step (M-step) [8]. It is a numerically stable alternative to the direct numerical optimization of the likelihood function [9]. We also provide the formula based on the characteristic function (CF) and empirical characteristic function (ECF) of the Cauchy distribution for AR(p) model estimation. The idea of using the ECF in a time series stable ARMA model has been discussed in [10].

The remainder of the paper is organized as follows. In Section 2, we present a brief overview of the Cauchy AR(p) model, followed by a discussion of estimation techniques, namely the EM algorithm and estimation by CF and ECF. Section 3 checks the efficacy of the estimation procedure on simulated data. We also present the comparative study, where the proposed technique is compared with ML estimation for Cauchy innovations. Section 4 concludes the paper.

2. Cauchy Autoregressive Model

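We consider the AR(p) univariate stationary time series $\{Y_t\}$, $t \in \mathbb{N}$, with Cauchy innovations defined as

$$Y_t = \sum_{i=1}^{p} \rho_i Y_{t-i} + \varepsilon_t = \boldsymbol{\rho}^T \mathbf{Y}_{t-1} + \varepsilon_t, \tag{1}$$

where $\boldsymbol{\rho} = (\rho_1, \rho_2, \ldots, \rho_p)^T$ is a p-dimensional column vector, $\mathbf{Y}_{t-1} = (Y_{t-1}, Y_{t-2}, \ldots, Y_{t-p})^T$ is a vector of p lag terms, and $\{\varepsilon_t\}$, $t \in \mathbb{N}$, are i.i.d. innovations distributed as Cauchy$(\alpha, \gamma)$. The pdf of Cauchy$(\alpha, \gamma)$ [11] is

$$f(x; \alpha, \gamma) = \frac{\gamma}{\pi\left[\gamma^2 + (x - \alpha)^2\right]}, \quad \gamma > 0,\ \alpha \in \mathbb{R},\ x \in \mathbb{R}.$$

The conditional distribution of $Y_t$ given the preceding data $\mathcal{F}_{t-1} = (Y_{t-1}, Y_{t-2}, \ldots, Y_1)^T$ is given by [4]

$$p(Y_t \mid \boldsymbol{\rho}, \alpha, \gamma, \mathcal{F}_{t-1}) = \frac{\gamma}{\pi\left[\gamma^2 + \left(y_t - \boldsymbol{\rho}^T \mathbf{y}_{t-1} - \alpha\right)^2\right]},$$

where $\mathbf{y}_{t-1}$ is the realization of $\mathbf{Y}_{t-1}$. In the next subsection, we propose methods to estimate the model parameters $\boldsymbol{\rho}$ and the innovation parameters $\alpha$ and $\gamma$ simultaneously.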

2.1. Parameter Estimation Using EM Algorithm

We estimate the parameters of the AR(p) model using an EM algorithm which maximizes the likelihood function iteratively. Further, we describe the time series through its characteristic function (CF) and discuss an estimation method based on the CF and ECF. Recently, the exponential-squared estimator for AR models with heavy-tailed errors was introduced and proven to be consistent under some regularity conditions [5]; similarly, the self-weighted least absolute deviation estimation method was studied for the infinite variance AR model [12]. The ML estimation of AR models with Cauchy errors with intercept and with linear trend has also been studied, and the estimator of the AR coefficient is shown to be consistent under some conditions [4]. For the AR(p) model with Cauchy innovations with n samples, the log likelihood is defined as

$$l(\theta) = n \log \gamma - n \log \pi - \sum_{t=1}^{n} \log\left(\gamma^2 + \left(y_t - \boldsymbol{\rho}^T \mathbf{y}_{t-1} - \alpha\right)^2\right),$$

where $\theta = (\alpha, \gamma, \boldsymbol{\rho}^T)$ collects the unknown parameters.
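As a small illustration, the log likelihood of the Cauchy AR(p) model can be evaluated directly from the conditional density. The sketch below is not taken from the paper: the function name `cauchy_ar_loglik` is hypothetical, and it assumes that the sum conditions on the first p observations, so it runs over the remaining ones only.

```python
import math


def cauchy_ar_loglik(y, rho, alpha, gamma):
    """Conditional log likelihood of an AR(p) model with Cauchy(alpha, gamma) innovations.

    Assumption: the first p observations are treated as fixed initial values.
    """
    p = len(rho)
    total = 0.0
    for t in range(p, len(y)):
        # Linear predictor rho' (y_{t-1}, ..., y_{t-p}).
        pred = sum(rho[i] * y[t - 1 - i] for i in range(p))
        resid = y[t] - pred - alpha
        # log of gamma / (pi * (gamma^2 + resid^2)).
        total += math.log(gamma) - math.log(math.pi) - math.log(gamma**2 + resid**2)
    return total
```

Such a function is convenient for comparing the fits returned by different estimation routines on the same series.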

Proposition 1.

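Consider the AR(p) time-series model given in Equation (1), where the error terms follow Cauchy$(\alpha, \gamma)$. The maximum likelihood estimates of the model parameters using the EM algorithm are as follows

$$\hat{\boldsymbol{\rho}}^{(k+1)} = \left(\sum_{t=1}^{n} s_t^{(k)} \mathbf{y}_{t-1} \mathbf{y}_{t-1}^T\right)^{-1} \sum_{t=1}^{n} s_t^{(k)} \left(y_t - \hat{\alpha}^{(k)}\right) \mathbf{y}_{t-1},$$

$$\hat{\alpha}^{(k+1)} = \frac{\sum_{t=1}^{n} s_t^{(k)} \left(y_t - \hat{\boldsymbol{\rho}}^{(k+1)T} \mathbf{y}_{t-1}\right)}{\sum_{t=1}^{n} s_t^{(k)}}, \qquad \hat{\gamma}^{(k+1)} = \sqrt{\frac{n}{\sum_{t=1}^{n} s_t^{(k)}}},$$

where $s_t^{(k)} = \dfrac{2}{\left(y_t - \hat{\boldsymbol{\rho}}^{(k)T} \mathbf{y}_{t-1} - \hat{\alpha}^{(k)}\right)^2 + \left(\hat{\gamma}^{(k)}\right)^2}$.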

Proof.

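Consider the AR(p) model

$$Y_t = \boldsymbol{\rho}^T \mathbf{Y}_{t-1} + \varepsilon_t, \quad t = 1, 2, \ldots, n,$$

where $\varepsilon_t$ follows the Cauchy distribution Cauchy$(\alpha, \gamma)$. Let $(\varepsilon_t, V_t)$ for $t = 1, 2, \ldots, n$ denote the complete data for the innovations $\varepsilon$. The observed data $\varepsilon_t$ are assumed to be from Cauchy$(\alpha, \gamma)$ and the unobserved data $V_t$ follow the inverse gamma distribution IG$(1/2, \gamma^2/2)$. A random variable $V \sim$ IG$(a, b)$ if its pdf is given by

$$f_V(v; a, b) = \frac{b^a}{\Gamma(a)} v^{-a-1} e^{-b/v}, \quad a > 0,\ b > 0,\ v > 0.$$

We can rewrite $\varepsilon_t$ as $\varepsilon_t = Y_t - \boldsymbol{\rho}^T \mathbf{Y}_{t-1}$. The stochastic relation $\varepsilon = \alpha + \sqrt{V} Z$ with $Z \sim N(0, 1)$, i.e., standard normal, and $V \sim$ IG$(1/2, \gamma^2/2)$ is used to generate the Cauchy$(\alpha, \gamma)$ distribution. Then, the conditional distribution of $\varepsilon$ given $V = v$ is

$$f(\varepsilon \mid v) = \frac{1}{\sqrt{2 \pi v}} \exp\left(-\frac{(\varepsilon - \alpha)^2}{2v}\right).$$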

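Now, we need to estimate the unknown parameters $\theta = (\alpha, \gamma, \boldsymbol{\rho}^T)$. To apply the EM algorithm for estimation, we first find the conditional expectation of the log-likelihood of the complete data $(\varepsilon, V)$ with respect to the conditional distribution of V given $\varepsilon$. As the unobserved data are assumed to be from IG$(1/2, \gamma^2/2)$, the posterior distribution is again an inverse gamma, i.e.,

$$V \mid \varepsilon, \theta \sim \text{IG}\left(1, \frac{(\varepsilon - \alpha)^2 + \gamma^2}{2}\right).$$

The following conditional inverse first moment and conditional expectation of $\log V$ will be used in calculating the conditional expectation of the log-likelihood function:

$$E\left(V^{-1} \mid \varepsilon\right) = \frac{2}{(\varepsilon - \alpha)^2 + \gamma^2}, \qquad E\left(\log V \mid \varepsilon\right) = \log\left(\frac{(\varepsilon - \alpha)^2 + \gamma^2}{2}\right) - \psi(1),$$

where $\psi$ denotes the digamma function.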
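The inverse gamma posterior and the inverse first moment can be verified in a few lines. The derivation below is a sketch under the assumption that $\varepsilon \mid V = v \sim N(\alpha, v)$ and $V \sim$ IG$(1/2, \gamma^2/2)$; the symbol $b$ is shorthand introduced here and does not appear in the original text.

```latex
\begin{aligned}
f(v \mid \varepsilon)
  &\propto f(\varepsilon \mid v)\, f_V(v)
   \propto v^{-1/2} e^{-(\varepsilon-\alpha)^2/(2v)} \cdot v^{-3/2} e^{-\gamma^2/(2v)}
   = v^{-2} e^{-b/v},
   \qquad b = \frac{(\varepsilon-\alpha)^2+\gamma^2}{2}, \\
E\left(V^{-1} \mid \varepsilon\right)
  &= \int_0^\infty v^{-1}\, b\, v^{-2} e^{-b/v}\, dv
   = \int_0^\infty b\, u\, e^{-bu}\, du
   = \frac{1}{b}
   = \frac{2}{(\varepsilon-\alpha)^2+\gamma^2}.
\end{aligned}
```

The kernel $v^{-2} e^{-b/v}$ is that of IG$(1, b)$, and the second line uses the substitution $u = 1/v$.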

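The complete data likelihood is given by

$$L(\theta) = \prod_{t=1}^{n} f(\varepsilon_t, v_t) = \prod_{t=1}^{n} f(\varepsilon_t \mid v_t)\, f_V(v_t) = \prod_{t=1}^{n} \frac{\gamma}{2 \pi v_t^2} \exp\left(-\frac{(\varepsilon_t - \alpha)^2 + \gamma^2}{2 v_t}\right).$$

The log likelihood function will be

$$l(\theta) = n \log \gamma - n \log(2\pi) - 2 \sum_{t=1}^{n} \log v_t - \sum_{t=1}^{n} \frac{(\varepsilon_t - \alpha)^2 + \gamma^2}{2 v_t}.$$

Now, we will use the relation $\varepsilon_t = y_t - \boldsymbol{\rho}^T \mathbf{y}_{t-1}$ in further calculations. In the first step at the kth iteration, the E-step of the EM algorithm, we need to compute the expected value of the complete data log likelihood, known as $Q(\theta \mid \theta^{(k)})$, which is expressed as

$$Q(\theta \mid \theta^{(k)}) = n \log \gamma - n \log(2\pi) - 2 \sum_{t=1}^{n} E\left(\log V_t \mid \varepsilon_t, \theta^{(k)}\right) - \frac{1}{2} \sum_{t=1}^{n} s_t^{(k)} \left[\left(y_t - \boldsymbol{\rho}^T \mathbf{y}_{t-1} - \alpha\right)^2 + \gamma^2\right],$$

where $s_t^{(k)} = E\left(V_t^{-1} \mid \varepsilon_t, \theta^{(k)}\right) = \dfrac{2}{\left(y_t - \hat{\boldsymbol{\rho}}^{(k)T} \mathbf{y}_{t-1} - \hat{\alpha}^{(k)}\right)^2 + \left(\hat{\gamma}^{(k)}\right)^2}$. In the next M-step, we estimate the parameters by maximizing the Q function using the equations below:

$$\frac{\partial Q}{\partial \boldsymbol{\rho}} = \sum_{t=1}^{n} s_t^{(k)} \left(y_t - \boldsymbol{\rho}^T \mathbf{y}_{t-1} - \alpha\right) \mathbf{y}_{t-1} = 0, \qquad \frac{\partial Q}{\partial \alpha} = \sum_{t=1}^{n} s_t^{(k)} \left(y_t - \boldsymbol{\rho}^T \mathbf{y}_{t-1} - \alpha\right) = 0, \qquad \frac{\partial Q}{\partial \gamma} = \frac{n}{\gamma} - \gamma \sum_{t=1}^{n} s_t^{(k)} = 0.$$

Solving the above equations at each iteration, we find the following closed form estimates of the parameters at the $(k+1)$th iteration:

$$\hat{\boldsymbol{\rho}}^{(k+1)} = \left(\sum_{t=1}^{n} s_t^{(k)} \mathbf{y}_{t-1} \mathbf{y}_{t-1}^T\right)^{-1} \sum_{t=1}^{n} s_t^{(k)} \left(y_t - \hat{\alpha}^{(k)}\right) \mathbf{y}_{t-1},$$

$$\hat{\alpha}^{(k+1)} = \frac{\sum_{t=1}^{n} s_t^{(k)} \left(y_t - \hat{\boldsymbol{\rho}}^{(k+1)T} \mathbf{y}_{t-1}\right)}{\sum_{t=1}^{n} s_t^{(k)}}, \qquad \hat{\gamma}^{(k+1)} = \sqrt{\frac{n}{\sum_{t=1}^{n} s_t^{(k)}}},$$

where $s_t^{(k)}$ is the conditional inverse moment defined above. □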
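The E-step and M-step can be sketched in code as follows. This is a minimal illustration rather than the authors' implementation: it assumes the mixing distribution $V \sim$ IG$(1/2, \gamma^2/2)$, so that the E-step weight is $s_t = 2 / (\text{residual}_t^2 + \gamma^2)$, and it updates $\boldsymbol{\rho}$ with the previous $\alpha$ before updating $\alpha$ (a conditional maximization). The function names, the starting values, and the relative-change stopping rule with tolerance `tol` are all assumptions made for this sketch.

```python
import math


def solve_linear(a, b):
    """Solve the small system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [a[i][:] + [b[i]] for i in range(n)]  # augmented matrix
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[piv] = m[piv], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def em_cauchy_ar(y, p, max_iter=500, tol=1e-6):
    """EM-type estimation of (rho, alpha, gamma) for an AR(p) model with Cauchy innovations."""
    lags = [y[t - p:t][::-1] for t in range(p, len(y))]  # (y_{t-1}, ..., y_{t-p})
    resp = y[p:]
    n = len(resp)
    rho, alpha, gamma = [0.0] * p, 0.0, 1.0  # crude starting values (assumption)
    for _ in range(max_iter):
        # E-step: s_t = E[1/V_t | eps_t] under the current parameters.
        resid = [r - sum(c * l for c, l in zip(rho, x)) - alpha
                 for r, x in zip(resp, lags)]
        s = [2.0 / (e * e + gamma * gamma) for e in resid]
        # M-step for rho: weighted least squares with the previous alpha.
        a = [[sum(w * x[i] * x[j] for w, x in zip(s, lags)) for j in range(p)]
             for i in range(p)]
        b = [sum(w * (r - alpha) * x[i] for w, r, x in zip(s, resp, lags))
             for i in range(p)]
        new_rho = solve_linear(a, b)
        # M-step for alpha (weighted mean of residuals) and gamma.
        fitted = [sum(c * l for c, l in zip(new_rho, x)) for x in lags]
        new_alpha = sum(w * (r - f) for w, r, f in zip(s, resp, fitted)) / sum(s)
        new_gamma = math.sqrt(n / sum(s))
        old, new = rho + [alpha, gamma], new_rho + [new_alpha, new_gamma]
        rho, alpha, gamma = new_rho, new_alpha, new_gamma
        # Stop when the largest parameter change is small relative to the old values.
        change = max(abs(u - v) for u, v in zip(new, old)) / max(abs(v) for v in old)
        if change < tol:
            break
    return rho, alpha, gamma
```

Calling `em_cauchy_ar(y, 2)` on a series `y` returns the estimated AR coefficients together with the location and scale of the innovations, which reflects the point that model and innovation parameters are obtained in the same iteration.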

2.2. Characteristic Function for Estimation

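Thus far, we have considered the conditional distribution of $Y_t$ given the preceding data $\mathcal{F}_{t-1}$. Now, we include the dependency of the time series by defining the variable $\mathbf{X}_j = (Y_j, Y_{j+1}, \ldots, Y_{j+p})^T$ for $j = 1, 2, \ldots, n-p$. Each variable $\mathbf{X}_j$ shares p terms with the adjacent variable. The distribution of $\mathbf{X}_j$ will be multivariate Cauchy with dimension $p+1$.

The CF of each $\mathbf{X}_j$ is $c(\mathbf{r}; \theta) = E\left[\exp\left(i \mathbf{r}^T \mathbf{X}_j\right)\right]$ and the ECF is $c_n(\mathbf{r}) = \frac{1}{n-p} \sum_{j=1}^{n-p} \exp\left(i \mathbf{r}^T \mathbf{x}_j\right)$, where $\mathbf{r} = (r_1, r_2, \ldots, r_{p+1})^T$ and $\mathbf{x}_j$ is the realization of $\mathbf{X}_j$.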
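The overlapping blocks and their ECF are straightforward to compute. The following sketch assumes blocks of $p+1$ consecutive observations and averages over all available blocks; the function names `overlapping_blocks` and `ecf` are hypothetical.

```python
import cmath


def overlapping_blocks(y, p):
    """Return the blocks (y_j, ..., y_{j+p}) for every admissible start index j."""
    return [y[j:j + p + 1] for j in range(len(y) - p)]


def ecf(blocks, r):
    """Empirical characteristic function of the blocks evaluated at the vector r."""
    total = 0j
    for x in blocks:
        total += cmath.exp(1j * sum(ri * xi for ri, xi in zip(r, x)))
    return total / len(blocks)
```

Because each summand has modulus one, the ECF stays bounded even though the Cauchy innovations have no finite moments.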

To estimate the parameters using CF and ECF, we make sure that the joint CF of the AR(p) model has a closed form. In the next result, the closed form expression for the joint CF of the AR(1) model with Cauchy innovations is given.

Proposition 2.

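The joint CF of $(Y_j, Y_{j+1})$ for the stationary AR(1) model with Cauchy$(\alpha, \gamma)$ innovations and $|\rho| < 1$ is

$$c(r_1, r_2; \theta) = \exp\left\{ i \alpha \left[\frac{r_1 + \rho r_2}{1 - \rho} + r_2\right] - \gamma \left[\frac{|r_1 + \rho r_2|}{1 - |\rho|} + |r_2|\right] \right\}.$$

Proof.

For the stationary AR(1) model $Y_t = \rho Y_{t-1} + \varepsilon_t$, we can rewrite it as

$$Y_t = \sum_{k=0}^{\infty} \rho^k \varepsilon_{t-k}.$$

Note that the $\varepsilon_t$ are i.i.d. from the Cauchy$(\alpha, \gamma)$ distribution, and the CF of Cauchy$(\alpha, \gamma)$ is $\phi_\varepsilon(u) = \exp(i \alpha u - \gamma |u|)$ [11]. Then, the joint CF of $(Y_j, Y_{j+1})$ is calculated as follows:

$$\begin{aligned}
c(r_1, r_2; \theta) &= E\left[\exp\left(i r_1 Y_j + i r_2 (\rho Y_j + \varepsilon_{j+1})\right)\right] = E\left[\exp\left(i (r_1 + \rho r_2) Y_j\right)\right] \phi_\varepsilon(r_2) \\
&= \prod_{k=0}^{\infty} \phi_\varepsilon\left(\rho^k (r_1 + \rho r_2)\right) \phi_\varepsilon(r_2) = \exp\left\{ i \alpha \left[\frac{r_1 + \rho r_2}{1 - \rho} + r_2\right] - \gamma \left[\frac{|r_1 + \rho r_2|}{1 - |\rho|} + |r_2|\right] \right\},
\end{aligned}$$

where the second equality uses the independence of $\varepsilon_{j+1}$ and $Y_j$, and the last uses $\sum_{k \ge 0} \rho^k = 1/(1 - \rho)$ and $\sum_{k \ge 0} |\rho|^k = 1/(1 - |\rho|)$.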

□
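A closed-form joint CF of this kind is easy to evaluate numerically. The sketch below assumes the pair $(Y_j, Y_{j+1})$, the Cauchy CF $\exp(i \alpha u - \gamma |u|)$, and $|\rho| < 1$, so that the stationary marginal of $Y_j$ is Cauchy with location $\alpha / (1 - \rho)$ and scale $\gamma / (1 - |\rho|)$; the function names are hypothetical.

```python
import cmath


def cauchy_cf(u, alpha, gamma):
    """Characteristic function of Cauchy(alpha, gamma) at u."""
    return cmath.exp(1j * alpha * u - gamma * abs(u))


def ar1_joint_cf(r1, r2, rho, alpha, gamma):
    """Joint CF of (Y_j, Y_{j+1}) for a stationary Cauchy AR(1) model with |rho| < 1."""
    u = r1 + rho * r2  # coefficient multiplying Y_j after substituting Y_{j+1}
    marginal = cmath.exp(1j * alpha * u / (1 - rho) - gamma * abs(u) / (1 - abs(rho)))
    return marginal * cauchy_cf(r2, alpha, gamma)
```

Setting `r2 = 0` recovers the marginal CF of `Y_j`, and choosing `r1 = -rho * r2` isolates the CF of the innovation, which gives two quick sanity checks on the expression.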

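The joint CF for a higher dimension can be obtained in a similar manner. Now, the model parameters can be estimated by solving the following integral equation with the CF $c(\mathbf{r}; \theta)$ and the ECF $c_n(\mathbf{r})$ of the blocks $\mathbf{x}_j = (y_j, \ldots, y_{j+p})^T$, as defined in [10]:

$$\int \cdots \int w_\theta(\mathbf{r}) \left(c_n(\mathbf{r}) - c(\mathbf{r}; \theta)\right) d\mathbf{r} = 0, \tag{5}$$

where the optimal weight function is

$$w_\theta^*(\mathbf{r}) = \frac{1}{(2\pi)^{p+1}} \int \cdots \int \exp\left(-i \mathbf{r}^T \mathbf{x}_j\right) \frac{\partial \log f(y_{j+p} \mid y_j, \ldots, y_{j+p-1})}{\partial \theta}\, dy_j \cdots dy_{j+p}. \tag{6}$$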

Remark 1.

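For a stationary AR(l) process with $l \le p$, the ECF estimator defined by Equation (5) with the optimal weight function defined in Equation (6) is a conditional ML (CML) estimator and hence asymptotically efficient. The conditional log pdf for the Cauchy distribution is:

$$\log f(y_t \mid \mathcal{F}_{t-1}) = \log \gamma - \log \pi - \log\left(\gamma^2 + \left(y_t - \boldsymbol{\rho}^T \mathbf{y}_{t-1} - \alpha\right)^2\right).$$

The proof is similar to the proof of the corresponding proposition in [10].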

3. Simulation Study

In this section, we assess the proposed model and the introduced estimation technique using a simulated data set. We discuss the estimation procedure for the AR(2) model with Cauchy innovations. The AR model defined in (1) is simulated with $\rho_1$ and $\rho_2$ as model parameters. We generate 1000 trajectories, each of length 500, with Cauchy innovations obtained from the normal variance-mean mixture form with $Z \sim N(0, 1)$, i.e., standard normal, and $V \sim$ IG$(1/2, \gamma^2/2)$. We then use the following simulation steps to generate the Cauchy innovations:

- step 1: Generate a standard normal variate Z;

- step 2: Generate an inverse gamma random variate $V \sim$ IG$(1/2, \gamma^2/2)$ for the chosen scale $\gamma$;

- step 3: Using the relation $\varepsilon = \alpha + \sqrt{V} Z$, simulate the Cauchy innovations for the chosen location $\alpha$;

- step 4: Generate the time series data from Equation (1) with the chosen model parameters $\rho_1$ and $\rho_2$.
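The four steps above can be sketched as follows. The inverse gamma draw is obtained as the reciprocal of a gamma variate, the series is started from zero initial values, and the parameter values in the usage lines are purely illustrative; they are not taken from the paper, and the function names are hypothetical.

```python
import math
import random


def simulate_cauchy_innovations(n, alpha, gamma, rng):
    """Draw n Cauchy(alpha, gamma) variates through eps = alpha + sqrt(V) * Z."""
    eps = []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)  # step 1: Z ~ N(0, 1)
        # step 2: V ~ IG(1/2, gamma^2/2), i.e. 1/V ~ Gamma(shape 1/2, scale 2/gamma^2);
        # random.gammavariate takes (shape, scale).
        v = 1.0 / rng.gammavariate(0.5, 2.0 / gamma**2)
        eps.append(alpha + math.sqrt(v) * z)  # step 3
    return eps


def simulate_ar(rho, eps):
    """Step 4: build the AR(p) series from the innovations, with zero initial values."""
    p = len(rho)
    y = [0.0] * p
    for e in eps:
        lags = y[-1:-p - 1:-1]  # (y_{t-1}, ..., y_{t-p})
        y.append(sum(r * l for r, l in zip(rho, lags)) + e)
    return y[p:]


# Illustrative usage with hypothetical parameter values.
rng = random.Random(0)
innovations = simulate_cauchy_innovations(500, alpha=1.0, gamma=2.0, rng=rng)
series = simulate_ar([0.5, 0.3], innovations)
```

Passing an explicit `random.Random` instance keeps each simulated trajectory reproducible, which is useful when many trajectories are generated for boxplots.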

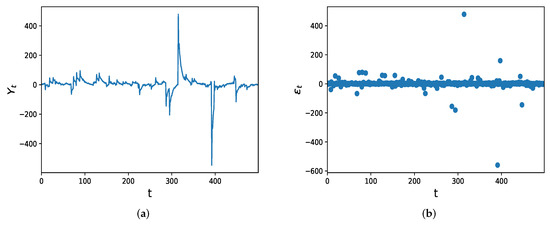

The exemplary time series data plot and the scatter plot of the innovation terms are shown in Figure 1. We apply the discussed EM algorithm to estimate the model parameters and the distribution parameters. The algorithm is terminated when the relative change in the parameter estimates between successive iterations becomes sufficiently small, a stopping criterion that is commonly used in the literature.

Figure 1.

(a) The data plot of exemplary time series of length and (b) the scatter plot of the corresponding innovation terms of the AR(2) model with Cauchy innovations. The chosen parameters of the model are and .

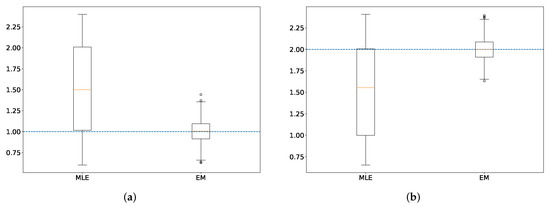

We compare the estimates of the Cauchy parameters obtained with the EM algorithm and with maximum likelihood (ML) estimation. The ML estimates are computed using the function “mlcauchy” in R, which uses the exponential transform of the location parameter and performs non-linear minimization by a Newton-type algorithm. The comparison of the estimates of Cauchy$(\alpha, \gamma)$ is shown in the boxplots in Figure 2. From the boxplots, we find that the EM estimates lie closer to the true values of the Cauchy$(\alpha, \gamma)$ parameters than the ML estimates. It is possible that the ML method would achieve better results if a different optimization algorithm or function were used for estimation.

Figure 2.

Boxplots of the estimates of the AR(2) model’s parameters with theoretical values (a) and (b) represented with blue dotted lines. The boxplots are created using 1000 trajectories, each of length 500.

4. Conclusions and Future Scope

In this work, we derive closed-form estimates for the AR model with Cauchy innovations using an EM algorithm. The performance of the proposed algorithm is compared with the ML method using simulated data, where the ML estimates are obtained using an existing function in R. A further benefit of the EM algorithm is that it estimates the model parameters and the innovation parameters simultaneously. In the simulation study, the boxplots indicate that the EM algorithm outperforms the ML method. Further, we discuss another approach, based on the CF, to estimate the parameters of AR models with stable innovations. In the future, we plan to study and compare the proposed algorithm and the ECF-based estimation method with the existing techniques in [5,6,7] for an AR model with infinite variance. Further, real-life phenomena can be studied using the proposed model and methods.

Author Contributions

Conceptualization, A.K. and M.S.D.; Methodology, A.K. and M.S.D.; Writing - Original Draft Preparation, M.S.D. and A.K.; Writing - Review & Editing, A.K. and M.S.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

M.S.D. would like to thank her institute Indian Institute of Technology Ropar, India and the Ministry of Education (MoE), Government of India, for supporting her research work.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Liu, T.; Zhang, P.; Dai, W.S.; Xie, M. An intermediate distribution between Gaussian and Cauchy distributions. Phys. A Stat. Mech. Appl. 2012, 391, 5411–5421.

2. Kagan, Y.Y. Correlations of earthquake focal mechanism. Geophys. J. Int. 1992, 110, 305–320.

3. Bjerkedal, T. Acquisition of resistance in guinea pigs infected with different doses of virulent tubercle bacilli. Am. J. Hyg. 1960, 72, 130–148.

4. Choi, J.; Choi, I. Maximum likelihood estimation of autoregressive models with a near unit root and Cauchy errors. Ann. Inst. Stat. Math. 2019, 71, 1121–1142.

5. Jiang, Y. An exponential-squared estimator in the autoregressive model with heavy-tailed errors. Stat. Interface 2016, 9, 233–238.

6. Li, J.; Liang, W.; He, S.; Wu, X. Empirical likelihood for the smoothed LAD estimator in infinite variance autoregressive models. Statist. Probab. Lett. 2010, 80, 1420–1430.

7. Tang, L.; Zhou, Z.; Wu, C. Efficient estimation and variable selection for infinite variance autoregressive models. J. Appl. Math. Comput. 2012, 40, 399–413.

8. Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 1977, 39, 1–22.

9. McLachlan, G.J.; Krishnan, T. The EM Algorithm and Extensions, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2007.

10. Yu, J.; Knight, J.L. Empirical characteristic function in time series estimation. Econom. Theory 2002, 18, 691–721.

11. Feller, W. An Introduction to Probability Theory and Its Applications, 2nd ed.; John Wiley & Sons: New York, NY, USA, 1991; Volume 2.

12. Ling, S. Self-weighted least absolute deviation estimation for infinite variance autoregressive models. J. R. Stat. Soc. Ser. B 2005, 67, 381–393.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).