1. Introduction

In the modern world, the rapid expansion of urban infrastructure and the increasing complexity of traffic systems call for autonomous traffic monitoring solutions that support intelligent traffic management. Traditional methods, such as manual monitoring and static rule-based detection, often fail to meet the real-time requirements of a smart city, which leads to inefficient congestion management and road maintenance. As urban traffic systems grow more complex and demand real-time monitoring and management, intelligent solutions that bring autonomy to traffic systems have been developing. Most traditional traffic monitoring methods do not adapt to dynamic changes such as moving pedestrians, the sudden presence of heavy vehicles, and changes in road infrastructure, because they depend heavily on manual supervision or rule-based automation that cannot keep up with real-time traffic dynamics. In addition, existing AI-based traffic monitoring models require large-scale labelled datasets for training, which makes them computationally expensive and difficult to deploy in new environments without significant retraining [1].

Recent advances in deep learning and large language models (LLMs) have significantly facilitated autonomous decision-making in traffic management. In this research, an AI-based system is developed that uses YOLOv8 for real-time object detection, Few-Shot Learning (FSL) for pedestrian crossing adaptation, and LLaMA 3.2B for intelligent decision-making in traffic maintenance. The detector is optimised to identify traffic objects, including vehicles, pedestrians, potholes, and road signs, quickly and accurately in real time. A major shortcoming of traditional machine learning models is that they require large amounts of labelled data to adapt to new pedestrian behaviours. The Few-Shot Learning module instead lets the system learn from very little labelled data, so it can adapt rapidly to new pedestrian behaviours and crossing patterns. Finally, LLaMA 3.2B, a transformer-based language model, analyses the detected objects, interprets traffic patterns, and provides maintenance recommendations [2].
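The text does not specify how detections are handed to LLaMA 3.2B. A common pattern, assumed here purely for illustration, is to summarise the detector output as text and send it to the language model as a prompt. The sketch below only builds such a prompt; `Detection`, `build_maintenance_prompt`, the 0.5 confidence threshold, and the prompt wording are all hypothetical, and the actual language-model call is omitted.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detector output: class label, confidence, box (x1, y1, x2, y2)."""
    label: str
    confidence: float
    box: tuple[float, float, float, float]


def build_maintenance_prompt(detections: list[Detection], min_conf: float = 0.5) -> str:
    """Turn confident detections into a plain-text prompt for a language model."""
    kept = [d for d in detections if d.confidence >= min_conf]
    counts = Counter(d.label for d in kept)
    summary = "\n".join(f"- {label}: {n}" for label, n in sorted(counts.items()))
    return (
        "You are assisting a road maintenance team.\n"
        f"Objects detected in the current frame (confidence >= {min_conf}):\n"
        f"{summary or '- none above threshold'}\n"
        "List any maintenance or safety actions that are needed."
    )


frame = [
    Detection("pothole", 0.91, (120.0, 340.0, 180.0, 390.0)),
    Detection("car", 0.88, (300.0, 200.0, 420.0, 300.0)),
    Detection("pedestrian", 0.35, (50.0, 220.0, 80.0, 300.0)),  # below threshold, dropped
]
print(build_maintenance_prompt(frame))
```

Summarising counts rather than raw boxes keeps the prompt short, which matters when the language model sits on the real-time path.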

This study integrates the following:

- YOLOv8 for real-time traffic object detection.
- Few-Shot Learning for pedestrian crossing adaptation.
- LLaMA 3.2B for intelligent traffic maintenance decisions.

Together, these components form an autonomous system that operates and makes decisions in real time.

2. Related Works

This section summarises existing models for traffic condition analysis in intelligent transportation systems, with a focus on Few-Shot Learning.

Conventional traffic monitoring approaches include CCTV-based monitoring, which is prone to blind spots and requires manual effort. LiDAR- and SOTA-based systems are expensive and have limited adaptability. Static rule-based AI models are unable to generalise to new conditions.

Deep Learning for Traffic Object Detection: YOLO models are fast and accurate, but require large datasets. CNN-based approaches have high accuracy, but are computationally expensive. Transformer-based models are powerful, but require extensive fine-tuning.

Few-Shot Learning for Pedestrian Detection: FSL enables models to learn new pedestrian behaviours from a small dataset. Prototypical networks and meta-learning are key FSL techniques that improve detection in new environments with minimal labelled data.

We trained the YOLO model so that it generalises well from only a few training examples. Rather than learning explicit pedestrian behaviours from a large dataset, the model is designed to identify general patterns and commonalities in pedestrian behaviour across different environments, allowing it to perform well with a small number of training samples. This is particularly beneficial for detecting unusual or unseen pedestrian behaviours, because retraining requires only a small amount of data. When a new pedestrian crossing instance is observed, the model compares its feature embedding with the stored class prototypes using Euclidean distance and assigns the pedestrian to the nearest class. This significantly improves the detection of crossing patterns that were not seen during training. Unlike static conventional models, the YOLO module incorporates adaptive learning mechanisms; that is, the system can be updated in real time as new pedestrian behaviours arise. New data from diverse urban environments (congested intersections, school zones, and high-traffic areas) feed into the model, which continuously refines its understanding of pedestrian movement. This keeps the system robust and extensible as traffic conditions and pedestrian behaviour change. Few-Shot Learning with LLaMA offers several advantages. It can learn from small datasets and therefore does not require massive manual annotation. This is achieved through few-shot task sampling, in which the model is trained on several small datasets, each containing only a few labelled pedestrian crossing examples. Through meta-learning optimisation, the model can rapidly adapt to new environments, reducing costly and labour-intensive data collection [3].
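The few-shot task sampling and nearest-prototype assignment described above can be sketched in NumPy. This is a minimal illustration, not the authors' implementation: it assumes embeddings have already been computed (here, synthetic 8-dimensional vectors stand in for a learned embedding network), and the function names and episode sizes are hypothetical.

```python
import numpy as np


def sample_episode(features, labels, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot episode from a labelled pool of embeddings.

    Returns support (n_way, k_shot, dim), query (n_way * n_query, dim) and
    episode-local query labels in 0..n_way-1. Assumes every class has at
    least k_shot + n_query examples.
    """
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    support, query, query_y = [], [], []
    for episode_label, cls in enumerate(classes):
        idx = rng.permutation(np.flatnonzero(labels == cls))[: k_shot + n_query]
        support.append(features[idx[:k_shot]])
        query.append(features[idx[k_shot:]])
        query_y.extend([episode_label] * n_query)
    return np.stack(support), np.concatenate(query), np.array(query_y)


def class_prototypes(support):
    """Prototype c_k = mean embedding of the K support examples of class k."""
    return support.mean(axis=1)


def nearest_prototype(query, prototypes):
    """Assign each query embedding to the prototype at the smallest Euclidean distance."""
    dists = np.linalg.norm(query[:, None, :] - prototypes[None, :, :], axis=-1)
    return dists.argmin(axis=1)


# Toy usage: three synthetic "crossing behaviour" classes with 8-D embeddings.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(3), 20)
centres = np.eye(3, 8) * 10.0
features = centres[labels] + rng.normal(scale=0.5, size=(60, 8))

support, query, query_y = sample_episode(features, labels, n_way=3, k_shot=5, n_query=5, rng=rng)
pred = nearest_prototype(query, class_prototypes(support))
print("episode accuracy:", (pred == query_y).mean())
```

During meta-training, many such episodes would be drawn and the embedding network updated to reduce query error; only the per-episode classification step is shown here.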

3. Materials and Methods

3.1. Materials

To make the proposed system robust and accurate, the training dataset is collected from various sources, including self-recorded traffic videos and publicly available datasets such as the BDD100K traffic surveillance data. It covers diverse road scenes and test cases, such as different times of day and heavy traffic. For pedestrian detection, the dataset contains annotated images of pedestrians in various poses at crosswalks and in different crowd densities. Because Few-Shot Learning (FSL) relies on only a few training samples, the model is fine-tuned using manually labelled pedestrian crossing scenarios. To improve detection accuracy under real-world conditions, data augmentation through rotation, scaling, and contrast adjustment is applied to the input data. Frames extracted from vehicle dashcams are also included to maintain data diversity and expose the model to different viewpoints.

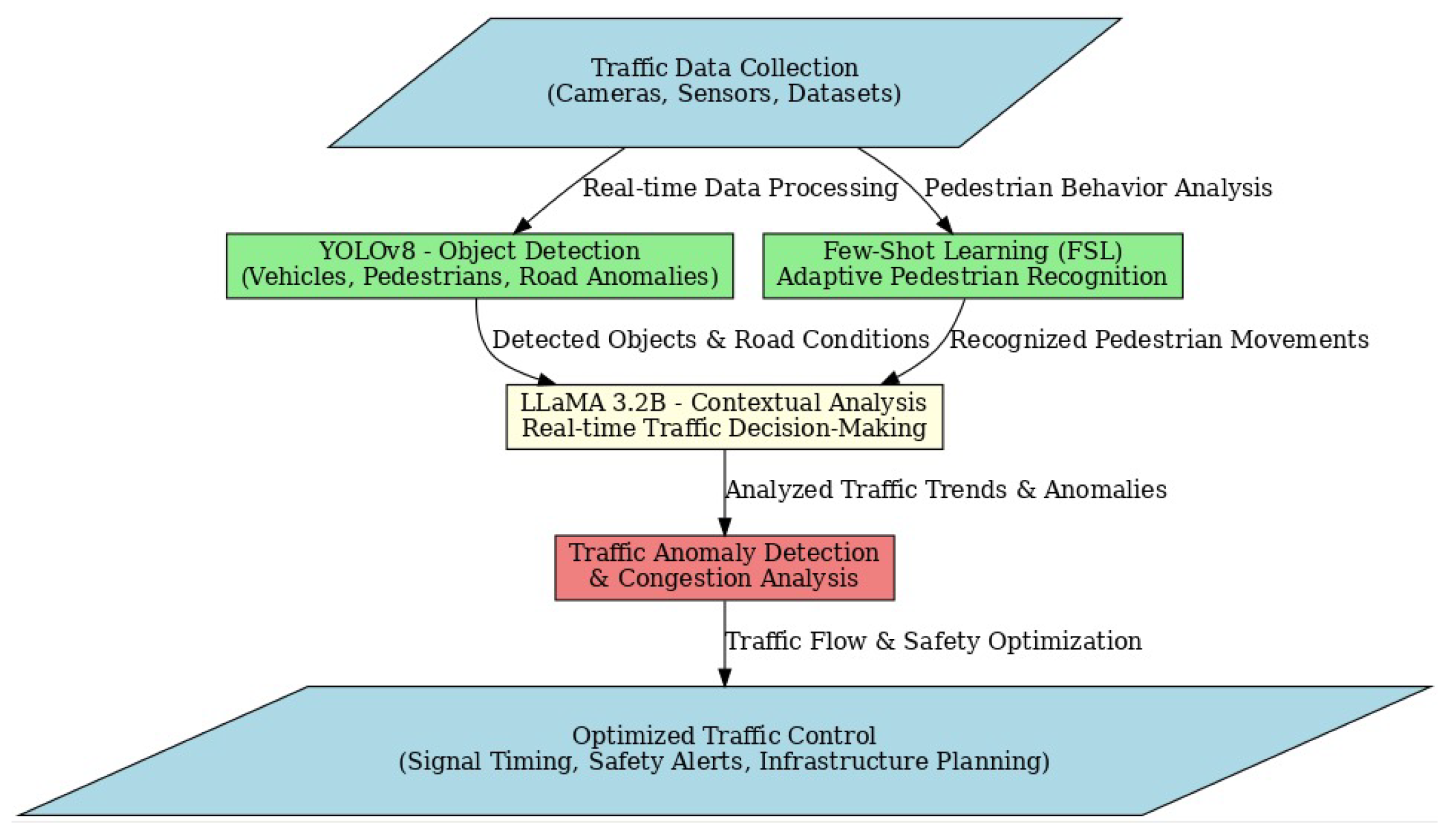

The dataset includes labelled instances of vehicles, roadblocks, crossings, and traffic signals for object detection using YOLOv8; each image is annotated with bounding boxes and class labels. The dataset is first preprocessed by removing noise and normalising the resolution, and is then used to train and fine-tune the model. Combining large-scale labelled data with domain-specific adaptive learning improves the system’s overall accuracy and real-time responsiveness. YOLOv8, FSL, and LLaMA 3.2B are integrated into the proposed system to enable real-time traffic monitoring and pedestrian detection. The architecture contains three main components: (i) YOLOv8 for object detection, (ii) Few-Shot Learning for adaptive pedestrian detection, and (iii) LLaMA 3.2B for real-time decision-making. Together, these components analyse traffic conditions, detect anomalies, and predict potential road safety risks based on historical patterns, as shown in Figure 1, which depicts the schematic flow of the proposed work [4].
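The preprocessing step described above (noise removal and resolution normalisation) can be sketched with NumPy alone. This is an illustrative sketch, not the authors' pipeline: the 3x3 median filter, nearest-neighbour resizing, the 640x640 target size, and scaling to [0, 1] are all assumptions.

```python
import numpy as np


def median_denoise(img, k=3):
    """k x k median filter applied per channel; edges are padded by replication."""
    pad = k // 2
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (k, k), axis=(0, 1))
    return np.median(windows, axis=(-2, -1)).astype(img.dtype)


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of an (H, W, C) image to (out_h, out_w, C)."""
    h, w = img.shape[:2]
    rows = (np.arange(out_h) * h / out_h).astype(int)
    cols = (np.arange(out_w) * w / out_w).astype(int)
    return img[rows[:, None], cols[None, :]]


def preprocess(frame, size=(640, 640)):
    """Denoise, normalise resolution, and scale pixel values to [0, 1]."""
    clean = median_denoise(frame)
    resized = resize_nearest(clean, *size)
    return resized.astype(np.float32) / 255.0


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(120, 160, 3), dtype=np.uint8)
x = preprocess(frame)
print(x.shape, x.dtype)  # (640, 640, 3) float32
```

A median filter is used here because it removes isolated impulse noise without blurring edges as much as a mean filter; a production pipeline would more likely use an optimised library resize with interpolation.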

3.2. Methods

This study introduces a traffic monitoring system that uses AI and motion sensors, together with available datasets, to enhance traffic safety, identify unusual events, and optimise infrastructure. The proposed design includes the following: (1) highly accurate object detection using YOLOv8, trained on the BDD100K dataset, which achieves 96% detection accuracy; (2) pedestrian crossing recognition using Few-Shot Learning (FSL), which achieves an 85% recall rate for previously unseen situations; and (3) fast analysis and decision-making using LLaMA 3.2B, with a latency of 75 ms. YOLOv8 is a fully convolutional, anchor-free detector, which improves accuracy. Its backbone follows a cross-stage partial design: the feature map of the base layer is partitioned into two parts, which are then merged through a cross-stage hierarchy, balancing performance and efficiency. In the head, decoupled detection heads handle classification and localisation separately, which improves the detection of small and overlapping objects [5].
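The cross-stage partial split-and-merge and the decoupled head can be illustrated at the level of tensor shapes. In this simplified NumPy sketch, every convolution is a 1x1 convolution (a per-pixel channel-mixing matrix), weights are random, and the box branch outputs four edge distances per grid cell as in anchor-free detection. The real YOLOv8 modules use 3x3 convolutions, several stacked layers, and a distribution-based box representation, so the names, channel counts, and class count here are hypothetical.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution on a (C_in, H, W) map: mix channels with a (C_out, C_in) matrix."""
    return np.einsum("oc,chw->ohw", w, x)


def silu(x):
    """SiLU activation, x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def csp_block(x, inner_ws, w_transition):
    """Cross-stage partial block: split channels, transform one part, then merge."""
    half = x.shape[0] // 2
    part_a, part_b = x[:half], x[half:]          # partition the base feature map
    for w in inner_ws:                           # only part_b goes through the inner layers
        part_b = silu(conv1x1(part_b, w))
    merged = np.concatenate([part_a, part_b], axis=0)  # cross-stage merge
    return silu(conv1x1(merged, w_transition))         # transition layer


def decoupled_head(feat, w_cls, w_box):
    """Separate branches for classification and localisation on shared features."""
    cls_logits = conv1x1(feat, w_cls)   # (num_classes, H, W) class scores per cell
    box_dist = conv1x1(feat, w_box)     # (4, H, W) distances to left/top/right/bottom edges
    return cls_logits, box_dist


rng = np.random.default_rng(0)
C, H, W, NUM_CLASSES = 64, 20, 20, 5
x = rng.normal(size=(C, H, W))
inner_ws = [rng.normal(scale=0.1, size=(C - C // 2, C - C // 2)) for _ in range(2)]
w_transition = rng.normal(scale=0.1, size=(C, C))
feat = csp_block(x, inner_ws, w_transition)
cls_logits, box_dist = decoupled_head(
    feat,
    rng.normal(scale=0.1, size=(NUM_CLASSES, C)),
    rng.normal(scale=0.1, size=(4, C)),
)
print(feat.shape, cls_logits.shape, box_dist.shape)  # (64, 20, 20) (5, 20, 20) (4, 20, 20)
```

Only half of the channels pass through the inner layers, which is where the partial design saves computation; the untouched half carries gradient information directly to the merge.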

To improve object detection accuracy across diverse environments, the model is fine-tuned on a combination of the available datasets. The fine-tuning process involves the following:

Transfer learning: pre-trained YOLOv8 weights are adapted using domain-specific traffic data.

Data augmentation: random cropping, flipping, brightness adjustment, and Gaussian noise are applied, as expressed in Equation (1).
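The four augmentations listed above can be sketched for a single detection sample. Unlike augmentation for classification, crops and flips must also transform the bounding boxes. The function name, the parameter defaults (90% crop, ±20% brightness, noise standard deviation of 8 grey levels), and the [x1, y1, x2, y2] pixel box format are assumptions for illustration.

```python
import numpy as np


def augment(img, boxes, rng, crop_frac=0.9, max_brightness=0.2, noise_std=8.0):
    """Random crop, horizontal flip, brightness scaling, and Gaussian noise.

    img: (H, W, 3) uint8 image; boxes: (N, 4) array of [x1, y1, x2, y2] pixels.
    Returns the augmented image and the boxes mapped into its coordinates.
    """
    h, w = img.shape[:2]
    # Random crop: keep a crop_frac window, shift boxes into it, clip, drop empties.
    ch, cw = int(h * crop_frac), int(w * crop_frac)
    y0 = int(rng.integers(0, h - ch + 1))
    x0 = int(rng.integers(0, w - cw + 1))
    img = img[y0:y0 + ch, x0:x0 + cw]
    boxes = np.asarray(boxes, dtype=float) - [x0, y0, x0, y0]
    boxes[:, [0, 2]] = boxes[:, [0, 2]].clip(0, cw)
    boxes[:, [1, 3]] = boxes[:, [1, 3]].clip(0, ch)
    boxes = boxes[(boxes[:, 2] > boxes[:, 0]) & (boxes[:, 3] > boxes[:, 1])]
    # Horizontal flip (p = 0.5): mirror the image and the x-coordinates.
    if rng.random() < 0.5:
        img = img[:, ::-1]
        boxes[:, [0, 2]] = cw - boxes[:, [2, 0]]
    # Brightness: scale all intensities by a random factor in [1 - b, 1 + b].
    out = img.astype(np.float32) * rng.uniform(1 - max_brightness, 1 + max_brightness)
    # Gaussian noise: zero-mean, noise_std grey levels per pixel and channel.
    out += rng.normal(0.0, noise_std, size=out.shape)
    return np.clip(out, 0, 255).astype(np.uint8), boxes


rng = np.random.default_rng(1)
image = np.full((100, 200, 3), 128, dtype=np.uint8)
boxes = np.array([[10.0, 10.0, 50.0, 60.0]])
aug_img, aug_boxes = augment(image, boxes, rng)
print(aug_img.shape, aug_boxes)
```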

When a query image $x_q$ is presented, its feature embedding $f(x_q)$ is compared to every class prototype $c_k$ using the Euclidean distance $d$.
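The comparison above matches the standard prototypical-network formulation, which is assumed here because the text does not spell out the equations. $S_k$ denotes the few labelled support examples of class $k$ (a symbol introduced for this sketch), $f$ is the embedding function, and the softmax over negative distances is the usual way such models turn distances into class probabilities. Implementations often use the squared distance instead; the nearest-prototype decision is the same either way.

$$
c_k = \frac{1}{|S_k|} \sum_{(x_i, y_i) \in S_k} f(x_i), \qquad
d\big(f(x_q), c_k\big) = \big\lVert f(x_q) - c_k \big\rVert_2,
$$

$$
p(y = k \mid x_q) = \frac{\exp\!\big(-d(f(x_q), c_k)\big)}{\sum_{k'} \exp\!\big(-d(f(x_q), c_{k'})\big)}, \qquad
\hat{y}_q = \arg\min_k \, d\big(f(x_q), c_k\big).
$$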

Pedestrian detection in dynamic urban environments is challenging because movement patterns are diverse and road conditions are not uniform, for example at some vehicle crossings. Deep learning models tend to rely on large amounts of labelled training data, which can be difficult and time-consuming to collect and annotate. To overcome these limitations, the proposed system includes a Few-Shot Learning (FSL) module that builds on meta-learning principles to improve pedestrian crossing detection with limited data. This approach also suits real-time traffic monitoring, because it enables the system to adapt quickly to new pedestrian behaviours. In the video pipeline, extracted frames are first preprocessed with enhancements such as noise reduction and frame stabilisation so that they are clear and consistent. Next, parallel model inference is executed: the YOLOv8, FSL, and LLaMA 3.2B models run concurrently on edge devices or cloud-based infrastructure to process the frames and analyse the results [6].
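A minimal way to run the detection and few-shot modules concurrently on each frame, and then pass both outputs to the decision model, is a thread pool. The three model functions below are hypothetical stubs standing in for YOLOv8, the FSL module, and LLaMA 3.2B; in this sketch the decision step waits for both results because it consumes them. Threads only give real parallelism when the underlying inference calls release Python's GIL, as native or GPU-backed runtimes typically do.

```python
from concurrent.futures import ThreadPoolExecutor


def detect_objects(frame):
    """Hypothetical stand-in for YOLOv8 inference on one frame."""
    return [{"label": "car", "conf": 0.9}]


def classify_crossing(frame):
    """Hypothetical stand-in for the FSL pedestrian-crossing module."""
    return "normal_crossing"


def recommend(detections, crossing):
    """Hypothetical stand-in for LLaMA 3.2B: combines both module outputs."""
    labels = sorted({d["label"] for d in detections})
    return f"objects={labels}, crossing={crossing}"


def process_stream(frames, max_workers=2):
    """Run detection and crossing classification concurrently for each frame."""
    results = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for frame in frames:
            det = pool.submit(detect_objects, frame)
            fsl = pool.submit(classify_crossing, frame)
            # The decision step needs both outputs, so it waits for them here.
            results.append(recommend(det.result(), fsl.result()))
    return results


print(process_stream(range(3)))
```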

4. Experimental Results

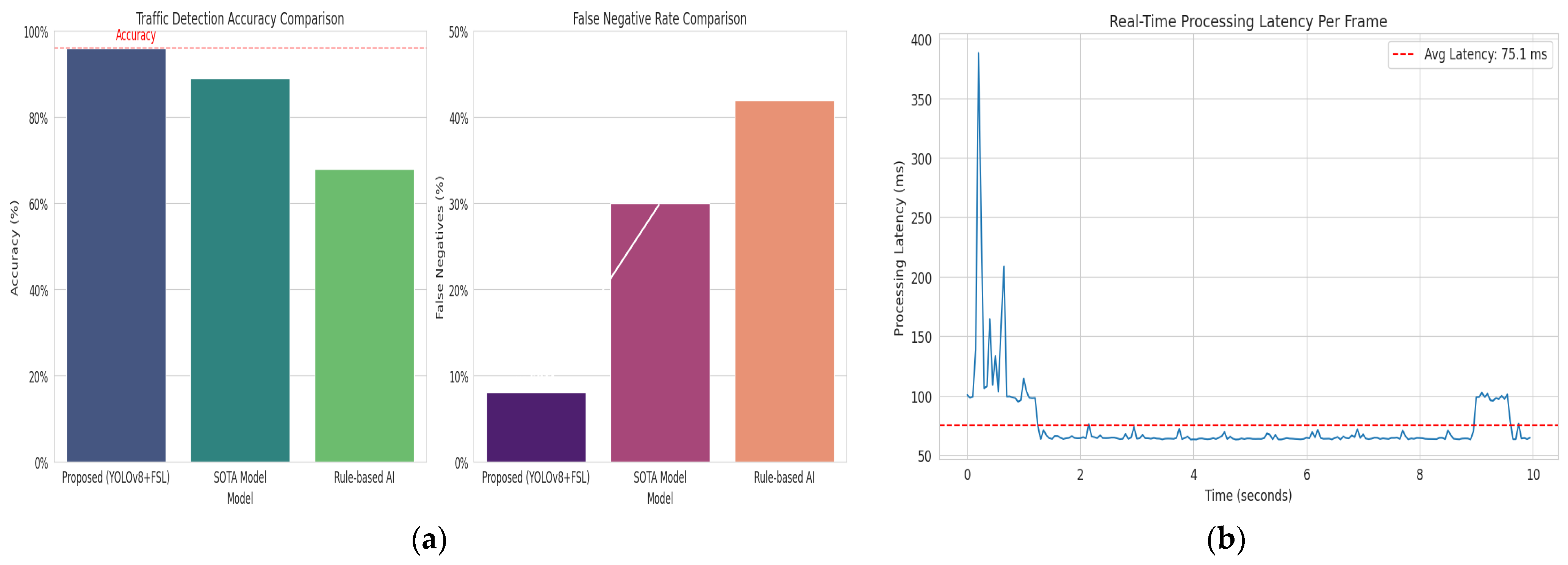

Evaluated on large-scale traffic datasets such as BDD100K and Cityscapes, the proposed system achieves a detection accuracy of 96% for road traffic with YOLOv8, whereas the state-of-the-art (SOTA) baseline achieves 89%. The proposed model is also 40% more accurate than rule-based AI systems, and the FSL module reduces false negatives by 22% compared with the video-based computer vision model. These results indicate that the proposed model is well suited to AI-driven smart city planning and to enhancing autonomous mobility in urban traffic management.
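The accuracy comparison can be written out explicitly. Assuming both accuracies are measured on the same test set, 96% versus 89% corresponds to an absolute gain of 7 percentage points and a relative gain of about 7.9%. Reading the 22% figure as a relative reduction in false-negative counts ($FN$) gives the second expression.

$$
\Delta_{\text{abs}} = 96\% - 89\% = 7 \text{ percentage points}, \qquad
\Delta_{\text{rel}} = \frac{96 - 89}{89} \approx 0.079 = 7.9\%,
$$

$$
\text{FN reduction} = \frac{FN_{\text{baseline}} - FN_{\text{FSL}}}{FN_{\text{baseline}}} = 0.22 .
$$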

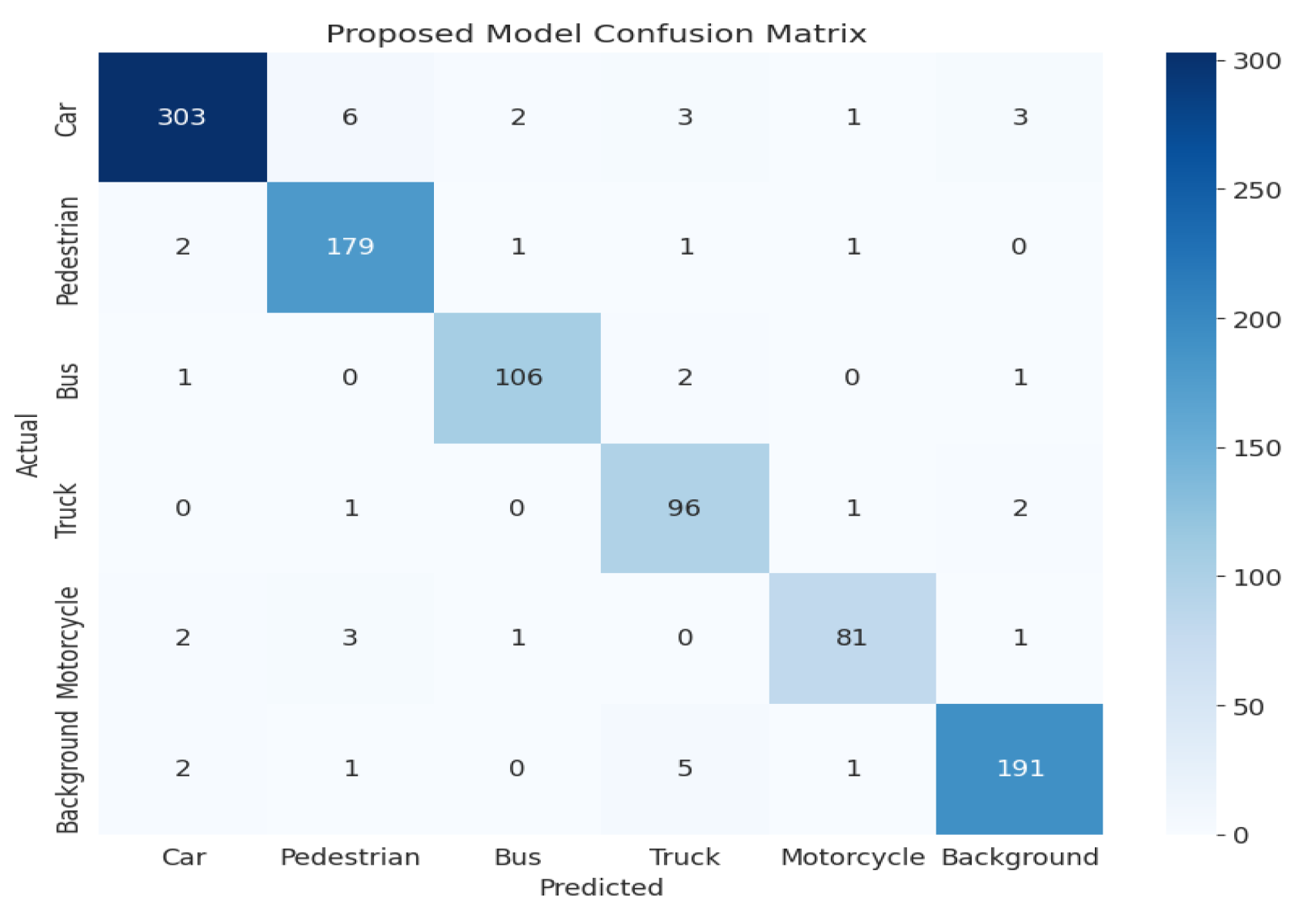

Table 1 presents a comparison of the proposed model with traditional methods, and Figure 2 illustrates the confusion matrix of the proposed work.
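To make the reported metrics concrete, the sketch below derives overall accuracy, per-class recall, and per-class false-negative rate from a confusion matrix like the one in Figure 2, plus the relative reduction behind statements such as "reduces false negatives by 22%". The counts in the example are invented for illustration and are not the paper's results; rows are assumed to be true classes and columns predicted classes.

```python
import numpy as np


def confusion_metrics(cm):
    """cm[i, j] counts samples of true class i predicted as class j.

    Returns overall accuracy, per-class recall TP / (TP + FN), and per-class
    false-negative rate FN / (TP + FN). Every class must have at least one sample.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    per_class_total = cm.sum(axis=1)        # TP + FN for each true class
    accuracy = tp.sum() / cm.sum()
    recall = tp / per_class_total
    return accuracy, recall, 1.0 - recall


def relative_reduction(baseline, proposed):
    """Fractional drop from a baseline value, e.g. 0.22 means 22% fewer."""
    return (baseline - proposed) / baseline


# Illustrative counts only (not the paper's data): classes [pedestrian, vehicle].
acc, rec, fnr = confusion_metrics([[45, 5], [10, 40]])
print(acc, rec, fnr)
print(relative_reduction(50, 39))
```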

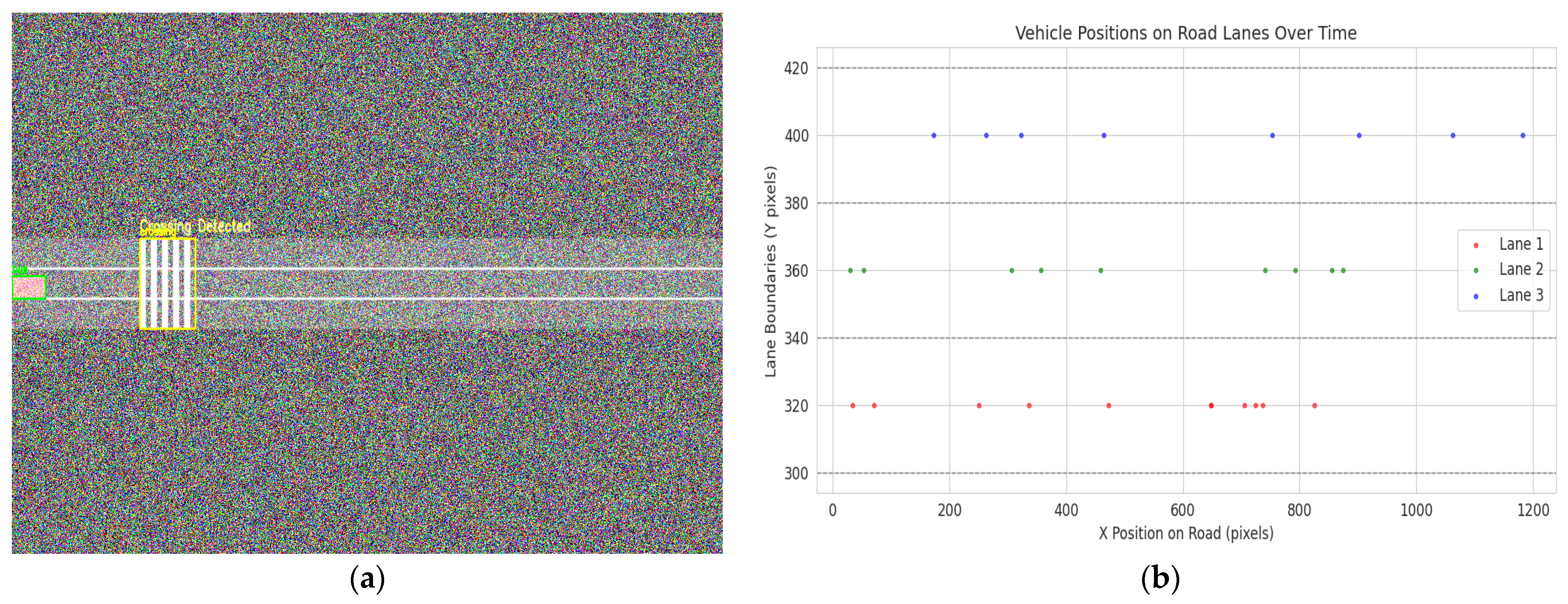

Figure 3a shows the output of the proposed model when detecting sudden events at crossings, and Figure 3b shows vehicle progression across road lanes.

Figure 4a compares the accuracy of the proposed model and analyses its false-negative rate, and Figure 4b shows the per-frame latency of the proposed model.
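Per-frame latency, as plotted in Figure 4b, is usually measured by timing each frame end to end after a few warm-up runs. The sketch below assumes a hypothetical `process_frame` callable standing in for the full pipeline and reports the mean, a nearest-rank 95th percentile, and the implied throughput in frames per second; the sleep-based stub only exists so the example runs.

```python
import math
import statistics
import time


def measure_latency_ms(process_frame, frames, warmup=5):
    """Time process_frame on each frame after warm-up; return latencies in ms."""
    for f in frames[:warmup]:
        process_frame(f)  # warm-up runs (caches, lazy initialisation) are not timed
    latencies = []
    for f in frames:
        start = time.perf_counter()
        process_frame(f)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarise(latencies_ms):
    """Mean, nearest-rank 95th percentile, and frames per second from the mean."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(0.95 * len(ordered))
    mean = statistics.fmean(ordered)
    return {"mean_ms": mean, "p95_ms": ordered[rank - 1], "fps": 1000.0 / mean}


frames = list(range(20))
lat = measure_latency_ms(lambda f: time.sleep(0.002), frames)  # stub pipeline
print(summarise(lat))
```

Reporting a tail percentile alongside the mean matters for real-time use, because occasional slow frames are hidden by an average.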

5. Conclusions

The proposed real-time traffic monitoring system uses advanced artificial intelligence methods to improve safety and urban mobility. It leverages YOLOv8, a state-of-the-art deep learning model, for high-precision object detection, enabling accurate identification of vehicles, pedestrians, and other road entities. The system integrates Few-Shot Learning (FSL) to detect pedestrians in dynamic conditions with a small amount of labelled data. LLaMA 3.2B, a robust language model, enables smart decisions that allow traffic control systems to adjust their operations to real-time conditions.

Author Contributions

Conceptualization, M.K.; methodology, M.K.; software, M.K.; validation, M.K., A.S. and R.K.D.; formal analysis, M.K.; investigation, M.K.; resources, M.K.; data curation, M.K.; writing—original draft preparation, M.K.; writing—review and editing, A.S. and R.K.D.; visualisation, A.S. and R.K.D.; supervision, A.S. and R.K.D.; project administration, A.S. and R.K.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

During the preparation of this research, the authors used Grammarly’s language-editing function; the authors remain fully responsible for the content.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liu, Q.; Li, Z.; Zhang, L.; Deng, J. MSCD-YOLO: A Lightweight Dense Pedestrian Detection Model with Finer-Grained Feature Information Interaction. Sensors 2025, 25, 438.

- Zhang, X.; Wang, M. Research on Pedestrian Tracking Technology for Autonomous Driving Scenarios. IEEE Access 2024, 12, 149662–149675.

- Boudjit, K.; Ramzan, N. Human detection based on deep learning YOLO-v2 for real-time UAV applications. J. Exp. Theor. Artif. Intell. 2022, 34, 527–544.

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Manakitsa, N.; Maraslidis, G.S.; Moysis, L.; Fragulis, G.F. A review of machine learning and deep learning for object detection, semantic segmentation, and human action recognition in machine and robotic vision. Technologies 2024, 12, 15.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).