Smart Cattle Behavior Sensing with Embedded Vision and TinyML at the Edge

Abstract

1. Introduction

- We demonstrate the feasibility of deploying a quantized YOLOv2-MobileNet_0.75 model on the K210 for real-time livestock behavior detection (standing, eating, drinking, sitting).

- We characterize system performance in terms of inference latency, memory usage, and detection confidence under class imbalance conditions.

2. Materials and Methods

2.1. Dataset and Preprocessing

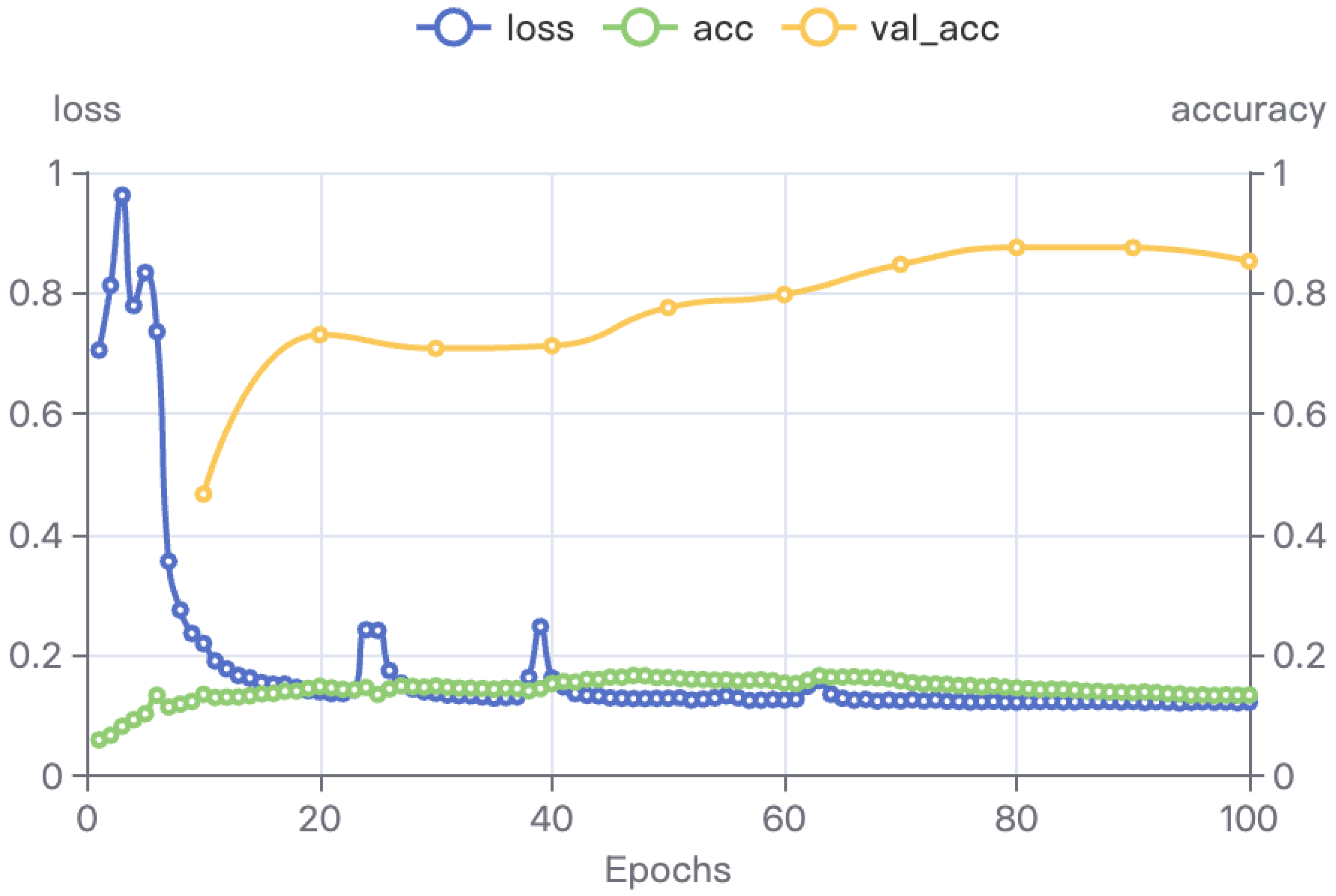

2.2. Model Architecture and Training

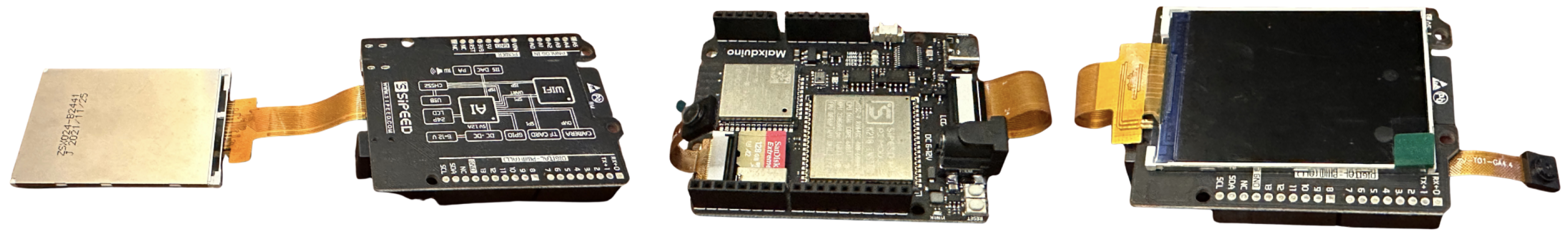

2.3. Embedded Deployment Pipeline

2.4. Sensor and Hardware Integration

2.5. System Workflow

3. Results and Discussion

3.1. On-Device Inference with Sipeed Maixduino

3.2. Detection Performance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kleen, J.L.; Guatteo, R. Precision Livestock Farming: What Does It Contain and What Are the Perspectives? Animals 2023, 13, 779.

- Ding, L.; Zhang, C.; Yue, Y.; Yao, C.; Li, Z.; Hu, Y.; Yang, B.; Ma, W.; Yu, L.; Gao, R.; et al. Wearable Sensors-Based Intelligent Sensing and Application of Animal Behaviors: A Comprehensive Review. Sensors 2025, 25, 4515.

- Senoo, E.E.K.; Anggraini, L.; Kumi, J.A.; Karolina, L.B.; Akansah, E.; Sulyman, H.A.; Mendonça, I.; Aritsugi, M. IoT solutions with artificial intelligence technologies for precision agriculture: Definitions, applications, challenges, and opportunities. Electronics 2024, 13, 1894.

- Hayajneh, A.M.; Aldalahmeh, S.A.; Alasali, F.; Al-Obiedollah, H.; Zaidi, S.A.; McLernon, D. Tiny machine learning on the edge: A framework for transfer learning empowered unmanned aerial vehicle assisted smart farming. IET Smart Cities 2024, 6, 10–26.

- Srinivasagan, R.; El Sayed, M.S.; Al-Rasheed, M.I.; Alzahrani, A.S. Edge intelligence for poultry welfare: Utilizing tiny machine learning neural network processors for vocalization analysis. PLoS ONE 2025, 20, e0316920.

- Ivković, J.; Ivković, J.L. Exploring the potential of new AI-enabled MCU/SOC systems with integrated NPU/GPU accelerators for disconnected Edge computing applications: Towards cognitive SNN Neuromorphic computing. In Proceedings of the LINK IT & EdTech International Scientific Conference, Belgrade, Serbia, 26–27 May 2023; pp. 12–22.

- Torres-Sánchez, E.; Alastruey-Benedé, J.; Torres-Moreno, E. Developing an AI IoT application with open software on a RISC-V SoC. In Proceedings of the 2020 XXXV Conference on Design of Circuits and Integrated Systems (DCIS), Segovia, Spain, 18–20 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

- Nagar, M.S.; Chauhan, V.; Chinchavde, K.M.; Swati; Patel, S.; Engineer, P. Energy-Efficient Acceleration of Deep Learning based Facial Recognition on RISC-V Processor. In Proceedings of the 2023 11th International Conference on Intelligent Systems and Embedded Design (ISED), Dehradun, India, 15–17 December 2023; pp. 1–6.

- Christofas, V.; Amanatidis, P.; Karampatzakis, D.; Lagkas, T.; Goudos, S.K.; Psannis, K.E.; Sarigiannidis, P. Comparative Evaluation between Accelerated RISC-V and ARM AI Inference Machines. In Proceedings of the 2023 6th World Symposium on Communication Engineering (WSCE), Thessaloniki, Greece, 27–29 September 2023; pp. 108–113.

- Zhang, G.; Li, Z.; Huang, D.; Luo, W.; Lu, Z.; Hu, Y. A Traffic Sign Recognition System Based on Lightweight Network Learning. J. Intell. Robot. Syst. 2024, 110, 139.

- Zhang, Q.; Kanjo, E. MultiCore+ TPU Accelerated Multi-Modal TinyML for Livestock Behaviour Recognition. arXiv 2025, arXiv:2504.11467.

- Viswanatha, V.; Ramachandra, A.; Hegde, P.T.; Hegde, V.; Sabhahit, V. TinyML-based human and animal movement detection in agriculture fields in India. In Advances in Communication and Applications, Proceedings of the International Conference on Emerging Research in Computing, Information, Communication and Applications, Bangalore, India, 24–25 February 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 49–65.

- Chen, Y.S.; Rustia, D.J.A.; Huang, S.Z.; Hsu, J.T.; Lin, T.T. IoT-Based System for Individual Dairy Cow Feeding Behavior Monitoring Using Cow Face Recognition and Edge Computing. Internet Things 2025, 33, 101674.

- Smink, M.; Liu, H.; Döpfer, D.; Lee, Y.J. Computer Vision on the Edge: Individual Cattle Identification in Real-Time With ReadMyCow System. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 4–8 January 2024; pp. 7056–7065.

- Raza Shirazi, S.A.; Fatima, M.; Wahab, A.; Ali, S. A Novel Active RFID and TinyML-based System for Livestock Localization in Pakistan. Sir Syed Univ. Res. J. Eng. Technol. (SSURJET) 2024, 14, 33–38.

- Bartels, J.; Tokgoz, K.K.; A, S.; Fukawa, M.; Otsubo, S.; Li, C.; Rachi, I.; Takeda, K.I.; Minati, L.; Ito, H. TinyCowNet: Memory- and Power-Minimized RNNs Implementable on Tiny Edge Devices for Lifelong Cow Behavior Distribution Estimation. IEEE Access 2022, 10, 32706–32727.

- Farhan, M.; Wijaya Thaha, G.S.; Alvito Kristiadi, E.; Mutijarsa, K. Cattle Anomaly Behavior Detection System Based on IoT and Computer Vision in Precision Livestock Farming. In Proceedings of the 2024 International Conference on Information Technology Systems and Innovation (ICITSI), Bandung, Indonesia, 12 December 2024; pp. 342–347.

- Martinez-Rau, L.S.; Chelotti, J.O.; Giovanini, L.L.; Adin, V.; Oelmann, B.; Bader, S. On-Device Feeding Behavior Analysis of Grazing Cattle. IEEE Trans. Instrum. Meas. 2024, 73, 2512113.

| Class | Total Detections | Avg. Score | Max Score | Min Score |
|---|---|---|---|---|
| Standing | 1484 | 0.78 | 0.99 | 0.50 |
| Eating | 606 | 0.82 | 0.98 | 0.51 |
| Drinking | 22 | 0.77 | 0.96 | 0.59 |
| Sitting | 6 | 0.62 | 0.77 | 0.54 |
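The class imbalance in the per-class detection counts can be made explicit with a little arithmetic. The following is a minimal sketch, not the authors' analysis code: it takes the counts and average scores from the table above and computes each class's share of all detections, plus a detection-weighted mean score. The helper names `class_shares` and `weighted_mean_score` are hypothetical.

```python
# Per-class (total detections, average score), copied from the table above.
DETECTIONS = {
    "Standing": (1484, 0.78),
    "Eating": (606, 0.82),
    "Drinking": (22, 0.77),
    "Sitting": (6, 0.62),
}


def class_shares(detections):
    """Return each class's fraction of all detections."""
    total = sum(count for count, _ in detections.values())
    return {name: count / total for name, (count, _) in detections.items()}


def weighted_mean_score(detections):
    """Return the mean of the per-class average scores, weighted by detection count."""
    total = sum(count for count, _ in detections.values())
    return sum(count * score for count, score in detections.values()) / total


TOTAL_DETECTIONS = sum(count for count, _ in DETECTIONS.values())
SHARES = class_shares(DETECTIONS)
WEIGHTED_SCORE = weighted_mean_score(DETECTIONS)

for name, share in SHARES.items():
    print(f"{name:<9} {share:6.2%}")
print(f"Total detections: {TOTAL_DETECTIONS}")
print(f"Detection-weighted mean score: {WEIGHTED_SCORE:.3f}")
```

On these figures, standing and eating together account for roughly 99% of detections, while drinking and sitting together make up just over 1%, so the scores reported for the two rare classes rest on very few samples.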

| Metric | Average |
|---|---|
| Latency (ms) | 35.0 |
| Memory Allocated (bytes) | 350,427 |
| Memory Free (bytes) | 167,717 |
| Detections per Frame | 2.18 |
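The averaged system metrics can be turned into two derived quantities that are easier to compare across devices: an upper bound on frame rate and the fraction of heap in use. The sketch below is illustrative only and rests on two assumptions not stated in the table: that the reported latency is the dominant per-frame cost, and that the allocated and free figures describe the same heap, so their sum is the total heap size. The function names `max_fps` and `heap_summary` are hypothetical.

```python
# Average system metrics, copied from the table above.
LATENCY_MS = 35.0
MEM_ALLOCATED_BYTES = 350_427
MEM_FREE_BYTES = 167_717


def max_fps(latency_ms):
    """Upper bound on frames per second if latency is the only per-frame cost (assumption)."""
    return 1000.0 / latency_ms


def heap_summary(allocated, free):
    """Total heap and used fraction, assuming both figures refer to the same heap."""
    total = allocated + free
    return {
        "total_bytes": total,
        "total_kib": total / 1024,
        "used_fraction": allocated / total,
    }


FPS_BOUND = max_fps(LATENCY_MS)
HEAP = heap_summary(MEM_ALLOCATED_BYTES, MEM_FREE_BYTES)

print(f"Frame-rate upper bound: {FPS_BOUND:.1f} FPS")
print(f"Heap total: {HEAP['total_bytes']} bytes ({HEAP['total_kib']:.0f} KiB)")
print(f"Heap in use: {HEAP['used_fraction']:.1%}")
```

Under these assumptions, a 35 ms latency corresponds to at most about 28.6 frames per second, and the two memory figures sum to 518,144 bytes (506 KiB), of which about 68% is allocated.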


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jao, J.R.; Vallar, E.A.; Hameed, I. Smart Cattle Behavior Sensing with Embedded Vision and TinyML at the Edge. Eng. Proc. 2025, 118, 81. https://doi.org/10.3390/ECSA-12-26519
