Abstract

This study presents a validated AI-based data acquisition system for precision agriculture, fully modeled in MATLAB/Simulink R2025a. The system integrates virtual sensors, convolutional neural networks (CNNs), and image-based root analysis to support the ancestral “Huacho Rosado” potato cultivation technique. It is structured into three layers: Environmental Data Acquisition, AI-driven processing, and agronomic Decision Support. Virtual sensors simulate soil temperature, moisture, and density, while CNN modules classify soil texture, estimate moisture, and detect root density using RGB images. The decision-support layer computes agronomic forces—bit, shear, and inertial—which are essential for soil management. Simulation results demonstrate real-time inference below 200 ms, moisture prediction errors under 5%, and root density classification accuracy of 90%. A continuous 24-h simulation confirmed the system’s stability and responsiveness. This approach provides a low-cost, scalable, and reproducible framework that bridges indigenous knowledge with modern AI tools, supporting sustainable agriculture in resource-limited environments.

1. Introduction

The global transition toward sustainable agriculture and food security has accelerated the integration of advanced technologies into modern farming systems [1,2]. Precision agriculture, as a central innovation, incorporates sensor networks, automation, and artificial intelligence (AI) to enhance productivity while reducing environmental impacts [3]. Integrating ancestral agricultural wisdom with digital tools presents a novel approach to improving sustainability in agroecosystems [4]. A relevant example is the pre-Columbian potato cultivation technique known as “Huacho Rosado”, which relies on green manuring and minimal soil disturbance to enhance fertility, preserve microbial activity, and reduce erosion [5] (p. 54), [6]. Combining such traditional methods with AI-enabled decision-support systems can lead to highly resilient and sustainable agricultural models.

Model-based simulation platforms such as MATLAB/Simulink R2025a enable the virtual testing of agro-environmental conditions [7,8,9], supporting the development and validation of smart farming systems [10,11]. Simulated acquisition of soil moisture, temperature, and optical properties enables detailed analysis prior to field deployment [12,13,14,15], particularly when combined with real-time soil sensors [16]. In parallel, convolutional neural networks (CNNs) have shown outstanding performance in soil classification, plant disease recognition, and visual phenotyping [17,18,19], offering automated high-precision assessments [20,21,22]. The fusion of image processing, multi-modal learning, and environmental modeling contributes to a new generation of AI-driven systems that support precision agriculture at both smallholder and industrial scales [23,24].

In contrast to these previous studies, which mainly focused on greenhouse control, machinery testing, or generic IoT-based frameworks, the proposed system advances the state of the art in two key aspects. First, it integrates CNN-based modules for soil classification, moisture estimation, and root density analysis directly into a Simulink environment, achieving real-time operation with validated accuracy. Second, it incorporates the ancestral potato cultivation technique “Huacho Rosado”, bridging advanced AI methods with traditional Andean agronomic practices. This combination of technical robustness and cultural integration distinguishes our work from existing approaches.

This study presents the design and Simulink-based simulation of an AI-driven data acquisition system for precision agriculture that integrates simulated sensor data (soil temperature, moisture, and density) with convolutional neural networks (CNNs) for soil classification, image-based moisture estimation, and root density analysis [25,26]. All components converge in a unified decision-support module. By incorporating the ancestral “Huacho Rosado” potato cultivation technique, the system links modern AI with traditional Andean practices to strengthen sustainable crop management. This integration underscores both the scientific value of merging advanced computational tools with indigenous knowledge and its practical role in optimizing cultivation strategies. The main contributions of this work are as follows:

- The integration of ancestral agricultural knowledge with AI and model-based methodologies;

- A fully simulated sensing system in MATLAB/Simulink R2025a, with virtual sensors for temperature, moisture, and soil density;

- CNN modules for soil classification, moisture estimation, and root density analysis;

- A decision-support layer that computes agronomic forces (bit, shear, inertial) for real-time tillage optimization.

Together, these components form a reproducible, scalable, and low-cost framework for experimentation, education, and deployment in resource-limited agricultural contexts.

2. Methodology

AI-driven data acquisition in precision agriculture requires simulating physical sensors and intelligent processing. Using MATLAB/Simulink R2025a, this work develops a digital twin of the “Huacho Rosado” potato cultivation method—based on green manuring, minimal tillage, and biodiversity preservation—enhanced with real-time monitoring of soil temperature, moisture, and root density to support agronomic decisions and resource efficiency.

2.1. System Architecture

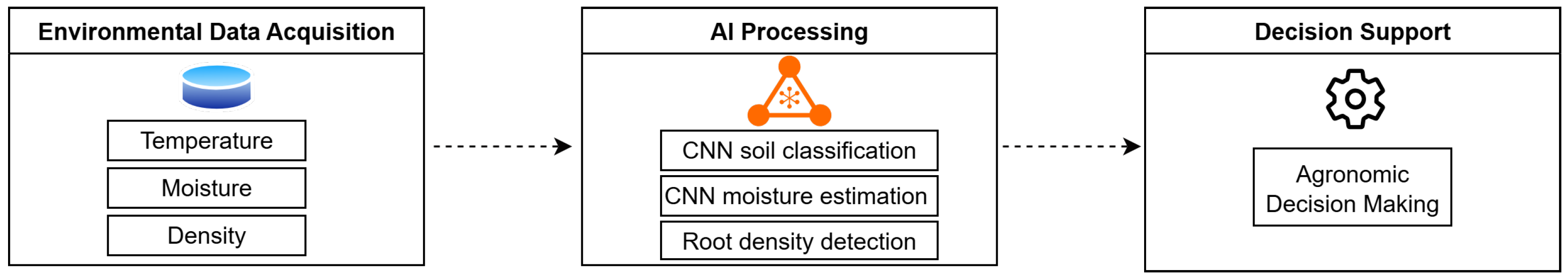

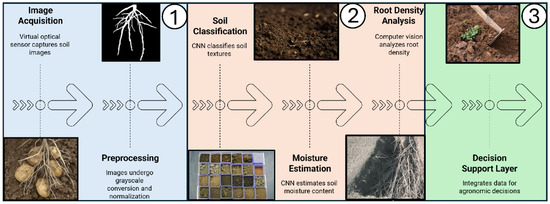

The model consists of three layers (Figure 1): (1) Environmental Data Acquisition, simulating virtual sensors for temperature (PT100), soil moisture (capacitive), and soil density (binary classifier); (2) AI Processing, where CNN modules classify soil textures, estimate moisture, and detect root density from image analysis; and (3) Decision Support, which integrates sensor and AI outputs to guide agronomic decisions in line with the “Huacho Rosado” technique.

Figure 1.

Architecture of the AI-based data acquisition system. It includes (1) Environmental Data Acquisition with virtual sensors for temperature, soil moisture, and density; (2) AI Processing using CNNs for soil, moisture, and root-density analysis; and (3) Decision Support, generating agronomic recommendations aligned with the “Huacho Rosado” technique.

Each layer was implemented as an independent Simulink subsystem, enabling modular development and future integration with robotic platforms or IoT-based agricultural systems.

2.2. Simulation of Environmental Sensors

The Environmental Data Acquisition layer (Figure 1) simulates key variables—soil temperature, moisture, and optical analysis of soil and roots—based on commercial sensor specifications and validated through agronomic and simulation-based methods. This virtual framework enables rapid prototyping and real-time performance assessment, supporting low-cost implementation in rural environments.

2.2.1. Temperature Sensor

A PT100 resistance temperature detector (RTD) was simulated using its standard resistance–temperature characteristic, based on the DIN/EN/IEC 60751 specification [27]. The model includes:

- Linear calibration: $R(T) = R_0\,(1 + \alpha T)$, where $R(T)$ is the resistance at temperature $T$ (in ohms, Ω), $T$ is the temperature (in degrees Celsius, °C), $R_0$ is the nominal resistance at 0 °C (100 Ω for a PT100), and $\alpha$ is the temperature coefficient of resistance (°C⁻¹).

- Signal conditioning: the output is scaled to a 4–20 mA current signal, following typical industrial interface standards.

The temperature sensor enables monitoring of soil thermal dynamics, which are critical for tuber development in “Huacho Rosado” cultivation.
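The two modeling steps above can be sketched in a few lines. This is a minimal illustration, not the authors' Simulink block: it assumes $R_0 = 100$ Ω, the IEC 60751 mean coefficient $\alpha = 0.00385$ °C⁻¹, and a transmitter span of 0–50 °C (the range reported in Section 3.1) mapped linearly onto 4–20 mA. The span mapping and all function names are illustrative assumptions.

```python
# Sketch of a linear PT100 model plus 4-20 mA signal conditioning.
# Assumptions: R0 = 100 ohm, alpha = 0.00385 1/degC, 0-50 degC transmitter span.

R0_OHM = 100.0          # nominal PT100 resistance at 0 degC
ALPHA_PER_C = 0.00385   # mean temperature coefficient (IEC 60751 value)
T_MIN_C, T_MAX_C = 0.0, 50.0     # assumed transmitter span
I_MIN_MA, I_MAX_MA = 4.0, 20.0   # current-loop output range


def pt100_resistance(temp_c: float) -> float:
    """Linear calibration R(T) = R0 * (1 + alpha * T)."""
    return R0_OHM * (1.0 + ALPHA_PER_C * temp_c)


def resistance_to_temperature(r_ohm: float) -> float:
    """Invert the linear model: T = (R / R0 - 1) / alpha."""
    return (r_ohm / R0_OHM - 1.0) / ALPHA_PER_C


def temperature_to_current_ma(temp_c: float) -> float:
    """Map a temperature onto the 4-20 mA loop, clamping to the transmitter span."""
    t = min(max(temp_c, T_MIN_C), T_MAX_C)
    fraction = (t - T_MIN_C) / (T_MAX_C - T_MIN_C)
    return I_MIN_MA + fraction * (I_MAX_MA - I_MIN_MA)


if __name__ == "__main__":
    for t in (0.0, 25.0, 50.0):
        r = pt100_resistance(t)
        print(f"T={t:5.1f} degC  R={r:7.3f} ohm  I={temperature_to_current_ma(t):5.2f} mA")
```

With these assumptions, 25 °C corresponds to about 109.6 Ω and the mid-scale current of 12 mA; clamping keeps out-of-span readings at the loop limits, which is how a saturated transmitter would behave.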

2.2.2. Capacitive Soil Moisture Sensor and Soil Density

A capacitive soil moisture sensor was simulated using a non-linear transfer function derived from manufacturer datasheets [28]. The model emulates a current-based analog response, processes the signal through conditioning and regulation stages, and calculates volumetric water content (VWC), classifying it into three agronomic ranges: dry (0–40%), moderate (40–60%), and wet (60–100%). This was complemented by a soil density estimation block, which infers compaction from the regulated moisture signal and outputs a binary classification: “Dense” or “Not dense.” In parallel, a convolutional neural network (CNN) module estimated soil moisture from RGB ground images. This dual approach enhanced robustness and accuracy: the analog model ensured physical consistency, while the CNN enabled non-contact estimation through computer vision. Combining both methods provided redundancy and cross-validation, supporting adaptive irrigation and monitoring of soil preparation during the early stages of the “Huacho Rosado” cultivation method in traditional Andean agriculture.
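A hedged sketch of the moisture chain described above follows. The three agronomic ranges (dry 0–40%, moderate 40–60%, wet 60–100%) come from the text; everything else is a hypothetical stand-in: the normalized input signal, the exponential calibration constants (chosen to span the 10–45% VWC range mentioned in Section 3.1), and the density threshold and its comparison direction, since the datasheet-derived transfer function and the compaction rule are not given.

```python
import math

# Hypothetical calibration: a normalized regulated signal x in [0, 1]
# is mapped exponentially onto volumetric water content (% VWC).
VWC_LOW_PCT = 10.0
VWC_HIGH_PCT = 45.0
GROWTH = math.log(VWC_HIGH_PCT / VWC_LOW_PCT)  # makes x = 1 land on VWC_HIGH_PCT

# Hypothetical compaction threshold on the regulated moisture signal (% VWC).
DENSE_BELOW_VWC_PCT = 25.0


def signal_to_vwc(x: float) -> float:
    """Non-linear (exponential) transfer from a normalized signal to % VWC."""
    x = min(max(x, 0.0), 1.0)  # clamp the regulated signal to its valid range
    return VWC_LOW_PCT * math.exp(GROWTH * x)


def moisture_class(vwc_pct: float) -> str:
    """Agronomic ranges from the text: dry 0-40 %, moderate 40-60 %, wet 60-100 %."""
    if vwc_pct < 40.0:
        return "dry"
    if vwc_pct < 60.0:
        return "moderate"
    return "wet"


def density_class(vwc_pct: float) -> str:
    """Binary density output; threshold and comparison direction are hypothetical."""
    return "Dense" if vwc_pct < DENSE_BELOW_VWC_PCT else "Not dense"


if __name__ == "__main__":
    for x in (0.0, 0.5, 1.0):
        v = signal_to_vwc(x)
        print(f"x={x:.1f}  VWC={v:5.1f} %  {moisture_class(v)}  {density_class(v)}")
```

Keeping the transfer function, the range classifier, and the density rule as separate functions mirrors the separate conditioning, classification, and density blocks, so each can be recalibrated independently.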

2.2.3. Optical Sensor for Soil and Root Imaging

An optical camera [29] was modeled as a virtual image acquisition block that simulates two image types: soil-surface images, used as input for CNN-based soil classification, and soil cross-section images, used for root density analysis.

Images were preprocessed using grayscale conversion, contrast enhancement, and image normalization [30]. This multi-purpose optical sensing enables advanced AI-based analysis within the system’s Processing Layer.
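The three preprocessing steps can be sketched with NumPy as follows. The specific choices are assumptions, since the text does not specify them: ITU-R BT.601 luma weights for grayscale conversion, a 2nd–98th percentile contrast stretch for contrast enhancement, and zero-mean, unit-variance standardization for normalization.

```python
import numpy as np


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Weighted sum of R, G, B (BT.601 luma weights); input shape (H, W, 3)."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb[..., :3].astype(np.float64) @ weights


def contrast_stretch(gray: np.ndarray, low_pct: float = 2.0, high_pct: float = 98.0) -> np.ndarray:
    """Linearly stretch intensities between two percentiles onto [0, 1]."""
    lo, hi = np.percentile(gray, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros_like(gray)
    return np.clip((gray - lo) / (hi - lo), 0.0, 1.0)


def normalize(img: np.ndarray) -> np.ndarray:
    """Standardize to zero mean and unit variance (mean-centre only if flat)."""
    std = img.std()
    centred = img - img.mean()
    return centred / std if std > 0 else centred


def preprocess(rgb: np.ndarray) -> np.ndarray:
    """Grayscale conversion -> contrast enhancement -> normalization."""
    return normalize(contrast_stretch(to_grayscale(rgb)))
```

Percentile-based stretching is used here instead of a plain min–max stretch so that a few saturated pixels (e.g. specular reflections from wet soil) do not compress the contrast of the rest of the image.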

2.3. Development of AI-Based Modules

The Processing Layer (Figure 1) integrates three core artificial intelligence modules that support automated agronomic decision-making:

- A CNN-based soil classification system,

- A CNN-based moisture estimator,

- An image processing module for root density analysis.

2.3.1. Soil Classification Using Convolutional Neural Networks

A convolutional neural network (CNN) was trained to classify four soil texture types—clayey, sandy, limestone, and humiferous—based on visual texture from RGB images of soil samples. Preprocessing included grayscale normalization, histogram equalization, and noise filtering using Gaussian blur to improve feature extraction.
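Histogram equalization and Gaussian noise filtering can be illustrated with the NumPy sketch below. It uses the textbook CDF-based equalization and a separable Gaussian kernel with a radius of about 3σ. Both the kernel width (σ = 1) and the function names are assumptions, not the parameters used in the study.

```python
import numpy as np


def equalize_histogram(gray_u8: np.ndarray) -> np.ndarray:
    """Classic CDF-based histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(gray_u8.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]          # CDF value of the darkest occupied level
    total = gray_u8.size
    if total == cdf_min:               # constant image: leave unchanged
        return gray_u8.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    return np.clip(lut, 0, 255).astype(np.uint8)[gray_u8]


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel with radius ~3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian blur: filter rows, then columns (reflect padding)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(np.float64), r, mode="reflect")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def preprocess_texture(gray_u8: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Equalize contrast, then suppress pixel noise before feature extraction."""
    return gaussian_blur(equalize_histogram(gray_u8), sigma)
```

Because the 2-D Gaussian factorizes into two 1-D passes, the cost per pixel grows with the kernel length rather than its square.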

The training dataset consisted of 3200 RGB soil images (800 samples per texture class). Images were resized to pixels and normalized. To improve robustness, data augmentation was applied through random rotations (20°), horizontal flips, and brightness variations (). The dataset was split into 70% training, 15% validation, and 15% testing. The CNN was trained for 50 epochs with a batch size of 32, using the Adam optimizer (learning rate = 0.001) and categorical cross-entropy loss. Early stopping (monitoring validation loss; patience = 10) was implemented to prevent overfitting.
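The split sizes and the stopping rule above can be made concrete with a short sketch. With 800 images per class, a per-class 70/15/15 split gives 560/120/120 images, or 2240/480/480 over the four classes. The early-stopping helper below implements "stop after 10 epochs without improvement in validation loss". The class and function names are hypothetical; in MATLAB the equivalent behaviour is normally configured through the training options rather than written by hand.

```python
def split_counts(n: int, fractions=(0.70, 0.15, 0.15)):
    """Integer train/val/test sizes; any rounding remainder goes to training."""
    val = round(n * fractions[1])
    test = round(n * fractions[2])
    return n - val - test, val, test


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 10, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.wait = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience


if __name__ == "__main__":
    print(split_counts(800), split_counts(3200))
```

In a 50-epoch loop, `step` is called once per epoch and the weights from `best_epoch` are kept, so training ends at most 10 epochs after the last improvement.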

2.3.2. Moisture Estimation Using CNN

This subsystem estimates soil moisture using a convolutional neural network (CNN) trained on soil surface images. The input image is resized, normalized (scaled by ), and converted to single precision before being fed into the CNN block. The network processes the visual features and outputs a predicted moisture level, which is then scaled and displayed as the percentage Volumetric Water Content (VWC).

The training dataset included 2400 soil surface images representing the full 0–100% VWC range. Images were resized to pixels and normalized. Data augmentation was performed with random rotations (20°) and brightness variations () to simulate variable field lighting. The dataset was divided into 70% training, 15% validation, and 15% testing. Training was conducted for 50 epochs with a batch size of 32, using the Adam optimizer (learning rate = 0.001) and mean squared error (MSE) loss. Early stopping (monitoring validation loss; patience = 10) ensured generalization without overfitting. Validation and testing accuracy, latency, and F1-scores are summarized in Table 1.

Table 1.

Performance comparison of CNN and baseline methods with the number of test samples (N).
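The moisture network is trained as a regressor with MSE loss, yet Table 1 reports accuracy and F1 for it. The text does not state how the continuous output was turned into classes. The sketch below shows one plausible way, stated as an assumption: the network output is taken to be normalized to [0, 1], scaled to % VWC, and binned into the dry/moderate/wet ranges of Section 2.2.2 before counting matches. All names are hypothetical.

```python
def mse(pred, target):
    """Mean squared error, the training loss named in the text."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and equally long")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def to_vwc_percent(y: float) -> float:
    """Assumes a network output normalized to [0, 1]; clamp, then scale to % VWC."""
    return 100.0 * min(max(y, 0.0), 1.0)


def vwc_class(vwc_pct: float) -> str:
    """Same dry / moderate / wet ranges as the capacitive sensor model."""
    if vwc_pct < 40.0:
        return "dry"
    if vwc_pct < 60.0:
        return "moderate"
    return "wet"


def binned_accuracy(pred_vwc, true_vwc) -> float:
    """Hypothetical: share of samples whose predicted and true VWC share a range."""
    hits = sum(vwc_class(p) == vwc_class(t) for p, t in zip(pred_vwc, true_vwc))
    return hits / len(true_vwc)
```

Binning makes near-boundary errors count as misclassifications (e.g. 55% predicted vs. 62% true), so a binned accuracy can be lower than a small RMSE would suggest.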

2.3.3. Root Density Estimation via Image Processing

To simulate root development in soil, the system incorporates an image analysis pipeline that estimates root density from cross-sectional soil images. The process includes image acquisition simulation (camera block), contrast enhancement, binary thresholding, and morphological operations to extract root features. The extracted root area index (RAI) is calculated as the ratio of root pixels to total pixels in the region of interest. This value feeds into the Decision Support Layer to adjust recommendations on nutrient availability, irrigation, and harvest timing.
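The root area index can be sketched as $\mathrm{RAI} = N_{\text{root}} / N_{\text{ROI}}$, computed after thresholding and a morphological clean-up. Because the text only says "binary thresholding", this sketch swaps in Otsu's method as one common choice. It also assumes that roots appear brighter than the soil and applies a 3 × 3 morphological opening to remove isolated noise pixels. These are illustrative assumptions, not the authors' exact pipeline.

```python
import numpy as np


def otsu_threshold(gray_u8: np.ndarray) -> int:
    """Otsu's threshold: maximize between-class variance over all 8-bit levels."""
    hist = np.bincount(gray_u8.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # probability of class "<= t"
    mu = np.cumsum(prob * np.arange(256))      # cumulative mean of that class
    mu_t = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_t * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b[~np.isfinite(sigma_b)] = 0.0       # empty classes contribute nothing
    return int(np.argmax(sigma_b))


def _neighbourhood(mask: np.ndarray) -> np.ndarray:
    """Stack the 3x3 neighbourhood of every pixel (zero padding at the border)."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def binary_open(mask: np.ndarray) -> np.ndarray:
    """Morphological opening = erosion followed by dilation (3x3 square)."""
    eroded = _neighbourhood(mask).all(axis=0)
    return _neighbourhood(eroded).any(axis=0)


def root_area_index(gray_u8: np.ndarray, roi=None, roots_are_bright: bool = True) -> float:
    """RAI = root pixels / total pixels inside the region of interest."""
    t = otsu_threshold(gray_u8)
    mask = gray_u8 > t if roots_are_bright else gray_u8 <= t
    mask = binary_open(mask)
    if roi is None:
        roi = np.ones_like(mask, dtype=bool)
    n_roi = roi.sum()
    return float(mask[roi].sum() / n_roi) if n_roi else 0.0
```

For example, a 20 × 20 cross-section with one 4-pixel-wide root running its full height yields RAI = 80/400 = 0.2, and a single bright speck elsewhere is removed by the opening instead of being counted as root.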

This modular AI approach enables the system to autonomously interpret environmental cues and emulate expert decision-making strategies based on traditional knowledge enhanced by machine learning.

2.4. Integration and Testing Within Simulink

The system was integrated and tested in MATLAB/Simulink R2025a, enabling modular development and real-time simulation of all layers shown in Figure 1. The platform supports multidomain simulation and hardware-in-the-loop (HIL), both essential for smart agriculture. Data exchange between modules was handled through Data Store Memory blocks, emulating a centralized data bus, while virtual sensors generated 1 Hz time-series signals to reproduce field dynamics. AI components were implemented with MATLAB Function blocks, embedding customized CNNs and image-processing pipelines validated using synthetic datasets under varying soil and lighting conditions. System performance was evaluated through test cases assessing sensor latency, classification accuracy, and root-density estimation errors across diverse environmental scenarios.
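The data flow described above (1 Hz virtual sensors writing to a shared store that downstream layers read) can be mimicked outside Simulink with a simple loop. This is a conceptual sketch only: the `DataStore` class stands in for the Data Store Memory blocks, and the diurnal temperature profile, drying curve, and irrigation rule are all hypothetical. A 24 h run at 1 Hz corresponds to 86,400 time steps.

```python
import math
from dataclasses import dataclass, field


@dataclass
class DataStore:
    """Hypothetical stand-in for a shared Data Store Memory region (central bus)."""
    values: dict = field(default_factory=dict)

    def write(self, name: str, value: float) -> None:
        self.values[name] = value

    def read(self, name: str) -> float:
        return self.values[name]


def soil_temperature(t_s: float) -> float:
    """Hypothetical diurnal soil-temperature profile: 15 +/- 5 degC over 24 h."""
    return 15.0 + 5.0 * math.sin(2.0 * math.pi * t_s / 86400.0)


def soil_moisture(t_s: float) -> float:
    """Hypothetical slow drying curve in % VWC over one day."""
    return 35.0 - 10.0 * t_s / 86400.0


def run_simulation(duration_s: int = 86400, dt_s: float = 1.0):
    store = DataStore()
    steps = int(duration_s / dt_s)       # 1 Hz over 24 h -> 86,400 steps
    irrigation_flags = 0
    for k in range(steps):
        t = k * dt_s
        # Sensor layer writes its samples to the shared store ...
        store.write("soil_temp_c", soil_temperature(t))
        store.write("vwc_pct", soil_moisture(t))
        # ... and the decision layer reads them within the same step.
        if store.read("vwc_pct") < 30.0 and store.read("soil_temp_c") > 12.0:
            irrigation_flags += 1
    return steps, irrigation_flags, store
```

Routing every exchange through one store, as the Data Store Memory approach does, keeps the layers decoupled: a CNN block can be replaced without rewiring the sensor or decision subsystems.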

3. Implementation and Results

3.1. Environmental Sensor Simulation

The data acquisition layer includes a virtual PT100 temperature model, a capacitive moisture model, and a soil density classifier derived from the analog moisture response. Each model was validated against manufacturer specifications or literature data, using independent test sets of 180–200 samples. The resulting accuracy metrics are presented in Table 2.

Table 2.

Simulated parameters of environmental sensors including the number of test samples (N).

The simulation of environmental sensors focused on replicating the behavior of key agronomic variables using MATLAB/Simulink R2025a. As shown in Table 2, the temperature sensor was modeled as a linear RTD (PT100), providing accurate measurements across a 0–50 °C range with a root mean square error (RMSE) of 0.42 °C. The soil moisture sensor was designed using a non-linear exponential model, calibrated to reflect volumetric water content (VWC) values from 10% to 45%, achieving a simulation RMSE of 1.9%. For soil density, a binary classifier was implemented using thresholds derived from the water retention (VWC) curve, distinguishing between dense and non-dense substrates. This classification is important for modeling root–soil interaction; no RMSE is reported for it because its output is categorical.
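For reference, the RMSE values above follow the standard definition. Here $N$ is the number of test samples listed in Table 2, $\hat{y}_i$ is the simulated sensor reading, and $y_i$ is the corresponding reference value:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_i - y_i\right)^2}
$$

Because RMSE carries the units of the measured variable, 0.42 °C and 1.9% VWC can be compared directly against the tolerances of the corresponding physical sensors.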

These results confirm the simulated sensors’ ability to generate reliable input signals for downstream AI-based processing and decision-making modules, enabling robust testing under varying soil conditions without the need for physical hardware.

3.2. AI-Based Image Modules

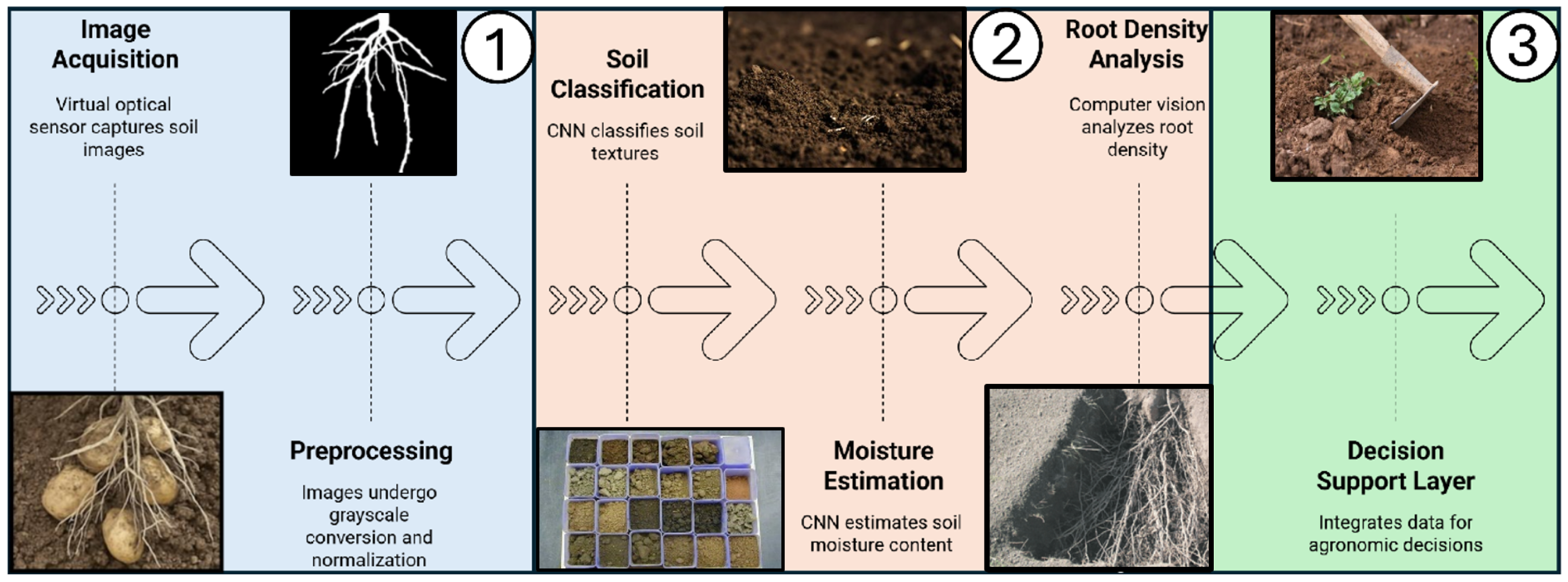

RGB soil images were processed using two CNN blocks (soil type and moisture) and a root-density module. Results are summarized in Table 1: soil classification achieved 93.4% accuracy (F1 = 0.92), moisture estimation 91.8% (F1 = 0.90), and root-density classification 90.2% (F1 = 0.89), all with inference latencies below 200 ms. Figure 2 shows the image-processing pipeline, where soil images are preprocessed and analyzed by CNNs, and extracted features feed into the decision-support layer for agronomic optimization.

Figure 2.

Image acquisition and preprocessing pipeline (left) leading to CNN-based classification of soil textures, moisture estimation, and root-density analysis (center), with integration into the decision-support layer (right).

As shown in Figure 2, the binarized root images are directly used for root-density classification. The corresponding quantitative results in Table 1 confirm the reliability of this pipeline, with the root-density module achieving 90.2% accuracy and an F1-score of 0.89. This explicit link between visual transformations and numerical performance demonstrates how qualitative image analysis translates into robust quantitative evaluation.

For comparison, classical machine learning models were also tested on the same datasets. A Support Vector Machine (SVM, RBF kernel) and a Decision Tree used grayscale histograms and texture descriptors as input features.
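The kind of hand-crafted input used by the classical baselines can be sketched as follows. The text names only "grayscale histograms and texture descriptors". This sketch assumes a 16-bin normalized histogram plus three gray-level co-occurrence matrix (GLCM) statistics (contrast, homogeneity, energy) computed from horizontal neighbour pairs at 8 gray levels. All parameter choices are assumptions.

```python
import numpy as np


def histogram_features(gray_u8: np.ndarray, bins: int = 16) -> np.ndarray:
    """Normalized grayscale histogram (sums to 1)."""
    hist, _ = np.histogram(gray_u8, bins=bins, range=(0, 256))
    return hist / hist.sum()


def glcm_features(gray_u8: np.ndarray, levels: int = 8) -> np.ndarray:
    """Contrast, homogeneity and energy of a horizontal-offset GLCM."""
    q = (gray_u8.astype(np.int64) * levels) // 256      # quantize to `levels` grey levels
    a, b = q[:, :-1].ravel(), q[:, 1:].ravel()           # left/right neighbour pairs
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (a, b), 1.0)                         # count co-occurrences
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    contrast = np.sum(glcm * (i - j) ** 2)
    homogeneity = np.sum(glcm / (1.0 + np.abs(i - j)))
    energy = np.sum(glcm ** 2)
    return np.array([contrast, homogeneity, energy])


def feature_vector(gray_u8: np.ndarray) -> np.ndarray:
    """16 histogram bins + 3 texture statistics = 19 features per image."""
    return np.concatenate([histogram_features(gray_u8), glcm_features(gray_u8)])
```

Stacking one such vector per image gives a feature matrix that could be passed to, for example, scikit-learn's `SVC(kernel="rbf")` or `DecisionTreeClassifier`. Since the features are compact, inference for these baselines is cheap, which is consistent with their lower latency.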

As seen in Table 1, the classical methods reached moderate accuracy (70–82%) but were consistently outperformed by the CNNs (90–93%). These results were obtained using independent test subsets of samples for soil classification, for moisture estimation, and for root-density classification. Although the SVM and Decision Tree had lower inference latency (<70 ms), the CNNs provided superior accuracy and F1-scores, confirming their advantage for complex soil and moisture classification tasks while remaining real-time capable.
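Latency claims such as "below 200 ms" or "<70 ms" depend on how timing is measured. The sketch below shows one simple approach: a few warm-up calls, then repeated wall-clock timing with `time.perf_counter`, reporting mean, 95th percentile, and maximum. The `infer` callable stands in for any model's prediction function. The warm-up count, run count, and budget check are illustrative assumptions.

```python
import statistics
import time


def measure_latency_ms(infer, sample, n_runs: int = 50, warmup: int = 5) -> dict:
    """Time repeated calls of `infer(sample)` and summarize in milliseconds."""
    for _ in range(warmup):              # exclude one-off start-up costs
        infer(sample)
    timings = []
    for _ in range(n_runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    p95 = timings[min(len(timings) - 1, int(round(0.95 * (len(timings) - 1))))]
    return {"mean_ms": statistics.fmean(timings), "p95_ms": p95, "max_ms": timings[-1]}


def within_budget(stats: dict, budget_ms: float = 200.0) -> bool:
    """Real-time criterion: even the slowest observed call stays under budget."""
    return stats["max_ms"] < budget_ms
```

Checking the maximum rather than the mean is the stricter reading of "below 200 ms", since a real-time loop fails on its worst call, not its average one.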

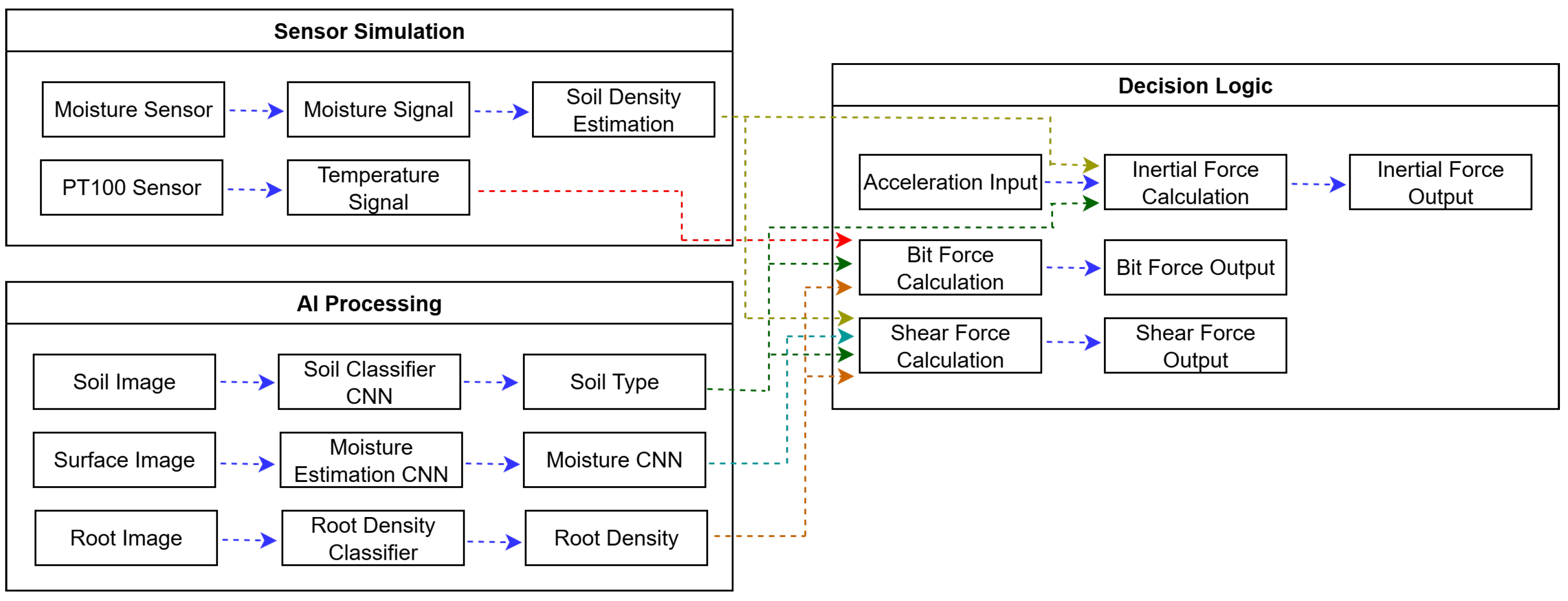

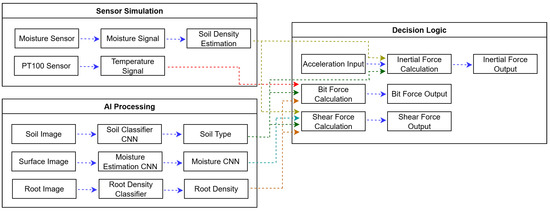

3.3. Integrated Decision-Support

Figure 3 illustrates the complete integration of the AI-based modules within the Simulink environment. The diagram shows how temperature, moisture, and soil density data—obtained from simulated sensors and neural networks—are combined with image-based classifiers for soil type, root density, and image-estimated moisture. These variables are fed into decision logic blocks that calculate three critical agronomic outputs, bit force, shear force, and inertial force, which support tillage optimization in the context of “Huacho Rosado” cultivation. The system architecture ensures real-time data flow through a centralized processing bus, enabling synchronized computation and supporting smart decision-making under variable field conditions.

Figure 3.

Integrated AI-based data acquisition and decision system in Simulink. The framework includes (1) Sensor Simulation, generating temperature, moisture, and density signals; (2) AI Processing, where CNN modules classify soil type, estimate moisture, and analyze root density; and (3) Decision Logic, which calculates inertial, bit, and shear forces to support agronomic decision-making.

Outputs from the sensor layer and CNNs are merged in the decision-support layer, where the agronomic forces required for “Huacho Rosado” tillage are calculated. Typical operating ranges are listed in Table 3. Each output is computed based on critical input parameters such as soil type, root density, and soil moisture.

Table 3.

Agronomic Output Variables Derived from AI-Based Environmental and Image Inputs.

Bit force (0–120 N) is determined primarily by soil temperature, density, and texture, and serves as a key metric for optimizing tillage depth and minimizing mechanical resistance during sub-surface field operations. Shear force (0–95 N), derived from root density, soil granularity, and moisture, is used to calibrate root-cutting mechanisms, reducing crop damage and energy losses. Inertial force (0–150 N), calculated from acceleration and soil density, is central to evaluating implement stability and enables real-time adjustments that mitigate vibration and maintain operational precision across heterogeneous soils.

Collectively, these agronomic outputs illustrate the decision-support capabilities of the integrated system, which combines analog sensor simulations with CNN-based image analysis. In the context of “Huacho Rosado” potato cultivation, this combination enables site-specific management and improves the adaptability of smart agro-robotic platforms.

During a continuous 24-h simulation, the integrated model remained operationally stable, with CNN inference latencies consistently below 200 ms and no communication bottlenecks observed. Fusing vision-based AI with simulated sensor data yielded robust performance, providing redundant moisture estimates and consistent soil-density classification, both essential for informed irrigation scheduling and adaptive traction control in precision agriculture.
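The structure of the decision logic (which inputs feed which force, and the operating ranges from Table 3) can be sketched as below. Only the input–output assignments and the ranges come from the text. The texture factors, weights, temperature adjustment, and moved-soil volume are hypothetical placeholders chosen to keep outputs within range. The inertial term uses Newton's second law, $F = m a$, with the mass taken as soil density times a hypothetical moved volume.

```python
from dataclasses import dataclass

BIT_MAX_N, SHEAR_MAX_N, INERTIAL_MAX_N = 120.0, 95.0, 150.0  # Table 3 ranges

# Hypothetical relative resistance per texture class (0-1); not from the source.
TEXTURE_FACTOR = {"clayey": 1.0, "limestone": 0.8, "humiferous": 0.5, "sandy": 0.4}


@dataclass
class Inputs:
    soil_temp_c: float
    dense: bool                 # binary density classifier output
    texture: str                # CNN soil-texture class
    root_area_index: float      # 0-1, from the root-density module
    vwc_percent: float          # 0-100
    granularity: float          # hypothetical 0-1 granularity score
    accel_m_s2: float
    soil_density_kg_m3: float


def clamp(x: float, lo: float, hi: float) -> float:
    return min(max(x, lo), hi)


def bit_force(inp: Inputs) -> float:
    """Temperature, density, texture -> bit force (hypothetical weighting)."""
    temp_factor = clamp(1.0 - 0.01 * (inp.soil_temp_c - 10.0), 0.5, 1.0)
    base = TEXTURE_FACTOR[inp.texture] * (1.0 if inp.dense else 0.6) * temp_factor
    return clamp(BIT_MAX_N * base, 0.0, BIT_MAX_N)


def shear_force(inp: Inputs) -> float:
    """Root density, granularity, moisture -> shear force (hypothetical weighting)."""
    moisture_factor = 1.0 - 0.5 * clamp(inp.vwc_percent / 100.0, 0.0, 1.0)
    base = 0.6 * clamp(inp.root_area_index, 0.0, 1.0) + 0.4 * clamp(inp.granularity, 0.0, 1.0)
    return clamp(SHEAR_MAX_N * base * moisture_factor, 0.0, SHEAR_MAX_N)


def inertial_force(inp: Inputs, moved_volume_m3: float = 0.002) -> float:
    """F = m * a with m = soil density * moved volume (volume is hypothetical)."""
    mass_kg = inp.soil_density_kg_m3 * moved_volume_m3
    return clamp(mass_kg * inp.accel_m_s2, 0.0, INERTIAL_MAX_N)
```

Clamping each output to its Table 3 range keeps downstream actuator commands bounded even when upstream estimates are noisy; in a calibrated system, the placeholder weights would be replaced by relationships fitted to field or laboratory measurements.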

4. Conclusions

This study presented a digital twin for sustainable potato cultivation, integrating virtual sensors, CNN-based modules for soil classification, moisture estimation, and root-density analysis into a MATLAB/Simulink R2025a environment. The system demonstrated reliable performance in simulation, with accuracy levels above 90% for the CNN models and inference latencies below 200 ms, ensuring real-time applicability. A key contribution of the study is the combination of advanced AI methods with the ancestral “Huacho Rosado” potato cultivation technique, which emphasizes biodiversity preservation, minimal tillage, and green manuring. By merging traditional knowledge with computational intelligence, the framework highlights both cultural relevance and technical innovation, offering a reproducible and low-cost solution for resource-limited agricultural contexts. Future work will focus on validating the CNN-based modules with experimental field datasets from local potato plots, testing the robustness of the digital twin under diverse environmental and operational conditions, and extending the framework with UAV-based imaging to enable scalable soil and crop monitoring. These steps will provide experimental evidence of system accuracy, enhance its generalizability, and support its eventual deployment as a practical decision-support tool for precision agriculture.

Author Contributions

Conceptualization, A.C.S.; methodology, A.C.S.; software, S.M.Q.; validation, P.P. and S.M.Q.; formal analysis, P.P.; investigation, A.C.S. and S.M.Q.; writing—original draft preparation, A.C.S.; writing—review and editing, P.P.; visualization, S.M.Q.; supervision, A.C.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because it involved no humans or animals.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

During the preparation of this manuscript, the authors used OpenAI GPT-4 to support the translation from Spanish to English and to improve the clarity and technical consistency of the text. The authors reviewed and edited all AI-generated suggestions and take full responsibility for the final content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, C.; Kovacs, J.M. The Application of Small Unmanned Aerial Systems for Precision Agriculture: A Review. Precis. Agric. 2012, 13, 693–712.

- Arshad, J.; Sheheryar, C.A.A.; Rahmani, M.K.I.; Qayyum, A.; Nasir, R.; Chauhdary, S.T.; Almalki, K.J. Simulink-Driven Digital Twin Implementation for Smart Greenhouse Environmental Control. Eng. Innov. J. 2025, 10, 100679.

- Mulla, D.J. Twenty-Five Years of Remote Sensing in Precision Agriculture: Key Advances and Remaining Knowledge Gaps. Biosyst. Eng. 2013, 114, 358–371.

- Gebbers, R.; Adamchuk, V.I. Precision Agriculture and Food Security. Science 2010, 327, 828–831.

- Pumisacho, M.; Sherwood, S. El Cultivo de la Papa en Ecuador; INIAP: Quito, Ecuador, 2002.

- Altieri, M.A.; Nicholls, C.I. Agroecology and the Search for a Truly Sustainable Agriculture; United Nations Environment Programme (UNEP): Nairobi, Kenya, 2005.

- Cutini, M.; Bisaglia, C.; Brambilla, M.; Bragaglio, A.; Pallottino, F.; Assirelli, A.; Romano, E.; Montaghi, A.; Leo, E.; Pezzola, M.; et al. A Co-Simulation Virtual Reality Machinery Simulator for Advanced Precision Agriculture Applications. Agriculture 2023, 13, 1603.

- Tapia-Mendez, E.; Hernandez-Sandoval, M.; Salazar-Colores, S.; Cruz-Albarran, I.A.; Tovar-Arriaga, S.; Morales-Hernandez, L.A. A Novel Deep Learning Approach for Precision Agriculture: Quality Detection in Fruits and Vegetables Using Object Detection Models. Agronomy 2025, 15, 1307.

- Chourlias, A.; Violos, J.; Leivadeas, A. Virtual Sensors for Smart Farming: An IoT- and AI-Enabled Approach. Internet Things 2025, 21, 101611.

- MathWorks Inc. Simulink User’s Guide; MathWorks: Natick, MA, USA, 2021; Available online: https://www.mathworks.com (accessed on 12 June 2025).

- Padhiary, M.; Roy, P.; Kumar, K. Simulation Software in the Design and AI-Driven Automation of All-Terrain Farm Vehicles and Implements for Precision Agriculture. Recent Prog. Sci. Eng. 2025, 1, 6.

- López-Granados, F. Weed Detection for Site-Specific Weed Management: Mapping and Real-Time Approaches. Weed Res. 2011, 51, 1–11.

- Zhang, X.; Feng, G.; Sun, X. Advanced Technologies of Soil Moisture Monitoring in Precision Agriculture: A Review. J. Agric. Food Res. 2024, 13, 101473.

- Santos, T.T.; Valle, R.C.S.C.; Silva, F.M.; Amaral, A.J.; Campos, A.T.; Saraz, J.A.O. Modeling and Simulation of Temperature and Relative Humidity Inside a Growth Chamber. Energies 2019, 12, 4056.

- Tzounis, A.; Katsoulas, N.; Bartzanas, T.; Kittas, C. Internet of Things in Agriculture, Recent Advances and Future Challenges. Biosyst. Eng. 2017, 164, 31–48.

- Adamchuk, V.I.; Hummel, J.W.; Morgan, M.T.; Upadhyaya, S.K. On-the-Go Soil Sensors for Precision Agriculture. Comput. Electron. Agric. 2004, 44, 71–91.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep Learning in Agriculture: A Survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Babalola, E.O.; Asad, M.H.; Bais, A. Soil Surface Texture Classification Using RGB Images Acquired Under Uncontrolled Field Conditions. IEEE Access 2023, 11, 67774–67785.

- Dwivedi, R.K.; Kumari, N.; Bishnoi, A.; Pandey, R.P. Soil Identification and Classification Using Machine Learning: A Review. IEEE Access 2022, 11, 958–963.

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

- Kim, D.; Kim, T.; Jeon, J.; Son, Y. Convolutional Neural Network-Based Soil Water Content and Density Prediction Model for Agricultural Land Using Soil Surface Images. Appl. Sci. 2023, 13, 2936.

- Wang, Y.; Shi, L.; Hu, Y.; Hu, X.; Song, W.; Wang, L. A Comprehensive Study of Deep Learning for Soil Moisture Prediction: Insights on Multiple Network Structures. Hydrol. Earth Syst. Sci. 2024, 28, 917–943.

- Jiang, C.; Guo, X.; Li, Y.; Lai, N.; Peng, L.; Geng, Q. Multimodal Deep Learning Models in Precision Agriculture: Cotton Yield Prediction Based on Unmanned Aerial Vehicle Imagery and Meteorological Data. Agronomy 2025, 15, 1217.

- Jiang, H.; Zhang, C.; Qiao, Y.; Zhang, Z.; Zhang, W.; Song, C. CNN Feature Based Graph Convolutional Network for Weed and Crop Recognition in Smart Farming. Comput. Electron. Agric. 2020, 179, 105450.

- Linaza, M.; Posada, J.; Bund, J.; Eisert, P.; Quartulli, M.; Döllner, J.; Pagani, A.; Olaizola, I.; Barriguinha, A.; Moysiadis, T.; et al. Data-Driven Artificial Intelligence Applications for Sustainable Precision Agriculture. Agronomy 2021, 11, 1227.

- Sattar, K.; Maqsood, U.; Hussain, Q.; Majeed, S.; Kaleem, S.; Babar, M.; Qureshi, B. Soil Texture Analysis Using Controlled Image Processing. Agric. Technol. 2024, 4, 100588.

- Baumer Group. Pt100 Sensor Data Sheet. 2020. Available online: https://www.baumer.com (accessed on 17 June 2025).

- DFRobot. Capacitive Soil Moisture Sensor SKU: SEN0193. 2020. Available online: https://www.dfrobot.com (accessed on 20 June 2025).

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Pinter, P.J.; Hatfield, J.L.; Schepers, J.S.; Barnes, E.M.; Moran, M.S.; Daughtry, C.S.T.; Upchurch, D.R. Remote Sensing for Crop Management. Photogramm. Eng. Remote Sens. 2003, 69, 647–664.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).