Leveraging Large Language Models and Data Augmentation in Cognitive Computing to Enhance Stock Price Predictions †

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Word Embedding

3.2. Cognitive Computing Models

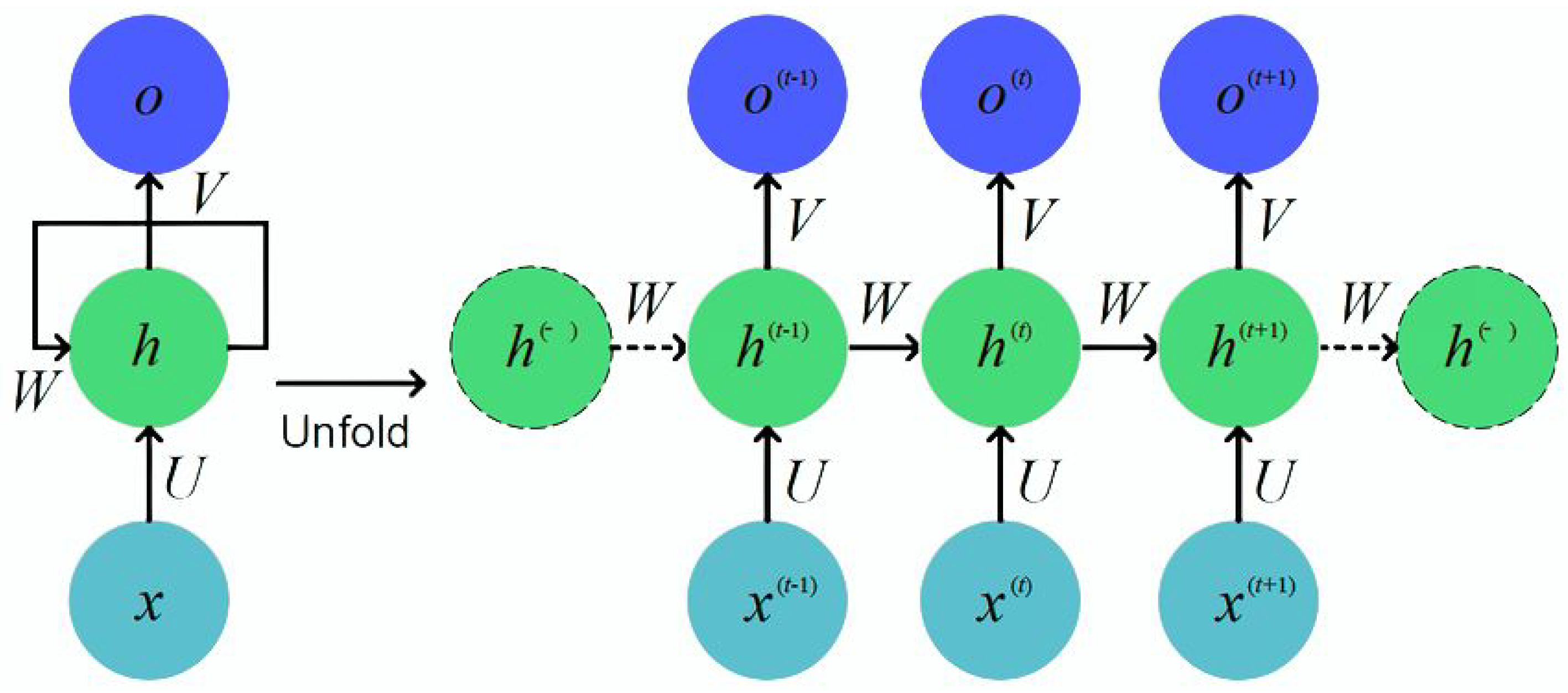

3.2.1. RNN

3.2.2. Multilingual BERT (mBERT)

3.2.3. RoBERTa

3.2.4. GPT-4

4. Results and Discussion

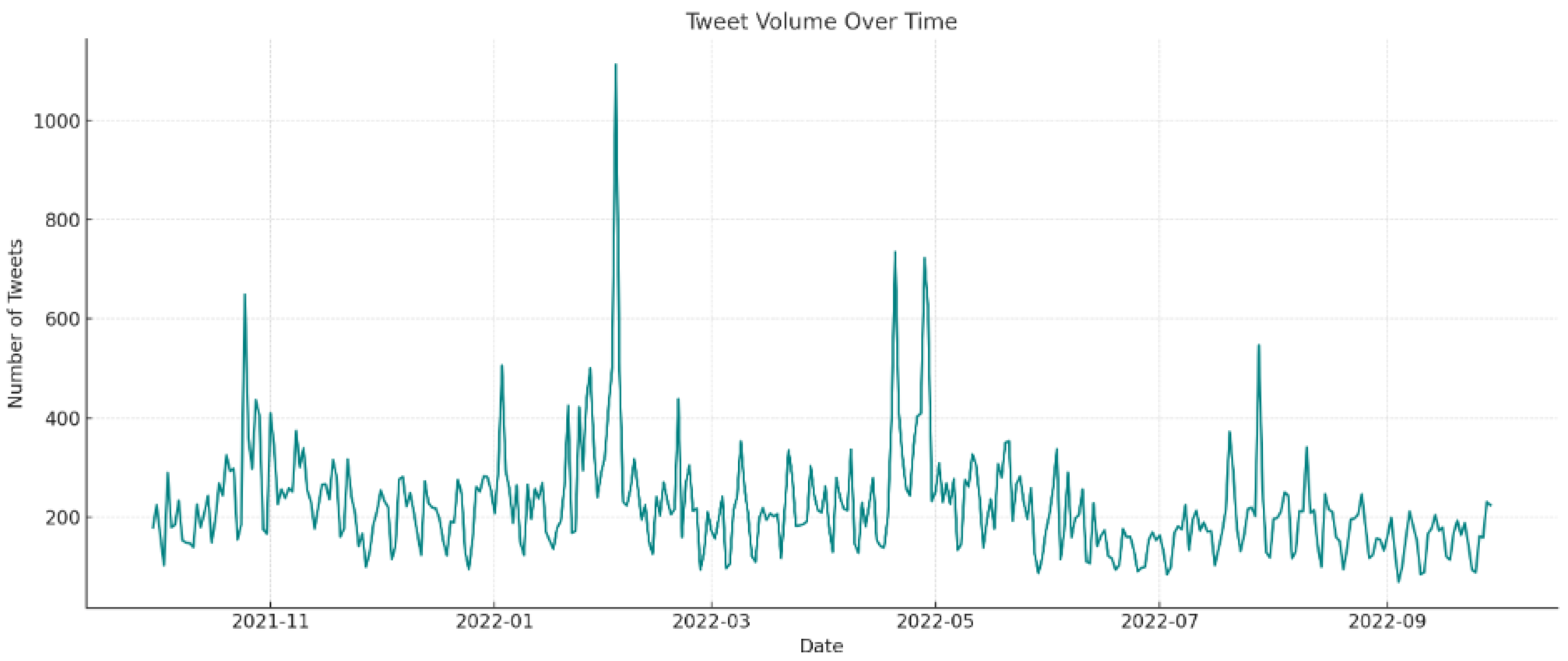

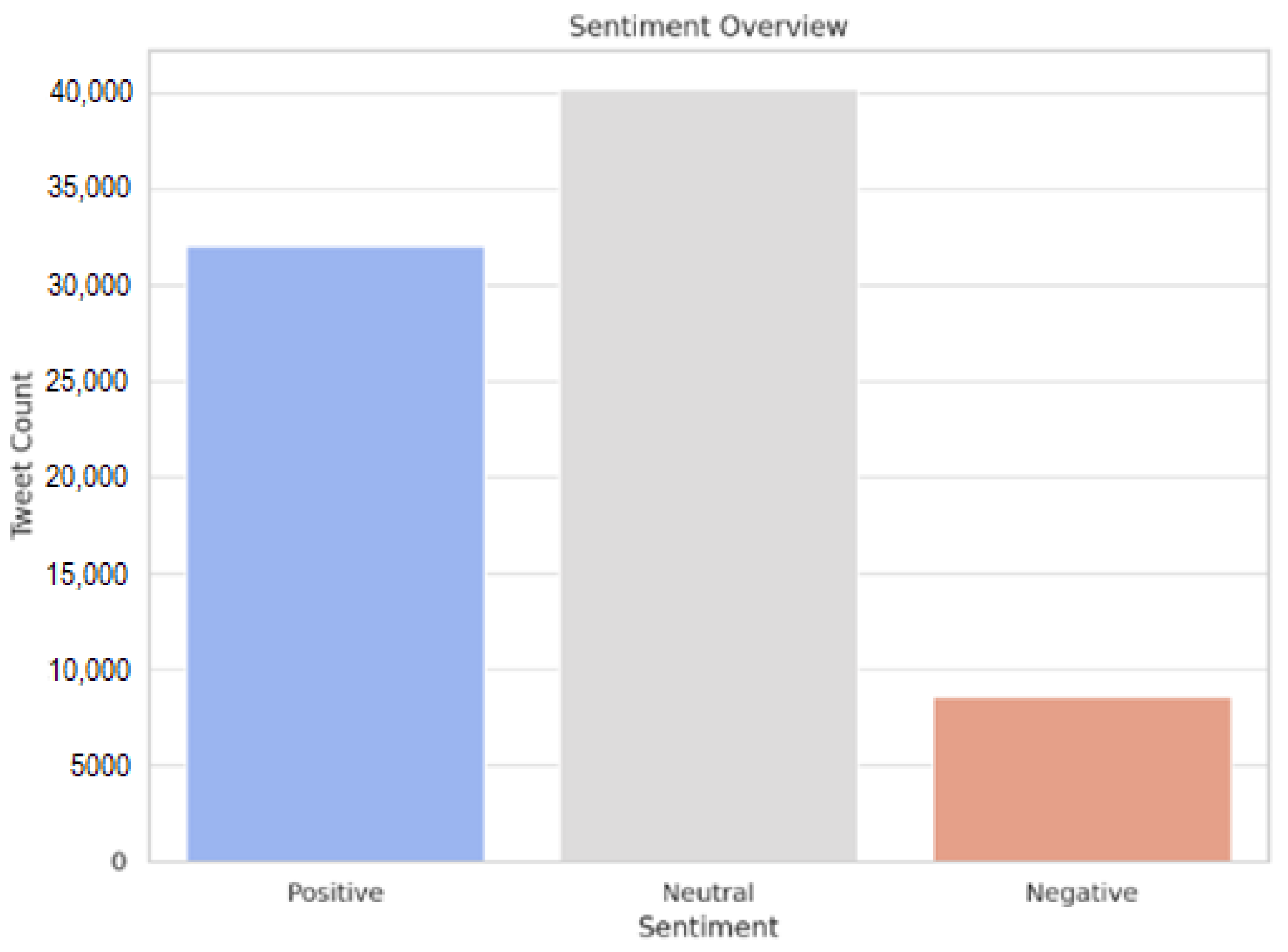

4.1. Dataset

4.2. Pre-Processing Using NLP

4.3. Results Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BERT | Bidirectional Encoder Representations from Transformers |
| NLP | Natural Language Processing |
| LLM | Large Language Model |
| GPT | Generative Pre-trained Transformer |
| RNN | Recurrent Neural Network |


| Model | Accuracy | F1-Score | Recall | Precision | Preprocessing | Data Augmentation |
|---|---|---|---|---|---|---|
| RNN | 0.823 | 0.810 | 0.792 | 0.836 | Yes | Yes (GPT-generated) |
| mBERT | 0.877 | 0.869 | 0.855 | 0.884 | Yes | Yes (GPT-generated) |
| RoBERTa | 0.891 | 0.882 | 0.871 | 0.893 | Yes | Yes (GPT-generated) |
| GPT-4 | 0.919 | 0.920 | 0.911 | 0.928 | Yes | Yes (GPT-generated) |

| Model | Accuracy | F1-Score | Recall | Precision | Preprocessing | Data Augmentation |
|---|---|---|---|---|---|---|
| RNN | 0.808 | 0.795 | 0.777 | 0.820 | Yes | No |
| mBERT | 0.861 | 0.853 | 0.839 | 0.868 | Yes | No |
| RoBERTa | 0.874 | 0.866 | 0.855 | 0.876 | Yes | No |
| GPT-4 | 0.902 | 0.903 | 0.894 | 0.911 | Yes | No |
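The two results tables differ only in whether GPT-generated data augmentation was applied, so the per-model effect of augmentation can be read off by subtraction. The following is a minimal sketch of that arithmetic, using the accuracy and F1-score values copied from the tables; the dictionary layout and the function name `augmentation_gain` are illustrative assumptions and are not taken from the authors' code.

```python
# Accuracy and F1-score values copied from the two results tables.
# The data layout and function name are hypothetical, for illustration only.
WITH_AUG = {
    "RNN": {"accuracy": 0.823, "f1": 0.810},
    "mBERT": {"accuracy": 0.877, "f1": 0.869},
    "RoBERTa": {"accuracy": 0.891, "f1": 0.882},
    "GPT-4": {"accuracy": 0.919, "f1": 0.920},
}

WITHOUT_AUG = {
    "RNN": {"accuracy": 0.808, "f1": 0.795},
    "mBERT": {"accuracy": 0.861, "f1": 0.853},
    "RoBERTa": {"accuracy": 0.874, "f1": 0.866},
    "GPT-4": {"accuracy": 0.902, "f1": 0.903},
}


def augmentation_gain(metric):
    """Return, per model, (with augmentation - without) for one metric,
    rounded to three decimals to match the precision of the tables."""
    return {
        model: round(WITH_AUG[model][metric] - WITHOUT_AUG[model][metric], 3)
        for model in WITH_AUG
    }


if __name__ == "__main__":
    for metric in ("accuracy", "f1"):
        print(metric, augmentation_gain(metric))
```

Under these table values, every model gains between 0.015 and 0.017 in both accuracy and F1-score when augmentation is applied, and the ranking of the four models is the same in both settings.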

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Habbat, N.; Nouri, H.; Berradi, Z. Leveraging Large Language Models and Data Augmentation in Cognitive Computing to Enhance Stock Price Predictions. Eng. Proc. 2025, 112, 40. https://doi.org/10.3390/engproc2025112040
