Abstract

The review “Symbolic Approaches for Explainable Artificial Intelligence” discusses the potential of symbolic AI to improve transparency, contrasting it with opaque deep learning systems. Although connectionist models perform well, their poor interpretability raises concerns about bias and trust in high-stakes fields such as healthcare and finance. The review surveys symbolic AI methods (rule-based reasoning, ontologies, and expert systems) together with neuro-symbolic integrations (e.g., DeepProbLog). It examines challenges such as scalability and knowledge integration, and proposes solutions such as dynamic ontologies. The survey concludes by advocating hybrid AI approaches and interdisciplinary collaboration to reconcile technical innovation with ethical and regulatory demands.

1. Introduction

Artificial intelligence (AI) is transforming healthcare, finance, industry, and transport. Yet its adoption is hampered by a persistent problem: the lack of explainability. Understanding AI decisions is critical for maintaining trust, safety, and ethical practice in safety-critical use cases such as medical diagnosis, legal decisions, and autonomous driving [1]. Physicians must be able to justify AI-driven treatment decisions, and autonomous cars must be able to justify emergency actions [2]. Although deep learning has revolutionized image, text, and data processing, it remains a black box because it relies on complex, difficult-to-analyze computations [3]. This opacity creates several risks: hidden bias can lead to unintended discrimination [4]; errors are difficult to trace, which raises legal liability issues [5]; and adoption stays low because users such as physicians and engineers are reluctant to trust systems they do not understand [6]. Symbolic AI, whose rule-based explainability (e.g., MYCIN [7]) was once widespread, is now being combined with deep learning and knowledge graphs to build explainable hybrid systems [8].

For instance, a neural network might identify a tumor, while symbolic rules explain why the finding was flagged [9].

This review aims to:

1. Provide a comprehensive overview of the theoretical foundations, current applications, and challenges of symbolic approaches in explainable AI.

2. Analyze the limitations of traditional symbolic systems (e.g., scalability, rigidity) and explore emerging solutions, including neuro-symbolic AI [8].

3. Discuss future directions in critical fields (e.g., healthcare, cybersecurity, autonomous vehicles) where explainability is a non-negotiable requirement.

4. Foster interdisciplinary research by bridging technical advancements with ethical, legal, and societal considerations.

This paper is structured as follows:

- Foundations of symbolic approaches: Logic, ontologies, and expert systems.

- Comparison with connectionist approaches: Advantages, limitations, and complementarities.

- Current applications: Use cases in healthcare, finance, industry, and law.

- Technical challenges: Scalability, integration with deep learning.

- Future directions: Hybrid AI, dynamic ontologies, regulatory frameworks.

By synthesizing recent research and identifying gaps in the literature, this paper aims to serve as a reference for researchers, practitioners, and policymakers committed to the development of responsible and transparent AI.

2. Methods

This section details the process used to search for, select, and analyze research on symbolic approaches to explainable artificial intelligence (AI). It describes the literature search strategy, the inclusion and exclusion criteria, and the method of analysis and synthesis.

2.1. Literature Search Strategy

The literature search was conducted systematically to identify the most relevant and recent work in symbolic approaches for explainable AI. The process followed established guidelines for systematic reviews in AI research [10].

2.1.1. Sources Used

The following scientific databases were queried:

- IEEE Xplore: for technical articles and conferences in computer science and engineering.

- PubMed: for medical applications of explainable AI [9].

- Google Scholar: for broad, interdisciplinary coverage.

- ACM Digital Library: for works in theoretical and applied computer science.

- SpringerLink: for articles and books on theoretical foundations and practical applications [11].

2.1.2. Keywords and Search Terms

Queries were constructed using combinations of relevant keywords, including: “symbolic AI”; “explainable AI”; “hybrid AI”; “neuro-symbolic AI”; “logical reasoning in AI”; “knowledge representation in AI”; “expert systems and explainability”; “ontologies in AI”. Searches were refined using Boolean operators (AND, OR, NOT) and restricted to articles published between 2018 and 2023 [6].
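As an illustration of how such queries might be assembled, the sketch below combines some of the listed keywords with Boolean operators and a publication-year window. The helper `build_query`, its parameters, and the way terms are grouped are assumptions made for illustration; each database has its own query syntax, and the exact query strings used in the review are not reported.

```python
def build_query(any_of, all_of=(), none_of=(), year_from=2018, year_to=2023):
    """Return a generic Boolean query string and a year-range filter.

    Hypothetical helper: real databases (IEEE Xplore, PubMed, ...) each use
    their own syntax, so the output would need adapting per source.
    """
    def quote(term):
        return f'"{term}"'

    parts = []
    if any_of:
        # Alternatives are grouped with OR inside parentheses.
        parts.append("(" + " OR ".join(quote(t) for t in any_of) + ")")
    # Mandatory terms are joined with AND.
    parts.extend(quote(t) for t in all_of)
    query = " AND ".join(parts)
    # Excluded terms are appended with NOT.
    for term in none_of:
        query += f" NOT {quote(term)}"
    return query, (year_from, year_to)


symbolic_terms = ["symbolic AI", "neuro-symbolic AI", "hybrid AI"]
query, years = build_query(symbolic_terms, all_of=["explainable AI"])
print(query)
print(years)
```

Keeping the year window separate from the query string reflects the fact that most search interfaces expose the date range as a filter rather than as part of the Boolean expression.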

2.2. Inclusion and Exclusion Criteria

In order to guarantee the relevance and quality of the work selected, inclusion and exclusion criteria were applied.

2.2.1. Inclusion Criteria

- Recent articles: Works published between 2018 and 2023 were preferred to reflect the most recent advances [12].

- Literature reviews: Systematic reviews and meta-analyses providing an overview of the field were included [13].

- Applied studies: Works demonstrating practical applications of symbolic approaches in fields such as healthcare, finance, or robotics were selected [8].

- Thematic relevance: Only works directly related to AI explainability and symbolic approaches were included.

2.2.2. Exclusion Criteria

- Irrelevant works: Articles not dealing with explainability or symbolic approaches were excluded.

- Obsolete works: Publications prior to 2018 were excluded, unless they were considered seminal references [14].

- Non-scientific works: Non-peer-reviewed articles, blogs, and opinion pieces were excluded [7].

2.2.3. Selection Process

In the first search in IEEE Xplore, ScienceDirect, and ACM Digital Library, 1532 articles were found. After discarding 282 duplicates, 1250 records remained for screening. Title and abstract screening resulted in the exclusion of 983 articles and 267 full-text articles were assessed. Of the full-text articles assessed, 180 articles were excluded for not meeting the inclusion criteria; thus, 87 studies were included in the final review.
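The selection flow can be checked with simple arithmetic. The short sketch below recomputes each stage from the counts reported above; the variable names are illustrative only.

```python
# Counts reported in the selection process above.
identified = 1532
duplicates = 282
excluded_on_title_abstract = 983
excluded_on_full_text = 180

# Each stage is the previous stage minus the records removed at that step.
screened = identified - duplicates
full_text_assessed = screened - excluded_on_title_abstract
included = full_text_assessed - excluded_on_full_text

for label, value in [
    ("records screened", screened),
    ("full-text articles assessed", full_text_assessed),
    ("studies included", included),
]:
    print(f"{label}: {value}")
```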

2.3. Analysis and Synthesis Approach

Once the relevant documents had been identified, an in-depth analysis was carried out to classify the works and identify trends and gaps in the existing literature [15]. Works were classified according to the approaches used (Table 1).

Table 1. The classification of works based on approaches.

Operationalization of the Classification Process

The classification of works into logical, ontological, expert systems, and hybrid approaches was conducted through a structured process. First, explicit inclusion criteria were defined for each category:

- Logical approaches: Works primarily relying on formal logic, predicate logic, or probabilistic reasoning.

- Ontological approaches: Studies using ontologies or structured knowledge representation frameworks.

- Expert systems: Research focused on rule-based or knowledge-based expert systems, particularly modern implementations.

- Hybrid approaches: Works integrating symbolic methods (e.g., logic, ontologies) with deep learning or other neural techniques.

Two independent reviewers performed the initial categorization to ensure consistency and mitigate bias. Discrepancies were resolved through discussion, with a third reviewer consulted when consensus could not be reached. This multi-reviewer process strengthened the reliability of the classification, as documented in Table 1.
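The reconciliation procedure described above can be sketched as a small decision function. This is a minimal illustration under stated assumptions: the function `reconcile`, the paper identifiers, and the labels are hypothetical, and the review does not report the actual per-paper labels or any agreement statistic.

```python
def reconcile(label_a, label_b, discussion_label=None, third_reviewer_label=None):
    """Return (final_label, how_decided) for one paper.

    Mirrors the described process: independent agreement first, then
    discussion, then a third reviewer if no consensus was reached.
    """
    if label_a == label_b:
        return label_a, "independent agreement"
    if discussion_label is not None:
        return discussion_label, "discussion"
    if third_reviewer_label is not None:
        return third_reviewer_label, "third reviewer"
    return None, "unresolved"


# Hypothetical labels for three papers (IDs and labels are made up).
reviews = {
    "paper_01": dict(label_a="hybrid", label_b="hybrid"),
    "paper_02": dict(label_a="logical", label_b="hybrid", discussion_label="hybrid"),
    "paper_03": dict(label_a="ontological", label_b="expert_system",
                     third_reviewer_label="ontological"),
}

final = {paper: reconcile(**labels) for paper, labels in reviews.items()}

# Share of papers on which the two reviewers agreed before any discussion.
agreement_rate = sum(
    r["label_a"] == r["label_b"] for r in reviews.values()
) / len(reviews)

for paper, (label, how) in final.items():
    print(f"{paper}: {label} ({how})")
print(f"raw agreement before reconciliation: {agreement_rate:.2f}")
```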

Recent advancements in AI highlight a growing synergy between symbolic and connectionist approaches, particularly in domains requiring transparency, such as healthcare and finance. This neuro-symbolic integration addresses the demand for AI systems that balance performance with interpretability, ensuring decisions can be logically justified. However, key challenges remain, including the difficulty of incorporating uncertainty into symbolic reasoning and the inefficiency of manually constructing knowledge bases. The study underscores three critical insights: first, symbolic methods are essential for explainability in high-risk applications; second, technical barriers like scalability and neural-symbolic integration must be overcome; and third, neuro-symbolic AI represents a transformative opportunity by merging structured reasoning with data-driven learning. The findings suggest future research should prioritize hybrid architectures and automated knowledge engineering to realize AI that is both powerful and transparent. The study’s rigorous methodology, combining systematic literature review and structured analysis, provides a comprehensive assessment of the field.

3. Foundations of Symbolic Approaches in Explainable AI

This section explores the foundations of symbolic approaches in artificial intelligence (AI), focusing on their role in the development of explainable AI. It discusses the definition and principles of symbolic approaches, their historical evolution, and the key concepts underlying them, such as logic, ontologies, expert systems and knowledge representation.

3.1. Definition and Principles of Symbolic Approaches

Symbolic AI is a core paradigm that models cognition through structured symbol manipulation and logical rules, in contrast with connectionist approaches [15]. It relies on three key principles: (1) representing knowledge as discrete symbols with a defined syntax [16], (2) applying formal inference (deduction, abduction, induction) to derive new knowledge [17], and (3) ensuring computational transparency so that reasoning can be traced [18]. These traits make symbolic systems well suited to explainability-critical fields such as medicine or law, where they rely on formalisms such as production rules, modal logics, and semantic networks. However, their limited ability to handle uncertainty or contextual data has spurred hybrid models that blend symbolic rigor with neural learning [19].
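The three principles can be made concrete with a very small forward-chaining engine over production rules. The sketch below is illustrative only: the rule names, symbols, and the function `forward_chain` are hypothetical, and the toy rules are not clinical guidance.

```python
def forward_chain(facts, rules):
    """Apply rules until no new fact can be derived.

    Returns the final set of facts and a trace of every rule firing,
    which is what makes the reasoning inspectable afterwards.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            # A rule fires when all its premises are known and it adds something new.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                trace.append((name, tuple(premises), conclusion))
                changed = True
    return known, trace


# Hypothetical production rules: (name, premises, conclusion).
RULES = [
    ("R1", ["fever", "cough"], "respiratory_infection_suspected"),
    ("R2", ["respiratory_infection_suspected", "low_oxygen"], "refer_for_chest_imaging"),
]

facts, trace = forward_chain({"fever", "cough", "low_oxygen"}, RULES)
for name, premises, conclusion in trace:
    print(f"{name}: {' AND '.join(premises)} -> {conclusion}")
```

Knowledge is held as discrete symbols, conclusions are derived by deduction, and the trace lists exactly which rule produced each derived fact.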

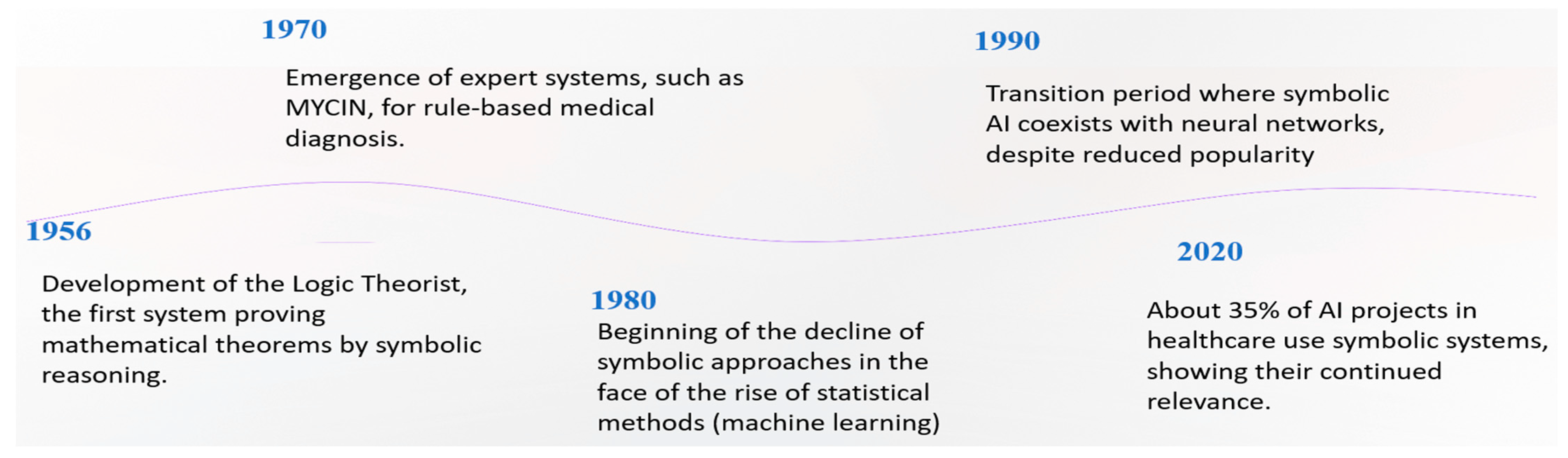

3.2. Historical Development and Evolution of Symbolic Approaches

Symbolic approaches played a central role in the early days of AI, before being partially eclipsed by statistical and connectionist methods. Their evolution can be divided into several phases as shown in Figure 1:

Figure 1. Historical evolution of symbolic approaches.

3.3. Key Concepts of Symbolic Approaches

Symbolic approaches remain fundamental for developing explainable AI, built upon decades of research and conceptual pillars that continue to shape intelligent systems.

3.3.1. Logical Foundations

- Predicate logic models complex relationships; propositional logic handles simple statements.

- Fuzzy logic captures gradations; probabilistic logic integrates uncertainty.

- Together, these formalisms enable transparent, rigorous reasoning [20].
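The difference between these logics can be shown on a single toy statement. The sketch below is a minimal illustration: the membership function, its thresholds, and the probabilities are assumptions chosen for readability, the fuzzy operators are the common min/max definitions, and the probabilistic conjunction assumes independent events.

```python
def is_high_fever_crisp(temp_c):
    """Propositional view: the statement is simply true or false."""
    return temp_c >= 38.5


def high_fever_degree(temp_c):
    """Fuzzy view: degree (0..1) to which the temperature is a 'high fever'.

    Hypothetical linear ramp between 37.5 and 39.5 degrees Celsius.
    """
    return min(1.0, max(0.0, (temp_c - 37.5) / 2.0))


def fuzzy_and(a, b):
    """Common fuzzy conjunction: the minimum of the two degrees."""
    return min(a, b)


def fuzzy_or(a, b):
    """Common fuzzy disjunction: the maximum of the two degrees."""
    return max(a, b)


def prob_and_independent(p_a, p_b):
    """Probabilistic conjunction, valid only if the two events are independent."""
    return p_a * p_b


print(is_high_fever_crisp(38.0))        # crisp truth value
print(high_fever_degree(38.5))          # graded truth value
print(fuzzy_and(0.5, 0.8))              # fuzzy combination
print(prob_and_independent(0.5, 0.8))   # probabilistic combination
```

The fuzzy value describes how well a vague concept applies, whereas the probability describes how likely a crisp statement is to be true, so the two are combined with different operators.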

3.3.2. Ontological Structures

- Formal knowledge organization (e.g., SNOMED CT in medicine).

- Ensure unambiguous interpretation and knowledge reuse [21].
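A core service of an ontology is subsumption: deciding whether one concept is a specialization of another. The sketch below uses a tiny hand-written is-a hierarchy; the concept names are hypothetical and are not actual SNOMED CT identifiers.

```python
# Hypothetical is-a hierarchy: child concept -> list of parent concepts.
IS_A = {
    "viral_pneumonia": ["pneumonia"],
    "bacterial_pneumonia": ["pneumonia"],
    "pneumonia": ["lung_disease"],
    "lung_disease": ["disease"],
}


def ancestors(concept, is_a=IS_A):
    """Return every concept reachable by following is-a links upwards."""
    seen = set()
    stack = list(is_a.get(concept, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(is_a.get(parent, []))
    return seen


def is_subsumed_by(concept, general, is_a=IS_A):
    """True if `concept` is the same as, or a specialization of, `general`."""
    return concept == general or general in ancestors(concept, is_a)


print(sorted(ancestors("viral_pneumonia")))
print(is_subsumed_by("viral_pneumonia", "lung_disease"))
print(is_subsumed_by("pneumonia", "viral_pneumonia"))
```

Because the hierarchy is explicit, a rule written for `lung_disease` can be reused for every more specific concept without being restated.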

3.3.3. Expert Systems

- Combine knowledge bases with inference engines [22].

- Provide traceable solutions in specialized domains.
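The separation between a knowledge base and an inference engine, and the traceability it provides, can be sketched with a small backward-chaining prover that records why a goal holds. The knowledge base, the symbols, and the function `prove` are hypothetical; the sketch assumes the rules contain no cycles and is not clinical guidance.

```python
# Knowledge base: goal -> list of alternative premise lists (hypothetical rules).
KNOWLEDGE_BASE = {
    "prescribe_antibiotic": [["bacterial_infection", "no_allergy"]],
    "bacterial_infection": [["positive_culture"]],
}


def prove(goal, facts, rules, depth=0, explanation=None):
    """Inference engine: try to establish `goal` from facts and rules.

    Returns (proved, explanation_lines). Assumes an acyclic rule set.
    """
    if explanation is None:
        explanation = []
    indent = "  " * depth
    if goal in facts:
        explanation.append(f"{indent}{goal}: given as a fact")
        return True, explanation
    for premises in rules.get(goal, []):
        start = len(explanation)
        explanation.append(f"{indent}{goal}: derived from rule IF {' AND '.join(premises)}")
        if all(prove(p, facts, rules, depth + 1, explanation)[0] for p in premises):
            return True, explanation
        del explanation[start:]  # discard lines written by the failed attempt
    return False, explanation


ok, why = prove("prescribe_antibiotic", {"positive_culture", "no_allergy"}, KNOWLEDGE_BASE)
print(ok)
print("\n".join(why))
```

The engine itself contains no domain knowledge; changing the domain only requires changing the knowledge base, and the explanation lines are produced as a by-product of the proof.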

3.3.4. Knowledge Representation

- Production rules, conceptual graphs, and frames [23].

- Translate human knowledge into computable, structured formats.

Though sometimes considered old-fashioned, symbolic approaches remain essential for explainable AI, particularly in high-stakes applications where decisions must be justified. Their combination with machine learning in neuro-symbolic systems draws on the strengths of both to produce more robust and explainable AI solutions.

Table 2 summarizes the differences and complementarities between symbolic and connectionist approaches, as well as the prospects for hybrid approaches.

Table 2. Comparative table: Symbolic vs. Connectionist vs. Hybrid.

4. Current Applications of Symbolic Approaches

Thanks to their transparency and ability to provide clear explanations, symbolic approaches are used in a variety of fields where explainability and logical rigor are essential (Table 3). Their adoption aligns with growing regulatory demands for trustworthy AI systems [26].

Table 3. Summary table of applications.

Symbolic approaches are widely used in fields where explainability, transparency, and compliance are essential. Whether in healthcare, finance, industry, or law, these systems offer robust and interpretable solutions for complex problems. Their ability to provide clear explanations and adhere to strict rules makes them an indispensable tool for building trustworthy AI.

5. Towards Hybrid Explainable AI: Trends and Future Directions

Hybrid systems that combine symbolic and connectionist methods offer a promising route to AI that is both powerful and explainable. This section explores current hybridization trends, the role of ontologies and knowledge graphs, and future prospects in healthcare, cybersecurity, and autonomous vehicles.

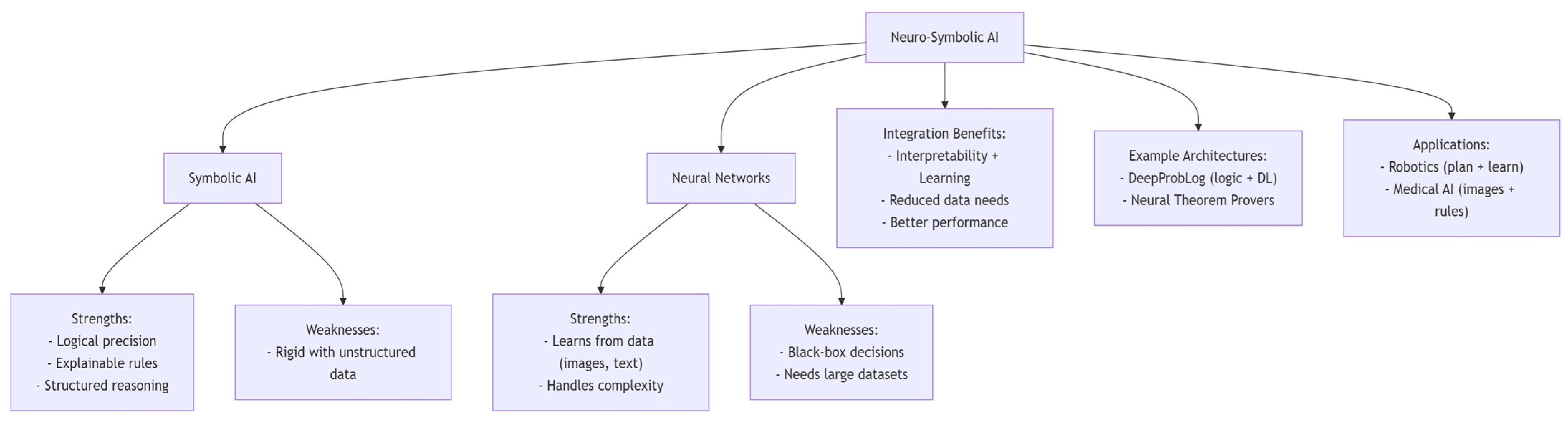

5.1. Hybridization of Symbolic and Connectionist Approaches

Neuro-symbolic AI combines symbolic systems’ logical reasoning with neural networks’ learning capabilities [29], creating models that maintain interpretability while processing complex data [8]. This synergy leverages symbolic modules for structured knowledge representation and connectionist components for handling unstructured data such as images and language (Figure 2).

Figure 2. How Neuro-Symbolic AI Combines Neural Networks and Symbolic Reasoning.

Key architectures demonstrate this integration:

- DeepProbLog merges probabilistic logic with deep learning [26]

- Neural theorem provers apply deep learning to formal reasoning [20]

- IBM’s frameworks combine ontologies with neural models [30]

Applications span multiple domains:

- Robotics (symbolic planning with adaptive learning)

- Healthcare (combining neural image analysis with diagnostic rules)

This hybrid approach enables more robust, transparent AI systems that require less training data while maintaining explainability—crucial for real-world applications [2].
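The idea of placing probabilistic logic on top of neural outputs is commonly illustrated with the addition of two handwritten digits: a network outputs a distribution over digit classes for each image, and a logical rule defines the sum. The sketch below reproduces only that inference step in plain Python. It does not use the DeepProbLog library, the two distributions are hand-written stand-ins for neural network outputs, and the digits are assumed to be independent.

```python
def sum_distribution(p_first, p_second):
    """Compute P(sum = s) from two independent digit distributions.

    Also returns, for each sum, the digit pairs ("worlds") that support it,
    which serves as a simple explanation of the result.
    """
    dist, worlds = {}, {}
    for a, pa in p_first.items():
        for b, pb in p_second.items():
            s = a + b
            # The probability of a sum is the total over all pairs that yield it.
            dist[s] = dist.get(s, 0.0) + pa * pb
            worlds.setdefault(s, []).append((a, b, pa * pb))
    return dist, worlds


# Stand-ins for classifier outputs on two images (hypothetical values).
p_img1 = {3: 0.9, 4: 0.1}
p_img2 = {5: 0.8, 4: 0.2}

dist, worlds = sum_distribution(p_img1, p_img2)
best = max(dist, key=dist.get)
print(f"most likely sum: {best} (p={dist[best]:.2f})")
for a, b, p in worlds[best]:
    print(f"  supported by {a} + {b} with probability {p:.2f}")
```

The neural side contributes uncertain perceptions and the symbolic side contributes the rule for addition; the answer comes with the list of digit pairs that justify it.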

5.2. Using Ontologies and Knowledge Graphs

Ontologies (e.g., SNOMED CT in medicine) and knowledge graphs supply machine learning with structured domain knowledge by formalizing rules, relationships, and concepts [31]. These structures also support data interoperability between heterogeneous sources such as sensors, databases, and text files.

Knowledge graphs such as Google’s [32] link entities (people, places, events) to make search more effective. In medicine, knowledge graphs can deliver useful information by relating genes, treatments, and diseases.
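A knowledge graph of this kind can be represented as a set of (subject, predicate, object) triples and queried by pattern matching. The sketch below is a toy illustration; the entity names, predicates, and helper functions are hypothetical placeholders rather than real biomedical identifiers.

```python
# Hypothetical triples: (subject, predicate, object).
TRIPLES = [
    ("GENE_A", "associated_with", "DISEASE_X"),
    ("GENE_B", "associated_with", "DISEASE_Y"),
    ("DRUG_1", "treats", "DISEASE_X"),
    ("DRUG_2", "treats", "DISEASE_Y"),
]


def match(triples, subject=None, predicate=None, obj=None):
    """Return triples matching the given pattern; None acts as a wildcard."""
    return [
        t for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    ]


def treatments_for_gene(gene, triples=TRIPLES):
    """Follow gene -> disease -> treatment links (a two-hop query)."""
    diseases = [o for _, _, o in match(triples, subject=gene, predicate="associated_with")]
    return sorted(
        {s for d in diseases for s, _, _ in match(triples, predicate="treats", obj=d)}
    )


print(treatments_for_gene("GENE_A"))
```

Each answer can be justified by the chain of triples that produced it, which is the source of the explainability attributed to knowledge graphs.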

For integration with deep learning, symbolic embeddings [33] map symbolic concepts to representations that neural networks can process. Benchmarks such as RAVEN [34], a dataset for relational and analogical visual reasoning, are used to evaluate how well models combine structured reasoning with learned representations, with the aim of marrying symbolic AI’s precision with machine learning’s flexibility.
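One widely used way to map symbolic triples to vectors is a translation-based embedding, in which a relation is modeled as a vector offset between head and tail entities (the TransE scoring rule). The source does not name a specific embedding method, so this technique is swapped in purely for illustration. The vectors below are set by hand rather than learned, and all entity and relation names are hypothetical.

```python
import math

# Hand-set 2-D vectors standing in for learned embeddings (hypothetical).
ENTITY = {
    "DRUG_1": (1.0, 0.0),
    "DISEASE_X": (1.0, 1.0),
    "DISEASE_Y": (3.0, 1.0),
}
RELATION = {"treats": (0.0, 1.0)}


def translation_distance(head, relation, tail):
    """Distance ||h + r - t||: small values mean the triple is plausible."""
    h, r, t = ENTITY[head], RELATION[relation], ENTITY[tail]
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def rank_tails(head, relation, candidates):
    """Order candidate tail entities from most to least plausible."""
    return sorted(candidates, key=lambda c: translation_distance(head, relation, c))


for tail in ("DISEASE_X", "DISEASE_Y"):
    print(tail, translation_distance("DRUG_1", "treats", tail))
print(rank_tails("DRUG_1", "treats", ["DISEASE_Y", "DISEASE_X"]))
```

In a trained model the vectors would be optimized so that known triples receive small distances; the scoring rule itself stays the same.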

5.3. Future Prospects in Critical Areas

Hybrid artificial intelligence opens up new perspectives in several key fields by combining symbolic and connectionist approaches. In healthcare, it can support more personalized and ethical medicine. Hybrid systems can analyze medical records while applying explicit ethical rules, which helps to limit algorithmic bias. For example, such a system could recommend personalized treatments by cross-referencing genomic data with current medical protocols while complying with regulations such as the General Data Protection Regulation (GDPR; RGPD in French) [35].

Cybersecurity also benefits from this hybrid approach. The combined analysis of system logs makes it possible, on the one hand, to identify suspicious patterns thanks to clear symbolic rules and, on the other, to detect complex anomalies via neural networks. A system of this type can thus spot a cyberattack in real time [36] by correlating behavioral rules with dynamic analysis of network traffic, while continually adapting to new threats thanks to machine learning mechanisms.
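The combination described above can be sketched as two detectors whose alerts are merged: an explicit rule over log events and a learned anomaly score over traffic. In the sketch below a z-score against a baseline stands in for the neural component, and the thresholds, field names, and IP addresses are hypothetical.

```python
from statistics import mean, stdev


def rule_alerts(events, max_failed_logins=5):
    """Symbolic part: an explicit, human-readable rule over log events."""
    failures = {}
    for event in events:
        if event["type"] == "login_failed":
            failures[event["ip"]] = failures.get(event["ip"], 0) + 1
    return [
        f"RULE brute_force: {count} failed logins from {ip} (threshold {max_failed_logins})"
        for ip, count in sorted(failures.items())
        if count >= max_failed_logins
    ]


def anomaly_alerts(traffic_bytes, baseline, z_threshold=3.0):
    """Stand-in for a learned detector: z-score against a baseline sample."""
    mu, sigma = mean(baseline), stdev(baseline)
    alerts = []
    for ip, value in sorted(traffic_bytes.items()):
        z = (value - mu) / sigma
        if z > z_threshold:
            alerts.append(f"ANOMALY traffic: {ip} sent {value} bytes (z={z:.1f})")
    return alerts


# Hypothetical log events and traffic volumes.
events = [{"type": "login_failed", "ip": "10.0.0.5"}] * 6 + [
    {"type": "login_failed", "ip": "10.0.0.9"}
]
baseline = [100, 110, 90, 105, 95]
traffic = {"10.0.0.5": 500, "10.0.0.7": 104}

alerts = rule_alerts(events) + anomaly_alerts(traffic, baseline)
for alert in alerts:
    print(alert)
```

Each alert names the rule or score that triggered it, so an analyst can see whether a warning came from an explicit policy or from the statistical component.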

Autonomous vehicles are a perfect illustration of the importance of hybrid systems in reconciling performance and transparency. Critical decisions, such as obstacle avoidance, are based on explicit symbolic rules while exploiting the perceptual capabilities of convolutional neural networks processing sensor data. This architecture enables the vehicle to clearly explain its actions—for example, justifying emergency braking with reference to a precise safety rule and the detection of an object by the vision system. The main technical challenge lies in optimizing response times to guarantee real-time reactions while maintaining this decision-making transparency [37].
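The architecture described above can be sketched as a perception output checked against an explicit safety rule that returns both a decision and its justification. The sketch makes several assumptions: detections are hard-coded in place of a vision network, the rule name `SAFE-1`, the confidence threshold, the reaction time, and the deceleration value are hypothetical, and stopping distance uses the standard constant-deceleration formula.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # would come from a vision network; hard-coded here
    distance_m: float


def stopping_distance_m(speed_mps, reaction_s=0.5, decel_mps2=6.0):
    """Reaction distance plus braking distance under constant deceleration."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def decide(detections, speed_mps, min_confidence=0.6):
    """Symbolic safety rule: brake if a confident detection is too close."""
    needed = stopping_distance_m(speed_mps)
    for d in detections:
        if d.confidence >= min_confidence and d.distance_m <= needed:
            reason = (
                f"Rule SAFE-1: obstacle '{d.label}' (confidence {d.confidence:.2f}) "
                f"at {d.distance_m:.1f} m is within stopping distance {needed:.1f} m"
            )
            return "EMERGENCY_BRAKE", reason
    return "CONTINUE", "No confident detection within stopping distance"


action, reason = decide([Detection("pedestrian", 0.92, 20.0)], speed_mps=15.0)
print(action)
print(reason)
```

The returned reason cites both the perception result and the rule that was applied, which is the kind of justification the paragraph above describes.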

6. Conclusions

Symbolic methods remain central to explainability in AI because of their transparency, particularly in critical domains such as healthcare, finance, and autonomous systems. However, they face obstacles (rigidity, knowledge engineering costs, and limited scalability), and they may not always capture the complexity of human thought processes. Combining them with neural networks in neuro-symbolic systems offers a way to unite the traceable reasoning of symbolic methods with the flexibility of connectionist learning. Promising research directions include automated rule extraction, the handling of uncertainty, and benchmarks for standardizing the evaluation of explainability.

In industry, hybrid systems could transform medical diagnostics (e.g., by augmenting symbolic reasoning with deep learning predictions) and cybersecurity (e.g., by detecting attacks through multimodal learning while keeping an explainable record of events). Organizations must, however, factor in the required investments in training, personnel, systems, and resources.

The complementarity between symbolic and connectionist techniques will be needed to build responsible AI that balances performance and explanation. Future work could focus on improving scalability, automating knowledge acquisition, and ensuring seamless integration. Combining the two paradigms is not only an opportunity for technical advancement but also essential for delivering trustworthy AI in real-world applications.

Author Contributions

Conceptualization, L.M., W.A., S.A.; validation, L.M., W.A.; methodology, L.M.; writing—original draft preparation, L.M.; writing—review and editing, L.M., W.A.; supervision, S.Z., W.A., B.E.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ProbLog | A probabilistic logic programming language |
| DeepProbLog | Deep learning combined with probabilistic logic reasoning |
| ML | Machine Learning |
| AI | Artificial Intelligence |
| XAI | Explainable Artificial Intelligence |
| MYCIN | An early expert system for medical diagnosis |
| SNOMED CT | Systematized Nomenclature of Medicine Clinical Terms |
| NLP | Natural Language Processing |
| TLN | Temporal Logic Network (a type of neural-symbolic AI model) |
| GPT | Generative Pre-trained Transformer |
| GDPR (RGPD) | General Data Protection Regulation (Règlement Général sur la Protection des Données) |
| RAVEN | Relational and Analogical Visual Reasoning |

References

1. Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608.

2. Samek, W.; Wiegand, T.; Müller, K.-R. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. ITU J. ICT Discov. 2017, 1, 39–48.

3. Marcus, G. Deep learning: A critical appraisal. arXiv 2018, arXiv:1801.00631.

4. Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. 2021, 54, 115.

5. Wachter, S.; Mittelstadt, B.; Russell, C. Counterfactual explanations without opening the black box: Automated decisions and the GDPR. Harv. J. Law Technol. 2017, 31, 841–887.

6. Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

7. Shortliffe, E.H.; Buchanan, B.G. A model of inexact reasoning in medicine. Math. Biosci. 1975, 23, 351–379.

8. Besold, T.R.; d’Avila Garcez, A.; Bader, S.; Bowman, H.; Domingos, P.; Hitzler, P.; Kühnberger, K.-U.; Lamb, L.C.; Lima, P.M.V.; de Penning, L.; et al. Neural-Symbolic Learning and Reasoning: A Survey and Interpretation. In Neuro-Symbolic Artificial Intelligence: The State of the Art; IOS Press: Amsterdam, The Netherlands, 2021; pp. 1–51.

9. Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and explainability of artificial intelligence in medicine. WIREs Data Min. Knowl. Discov. 2019, 9, e1312.

10. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; EBSE Technical Report EBSE-2007-01; Software Engineering Group, School of Computer Science and Mathematics, Keele University: Keele, UK, 2007; Volume 2.

11. Baader, F.; McGuinness, D.L.; Nardi, D.; Patel-Schneider, P.F. The Description Logic Handbook: Theory, Implementation, and Applications; Cambridge University Press: Cambridge, UK, 2017.

12. Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI—Explainable artificial intelligence. Sci. Robot. 2019, 4, eaay7120.

13. Endsley, M.R. From here to autonomy: Lessons learned from human-automation research. Hum. Factors 2017, 59, 5–27.

14. Wing, J.M. Trustworthy AI. Commun. ACM 2021, 64, 64–71.

15. Braun, V.; Clarke, V. Thematic Analysis: A Practical Guide; SAGE Publications: London, UK, 2021.

16. McCarthy, J. Programs with common sense. In Proceedings of the Teddington Conference on the Mechanization of Thought Processes; Her Majesty’s Stationery Office: Edinburgh, UK, 1959.

17. Newell, A.; Simon, H.A. Computer science as empirical inquiry: Symbols and search. Commun. ACM 1976, 19, 113–126.

18. Brachman, R.; Levesque, H. Knowledge Representation and Reasoning; Morgan Kaufmann: San Mateo, CA, USA, 2004.

19. Smith, B.C. Reflection and Semantics in a Procedural Language; MIT Press: Cambridge, MA, USA, 1982.

20. De Raedt, L.; Dumančić, S.; Manhaeve, R.; Marra, G. From statistical relational to neuro-symbolic AI. AIJ 2022, 307, 4943–4950.

21. Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 4th ed.; Pearson: London, UK, 2020.

22. Smith, B.; Ashburner, M.; Rosse, C.; Bard, J.; Bug, W.; Ceusters, W.; Goldberg, L.J.; Eilbeck, K.; Ireland, A.; Mungall, C.J.; et al. The OBO Foundry. Appl. Ontol. 2007, 25, 1251–1255.

23. Hayes-Roth, F. Rule-based systems. Commun. ACM 1985, 28, 921–932.

24. Sowa, J. Knowledge Representation; Brooks/Cole: Pacific Grove, CA, USA, 2000.

25. Gunning, D. Explainable AI (XAI); DARPA Technical Report; DARPA: Arlington, VA, USA, 2017.

26. Manhaeve, R.; Dumančić, S.; Kimmig, A.; Demeester, T.; De Raedt, L. Neural probabilistic logic programming in DeepProbLog. Artif. Intell. 2021, 298, 103504.

27. Wachter, S.; Mittelstadt, B.; Floridi, L. Why a right to explanation of automated decision-making does not exist in the General Data Protection Regulation. Int. Data Priv. Law 2017, 7, 76–99.

28. Holzinger, A. Interactive machine learning for health informatics. Brain Inform. 2016, 3, 119–131.

29. d’Avila Garcez, A.; Lamb, L.C. Neurosymbolic AI: The 3rd wave. arXiv 2020, arXiv:2012.05876.

30. Riegel, R.; Gray, A.; Luus, F.; Khan, N.; Makondo, N.; Akhalwaya, I.Y.; Qian, H.; Fagin, R.; Barahona, F.; Sharma, U.; et al. Logical Neural Networks. arXiv 2020.

31. Hogan, A.; Blomqvist, E.; Cochez, M.; D’amato, C.; De Melo, G.; Gutierrez, C.; Kirrane, S.; Gayo, J.E.L.; Navigli, R.; Neumaier, S.; et al. Knowledge Graphs. ACM Comput. Surv. 2021, 54, 71.

32. Singhal, A. Introducing the Knowledge Graph: Things, Not Strings. Available online: https://blog.google/products/search/introducing-knowledge-graph-things-not/ (accessed on 18 September 2024).

33. Ali, H.; Fatima, T. Integrating Neural Networks and Symbolic Reasoning: A Neurosymbolic AI Approach for Decision-Making Systems. ResearchGate 2025.

34. Zhang, C.; Gao, F.; Jia, B.; Zhu, Y.; Zhu, S.C. Raven: A dataset for relational and analogical visual reasoning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5312–5322.

35. Riou, C.; El Azzouzi, M.; Hespel, A.; Guillou, E.; Coatrieux, G.; Cuggia, M. Ensuring general data protection regulation compliance and security in a clinical data warehouse from a university hospital: Implementation study. JMIR Med. Inform. 2025, 13.

36. Ghadermazi, J.; Hore, S.; Shah, A.; Bastian, N.D. GTAE-IDS: Graph Transformer-Based Autoencoder Framework for Real-Time Network Intrusion Detection. IEEE Trans. Inf. Forensics Secur. 2025, 20, 4026–4041.

37. AlNusif, M. Explainable AI in Edge Devices: A Lightweight Framework for Real-Time Decision Transparency. Int. J. Eng. Comput. Sci. 2025, 14, 27447–27472.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).