Abstract

Plant diseases affect agriculture, food security, and economic stability, which makes their identification and diagnosis essential. This article illustrates the research trends in plant disease detection using transformers through a visualization-based bibliometric analysis. The publications used in this work were collected from the Scopus and Web of Science databases. Biblioshiny and VOSviewer 1.6.20 were used for visualization. The results show that China is the most productive country, with 11 publications and 126 citations. China Agricultural University was the most productive institute, with six publications, while Frontiers in Plant Science was the most productive journal, with six publications and 102 citations. The results also show that the most frequent keywords in this field are “deep learning”, “plant disease”, and “vision transformer”. This study provides insights into the application of transformers for plant disease detection, enabling researchers to better understand and explore this field.

1. Introduction

Early detection and accurate diagnosis of crop disease play a vital role in protecting crop growth and increasing production. Crop disease detection and management help to improve crop yield and quality, as well as to optimize the use of resources [1]. Pest and plant disease detection is an important application of deep learning (DL), and DL techniques have increasingly replaced traditional methods of plant disease identification [2]. Recently, deep learning techniques have demonstrated outstanding results in various domains, significantly enhancing the accuracy of object detection. Currently, deep learning is used to identify bacterial, viral, and fungal leaf diseases in crops such as potatoes, citrus, wheat, and maize [3]. Manual identification of plant diseases is tedious and error-prone over large cultivation areas, and accurate manual detection requires considerable time, labor, and expertise. Early identification of plant diseases can reduce crop loss and pathogen transmission.

More recently, the use of transformers for plant disease detection has started to increase [4,5,6]. Several studies have employed transformers for the classification of grape diseases [7,8,9]. Aboelenin et al. [10] introduced a hybrid framework that combines convolutional neural networks (CNNs) and vision transformers (ViTs) for the classification of plant leaf diseases. The authors used pre-trained models to extract leaf features, while the ViT was employed to extract the deep features of the leaves. The apple and corn subsets of the PlantVillage dataset were used. The proposed method successfully detected and classified plant leaf diseases, outperforming several state-of-the-art models. Karthik et al. [11] presented a novel method for crop disease categorization using a dual-track architecture that integrates the Swin Transformer to extract global features and a Dual-Attention Multi-Scale Fusion Network (DAMFN) that captures local features at multiple scales using specialized convolutional blocks. The CCMT dataset, which contains 102,976 images of various crop diseases, was used. The proposed model achieved an accuracy of 95.68%. Maqsood et al. [12] proposed a customized architecture that combines vision transformers and graph neural networks to detect wheat diseases, reaching an accuracy of 99.20%. Chakrabarty et al. [13] developed an advanced transformer-based model (BEiT) for the detection of rice leaf diseases. Two public datasets were used, and the model attained an accuracy of 98.1%. Chen et al. [14] presented a hybrid architecture that integrates CNNs and transformers for the detection of tomato leaf diseases. The model was evaluated on three datasets. The authors used CyTrGAN, a Cycle-Consistent GAN-based model, to address data scarcity and class imbalance. The findings showed that the proposed model achieved the best accuracy with fewer parameters and lower computational cost than ResNet, MobileNet, Swin Transformer, and Vision Transformer.

Hamdi and Hidayaturrahman [15] proposed the use of pre-trained ViT models for plant disease categorization. The PlantDoc and PlantVillage datasets were merged to create a final dataset comprising 38 classes and 56,857 images, addressing class imbalance issues. The results show that ensembles of ViT models outperform individual models in plant disease classification, achieving an accuracy of 98.16%. Remya et al. [16] proposed combining ViTs with acoustic sensors to detect whitefly infestations in cotton crops. The authors used a custom dataset comprising 32,000 images and acoustic recordings collected from agricultural fields, and the AgriPK dataset was used for experimental validation. The model attained an accuracy of 99% for cotton pest detection. Liu et al. [17] presented the NanoSegmenter model for high-precision tomato disease detection. It achieved 98% precision, 97% recall, and 95% mean Intersection over Union (mIoU), outperforming existing models such as DeepLabv3+, UNet++, and UNet. Ait Nasser and Akhloufi [18] presented a novel hybrid architecture to detect and classify plant foliar diseases. The authors used two datasets, Plant Pathology FGVC versions 7 and 8, and obtained accuracies of 98.28% and 95.96%, respectively. Wang et al. [19] presented a novel continual learning method for crop disease identification using vision transformer total variation (ViT-TV), which achieved an accuracy of 95.38%, surpassing CNN architectures. Megalingam et al. [20] developed a classification system using vision transformers for accurate detection of diseases in cowpea plants, achieving an accuracy of 96%.

This paper presents a bibliometric analysis of transformer-based plant disease detection. The aim of this work is to explore research trends in this field. It provides insights for future researchers by identifying the most relevant keywords and the most influential journals and publications. Additionally, it analyzes journal productivity and citation impact. The rest of the paper is structured as follows: Section 2 outlines the methodology, including the publication selection criteria and the analytical tools employed. Section 3 presents the results and discussion, providing statistical insights into the distribution of research by institution, country, journal, and keywords. Finally, Section 4 concludes the paper and suggests future research directions.

2. Methodology

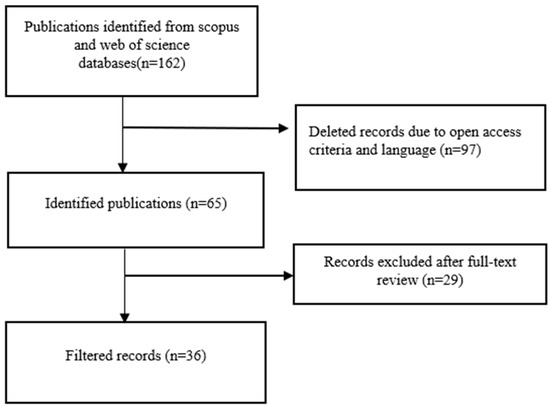

The Scopus and Web of Science databases were searched for this study in January 2025. Articles with the keywords “transformers”, “plant diseases”, and “detection” in the title, abstract, or author keywords were retrieved. Articles in the English language and in all open-access categories were included in the review. The timeframe was from 2015 to January 2025, and the document types included “Article”, “Book Chapter”, “Conference Paper”, and “Review”. The publication selection method is illustrated in Figure 1. The results were exported in both the “BibTeX” and “plain text” formats. The following information was collected for each article: title, abstract, source title, author, keywords, country, and institution. To conduct the bibliometric analysis, we used two analysis tools: Biblioshiny and VOSviewer. Biblioshiny 4.3.2 is an open-source, R-based tool (R package 2024.12.0) used for bibliometric analysis. It offers a user-friendly interface for analyzing scientific articles, visualizing research trends, and exploring collaboration networks. Its main features include importing data from sources such as Scopus and Web of Science; descriptive analysis of publications and citations; network analysis of co-citations, co-authorship, and keyword co-occurrence; collaboration analysis; and interactive plots, graphs, and charts for analyzing bibliometric data [21]. VOSviewer is a software program used for building and displaying bibliometric networks. These networks can include researchers, journals, or individual articles. It also offers a text-mining feature that can be used to create and display co-occurrence networks of significant phrases used in the scientific literature [22].

Figure 1. Publication selection method.
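The selection criteria described in this section can be expressed as a small screening filter. The sketch below is illustrative only: the `Record` fields, the sample records, the year-level approximation of the timeframe, and the DOI-based removal of duplicates between the two databases are assumptions, not details reported by the study.

```python
from dataclasses import dataclass

# Document types and search terms taken from the methodology text.
ALLOWED_TYPES = {"Article", "Book Chapter", "Conference Paper", "Review"}
KEYWORDS = ("transformer", "plant disease", "detection")


@dataclass(frozen=True)
class Record:
    """Hypothetical minimal view of one exported bibliographic record."""
    title: str
    abstract: str
    year: int
    language: str
    doc_type: str
    doi: str
    source_db: str


def matches_criteria(rec: Record) -> bool:
    """Apply the language, timeframe, document-type, and keyword criteria."""
    text = f"{rec.title} {rec.abstract}".lower()
    return (
        2015 <= rec.year <= 2025          # year-level approximation of the window
        and rec.language == "English"
        and rec.doc_type in ALLOWED_TYPES
        and all(keyword in text for keyword in KEYWORDS)
    )


def screen(records):
    """Keep matching records, dropping repeated DOIs (assumed de-duplication rule)."""
    seen, kept = set(), []
    for rec in records:
        key = rec.doi.lower()
        if matches_criteria(rec) and key not in seen:
            seen.add(key)
            kept.append(rec)
    return kept


# Made-up records for illustration; the DOIs are placeholders.
records = [
    Record("Vision transformer for plant disease detection", "", 2023,
           "English", "Article", "10.0000/example.1", "Scopus"),
    Record("Vision transformer for plant disease detection", "", 2023,
           "English", "Article", "10.0000/EXAMPLE.1", "Web of Science"),
    Record("CNN-based leaf classification", "", 2012,
           "English", "Article", "10.0000/example.2", "Scopus"),
    Record("Transformer-based plant disease detection", "", 2024,
           "Spanish", "Article", "10.0000/example.3", "Scopus"),
]
selected = screen(records)
print(len(selected), "record(s) retained")
```

Keeping the criteria in one predicate makes it easy to report how many records each rule excludes, which is the kind of count a selection flow diagram usually shows.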

3. Results and Discussion

This section discusses the results of the bibliometric analysis of transformers for plant disease detection. The Transformer is a neural network architecture originally developed for natural language processing (NLP), and the Vision Transformer (ViT) is its extension to computer vision. The Transformer’s main innovation is the use of self-attention mechanisms, which enable the model to weigh the importance of different parts of the input when making predictions. The Vision Transformer handles an image as a sequence of patches instead of treating it as a grid of pixels. Existing studies in this field have reported strong results, which may motivate researchers to use transformers for plant disease detection.
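To make the two ideas in this paragraph concrete, the following minimal sketch splits a tiny image into patch tokens and applies scaled dot-product self-attention to them. It is a simplification under stated assumptions: the 4 × 4 image is made up, the queries, keys, and values are the raw patch vectors (a real Transformer uses learned linear projections), and positional embeddings, multiple heads, and the classification token are omitted.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def image_to_patches(image, patch):
    """Split an H x W grid into flattened, non-overlapping patch x patch tokens.

    Assumes H and W are multiples of `patch`.
    """
    height, width = len(image), len(image[0])
    tokens = []
    for r in range(0, height, patch):
        for c in range(0, width, patch):
            tokens.append([image[r + dr][c + dc]
                           for dr in range(patch) for dc in range(patch)])
    return tokens


def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens (no learned weights)."""
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted average of all token vectors.
        outputs.append([sum(w * value[i] for w, value in zip(row, tokens))
                        for i in range(dim)])
    return outputs, weights


# Made-up 4 x 4 single-channel image with intensities in [0, 1).
image = [[(r * 4 + c) / 16 for c in range(4)] for r in range(4)]
tokens = image_to_patches(image, 2)       # four tokens of length 4
outputs, weights = self_attention(tokens)
```

Each row of `weights` sums to one, so every output token is a weighted average of all patches; this is how a patch in one corner of a leaf image can draw on information from any other patch.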

3.1. Annual Production

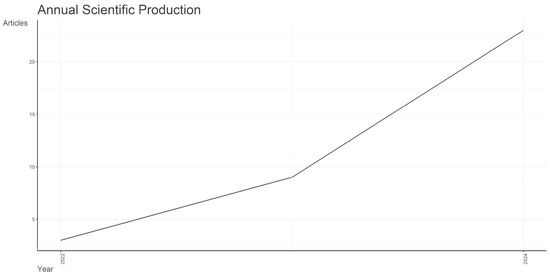

As shown in Figure 2, the number of publications on transformer-based plant disease detection started to increase significantly in 2022, with 3 publications. The number of publications rose to 9 in 2023 and reached a peak of 23 in 2024.

Figure 2. Annual scientific publications.
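The year-over-year growth implied by these counts can be checked with a few lines of arithmetic. The sketch below uses only the three annual totals stated in the text; the variable names are illustrative.

```python
# Annual publication counts as reported in the text for 2022-2024.
annual = {2022: 3, 2023: 9, 2024: 23}

years = sorted(annual)
# Ratio of each year's count to the previous year's count.
growth = {year: annual[year] / annual[prev] for prev, year in zip(years, years[1:])}

for year, factor in growth.items():
    print(f"{year}: {factor:.2f}x the previous year")
```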

3.2. Affiliations Analysis

The affiliations and the number of articles contributed by each institution are listed in Table 1, providing insight into their research productivity in this field. China Agricultural University contributed 6 articles, followed by Murdoch University with 4 articles. Sejong University, VIT, and VIT Chennai each contributed 3 articles, while five other institutions contributed 2 articles each.

Table 1. Most relevant affiliations.

3.3. Journals Analysis

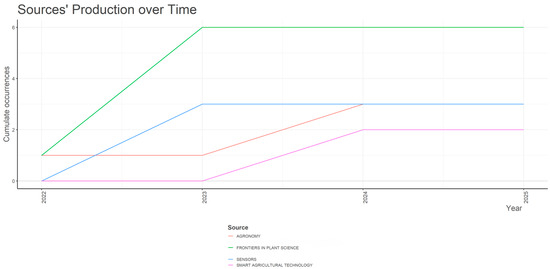

Table 2 lists the most productive journals, ranked by citation count. Frontiers in Plant Science has the most citations (102) across its six publications. Sensors ranks second with 52 citations, followed by Agronomy (43 citations), Agriculture (Switzerland) (21 citations), and Computers and Electronics in Agriculture (16 citations). Figure 3 illustrates the trend of source production over time in the top six journals. Three journals, Frontiers in Plant Science, Agronomy, and Agriculture (Switzerland), began publishing articles on DL-based plant disease detection in 2022. Based on the number of citations, Frontiers in Plant Science appears to have a greater reach for DL-based plant disease detection publications than Agronomy and Agriculture (Switzerland), even though the three journals have published almost the same number of articles in this field.

Table 2. Top 10 relevant sources.

Figure 3. The trend of the sources’ production over time in the top six journals.

3.4. Countries Production Analysis

Table 3 shows the most productive countries. China has the most articles published on DL-based plant disease detection, with 11 publications (30.5%) and 126 citations. India ranks second with 10 publications (27.7%) and 12 citations, followed by Australia with 6 publications (16.6%) and 15 citations. Saudi Arabia ranks fourth with 5 publications (13.8%) and 21 citations. Countries such as Australia, Saudi Arabia, South Africa, and Pakistan have fewer publications than India but more citations, which may reflect the collaboration or outreach of their publications. In summary, publication output on transformer-based plant disease detection has been dominated by China and India.

Table 3. Countries’ scientific production.
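The country shares and the citation impact per publication can be recomputed from the counts given in the text. The sketch below assumes that the shares are taken over the 36 publications analyzed in this study and uses only the four countries for which both counts are stated; the dictionary layout is illustrative.

```python
# Total number of publications analyzed in the study (stated in the Conclusions).
TOTAL_PUBLICATIONS = 36

# (publications, citations) per country, as reported in the text.
countries = {
    "China": (11, 126),
    "India": (10, 12),
    "Australia": (6, 15),
    "Saudi Arabia": (5, 21),
}

summary = {
    name: {
        "share_pct": 100 * pubs / TOTAL_PUBLICATIONS,   # share of all publications
        "cites_per_pub": cites / pubs,                  # average citations per publication
    }
    for name, (pubs, cites) in countries.items()
}

for name, row in summary.items():
    print(f"{name}: {row['share_pct']:.1f}% of publications, "
          f"{row['cites_per_pub']:.2f} citations per publication")
```

Citations per publication separates output from impact: a country with fewer publications can still have a higher average, which is the pattern the text describes for Australia and Saudi Arabia relative to India.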

3.5. Top Documents by Citations

The impact of articles on the literature is evaluated by document citation analysis. A citation analysis was conducted to identify the most influential publications and summarize their content. Table 4 presents the top ten most influential publications, ranked by total citations (TC) and total citations per year.

Table 4. Most-cited global documents.

Zhang et al. [23] conducted a study on automatic plant disease detection using Generative Adversarial Networks (GANs) and transformer architectures to enhance model performance. The authors used anchor-free object detection methods and data augmentation techniques to improve the accuracy of disease identification from leaf images. An agricultural robot was built to apply the model in the real world. The PlantDoc dataset, which contains 13 plant species and 27 categories, was used. The findings showed that the model achieved the best performance compared to traditional models, reaching a precision of 51.7% and a recall of 48.1% on the validation set. Jajja et al. [24] proposed a compact convolutional transformer (CCT)-based method to detect whitefly attacks on cotton crops. The authors created a new dataset named AgriPK, which was categorized into five classes: healthy, unhealthy, mild infection, severe infection, and nutritional deficiency. Another dataset, the cotton disease dataset, was used for comparison to evaluate performance and generalization. The findings showed that the proposed approach attained an accuracy of 97.2%, outperforming the other methods tested, including MobileNetv2, ResNet152v2, VGG-16, SVM, and YOLOv5.

3.6. Keyword Co-Occurrence Analysis

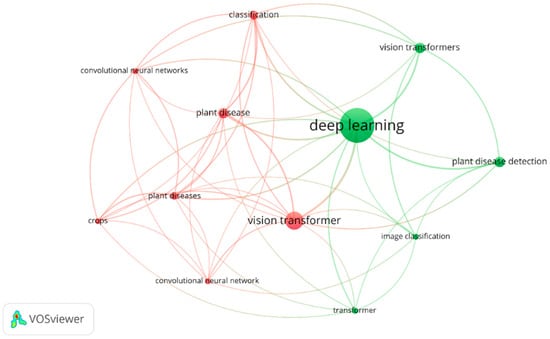

Keyword co-occurrence analysis helps identify key topics, trends, and relationships within a research field. VOSviewer was used to create a keyword co-occurrence network, as shown in Figure 4. The minimum number of keyword occurrences was set to 4. Of the 207 keywords, 12 met this threshold and were divided into 2 clusters (red and green).

Figure 4. A keyword co-occurrence network.
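The thresholding and link counting behind a keyword co-occurrence network can be sketched as follows. This is not VOSviewer's implementation: the keyword lists are made up, the threshold is lowered to 2 to suit the tiny sample (the study used 4), and VOSviewer's link-strength normalization and clustering are not reproduced.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(docs, min_occurrences):
    """Count keyword occurrences and pairwise co-occurrences above a threshold."""
    # A keyword is counted at most once per document.
    occurrences = Counter(kw for keywords in docs for kw in set(keywords))
    kept = {kw for kw, n in occurrences.items() if n >= min_occurrences}
    links = Counter()
    for keywords in docs:
        present = sorted(set(keywords) & kept)
        # Every unordered pair of kept keywords in one document adds one link.
        links.update(combinations(present, 2))
    return occurrences, kept, links


# Hypothetical author-keyword lists for four documents.
docs = [
    ["deep learning", "plant disease", "vision transformer"],
    ["deep learning", "vision transformer"],
    ["deep learning", "plant disease", "classification"],
    ["plant disease", "vision transformer", "deep learning"],
]

occurrences, kept, links = cooccurrence(docs, min_occurrences=2)
for (first, second), strength in links.most_common():
    print(f"{first} <-> {second}: {strength}")
```

Keywords below the threshold, such as "classification" in this sample, are dropped before links are counted, which is why only a small fraction of all keywords ends up in the network.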

4. Conclusions

This paper presents a bibliometric analysis of research trends in transformer-based plant disease detection. Existing studies highlight the effectiveness of transformers for plant disease detection, reporting high accuracy. This study analyzed 36 publications from Scopus and Web of Science published between 2021 and January 2025. We presented scientific maps that illustrate annual publication numbers, countries, journals, research institutes, and author productivity for DL transformer-based plant disease detection. The bibliometric analysis shows that the most productive countries are China, India, Australia, Saudi Arabia, and South Korea. The most frequent keywords include deep learning, classification, plant disease, vision transformer, and plant disease detection. Among research institutes, China Agricultural University published the most articles. Frontiers in Plant Science was the most relevant source, with 102 citations, the highest count among the journals. The analysis also covers the most cited documents. In future work, we plan to search additional databases for relevant recent papers.

Author Contributions

Writing—original draft, methodology and conceptualization, R.E.; writing—review and editing, W.A. and A.A.; review and validation, S.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available on request.

Conflicts of Interest

All the authors declare that they have no established conflicting financial interests or personal relationships that may have influenced the research presented in this paper.

References

1. Feng, J.; Ong, W.E.; Teh, W.C.; Zhang, R. Enhanced Crop Disease Detection With EfficientNet Convolutional Group-Wise Transformer. IEEE Access 2024, 12, 44147–44162.

2. Nyarko, B.N.E.; Wu, W.B.; Zhou, Z.J.; Mohd, M.M.J. Improved YOLOv5m model based on Swin Transformer, K-means++, and Efficient Intersection over Union (EIoU) loss function for cocoa tree (Theobroma cacao) disease detection. J. Plant Prot. Res. 2024, 64, 265–274.

3. Chen, J.; Wang, S.; Guo, J.; Chen, F.; Li, Y.; Qiu, H. Improved FasterViT model for citrus disease diagnosis. Heliyon 2024, 10, e36092.

4. Christakakis, P.; Giakoumoglou, N.; Kapetas, D.; Tzovaras, D.; Pechlivani, E.-M. Vision Transformers in Optimization of AI-Based Early Detection of Botrytis cinerea. AI 2024, 5, 1301–1323.

5. De Silva, M.; Brown, D. Multispectral Plant Disease Detection with Vision Transformer–Convolutional Neural Network Hybrid Approaches. Sensors 2023, 23, 8531.

6. Yong, W.-C.; Ng, K.-W.; Haw, S.-C.; Naveen, P.; Ng, S.-B. Leaf Condition Analysis Using Convolutional Neural Network and Vision Transformer. Int. J. Comput. Digit. Syst. 2024, 16, 1685–1695.

7. Karthik, R.; Vardhan, G.V.; Khaitan, S.; Harisankar, R.N.R.; Menaka, R.; Lingaswamy, S.; Won, D. A dual-track feature fusion model utilizing Group Shuffle Residual DeformNet and swin transformer for the classification of grape leaf diseases. Sci. Rep. 2024, 14, 14510.

8. Hernández, I.; Gutiérrez, S.; Barrio, I.; Íñiguez, R.; Tardaguila, J. In-field disease symptom detection and localisation using explainable deep learning: Use case for downy mildew in grapevine. Comput. Electron. Agric. 2024, 226, 109478.

9. Kunduracioglu, I.; Pacal, I. Advancements in deep learning for accurate classification of grape leaves and diagnosis of grape diseases. J. Plant Dis. Prot. 2024, 131, 1061–1080.

10. Aboelenin, S.; Elbasheer, F.A.; Eltoukhy, M.M.; El-Hady, W.M.; Hosny, K.M. A hybrid Framework for plant leaf disease detection and classification using convolutional neural networks and vision transformer. Complex Intell. Syst. 2025, 11, 142.

11. Karthik, R.; Ajay, A.; Singh Bisht, A.; Illakiya, T.; Suganthi, K. A Deep Learning Approach for Crop Disease and Pest Classification Using Swin Transformer and Dual-Attention Multi-Scale Fusion Network. IEEE Access 2024, 12, 152639–152655.

12. Maqsood, Y.; Usman, S.M.; Alhussein, M.; Aurangzeb, K.; Khalid, S.; Zubair, M. Model Agnostic Meta-Learning (MAML)-Based Ensemble Model for Accurate Detection of Wheat Diseases Using Vision Transformer and Graph Neural Networks. Comput. Mater. Contin. 2024, 79, 2795–2811.

13. Chakrabarty, A.; Ahmed, S.T.; Islam, M.d.F.U.; Aziz, S.M.; Maidin, S.S. An interpretable fusion model integrating lightweight CNN and transformer architectures for rice leaf disease identification. Ecol. Inform. 2024, 82, 102718.

14. Chen, Z.; Wang, G.; Lv, T.; Zhang, X. Using a Hybrid Convolutional Neural Network with a Transformer Model for Tomato Leaf Disease Detection. Agronomy 2024, 14, 673.

15. Hamdi, E.B.; Hidayaturrahman. Ensemble of pre-trained vision transformer models in plant disease classification, an efficient approach. Procedia Comput. Sci. 2024, 245, 565–573.

16. Remya, S.; Anjali, T.; Abhishek, S.; Ramasubbareddy, S.; Cho, Y. The Power of Vision Transformers and Acoustic Sensors for Cotton Pest Detection. IEEE Open J. Comput. Soc. 2024, 5, 356–367.

17. Liu, Y.; Song, Y.; Ye, R.; Zhu, S.; Huang, Y.; Chen, T.; Zhou, J.; Li, J.; Li, M.; Lv, C. High-Precision Tomato Disease Detection Using NanoSegmenter Based on Transformer and Lightweighting. Plants 2023, 12, 2559.

18. Ait Nasser, A.; Akhloufi, M.A. A Hybrid Deep Learning Architecture for Apple Foliar Disease Detection. Computers 2024, 13, 116.

19. Wang, B. Zero-exemplar deep continual learning for crop disease recognition: A study of total variation attention regularization in vision transformers. Front. Plant Sci. 2024, 14, 1283055.

20. Megalingam, R.K.; Gopakumar Menon, G.; Binoj, S.; Asandi Sai, D.; Kunnambath, A.R.; Manoharan, S.K. Cowpea leaf disease identification using deep learning. Smart Agric. Technol. 2024, 9, 100662.

21. Aria, M.; Cuccurullo, C. Biblioshiny Bibliometrix for No Coders. Available online: https://bibliometrix.org/biblioshiny/biblioshiny1.html (accessed on 14 February 2025).

22. VOSviewer: Visualizing Scientific Landscapes. Available online: https://www.vosviewer.com/ (accessed on 14 February 2025).

23. Zhang, Y.; Wa, S.; Zhang, L.; Lv, C. Automatic Plant Disease Detection Based on Tranvolution Detection Network With GAN Modules Using Leaf Images. Front. Plant Sci. 2022, 13, 875693.

24. Jajja, A.I.; Abbas, A.; Khattak, H.A.; Niedbała, G.; Khalid, A.; Rauf, H.T.; Kujawa, S. Compact Convolutional Transformer (CCT)-Based Approach for Whitefly Attack Detection in Cotton Crops. Agriculture 2022, 12, 1529.

25. Barman, U.; Sarma, P.; Rahman, M.; Deka, V.; Lahkar, S.; Sharma, V.; Saikia, M.J. ViT-SmartAgri: Vision Transformer and Smartphone-Based Plant Disease Detection for Smart Agriculture. Agronomy 2024, 14, 327.

26. Rezaei, M.; Diepeveen, D.; Laga, H.; Jones, M.G.K.; Sohel, F. Plant disease recognition in a low data scenario using few-shot learning. Comput. Electron. Agric. 2024, 219, 108812.

27. Parez, S.; Dilshad, N.; Alghamdi, N.S.; Alanazi, T.M.; Lee, J.W. Visual Intelligence in Precision Agriculture: Exploring Plant Disease Detection via Efficient Vision Transformers. Sensors 2023, 23, 6949.

28. Li, G.; Wang, Y.; Zhao, Q.; Yuan, P.; Chang, B. PMVT: A lightweight vision transformer for plant disease identification on mobile devices. Front. Plant Sci. 2023, 14, 1256773.

29. Shaheed, K.; Qureshi, I.; Abbas, F.; Jabbar, S.; Abbas, Q.; Ahmad, H.; Sajid, M.Z. EfficientRMT-Net: An Efficient ResNet-50 and Vision Transformers Approach for Classifying Potato Plant Leaf Diseases. Sensors 2023, 23, 9516.

30. Wu, J.; Wen, C.; Chen, H.; Ma, Z.; Zhang, T.; Su, H.; Yang, C. DS-DETR: A Model for Tomato Leaf Disease Segmentation and Damage Evaluation. Agronomy 2022, 12, 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).