Abstract

This paper presents a rule-based negation and litotes detection system for Modern Standard Arabic. Unlike purely statistical approaches, the proposed pipeline leverages linguistic structures, lexical resources, and dependency parsing to identify negated expressions, exception clauses, and instances of litotic inversion, where rhetorical negation conveys an implicit positive meaning. The system was applied to a large-scale subset of the Arabic OSCAR corpus, filtered by sentence length and syntactic structure. The results show the successful detection of 5193 negated expressions and 1953 litotic expressions through antonym matching. Additionally, 200 instances involving exception prepositions were identified, reflecting their syntactic specificity and rarity in Arabic. The system is fully interpretable, reproducible, and well-suited to low-resource environments where machine learning approaches may not be viable. Its ability to scale across heterogeneous data while preserving linguistic sensitivity demonstrates the relevance of rule-based systems for morphologically rich and structurally complex languages. This work contributes a practical framework for analyzing negation phenomena and offers insight into rhetorical inversion in Arabic discourse. Although coverage of rarer structures is limited, the pipeline provides a solid foundation for future refinement and domain-specific applications in figurative language processing.

1. Introduction

Litotes is a rhetorical device that expresses an affirmative by negating its opposite. It is prevalent across many forms of communication, serving functions that range from understatement to irony, and identifying it depends both on recognizing this pattern and on understanding the context in which it is used.

Litotes is applied in different contexts, including in academic writing, where it is used to maintain a cautious and impartial tone; in political speeches, where it is used to emphasize or incite, often breaching conversational maxims to create implicature; and in literature and rhetoric, where it is used to enhance expressive power and serve argumentative or epistemic functions [1].

Identifying litotes in a text therefore means recognizing the negated-opposite pattern and the function it serves, typically understatement, emphasis, or irony. Its key characteristics include the following:

- Negation of the Opposite: Litotes typically involves a double negative or a negation of the contrary to express an affirmative, for example, saying “not bad” to mean “good” [2,3,4].

- Understatement: It often serves to understate or soften the expression, making it less direct or forceful [5,6].

- Emphasis and Irony: While it can emphasize a point subtly, it can also introduce irony by suggesting the opposite of what is literally stated.

Identifying Litotes in Text

- Look for Double Negatives: Phrases that use two negatives to express a positive, such as “not uncommon” or “not unhappy”.

- Contextual Clues: Consider the context to determine whether the negation is used to soften a statement or to add an ironic twist.

- Functional Variation: Litotes can vary in function across different disciplines and genres, such as academic writing, political speeches, and literature, where it might be used more frequently or with different intentions [7].

2. Related Work

Mitrović et al. [8] present a cognitive model of litotes. They explore how litotes functions by creating an “Unexcluded Middle,” where the meaning lies between traditional binary oppositions (e.g., “not unhappy”). Using Image Schema Theory, particularly the concept of CONTAINMENT, the authors argue that litotes forms cognitive containers that shape meaning and concept activation. They develop an OWL ontology to represent these structures and make it publicly available for further research and computational application. They also highlight the challenges of underspecified ontology structures and propose future directions to refine the model and apply it in computational rhetoric systems.

Kühn et al. [9] provide a systematic overview of computational techniques for detecting lesser-known rhetorical figures, including litotes. They discuss the challenges of litotes detection, particularly the difficulty of distinguishing litotes from standard negation. While most approaches are rule-based, leveraging syntactic patterns such as negation cues followed by negated adjectives, the authors note the scarcity of large annotated datasets for comprehensive evaluation. The study also emphasizes the need for better language resources and more refined detection algorithms to address the complex, context-dependent nature of litotes and similar figures.

Yadav et al. [10] propose the FFBC algorithm within a Binary-Clustered Sentences (BCS) framework to address challenges in sentiment analysis, particularly in detecting negation and double negation. Their approach utilizes a majority voting system within binary clusters, significantly improving sentiment classification accuracy. The model was trained on IMDb and Amazon Reviews datasets and validated on real-world Twitter data related to the Farmers’ Protest. Compared to state-of-the-art models like BERT, LSTM, and VADER, the proposed method demonstrated superior performance in terms of precision, recall, F1-score, and accuracy. The results highlight the framework’s capability to tackle complex linguistic structures, advancing the state of sentiment analysis.

Szczygłowska [5] examines how litotes is used in English-language research articles in the social and life sciences, focusing on differences in frequency, structure, and syntactic function. Analyzing 300 articles from each discipline, the study found that litotes was twice as frequent in the social sciences and displayed a broader functional range. The social sciences favored complex and stylistically diverse constructions, while the life sciences used more straightforward forms. The results suggest that litotes contributes to the rhetorical style of each discipline, with the social sciences leveraging it for nuanced expression and cautious argumentation, and the life sciences prioritizing clarity and precision. The findings highlight how linguistic choices reflect disciplinary conventions, emphasizing the importance of understanding rhetorical patterns in academic writing.

Karp et al. [7] employ text mining techniques using R to explore litotes and meiosis in The Catcher in the Rye. Through concordance building (KWIC), word frequency analysis, and sentiment analysis, they examine how these rhetorical devices contribute to the stylistic features of the text. The authors identify common patterns of litotes (e.g., not bad, not unwilling), but observe that such constructions are relatively rare compared to straightforward expressions. They highlight the contextual difficulties in automating sentiment analysis of litotes, as standard tools often misinterpret this device’s nuanced meanings. The study suggests that while text mining can aid stylistic analysis, it requires sophisticated approaches to account for the complexities of negation and rhetorical understatement.

Mukherjee et al. [11] introduce NegAIT, a rule-based parser designed to detect morphological, sentential, and double negation in medical texts. The system achieved high precision and recall for morphological and sentential negations, though double negation detection remains challenging. Using large-scale medical corpora, the authors found that morphological negation was more prevalent in difficult texts, while sentential negation appeared more in easier texts. Additionally, a classification study showed that using negation features significantly improved the accuracy of predicting text difficulty. The parser’s contribution lies in advancing text simplification efforts, especially within medical contexts, by highlighting the role of complex negation in readability.

Khandelwal and Sawant propose NegBERT [12], a BERT-based model for detecting negation cues and resolving their scopes across diverse textual domains. The system achieved state-of-the-art results across BioScope, Sherlock, and SFU Review Corpus, significantly improving F1-scores over previous models. NegBERT uses a two-stage approach, first detecting negation cues and then resolving their scopes using BERT’s contextual embeddings. Despite its strong performance, the model faced challenges in cross-domain generalization, especially with inconsistently annotated datasets like SFU. The study highlights the value of transfer learning for nuanced NLP tasks and underscores the importance of high-quality, diverse datasets.

Neuhaus [13] examines the rhetorical devices of irony, understatement, and litotes, and distinguishes them on the basis of necessary criteria. Irony is marked by situational disparity and a suggested critical mindset, understatement by saying less to imply more, and litotes by negation combined with the salience of an opposite term. The paper highlights how these figures can overlap: litotes can serve as a device for understatement, and understatement can be used to achieve irony. However, litotes can also function independently as denial or mitigation. The study emphasizes that litotic irony cannot exist without an understated base, providing a nuanced understanding of this rhetorical mechanism.

Yuan [4] systematically analyzes litotes in The Analects, defining it as negation of the opposite and classifying instances of it into contradictory, contrary, and relative subtypes. A particular focus is placed on Conditional Double Negations (CDNs), which structure arguments through necessary condition–result relationships. These CDNs serve to enhance logical precision, establish high-probability premises, and provide elaborate definitions within Confucian philosophy. The paper concludes that CDNs offer significant epistemic value, contributing to the propagation of knowledge in Chinese discourse. While manual detection ensures accuracy, future computational approaches would need to account for linguistic complexities.

Kühn and Mitrović [14] examine more than 40 studies on the algorithmic detection of rhetorical figures, focusing on lesser-known types such as litotes. They identify ten key challenges, including inconsistent definitions, dataset imbalance, and the limitations of rule-based methods. The study emphasizes that most litotes detection approaches are heavily rule-based and rely on negation patterns, but that these methods often fail to capture nuanced or creative expressions. The authors advocate hybrid approaches that integrate LLMs and rhetorical ontologies to improve detection accuracy and encourage broader linguistic coverage. The paper offers strategic recommendations to advance research in computational rhetoric.

3. Methodology

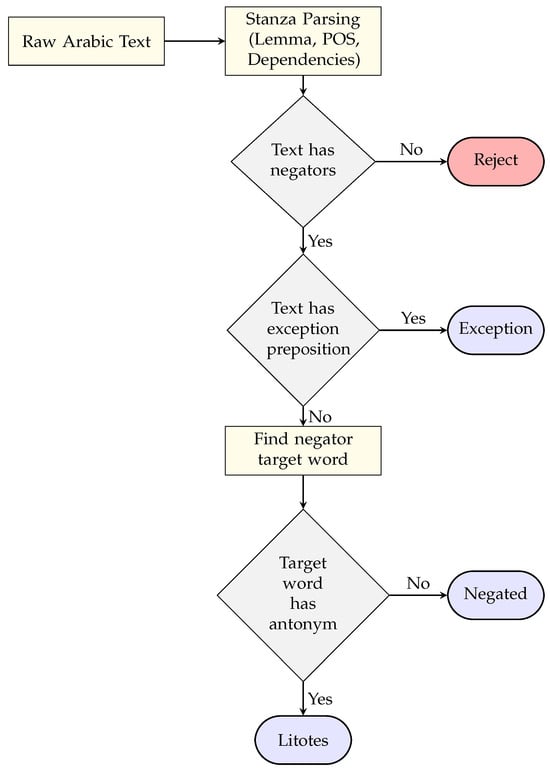

We constructed a custom Arabic dataset designed to identify and classify negation patterns in text through an automated pipeline. Each entry includes the full sentence (full_text), a relevant focus segment (text), and syntactic features such as lemma and POS tags, which were extracted using the Stanza Arabic pipeline. Negation status (negated) was determined using rule-based heuristics applied to dependency parsing outputs, detecting common negators and associating them with target tokens. Additional fields include flags for exception phrases, automatically derived antonyms (via WordNet mapping), and final categorical labels: negated, exception, or litotes. The process is illustrated in Figure 1.

Figure 1. Data annotation pipeline.
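To make the record layout concrete, the following is a minimal sketch of one dataset entry as a Python dataclass. The names full_text, text, lemma, and negated come from the description above; has_exception, antonym, pos, and the choice of storing a single lemma and tag for the negated target are assumptions made for illustration, and the example values are invented.

```python
from dataclasses import dataclass, asdict
from typing import Optional

LABELS = ("negated", "exception", "litotes")


@dataclass
class NegationRecord:
    full_text: str                   # the complete sentence
    text: str                        # the focus segment containing the negation
    lemma: str                       # lemma of the negated target
    pos: str                         # POS tag of the negated target
    negated: bool                    # outcome of the rule-based negation check
    has_exception: bool = False      # assumed name for the exception flag
    antonym: Optional[str] = None    # assumed name for the antonym field
    label: str = "negated"           # one of LABELS

    def __post_init__(self) -> None:
        # Reject labels outside the three-way scheme.
        if self.label not in LABELS:
            raise ValueError(f"unknown label: {self.label!r}")


# Invented example: "ليس سيئا" ("not bad"), whose target has the antonym "جيد" ("good").
record = NegationRecord(
    full_text="ليس سيئا", text="ليس سيئا", lemma="سيئ", pos="ADJ",
    negated=True, antonym="جيد", label="litotes",
)
print(asdict(record))
```

Validating the label at construction time keeps every stored record inside the three-label scheme.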

The process begins with a raw Arabic sentence as input. To ensure consistency and manage complexity, only short texts consisting of a single sentence and under a defined token limit are retained. This filtering step is applied before any linguistic annotation is performed.
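The filtering step can be sketched as follows. This is only an illustration: the paper does not state the token limit, so MAX_TOKENS is a placeholder, and both the whitespace token count and the punctuation-based single-sentence test are assumptions chosen because the filter runs before any linguistic annotation.

```python
import re

MAX_TOKENS = 20  # placeholder value; the actual limit is not given in the text

# Sentence terminators, including the Arabic question mark.
_TERMINATORS = ".!?؟"
_INNER_BREAK = re.compile(r"[.!?؟]")


def is_short_single_sentence(text: str, max_tokens: int = MAX_TOKENS) -> bool:
    """Keep texts that look like one sentence and have at most max_tokens tokens."""
    stripped = text.strip()
    if not stripped:
        return False
    # Ignore final punctuation; a terminator left inside suggests 2+ sentences.
    body = stripped.rstrip(_TERMINATORS).strip()
    if not body or _INNER_BREAK.search(body):
        return False
    return len(body.split()) <= max_tokens


candidates = ["ليس سيئا.", "جملة أولى. جملة ثانية."]
kept = [s for s in candidates if is_short_single_sentence(s)]
print(kept)
```

A cheap surface filter of this kind avoids running the parser on texts that would be discarded anyway.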

The selected sentence is passed through the Stanza pipeline for Arabic, which performs tokenization, lemmatization, part-of-speech tagging, and dependency parsing. Diacritics are removed from lemmas to reduce morphological sparsity. POS tags are normalized where needed (e.g., auxiliary verbs are reclassified as verbs), and each token is enriched with syntactic attributes used in the downstream decision process.
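The two normalization steps mentioned here, diacritic removal and POS reclassification, can be illustrated with a small sketch. Tokens are represented as plain dictionaries standing in for parser output; the keys lemma and upos and the function names are assumptions, and the character range covers the common Arabic diacritics rather than a list taken from the paper.

```python
import re

# Tanween, short vowels, shadda, and sukun (U+064B to U+0652), plus superscript alef.
_DIACRITICS = re.compile("[\u064B-\u0652\u0670]")


def strip_diacritics(text: str) -> str:
    """Remove Arabic diacritic marks, leaving the base letters."""
    return _DIACRITICS.sub("", text)


def normalize_token(token: dict) -> dict:
    """Return a copy with an undiacritized lemma and AUX folded into VERB."""
    upos = "VERB" if token["upos"] == "AUX" else token["upos"]
    return {**token, "lemma": strip_diacritics(token["lemma"]), "upos": upos}


# Fully vocalized "kataba", written with escapes so the marks are explicit.
vocalized = "\u0643\u064E\u062A\u064E\u0628\u064E"
print(normalize_token({"lemma": vocalized, "upos": "AUX"}))
```

Returning a new dictionary rather than mutating the input keeps the raw parser output available for later inspection.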

In this stage, the system checks whether the parsed sentence contains any recognized negation markers. A predefined lexicon of Arabic negators is used to identify their presence within the syntactic structure. If no such negators are found, the sentence is excluded from further analysis. In the opposite case, the system proceeds to verify whether the sentence contains any exception constructs that may alter its semantic polarity, thus transforming negators into limiters. These typically include prepositions or particles meaning “except”, which often signal exception-based meanings. If such an exception word is found in proximity to the negated segment, the sentence is labeled as exception.

When no exception is found, the system attempts to identify the syntactic target of the negator. This involves tracing dependency links to determine which word (typically an adjective) is being negated. The identified token becomes the focal point for subsequent semantic checks, particularly antonym lookup. Once the target word of the negation has been identified, the system queries Arabic WordNet to determine whether the word has a known antonym. This step is crucial for detecting litotic constructions, where negation combined with a semantically opposite term conveys intensified meaning. If an antonym is found, the sentence is labeled as litotes; otherwise, the label defaults to negated.
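The decision cascade described in this stage can be summarized in a short sketch. It is not the authors' implementation: the two lexicons are small illustrative samples, ANTONYMS is a hypothetical stand-in for an Arabic WordNet lookup, the proximity window of five tokens is an assumed value, and the rule that the negator's dependency head is its target is a simplification. Tokens are dictionaries with 1-based id and head fields, as in CoNLL-U style parses.

```python
from typing import Optional

# Illustrative samples only; the paper's full lexicons are not reproduced here.
NEGATORS = {"لا", "لم", "لن", "ليس"}
EXCEPTION_WORDS = {"إلا", "سوى"}
# Hypothetical stand-in for an Arabic WordNet antonym query.
ANTONYMS = {"سيئ": "جيد"}  # "bad" -> "good"


def find_negator(tokens: list) -> Optional[dict]:
    """Return the first token whose lemma is a known negator, if any."""
    return next((t for t in tokens if t["lemma"] in NEGATORS), None)


def has_exception_nearby(tokens: list, negator: dict, window: int = 5) -> bool:
    """Look for an exception word among the `window` tokens after the negator."""
    start = negator["id"]  # ids are 1-based, so this is the next token's index
    return any(t["lemma"] in EXCEPTION_WORDS for t in tokens[start:start + window])


def negation_target(tokens: list, negator: dict) -> Optional[dict]:
    """Follow the dependency link from the negator to the word it modifies."""
    if negator["head"] == 0:  # negator is the root: use its first dependent
        deps = [t for t in tokens if t["head"] == negator["id"]]
        return deps[0] if deps else None
    return tokens[negator["head"] - 1]


def classify(tokens: list) -> Optional[str]:
    """Return 'exception', 'litotes', 'negated', or None when no negator exists."""
    negator = find_negator(tokens)
    if negator is None:
        return None  # sentence is excluded from further analysis
    if has_exception_nearby(tokens, negator):
        return "exception"
    target = negation_target(tokens, negator)
    if target is not None and target["lemma"] in ANTONYMS:
        return "litotes"
    return "negated"


# Hand-written toy parse of "ليس سيئا" ("not bad"); not actual parser output.
not_bad = [
    {"id": 1, "lemma": "ليس", "head": 2},
    {"id": 2, "lemma": "سيئ", "head": 0},
]
print(classify(not_bad))  # litotes
```

Because each branch returns as soon as its rule fires, every label can be traced back to exactly one lexical or syntactic check.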

4. Experimental Results

To evaluate the effectiveness of our negation and litotes detection pipeline, we applied it to the Arabic portion of the OSCAR corpus, a large-scale multilingual dataset extracted from Common Crawl. The dataset, which contains billions of words, offers a diverse and realistic distribution of informal and formal Arabic text. We filtered the corpus using our length and structure constraints and processed the resulting subset through the full pipeline described in the methodology.

The processed subset yielded promising results. Out of the total samples evaluated, the system detected 5193 negated expressions and 1953 litotic expressions with detectable antonyms. Notably, 200 instances involving exception prepositions were identified—a low figure, but linguistically significant due to the rarity and subtlety of this construction.

These outputs were classified using a three-label scheme: negated, exception, and litotes. This categorization provides a more nuanced representation of negation in Arabic, emphasizing interpretability over brute-force performance. Although exceptions remained sparsely distributed, their detection points to the system’s linguistic sensitivity, particularly in distinguishing layered rhetorical structures.
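For orientation, the relative share of each label can be derived from the reported counts. The sketch below assumes that the three labels are mutually exclusive and that their sum is the number of labeled sentences; the text does not state either point explicitly.

```python
# Counts as reported above; mutual exclusivity of the labels is an assumption.
counts = {"negated": 5193, "litotes": 1953, "exception": 200}

total = sum(counts.values())
shares = {label: round(100 * n / total, 1) for label, n in counts.items()}

print(total)   # 7346
print(shares)  # {'negated': 70.7, 'litotes': 26.6, 'exception': 2.7}
```

Under that assumption, exception constructions account for under 3% of the labeled output.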

Despite the challenges posed by the full dataset—increased noise, inconsistent syntactic structure, and contextually ambiguous negation—the pipeline exhibited stable behavior and maintained output consistency with the subset-based trial. This indicates that the approach is scalable and adaptable to broader negation-focused tasks, even beyond the scope of litotes.

One limitation of this study is the absence of a gold-standard annotated corpus for Arabic litotes; as a result, standard evaluation metrics such as precision, recall, and F1-score cannot be computed. Additionally, while error analysis was performed manually during rule refinement, the pipeline has not yet undergone structured human validation. Incorporating annotated datasets and formal evaluation protocols remains a crucial next step to quantitatively assess detection quality and support generalizability claims.
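Once gold annotations become available, per-label precision, recall, and F1-score could be computed along the following lines. This is a sketch of the standard definitions only; the function name is hypothetical and the gold and predicted labels are invented toy data, not results from this study.

```python
def per_label_prf(gold: list, pred: list, labels: tuple) -> dict:
    """Return {label: (precision, recall, f1)} for aligned label sequences."""
    scores = {}
    for label in labels:
        pairs = list(zip(gold, pred))
        tp = sum(g == label and p == label for g, p in pairs)
        fp = sum(g != label and p == label for g, p in pairs)
        fn = sum(g == label and p != label for g, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = (precision, recall, f1)
    return scores


# Invented toy data for illustration only.
gold = ["litotes", "negated", "negated", "exception"]
pred = ["litotes", "litotes", "negated", "exception"]

for label, (p, r, f1) in per_label_prf(gold, pred, ("negated", "exception", "litotes")).items():
    print(f"{label}: P={p:.2f} R={r:.2f} F1={f1:.2f}")
```

Reporting the metrics per label rather than as a single average would keep the rare exception class visible in the evaluation.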

The relatively low number of detected exception prepositions (200 instances) compared to the high volume of negated (5193) and litotic (1953) expressions may be attributed to several linguistic and corpus-specific factors. First, exception prepositions represent a distinct syntactic construction that is not essential for the formation of standard negation or litotes; these typically rely on more direct adjective–antonym negations. Second, many exception constructions occur in more formal or rhetorical contexts, whereas the OSCAR corpus includes a significant proportion of informal and web-based content, where such structures are less commonly used. Third, due to the syntactic complexity of exception structures and known limitations in Arabic dependency parsing, some instances may have gone undetected or misparsed. Consequently, while the number of exceptions appears low, it remains linguistically meaningful given the rarity and structural specificity of exception-based negation.

While direct comparisons are limited due to the lack of large-scale annotated Arabic datasets for litotes, we contrast our approach with existing negation detection systems such as NegAIT [11] and NegBERT [12]. NegAIT, like our method, follows a rule-based strategy, but is specialized for medical English texts and focuses on morphological and sentential negation. In contrast, our pipeline targets rhetorical negation in Arabic and integrates antonym-based litotic inference. Compared to NegBERT, a transformer-based model for negation cue and scope detection, our approach emphasizes linguistic interpretability and syntactic traceability, offering transparency, which is critical in low-resource and educational contexts.

5. Discussion

The results obtained from the executed experiment confirm the feasibility of a rule-based approach to negation and litotes detection in Modern Standard Arabic. The overall distribution of detected negated expressions (5193 instances) aligns with expectations, given the prevalence of negation in natural discourse. The system successfully identified 1953 litotic expressions based on antonym detection, demonstrating its ability to detect rhetorical inversion in Arabic.

The relatively low frequency of exception prepositions (200 cases) may initially seem disproportionate; however, this is linguistically consistent with Arabic usage. Exception structures typically occur in formal contexts and are syntactically more constrained compared to standard negation. Furthermore, dependency parsing limitations, especially regarding ellipsis or irregular clause boundaries, likely contributed to undercounting. Nevertheless, the detection of these exceptions remains significant given the linguistic nuance they represent.

An acknowledged limitation of our pipeline is its reliance on canonical syntactic patterns. More creative or metaphorical instances of litotic inversion—especially those depending on semantic contradiction or idiomatic phrases—may escape rule-based capture. Addressing this requires future extensions incorporating metaphor detection or contextual semantic embeddings, ideally through a hybrid rule–ML framework.

Because the system does not rely on machine learning, its behavior is fully explainable and reproducible, which is particularly valuable for low-resource settings and linguistically informed tasks. Future work may explore semi-supervised integration or error-guided refinement to improve coverage without sacrificing transparency.

Additionally, the interpretability of the system provides a distinct advantage. Every output label is directly traceable to a lexical or syntactic decision, offering full transparency in the detection process. This characteristic makes the pipeline not only useful as a research tool, but also practical in educational or linguistic annotation settings where traceability is essential. The results suggest that a targeted, linguistically informed rule set can yield meaningful insights, even with noisy real-world text.

While our method prioritizes interpretability and linguistic traceability, we acknowledge that it does not benchmark against neural or hybrid models such as NegBERT. This choice stems from the lack of litotes-specific annotated data in Arabic, which limits the viability of training or fine-tuning such models in a meaningful way. Nonetheless, future work may explore the integration of transformer-based representations to enhance coverage, particularly for syntactically irregular or idiomatic constructions, while maintaining explainability.

6. Conclusions

This paper describes a linguistically motivated pipeline for detecting and categorizing negation and litotes in Modern Standard Arabic. The system distinguishes between ordinary negation, litotic inversion, and exception-based structures using dependency parsing, lexical resources, and antonym matching.

The output schema improves granularity and interpretability over earlier approaches, enabling more precise analysis of negation phenomena in Arabic text. Despite limitations due to parser accuracy and the inherent rarity of litotes and exception prepositions, the system demonstrated scalability across a large corpus and robustness in capturing core negation patterns.

This work contributes to Arabic linguistic resource development and lays the groundwork for future applications in rhetorical structure analysis, figurative language processing, and culturally aware sentiment analysis. Beyond academic exploration, the system can be integrated into downstream NLP applications such as sentiment analysis by identifying implicit polarity shifts that enhance classification accuracy. It also holds value in literary and political discourse analysis, where figures like litotes contribute significantly to tone, persuasion, and irony. Moreover, its explainable output makes it suitable for educational tools in computational linguistics and stylistic annotation. Future extensions may include multilingual evaluation, semi-supervised refinement, and integration with neural models while preserving rule-based interpretability.

Author Contributions

Conceptualization, methodology, formal analysis, data curation, investigation, and writing—original draft preparation, Z.B.; supervision, project administration, conceptualization support, validation, and writing—review and editing, S.E.F. and E.H.B.; resources, data collection, and visualization, F.-Z.A.; resources, investigation, and writing—review and editing, L.E.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Croy, N. Litotes in Paul. Novum Testamentum 2024, 66, 462–481.

2. Horn, L.R. Lie-toe-tease: Double negatives and unexcluded middles. Philos. Stud. 2017, 174, 79–103.

3. Mokhlos, W.; Mukheef, A.A. A Pragmatic Study of Litotes in Trump’s Political Speeches. Int. J. Innov. Creat. Change 2020, 11, 1–12.

4. Yuan, Y. The argumentative litotes in The Analects. Argum. Comput. 2017, 8, 253–266.

5. Szczygłowska, T. Litotes in English research articles: Disciplinary variation across life and social sciences. Linguist. Pragensia 2020, 30, 51–70.

6. Monakhova, E. Litotes as a Means of Mitigating Communicative Aggression. In Proceedings of the 10th SWS International Scientific Conferences on SOCIAL SCIENCES—ISCSS Proceedings 2023, Albena, Bulgaria, 24–31 August 2023.

7. Karp, M.; Kunanets, N.; Kucher, Y. Meiosis and Litotes in The Catcher in the Rye by Jerome David Salinger: Text Mining. In Proceedings of the 5th International Conference on Computational Linguistics and Intelligent Systems, Kharkiv, Ukraine, 20–21 April 2023; pp. 166–178.

8. Mitrović, J.; O’Reilly, C.; Harris, R.; Granitzer, M. Cognitive Modeling in Computational Rhetoric: Litotes, Containment and the Unexcluded Middle. In Proceedings of the International Conference on Agents and Artificial Intelligence, Valletta, Malta, 22–24 February 2020; pp. 806–813.

9. Kühn, R.; Mitrović, J.; Granitzer, M. Computational Approaches to the Detection of Lesser-Known Rhetorical Figures: A Systematic Survey and Research Challenges. arXiv 2024, arXiv:2406.16674.

10. Yadav, P.; Kashyap, I.; Bhati, B.S. Impact of Double Negation through Majority Voting of Machine Learning Algorithms. Evergreen 2024, 11, 331–342.

11. Mukherjee, P.; Leroy, G.; Kauchak, D.; Rajanarayanan, S.; Diaz, D.Y.R.; Yuan, N.P.; Pritchard, T.G.; Colina, S. NegAIT: A New Parser for Medical Text Simplification Using Morphological, Sentential and Double Negation. J. Biomed. Inform. 2017, 69, 55.

12. Khandelwal, A.; Sawant, S. NegBERT: A Transfer Learning Approach for Negation Detection and Scope Resolution. arXiv 2019, arXiv:1911.04211.

13. Neuhaus, L. On the Relation of Irony, Understatement, and Litotes. Pragmat. Cogn. 2016, 23, 117–149.

14. Kühn, R.; Mitrović, J. The Elephant in the Room: Ten Challenges of Computational Detection of Rhetorical Figures. In Proceedings of the 4th Workshop on Figurative Language Processing (FigLang 2024), Mexico City, Mexico, 20 June 2024; pp. 45–52.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).