1. Introduction

Accurate indoor localization (IL) is essential for applications such as asset tracking, emergency response, and indoor navigation [1]. However, achieving high IL accuracy remains challenging due to signal multipath fading, signal fluctuation, and dynamic changes in the environment [2,3]. These factors affect the signal measurements used in IL, introducing inconsistencies that degrade localization accuracy and limit the reliability of applications that depend heavily on precise IL.

In previous studies [2,4,5,6,7], received signal strength indicator (RSSI) values are used in IL systems. These systems rely on signal propagation models to estimate distances between devices [2,7]. However, challenges such as multipath effects, signal fluctuations, and environmental dynamics make it difficult to accurately map RSSI measurements to specific locations, even with advanced models including the adaptive path loss model (ADAM) [2]. These persistent challenges highlight the need for more robust and adaptable methods.
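As a rough illustration of why mapping RSSI to distance is fragile, the sketch below inverts a generic log-distance path loss model. The model form and the parameter values (reference RSSI at 1 m, path loss exponent) are illustrative assumptions. They are not the propagation models or the ADAM parameters used in [2,7].

```python
import math

def rssi_to_distance(rssi_dbm, rssi_at_1m_dbm=-45.0, path_loss_exponent=2.5):
    """Invert the log-distance model RSSI(d) = RSSI(1 m) - 10 * n * log10(d).

    Both default parameters are hypothetical values chosen for illustration.
    """
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))

# Same transmitter position, but the second reading suffers a 6 dB fade.
d_nominal = rssi_to_distance(-70.0)
d_faded = rssi_to_distance(-76.0)
print(f"nominal: {d_nominal:.2f} m, faded: {d_faded:.2f} m")
```

Under these assumed parameters, a 6 dB fluctuation changes the distance estimate by several meters, which is the kind of inconsistency that motivates more robust methods.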

Quantum machine learning (QML) [8,9,10,11,12] offers promising solutions to longstanding challenges in IL, including multipath effects, signal fluctuations, and environmental dynamics. Unlike classical methods, QML represents data as quantum states in a Hilbert space, a complex vector space whose dimension grows exponentially with the number of qubits, which allows quantum systems to handle and analyze RSSI data [12]. This enables quantum algorithms to take advantage of key quantum properties, such as superposition, where a quantum system exists in multiple states simultaneously, and entanglement, which correlates the states of different qubits, to reveal hidden patterns [10]. These quantum properties enhance QML’s ability to recognize complex patterns and relationships in RSSI data, which is particularly useful for handling uncertainty and noise in IL. Among QML models, the quantum random forest (QRF) has emerged as a promising technique for improving feature representation in IL by utilizing quantum properties.

Existing research on QRF has focused on classification [10,11,12]. Khadiev and Safina [10] introduced an early quantum version of the random forest for binary classification. Safina et al. [11] later developed a quantum circuit-based prediction method that is also limited to classification. Srikumar et al. [12] used quantum kernels to find complex patterns in data using the QRF model. However, the ability of the QRF to handle regression tasks, i.e., predicting continuous values, has received little attention. This gap is significant for IL applications, where precise numerical regression is essential for determining accurate locations.

We developed a quantum random forest for indoor localization (QRF-IL) method, an RSSI-based IL method using the QRF, which is a quantum-inspired extension of the classical random forest. QRF is designed to leverage quantum computing principles for improved classification and regression, especially in complex and noisy datasets. Like its classical counterpart, QRF is an ensemble learning method that constructs multiple quantum decision trees (QDTs) during training and outputs an aggregated result: the average prediction for regression or the majority vote for classification. A QDT is a quantum-based version of the traditional decision tree designed to improve learning efficiency. Srikumar et al. proposed incorporating the Nyström quantum kernel estimation (NQKE) into the QDT to further refine data processing [12]. QRF-IL also adopts the NQKE strategy to extract important features from noisy signals more effectively. Unlike earlier QRF models focused only on classification, QRF-IL applies regression techniques, making it well-suited for estimating precise indoor locations. Additionally, QRF-IL incorporates weighted centroid regression (WCR), which adjusts location predictions based on confidence levels to reduce localization errors. The mean localization error of QRF-IL is calculated based on a publicly available dataset [3] and compared with those of an IL method using the standalone QRF proposed in Ref. [12] and the ADAM IL method proposed in Ref. [2].

2. QRF-IL and Mechanisms

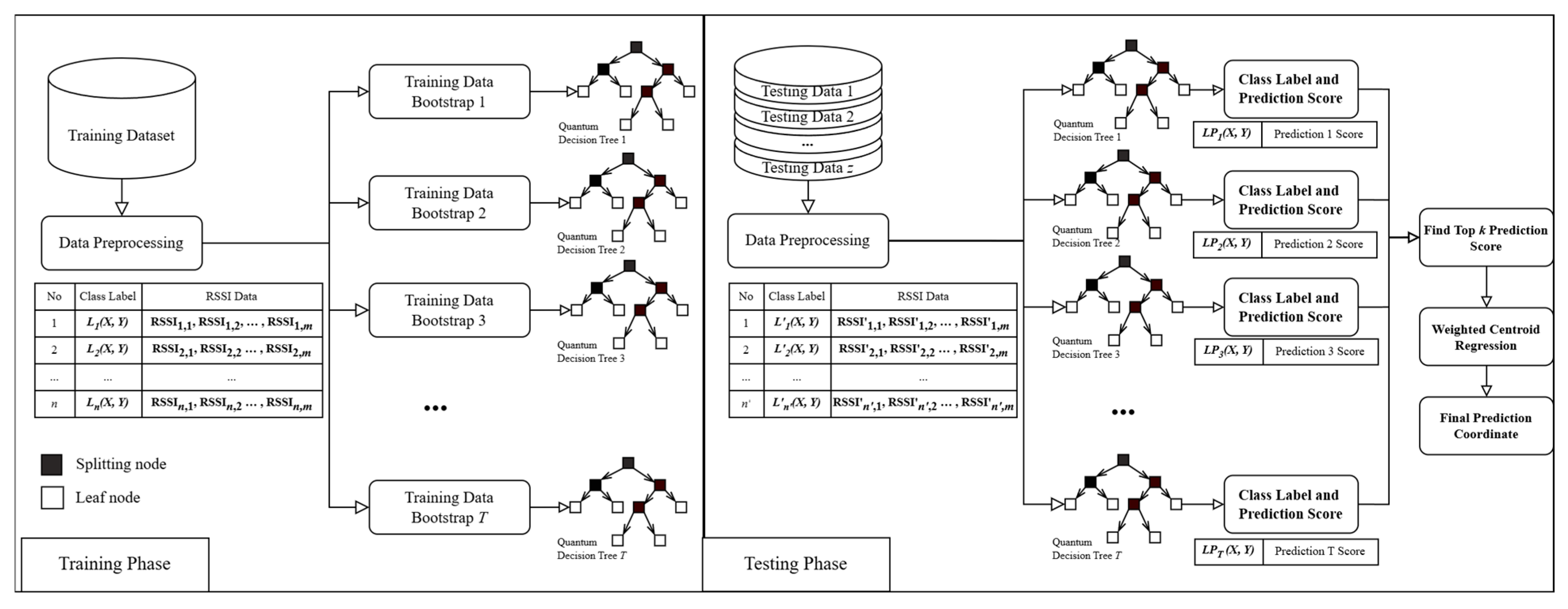

The workflow for the IL regression of QRF-IL is illustrated in Figure 1. It consists of a training phase and a testing phase, both adapted from the QRF framework for the classification task [10]. The QRF model is an ensemble of T independent QDTs, indexed by t, 1 ≤ t ≤ T. During the training phase, the developed QRF-IL method is used to construct a QRF model by training multiple QDTs on different subsets of the dataset. The training dataset contains n tuples of RSSI values, where the ith tuple contains m RSSI values and is associated with its corresponding location coordinate label Li(X, Y), 1 ≤ i ≤ n. Before training, the data undergo preprocessing, which includes data cleaning, normalization, and transformation. The transformation converts classical RSSI data into quantum states for QDT learning; its details will be provided later. Once the data are preprocessed, bootstrap sampling [7] is conducted to generate T random subsets of the training data. Each subset is used to train an individual QDT, which serves as an essential decision-making unit in the QRF model consisting of T QDTs. Unlike classical decision trees, QDTs leverage quantum properties, such as superposition and entanglement, to enhance feature representation and pattern recognition. This enables QRF to capture complex relationships in RSSI data effectively, improving its ability to predict location coordinates across various indoor environments.
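The preprocessing and bootstrap sampling steps of the training phase can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: the function names, the min-max normalization range of -100 dBm to 0 dBm, and the toy data are hypothetical. The quantum-state transformation and the QDT training itself are omitted.

```python
import random

def min_max_normalize(rssi_tuple, lo=-100.0, hi=0.0):
    """Clip each RSSI value (dBm) to [lo, hi] and rescale it to [0, 1].

    The range [lo, hi] is an assumed choice, not one stated in the paper.
    """
    return [(min(max(v, lo), hi) - lo) / (hi - lo) for v in rssi_tuple]

def bootstrap_subsets(samples, n_trees, seed=0):
    """Draw n_trees subsets of len(samples) items each, with replacement."""
    rng = random.Random(seed)
    n = len(samples)
    return [[samples[rng.randrange(n)] for _ in range(n)] for _ in range(n_trees)]

# Toy training set: n = 4 tuples of m = 3 RSSI values, each with a label (X, Y).
training = [
    ([-60.0, -72.0, -81.0], (1.0, 2.0)),
    ([-55.0, -80.0, -77.0], (3.0, 2.0)),
    ([-70.0, -65.0, -90.0], (1.0, 5.0)),
    ([-85.0, -58.0, -62.0], (4.0, 6.0)),
]
normalized = [(min_max_normalize(rssi), loc) for rssi, loc in training]

# One bootstrap subset per QDT (T = 3 here); each would train one tree.
subsets = bootstrap_subsets(normalized, n_trees=3)
```

Because sampling is done with replacement, each subset typically repeats some tuples and omits others, which is what makes the trees in the ensemble differ from one another.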

In the testing phase, the trained QRF-IL model predicts the associated locations for new test data samples. To ensure consistency with the training data, the test dataset undergoes the same data preprocessing, such as cleaning and normalization, before being fed into the model. After data preprocessing, each test data sample is passed through every trained QDT, and the tth QDT generates LPt(X, Y), 1 ≤ t ≤ T, a location prediction of the coordinate label, along with a confidence score indicating prediction reliability. Different QDTs generate different location predictions, which are collected and ranked according to their associated confidence scores. The WCR method is then used to calculate the final location prediction. WCR selects the top K predictions with the highest confidence scores and assigns greater weights to the more reliable ones when computing the final predicted location coordinate. This method reduces the influence of less confident predictions, leading to more accurate IL results.

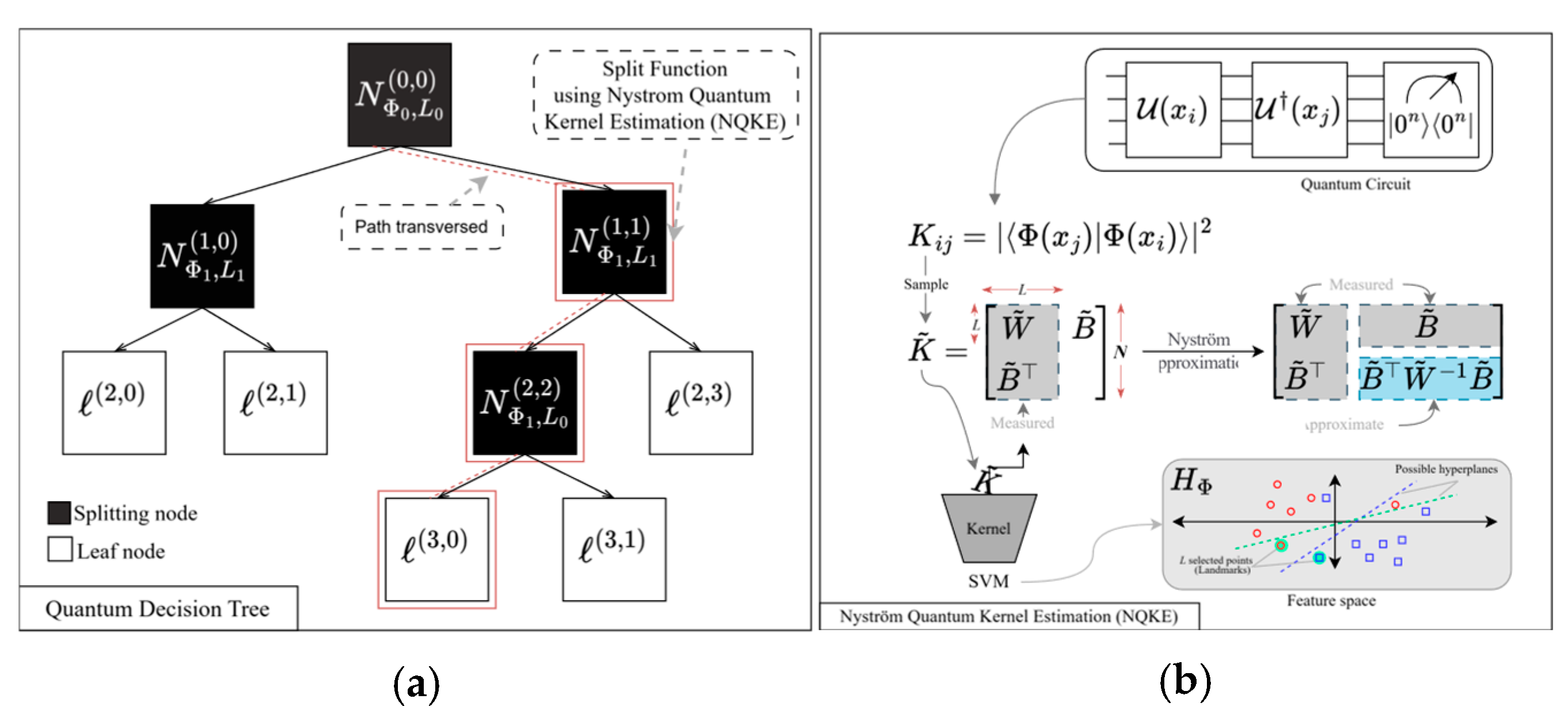

2.1. QDTs

The QDT used in QRF [12] enhances classical decision trees by integrating quantum computation. Maintaining the fundamental binary tree structure, QDTs recursively partition data at each node until reaching leaf nodes representing classification outcomes. In the hierarchical structure (Figure 2a), each black node represents a splitting node, while white nodes correspond to leaf nodes that indicate classification labels.

Unlike classical decision trees, which rely on simple threshold-based splitting, QDTs employ a quantum support vector machine (QSVM) at each splitting node to determine optimal splits. A QSVM operates in a higher-dimensional feature space defined by a quantum kernel, measuring the similarity between data points using quantum mechanical principles. This feature transformation enables QDTs to uncover complex, non-linear relationships in the data, which classical decision trees might miss.
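To make the notion of a quantum kernel concrete, the sketch below computes a fidelity kernel k(x, y) = |⟨φ(x)|φ(y)⟩|² for a simple angle embedding. The embedding is an assumption chosen for illustration: one qubit per feature, with each feature encoded by an RY rotation applied to |0⟩. It produces an unentangled product state that is trivially simulable classically, and it is not necessarily the embedding used in [12].

```python
import math

def angle_embed(x):
    """Assumed embedding: RY(x_i)|0> = cos(x_i/2)|0> + sin(x_i/2)|1> per feature."""
    return [(math.cos(v / 2.0), math.sin(v / 2.0)) for v in x]

def fidelity_kernel(x, y):
    """Return |<phi(x)|phi(y)>|^2 for the product-state embedding above.

    The overlap of two product states factorizes into per-qubit overlaps.
    """
    overlap = 1.0
    for (a0, a1), (b0, b1) in zip(angle_embed(x), angle_embed(y)):
        overlap *= a0 * b0 + a1 * b1  # amplitudes are real, so no conjugation
    return overlap ** 2

x = [0.3, 1.2]
y = [0.5, 0.9]
k_xy = fidelity_kernel(x, y)
print(f"k(x, y) = {k_xy:.4f}")
```

For this particular embedding the kernel reduces to the product of cos²((x_i − y_i)/2) over the features, so identical inputs give 1 and the value decreases as the inputs move apart. A QSVM at a splitting node would use such kernel values in place of a classical kernel.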

However, computing the full quantum kernel matrix for the QSVM is computationally expensive, because the number of kernel evaluations scales quadratically with the number of data points. Srikumar et al. [12] proposed NQKE to reduce this computational overhead. In Figure 2a, the dashed box labeled “Split Function using Nyström Quantum Kernel Estimation” signifies the role of the Nyström approximation in estimating the kernel function efficiently at the QDT nodes. Instead of computing the full quantum kernel matrix, NQKE approximates it through a subset of landmark points. The NQKE split-function label attached to each splitting node in the QDT carries three pieces of information: a tuple of indices (e.g., (0, 0)) that refers to the depth or position of the node, the quantum embedding used at that node, and the number of landmark points used. NQKE is a hybrid technique that combines the quantum kernel estimation (QKE) [13] with the Nyström approximation [14]. It uses quantum embedding and landmark points to approximate the quantum kernel matrix, which measures the similarity between data points in a quantum feature space [12].
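The Nyström idea can be sketched with NumPy as follows. This is a minimal sketch, not the NQKE implementation of [12]: the kernel is a classically computed stand-in (the fidelity of an assumed RY angle embedding), the landmarks are simply the first few data points, and all function names are hypothetical. With n data points and L landmarks, only the n × L and L × L kernel blocks are evaluated, and the full matrix is approximated as K ≈ K_nL K_LL⁺ K_nLᵀ.

```python
import numpy as np

def kernel(a, b):
    """Stand-in kernel: fidelity of an assumed RY angle embedding.

    a has shape (p, m) and b has shape (q, m); the result has shape (p, q).
    """
    diff = a[:, None, :] - b[None, :, :]
    return np.prod(np.cos(diff / 2.0) ** 2, axis=2)

def nystrom_kernel(data, landmarks):
    """Approximate the full kernel matrix from landmark blocks only."""
    k_nl = kernel(data, landmarks)       # n x L block
    k_ll = kernel(landmarks, landmarks)  # L x L block
    return k_nl @ np.linalg.pinv(k_ll) @ k_nl.T

rng = np.random.default_rng(0)
data = rng.uniform(0.0, np.pi, size=(20, 3))  # 20 toy samples, 3 features each
landmarks = data[:5]                          # assumed landmark choice

approx = nystrom_kernel(data, landmarks)
exact = kernel(data, data)
print("max abs error:", np.max(np.abs(approx - exact)))
```

In this toy setting the approximation needs 20 × 5 + 5 × 5 kernel evaluations instead of 20 × 20, and it reproduces the landmark block of the exact matrix up to numerical precision. On quantum hardware each kernel entry would be estimated from circuit measurements, so the saving in evaluations matters more than it does here.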

2.2. WCR

The QRF model classifies a sample via majority voting over the predictions of all QDTs. For class i, 1 ≤ i ≤ C, where C is the number of classes, the voting process aggregates class probabilities by averaging the prediction probabilities (or scores) of class i across all QDTs, as defined in Equation (1):

$$P_i = \frac{1}{T} \sum_{t=1}^{T} p_i^{(t)} \tag{1}$$

where $P_i$ represents the final prediction probability for class i, T is the number of QDTs, and $p_i^{(t)}$ is the class i prediction probability produced by the t-th tree.
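The averaging described for Equation (1) can be sketched in a few lines of Python. The function name and the toy probabilities are hypothetical, and each inner list stands for the class probabilities produced by one QDT.

```python
def average_class_probabilities(tree_probs):
    """Average per-class probabilities over trees.

    tree_probs[t][i] is the class-i probability produced by tree t.
    """
    n_trees = len(tree_probs)
    n_classes = len(tree_probs[0])
    return [sum(p[i] for p in tree_probs) / n_trees for i in range(n_classes)]

# Toy ensemble: T = 3 trees, C = 3 classes.
tree_probs = [
    [0.7, 0.2, 0.1],
    [0.5, 0.3, 0.2],
    [0.6, 0.1, 0.3],
]
averaged = average_class_probabilities(tree_probs)

# The conventional ensemble decision keeps only the most probable class.
predicted_class = max(range(len(averaged)), key=averaged.__getitem__)
```

Keeping only `predicted_class` discards the information carried by the runner-up classes, which is the information that WCR reuses.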

To improve the accuracy of IL, the proposed QRF-IL method uses WCR instead of the above-mentioned majority voting process. Rather than simply averaging the probabilities of classification predictions, WCR enhances the final prediction by focusing on the top K most probable classes and mapping them to their corresponding spatial coordinates. The prediction process of WCR is modified as follows:

1. Top-K selection

Instead of using only the most probable class, WCR selects the top K classes based on their prediction probabilities, as shown in Equation (2):

$$K^{*} = \{\, i : P_i \text{ is among the } K \text{ largest of } P_1, \dots, P_C \,\} \tag{2}$$

where $K^{*}$ represents the set of the K classes with the highest probabilities or confidence scores $P_i$ from the QDT ensemble.

2. Coordinate mapping

The selected top K classes are mapped to their corresponding spatial coordinates (Xj, Yj) based on a precomputed dataset, where 1 ≤ j ≤ K.

3. Weighted centroid computation

The final location prediction $(\hat{X}, \hat{Y})$ is obtained by computing a weighted average of the top K location coordinates, using their corresponding prediction probabilities or confidence scores as weights, as defined in Equation (3):

$$\hat{X} = \sum_{j=1}^{K} w_j X_j, \qquad \hat{Y} = \sum_{j=1}^{K} w_j Y_j \tag{3}$$

where the weights $w_j$ are normalized based on the prediction scores, as defined in Equation (4):

$$w_j = \frac{P_j}{\sum_{k=1}^{K} P_k} \tag{4}$$

Here $P_j$ denotes the prediction probability of the jth selected class.

WCR is used to ensure that the location prediction is influenced by the most confident classifications while incorporating spatial information and improving localization accuracy.
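The WCR steps described above (top-K selection, coordinate mapping, and weighted centroid computation) can be sketched as follows. This is a minimal sketch under stated assumptions: the function name, the class-to-coordinate table, and the toy probabilities are hypothetical, and the weights are the selected probabilities normalized to sum to one.

```python
def weighted_centroid(class_probs, class_coords, k):
    """Estimate (X, Y) from the k most probable classes.

    class_probs[i] is the ensemble probability of class i, and
    class_coords[i] is the (X, Y) coordinate that class i maps to.
    """
    # Step 1: indices of the k classes with the highest probabilities.
    ranked = sorted(range(len(class_probs)), key=lambda i: class_probs[i], reverse=True)
    top = ranked[:k]

    total = sum(class_probs[i] for i in top)
    if total <= 0.0:
        raise ValueError("selected probabilities must sum to a positive value")

    # Steps 2 and 3: map classes to coordinates and average with normalized weights.
    x_hat = sum(class_probs[i] / total * class_coords[i][0] for i in top)
    y_hat = sum(class_probs[i] / total * class_coords[i][1] for i in top)
    return x_hat, y_hat

class_probs = [0.5, 0.3, 0.1, 0.1]
class_coords = [(0.0, 0.0), (4.0, 0.0), (0.0, 8.0), (8.0, 8.0)]
x_hat, y_hat = weighted_centroid(class_probs, class_coords, k=2)
print(x_hat, y_hat)
```

With K = 2 the estimate lies on the segment between the two most probable reference points, closer to the more probable one. With K = 1 the method reduces to returning the coordinate of the single most probable class.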