Advanced Machine Learning Method for Watermelon Identification and Yield Estimation †

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

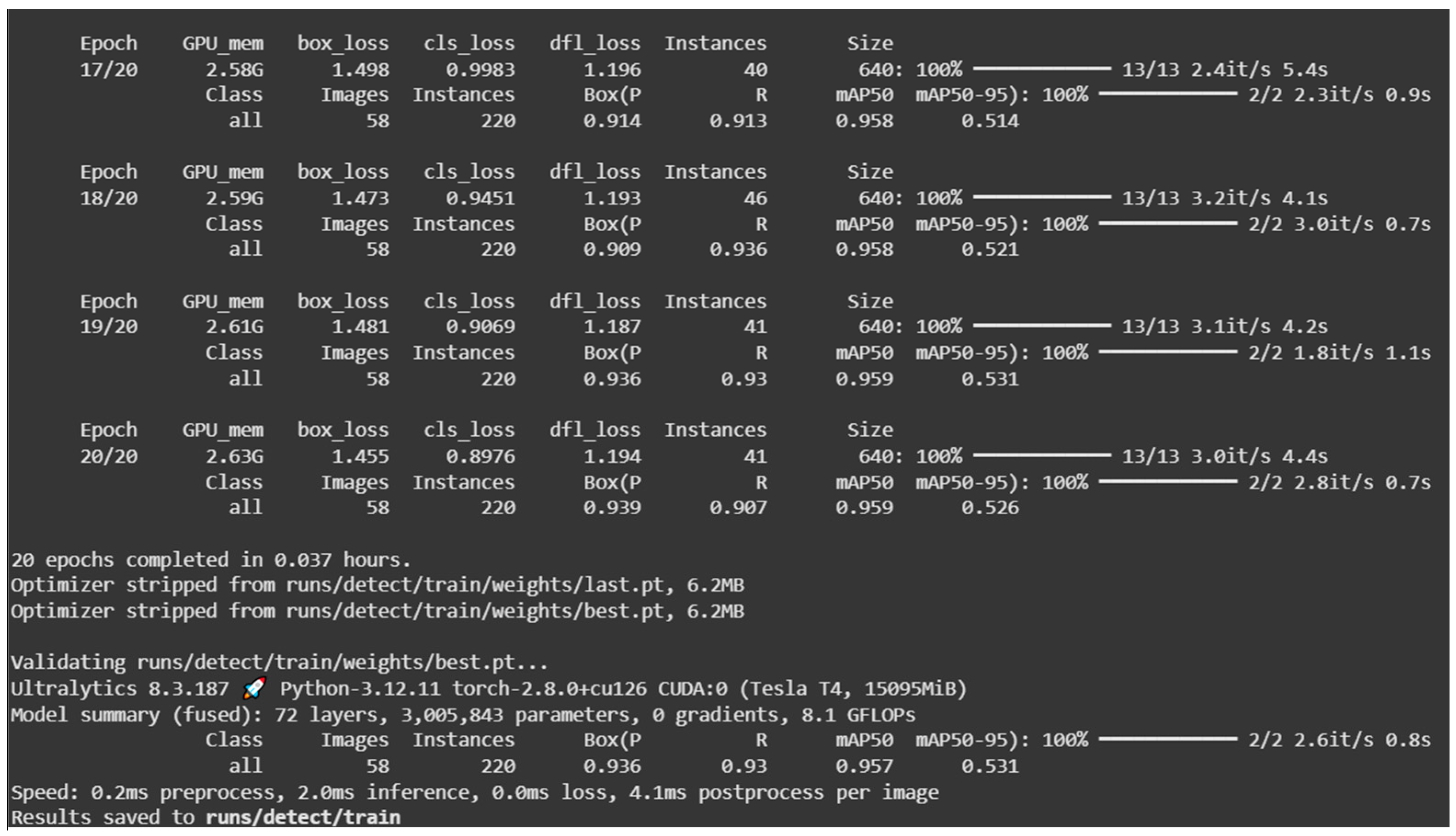

2.2. YOLOv8 Model

2.3. Equations

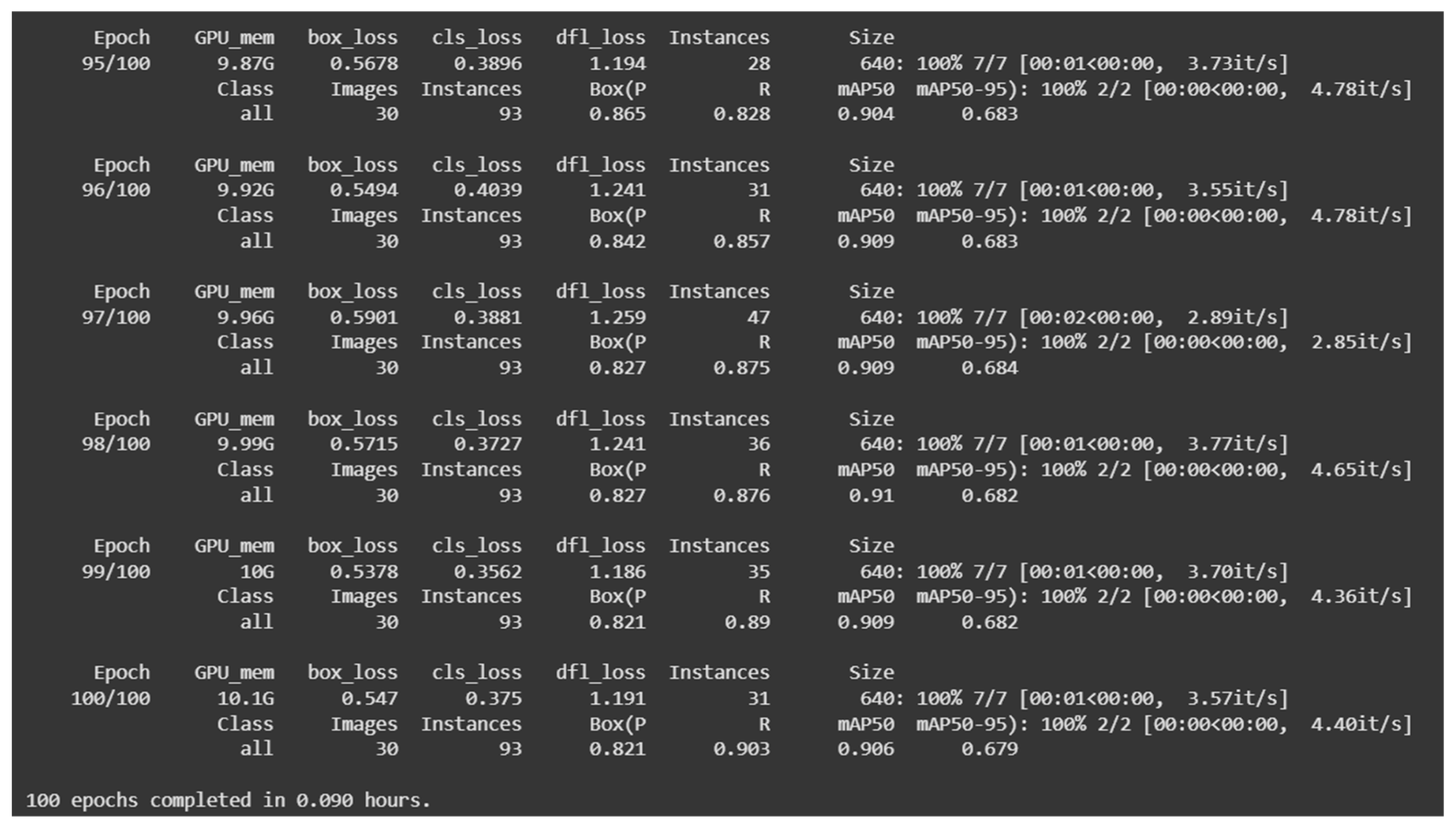

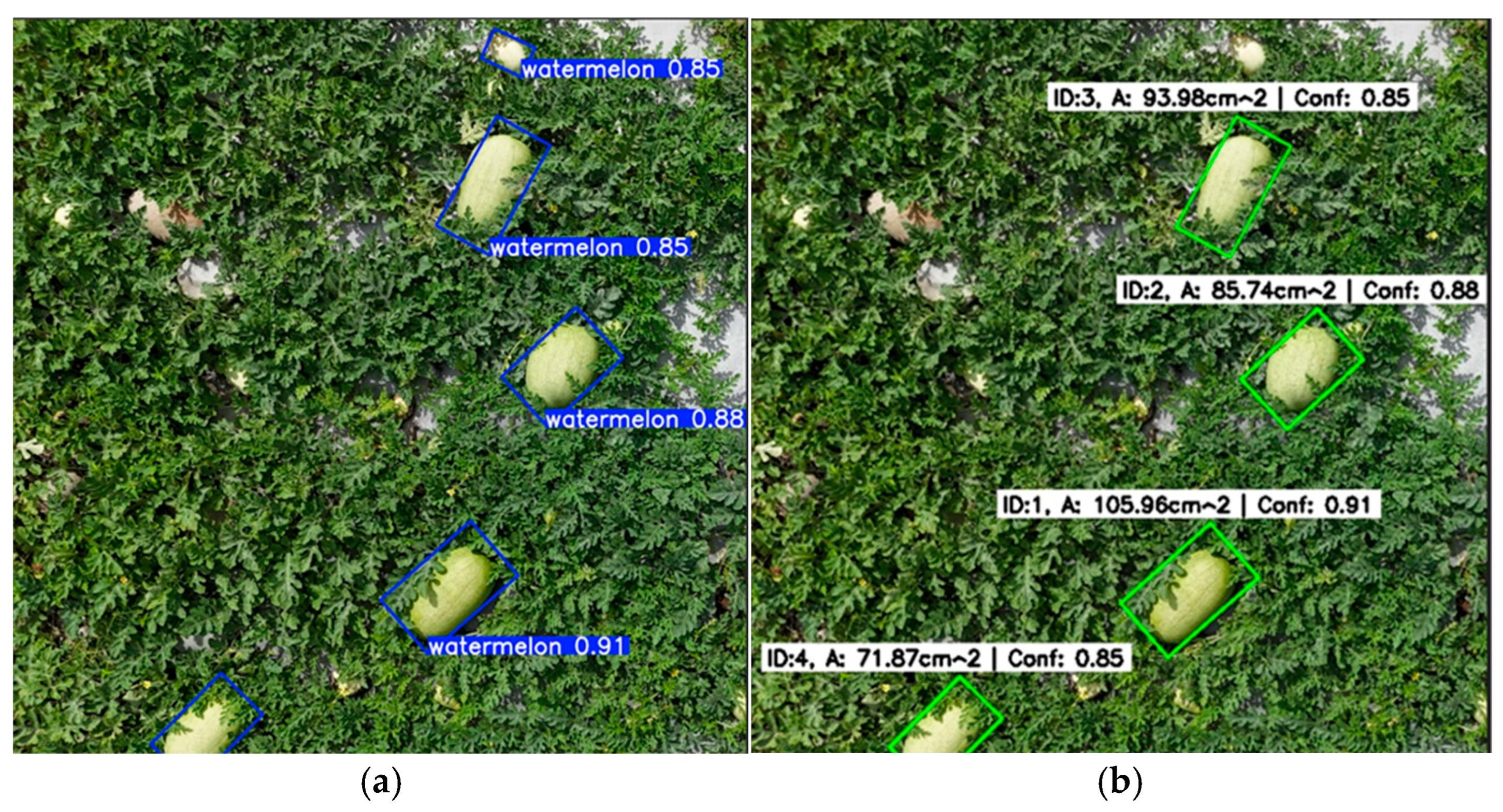

2.4. YOLOv8-OBB

2.5. Method

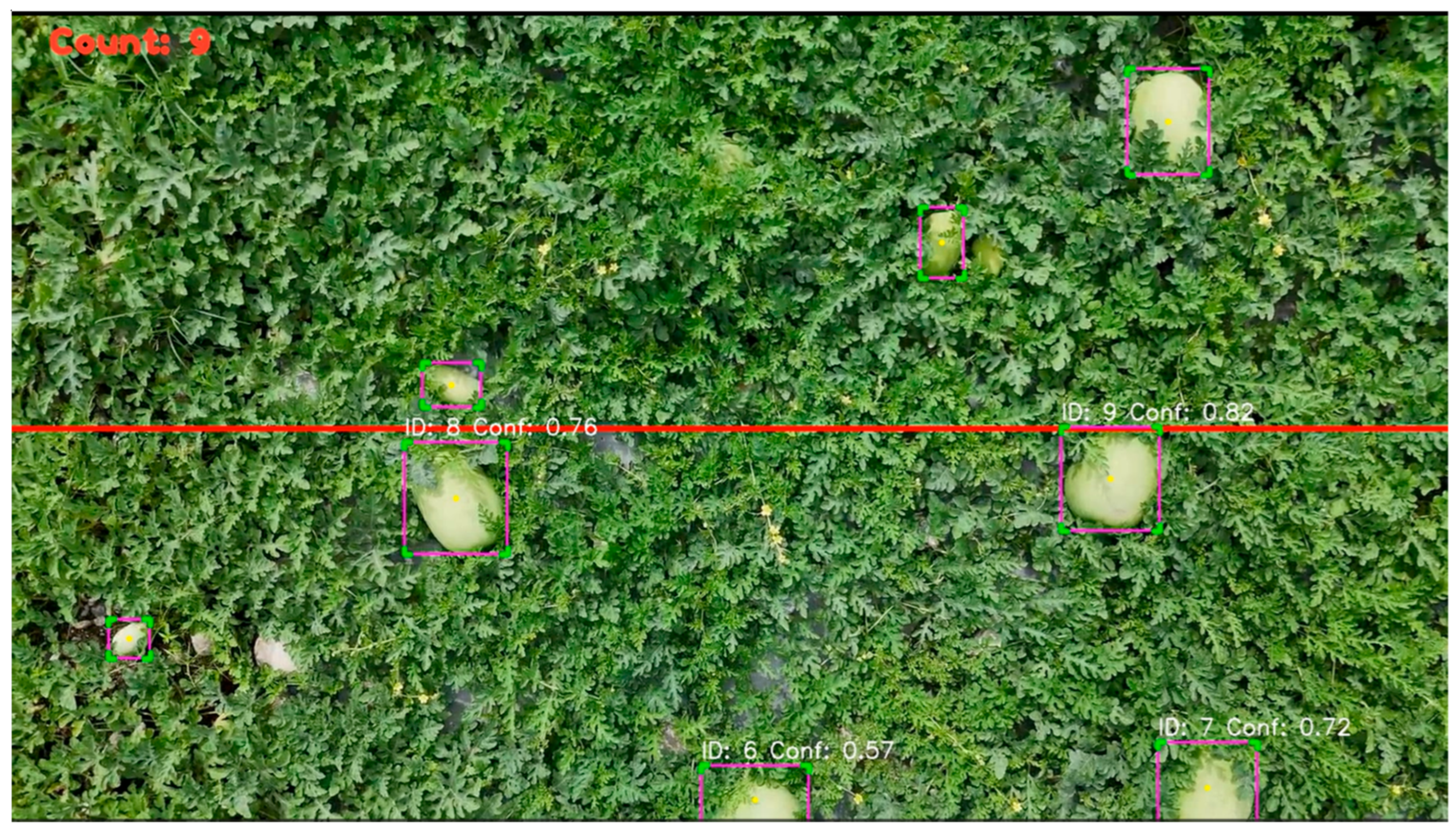

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Models | mAP50 | mAP50–95 | Precision | Memory |
|---|---|---|---|---|
| YOLOv8m | 0.946 | 0.519 | 0.819 | 52.0 MB |
| YOLOv8n | 0.95 | 0.526 | 0.915 | 6.2 MB |
| YOLOv8l | 0.964 | 0.508 | 0.806 | 87.6 MB |
| YOLOv8s | 0.951 | 0.528 | 0.820 | 22.5 MB |
| YOLOv8x | 0.961 | 0.477 | 0.798 | 136.7 MB |

| Unique ID | Confidence | Width (cm) | Height (cm) | Area (cm²) | Time (s) |
|---|---|---|---|---|---|
| 1 | 0.67 | 11.68 | 20.43 | 238.62 | 1.13 |
| 2 | 0.82 | 17.13 | 18.78 | 321.7 | 1.67 |
| 3 | 0.79 | 10.91 | 15.86 | 173.03 | 1.73 |
| 4 | 0.71 | 8.76 | 11.29 | 98.9 | 1.8 |
| 5 | 0.34 | 7.36 | 6.22 | 45.78 | 2.53 |
| 6 | 0.8 | 16.62 | 16.37 | 272.07 | 3.1 |
| 7 | 0.81 | 15.86 | 20.05 | 317.99 | 3.27 |
| 8 | 0.77 | 17.77 | 18.91 | 336.03 | 4.67 |
| 9 | 0.81 | 17.13 | 17.64 | 302.17 | 4.77 |
| 10 | 0.47 | 9.52 | 7.36 | 70.07 | 5.23 |
| 11 | 0.44 | 5.46 | 7.74 | 42.26 | 5.9 |
| 12 | 0.62 | 6.85 | 11.55 | 79.12 | 5.97 |
| 13 | 0.81 | 13.07 | 18.53 | 242.19 | 6.57 |
| 14 | 0.74 | 12.31 | 15.48 | 190.56 | 7.93 |
| 15 | 0.73 | 12.18 | 17.01 | 207.18 | 8.7 |
| 16 | 0.76 | 12.31 | 15.61 | 192.16 | 9.57 |
| 17 | 0.8 | 14.09 | 18.27 | 257.42 | 9.87 |
| 18 | 0.51 | 7.49 | 7.74 | 57.97 | 12.07 |
| 19 | 0.81 | 12.69 | 19.04 | 241.62 | 12.3 |
| 20 | 0.83 | 17.13 | 21.57 | 369.49 | 13.47 |
| 21 | 0.78 | 14.59 | 16.88 | 246.28 | 13.83 |
| 22 | 0.45 | 5.33 | 9.26 | 49.36 | 14.17 |
| 23 | 0.52 | 6.47 | 7.99 | 51.7 | 14.2 |
| 24 | 0.55 | 10.03 | 7.61 | 76.33 | 14.33 |
| 25 | 0.76 | 12.44 | 19.29 | 239.97 | 14.57 |
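
In the preceding per-watermelon table, every Area value matches Width × Height to within two-decimal rounding, which suggests Area is the width-by-height box area in cm². A minimal Python sketch, assuming that relation, re-derives the column and counts the tracked fruits. The rows are copied from the table; the confidence cut-off `MIN_CONF` and the helper names (`Detection`, `box_area`, `count_above`) are hypothetical and not taken from the paper.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One tracked watermelon from the per-watermelon table (hypothetical container)."""
    uid: int
    confidence: float
    width_cm: float
    height_cm: float
    area_cm2: float  # Area as reported in the table
    time_s: float


# (Unique ID, Confidence, Width cm, Height cm, Area, Time s), copied from the table.
ROWS = [
    (1, 0.67, 11.68, 20.43, 238.62, 1.13),
    (2, 0.82, 17.13, 18.78, 321.7, 1.67),
    (3, 0.79, 10.91, 15.86, 173.03, 1.73),
    (4, 0.71, 8.76, 11.29, 98.9, 1.8),
    (5, 0.34, 7.36, 6.22, 45.78, 2.53),
    (6, 0.8, 16.62, 16.37, 272.07, 3.1),
    (7, 0.81, 15.86, 20.05, 317.99, 3.27),
    (8, 0.77, 17.77, 18.91, 336.03, 4.67),
    (9, 0.81, 17.13, 17.64, 302.17, 4.77),
    (10, 0.47, 9.52, 7.36, 70.07, 5.23),
    (11, 0.44, 5.46, 7.74, 42.26, 5.9),
    (12, 0.62, 6.85, 11.55, 79.12, 5.97),
    (13, 0.81, 13.07, 18.53, 242.19, 6.57),
    (14, 0.74, 12.31, 15.48, 190.56, 7.93),
    (15, 0.73, 12.18, 17.01, 207.18, 8.7),
    (16, 0.76, 12.31, 15.61, 192.16, 9.57),
    (17, 0.8, 14.09, 18.27, 257.42, 9.87),
    (18, 0.51, 7.49, 7.74, 57.97, 12.07),
    (19, 0.81, 12.69, 19.04, 241.62, 12.3),
    (20, 0.83, 17.13, 21.57, 369.49, 13.47),
    (21, 0.78, 14.59, 16.88, 246.28, 13.83),
    (22, 0.45, 5.33, 9.26, 49.36, 14.17),
    (23, 0.52, 6.47, 7.99, 51.7, 14.2),
    (24, 0.55, 10.03, 7.61, 76.33, 14.33),
    (25, 0.76, 12.44, 19.29, 239.97, 14.57),
]
DETECTIONS = [Detection(*row) for row in ROWS]

# Hypothetical confidence cut-off for counting; not a value given in the paper.
MIN_CONF = 0.5


def box_area(d: Detection) -> float:
    """Width x height of the detection box, in cm^2."""
    return d.width_cm * d.height_cm


def count_above(detections: list[Detection], min_conf: float) -> int:
    """Number of tracked watermelons whose confidence is at least min_conf."""
    return sum(1 for d in detections if d.confidence >= min_conf)


if __name__ == "__main__":
    # The reported Area agrees with width x height up to two-decimal rounding.
    for d in DETECTIONS:
        assert abs(box_area(d) - d.area_cm2) < 0.01, d.uid
    print("tracked watermelons:", len(DETECTIONS))
    print(f"with confidence >= {MIN_CONF}:", count_above(DETECTIONS, MIN_CONF))
```

With the hypothetical `MIN_CONF = 0.5`, 21 of the 25 tracked watermelons are kept (IDs 5, 10, 11 and 22 fall below it); a different cut-off would change the count accordingly.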

| Models | mAP50 | mAP50–95 | Precision | Memory |
|---|---|---|---|---|
| YOLOv8m-OBB | 0.921 | 0.685 | 0.895 | 53.4 MB |
| YOLOv8n-OBB | 0.933 | 0.694 | 0.897 | 6.2 MB |
| YOLOv8l-OBB | 0.922 | 0.702 | 0.919 | 89.6 MB |
| YOLOv8s-OBB | 0.90 | 0.685 | 0.938 | 23.5 MB |
| YOLOv8x-OBB | 0.906 | 0.701 | 0.924 | 139.7 MB |
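
Read side by side, the two model tables show that for each size the OBB variant gives up some mAP50 in exchange for a clearly higher mAP50–95. The Python sketch below computes the per-size differences (OBB minus axis-aligned) directly from the reported values. The dictionaries and the `deltas` helper are illustrative names, and the script only re-expresses the tables; it does not re-run any training or evaluation.

```python
from __future__ import annotations

# (mAP50, mAP50-95, precision) per model size, copied from the two model tables.
AXIS_ALIGNED = {
    "n": (0.95, 0.526, 0.915),
    "s": (0.951, 0.528, 0.820),
    "m": (0.946, 0.519, 0.819),
    "l": (0.964, 0.508, 0.806),
    "x": (0.961, 0.477, 0.798),
}
OBB = {
    "n": (0.933, 0.694, 0.897),
    "s": (0.90, 0.685, 0.938),
    "m": (0.921, 0.685, 0.895),
    "l": (0.922, 0.702, 0.919),
    "x": (0.906, 0.701, 0.924),
}
METRICS = ("mAP50", "mAP50-95", "precision")


def deltas(size: str) -> dict[str, float]:
    """OBB value minus axis-aligned value for each metric, rounded to 3 decimals."""
    return {
        name: round(obb - aa, 3)
        for name, aa, obb in zip(METRICS, AXIS_ALIGNED[size], OBB[size])
    }


if __name__ == "__main__":
    for size in AXIS_ALIGNED:
        diff = deltas(size)
        summary = ", ".join(f"{k} {v:+.3f}" for k, v in diff.items())
        print(f"YOLOv8{size} -> YOLOv8{size}-OBB: {summary}")
```

Running it gives mAP50–95 gains from +0.157 (s) to +0.224 (x) and mAP50 losses from 0.017 (n) to 0.055 (x); precision rises for every size except n, where it drops by 0.018.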

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farooq, M.; Chen, C.-Y.; Wang, C.-P. Advanced Machine Learning Method for Watermelon Identification and Yield Estimation. Eng. Proc. 2025, 108, 10. https://doi.org/10.3390/engproc2025108010


Chicago/Turabian Style: Farooq, Memoona, Chih-Yuan Chen, and Cheng-Pin Wang. 2025. "Advanced Machine Learning Method for Watermelon Identification and Yield Estimation" Engineering Proceedings 108, no. 1: 10. https://doi.org/10.3390/engproc2025108010
