Abstract

Real-time emotion recognition from video poses significant challenges in handling large-scale and continuously growing datasets. Traditional relational databases often fail to meet the scalability and efficiency requirements of such applications. MongoDB, a NoSQL database, offers significant advantages in scalability, speed, and data management, making it an ideal choice for video-based emotion recognition systems. This paper explores the use of MongoDB to optimize the management of video data in real-time emotion recognition, leveraging its features like sharding, indexing, and replication. We demonstrate how MongoDB’s advanced features enhance the performance and reliability of emotion recognition systems by reducing latency and processing time. Through experimental results, we show that MongoDB outperforms traditional relational databases and other NoSQL solutions in handling large datasets efficiently. Future work will explore integrating MongoDB with cloud platforms to improve scalability and incorporate advanced deep learning algorithms for better emotion recognition accuracy.

Keywords:

MongoDB; real-time emotion recognition; sharding; indexing; data management; NoSQL; video data; scalability

1. Introduction

Recognizing emotions directly from video has become an urgent need as health, security, education, and entertainment applications multiply. Imagine a doctor who can immediately tell that a patient is anxious just from facial expressions on video, or a company that can measure customer reactions to advertisements instantly. Both scenarios require fast, robust technology to manage an ever-growing volume of video data. Unfortunately, traditional databases are often overwhelmed by this challenge: they are too rigid and too slow to keep up with the speed and volume of data that modern applications require.

MongoDB, a NoSQL database, has emerged as a promising solution to this problem. Because it is fast and scales as data grow, MongoDB is a strong candidate for video-based emotion recognition systems. Research shows that sharding, which divides data across multiple servers, and indexing, which speeds up searches, make MongoDB superior to conventional relational databases and even to other NoSQL databases such as Cassandra [1]. This means that MongoDB can handle large-scale data while still supporting real-time processing, which is crucial for this type of application.

The need for a system that can handle fast video data streams is increasingly urgent. Video data keep growing, and a system without the right technology will struggle to keep up. MongoDB addresses this by splitting data across multiple servers so that no single server becomes a bottleneck, speeding up access with indexes, and keeping data available through replication even if one server fails. All of this matters for emotion recognition because a delay of even one second can make the results unreliable [2]. That is why the timing of this research, in March 2025, is apt: the need for such a solution is immediate.

One of the biggest challenges in recognizing emotions through video is the rapidly growing volume of data. Video requires a large storage capacity and fast access so that the system can work in real-time. MongoDB offers a solution with horizontal scalability through sharding, which allows data load distribution to several servers, making the system more efficient and less prone to disruption even as data grows. When the system uses optimized sharding, latency (processing time) can be reduced, allowing emotion recognition to run almost instantly. This is very important for real-time applications in the health and security fields [3].

Another advantage of MongoDB is the flexibility of its schema. Emotion recognition systems that rely on video data require a flexible data structure because video is an unstructured and dynamic format. MongoDB allows users to store data in a varied and easily changeable format. It is not bound by the rigid schemas of relational databases, which limit the ability to adapt to new data types or changing data structures. This flexibility is important because the emotions identified in video are diverse and require additional data such as timestamps, emotion labels, and confidence levels, all of which can be easily stored and accessed with MongoDB.

Not only in terms of scalability and flexibility, MongoDB also excels in terms of data replication, which makes it more reliable and secure. Server failures can disrupt data flow in real-time systems such as video emotion recognition. However, with the replication provided by MongoDB, stored data remains available even if technical problems occur on a particular server. This provides the much-needed reliability guarantee in applications that rely heavily on speed and accuracy, such as emotion recognition systems in streaming video [4].

This approach also reduces expensive processing costs because MongoDB can manage data more efficiently and supports handling large amounts of data. MongoDB can handle ever-growing amounts of data without slowing down the system by using sharding to divide workloads and indexing to speed up data searches. This is important for video-based emotion recognition and other applications involving large volumes of real-time data, such as in social media analysis, surveillance, or consumer interaction [5].

As ongoing research, this study shows that MongoDB provides a significant advantage in video data management for emotion recognition applications, overcoming the major challenges that traditional database technologies face. We hope that this research can pave the way for further development of real-time technologies that require low-latency data processing and analysis. In the future, we plan to explore the integration of MongoDB with cloud platforms to increase scalability and deliver more efficient solutions for managing large-scale video data, enabling wider implementation in fields such as medicine, security, and business analysis [6].

2. Related Work

Emotion recognition typically uses deep learning techniques such as Convolutional Neural Networks (CNN) for feature extraction and classification [7]. Previous research has shown that CNNs are effective in identifying emotions from facial expressions and other visual cues in videos. Huang et al. explored the robustness of emotion recognition systems under environmental noise conditions and showed that CNNs can maintain high accuracy even with noise [8]. Zhang et al. introduced reinforcement learning and domain knowledge into a real-time emotion recognition system, highlighting significant improvements in classification performance and response time [9].

Relational databases face limitations in managing large and dynamic datasets due to their rigid schema and lack of scalability. NoSQL databases such as Cassandra and Redis address some of these challenges through a distributed architecture. MongoDB’s flexible schema and advanced features provide additional benefits. Xiang et al. show that MongoDB’s flattened R-tree model effectively manages planar spatial data, offering better efficiency in big data environments. Patil et al. showed that MongoDB outperforms MySQL in insertion and retrieval operations while supporting sharding for better load balancing [10].

MongoDB has been used in various real-time applications, including video analysis and personalized content delivery. Eyada et al. showed that MongoDB is more efficient than MySQL for managing IoT data, especially in terms of latency and scalability [11]. Mehmood and Anees highlighted MongoDB’s capabilities in semi-stream processing for real-time data storage, demonstrating its ability to efficiently handle high-speed and high-volume data [12]. These findings underscore MongoDB’s suitability for real-time systems that require low latency and high throughput.

3. Methodology

The proposed system integrates MongoDB to manage data streams from video feeds in real-time and employs deep learning models to recognize emotions based on facial expressions in videos. This process involves several stages, from emotion feature extraction to efficient data processing and storage [13].

3.1. Video Processing and Emotion Feature Extraction

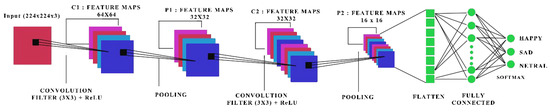

A Convolutional Neural Network (CNN) model is used to extract emotional features from video frames. CNN was chosen for its ability to identify visual patterns, such as facial expressions, that show emotions. Each video frame is analyzed by the CNN to detect and classify the emotions that appear, such as happy, sad, angry, fear, and others [14]. The overall architecture of the CNN model is illustrated in Figure 1.

Figure 1.

CNN Architecture.

- Input Layer: Each video frame, at 224 × 224 pixels with 3 color channels (RGB), is converted into an input tensor of dimensions 224 × 224 × 3.

- Convolutional Layers: CNNs have multiple convolutional layers that serve to extract spatial features from images. The first layer (Conv1) has 64 filters with a 3 × 3 kernel to capture simple patterns, followed by a pooling layer to reduce dimensionality [15].

- Hidden Layers (Fully Connected Layers): After feature extraction, the results of the convolution layer are processed through multiple fully connected layers that connect all neurons to make emotion predictions.

- Output Layer: The output layer has as many neurons as there are emotion classes (e.g., seven classes: happy, angry, sad, scared, surprised, disgusted, and neutral). A Softmax activation function is used to generate probabilities for each detected emotion class.
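The Softmax step of the output layer can be illustrated with a minimal, framework-free sketch. The class order, the logit values, and the helper names `softmax` and `predict` are assumptions made for illustration; they are not taken from the trained model described here.

```python
import math

# Seven emotion classes named in the text; their order here is an assumption.
EMOTIONS = ["happy", "angry", "sad", "scared", "surprised", "disgusted", "neutral"]

def softmax(logits):
    """Convert raw output-layer scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(logits):
    """Return (label, confidence) for the most probable emotion class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]

# Hypothetical logits for a single frame (illustrative values only).
label, confidence = predict([4.0, 0.5, 0.1, -1.0, 0.3, -0.5, 1.2])
```

The probability of the winning class is what the rest of the pipeline would store as the confidence score for that frame.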

3.2. Data Storage and Management Using MongoDB

Once the emotion features are extracted, the metadata, including emotion labels, timestamps, and confidence scores, are stored in MongoDB for further analysis. MongoDB was chosen for its ability to handle big data with a flexible schema that can be adapted to different types of data.

MongoDB collections store metadata such as the following:

- Timestamp: The time at which each frame was analyzed, recorded for easy tracking.

- Emotion label: The emotion detected in the frame.

- Confidence score: A score that indicates the model’s level of confidence in the recognized emotion.
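As a rough sketch, one per-frame metadata record with these three fields could be assembled as a plain Python dictionary before being handed to a database driver. The function name `make_emotion_document` and the field names are assumptions for illustration; the sample values are the "sad" example reported later in Section 4.1.

```python
from datetime import datetime, timezone

def make_emotion_document(emotion, confidence, analyzed_at=None):
    """Build one per-frame metadata document (field names are assumed)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    if analyzed_at is None:
        analyzed_at = datetime.now(timezone.utc)  # default: time of analysis
    return {
        "emotion": emotion,
        "confidence": float(confidence),
        "timestamp": analyzed_at,
    }

doc = make_emotion_document(
    "sad",
    0.95279,
    datetime(2025, 1, 13, 13, 23, 4, 726000, tzinfo=timezone.utc),
)
```

With a driver such as PyMongo, a dictionary like this could be passed to `insert_one`; that call is omitted here because it requires a running server.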

3.3. System Scalability and Performance with MongoDB

To handle the high-speed data coming from the real-time video stream, this system implements sharding and indexing on MongoDB [16]:

- Sharding distributes data across multiple servers, enabling horizontal scalability to manage increasingly large data loads. It also reduces processing latency and optimizes load balancing.

- Indexing is used on emotion labels and timestamps to speed up data retrieval, so that processing can be performed efficiently without any delays.
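The idea behind distributing documents across shards can be sketched conceptually as follows. This is a simplified stand-in, not MongoDB's mechanism: MongoDB's hashed sharding uses its own hash function and assigns ranges of hash values to chunks, whereas this sketch uses SHA-256 and a modulo. The shard count and the `frame-<n>` keys are hypothetical.

```python
import hashlib
from collections import Counter

def shard_for(key, num_shards):
    """Map a shard-key value to a shard index using a stable hash."""
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

# Route 10,000 hypothetical frame identifiers across 4 hypothetical shards.
counts = Counter(shard_for(f"frame-{i}", 4) for i in range(10_000))
```

Because the hash spreads keys roughly uniformly, each shard receives a similar share of the documents, which is the load-balancing effect described above.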

3.4. Real-Time Processing and Data Capture

To ensure minimal latency, a stream processing framework is used to process the video directly. MongoDB manages data streams at high speed, allowing emotion analysis to be performed in real-time [17]:

- Replication in MongoDB ensures data redundancy and system reliability. In the event of a failure on one server, data remains available on other servers without significant disruption.

- Real-time processing allows the system to provide emotion analysis results immediately, so it is suitable for applications that require instant processing such as emotion recognition in streaming videos.
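The availability benefit of replication can be pictured with a toy model. It is deliberately simplified and is an assumption-laden illustration only: a real MongoDB replica set has a single primary that accepts writes, secondaries that replicate asynchronously from the oplog, and an election when the primary fails, none of which is modeled here. The class and node names are hypothetical.

```python
class ReplicaSetSketch:
    """Toy model: every write is copied to all healthy members."""

    def __init__(self, member_names):
        self.data = {name: [] for name in member_names}
        self.healthy = set(member_names)

    def write(self, document):
        # Copy the document to every member that is currently healthy.
        for name in self.healthy:
            self.data[name].append(document)

    def fail(self, name):
        # Mark a member as unavailable.
        self.healthy.discard(name)

    def read_all(self):
        """Read from any healthy member; raise if none remain."""
        if not self.healthy:
            raise RuntimeError("no healthy members available")
        name = sorted(self.healthy)[0]
        return list(self.data[name])

rs = ReplicaSetSketch(["node-a", "node-b", "node-c"])
rs.write({"emotion": "neutral", "confidence": 0.85447})
rs.fail("node-a")          # simulate a server failure
surviving = rs.read_all()  # the document is still served by another member
```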

3.5. Performance Testing and Evaluation

After the implementation of the system, experiments were conducted to evaluate the accuracy of the system in recognizing emotions, as well as the processing speed and latency of data retrieval. The experiments used an annotated video dataset, and the test results show that MongoDB provides better performance in terms of the following:

- Emotion recognition accuracy.

- Processing speed per frame.

- Data retrieval latency, compared to SQL and some other NoSQL databases.

The experimental results of the performance comparison between MongoDB and other databases are summarized in Table 1.

Table 1.

MongoDB Comparison.

These results demonstrate the advantages of MongoDB in managing real-time emotion recognition applications that require fast processing and efficient data retrieval [18].

Our proposed system utilizes MongoDB to manage real-time video data streams, an increasingly urgent need given the demand for instant emotion analysis. We use a deep learning model based on a Convolutional Neural Network (CNN) to recognize emotions from facial expressions in video, from happy smiles to annoyed frowns. What distinguishes this approach is the way we optimize MongoDB with sharding and indexing specifically for video data, which has been explored far less than static data or IoT sensor data. This is a new step toward addressing the challenge of fast and efficient video management.

The process begins by breaking the video into frames, which are then analyzed by the CNN to capture emotional patterns such as a smile or eyes widening in surprise. The CNN consists of an input layer that accepts 224 × 224 pixel frames, convolutional layers with 64 filters that detect simple patterns, and fully connected layers that predict the emotion (for example, “happy” or “scared”), using the Softmax function to assign a probability to each class. The results (emotion labels, confidence scores, and the time the frame was taken) are stored directly in MongoDB in a flexible collection, so they are easy to organize even if the data take different forms [19].

To keep the system robust as data accumulate, we use sharding to distribute the data evenly across many servers, reducing the load on each and keeping latency low, which is essential in real-time processing. We then index the emotion label and timestamp fields so that data can be searched quickly without overloading the system. We also add replication to ensure that the data remain accessible if a server fails. This approach differs from the usual one because we focus on video rather than only text or numbers, which we consider a significant step for applications that need speed and scalability.

After preparing everything, we tested this system using a video dataset annotated with emotion labels. We measured how accurately the CNN predicts emotions, how fast the per-frame processing is, and how low the latency is when retrieving data from MongoDB. The results were compared with other databases, both SQL and other NoSQL systems, to show that our method is practical for urgent needs such as recognizing emotions in streaming video.

4. Implementation

The system utilizes the following:

- Hardware: A workstation equipped with an Intel Core i7-11700K CPU (3.6 GHz, 8 cores), 32 GB RAM, NVIDIA GeForce RTX 3060 GPU (12 GB VRAM), and 1 TB SSD was used for video processing.

- Software: MongoDB v6.0 (MongoDB Inc., New York, NY, USA) was used for data storage, and TensorFlow v2.12 (Google LLC, Mountain View, CA, USA) was used for emotion recognition. Zhang et al. demonstrated the effective integration of machine learning models with MongoDB for managing real-time video data [20].

4.1. MongoDB Database Schema

An example document in the MongoDB collection is shown in Figure 2.

Figure 2.

MongoDB Database Schema: Happy, Sad, and Neutral.

The MongoDB schema is designed to store emotion recognition data efficiently and with flexibility, which is particularly useful for handling both structured and unstructured data. This flexibility is crucial for the diverse nature of video data and emotional labels:

- _id: The _id field stores the unique identifier for each document in MongoDB. The identifier is generated automatically in the ObjectId format, which ensures that every document is uniquely identifiable within its collection. This is illustrated by the entries in the provided image, where each ObjectId is displayed as a 24-character hexadecimal string: 678513b82d762fdca2f2e170 for the first document, 678513b82d762fdca2f2d3b9 for the second one, and so on. This field guarantees uniqueness no matter how many documents are stored in the same collection.

- Emotion: The emotion field captures the emotion label detected by the CNN model from the video. In the first example image, the emotion detected is “sad”, the second image detects “neutral”, and the previously mentioned example (not shown in these images) detected “happy”. This field allows the system to classify emotions such as happiness, sadness, fear, anger, etc., based on facial expressions or other cues in the video data:

- Example for Sad: The emotion is labeled as “sad” with a confidence score of 0.95279.

- Example for Neutral: The emotion is labeled as “neutral” with a confidence score of 0.85447.

- The previously discussed happy label had a confidence score of 0.99999, indicating very high confidence in detecting that emotion.

- Confidence: The confidence field stores a value that represents how certain the model is about the detected emotion. This is a floating-point number that ranges between 0 and 1, with higher values indicating higher confidence in the accuracy of the detected emotion. Take the following for example:

- For “sad”, the confidence score is 0.95279, indicating a fairly high level of certainty.

- For “neutral”, the confidence score is 0.85447, still reasonably high but lower than “sad.”

- The happy emotion previously showed a confidence score of 0.999995, signifying near-perfect certainty in its detection.

- Timestamp: The timestamp field stores the time when the video frame was analyzed in ISODate format, which is crucial for tracking and referencing when the emotion is detected. This timestamp helps to align the emotion data with specific moments in the video, enabling precise real-time processing:

- For “sad”, the timestamp is recorded as “2025-01-13T13:23:04.726Z”.

- For “neutral”, the timestamp is recorded as “2025-01-13T13:23:04.580Z”.

- The timestamp for happy would be recorded similarly, ensuring temporal alignment with the respective video frame.

By maintaining these fields—_id, emotion, confidence, and timestamp—MongoDB allows efficient storage and retrieval of emotion recognition data, ensuring that the system can handle large datasets and provide real-time analysis, which is essential for applications in fields like healthcare, security, and customer experience.
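One detail worth illustrating is that an ObjectId itself carries a creation time: its first four bytes are a big-endian Unix timestamp in seconds. The following sketch decodes that time using only the standard library; the helper name `objectid_creation_time` is an assumption for illustration.

```python
from datetime import datetime, timezone

def objectid_creation_time(oid_hex):
    """Decode the creation time stored in the first 4 bytes of an ObjectId."""
    if len(oid_hex) != 24:
        raise ValueError("an ObjectId is written as 24 hexadecimal characters")
    seconds = int(oid_hex[:8], 16)  # big-endian Unix timestamp in seconds
    return datetime.fromtimestamp(seconds, tz=timezone.utc)

# First identifier quoted in the _id description above.
created = objectid_creation_time("678513b82d762fdca2f2e170")
```

For the identifier quoted above, this decodes to 2025-01-13 13:23:04 UTC, which agrees to the second with the timestamps recorded in the example documents.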

4.2. Experiment Setup

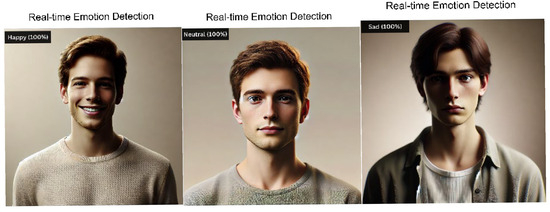

Experiments were conducted using an annotated video clip dataset, measuring MongoDB’s performance in terms of processing speed, data retrieval latency, and system scalability. Patil et al. showed that MongoDB’s architecture enables superior performance in real-time processing scenarios compared to traditional relational databases [21]. The experimental results of emotion recognition for happy, sad, and neutral are presented in Figure 3.

Figure 3.

Experimental image results for happy, sad, and neutral.

This figure illustrates a real-time emotion recognition system that successfully detects and processes these emotions from video data. The system is powered by MongoDB, which plays a crucial role in managing and processing large-scale video data in real time. MongoDB’s capabilities are demonstrated in this experiment through its ability to handle dynamic and high-speed data for accurate emotion detection, ensuring high efficiency and scalability.

The system detects each of the three emotions with high confidence. The “Happy” emotion is identified with a confidence score that rounds to 100%, which indicates that the model is highly certain of this prediction and supports its use in real-time applications. Similarly, the system detects the “Sad” emotion with a confidence score of 0.95279, showing that it can reliably recognize emotions like sadness with a high degree of certainty. The “Neutral” emotion is detected with a confidence score of 0.85447, demonstrating that the system can also identify when there is no strong emotional expression. This ability to capture subtle emotional cues, alongside more obvious emotions, makes the system highly robust. MongoDB’s flexible schema enhances these detection capabilities by allowing the system to efficiently store and manage data for each emotion label (happy, sad, and neutral), making it particularly useful in real-world applications like healthcare or entertainment, where understanding a wide range of emotional expressions is critical.

The system also processes video frames very quickly for all detected emotions. For example, the “Happy” emotion is processed with the highest efficiency, providing immediate feedback as soon as the emotion is detected. The system is similarly quick in processing the “Sad” and “Neutral” emotions, ensuring that it remains responsive and delivers real-time updates without delays. This speed is facilitated by MongoDB’s sharding and indexing capabilities, which enable the system to retrieve and process video frames rapidly, regardless of whether the emotion detected is happy, sad, or neutral. These features ensure that the system can operate effectively in environments where real-time feedback is crucial, such as customer service applications or video conferencing.

In terms of data capture latency, the system achieves extremely low latency for all emotions. Whether detecting “Happy,” “Sad,” or “Neutral” emotions, the system detects and provides feedback in real time, ensuring minimal delay for users. MongoDB’s horizontal scalability across multiple servers, facilitated by sharding and replication, helps distribute the data load evenly. This greatly reduces the time needed for data retrieval and processing, minimizing latency and ensuring that the system can quickly capture and report emotions in live video feeds or during interactions.

System scalability is one of MongoDB’s standout features. As the volume of video data grows, MongoDB allows the system to scale seamlessly, accommodating more frames and users regardless of whether the emotions detected are happy, sad, or neutral. The use of sharding helps distribute video data across multiple servers, ensuring that the system remains efficient and does not become overwhelmed, even when more users interact with the system or as more video frames are processed. This scalability makes MongoDB an ideal choice for real-time emotion recognition systems that need to handle large, increasing datasets while maintaining high performance and reliability [22].

MongoDB’s replication ensures data redundancy and system reliability, which is essential in high-demand applications where system downtime could lead to significant delays in emotion recognition. Combined with sharding, this reliability allows the system to be deployed in various large-scale environments, such as global video streaming platforms or enterprise-level customer service applications.

In this study, data availability and ethical considerations are of utmost importance to ensure that the research adheres to high standards of transparency, accountability, and respect for participant privacy.

Data Availability: The datasets utilized in this study will be made available to the public, contingent upon an appropriate request. This approach guarantees that the research is conducted in compliance with relevant ethical standards, promoting transparency and encouraging further investigation into the findings. By allowing access to the data, the study fosters an environment where other researchers can build upon this work, validate results, or explore new research avenues. Interested parties can obtain the datasets by following the prescribed procedures, ensuring that further studies can leverage the rich dataset collected during this research to enhance the understanding of emotion recognition technologies.

Ethical Considerations: In line with the ethical guidelines governing research practices, all video data used in this study have been obtained through proper consent procedures. The privacy and confidentiality of participants’ personal information have been safeguarded throughout the data collection process. The study adhered to strict ethical standards to ensure that the participants’ data were handled responsibly and that their rights were respected. Furthermore, each video used in the analysis was collected with the explicit permission of the individuals involved, thereby addressing potential concerns regarding the misuse of the data. This careful process ensures that the research upholds ethical integrity and prioritizes the protection of personal data. In this way, the study not only contributes valuable insights into real-time emotion recognition systems but also demonstrates a commitment to ethical research practices, as outlined in the relevant research guidelines, that prioritize participant rights and data security.

The cross-validation method was used to verify the accuracy of the emotion recognition model used in this study. This technique yields more reliable accuracy estimates and helps detect overfitting, making the resulting model more dependable for real-world applications [23]. Cross-validation increases confidence in the model by splitting the data into multiple subsets and testing accuracy on each in turn, thus providing a more thorough evaluation of the system’s performance [24].
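The splitting step of cross-validation can be sketched as below. The study does not state the number of folds or whether the data were shuffled, so the value k = 5, the contiguous fold layout, and the function name `k_fold_indices` are assumptions for illustration only.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size

# Hypothetical example: 10 samples split into 5 folds.
folds = list(k_fold_indices(10, 5))
```

Each sample appears in exactly one test fold, so every part of the data is used once for evaluation and k − 1 times for training. With video data, one would usually also keep frames from the same clip in the same fold to avoid leakage; that grouping is not shown here.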

The dataset used in this research consists of annotated video clips used for training Convolutional Neural Networks (CNN)-based emotion recognition models. Each video clip contains facial frames that show various expressions of human emotions, such as smiles, frowns, and other facial expressions related to emotions. This dataset is essential for training the model to classify emotions with high accuracy based on the facial expressions in the video.

4.3. Data Type

The dataset used in this study plays a crucial role in the real-time emotion recognition system, where each video clip is processed and analyzed to detect emotional expressions accurately. The data are organized into several key components:

- Video Frames: Each video clip is divided into multiple image frames, with each frame serving as input for the Convolutional Neural Network (CNN) model. These frames are carefully analyzed to extract visual features related to emotional expressions, which are essential for accurate emotion detection.

- Emotion Labels: Every video frame is labeled with the corresponding emotion detected by the model, such as “happy,” “sad,” and “angry”. These labels represent the classification targets of the model, helping it identify specific emotional states from the facial expressions captured in the frames.

- Confidence Score: Along with the emotion label, the model generates a confidence score for each prediction, indicating how certain the model is about the accuracy of its emotion detection. This score plays a vital role in assessing the reliability of the model’s predictions.

- Timestamp: Each video frame is also paired with a timestamp, marking the precise moment when the frame was captured.

This timestamp is essential for real-time processing, ensuring that the emotional responses are aligned with the correct time in the video, which is important for applications requiring real-time feedback.

The dataset consists of thousands of video clips, containing hundreds of thousands of frames, which allows for the effective training of the emotion recognition model. This dynamic dataset grows continuously with new data collection, ensuring that the model improves and adapts over time to a wider variety of emotional expressions and video conditions.

To process this large dataset in real-time, each video frame is fed into the CNN model, which extracts the relevant emotional features. Once processed, the metadata associated with each frame, including emotion labels, confidence scores, and timestamps, is stored in MongoDB for further analysis. MongoDB is utilized to manage this metadata, enabling efficient and fast data retrieval during processing. The system benefits from MongoDB’s advanced features, such as sharding, indexing, and replication, which ensure that the data are handled efficiently even as the volume continues to grow.

The dataset directly supports the real-time emotion recognition application, where each detected emotion is immediately categorized and stored in MongoDB. This allows for quick access and processing, which is essential for real-time use cases such as live video streaming or interactive applications. By leveraging MongoDB’s capabilities, the system efficiently manages the increasing volume of data, ensuring smooth operation even as the dataset expands.

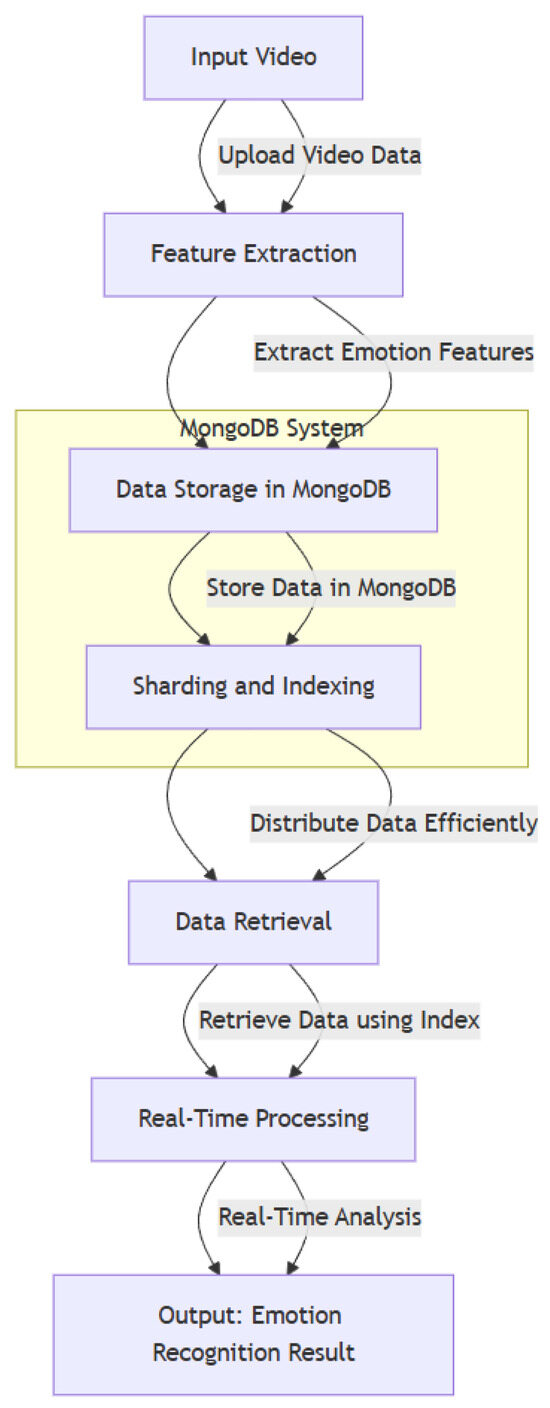

4.4. Real-Time Video Data Management System Architecture for Emotion Recognition Using MongoDB

The overall architecture of the proposed real-time video data management system for emotion recognition using MongoDB is illustrated in Figure 4.

Figure 4.

Real-Time Video Data Management System.

The process starts with video input, where videos containing image data are fed into the system for analysis. Once the video is uploaded, the next step is feature extraction, which involves the process of retrieving important information from each frame of the video, such as facial expressions or body movements that reflect emotions. These extracted features are then stored in MongoDB, which is a NoSQL database that allows data storage in flexible formats and supports horizontal scalability.

Once the data are stored, the system uses sharding and indexing in MongoDB. Sharding distributes data across multiple servers, which improves the system’s ability to handle large amounts of data without degrading performance. Meanwhile, indexing speeds up the data retrieval process, ensuring that the system can access the required data quickly. At a later stage, the required data are retrieved using indexed queries, which keeps retrieval fast and efficient.
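Why an index on emotion label and timestamp speeds up retrieval can be pictured with a small in-memory analogue. MongoDB indexes are B-tree based; the sorted list with binary search used here is a simplified stand-in, and the class name, numeric timestamps, and document identifiers are hypothetical.

```python
import bisect
from collections import defaultdict

class EmotionTimeIndex:
    """Toy index: emotion label -> sorted list of (timestamp, doc_id)."""

    def __init__(self):
        self._entries = defaultdict(list)

    def add(self, emotion, timestamp, doc_id):
        # Keep each per-emotion list sorted by timestamp on insertion.
        bisect.insort(self._entries[emotion], (timestamp, doc_id))

    def find(self, emotion, start, end):
        """Return doc ids for `emotion` with start <= timestamp < end."""
        entries = self._entries.get(emotion, [])
        lo = bisect.bisect_left(entries, (start,))
        hi = bisect.bisect_left(entries, (end,))
        return [doc_id for _, doc_id in entries[lo:hi]]

idx = EmotionTimeIndex()
idx.add("sad", 10.0, "f1")
idx.add("happy", 11.0, "f2")
idx.add("sad", 12.5, "f3")
idx.add("sad", 20.0, "f4")
hits = idx.find("sad", 10.0, 15.0)
```

The lookup touches only the entries for the requested emotion and locates the time window by binary search, rather than scanning every stored document.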

Once the data are retrieved, the system proceeds with real-time processing, where the data are analyzed to identify the emotions contained in the video. This process provides emotion analysis results immediately and without delay. Finally, the emotion recognition results are displayed as output, showing the emotions detected in the video based on the analysis performed.

By using MongoDB to manage and process video data, the system can handle large data volumes and provide fast and efficient emotion analysis, which is crucial in real-time video-based emotion recognition applications.

5. Results and Discussion

The overall performance results of the proposed system are summarized in Table 2, based on several different metrics including accuracy, processing time per frame, and data retrieval latency.

Table 2.

Performance Result.

From the above table, it can be seen that MongoDB shows higher accuracy, lower processing time per frame, and lower data retrieval latency compared to SQL and other NoSQL databases. This demonstrates MongoDB’s superiority in handling real-time video-based emotion recognition applications, where speed and efficiency are critical [24].

The results show that MongoDB has better performance compared to SQL and other NoSQL systems. MongoDB’s sharding and indexing mechanisms significantly reduce latency and processing time. Previous research shows that sharding enables efficient data distribution across multiple servers, while indexing speeds up data access time which is critical for applications with real-time requirements such as emotion recognition systems [25].

Optimizations to MongoDB’s sharding and indexing enhance real-time data processing capabilities, making it an ideal choice for video-based emotion recognition applications. Sharding helps distribute data evenly across multiple servers, reducing the load on a single server and ensuring good horizontal scalability. In addition, indexing enables fast data search, which is indispensable in applications that require quick access to large amounts of data. Experimental results show that these features make MongoDB more efficient compared to other databases in real-time video data management [26].

Although MongoDB has many advantages, its implementation poses some challenges, especially in managing sharding configurations and controlling latency under highly concurrent workloads. Previous research has also shown that managing an efficient sharding configuration can be complex, and that latency can remain an issue in scenarios with many concurrent requests despite significant improvements [27]. Therefore, although MongoDB showed excellent results in this test, there is still room to improve load management on very busy systems.

The formulas for accuracy, processing time, and data retrieval latency are explained in Sections 5.1, 5.2 and 5.3.

5.1. Accuracy (%)

- Accuracy is measured based on how well the model identifies or predicts emotions from the video data.

- The accuracy calculation is performed by comparing the predicted results of the model (as produced by the emotion recognition system) with the correct label or ground truth.

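- The general formula for accuracy is as follows:

$$\text{Accuracy} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}} \times 100\%$$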

- If the model correctly predicts 92 out of 100 video frames, then the accuracy is 92%.
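The calculation described in this subsection can be sketched in Python as follows. This is a minimal illustration and not the evaluation code used in the study; the function name `accuracy_percent` and the label strings are hypothetical.

```python
from typing import Sequence


def accuracy_percent(predicted: Sequence[str], ground_truth: Sequence[str]) -> float:
    """Return the percentage of frames whose predicted label matches the ground truth."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("at least one labelled frame is required")
    # Count the frames where the prediction equals the ground-truth label.
    correct = sum(p == t for p, t in zip(predicted, ground_truth))
    return 100.0 * correct / len(ground_truth)


# Hypothetical example mirroring the text: 92 of 100 frames predicted correctly.
truth = ["happy"] * 100
preds = ["happy"] * 92 + ["sad"] * 8
print(accuracy_percent(preds, truth))  # 92.0
```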

5.2. Processing Time (ms/Frame)

- Processing time measures the time the system takes to process one video frame when backed by a given database (in this case MongoDB, SQL, or another NoSQL database).

- To measure this, we can record the time taken to process a certain number of video frames and then calculate the average processing time per frame.

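- The formula used is as follows:

$$\text{Processing time per frame} = \frac{\text{Total processing time}}{\text{Number of frames processed}}$$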

- If the system processes 1000 frames in 15 s, then the processing time per frame is 15 ms/frame.
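A measurement of this kind might be sketched in Python as shown below. The helper names `mean_processing_time_ms` and `per_frame_ms` are hypothetical, and the `process` callable stands in for whatever per-frame work (storage, inference) a real pipeline performs; the study does not specify its timing code.

```python
import time
from typing import Callable, Iterable


def mean_processing_time_ms(frames: Iterable[object],
                            process: Callable[[object], object]) -> float:
    """Average wall-clock time per frame, in milliseconds."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        process(frame)  # placeholder for the real per-frame work
        count += 1
    elapsed_s = time.perf_counter() - start
    if count == 0:
        raise ValueError("no frames were processed")
    return elapsed_s * 1000.0 / count


def per_frame_ms(total_seconds: float, frame_count: int) -> float:
    """Convert a total duration and a frame count into ms/frame."""
    return total_seconds * 1000.0 / frame_count


# The worked example from the text: 1000 frames in 15 s.
print(per_frame_ms(15.0, 1000))  # 15.0
```

Using a monotonic clock such as `time.perf_counter` avoids distortions from system clock adjustments during the measurement.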

5.3. Data Retrieval Latency (ms)

- Data Retrieval Latency measures the time taken by the system to retrieve data (for example, video frames or emotion recognition results) from the database.

- To measure this, we record the time taken to retrieve data from the database during query execution or when data are accessed.

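- The formula for latency is as follows:

$$\text{Latency} = t_{\text{response}} - t_{\text{request}}$$

where $t_{\text{request}}$ is the time at which the query is issued and $t_{\text{response}}$ is the time at which the data are returned.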

- If the time taken to access the video frame data is 25 ms, then the latency is 25 ms.
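The measurement procedure described in this subsection could be sketched as follows. This is an illustrative assumption rather than the study's benchmark code: `measure_latencies_ms` is a hypothetical helper, and `fake_query` merely simulates a database read with a short sleep so that the example runs without a database server.

```python
import statistics
import time
from typing import Callable, List


def measure_latencies_ms(query: Callable[[], object], repeats: int = 20) -> List[float]:
    """Time each call to `query` and return the latencies in milliseconds."""
    latencies = []
    for _ in range(repeats):
        start = time.perf_counter()  # moment the request is issued
        query()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def fake_query() -> dict:
    """Stand-in for a real database read (hypothetical document shape)."""
    time.sleep(0.002)
    return {"frame_id": 1, "emotion": "happy"}


samples = measure_latencies_ms(fake_query, repeats=5)
print(f"mean latency: {statistics.mean(samples):.1f} ms")
```

Reporting the mean over several repetitions, or a high percentile, is usually more informative than a single reading, since individual queries can vary with caching and load.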

The differences in results can be explained as follows:

- MongoDB performs better because it combines sharding, which divides data across multiple servers, with indexing, which speeds up data retrieval.

- SQL is slower in data processing and retrieval because it is based on a more rigid data model and lacks sharding and indexing mechanisms optimized for big data management.

- Other NoSQL databases also outperform SQL in some respects, but they may not be as efficient as MongoDB in managing large data volumes with real-time processing.

5.4. MongoDB Performance Comparison Table with Other Databases in Various Aspects of Data Processing

A comparison of MongoDB with other databases across several aspects of data processing, drawn from five previous studies, is presented in Table 3.

Table 3. Comparison of MongoDB performance with other databases.

Aspects to Compare:

- Operational Performance: Data processing speed (such as insertion, retrieval, and query execution operations). MongoDB shows an advantage with sharding that improves the efficiency of big data processing.

- Scalability: The ability to handle horizontal data growth, with sharding allowing MongoDB to manage very large volumes of data.

- Storage Efficiency: How MongoDB optimizes storage space for large datasets, as well as the overhead that may arise compared to more rigid relational databases like MySQL.

- Real-Time Support: MongoDB’s efficiency in real-time data processing, compared to other databases in the context of semi-stream processing or streaming data.

- Schema Flexibility: MongoDB’s flexible schema advantage allows for unstructured data management, compared to databases that require a rigid schema structure such as MySQL.

This table provides a clear overview of the comparison between MongoDB and other databases, as well as the different aspects tested in each study.

The contribution of this article lies in its focus on optimizing MongoDB for real-time emotion recognition applications in video data processing. Previous studies have mostly compared MongoDB with other databases in the context of traditional data management or IoT. This research brings MongoDB into a more complex context, namely the management of large video data that requires real-time processing. By using MongoDB to support deep learning in video analysis, this study adds depth on how MongoDB can efficiently manage streaming video and multimedia data, which is a more demanding application than managing text- or number-based data [29].

In the development and implementation of a video-based emotion recognition system using MongoDB, there are several challenges to be faced, especially related to sharding configuration and performance constraints on big data. These challenges mainly arise when the system has to handle high-speed data and very large data volumes, which are characteristic of real-time emotion recognition applications [30].

Sharding is an important technique in MongoDB that partitions data across multiple servers to improve horizontal scalability. However, configuring sharding efficiently can be very complex, especially in applications with large data volumes and uneven data distribution. Choosing the right shard key is crucial to ensure that data are balanced across shards. A poorly chosen shard key can cause “hot spots” (high load on certain shards) or load imbalance, which can degrade overall system performance.
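The effect of shard key choice on hot spots can be illustrated with a small simulation. The sketch below is a simplified model under stated assumptions, not MongoDB's actual routing: the shard count, the range boundaries, and the use of MD5 as a stand-in hash are all hypothetical, and the chunk splitting and migration that a real cluster's balancer performs are ignored. It contrasts ranged placement on a monotonically increasing timestamp with hashed placement on the same field.

```python
import hashlib
from collections import Counter
from typing import Sequence

NUM_SHARDS = 4  # hypothetical cluster size


def range_shard(timestamp: int, boundaries: Sequence[int]) -> int:
    """Ranged placement: pick the shard whose key range contains the value."""
    for shard, upper in enumerate(boundaries):
        if timestamp < upper:
            return shard
    return len(boundaries)  # values above every boundary land on the last shard


def hashed_shard(timestamp: int, num_shards: int = NUM_SHARDS) -> int:
    """Hashed placement: a hash of the key decides the shard (MD5 as a stand-in)."""
    digest = hashlib.md5(str(timestamp).encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Boundaries fitted to historical keys 0..2999; new frames arrive with larger keys.
boundaries = [1000, 2000, 3000]
new_timestamps = range(3000, 4000)

ranged = Counter(range_shard(t, boundaries) for t in new_timestamps)
hashed = Counter(hashed_shard(t) for t in new_timestamps)

print("ranged:", dict(ranged))  # every new insert lands on shard 3
print("hashed:", dict(sorted(hashed.items())))  # inserts spread over all shards
```

In this model, all new writes under the ranged key go to a single shard, which is the hot-spot pattern described above, while the hashed key spreads them across shards at the cost of making timestamp range queries touch every shard.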

In their paper, Kang et al. [28] highlighted that although sharding allows MongoDB to handle high-speed data, misconfiguration can lead to high latency. Therefore, effective management of sharding is one of the key challenges in ensuring that system performance remains optimal despite growing data volumes.

Maintaining low latency during data capture and processing is a significant challenge in real-time emotion recognition applications. Fast and efficient data capture is essential to provide instant emotion analysis. However, as the volume of video data stored and processed increases, the latency of data capture can increase, slowing the delivery of emotion detection results.

Patil et al. [18] explained that indexing on emotion labels and timestamps can reduce latency and speed up query response time. Nonetheless, in environments with massive data, optimal index management is still a challenge to keep response times low.
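Why an index on emotion label and timestamp shortens query time can be shown with a small in-memory model. The sketch below does not use MongoDB: a sorted list of tuples stands in for a compound index on (label, timestamp), and binary search replaces a full scan. The record layout and label set are hypothetical. In MongoDB itself, the corresponding step would be to create a compound index on the two fields, for example through PyMongo's `create_index`, with field names that depend on the collection schema.

```python
import bisect
import random

random.seed(0)
LABELS = ["angry", "happy", "neutral", "sad"]

# Hypothetical records: (emotion label, timestamp in ms, frame id).
records = [(random.choice(LABELS), random.randrange(1_000_000), i)
           for i in range(50_000)]


def scan(label, t_start, t_end):
    """Unindexed lookup: every record is examined."""
    return sorted(r for r in records
                  if r[0] == label and t_start <= r[1] < t_end)


# A sorted copy plays the role of a compound index on (label, timestamp).
index = sorted(records)


def indexed(label, t_start, t_end):
    """Indexed lookup: two binary searches bound the matching slice."""
    lo = bisect.bisect_left(index, (label, t_start))
    hi = bisect.bisect_left(index, (label, t_end))
    return index[lo:hi]


# Both strategies return the same rows; only the work done differs.
assert indexed("happy", 100_000, 200_000) == scan("happy", 100_000, 200_000)
print(len(indexed("happy", 100_000, 200_000)), "matching frames")
```

The scan touches all 50,000 records for each query, whereas the indexed lookup needs only two binary searches plus the size of the result. The trade-off, as the text notes, is that the index must be maintained as data grow.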

Video-based emotion recognition systems using MongoDB also face challenges related to scalability. The growing volume of video data requires massive storage and fast data processing. MongoDB, with its sharding and replication capabilities, provides a solution to these scalability issues, but challenges remain in optimizing data distribution and ensuring system reliability as data continues to grow. A more detailed performance comparison among MongoDB, Redis, and Cassandra is shown in Table 4.

Table 4. Performance comparison of MongoDB with Redis and Cassandra.

6. Conclusions and Future Work

MongoDB provides an efficient and scalable solution for managing large-scale video data in real-time emotion recognition. The advanced features of MongoDB, such as sharding and indexing, significantly improve system performance and reliability. Sharding allows for efficient data distribution across multiple servers, while indexing accelerates data access, both of which are critical for applications that require fast data processing, such as emotion recognition systems in video-based environments.

Moving forward, there is significant potential for integrating MongoDB with cloud platforms to further enhance system scalability. This integration will enable the management of even larger datasets, thereby supporting real-time processing at a global scale. Additionally, the application of more advanced deep learning algorithms could significantly improve the accuracy of emotion recognition, allowing for more nuanced detection of diverse emotional expressions. The combination of cloud computing with MongoDB could also facilitate the development of more dynamic, distributed environments for video data management, expanding the scope and applicability of emotion recognition systems in industries like healthcare, security, and entertainment.

Further research could explore additional optimizations of MongoDB’s sharding and indexing mechanisms to improve performance under high loads. More robust techniques for real-time data management could also be investigated to enhance system reliability in highly concurrent environments.

Author Contributions

Conceptualization, A.S. and H.M.K.; Methodology, H.M.K.; Software, H.M.K.; Validation, H.M.K., M.M., M.F.Z. and A.S.; Formal analysis, H.M.K.; Investigation, H.M.K.; Resources, M.M.; Data Curation, M.M. and M.F.Z.; Writing—Original Draft Preparation, H.M.K.; Writing—Review and Editing, A.S.; Visualization, H.M.K.; Supervision, M.M.; Project Administration, M.M.; Funding Acquisition, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Elgabli, A.; Liu, K.; Aggarwal, V. Optimized preference-aware multi-path video streaming with scalable video coding. IEEE Trans. Mobile Comput. 2019, 19, 159–172.

2. Barhoumi, C.; BenAyed, Y. Real-time speech emotion recognition using deep learning and data augmentation. Artif. Intell. Rev. 2024, 58, 49.

3. Tan, C.; Ceballos, G.; Kasabov, N.; Puthanmadam Subramaniyam, N. Fusionsense: Emotion classification using feature fusion of multimodal data and deep learning in a brain-inspired spiking neural network. Sensors 2020, 20, 5328.

4. Mehmood, E.; Anees, T. Performance analysis of not only SQL semi-stream join using MongoDB for real-time data warehousing. IEEE Access 2019, 7, 134215–134225.

5. Zhu, F.; Yuan, M.; Xie, X.; Wang, T.; Zhao, S.; Rao, W.; Zeng, J. A data-driven sequential localization framework for big telco data. IEEE Trans. Knowl. Data Eng. 2019, 33, 3007–3019.

6. Baruffa, G.; Femminella, M.; Pergolesi, M.; Reali, G. Comparison of MongoDB and Cassandra databases for supporting open-source platforms tailored to spectrum monitoring as-a-service. IEEE Trans. Netw. Serv. Manag. 2020, 17, 346–360.

7. Liu, X.; Cheng, X.; Lee, K. GA-SVM based facial emotion recognition using facial geometric features. IEEE Sens. J. 2020, 21, 11532–11542.

8. Abbes, H.; Boukettaya, S.; Gargouri, F. Learning ontology from Big Data through MongoDB database. In Proceedings of the 2015 IEEE/ACS 12th International Conference of Computer Systems and Applications (AICCSA), Marrakech, Morocco, 17–20 November 2015; pp. 1–7.

9. Gonzalez, H.; George, R.; Muzaffar, S.; Acevedo, J.; Hoppner, S.; Mayr, C.; Elfadel, I. Hardware acceleration of EEG-based emotion classification systems: A comprehensive survey. IEEE Trans. Biomed. Circuits Syst. 2021, 15, 412–442.

10. Satriyawan, H.; Susanto, D.S. Optimasi keamanan smart grid melalui autentikasi dua lapis: Meningkatkan efisiensi dan privasi dalam era digital. J. RESTIKOM 2023, 5, 319–333.

11. Zhong, H.; Wu, F.; Xu, Y.; Cui, J. QoS-aware multicast for scalable video streaming in software-defined networks. IEEE Trans. Multimed. 2020, 23, 982–994.

12. Park, J.; Chung, K. Layer-assisted video quality adaptation for improving QoE in wireless networks. IEEE Access 2020, 8, 77518–77527.

13. Yang, J.; Qian, T.; Zhang, F.; Khan, S.U. Real-time facial expression recognition based on edge computing. IEEE Access 2021, 9, 76178–76190.

14. Zhang, K.; Li, Y.; Wang, J.; Cambria, E.; Li, X. Real-time video emotion recognition based on reinforcement learning and domain knowledge. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1034–1047.

15. Chen, L.; Li, M.; Su, W.; Wu, M.; Hirota, K.; Pedrycz, W. Adaptive feature selection-based AdaBoost-KNN with direct optimization for dynamic emotion recognition in human–robot interaction. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 205–213.

16. Xiang, L.; Huang, J.; Shao, X.; Wang, D. A MongoDB-based management of planar spatial data with a flattened R-tree. ISPRS Int. J. Geo-Inf. 2016, 5, 119.

17. Eyada, M.M.; Saber, W.; El Genidy, M.M.; Amer, F. Performance evaluation of IoT data management using MongoDB versus MySQL databases in different cloud environments. IEEE Access 2020, 8, 110656–110668.

18. Patil, M.M.; Hanni, A.; Tejeshwar, C.H.; Patil, P. A qualitative analysis of the performance of MongoDB vs MySQL database based on insertion and retrieval operations using a web/android application to explore load balancing—Sharding in MongoDB and its advantages. In Proceedings of the 2017 International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 10–11 February 2017; pp. 325–330.

19. Sharma, M.; Sharma, V.D.; Bundele, M.M. Performance analysis of RDBMS and NoSQL databases: PostgreSQL, MongoDB and Neo4j. In Proceedings of the 2018 3rd International Conference and Workshops on Recent Advances and Innovations in Engineering (ICRAIE), Jaipur, India, 22–25 November 2018; pp. 1–5.

20. Mearaj; Maheshwari, P.; Kaur, M.J. Data conversion from traditional relational database to MongoDB using XAMPP and NoSQL. In Proceedings of the 2018 Fifth HCT Information Technology Trends (ITT), Dubai, United Arab Emirates, 28–29 November 2018; pp. 94–98.

21. Soussi, N.; Boumlik, A.; Bahaj, M. Mongo2SPARQL: Automatic and semantic query conversion of MongoDB query language to SPARQL. In Proceedings of the 2017 Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 17–19 April 2017; pp. 1–6.

22. Yilmaz, N.; Alatli, O.; Ciloglugil, B.; Erdur, R.C. Evaluation of storage and query performance of sensor-based Internet of Things data with MongoDB. In Proceedings of the 2018 International Conference on Artificial Intelligence and Data Processing (IDAP), Malatya, Turkey, 28–30 September 2018; pp. 1–6.

23. Chatterjee, R.; Mazumdar, S.; Sherratt, R.S.; Halder, R.; Maitra, T.; Giri, D. Real-time speech emotion analysis for smart home assistants. IEEE Trans. Consum. Electron. 2021, 67, 68–76.

24. Guetari, R.; Chetouani, A.; Tabia, H.; Khlifa, N. Real time emotion recognition in video stream, using B-CNN and F-CNN. In Proceedings of the 5th International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Sousse, Tunisia, 2–5 September 2020; pp. 1–6.

25. Kim, S.-H.; Yang, H.-J.; Nguyen, N.A.T.; Prabhakar, S.K.; Lee, S.-W. WeDea: A New EEG-Based Framework for Emotion Recognition. IEEE J. Biomed. Health Inform. 2022, 26, 264–275.

26. Hafeez, T.; Xu, L.; McArdle, G. Edge intelligence for data handling and predictive maintenance in IIoT. IEEE Access 2021, 9, 49355–49371.

27. Anand, V.; Rao, C.M. MongoDB and Oracle NoSQL: A technical critique for design decisions. In Proceedings of the 2016 International Conference on Emerging Trends in Engineering, Technology and Science (ICETETS), Pudukkottai, India, 24–26 February 2016; pp. 1–4.

28. Kang, Y.-S.; Park, I.-H.; Rhee, J.; Lee, Y.-H. MongoDB-based repository design for IoT-generated RFID/sensor big data. IEEE Sens. J. 2016, 16, 485–497.

29. Liu, Y.; Wang, Y.; Jin, Y. Research on the improvement of MongoDB auto-sharding in cloud environment. In Proceedings of the 2012 7th International Conference on Computer Science & Education (ICCSE), Melbourne, VIC, Australia, 14–17 July 2012; pp. 851–854.

30. Roh, Y.; Heo, G.; Whang, S.E. A survey on data collection for machine learning: A big data-AI integration perspective. IEEE Trans. Knowl. Data Eng. 2021, 33, 1328–1347.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).