1. Introduction

In the era of digital transformation, network bandwidth has emerged as a vital resource for both organizations and individuals. The rapid growth of internet usage and data-intensive applications has made it essential to implement robust mechanisms for managing access and allocating bandwidth efficiently. Such mechanisms play a crucial role in preventing service disruptions and ensuring a high quality of service, particularly in networks experiencing heavy traffic loads [1]. For Internet Service Providers (ISPs), meeting the diverse and ever-increasing demands of their customers requires deploying advanced technologies. A major challenge faced by ISPs lies in optimizing bandwidth utilization to maintain seamless network operations and enhance user satisfaction [2,3]. Effective bandwidth allocation is critical for ensuring smooth data transmission and avoiding performance bottlenecks [4].

Traditional bandwidth allocation methods, which often rely on static configurations or heuristic approaches, are becoming increasingly inadequate in modern networks. Not only are these conventional mechanisms resource-intensive, they also fail to adapt to the dynamic and complex nature of user access patterns. This underscores the necessity for intelligent and adaptive systems that can optimize bandwidth allocation in real time. With advancements in computational power, particularly through GPUs, deep neural networks (DNNs) have emerged as powerful tools in various domains [5,6]. However, training and deploying neural networks remains computationally intensive, which necessitates efficient design and implementation strategies.

Machine learning, a subset of artificial intelligence, has demonstrated remarkable potential in addressing complex problems across a wide range of fields. Neural networks, in particular, excel in modeling non-linear relationships and adapting to evolving datasets. The backpropagation algorithm, a cornerstone of neural network training, is renowned for its effectiveness in minimizing errors and enhancing model accuracy [7,8,9,10,11]. Despite their capabilities, certain neural network architectures, such as recurrent neural networks (RNNs), face challenges like handling long-term dependencies and requiring a large number of parameters compared to feed-forward and convolutional neural networks. Recent advancements in network architectures, optimization techniques, and computational resources have mitigated these challenges, enabling more efficient training and deployment of such models [12].

This paper introduces a novel approach to optimizing network bandwidth allocation using the Backpropagation Neural Network (BPNN) algorithm. The proposed model aims to analyze user access patterns, identify trends, and dynamically allocate bandwidth to maximize network efficiency. By integrating artificial intelligence and network optimization principles, this research addresses the limitations of traditional methods and establishes a robust framework for real-time bandwidth management [13]. While machine learning techniques have proven highly effective in modeling non-linear relationships and managing complex datasets, their applications in network resource allocation have largely been confined to traffic forecasting. This study proposes a cost-effective scheme for estimating required bandwidth based on predicted internet traffic demand, offering a significant contribution to resource-aware network design [14]. Additionally, effective transmit resource allocation remains a critical aspect of resource optimization, and recent research has emphasized the importance of developing strategies to achieve superior performance under resource constraints [15].

2. Materials and Methods

2.1. Related Work

Previous research emphasizes the importance of accurately estimating the required bandwidth to ensure efficient allocation in network environments. This estimation is typically based on the predicted offered traffic at a given time. The goal is to prevent under-provisioning (which causes congestion and degraded performance) and over-provisioning (which leads to resource wastage). For instance, some approaches rely on predictive models that estimate bandwidth needs dynamically, aligning resource allocation with traffic demand [14,16].

Deep neural networks (DNNs) are widely used in prediction tasks due to their ability to learn complex patterns in data. The training process begins with the random initialization of parameters, followed by iterations involving forward and backward propagation. During forward propagation, the network computes outputs based on the input data and evaluates the prediction error using a loss function. Backward propagation then computes the gradients of the loss function with respect to each parameter, enabling the model to adjust its weights and minimize the error over successive iterations. This iterative process is key to improving the accuracy of DNN-based prediction models [17].
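The training loop described above can be illustrated with a minimal sketch in plain Python. It is not the model used in this study: the toy data, the single tanh hidden layer, the function name `train_tiny_network`, and all hyperparameters are assumptions chosen only to show random initialization, forward propagation, backward propagation, and the weight update in a few lines.

```python
import math
import random


def train_tiny_network(samples, hidden=4, lr=0.02, epochs=300, seed=0):
    """Train a one-hidden-layer regression network with per-sample gradient descent.

    Returns the mean squared error recorded in each epoch.
    """
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Random initialization of the parameters (small uniform values).
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0

    history = []
    for _ in range(epochs):
        total = 0.0
        for x, y in samples:
            # Forward propagation: hidden activations, then the scalar output.
            z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(w1, b1)]
            h = [math.tanh(v) for v in z]
            y_hat = sum(w * hi for w, hi in zip(w2, h)) + b2
            err = y_hat - y
            total += err * err

            # Backward propagation: gradients of the squared error err**2.
            d_out = 2.0 * err
            d_hidden = [d_out * w2[j] * (1.0 - h[j] ** 2) for j in range(hidden)]

            # Gradient-descent update of every weight and bias.
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * d_hidden[j] * x[i]
                b1[j] -= lr * d_hidden[j]
            b2 -= lr * d_out
        history.append(total / len(samples))
    return history


# Hypothetical toy data: the target is a simple function of two inputs.
data = [((a / 4.0, b / 4.0), 0.5 * a / 4.0 - 0.25 * b / 4.0)
        for a in range(-4, 5) for b in range(-4, 5)]
losses = train_tiny_network(data)
print(f"first epoch MSE={losses[0]:.4f}, last epoch MSE={losses[-1]:.6f}")
```

The hidden-layer gradients are computed before the output weights are updated, so each update uses the gradient of the loss at the current parameters.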

Reinforcement learning (RL) has emerged as another significant machine learning approach. Unlike traditional supervised learning, RL mimics human decision-making processes. It trains agents to make decisions by interacting with the environment, where each action is evaluated based on a cumulative reward system. Over time, the agents learn to maximize rewards by reflecting on the outcomes of previous actions, making RL particularly suitable for dynamic decision-making tasks in complex systems [17,18].

Recent advancements have seen the integration of AI techniques, particularly machine learning (ML) and deep learning (DL), into optical networks. These technologies address challenges in dynamic bandwidth allocation (DBA) and enhance the quality of service (QoS). AI-based models can efficiently handle complex classification and prediction tasks, making them invaluable for dynamic network environments where traffic patterns are highly variable [19]. Deep learning (DL) models leverage diverse architectures, such as convolutional neural networks (CNNs), to address different challenges. Tools like Keras and TensorFlow provide the flexibility to design and implement these architectures for specific applications. For instance, CNNs, known for their ability to process spatial data, can be adapted for traffic prediction and bandwidth allocation tasks, demonstrating their versatility in network optimization scenarios [20].

2.2. Research Method

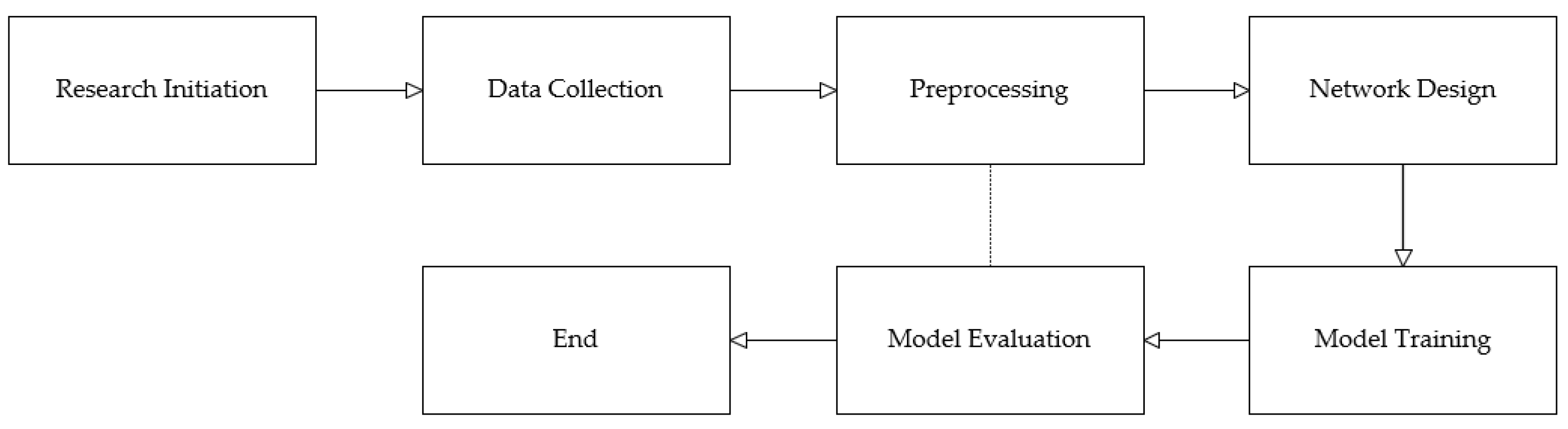

Design of backpropagation neural network model for network bandwidth allocation:

The research process follows a structured approach, as illustrated in Figure 1.

The research begins by defining its objectives and scope, with a primary focus on optimizing network bandwidth allocation using the Backpropagation Neural Network algorithm.

In this phase, relevant data is gathered, including user information and network access patterns. This data is essential for analyzing user interactions with the network and identifying key factors influencing bandwidth allocation.

Once the data is collected, preprocessing is conducted to ensure consistency and usability. This step includes normalizing the data to maintain a uniform scale and dividing it into two subsets: one for model training and the other for testing.

At this stage, the neural network architecture is developed. Key design decisions include determining the number of layers, the number of neurons per layer, and other parameters necessary for optimizing model performance.

The neural network model is trained using the backpropagation algorithm. During this process, the model iteratively adjusts its weights and biases to minimize prediction errors and improve accuracy.

Following training, the model’s performance is assessed using test data. Various evaluation metrics are applied to measure accuracy and ensure the model’s effectiveness in real-world scenarios.

The research process concludes with a comprehensive analysis of the results. The findings are documented, and final conclusions are drawn based on the study’s outcomes.

2.2.1. Dataset Retrieval

For the initial stage of our study, we utilized a dataset provided by Kaggle user ajithdari (https://www.kaggle.com/datasets/ajithdari/resource-allocation-6g, accessed on 17 August 2025). To better suit the objectives of our research, we edited the dataset by removing the “Timestamp”, “Allocated_Bandwidth”, and “Resource_Allocation” columns and adding a new column labeled “Priority”, which served as the target variable for our training dataset. This modification allowed us to focus on prioritization during resource allocation as a key aspect of the training process.

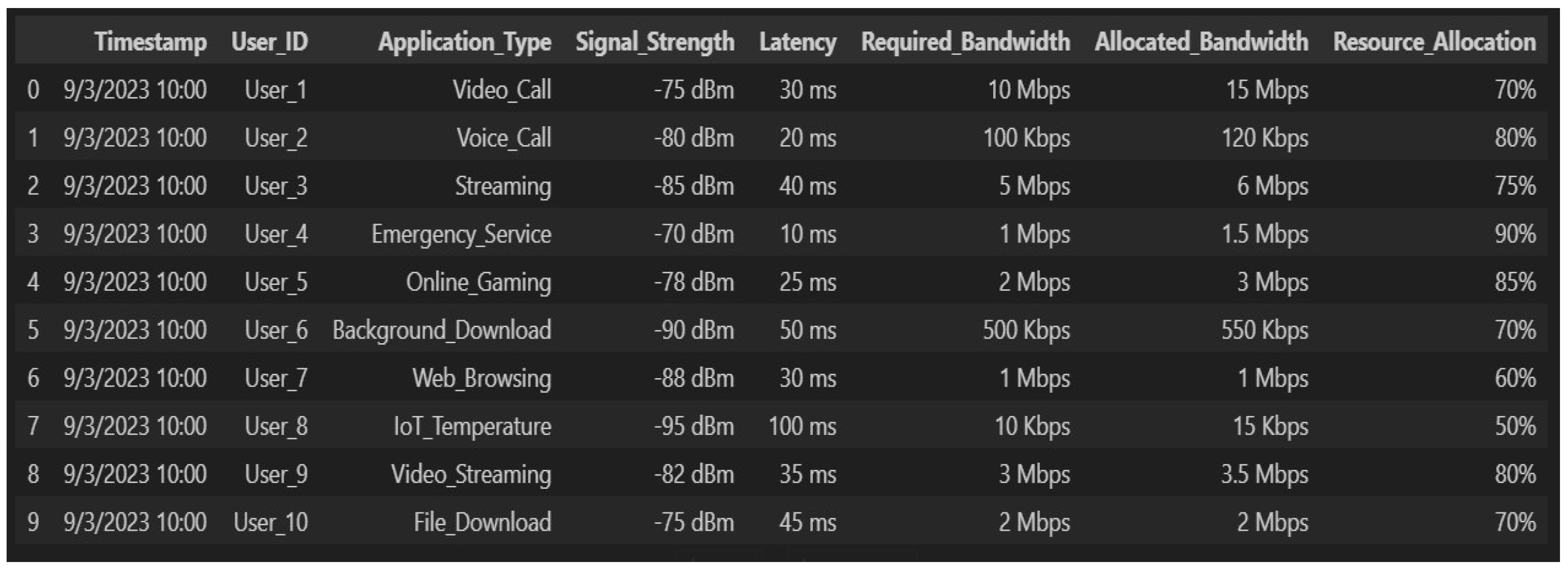

The dataset used in this study consists of 400 data entries sourced from Kaggle. For the analysis, we employed a sigmoid binary classification approach, with the output represented as [0, 1]. As illustrated in Figure 2, the dataset comprises the following columns: Timestamp, User_ID, Application_Type, Signal_Strength, Latency, Required_Bandwidth, Allocated_Bandwidth, and Resource_Allocation. The evolution of 6G networks introduces significant advancements in resource allocation, necessitated by emerging applications and stringent performance requirements. The key aspects of this allocation include the following:

Application Types: Next-generation applications such as holographic communications, real-time extended reality (XR), and large-scale IoT deployments demand highly adaptive and precise network resource distribution to ensure seamless functionality.

Signal Strength: Maintaining ultra-reliable and high-throughput connectivity in the presence of millimeter-wave and terahertz frequencies presents critical challenges. Innovative techniques are required to mitigate propagation losses and interference, ensuring robust signal integrity.

Latency: The pursuit of sub-millisecond latency in 6G networks necessitates strategic resource allocation mechanisms. Dynamic scheduling and adaptive network provisioning play vital roles in achieving ultra-low latency to support mission-critical applications.

Allocated Bandwidth: The increasing need for high-capacity bandwidth to support immersive technologies, autonomous systems, and global-scale IoT applications drives the necessity for efficient spectrum utilization and advanced bandwidth management strategies.

Resource Allocation: By leveraging artificial intelligence (AI) and machine learning (ML) techniques, 6G networks can achieve optimized resource allocation, enhancing overall performance with adaptive and data-driven decision-making processes.

This study delves into these aspects, emphasizing how AI-driven resource management can address the complexities of next-generation wireless networks.

To analyze the dataset in detail, we utilized Python 3.12.1 for data visualization and inspection.

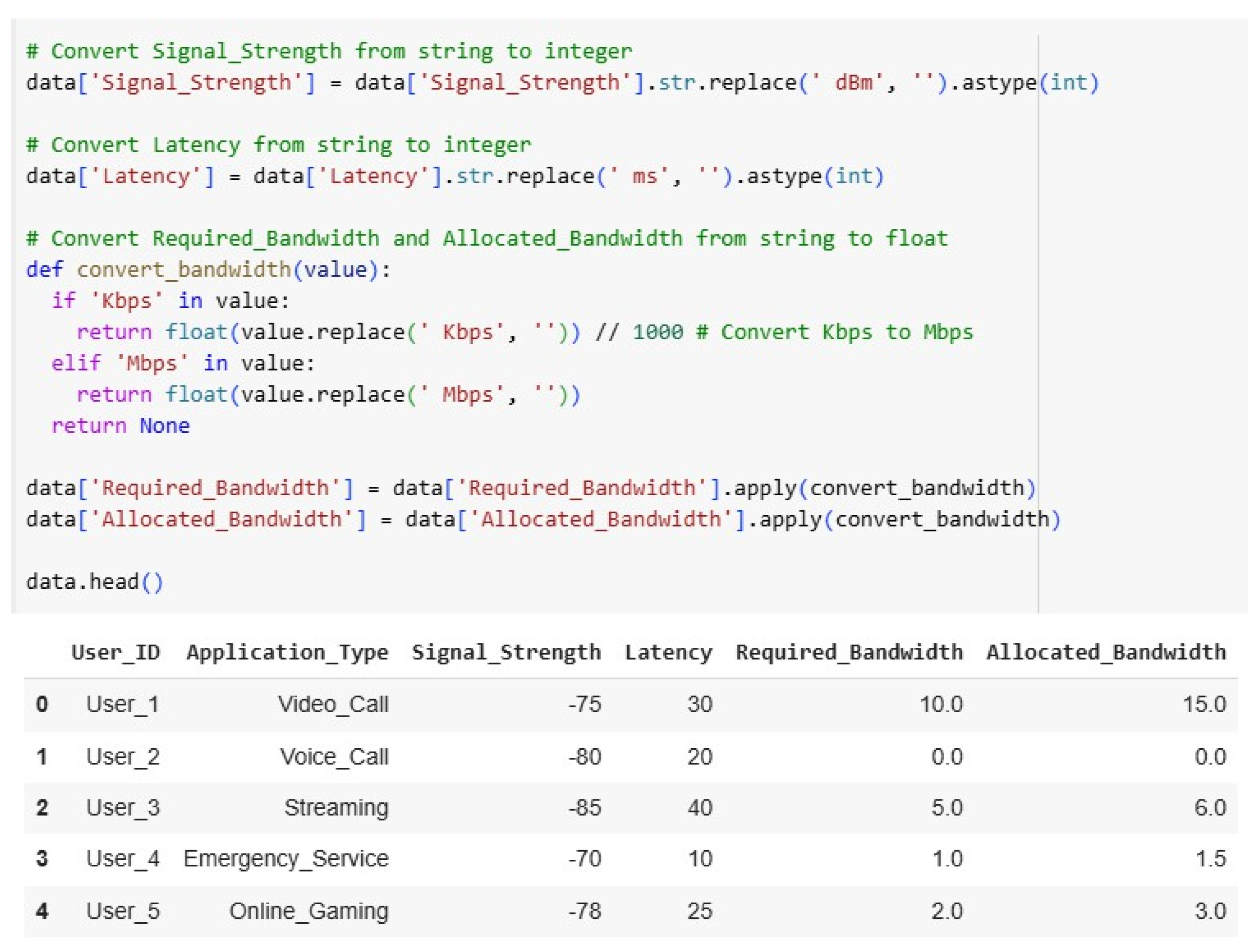

In the next step, the dataset is cleaned. It contains various parameters, including signal strength, latency, required bandwidth, and allocated bandwidth. The Signal_Strength column, originally stored as a string with “dBm” units, is converted into an integer format for numerical analysis. The Latency column, initially recorded as a string with “ms” (milliseconds) units, is likewise converted into an integer format to facilitate computational operations. The Required_Bandwidth and Allocated_Bandwidth columns contain values in either “Kbps” (kilobits per second) or “Mbps” (megabits per second). A function is applied to convert “Kbps” values into “Mbps” by dividing by 1000, so that all bandwidth values share the same unit. This preprocessing step enhances data consistency and allows for effective analysis of bandwidth allocation efficiency in different network applications, as shown in Figure 3.
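The unit conversions described above can be sketched as follows. The exact string layout of the raw values (for example, "-75 dBm" or "750 Kbps") is an assumption, and the helper names `parse_int_with_unit` and `bandwidth_to_mbps` are hypothetical; the sketch only illustrates the conversions, not the code used in the study.

```python
def parse_int_with_unit(text, unit):
    """Strip a trailing unit such as 'dBm' or 'ms' and return the value as an int."""
    return int(text.strip().removesuffix(unit).strip())


def bandwidth_to_mbps(text):
    """Convert a 'Kbps' or 'Mbps' string into a float expressed in Mbps."""
    value = text.strip()
    if value.endswith("Kbps"):
        # 1 Mbps = 1000 Kbps, so Kbps values are divided by 1000.
        return float(value.removesuffix("Kbps").strip()) / 1000.0
    if value.endswith("Mbps"):
        return float(value.removesuffix("Mbps").strip())
    raise ValueError(f"unrecognized bandwidth unit: {text!r}")


# Hypothetical raw row, using the column names from the dataset.
raw_row = {
    "Signal_Strength": "-75 dBm",
    "Latency": "30 ms",
    "Required_Bandwidth": "750 Kbps",
}
cleaned = {
    "Signal_Strength": parse_int_with_unit(raw_row["Signal_Strength"], "dBm"),
    "Latency": parse_int_with_unit(raw_row["Latency"], "ms"),
    "Required_Bandwidth": bandwidth_to_mbps(raw_row["Required_Bandwidth"]),
}
print(cleaned)
```

Raising an error on an unknown unit, rather than passing the value through, makes unexpected entries visible before they reach the model.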

2.2.2. Training Dataset

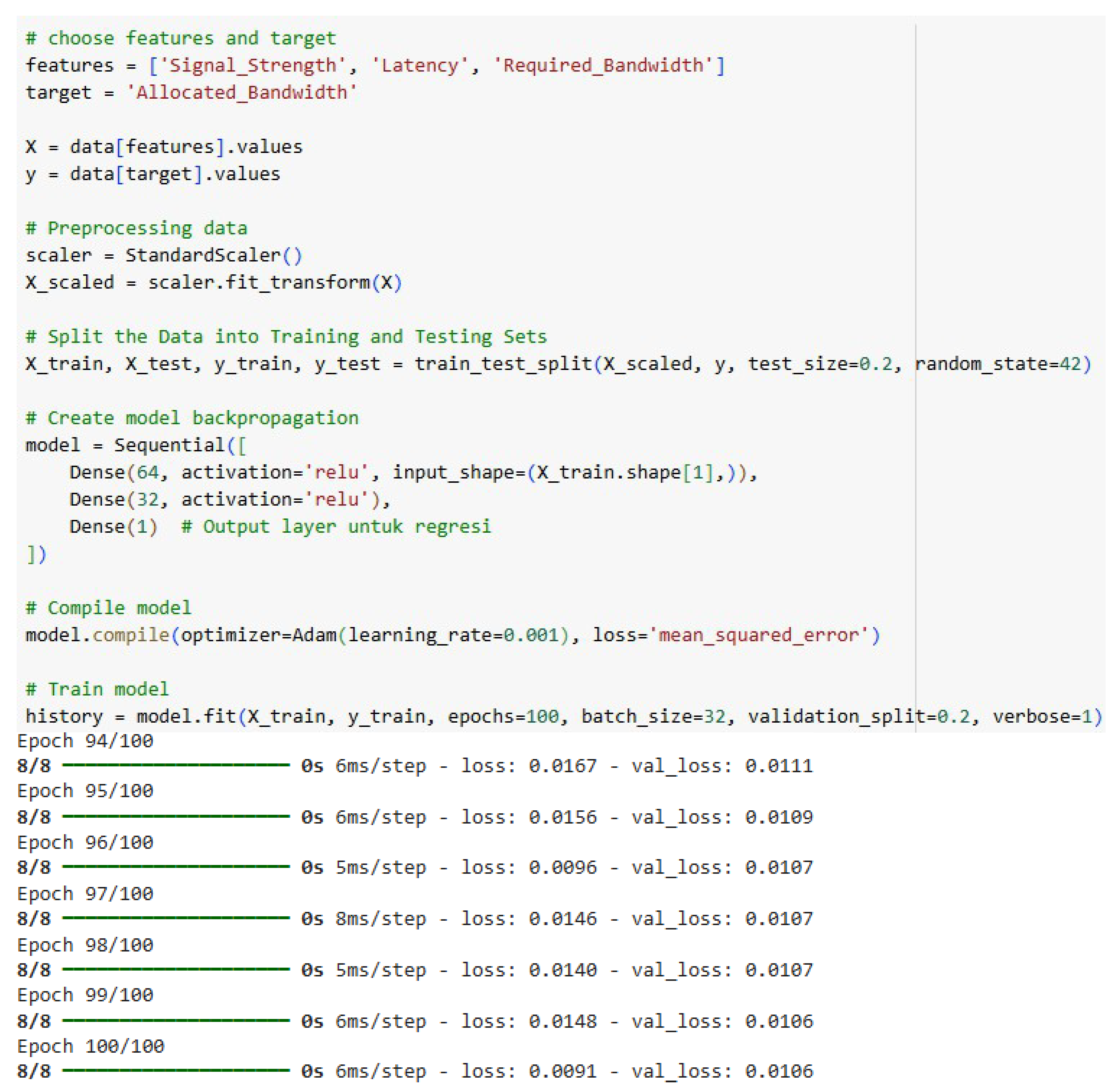

In this research, as shown in Figure 4, the model uses Signal_Strength, Latency, and Required_Bandwidth as input features, while Allocated_Bandwidth is the target variable. To put the input features on a common scale, they are normalized using StandardScaler. The dataset is then divided into training and testing sets, with 80% used for training and 20% for testing. The neural network consists of three layers: a first fully connected layer with 64 neurons and ReLU activation that receives the three input features, a hidden layer with 32 neurons and ReLU activation, and an output layer with a single neuron for regression. The model is compiled using the Adam optimizer with a learning rate of 0.001 and mean squared error (MSE) as the loss function. It is trained for 100 epochs with a batch size of 32 and a validation split of 20%.
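As an illustration of the scaling and splitting steps, the following standard-library sketch standardizes three feature columns and performs an 80/20 split. The synthetic rows, the seed values, and the helper names are assumptions. The sketch also fits the scaling statistics on the training portion only, which is a common practice and may differ from the order of operations used in this study. The z-score uses the population standard deviation, which is the convention StandardScaler follows.

```python
import random
import statistics


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle the rows reproducibly and split them into (train, test)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_standardizer(feature_rows):
    """Return per-column (mean, std) pairs using the population standard deviation."""
    columns = list(zip(*feature_rows))
    # A constant column has std 0; fall back to 1.0 to avoid division by zero.
    return [(statistics.fmean(c), statistics.pstdev(c) or 1.0) for c in columns]


def standardize(feature_rows, params):
    """Apply z = (x - mean) / std column by column."""
    return [[(v - m) / s for v, (m, s) in zip(row, params)] for row in feature_rows]


# Hypothetical data: 400 rows of (signal strength in dBm, latency in ms, required Mbps).
rng = random.Random(0)
rows = [[rng.uniform(-100, -40), rng.uniform(1, 100), rng.uniform(0.1, 15)]
        for _ in range(400)]

train, test = train_test_split(rows)
params = fit_standardizer(train)
train_z = standardize(train, params)
test_z = standardize(test, params)  # test rows reuse the training statistics
print(len(train_z), len(test_z))
```

New samples presented to a trained model would be passed through `standardize` with the same `params`, so that they are scaled exactly as the training data were.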

3. Results

3.1. Model Performance

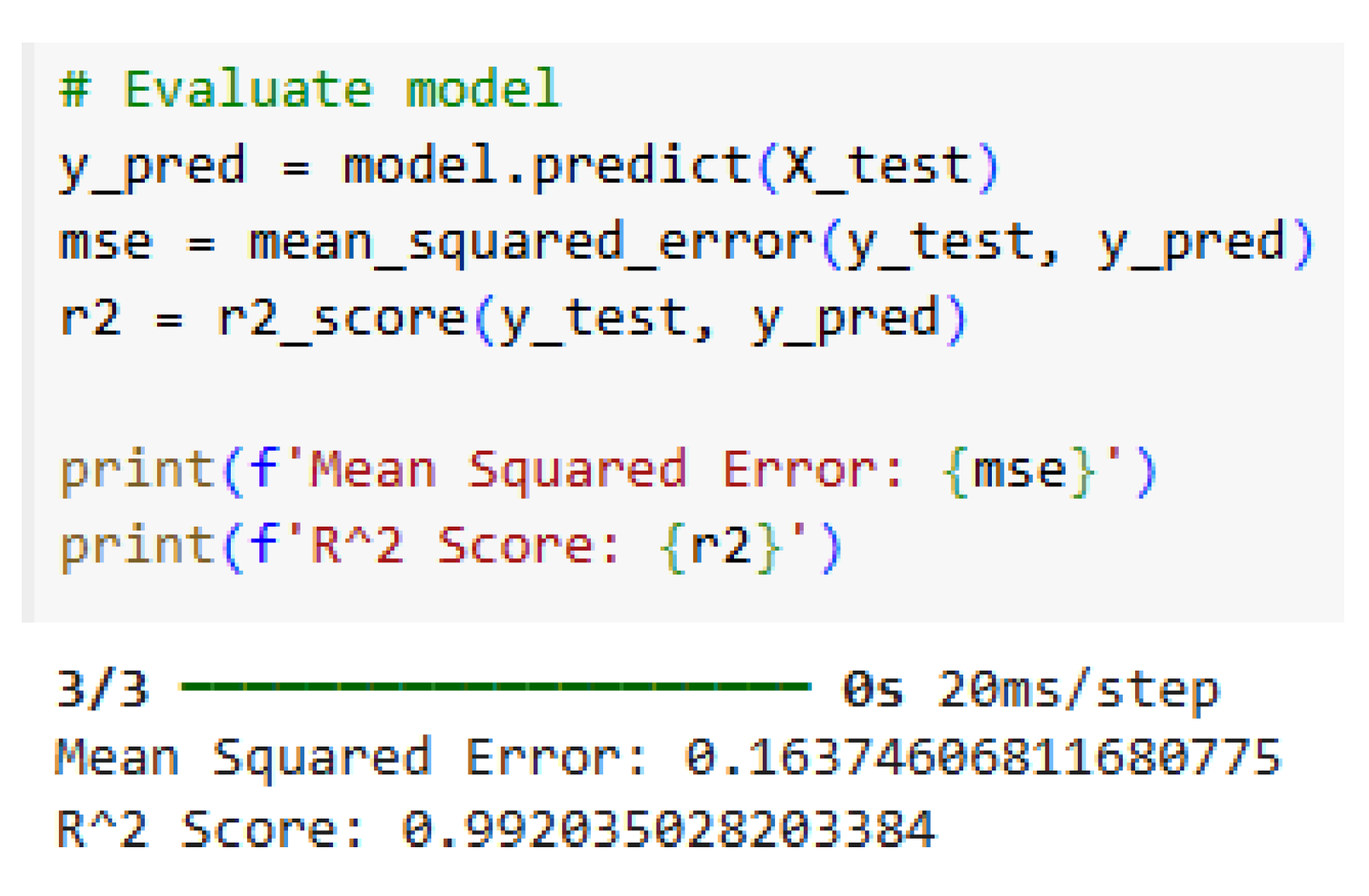

To assess the effectiveness of the Backpropagation Neural Network (BPNN) model for bandwidth allocation, two standard metrics were utilized: the Mean Squared Error (MSE) and the coefficient of determination (R²). The trained model achieved an MSE of 0.1637, indicating a low prediction error across the test dataset. Additionally, the R² score was recorded at 0.9920, indicating that the predictions account for about 99% of the variance in the actual values, as shown in Figure 5.

These results confirm the model’s ability to capture underlying patterns in user access behavior and accurately predict bandwidth requirements. The near-unity R² value highlights the robustness and reliability of the model for practical implementation in real-time network environments.
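For reference, the two metrics can be computed as in the sketch below, which follows their standard definitions. The numbers in the example are made up for illustration and are unrelated to the results reported above.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical actual and predicted bandwidth values in Mbps.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 2.5, 4.0]
print(mean_squared_error(actual, predicted))  # 0.125
print(round(r2_score(actual, predicted), 4))  # 0.9
```

Because the MSE is expressed in squared target units (Mbps² when the target is in Mbps), it is easiest to interpret alongside a scale-free measure such as R².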

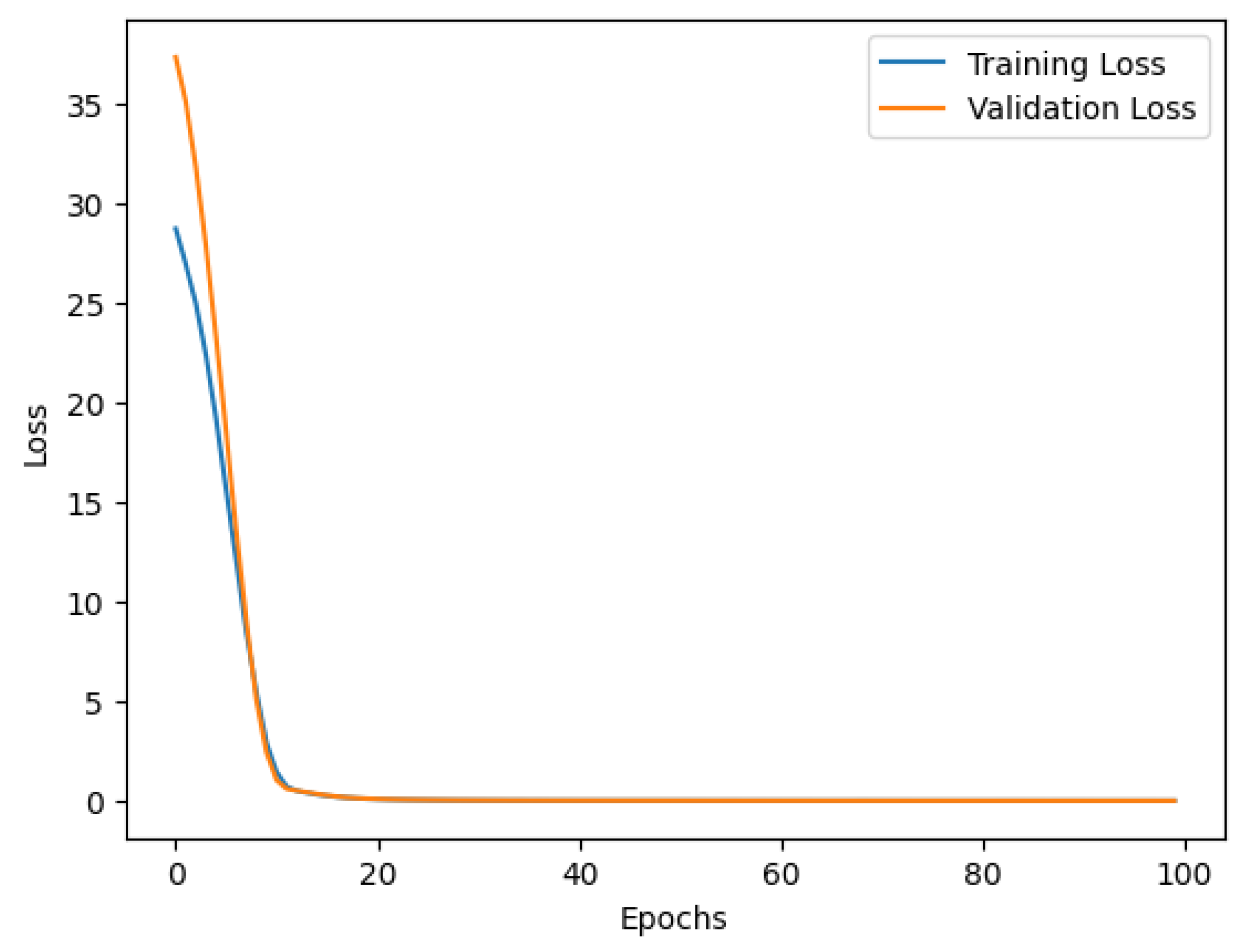

3.2. Training Convergence

The learning behavior of the BPNN model was further analyzed through its training and validation loss trends, as illustrated in Figure 6. A rapid decline in both losses was observed within the first epochs, followed by convergence at minimal loss values. This behavior indicates efficient learning with minimal overfitting.

Such trends demonstrate the model’s capability to generalize well to unseen data, validating its potential for use in dynamic network scenarios where accuracy and consistency are critical.

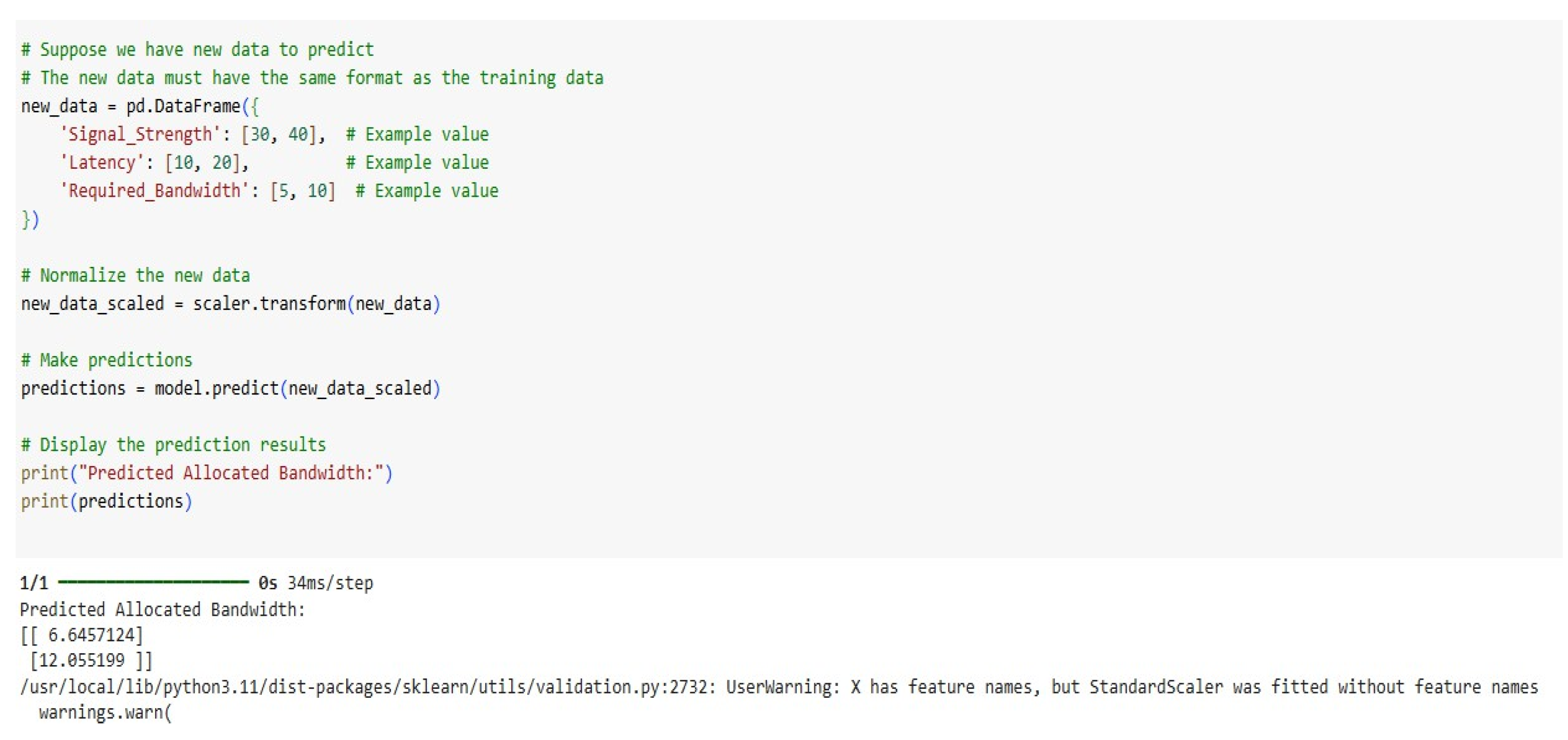

3.3. Prediction Using New Data

To evaluate the model’s real-world applicability, it was tested using new input data reflecting network parameters such as signal strength, latency, and required bandwidth. These values were preprocessed using the same normalization technique applied during training. The resulting predictions for allocated bandwidth, together with the prediction process, are shown in Figure 7.

These outputs confirm that the trained BPNN model can effectively respond to varying input conditions and make dynamic bandwidth allocation decisions, fulfilling the core objective of the research.

4. Discussion

The findings of this study highlight the potential of Backpropagation Neural Network (BPNN) algorithms to address critical challenges in network bandwidth allocation. With an MSE of 0.1637 and an R² score of 0.9920, the model demonstrated excellent predictive accuracy and reliability. These results indicate that BPNN can effectively learn from historical access patterns and dynamically adjust bandwidth allocation based on real-time network conditions.

This performance is notably superior to traditional allocation methods, which often rely on static rules or threshold-based configurations and lack adaptability to varying user demands. The integration of signal strength, latency, and required bandwidth as key features enables the model to represent complex, nonlinear relationships that influence bandwidth usage.

The convergence trends observed in the training and validation loss curves further support the model’s robustness. Minimal overfitting and rapid convergence reflect the effectiveness of the network design and training configuration. This is particularly important for deployment in real-world systems, where the model must generalize well across unseen data and adapt to evolving traffic patterns.

Moreover, the application of feature normalization and structured data preprocessing contributed significantly to performance improvements. The conversion of metrics such as latency and bandwidth to consistent units (e.g., converting Kbps to Mbps) ensured uniformity and reduced noise in the training process.

These outcomes align with prior studies emphasizing the suitability of machine learning and deep learning methods for bandwidth prediction and resource optimization tasks. For example, earlier works utilizing probabilistic neural networks and fuzzy logic models have shown benefits in similar applications, but often fall short in terms of adaptability and fine-grained prediction accuracy. The use of BPNN in this study offers a more scalable and generalizable solution, particularly in high-demand network environments such as 6G, smart IoT systems, and real-time multimedia applications.

However, several limitations remain. First, the dataset used was limited in scale (400 entries) and sourced from a single domain. While this is sufficient for a proof-of-concept study, a larger and more diverse dataset would be necessary to validate the model across heterogeneous networks. Second, the model’s inference time and computational efficiency were not explored in depth, and these are important considerations for deployment in low-latency environments.

Future research should investigate the integration of recurrent architectures or transformer-based models to capture temporal dependencies in user access behavior. Additionally, exploring hybrid models that combine BPNN with reinforcement learning or evolutionary algorithms could further enhance decision-making capabilities in dynamic networks.

5. Conclusions

This study demonstrates the effectiveness of a Backpropagation Neural Network (BPNN) algorithm in optimizing bandwidth allocation for dynamic network environments. By leveraging normalized input features, such as signal strength, latency, and required bandwidth, the proposed model achieves high prediction accuracy, with an MSE of 0.1637 and an R² score of 0.9920. These results validate the model’s reliability in accurately predicting bandwidth requirements while minimizing computational overhead. The training and validation loss trends further highlight the robustness of the model, ensuring consistent performance with minimal overfitting.

The findings emphasize the critical role of machine learning in addressing the limitations of traditional bandwidth allocation methods. By dynamically adapting to traffic patterns, the BPNN model ensures efficient resource utilization and supports high-quality service delivery. This research contributes to advancing network optimization by integrating artificial intelligence with resource-aware design, paving the way for scalable and cost-effective bandwidth management solutions in modern digital infrastructures.

Author Contributions

Conceptualization, S. and M.S.A.; Methodology, A.N.F.; Software, A.N.F.; Validation, M.S.A., A.R.J. and S.; Formal Analysis, A.N.F.; Investigation, M.S.A.; Resources, M.S.A.; Data Curation, A.R.J.; Writing—Original Draft Preparation, A.R.J.; Writing—Review and Editing, A.N.F.; Visualization, M.S.A.; Supervision, S.; Project Administration, S.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Usman, A.I.; Mawarzi, H. Optimasi Penggunaan Bandwidth Internet Dengan Metode Fuzzy Sugeno; Seminar Nasional Teknologi Informasi, Komunikasi dan Industri (SNTIKI): Pekanbaru, Indonesia, 2020.

2. Hassan, E.S.; Abdelaal, A.E.A.; Oshaba, A.S.; El-Emary, A.; Dessouky, M.I.; El-Samie, F.E.A.; Singh, R. Optimizing bandwidth utilization and traffic control in ISP networks for enhanced smart agriculture. PLoS ONE 2024, 19, e0300650.

3. Cheng, B.; Li, D.; Zhu, X.; Ayub, N. Optimizing load scheduling and data distribution in heterogeneous cloud environments using fuzzy-logic based two-level framework. PLoS ONE 2024, 19, e0310726.

4. Dhinnesh, A.D.C.N.; Sabapathi, T. Probabilistic neural network based efficient bandwidth allocation in wireless sensor networks. J. Ambient. Intell. Humaniz. Comput. 2021, 13, 2001–2012.

5. Oh, H.; Lee, J.; Kim, H.; Seo, J. Out-of-order backprop. In Proceedings of the 17th European Conference on Computer Systems, Tel Aviv, Israel, 23–27 October 2022; pp. 435–452.

6. Labonne, M.; Chatzinakis, C.; Olivereau, A. Predicting Bandwidth Utilization on Network Links Using Machine Learning. In Proceedings of the 2020 European Conference on Networks and Communications (EuCNC), Dubrovnik, Croatia, 15–18 June 2020; pp. 242–247.

7. Lillicrap, T.P.; Santoro, A.; Marris, L.; Akerman, C.J.; Hinton, G. Backpropagation and the brain. Nat. Rev. Neurosci. 2020, 21, 335–346.

8. Boovarahan, N.; Lakshmibai, T.; DineshKumar, T.; Saraswathi, K.; Sugapriya, K.; Sundar, T. Back Propagation Model Based Machine Learning In Feed Forward Neural Networks for 6G Communication. In Proceedings of the 2024 2nd International Conference on Self Sustainable Artificial Intelligence Systems (ICSSAS), Erode, India, 23–25 October 2024; pp. 726–735.

9. Hayat, C.; Soenandi, I.A.; Limong, S.; Kurnia, J. Modeling of prediction bandwidth density with backpropagation neural network (BPNN) methods. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: London, UK, 2020.

10. Vlachas, P.; Pathak, J.; Hunt, B.; Sapsis, T.; Girvan, M.; Ott, E.; Koumoutsakos, P. Backpropagation algorithms and Reservoir Computing in Recurrent Neural Networks for the forecasting of complex spatiotemporal dynamics. Neural Netw. 2020, 126, 191–217.

11. Liu, B.; Zhang, L.; Wang, F.; Liu, M.; Mao, Y.; Zhao, L.; Sun, T.; Xin, X. Adaptive dynamic wavelength and bandwidth allocation algorithm based on error-back-propagation neural network prediction. Opt. Commun. 2019, 437, 276–284.

12. Kumari, N.; Parvathi, M.H.; Gangarapu, S.K.; Subramaniam, K. Deep Recurrent Neural Network for Bandwidth Prediction in Software Defined Data Center Networks. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 2–4 July 2020; pp. 1–6.

13. Lee, H.; Kang, Y.; Gwak, M.; An, D. Bi-LSTM model with time distribution for bandwidth prediction in mobile networks. ETRI J. 2023, 46, 205–217.

14. Odim, M.; Osamor, V.C.; Odim, M.O. Required Bandwidth Capacity Estimation Scheme For Improved Internet Service Delivery: A Machine Learning Approach. Int. J. Sci. Technol. Res. 2019, 8, 8. Available online: www.ijstr.org (accessed on 15 January 2025).

15. Ding, L.; Shi, C.; Wang, F.; Zhou, J. Low probability of intercept-based cooperative node selection and transmit resource allocation for multi-target tracking in multiple radars architecture. IET Signal Process. 2022, 16, 515–527.

16. Ke, Z.; Xu, J.; Zhang, Z.; Cheng, Y.; Wu, W. A Consolidated Volatility Prediction with Back Propagation Neural Network and Genetic Algorithm. In Proceedings of the 2024 International Conference on Image Processing, Computer Vision and Machine Learning (ICICML), Shenzhen, China, 22–24 November 2024; pp. 1671–1675.

17. Jayarajan, A.; Wei, J.; Gibson, G.; Fedorova, A.; Pekhimenko, G. Priority-Based Parameter Propagation for Distributed DNN Training. 2019. Available online: https://github.com/anandj91/p3 (accessed on 14 January 2025).

18. Xu, J.; Wang, H. Client Selection and Bandwidth Allocation in Wireless Federated Learning Networks: A Long-Term Perspective. IEEE Trans. Wirel. Commun. 2020, 20, 1188–1200.

19. Liem, A.T.; Hwang, I.-S.; Ganesan, E.; Taju, S.W.; Sandag, G.A. A Novel Temporal Dynamic Wavelength Bandwidth Allocation Based on Long-Short-Term-Memory in NG-EPON. IEEE Access 2023, 11, 82095–82107.

20. Olatinwo, S.O.; Joubert, T.-H. Deep Learning for Resource Management in Internet of Things Networks: A Bibliometric Analysis and Comprehensive Review. IEEE Access 2022, 10, 94691–94717.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).