1. Introduction

Throughout history, human societies have developed complex communication systems to convey feelings, intentions, and thoughts. These systems depend not only on spoken language but also on visually observable cues such as emotional expressions, postures, and facial features [1]. Because facial cues play a prominent role in communication, humans have honed the ability to recognize physical and emotional states by interpreting facial expressions [2]. This trait is rooted in a biological imperative, shared by humans and animals, to avoid disease: it has evolved as a mechanism to limit contamination by enabling the early identification of potentially sick individuals [3]. A study conducted by [4] established a correlation between several facial cues and the accurate identification of individuals in a sleep-deprived state. These cues include droopy eyelids, drooping mouth corners, swollen eyelids, dark circles, pale skin, wrinkles, and eye redness. Given that sleep deprivation is closely linked to fatigue and can also be a symptom of more serious health conditions, visually observable cues may aid in its detection.

Detecting multiple visually observable facial cues using computer vision techniques remains underexplored, as noted in [4]. A crucial step for successful detection is extracting regions of interest (ROIs), which are informative areas of an image isolated from the background [5], to enhance the reliability and specificity of the analysis. Deep learning models such as Convolutional Neural Networks (CNNs) fused with a Multi-Block Local Binary Pattern (MB-LBP) [6] and state-of-the-art object detectors such as YOLOv5 and YOLOv11 [7,8] have demonstrated high accuracy in facial analysis tasks. However, these models are typically resource-intensive and require large annotated datasets to generalize adequately, which often limits their effectiveness for detecting fine-grained facial cues such as eye redness, droopy eyelids, and mouth asymmetry in real-time or resource-constrained environments. Vision Transformers (ViTs), which model long-range dependencies through self-attention rather than local receptive fields, have shown promising results in fine-grained image classification [9], but their heavy computational overhead makes them impractical for lightweight applications. MediaPipe [10], developed by Google LLC (Mountain View, CA, USA), is efficient and widely used in mobile environments, but it focuses on general facial landmarks and lacks sensitivity to pathological or clinical facial cues. In contrast, traditional techniques such as Haar cascade classifiers [11] remain well suited to applications that demand speed, low computational load, and interpretable models. Their design, which relies on handcrafted features and integral image calculations, allows fast ROI detection, making them ideal for edge deployment and embedded systems. Moreover, the Haar cascade's rule-based architecture allows precise tuning for specific facial areas such as the eye and perioral regions, so it can be adapted to cues that require high sensitivity despite limited computational resources.
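The speed of Haar cascades rests on the integral image (summed-area table): after one pass over the image, the pixel sum of any rectangle costs four lookups, so a Haar-like feature is evaluated in constant time regardless of its size. The following pure-Python sketch illustrates the idea on a grayscale image stored as a list of rows; it is not OpenCV's implementation, and the function names are illustrative.

```python
def integral_image(img):
    """Build a padded summed-area table S with S[y][x] = sum of img[0:y][0:x]."""
    h, w = len(img), len(img[0])
    s = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Cumulative sum of the rows above plus the running sum of this row.
            s[y + 1][x + 1] = s[y][x + 1] + row_sum
    return s


def rect_sum(s, x, y, w, h):
    """Pixel sum of the w-by-h rectangle whose top-left corner is (x, y): four lookups."""
    return s[y + h][x + w] - s[y][x + w] - s[y + h][x] + s[y][x]


def two_rect_feature(s, x, y, w, h):
    """Two-rectangle Haar-like feature: upper half minus lower half of the window.

    A dark band above a brighter band (e.g., an eye above a cheek) yields a negative value.
    """
    half = h // 2
    return rect_sum(s, x, y, w, half) - rect_sum(s, x, y + half, w, half)


# Usage: a 4x4 patch with a dark upper half and a bright lower half.
patch = [[10] * 4, [10] * 4, [200] * 4, [200] * 4]
table = integral_image(patch)
print(rect_sum(table, 0, 0, 4, 4))          # 1680
print(two_rect_feature(table, 0, 0, 4, 4))  # -1520
```

A cascade evaluates thousands of such features per window, which is why the constant-time rectangle sum matters.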

Computer vision seeks to replicate human visual behavior, making the brain's decision-making processes a critical consideration. A study by [12] examined the processing patterns of the human brain in facial recognition, revealing that the visual system follows a retinotopic bias, with the eyes typically appearing in the upper visual field and the mouth in the lower visual field. The study further emphasized that the eye and mouth regions are crucial for both recognition and categorization. When recognizing faces and facial features, the arrangement of facial elements significantly impacts accuracy [13], suggesting that a feature is more easily identifiable when part of the whole face rather than in isolation. Additionally, symmetry plays a vital role in facial recognition. Humans tend to recognize faces more easily by distinguishing symmetrical features in the left and right profiles, even when accounting for viewpoint invariance [14].

This study aims to extract the periorbital and perioral regions as regions of interest (ROIs) for facial cue detection, with a specific focus on accurately identifying the eyes and mouth. To achieve this, OpenCV’s Haar cascade classifiers are employed to detect the eyes and mouth within the facial image. Following the initial detection, a correction algorithm, grounded in normal human facial anatomy, is applied to refine the results. This correction algorithm accounts for typical facial symmetry and anatomical placement, improving the accuracy of the detected regions. The extracted features are expected to accurately delineate the two eyes within the periorbital region and the mouth within the perioral region, which are critical areas for detecting various facial cues related to emotional and physical states. By focusing on these specific regions, this method aims to enhance the precision of facial feature detection while addressing challenges such as droopy eyelids or mouths and variations in facial expressions.

This study relies on Haar cascade classifiers with high recall, so that true facial features are rarely missed, at the cost of admitting some false positives. A subsequent correction algorithm then filters out these false positives by checking each detection against the expected locations of facial features in typical human facial anatomy. This algorithm refines the initial detection results by retaining only valid detections of the eyes and mouth within their respective regions.

2. Materials and Methods

This section describes the procedures undertaken to enhance the performance of the Haar cascade classifier for facial feature detection. The study focuses on refining the existing model architecture and parameters to improve accuracy in identifying key facial features, namely the eyes and mouth. The dataset, preprocessing steps, training configurations, and evaluation metrics are detailed in the following subsections to ensure the reproducibility and clarity of the improvement process.

2.1. Cascade Classifier Configuration and Training Process

For facial feature detection, this study employed Haar cascade classifiers. OpenCV's readily available eye cascade classifier was used to detect the eyes, while a custom classifier was trained for the mouth because no existing mouth classifier was available. The researchers trained this classifier using 300 positive mouth images and 2500 negative background images, with 30 iterations. The Cascade Trainer GUI provided by [15] was used for training. A study by [16] demonstrated that fewer than 300 training images can be effective for certain categories, especially when supported by cross-object identification. In this study, image augmentation techniques, including random rotation, flipping, and automatic brightness adjustment, were applied to the training images to enlarge the dataset and improve the classifier's robustness. The classifier was additionally trained using publicly available images of facial cues that met the feature criteria described in Table 1.
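The flip and brightness steps can be sketched as follows. This is a minimal pure-Python illustration on grayscale images stored as lists of rows, assuming that "automatic brightness adjustment" means rescaling intensities toward a target mean; the exact augmentation settings used in the study are not specified. Random rotation is omitted for brevity; in practice it is commonly done with OpenCV's `cv2.getRotationMatrix2D` and `cv2.warpAffine`.

```python
import random


def hflip(img):
    """Mirror a grayscale image (list of rows) left to right."""
    return [row[::-1] for row in img]


def auto_brightness(img, target_mean=128.0):
    """Rescale intensities so the mean approaches target_mean, clamped to 0..255.

    Assumed interpretation of "automatic brightness adjustment"; not the study's exact method.
    """
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    if mean == 0:  # an all-black image cannot be rescaled
        return [row[:] for row in img]
    gain = target_mean / mean
    return [[min(255, max(0, round(p * gain))) for p in row] for row in img]


def augment(img, rng):
    """Randomly mirror the image (p = 0.5), then normalise its brightness."""
    out = hflip(img) if rng.random() < 0.5 else [row[:] for row in img]
    return auto_brightness(out)


rng = random.Random(0)  # seeded for reproducibility
dark_mouth_crop = [[40, 60, 80], [50, 70, 90]]
print(augment(dark_mouth_crop, rng))
```

Horizontal flipping is a safe choice for this task because a mirrored eye or mouth crop is still a valid positive sample.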

Despite this augmentation, the dataset remained relatively small. Furthermore, because the images were sourced from dermatology and facial surgery websites, their diversity and representativeness were limited, which may affect generalization to diverse real-world conditions.

2.2. Preparation of Test Images

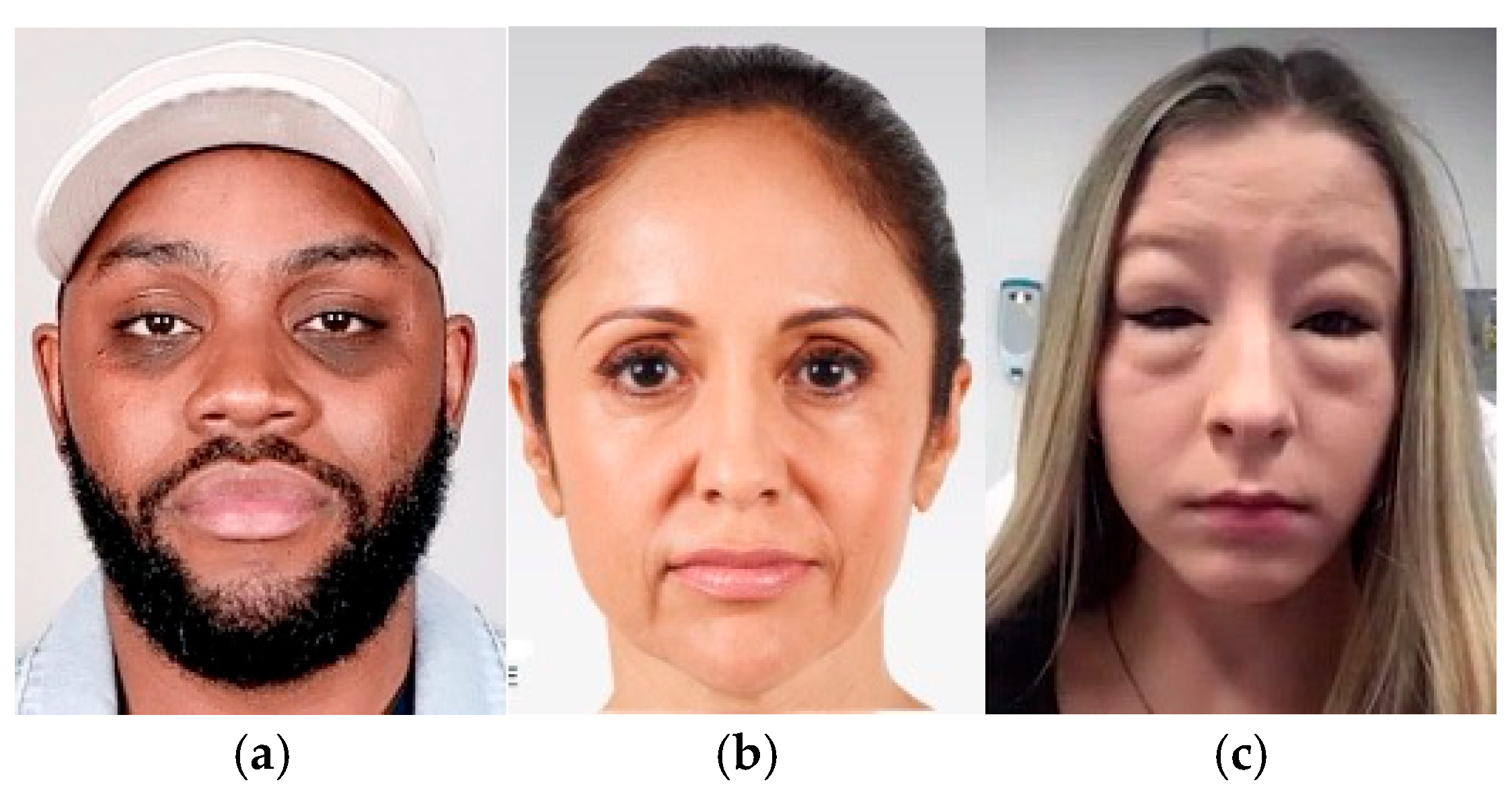

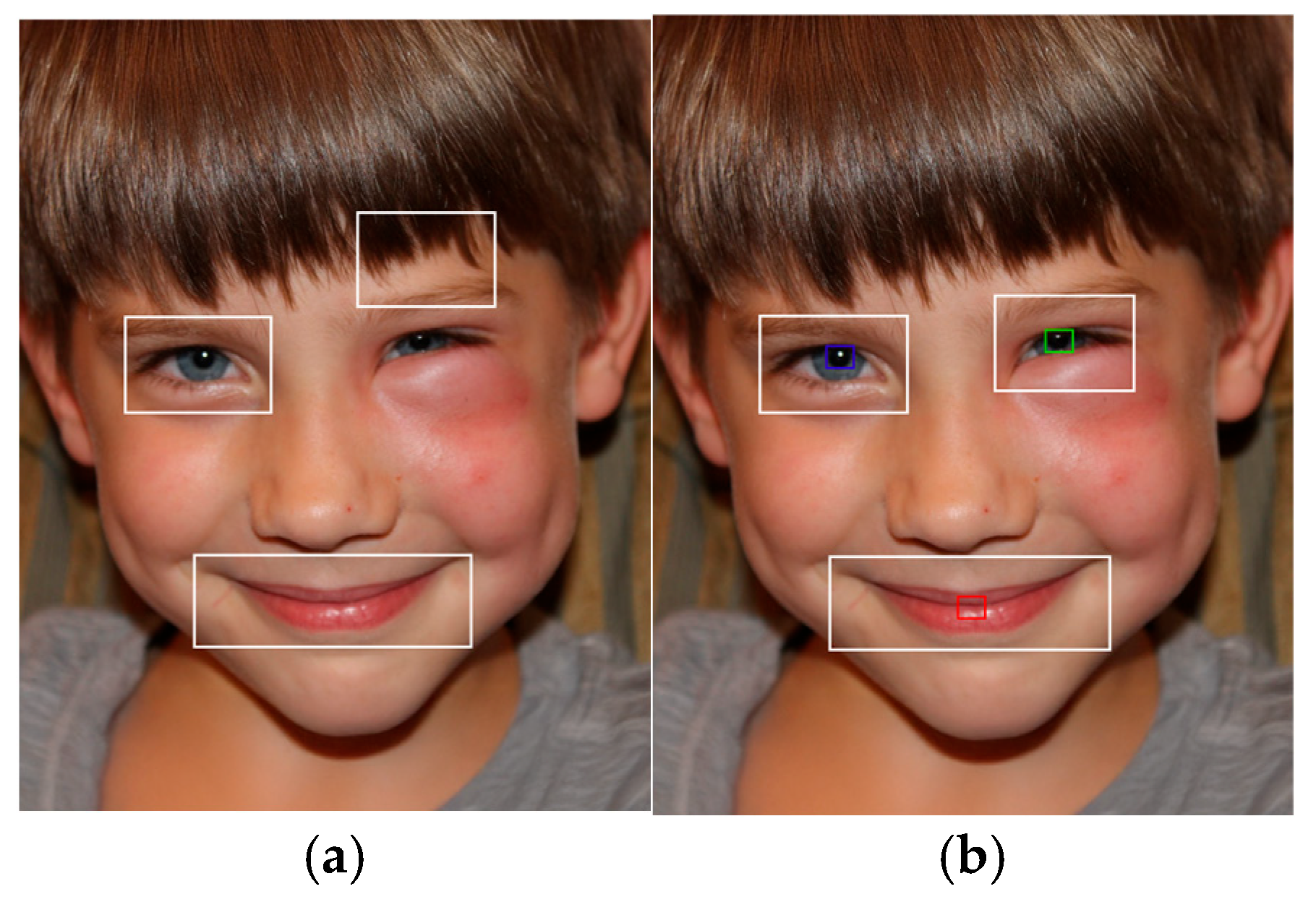

The test images were sourced from various online platforms. Images of dark circles, droopy eyelids, and droopy mouth corners were obtained from dermatology and facial surgery clinic websites. Eye redness images were collected from cases of conjunctivitis, eye infections, and allergies, while swollen eyelid images were sourced from instances of periorbital edema, insect bites, and allergies, as shown in Figure 1. The researchers manually marked coordinates corresponding to the central points of the eyes and mouth, typically the iris and the center of the lips, respectively. These coordinates were then recorded and saved in a CSV file to be read by the system.
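Loading these annotations can be sketched with Python's standard `csv` module. The column layout below (`image`, `left_eye_x`, `left_eye_y`, and so on) is a hypothetical schema for illustration; the study does not specify the structure of its CSV file.

```python
import csv
import io

# Hypothetical schema: one row per image with the marked (x, y) centre of each feature.
FEATURES = ("left_eye", "right_eye", "mouth")


def load_marked_points(csv_file):
    """Map each image filename to a dict of feature name -> (x, y) marked centre point."""
    points = {}
    for row in csv.DictReader(csv_file):
        points[row["image"]] = {
            f: (int(row[f"{f}_x"]), int(row[f"{f}_y"])) for f in FEATURES
        }
    return points


sample = io.StringIO(
    "image,left_eye_x,left_eye_y,right_eye_x,right_eye_y,mouth_x,mouth_y\n"
    "face_001.jpg,125,145,274,149,200,297\n"
)
marked = load_marked_points(sample)
print(marked["face_001.jpg"]["mouth"])  # (200, 297)
```

With a real file, the same function accepts `open(path, newline="")` in place of the in-memory sample.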

2.3. Eye Detection and Correction Pipeline

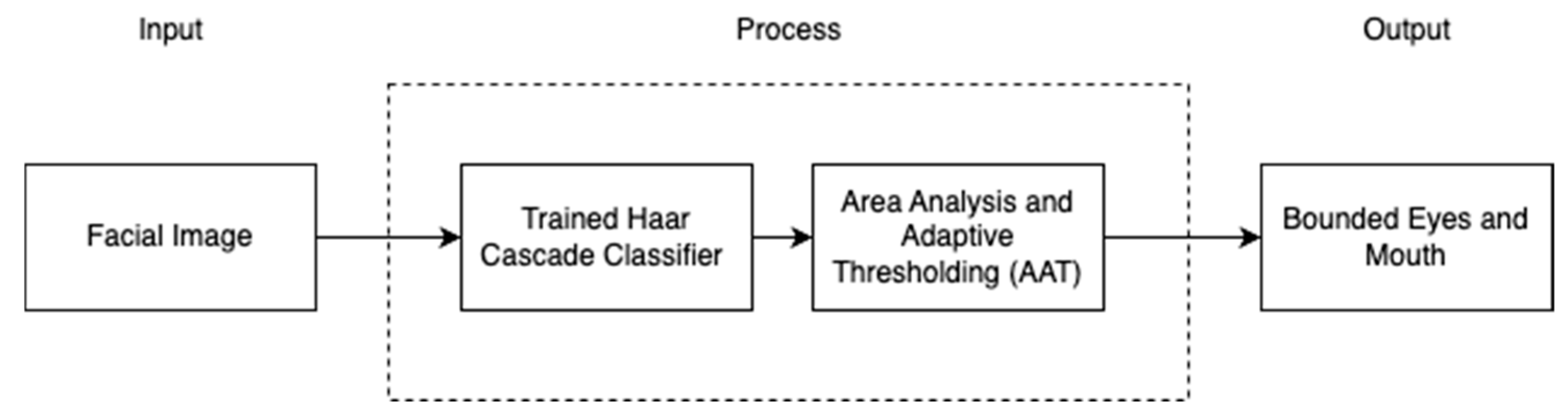

Several methods can be employed for eye extraction, with some of the most common techniques including the Hough Transform, as used in [1]; image segmentation using HSV analysis, as demonstrated in [1]; and Haar cascade classifiers (Figure 2), which are the focus of this study. The researchers selected OpenCV's eye cascade classifier because it was trained on a significantly larger dataset than the data currently available to the researchers.

2.4. Eye Detection and Correction Algorithm

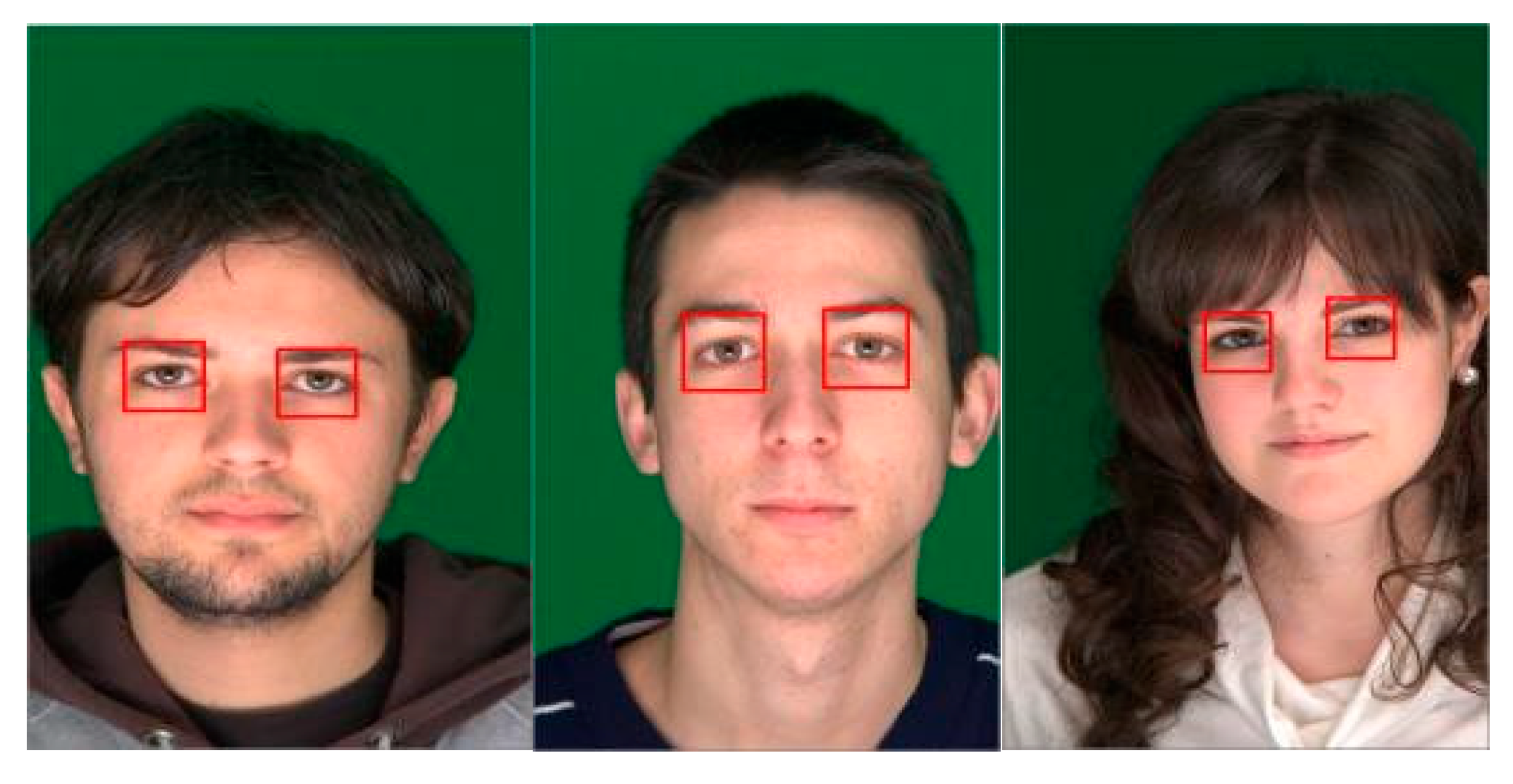

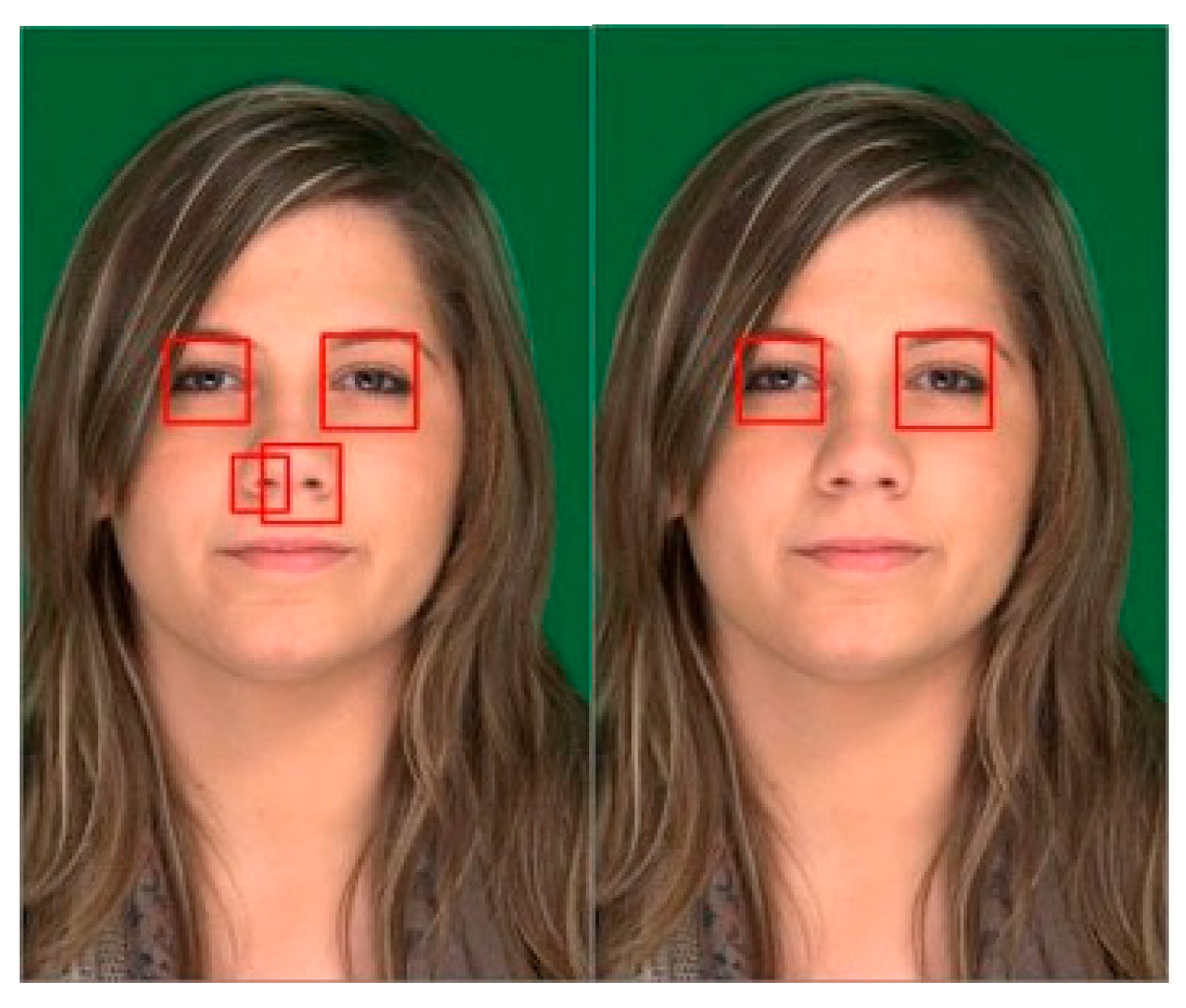

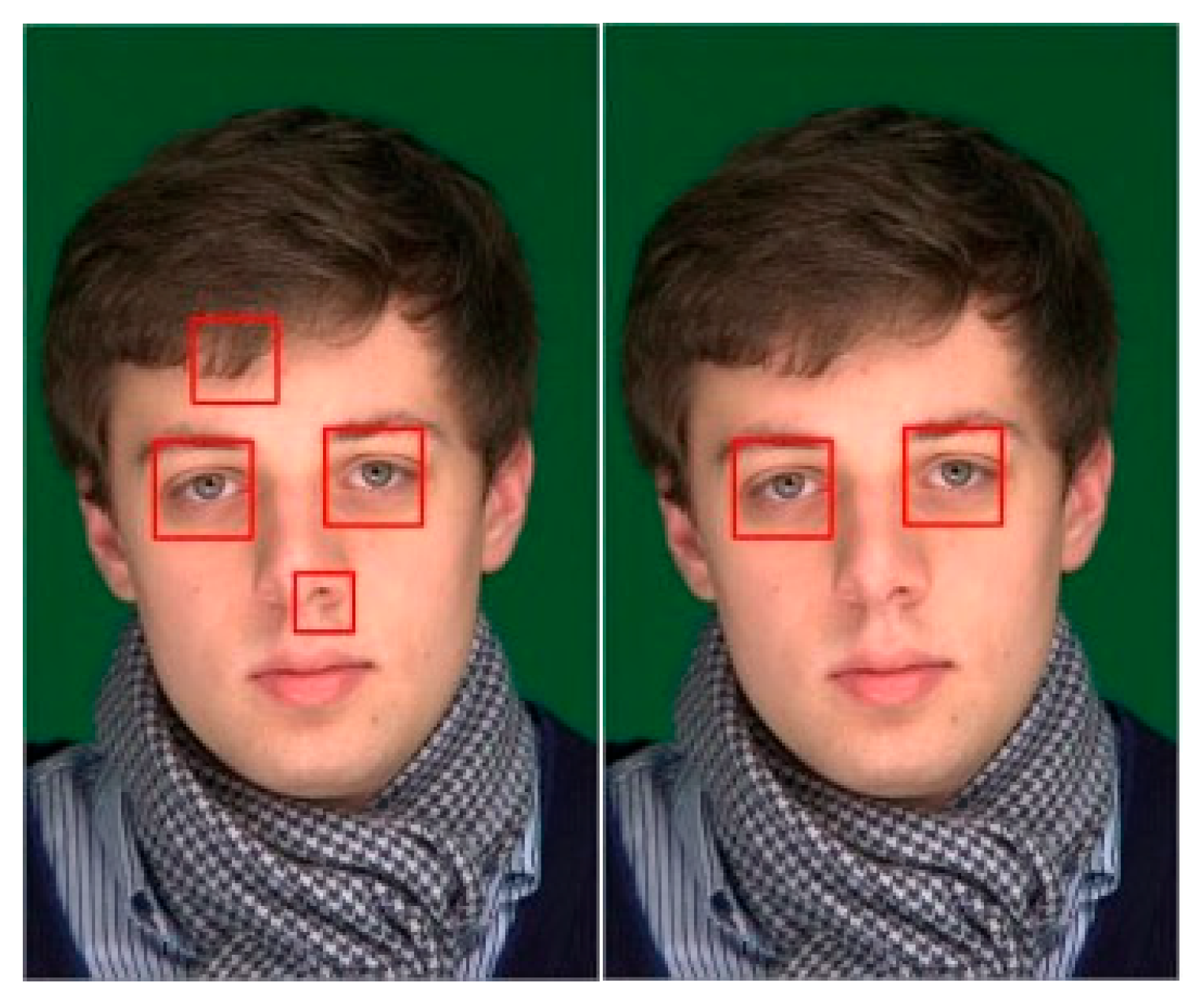

To evaluate its performance, the classifier was tested on 160 sample images from the Siblings dataset [16]; example results are shown in Figure 3 and Figure 4.

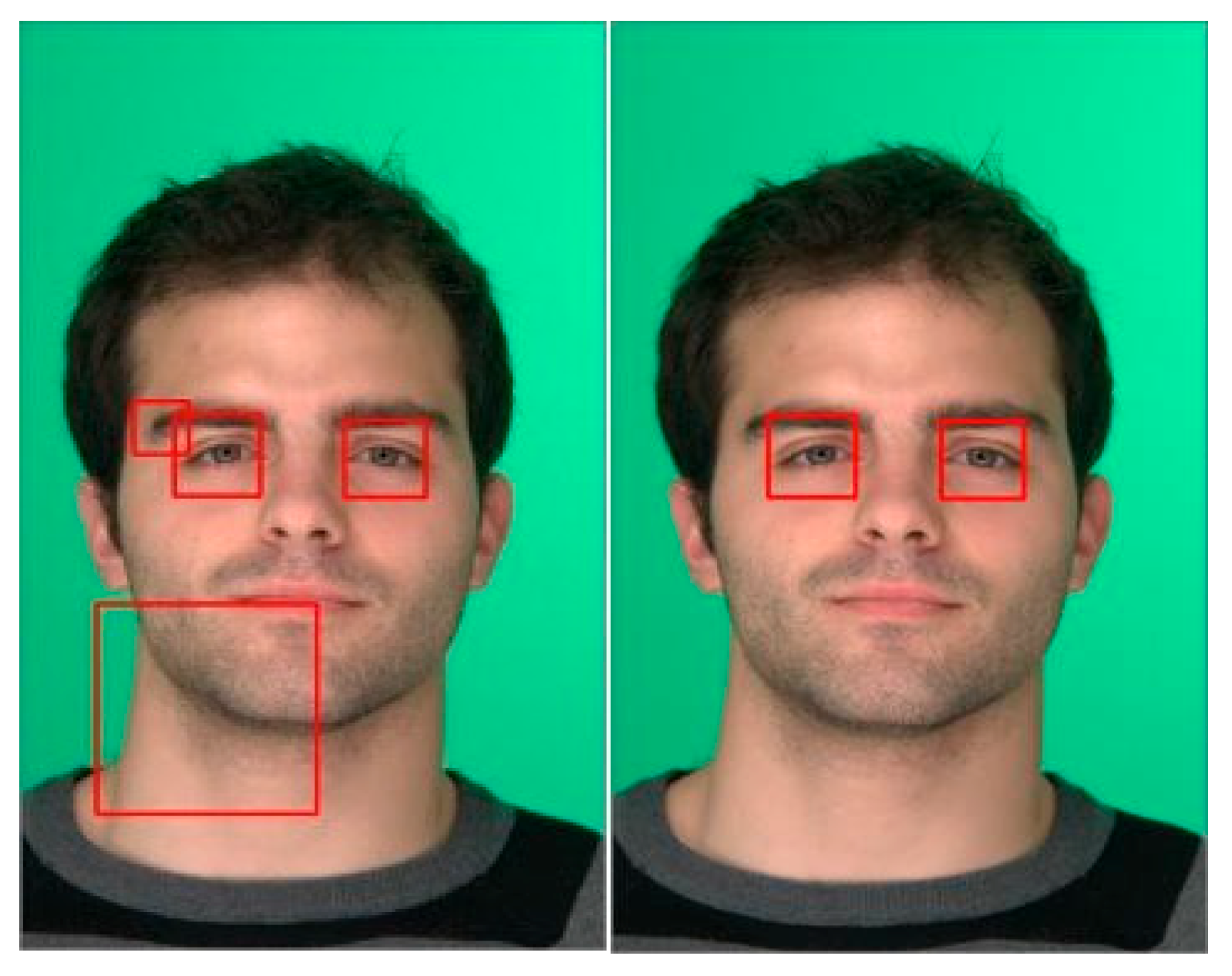

To address the false-positive results seen in Figure 4, the researchers adopted the concept proposed in [13], which relates specific facial features to other features on the face. In this study, the high recall of the eye cascade detection ensured that both eyes were reliably included in the detection results, while the remaining detections were treated as false positives. For a typical human face in an upright position, the two eyes are expected to be symmetrical: similar in size, nearly identical in vertical position, and separated by a noticeable horizontal gap. Because the degree of symmetry varies across individuals, a range of acceptable values was incorporated into the evaluation. The logic of this correction process is illustrated in Algorithm 1.

| Algorithm 1: Compare Detection Pairs |

procedure CompareDetectionPairs(detectionA, detectionB):

Initialize found ← false

if both detections are within LocationThreshold then

if the detection sizes are within SizeThreshold then

if the vertical offset between the detections is within VerticalTolerance then

if the horizontal spacing between the detections is within SpaceThreshold then

found ← true

return found

end procedure |

To determine the two eyes, the Haar cascade detection results are processed in pairs. An outer iterator traverses the detection list, comparing each item with every subsequent item. Each comparison is carried out by the subprocedure shown in Algorithm 1. The traversal continues until a pair satisfying all of the subprocedure's conditions is found or until every pair in the list has been checked. The conditions reflect the typical positioning of facial features. First, the pair is checked to ensure that it lies in the upper region of the face, using a preset location threshold. This step excludes objects in the lower region (such as the mouth) that share a similar shape with the eyes. Because the location threshold is defined relative to the image height, candidate eyes detected in the lower part of the image are disregarded. Next, the sizes of the two detections are compared to check whether they fall within a specified range when scaled, ensuring that the detected eyes are of roughly equal size. Finally, the vertical tolerance and horizontal spacing between the two detections are evaluated, both against preset values. These last two conditions confirm the symmetry between the eyes by ensuring that their vertical and horizontal displacements are consistent with each other.
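A runnable Python version of the pair search and the Algorithm 1 check is sketched below. Detections are assumed to be `(x, y, w, h)` boxes, the format returned by OpenCV's `CascadeClassifier.detectMultiScale`, and the threshold constants are hypothetical placeholders expressed as fractions of the image size, not the values tuned in this study.

```python
from itertools import combinations

# Hypothetical thresholds (fractions of image size); the study tuned its own values.
LOCATION_THRESHOLD = 0.6       # both eye centres must lie in the top 60% of the image
SIZE_THRESHOLD = 0.3           # max relative difference between the two box widths
VERTICAL_TOLERANCE = 0.1       # max vertical offset of centres, fraction of image height
SPACE_THRESHOLD = (0.15, 0.6)  # allowed horizontal gap of centres, fraction of image width


def compare_detection_pair(a, b, img_w, img_h):
    """Return True if boxes a and b (x, y, w, h) plausibly form a left/right eye pair."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    a_cx, a_cy = ax + aw / 2, ay + ah / 2
    b_cx, b_cy = bx + bw / 2, by + bh / 2
    # 1. Location: both centres in the upper part of the image.
    if max(a_cy, b_cy) > LOCATION_THRESHOLD * img_h:
        return False
    # 2. Size: roughly equal widths.
    if abs(aw - bw) > SIZE_THRESHOLD * max(aw, bw):
        return False
    # 3. Vertical tolerance: centres at nearly the same height.
    if abs(a_cy - b_cy) > VERTICAL_TOLERANCE * img_h:
        return False
    # 4. Spacing: a noticeable, but not excessive, horizontal gap.
    gap = abs(a_cx - b_cx) / img_w
    lo, hi = SPACE_THRESHOLD
    return lo <= gap <= hi


def find_eye_pair(detections, img_w, img_h):
    """Compare each detection with every later one; return the first valid pair, left eye first."""
    for a, b in combinations(detections, 2):
        if compare_detection_pair(a, b, img_w, img_h):
            return tuple(sorted((a, b)))
    return None


# Usage: a false positive near the mouth, followed by two true eye detections.
detections = [(150, 300, 60, 30), (100, 120, 50, 50), (250, 125, 48, 48)]
print(find_eye_pair(detections, 400, 400))  # ((100, 120, 50, 50), (250, 125, 48, 48))
```

`itertools.combinations` yields pairs in the same order as the nested traversal described in the text, so the first qualifying pair is returned.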

In implementing the eye extraction correction algorithm, several threshold values were tested, yielding varying results, and the combination of values that provided the best accuracy was selected. The resulting detections, both before and after the correction algorithm was applied, are shown in Figure 5, Figure 6, and Figure 7.
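The threshold selection described above can be framed as a search over candidate values. The sketch below assumes an exhaustive grid search; `evaluate` is a placeholder for an accuracy measure computed on the annotated images (for example, the fraction of images whose marked eye centres fall inside the selected boxes), and the toy scoring function exists only to make the example runnable.

```python
from itertools import product


def grid_search(evaluate, grid):
    """Try every combination of candidate threshold values and keep the best-scoring one.

    evaluate: callable taking a dict of thresholds and returning an accuracy score.
    grid: dict mapping each threshold name to a list of candidate values.
    """
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:  # strict '>' keeps the first of several tied combinations
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(p):
    """Stand-in accuracy that peaks at size = 0.3, vertical = 0.1 (illustration only)."""
    return 1 - abs(p["size"] - 0.3) - abs(p["vertical"] - 0.1)


grid = {"size": [0.2, 0.3, 0.4], "vertical": [0.05, 0.1, 0.2]}
print(grid_search(toy_score, grid))  # ({'size': 0.3, 'vertical': 0.1}, 1.0)
```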

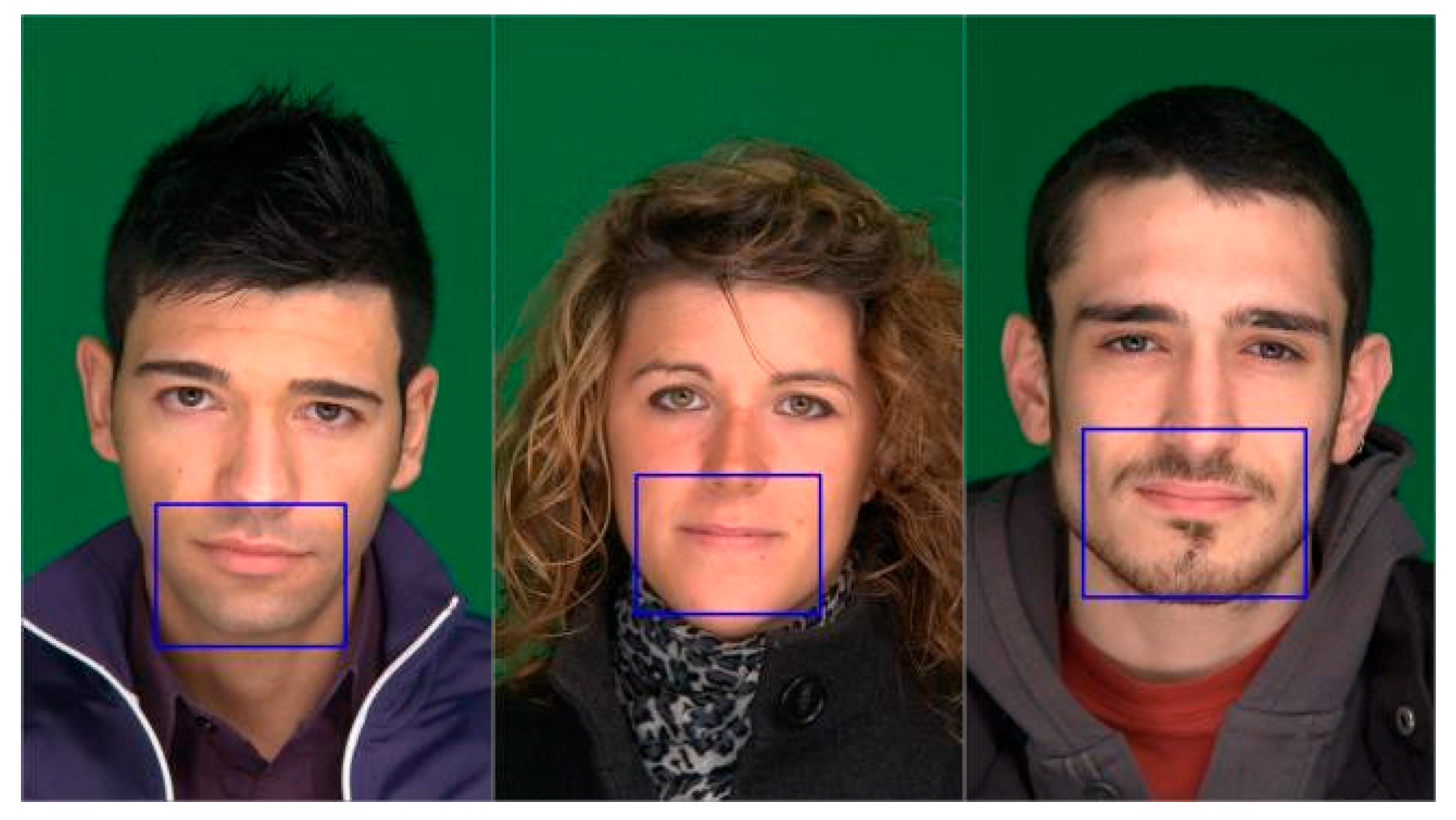

2.5. Mouth Detection Optimization

The trained mouth cascade classifier was tested, and its recall was found to be lower than that of OpenCV's eye detector. This discrepancy is likely due to the limited training data, as only 300 positive images were used. Despite this limitation, a substantial number of true positives were detected, likely aided by image augmentation and the high iteration count. Examples of these results are shown in Figure 8. In a normal, upright human face, the mouth is expected to lie a certain distance below the eyes, with its horizontal center aligned with the midpoint between the two eyes. Based on these considerations, the logic for selecting the true-positive mouth is illustrated in Algorithm 2.

| Algorithm 2: Checking Mouth Considerations |

procedure DetectMouthBasedOnEyeLocation(detection, eyeDetails):

mouthDetected ← false

for each eye in eyeDetails:

eyeX, eyeY, eyeW, eyeH ← eye

if detection is below eyeY + eyeH and within threshold:

mouthDetected ← true

return mouthDetected

end procedure |
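A runnable sketch of the mouth check follows. It implements the considerations stated in the text, namely that the mouth lies a certain distance below the eyes with its horizontal center near the midpoint between them. Unlike Algorithm 2, which loops over each eye, this sketch uses the eye-pair midpoint. The tolerance constants are hypothetical and are expressed relative to the inter-eye distance so that they scale with face size.

```python
# Hypothetical tolerances, in units of the distance between the two eye centres.
MIN_DROP, MAX_DROP = 0.6, 1.6  # allowed distance of the mouth centre below the eye line
MAX_H_OFFSET = 0.35            # allowed horizontal offset from the midpoint between the eyes


def centre(box):
    """Centre (cx, cy) of an (x, y, w, h) box."""
    x, y, w, h = box
    return x + w / 2, y + h / 2


def select_mouth(mouth_detections, left_eye, right_eye):
    """Return the first mouth detection consistent with the eye pair, or None."""
    (lx, ly), (rx, ry) = centre(left_eye), centre(right_eye)
    mid_x, mid_y = (lx + rx) / 2, (ly + ry) / 2
    eye_dist = abs(rx - lx)
    if eye_dist == 0:
        return None
    # The mouth box must start below the bottom edge of both eye boxes.
    eyes_bottom = max(left_eye[1] + left_eye[3], right_eye[1] + right_eye[3])
    for box in mouth_detections:
        if box[1] < eyes_bottom:
            continue
        mx, my = centre(box)
        drop = (my - mid_y) / eye_dist
        offset = abs(mx - mid_x) / eye_dist
        if MIN_DROP <= drop <= MAX_DROP and offset <= MAX_H_OFFSET:
            return box
    return None


candidates = [(60, 110, 40, 20), (40, 260, 60, 30), (165, 280, 70, 35)]
print(select_mouth(candidates, (100, 120, 50, 50), (250, 125, 48, 48)))  # (165, 280, 70, 35)
```

The first candidate is rejected because it sits above the eyes, and the second because it is too far from the facial midline.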

2.6. Estimation of Eye and Mouth Areas

Separate detections of the eyes and mouth were achieved with acceptable accuracy. However, when either feature is not detected, its location is estimated from the other detected facial features: if the eyes are detected but the mouth is not, the mouth area is estimated, and vice versa. This contingency step increases the system's accuracy, especially when one of the features is unrecognizable. Compared with the Haar cascade detections, the estimated areas are intentionally larger to account for varying facial conditions, since this contingency is often triggered under unusual circumstances. The estimations are based on the expectations from [13] mentioned previously, assuming normal human facial anatomy with symmetrical features. Note that these estimations still depend on the accuracy of the initial Haar cascade detection, as a false detection of one feature will lead to an inaccurate estimate of the other.
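The fallback estimation can be sketched as follows. The facial proportions and the enlargement margin are hypothetical values chosen for illustration, not the study's parameters; they encode the same assumptions stated above (an upright, roughly symmetrical face), and the margin reflects the deliberately larger estimated areas.

```python
# Hypothetical proportions of an upright face, in units of the inter-eye distance d.
MOUTH_DROP = 1.1             # mouth centre lies about 1.1 d below the eye line
MOUTH_W, MOUTH_H = 1.2, 0.6  # typical mouth box size
EYE_W, EYE_H = 0.6, 0.45     # typical eye box size
MARGIN = 1.25                # estimated boxes are enlarged because they are a fallback


def box_around(cx, cy, w, h, margin=MARGIN):
    """Integer (x, y, w, h) box of size (w, h) * margin centred on (cx, cy)."""
    w, h = w * margin, h * margin
    return round(cx - w / 2), round(cy - h / 2), round(w), round(h)


def estimate_mouth(left_eye_c, right_eye_c):
    """Estimate a mouth box from the two eye centres."""
    (lx, ly), (rx, ry) = left_eye_c, right_eye_c
    d = abs(rx - lx)
    cx, cy = (lx + rx) / 2, (ly + ry) / 2 + MOUTH_DROP * d
    return box_around(cx, cy, MOUTH_W * d, MOUTH_H * d)


def estimate_eyes(mouth_box):
    """Estimate left and right eye boxes from a detected mouth box."""
    x, y, w, h = mouth_box
    d = w / MOUTH_W  # assumes the detected mouth width is about MOUTH_W * d
    cx, cy = x + w / 2, y + h / 2
    eye_y = cy - MOUTH_DROP * d
    left = box_around(cx - d / 2, eye_y, EYE_W * d, EYE_H * d)
    right = box_around(cx + d / 2, eye_y, EYE_W * d, EYE_H * d)
    return left, right


print(estimate_mouth((100, 100), (200, 100)))
print(estimate_eyes((90, 195, 120, 30)))
```

As the text notes, any error in the detected feature propagates directly into the estimated one, since both functions trust their input.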

2.7. Mean IoU Computation

To quantitatively assess the accuracy of eye and mouth region localization, the Mean Intersection over Union (IoU) was used as the primary evaluation metric. IoU is widely used in object detection tasks to measure the overlap between the predicted bounding box $B_p$ and the ground truth bounding box $B_{gt}$. It is calculated as the ratio of the area of their intersection to the area of their union, as shown in Equation (1):

$$\mathrm{IoU} = \frac{\lvert B_p \cap B_{gt} \rvert}{\lvert B_p \cup B_{gt} \rvert} \qquad (1)$$

For each image sample, the IoU was computed and then averaged across all test samples to obtain the Mean IoU for each facial region. This metric indicates how closely the predicted areas align with the manually annotated ground truth data. Higher Mean IoU values indicate better localization, which is critical for applications that rely on accurate identification of the eyes and mouth. This method aligns with standard practices for evaluating object detection algorithms, as discussed in [19,20].
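Equation (1) and the averaging step can be sketched in Python for axis-aligned boxes in OpenCV's `(x, y, w, h)` format. How the study scored images in which a region was not detected is not stated; this sketch simply averages over the supplied box pairs.

```python
def iou(box_p, box_gt):
    """Intersection over Union of two (x, y, w, h) boxes, following Equation (1)."""
    px, py, pw, ph = box_p
    gx, gy, gw, gh = box_gt
    # Width and height of the overlap rectangle (zero if the boxes do not intersect).
    iw = max(0, min(px + pw, gx + gw) - max(px, gx))
    ih = max(0, min(py + ph, gy + gh) - max(py, gy))
    inter = iw * ih
    union = pw * ph + gw * gh - inter
    return inter / union if union > 0 else 0.0


def mean_iou(pairs):
    """Average IoU over (predicted, ground-truth) box pairs for one facial region."""
    scores = [iou(p, g) for p, g in pairs]
    return sum(scores) / len(scores) if scores else 0.0


print(iou((0, 0, 10, 10), (5, 0, 10, 10)))  # 0.3333333333333333
print(mean_iou([((0, 0, 10, 10), (0, 0, 10, 10)), ((0, 0, 10, 10), (20, 20, 5, 5))]))  # 0.5
```

In the first example the boxes overlap on a 5 x 10 strip, giving 50 / (100 + 100 - 50) = 1/3.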

3. Results and Discussion

The Haar cascade-based facial feature ROI extraction was tested on images containing various facial cues. A total of 180 images were collected, divided into six groups, with 30 images in each group representing one of the following facial cues: dark circles, droopy eyelids, droopy mouth corners, eye redness, swollen eyelids, and a control group with no facial cues.

3.1. Feature Extraction Testing and Acceptance

Because the extracted facial features are intended as input to a neural network, the extraction results are categorized into accepted and rejected images. An image is accepted if all three features (i.e., the left eye, the right eye, and the mouth) are detected and each manually marked central point falls within the corresponding detected region. Additionally, the researchers assessed the effectiveness of the estimation step by conducting a separate test without it.
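The acceptance rule can be expressed as a small predicate. The dictionary keys and the `(x, y, w, h)` box format are illustrative assumptions, and points lying exactly on a box edge are counted as inside.

```python
FEATURES = ("left_eye", "right_eye", "mouth")


def contains(box, point):
    """True if the (x, y) point lies inside or on the edge of the (x, y, w, h) box."""
    x, y, w, h = box
    px, py = point
    return x <= px <= x + w and y <= py <= y + h


def is_accepted(detected, marked):
    """Accept an image only if every feature was found and its marked centre lies inside it.

    detected: dict feature -> (x, y, w, h), or None if the feature was not found.
    marked: dict feature -> (x, y) manually marked centre point.
    """
    return all(
        detected.get(f) is not None and contains(detected[f], marked[f]) for f in FEATURES
    )


detected = {"left_eye": (100, 120, 50, 50), "right_eye": (250, 125, 48, 48), "mouth": (165, 280, 70, 35)}
marked = {"left_eye": (125, 145), "right_eye": (274, 149), "mouth": (200, 297)}
print(is_accepted(detected, marked))  # True
```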

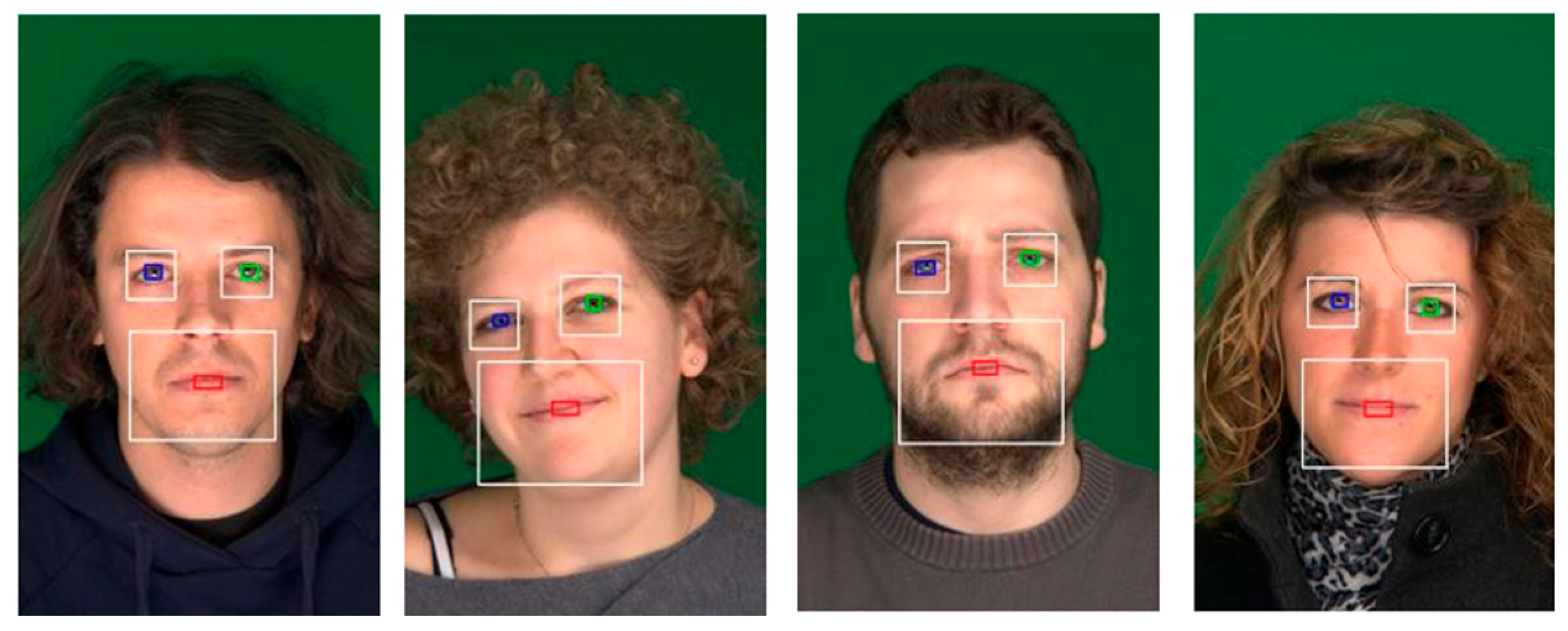

Figure 9 illustrates an example of detection, where the marked points are shaded, and the detected regions are outlined in white.

Table 2 presents the results of the system without the estimation, while Table 3 includes the results with the estimation.

Table 2 shows the extraction of eye and mouth features without thresholding or estimation, as also illustrated in Figure 9. The highest detection rate, 80%, was observed for individuals with droopy mouth corners, while the lowest, 40%, was observed for swollen eyelids. Out of the 180 test images, 59 were rejected because the facial features were not correctly detected.
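Assuming the rates are computed per group of 30 images, the reported percentages and the rejection count correspond to the following counts, the last being the overall acceptance rate without estimation:

$$\frac{24}{30} = 80\%, \qquad \frac{12}{30} = 40\%, \qquad \frac{180 - 59}{180} = \frac{121}{180} \approx 67.2\%$$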

Table 3 shows the feature extraction of the eyes and mouth after applying estimation and thresholding. With these modifications, the detection rate for individuals with droopy mouth corners increased to 86.67%. Notably, the detection rate for droopy mouth corners reached 100% after applying both estimation and thresholding. These improvements demonstrate the effectiveness of the estimation and thresholding techniques in enhancing detection accuracy.

3.2. Performance of Haar Cascade with AAT Technique

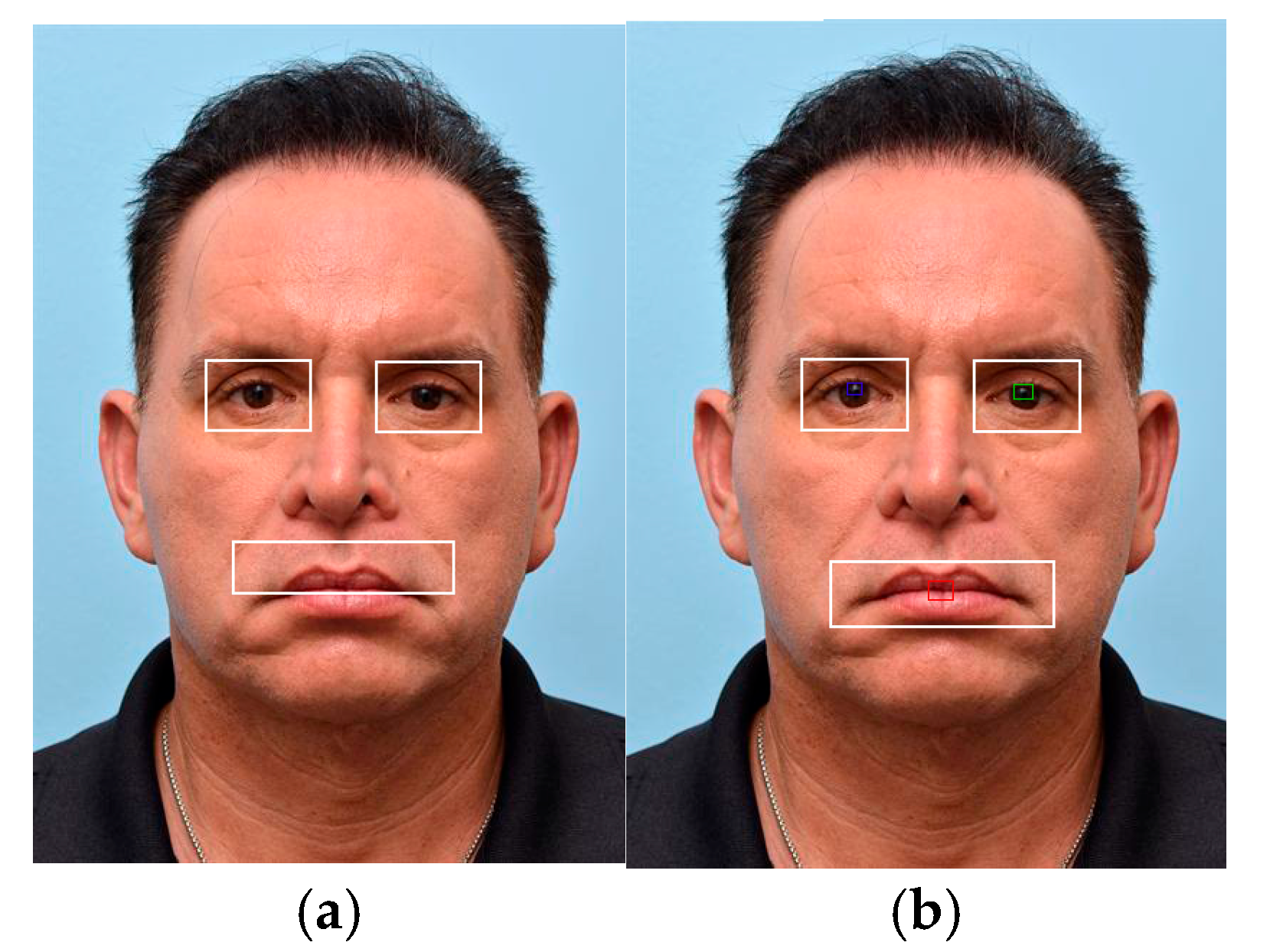

The results show how the Haar cascade classifier, combined with the Adaptive Analysis Technique (AAT), detects facial cues in digital images. AAT improves detection accuracy by focusing on the important parts of the face. As the trained model processes an image, it marks facial areas based on the cues it has learned. Example results are shown in Figure 10 and Figure 11.

To evaluate the accuracy of the Haar cascade classifier with the Adaptive Analysis Technique (AAT), the Mean Intersection over Union (IoU) was computed based on the overlap between the predicted and ground truth bounding boxes. As shown in Table 4, the inclusion of AAT led to higher IoU scores across most facial cues, indicating improved localization and more reliable detection performance.

The facial regions associated with each cue (e.g., eye redness and droopy eyelids) were manually labeled with bounding boxes in a subset of 180 images; these labels served as the reference regions for computing the Mean Intersection over Union (IoU) of the model's predictions.

Overall, the results indicate that integrating the Adaptive Analysis Technique (AAT) with the Haar cascade classifier substantially enhances the detection of the eyes and mouth in faces with known facial cues by improving both accuracy and localization. The quantitative metrics, including acceptance rates and Mean IoU values, support the effectiveness of this approach in identifying key regions of interest. These findings highlight the potential of lightweight computer vision techniques for practical facial feature analysis, paving the way for further improvements and broader applications.