Enhancing the Precision of Eye Detection with EEG-Based Machine Learning Models †

Abstract

1. Introduction

2. Literature Review

3. Methodology

3.1. Dataset Features

3.2. Tool

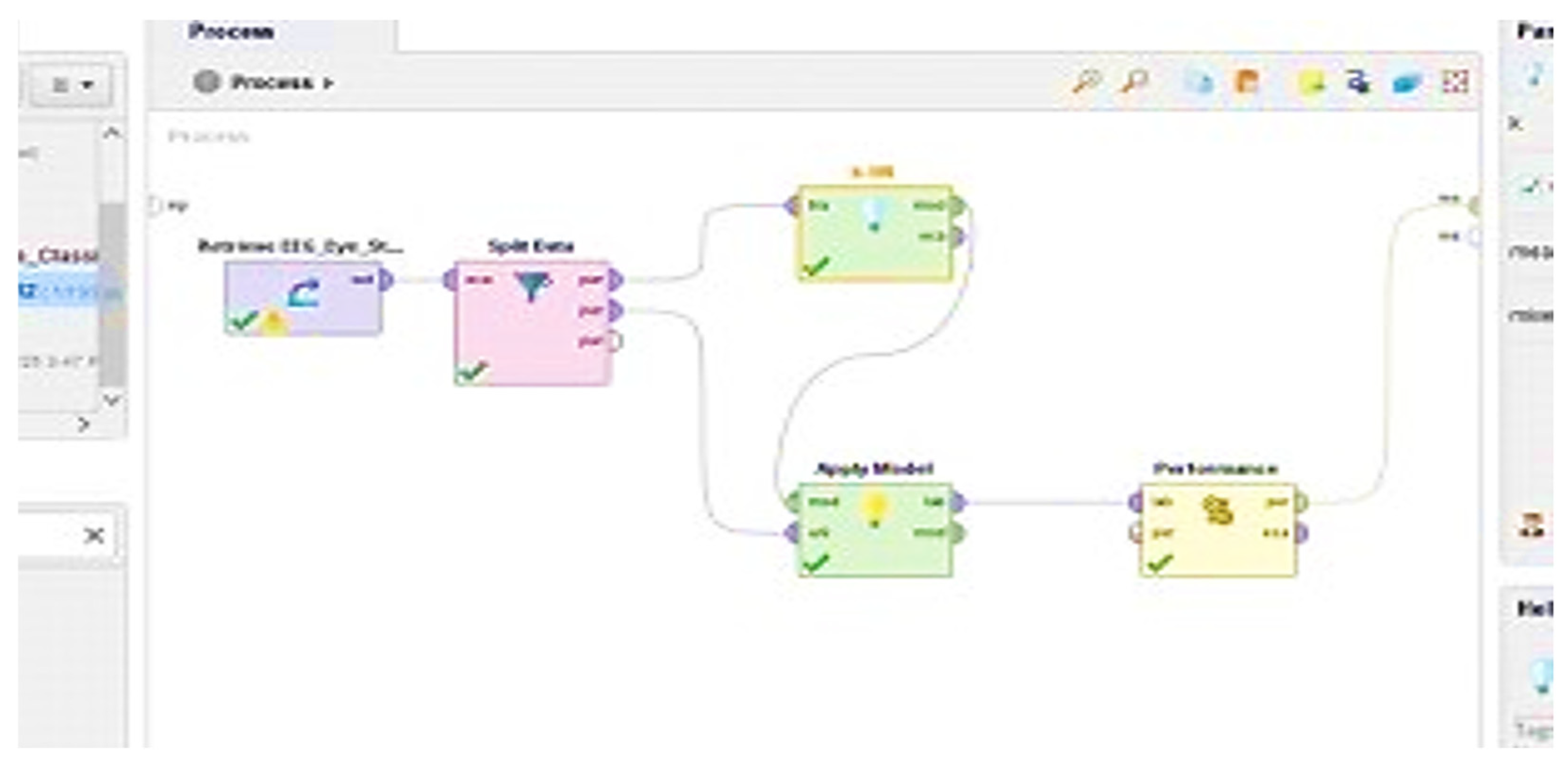

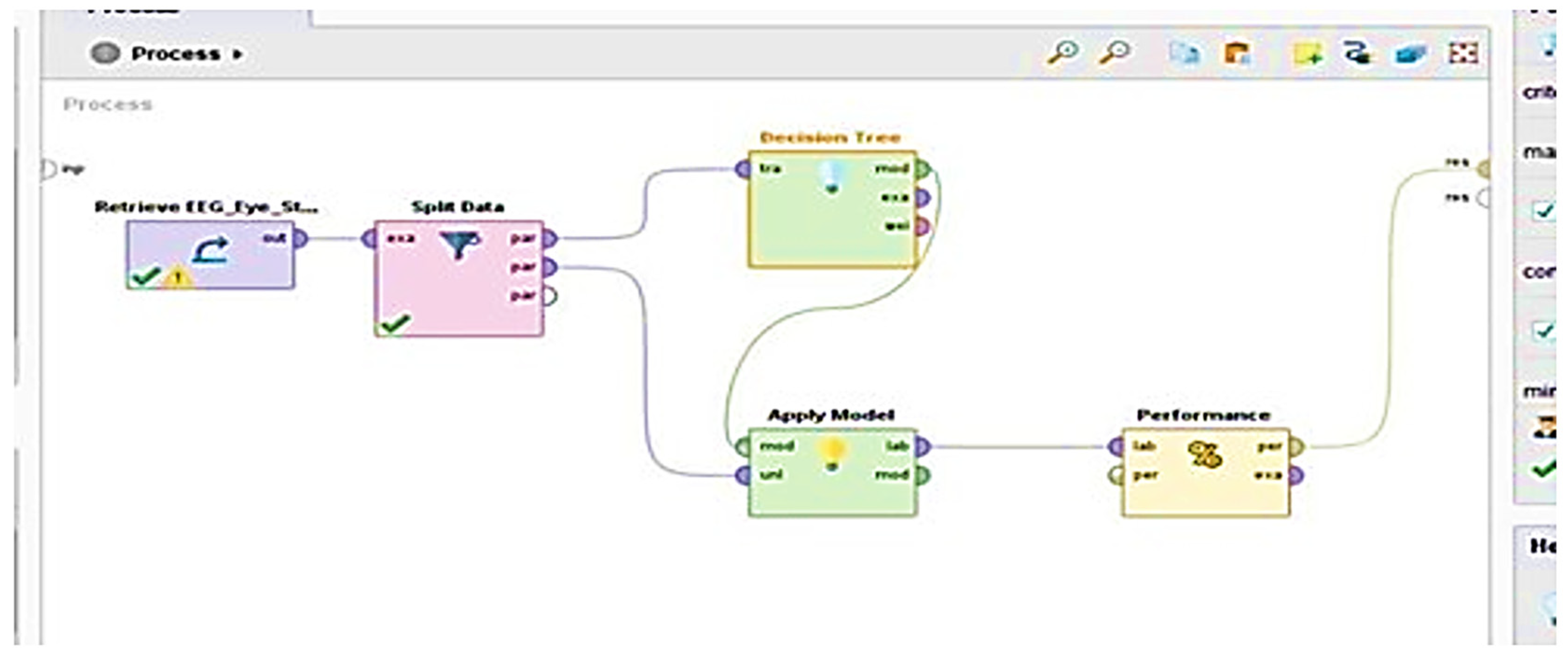

3.3. Predictive Analytic Workflow

3.4. K-NN Model
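Section 3.4 gives no details of the model's settings (value of k, distance metric, feature scaling). The following is therefore only a generic sketch of k-nearest-neighbour classification. It assumes each EEG sample is a numeric feature vector and the eye state is an integer label; the codes 0 and 1 are hypothetical. The function name `knn_predict`, Euclidean distance, and k = 3 are illustrative choices, not the authors' configuration.

```python
import math
from collections import Counter
from typing import Sequence


def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[int],
                query: Sequence[float], k: int = 3) -> int:
    """Predict the label of `query` by majority vote among its k nearest
    training samples under Euclidean distance (hypothetical helper)."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training samples")
    # Distance from the query to every training vector, paired with its label.
    distances = [(math.dist(x, query), label) for x, label in zip(train_X, train_y)]
    # Keep the k closest samples (sorted by distance only).
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote; on a tie, Counter keeps first-seen order, so the label
    # whose first occurrence is closest to the query wins.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy 2-D feature vectors standing in for EEG samples (illustrative only).
    train_X = [[0.0, 0.0], [0.1, 0.2], [1.0, 1.0], [0.9, 1.1]]
    train_y = [0, 0, 1, 1]
    print(knn_predict(train_X, train_y, [0.05, 0.1], k=3))  # prints 0
```

Because k-NN compares raw distances, channels with larger amplitude ranges dominate the vote unless the features are scaled first; whether the authors normalised their EEG features is not stated here.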

3.5. Deep Learning

3.6. Decision Tree
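Section 3.6 does not say which split criterion the tree learner used. The following is only a generic sketch of a CART-style split search: it computes the Gini impurity of the labels and finds the threshold on a single numeric feature (for example, one EEG channel) that minimises the weighted impurity of the two child nodes. The names `gini` and `best_threshold` are hypothetical. This is not the authors' implementation, and their criterion may have been Gini, entropy, or something else.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def gini(labels: Sequence[int]) -> float:
    """Gini impurity 1 - sum_c p_c^2 of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values: Sequence[float],
                   labels: Sequence[int]) -> Tuple[Optional[float], float]:
    """Return (threshold, weighted_gini) for the split `value <= threshold`
    that minimises the weighted Gini impurity of the two children.
    Returns (None, parent_impurity) if no split improves on the parent."""
    n = len(values)
    best_t, best_score = None, gini(labels)  # baseline: do not split
    # Candidate thresholds: midpoints between consecutive distinct sorted values.
    distinct = sorted(set(values))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        # Child impurities weighted by the fraction of samples in each child.
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


if __name__ == "__main__":
    # One illustrative feature column and its (hypothetical) eye-state labels.
    print(best_threshold([1.0, 2.0, 3.0, 4.0], [0, 0, 1, 1]))  # prints (2.5, 0.0)
```

A full tree applies this search to every feature at every node and recurses on the two children until a stopping rule (depth, minimum samples, or zero impurity) is met.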

3.7. Random Forest

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Algorithm | Accuracy |
|---|---|
| K-NN | 96.08% |
| Deep Learning | 55.5% |
| Decision Tree | 55.56% |
| Random Forest | 56.72% |
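The table reports accuracy without defining it. Assuming the standard definition (the percentage of test samples whose predicted eye state equals the true eye state), it can be computed as in the sketch below. The function name `accuracy` and the example labels are illustrative, and the train/test split behind the reported figures is not described here.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Percentage of predictions that exactly match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    # Count positions where the predicted label equals the true label.
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)


if __name__ == "__main__":
    # Hypothetical labels: 3 of 4 predictions are correct.
    print(accuracy([0, 1, 1, 0], [0, 1, 0, 0]))  # prints 75.0
```

Accuracy alone does not reveal class imbalance; if one eye state dominates the test set, a classifier that always predicts that state scores its share of the data.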

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ahmad, M.; Ali, T.M.; Arianti, N.D. Enhancing the Precision of Eye Detection with EEG-Based Machine Learning Models. Eng. Proc. 2025, 107, 128. https://doi.org/10.3390/engproc2025107128


Ahmad, Masroor, Tahir Muhammad Ali, and Nunik Destria Arianti. 2025. "Enhancing the Precision of Eye Detection with EEG-Based Machine Learning Models." Engineering Proceedings 107, no. 1: 128. https://doi.org/10.3390/engproc2025107128
