Evaluating Low-Code Development Platforms: A MULTIMOORA Approach

Abstract

1. Introduction

2. Literature Review

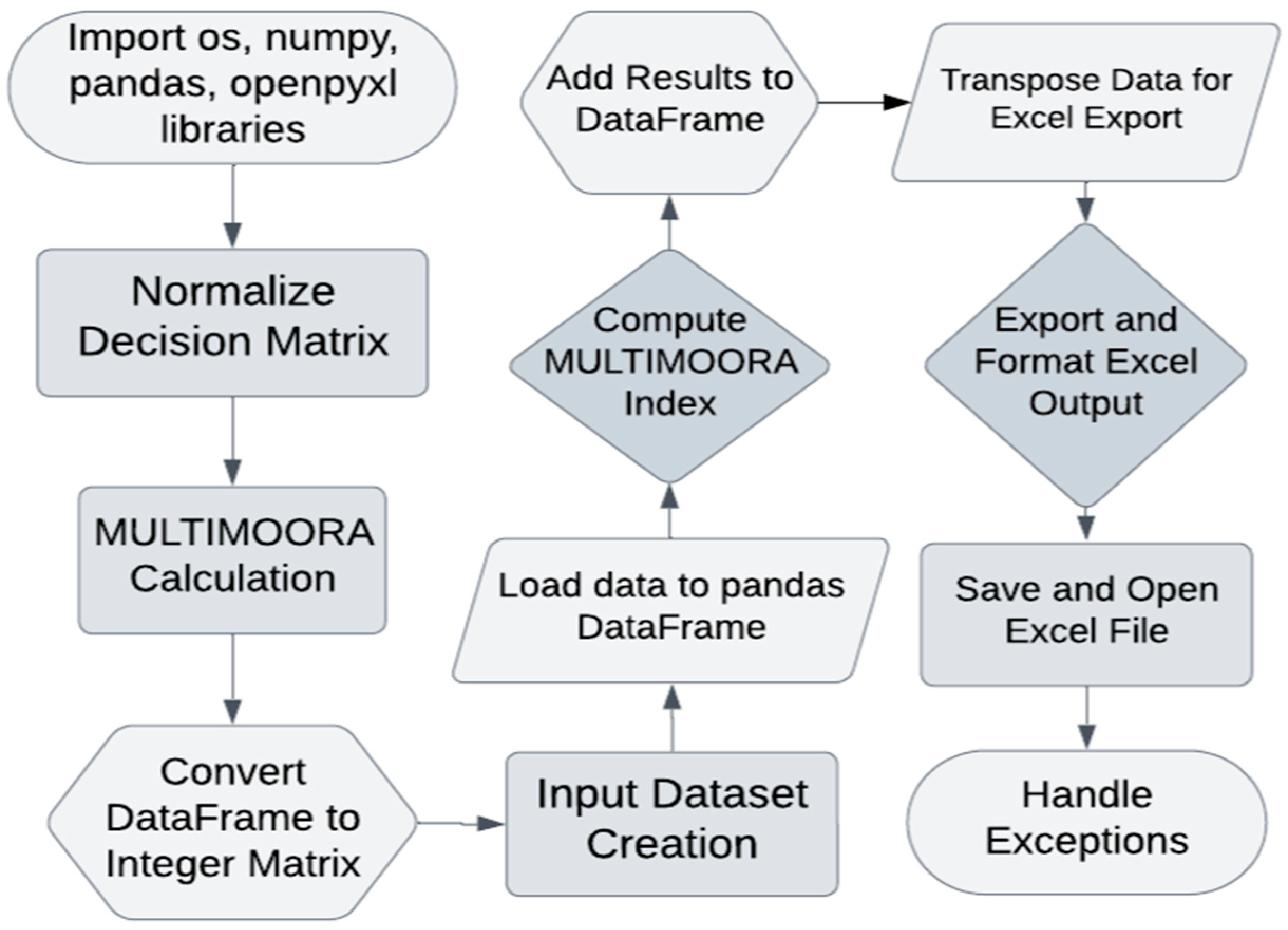

3. Materials and Methods

3.1. MULTIMOORA Framework

- $x_{ij}^{*}$ is the normalized score of alternative $i$ with respect to criterion $j$;

- $x_{ij}$ is the evaluation of the effectiveness of alternative $i$ with respect to criterion $j$;

- $m$ is the total number of possible alternatives.

- $y_i$ is the aggregated performance score of alternative $i$;

- $w_j$ is the weight of criterion $j$;

- $n$ is the total number of criteria.
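
The quantities defined above match the weighted ratio system of MOORA as it is commonly stated: each raw score is divided by the Euclidean norm of its criterion column, and the aggregated score of an alternative is the weighted sum of its normalized scores. The sketch below illustrates that calculation under stated assumptions: every criterion is treated as a benefit (larger is better), the function name `ratio_system` is hypothetical, and the input uses only two criteria (Graphical User Interface and Kinds of Supported Applications) with counts and weights copied from the tables later in this document. It is not intended to reproduce the MULTIMOORA index reported by the authors.

```python
import math

def ratio_system(matrix, weights):
    """Weighted ratio-system scores, treating every criterion as a benefit.

    matrix[i][j]: raw score of alternative i on criterion j.
    weights[j]:   weight of criterion j.
    Assumes every criterion column has at least one nonzero score.
    """
    n = len(weights)
    # Euclidean norm of each criterion column across all alternatives.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    # Vector normalization: each score divided by its column norm.
    normalized = [[row[j] / norms[j] for j in range(n)] for row in matrix]
    # Aggregated score: weighted sum of normalized scores per alternative.
    scores = [sum(w * x for w, x in zip(weights, row)) for row in normalized]
    return normalized, scores

# Counts for two criteria, copied from the platform comparison table.
platforms = ["Kissflow", "Salesforce App Cloud", "Zoho Creator", "OutSystems",
             "MS Power App", "Mendix", "Appian"]
gui = [7, 6, 7, 4, 5, 3, 3]    # Graphical User Interface
apps = [6, 4, 5, 4, 5, 4, 5]   # Kinds of Supported Applications
matrix = [[g, a] for g, a in zip(gui, apps)]
weights = [0.9, 0.7]           # weights of the same two criteria

normalized, scores = ratio_system(matrix, weights)
for name, score in sorted(zip(platforms, scores), key=lambda p: p[1], reverse=True):
    print(f"{name:22s} {score:.4f}")
```

Because of the vector normalization, the squared normalized scores of each criterion sum to one, so no single criterion dominates merely because its raw counts are larger.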

3.2. MULTIMOORA Framework

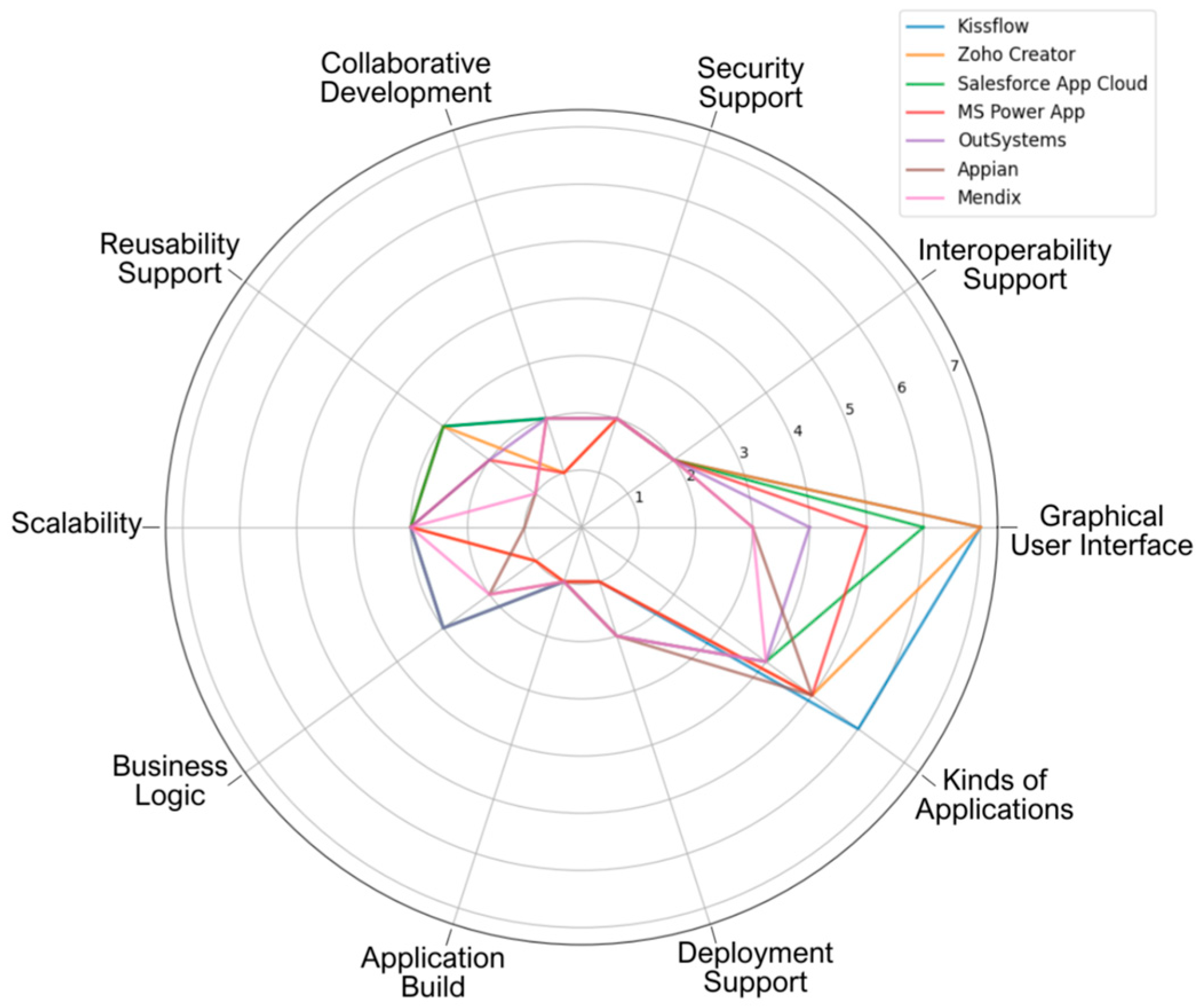

3.3. Evaluation

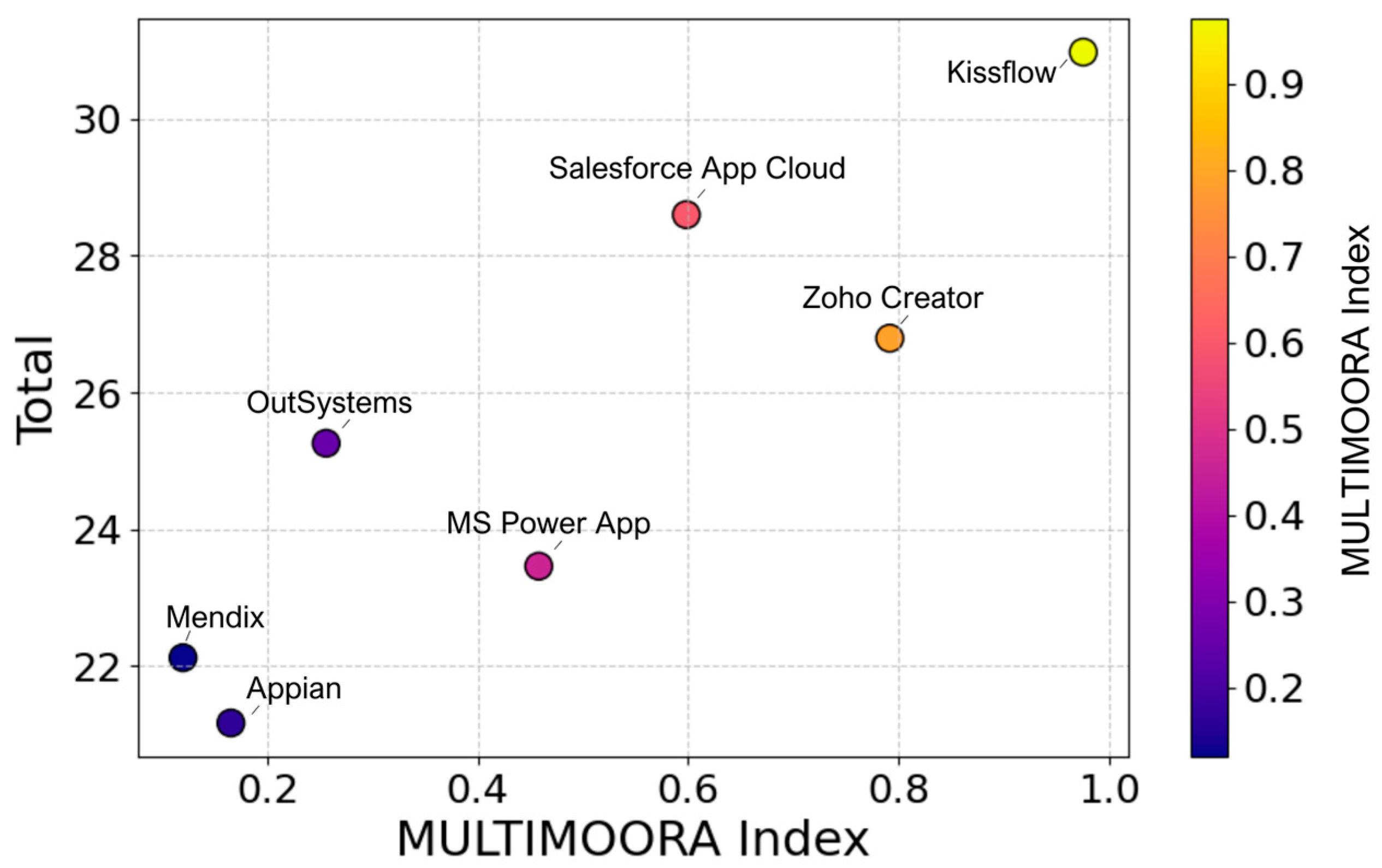

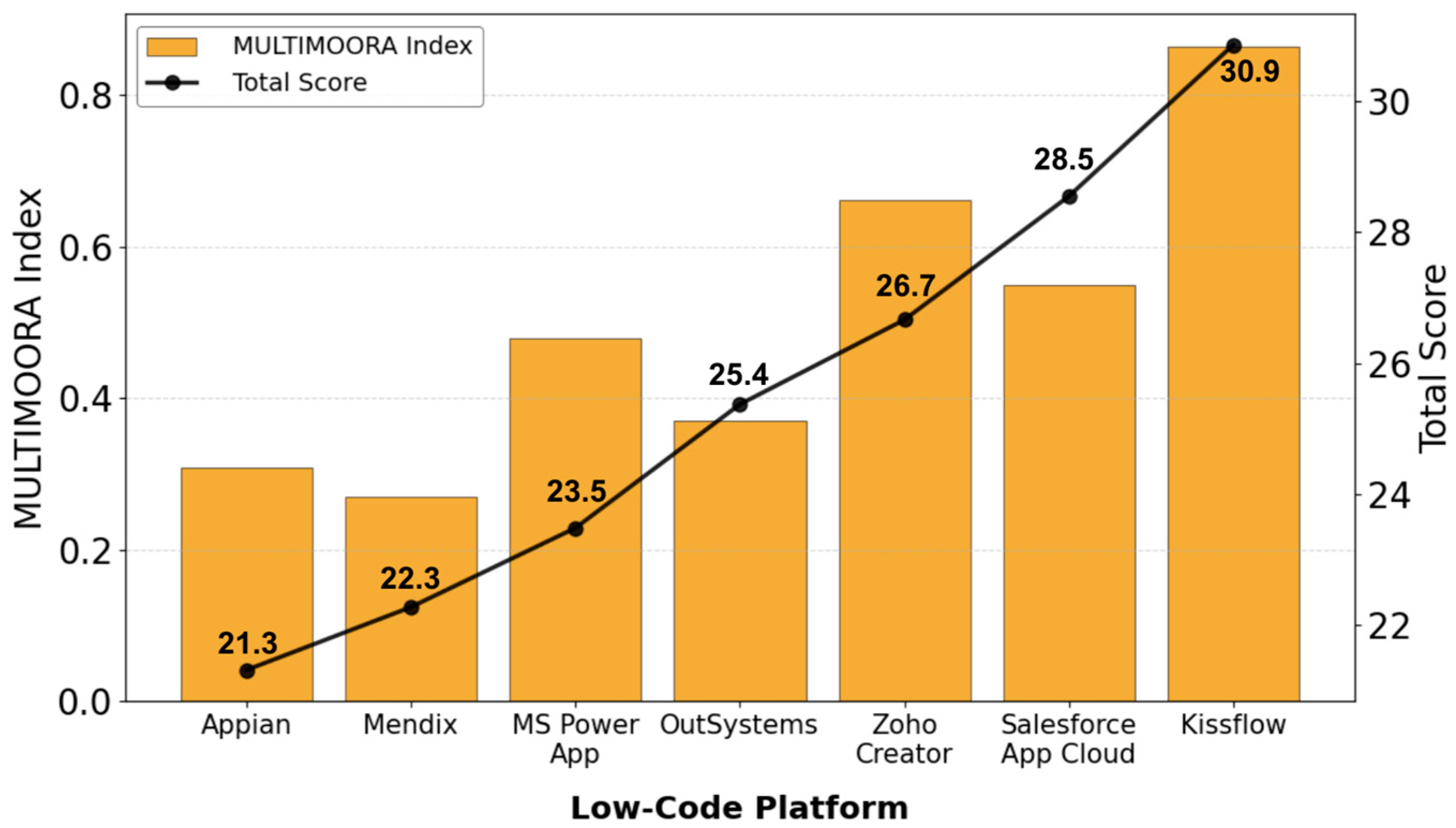

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LCDP | Low-code development platform |
| MCDM | Multi-criteria decision-making |
| RS | Ratio system |
| LD | Linear dichroism |
| MOORA | Multi-Objective Optimization on the Basis of Ratio Analysis |


| Feature | Components | Weight |
|---|---|---|
| Graphical User Interface | Drag-and-Drop Builder, Click-to-Configure Tools, Prebuilt Forms/Reports, Ready Dashboards, Responsive Forms, Milestone Monitoring, Detailed Reporting, Integrated Workflows, Flexible Workflows | 0.9 |
| Compatibility Assistance | Third-Party Service Integration, Data Access Connectivity | 0.2 |
| Safety Assistance | Software Safety, Environment-Level Safety | 0.2 |
| Cooperative Development | Offline Team Interaction, Real-Time Interaction | 0.2 |
| Reusability Support | Embedded Workflow Templates, Pre-Designed Forms/Reports, Pre-Configured Dashboards | 0.3 |
| Scalability | User Capacity Expansion, Data Traffic Handling, Data Capacity Growth | 0.3 |
| Business Process Definition | Rules Management System, Graphical Workflow Designer, AI-Enhanced Tools | 0.3 |
| Application Development Methods | Automated Generation of Code, Runtime Model Execution | 0.2 |
| Deployment Support | Cloud-Based Deployment, On-Premises Deployment | 0.2 |
| Kinds of Supported Applications | Event Tracking, Workflow Automation, Approval Management, Escalation Tracking, Inventory Tracking, Quality Management, Workflow Management | 0.7 |
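
The table above does not say how the weights were derived. One pattern that can be read off the rows is that each weight equals the number of listed components divided by 10 (for example, nine components and a weight of 0.9 for Graphical User Interface). The following sketch only checks that observation against the table; it is an assumption about the data, not a rule stated by the authors, and the helper `count_components` is a hypothetical name.

```python
import math

# Component listings and weights copied from the feature/weight table.
features = {
    "Graphical User Interface": (
        "Drag-and-Drop Builder, Click-to-Configure Tools, Prebuilt Forms/Reports, "
        "Ready Dashboards, Responsive Forms, Milestone Monitoring, "
        "Detailed Reporting, Integrated Workflows, Flexible Workflows", 0.9),
    "Compatibility Assistance": (
        "Third-Party Service Integration, Data Access Connectivity", 0.2),
    "Safety Assistance": ("Software Safety, Environment-Level Safety", 0.2),
    "Cooperative Development": (
        "Offline Team Interaction, Real-Time Interaction", 0.2),
    "Reusability Support": (
        "Embedded Workflow Templates, Pre-Designed Forms/Reports, "
        "Pre-Configured Dashboards", 0.3),
    "Scalability": (
        "User Capacity Expansion, Data Traffic Handling, Data Capacity Growth", 0.3),
    "Business Process Definition": (
        "Rules Management System, Graphical Workflow Designer, AI-Enhanced Tools", 0.3),
    "Application Development Methods": (
        "Automated Generation of Code, Runtime Model Execution", 0.2),
    "Deployment Support": ("Cloud-Based Deployment, On-Premises Deployment", 0.2),
    "Kinds of Supported Applications": (
        "Event Tracking, Workflow Automation, Approval Management, "
        "Escalation Tracking, Inventory Tracking, Quality Management, "
        "Workflow Management", 0.7),
}

def count_components(listing):
    """Count the comma-separated component names in one table cell."""
    return len([c for c in listing.split(",") if c.strip()])

# For each feature, check whether weight == (number of components) / 10.
pattern_holds = {
    name: math.isclose(count_components(listing) / 10, weight)
    for name, (listing, weight) in features.items()
}

for name, ok in pattern_holds.items():
    print(f"{name:34s} {'matches' if ok else 'differs'}")
```

If the pattern is intentional, a feature's weight simply reflects how many components it groups, which would make features with many components (such as Graphical User Interface) dominate the weighted score.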

| Feature | Description |
|---|---|
| Graphical User Interface | Simplifies interaction with drag-and-drop builders, prebuilt forms, ready dashboards, progress tracking, and customizable workflows. |
| Compatibility Assistance | Provides seamless integration with data flows and various services. |
| Safety Assistance | Provides robust application- and platform-level safety measures. |
| Cooperative Development | Enhances teamwork with offline and real-time online interaction tools. |
| Reusability Support | Includes reusable templates, forms, and dashboards for efficiency and consistency. |
| Scalability | Supports user expansion, data traffic handling, and capacity and storage growth. |
| Business Process Definition | Streamlines logic with a rules engine, graphical editors, and AI-powered tools. |
| Application Development Methods | Enables quick development with automated generation of code and runtime execution. |
| Deployment Support | Offers cloud-based and on-premises deployment options. |
| Kinds of Supported Applications | Facilitates event tracking, workflow automation, approval management, and more. |

| Feature | Kissflow | Salesforce App Cloud | Zoho Creator | OutSystems | MS Power App | Mendix | Appian |
|---|---|---|---|---|---|---|---|
| Graphical User Interface (GUI) | 7 | 6 | 7 | 4 | 5 | 3 | 3 |
| Drag-and-Drop Builder | + | + | + | + | − | + | + |
| Click-to-Configure Tools | − | − | − | − | + | − | − |
| Prebuilt Forms/Reports | + | + | + | + | + | + | + |
| Ready Dashboards | + | + | + | + | + | − | − |
| Responsive Forms | − | − | + | − | + | − | − |
| Milestone Monitoring | + | + | + | + | + | + | + |
| Detailed Reporting | + | − | − | − | − | − | − |
| Integrated Workflows | + | + | + | − | − | − | − |
| Flexible Workflows | + | + | + | − | − | − | − |
| Compatibility Assistance | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Third-Party Service Integration | + | + | + | + | + | + | + |
| Data Access Connectivity | + | + | + | + | + | + | + |
| Safety Assistance | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Software Safety | + | + | + | + | + | + | + |
| Environment-Level Safety | + | + | + | + | + | + | + |
| Cooperative Development | 2 | 2 | 1 | 2 | 1 | 2 | 2 |
| Offline Team Interaction | + | + | + | + | + | + | + |
| Real-Time Interaction | + | + | − | + | − | + | + |
| Reusability Support | 3 | 3 | 3 | 2 | 2 | 1 | 1 |
| Embedded Workflow Templates | + | + | + | − | − | − | − |
| Pre-Designed Forms/Reports | + | + | + | + | + | + | + |
| Pre-Configured Dashboards | + | + | + | + | + | − | − |
| Scalability | 3 | 3 | 3 | 3 | 3 | 3 | 1 |
| User Capacity Expansion | + | + | + | + | + | + | + |
| Data Traffic Handling | + | + | + | + | + | + | − |
| Data Storage Growth | + | + | + | + | + | + | − |
| Business Process Definition | 3 | 3 | 1 | 3 | 1 | 2 | 2 |
| Rules Management System | + | + | + | + | + | + | + |
| Graphical Workflow Designer | + | + | − | + | − | + | − |
| AI-Enhanced Tools | + | + | − | + | − | − | + |
| Application Development Methods | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Automated Generation of Code | − | − | − | + | − | − | − |
| Runtime Model Execution | + | + | + | − | + | + | + |
| Deployment Support | 1 | 2 | 1 | 2 | 1 | 2 | 2 |
| Cloud-Based Deployment | + | + | + | + | + | + | + |
| On-Premises Deployment | − | + | − | + | − | + | + |
| Kinds of Supported Applications | 6 | 4 | 5 | 4 | 5 | 4 | 5 |
| Event Tracking | + | + | + | + | + | + | + |
| Workflow Automation | + | − | + | + | + | − | + |
| Approval Management | − | − | − | − | − | − | − |
| Escalation Tracking | + | − | − | − | − | − | − |
| Inventory Tracking | + | + | + | + | + | + | + |
| Quality Management | + | + | + | − | + | + | + |
| Workflow Management | + | + | + | + | + | + | + |
| MULTIMOORA Index | 0.8638 | 0.5497 | 0.661 | 0.3697 | 0.479 | 0.2696 | 0.3081 |
| Total | 30.864 | 28.5497 | 26.661 | 25.3697 | 23.479 | 22.2696 | 21.308 |
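
The arithmetic behind the Total row can be checked directly from the table: for every platform, the reported total agrees (to the rounding shown) with the sum of its ten feature counts plus its MULTIMOORA index. The sketch below reproduces that check. The counts, index values, and totals are copied from the table; the variable names and the 0.001 rounding tolerance are assumptions made for illustration.

```python
# Feature counts per platform, in table order: GUI, Compatibility, Safety,
# Cooperative Development, Reusability, Scalability, Business Process
# Definition, Application Development Methods, Deployment, Supported Apps.
FEATURE_COUNTS = {
    "Kissflow":             [7, 2, 2, 2, 3, 3, 3, 1, 1, 6],
    "Salesforce App Cloud": [6, 2, 2, 2, 3, 3, 3, 1, 2, 4],
    "Zoho Creator":         [7, 2, 2, 1, 3, 3, 1, 1, 1, 5],
    "OutSystems":           [4, 2, 2, 2, 2, 3, 3, 1, 2, 4],
    "MS Power App":         [5, 2, 2, 1, 2, 3, 1, 1, 1, 5],
    "Mendix":               [3, 2, 2, 2, 1, 3, 2, 1, 2, 4],
    "Appian":               [3, 2, 2, 2, 1, 1, 2, 1, 2, 5],
}
INDEX = {
    "Kissflow": 0.8638, "Salesforce App Cloud": 0.5497, "Zoho Creator": 0.661,
    "OutSystems": 0.3697, "MS Power App": 0.479, "Mendix": 0.2696,
    "Appian": 0.3081,
}
REPORTED_TOTAL = {
    "Kissflow": 30.864, "Salesforce App Cloud": 28.5497, "Zoho Creator": 26.661,
    "OutSystems": 25.3697, "MS Power App": 23.479, "Mendix": 22.2696,
    "Appian": 21.308,
}

# Recompute each total as (sum of feature counts) + (MULTIMOORA index).
recomputed = {p: sum(c) + INDEX[p] for p, c in FEATURE_COUNTS.items()}

# Compare with the reported totals, allowing for rounding in the table.
consistent = {p: abs(recomputed[p] - REPORTED_TOTAL[p]) < 1e-3 for p in recomputed}

# Rankings by the Total row and by the index row alone.
by_total = sorted(REPORTED_TOTAL, key=REPORTED_TOTAL.get, reverse=True)
by_index = sorted(INDEX, key=INDEX.get, reverse=True)

print("totals consistent:", all(consistent.values()))
print("ranking by total: ", by_total)
print("ranking by index: ", by_index)
```

Ranking by the index row alone orders the platforms differently from ranking by the Total row (for example, Zoho Creator's index of 0.661 exceeds Salesforce App Cloud's 0.5497, while their totals rank the other way), so it is worth confirming which row the stated ranking is based on.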


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Serekov, D.; Bissembayev, A.; Iliev, T.; Mukasheva, A.; Kang, J.W. Evaluating Low-Code Development Platforms: A MULTIMOORA Approach. Eng. Proc. 2025, 104, 15. https://doi.org/10.3390/engproc2025104015
