1. Introduction

The analysis of time series with complex values is an important area in signal processing. Although complex-valued time series do not occur directly in real-life data, they are constructed from two related features [1, 2] and can also arise as a result of applying the discrete Fourier transform to real data [3, 4].

Singular spectrum analysis (SSA) [5, 6, 7, 8] is a powerful method of time series analysis that does not require a parametric model of the time series to be specified in advance. The method has a natural extension to the case of complex time series, called Complex SSA (CSSA) [9, 10]. In fact, one could say that SSA is a special case of CSSA. A class of signals governed by linear recurrence relations can be used to obtain theoretical results regarding SSA and CSSA.

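Let the observed complex time series be of the form $\mathsf{X} = \mathsf{S} + \mathsf{E}$, where $\mathsf{S}$ is the signal and $\mathsf{E}$ is a perturbation. To obtain an estimate of the signal $\mathsf{S}$, we will use the CSSA method.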

To analyse the signal estimation error, we use perturbation theory [11, 12], which has been applied to signal extraction by the SSA method in a number of papers; see, for example, [13, 14]. Although Kato's perturbation theory gives a form of the full error, examining it is a difficult task. Therefore, we will only consider the first-order error, which is the part that is linear in the perturbation.

Even for the first-order error, obtaining an explicit form is a rather complicated task. In the theoretical part, we consider only the case of a constant signal. Two types of perturbation are examined: an outlier and random noise. The theoretical results obtained allow us to study how CSSA deals with signal extraction. The importance of perturbation in the form of noise is quite clear. There are robust variants of the SSA method for analysing time series with possible outliers [15, 16, 17, 18, 19], but they are mostly much more time-consuming than the basic version of SSA, so studying the influence of outliers on the basic versions of SSA and CSSA is of considerable interest.

Certainly, constant signals are not a common case in time series analysis. Therefore, special attention is given to a possible generalisation of the theoretical results, which are demonstrated numerically.

The rest of the paper is organized as follows. Section 2 contains the CSSA algorithm. Section 3 outlines the theoretical approach to error computation and presents the theoretical results obtained for the constant series. In Section 4, an extension of the theoretical results to the general case of signals is suggested; the assumed results on estimation accuracy are confirmed by numerical experiments. Section 5 concludes this paper.

2. Algorithm CSSA

Let us briefly describe singular spectrum analysis and its features. Algorithm 1 presents the steps of CSSA for estimating the signal.

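Algorithm 1: CSSA

Input: Complex time series $\mathsf{X} = (x_1, \ldots, x_N)$, window length $L$, signal rank $r$.

Output: Signal estimate $\widetilde{\mathsf{S}}$.

Algorithm:

1. Embedding. Construct $\mathbf{X} = \mathcal{T}(\mathsf{X})$, the $L$-trajectory matrix of the time series $\mathsf{X}$:

$$\mathbf{X} = [X_1 : \ldots : X_K],$$

where $K = N - L + 1$ and $X_i$ are the $L$-embedding vectors: $X_i = (x_i, \ldots, x_{i+L-1})^{\mathrm{T}}$.

2. Decomposition. Construct the singular value decomposition (SVD) of the matrix $\mathbf{X}$: $\mathbf{X} = \sum_{i=1}^{d} \sqrt{\lambda_i}\, U_i V_i^{\mathrm{H}}$, where $\mathrm{H}$ means the Hermitian conjugate, $U_i$ and $V_i$ are the left and right singular vectors of the matrix $\mathbf{X}$, and $\sqrt{\lambda_1} \ge \ldots \ge \sqrt{\lambda_d} > 0$ are the singular values in decreasing order.

3. Grouping. Group the matrix components associated with the signal: $\widehat{\mathbf{S}} = \sum_{i=1}^{r} \sqrt{\lambda_i}\, U_i V_i^{\mathrm{H}}$.

4. Diagonal averaging. Apply the hankelization (which is the projection onto the linear space of Hankel matrices): $\widetilde{\mathbf{S}} = \Pi_{\mathcal{H}} \widehat{\mathbf{S}}$, and return to the series form: $\widetilde{\mathsf{S}} = \mathcal{T}^{-1}(\widetilde{\mathbf{S}})$.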
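The four steps of Algorithm 1 can be sketched in a few lines of NumPy. This is a minimal illustration, not the Rssa implementation used for the experiments in this paper: the function names `trajectory_matrix`, `diagonal_average`, and `cssa` are hypothetical, and a full SVD is used instead of a truncated one.

```python
import numpy as np

def trajectory_matrix(x, L):
    """Embedding: L x K Hankel matrix with entries x[i + j], K = N - L + 1."""
    K = len(x) - L + 1
    return np.array([x[i:i + K] for i in range(L)])

def diagonal_average(Y):
    """Hankelization: average Y over anti-diagonals and return the series."""
    L, K = Y.shape
    total = np.zeros(L + K - 1, dtype=Y.dtype)
    count = np.zeros(L + K - 1)
    for i in range(L):
        total[i:i + K] += Y[i]      # row i covers series points i .. i + K - 1
        count[i:i + K] += 1
    return total / count

def cssa(x, L, r):
    """Rank-r CSSA estimate of the signal in a (complex) series x."""
    X = trajectory_matrix(np.asarray(x, dtype=complex), L)
    U, s, Vh = np.linalg.svd(X, full_matrices=False)   # decomposition
    S_hat = (U[:, :r] * s[:r]) @ Vh[:r]                # grouping
    return diagonal_average(S_hat)                     # diagonal averaging

# A noise-free complex exponential has rank 1, so CSSA with r = 1 reproduces it.
n = np.arange(40)
signal = (1 + 2j) * np.exp(2j * np.pi * 0.1 * n)
max_abs_error = np.max(np.abs(cssa(signal, L=20, r=1) - signal))
```

For a noise-free series of rank r, the estimate coincides with the input up to rounding error, which is a convenient sanity check before adding perturbations.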

Recommendations for the choice of the window length $L$ can be found in [20, 21]. The choice of $r$ is a much more difficult problem; information criteria for the choice of $r$ are proposed in [22, 23].

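The SSA/CSSA estimation of the signal can be presented in short form:

$$\widetilde{\mathsf{S}} = \mathcal{T}^{-1}\, \Pi_{\mathcal{H}}\, \Pi_r\, \mathcal{T}(\mathsf{X}),$$

where $\mathcal{T}$ maps a series to its $L$-trajectory matrix, $\Pi_{\mathcal{H}}$ is the projector onto the space of Hankel matrices (in the Frobenius norm), and $\Pi_r$ is the projector onto the set of matrices of rank not larger than $r$.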

The $L$-rank of a time series is the rank of its trajectory matrix. For further considerations we need to know the ranks of certain series. It is known from [8] that the rank of a complex signal whose real and imaginary parts are sinusoids with the same frequency $\omega$, $0 < \omega < 0.5$, is equal to 2 if the absolute phase shift of the sinusoids, taken in the interval from $-\pi$ to $\pi$, is not equal to $\pi/2$, and is equal to 1 in the case of a complex exponential. The rank of a real sine wave is 2 under the same frequency constraints. The ranks of complex and real constants are equal to 1, as they can be considered a special case with $\omega = 0$.

The signal is considered to be a sum of r exponentially modulated complex exponentials, with the sum having a rank of r.
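These rank statements are easy to check numerically. The sketch below assumes sinusoids of the form $\cos(2\pi\omega n + \phi)$ with the frequency measured in cycles per sample; `l_rank` is a hypothetical helper that counts singular values above a relative tolerance, and the series length, window length, and frequency are arbitrary illustrative choices.

```python
import numpy as np

def l_rank(x, L, tol=1e-8):
    """Numerical L-rank: number of singular values of the trajectory
    matrix that exceed tol times the largest one."""
    x = np.asarray(x, dtype=complex)
    K = len(x) - L + 1
    X = np.array([x[i:i + K] for i in range(L)])
    s = np.linalg.svd(X, compute_uv=False)
    return int(np.sum(s > tol * s[0]))

n = np.arange(50)
omega = 0.1                          # illustrative frequency, 0 < omega < 0.5
theta = 2 * np.pi * omega * n

# cos + i*sin: phase shift pi/2 between the parts, i.e. a complex exponential
rank_exponential = l_rank(np.cos(theta) + 1j * np.sin(theta), L=10)
# same frequency, phase shift pi/4 between real and imaginary parts
rank_shifted = l_rank(np.cos(theta) + 1j * np.cos(theta + np.pi / 4), L=10)
# real sine wave
rank_real_sine = l_rank(np.sin(theta), L=10)
# complex constant (the case omega = 0)
rank_constant = l_rank(np.full(50, 2 - 3j), L=10)
```

Under these assumptions the four ranks come out as 1, 2, 2, and 1, in agreement with the statements above.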

3. Application of Perturbation Theory to CSSA

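Let us observe $\mathsf{X} = \mathsf{S} + \delta\mathsf{E}$, where $\delta$ is a real perturbation parameter, and let $\widetilde{\mathsf{S}}$ be the CSSA estimate of the signal. It is known from [13] that, for sufficiently small $\delta$, the reconstruction error $\mathsf{F} = \widetilde{\mathsf{S}} - \mathsf{S}$ can be expanded in powers of $\delta$.

For simplicity, let $\delta = 1$. We call the first-order reconstruction error the part of the error that is linear in $\mathsf{E}$; we denote it $\mathsf{F}^{(1)}$. It is obtained by diagonal averaging of the first-order error matrix $\mathbf{H}^{(1)}$. Theorem 2.1 from [13] gives the following formula for $\mathbf{H}^{(1)}$ in the case of a sufficiently small perturbation:

$$\mathbf{H}^{(1)} = P_0^{\perp}\, \mathbf{E}\, Q_0 + P_0\, \mathbf{E}, \qquad (2)$$

where $\mathbf{S}$ and $\mathbf{E}$ are the trajectory matrices of $\mathsf{S}$ and $\mathsf{E}$; $P_0$ is the projector onto the column space of $\mathbf{S}$; $Q_0$ is the projector onto the row space of $\mathbf{S}$; $P_0^{\perp} = I - P_0$; $I$ is the identity matrix.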
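To make the first-order error concrete, the sketch below compares it with the full CSSA error for a small random perturbation. It assumes that the first-order error matrix has the standard form $P_0^{\perp}\mathbf{E}Q_0 + P_0\mathbf{E}$, with the projectors built from the leading singular vectors of the signal trajectory matrix; the helper names, sizes, and perturbation scale are hypothetical choices for illustration.

```python
import numpy as np

def trajectory_matrix(x, L):
    K = len(x) - L + 1
    return np.array([x[i:i + K] for i in range(L)])

def diagonal_average(Y):
    L, K = Y.shape
    total = np.zeros(L + K - 1, dtype=Y.dtype)
    count = np.zeros(L + K - 1)
    for i in range(L):
        total[i:i + K] += Y[i]
        count[i:i + K] += 1
    return total / count

def cssa(x, L, r):
    X = trajectory_matrix(np.asarray(x, dtype=complex), L)
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    return diagonal_average((U[:, :r] * s[:r]) @ Vh[:r])

def first_order_error(s, e, L, r):
    """Diagonal averaging of P0_perp E Q0 + P0 E (assumed first-order form)."""
    S = trajectory_matrix(np.asarray(s, dtype=complex), L)
    E = trajectory_matrix(np.asarray(e, dtype=complex), L)
    U, _, Vh = np.linalg.svd(S, full_matrices=False)
    P0 = U[:, :r] @ U[:, :r].conj().T        # projector onto the column space
    Q0 = Vh[:r].conj().T @ Vh[:r]            # projector onto the row space
    H1 = (np.eye(L) - P0) @ E @ Q0 + P0 @ E
    return diagonal_average(H1)

rng = np.random.default_rng(0)
N, L, r = 60, 30, 1
s = np.full(N, 1 + 1j)                       # constant complex signal
e = 1e-3 * (rng.standard_normal(N) + 1j * rng.standard_normal(N))

full = cssa(s + e, L, r) - s                 # full reconstruction error
first = first_order_error(s, e, L, r)        # its linear part
gap = np.max(np.abs(full - first))
size = np.max(np.abs(full))
```

For a perturbation this small, `gap` is expected to be orders of magnitude smaller than `size`, since the remainder is of second order in the perturbation.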

3.1. Relation Between Complex and Real First-Order Errors for Rank 1

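In [13], formulas were derived for the first-order error for real series of ranks 1 and 2. Below, we give the complex form for the case of rank 1. For $r = 1$ and the leading left and right singular vectors $U$ and $V$ of the trajectory matrix of the series $\mathsf{S}$, the first-order error matrix in (2) is

$$\mathbf{H}^{(1)} = U U^{\mathrm{H}} \mathbf{E} + \mathbf{E} V V^{\mathrm{H}} - (U^{\mathrm{H}} \mathbf{E} V)\, U V^{\mathrm{H}},$$

where $\mathbf{E}$ is the trajectory matrix of the perturbation.

Proposition 1. Let the series $\mathsf{S}$, $\operatorname{Re}\mathsf{S}$, and $\operatorname{Im}\mathsf{S}$ have rank 1. Then,

$$\mathsf{F}^{(1)} = \mathsf{F}^{(1)}_{\mathrm{Re}} + \mathrm{i}\, \mathsf{F}^{(1)}_{\mathrm{Im}},$$

where $\mathsf{F}^{(1)}$ is the first-order error of the CSSA estimate of $\mathsf{S}$, $\mathsf{F}^{(1)}_{\mathrm{Re}}$ is the first-order error of the SSA estimate of $\operatorname{Re}\mathsf{S}$, and $\mathsf{F}^{(1)}_{\mathrm{Im}}$ is the first-order error of the SSA estimate of $\operatorname{Im}\mathsf{S}$.

Proof. By the linearity of (2) in $\mathbf{E}$,

$$\mathbf{H}^{(1)}(\mathbf{E}) = \mathbf{H}^{(1)}(\operatorname{Re}\mathbf{E}) + \mathrm{i}\, \mathbf{H}^{(1)}(\operatorname{Im}\mathbf{E}). \qquad (3)$$

Since the rank is equal to 1, the singular vectors of the trajectory matrices of $\operatorname{Re}\mathsf{S}$, $\operatorname{Im}\mathsf{S}$, and $\mathsf{S}$ coincide. We can derive, from (3) using the linearity of the diagonal averaging operation and the equality of the singular vectors, that $\mathsf{F}^{(1)} = \mathsf{F}^{(1)}_{\mathrm{Re}} + \mathrm{i}\, \mathsf{F}^{(1)}_{\mathrm{Im}}$. □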

Note that although the perturbation E can take any form in the statement of the proposition, the proposition has practical application only if the first-order error adequately describes the full error.

An example where the conditions of Proposition 1 are fulfilled is the constant signal. In Section 3.2, we consider the perturbation in the form of an outlier; in Section 3.3, the perturbation is white noise.
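The relation between the complex and real first-order errors can be verified numerically for a constant signal. The sketch below assumes that the rank-1 first-order error matrix is $P_0^{\perp}\mathbf{E}Q_0 + P_0\mathbf{E}$ with projectors built from the leading singular vectors; the function names, the signal value, and the noise are hypothetical illustrative choices.

```python
import numpy as np

def trajectory_matrix(x, L):
    K = len(x) - L + 1
    return np.array([x[i:i + K] for i in range(L)])

def diagonal_average(Y):
    L, K = Y.shape
    total = np.zeros(L + K - 1, dtype=Y.dtype)
    count = np.zeros(L + K - 1)
    for i in range(L):
        total[i:i + K] += Y[i]
        count[i:i + K] += 1
    return total / count

def first_order_error(s, e, L):
    """Rank-1 first-order error of the (C)SSA estimate of s perturbed by e."""
    S = trajectory_matrix(np.asarray(s, dtype=complex), L)
    E = trajectory_matrix(np.asarray(e, dtype=complex), L)
    U, _, Vh = np.linalg.svd(S, full_matrices=False)
    u, v = U[:, :1], Vh[:1].conj().T          # leading singular vectors
    P0, Q0 = u @ u.conj().T, v @ v.conj().T
    return diagonal_average((np.eye(L) - P0) @ E @ Q0 + P0 @ E)

rng = np.random.default_rng(1)
N, L = 50, 20
s = np.full(N, 2 - 3j)                        # real and imaginary parts have rank 1
e = rng.standard_normal(N) + 1j * rng.standard_normal(N)

f_complex = first_order_error(s, e, L)        # CSSA applied to the complex series
f_re = first_order_error(s.real, e.real, L)   # SSA applied to the real part
f_im = first_order_error(s.imag, e.imag, L)   # SSA applied to the imaginary part
mismatch = np.max(np.abs(f_complex - (f_re + 1j * f_im)))
```

Here `mismatch` is at the level of rounding error, because the three trajectory matrices share the same column and row spaces.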

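Corollary 1. Let $\operatorname{Re}\mathsf{E}$ and $\operatorname{Im}\mathsf{E}$ be independent. Then, under the conditions of Proposition 1, the variance of each term of the CSSA first-order error is the sum of the variances of the corresponding terms of the SSA first-order errors for the real and imaginary parts:

$$\mathbb{D} f^{(1)}_l = \mathbb{D} f^{(1)}_{\mathrm{Re},l} + \mathbb{D} f^{(1)}_{\mathrm{Im},l}, \quad l = 1, \ldots, N.$$

3.2. Case of Constant Signals with Outliers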

In this section, we consider the signal perturbed by an outlier at position k, i.e., the series E consists of zeros except for the value at the k-th position. Based on Proposition 1, it is sufficient to compute the first-order reconstruction error for a real signal perturbed by a real outlier a at position k.

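By substituting the projectors onto the column and row spaces of the trajectory matrix of the constant signal $s_n \equiv c$, namely $\mathbf{1}_L\mathbf{1}_L^{\mathrm{T}}/L$ and $\mathbf{1}_K\mathbf{1}_K^{\mathrm{T}}/K$, together with the trajectory matrix of the outlier series into (2), and then performing the diagonal averaging of the resulting matrix, an explicit form of the first-order reconstruction error was obtained. It appears that the first-order error does not depend on $c$, and therefore, the formulas are valid for complex $c$. Due to the linear dependence on $a$, the obtained formulas are also correct for a complex outlier $a$. Below, we present the final results depending on the location of the outlier and the relation between $L$ and $K$.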
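Both properties (independence of the signal value and linearity in the outlier value) can be illustrated numerically. The sketch below again assumes the first-order error matrix $P_0^{\perp}\mathbf{E}Q_0 + P_0\mathbf{E}$; the sizes, the outlier position, and all values are hypothetical.

```python
import numpy as np

def trajectory_matrix(x, L):
    K = len(x) - L + 1
    return np.array([x[i:i + K] for i in range(L)])

def diagonal_average(Y):
    L, K = Y.shape
    total = np.zeros(L + K - 1, dtype=Y.dtype)
    count = np.zeros(L + K - 1)
    for i in range(L):
        total[i:i + K] += Y[i]
        count[i:i + K] += 1
    return total / count

def first_order_error(s, e, L):
    S = trajectory_matrix(np.asarray(s, dtype=complex), L)
    E = trajectory_matrix(np.asarray(e, dtype=complex), L)
    U, _, Vh = np.linalg.svd(S, full_matrices=False)
    u, v = U[:, :1], Vh[:1].conj().T
    P0, Q0 = u @ u.conj().T, v @ v.conj().T
    return diagonal_average((np.eye(L) - P0) @ E @ Q0 + P0 @ E)

N, L, k = 40, 15, 10           # illustrative sizes; k is a 0-based position

def outlier(a):
    """Series of zeros with the value a at position k."""
    e = np.zeros(N, dtype=complex)
    e[k] = a
    return e

f_c1 = first_order_error(np.full(N, 1.0), outlier(2.0), L)
f_c2 = first_order_error(np.full(N, 5 - 2j), outlier(2.0), L)
f_scaled = first_order_error(np.full(N, 1.0), outlier(2.0 * (3 + 1j)), L)

depends_on_c = np.max(np.abs(f_c1 - f_c2))                  # change with c
nonlinearity = np.max(np.abs(f_scaled - (3 + 1j) * f_c1))   # deviation from linearity in a
```

Both `depends_on_c` and `nonlinearity` are at the level of rounding error, which is why it suffices to derive the formulas for a real signal and a real outlier.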

Let us consider different locations

k of the outlier. For

, we have

Below we consider the location , since case can be reduced to by inverting the time series and taking the outlier at position .

Then, the first-order error has the following form:

3.3. Case of Noisy Constant Signals

Consider the time series $\mathsf{S}$ with common term $s_n \equiv c$ and white noise with zero mean and variances of the real and imaginary parts $\sigma_{\mathrm{Re}}^2$ and $\sigma_{\mathrm{Im}}^2$, respectively. The conditions of Proposition 1 are satisfied, and therefore, by Corollary 1, the variance of the CSSA first-order error is expressed through the variances of the first-order errors of the SSA estimates of the real and imaginary parts.
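The variance decomposition can be illustrated without the closed-form expressions. For a constant signal, the first-order error is a linear map of the noise, so its variance at each point follows from the rows of that map. The sketch below builds the map numerically, assuming the first-order error matrix $P_0^{\perp}\mathbf{E}Q_0 + P_0\mathbf{E}$, and compares the result with a Monte Carlo estimate; the noise levels, sizes, and helper names are hypothetical.

```python
import numpy as np

def diagonal_average(Y):
    L, K = Y.shape
    total = np.zeros(L + K - 1, dtype=Y.dtype)
    count = np.zeros(L + K - 1)
    for i in range(L):
        total[i:i + K] += Y[i]
        count[i:i + K] += 1
    return total / count

def first_order_operator(N, L):
    """N x N matrix A such that the first-order error equals A @ e
    for a constant signal of length N and window length L."""
    K = N - L + 1
    P0 = np.ones((L, L)) / L     # projector onto span of the all-ones vector 1_L
    Q0 = np.ones((K, K)) / K     # projector onto span of the all-ones vector 1_K
    A = np.empty((N, N))
    for j in range(N):
        e = np.zeros(N)
        e[j] = 1.0               # unit perturbation at position j
        E = np.array([e[i:i + K] for i in range(L)])
        A[:, j] = diagonal_average((np.eye(L) - P0) @ E @ Q0 + P0 @ E)
    return A

N, L = 40, 20
sigma_re, sigma_im = 1.0, 0.5                # assumed noise standard deviations
A = first_order_operator(N, L)
row_energy = np.sum(A**2, axis=1)
var_re = sigma_re**2 * row_energy            # SSA first-order variance, real part
var_im = sigma_im**2 * row_energy            # SSA first-order variance, imaginary part
var_cssa = var_re + var_im                   # sum of the two variances

rng = np.random.default_rng(2)
M = 4000                                     # number of noise realisations
noise = (sigma_re * rng.standard_normal((M, N))
         + 1j * sigma_im * rng.standard_normal((M, N)))
errors = noise @ A.T                         # first-order errors, one row per realisation
var_mc = np.mean(np.abs(errors - errors.mean(axis=0))**2, axis=0)
max_rel_gap = np.max(np.abs(var_mc / var_cssa - 1))
```

The Monte Carlo variances agree with `var_cssa` up to sampling error, which shrinks as the number of realisations `M` grows.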

In [

25], the analytic formula was obtained for a real constant signal, i.e., for the variances of

and

separately. Let

,

, and

. Following [

25] and correcting an error, we get, for the general complex case,

where

Formulas are written down for . For , the error at l is equal to the error at position .

4. Numerical Investigation

4.1. Numerical Comparison of First-Order and Full Signal Estimation Errors

For the case of outlier perturbation, the example with signal

and outlier perturbation

at position

was considered. The results are shown in

Table 1. The columns of Table 1 correspond to different time series lengths N. The rows correspond to different outlier locations k and different window lengths L as a function of N. For each pair of k and L, we give the maximum full error ('full') and the maximum absolute difference between the full and first-order errors ('diff'). One can see the convergence of this difference to 0 for all cases, while the full error tends to 0 only for L proportional to N.

The analogous result that the first-order error adequately estimates the full error of signal reconstruction at each point was also verified numerically in the case of noisy constant series (see the example concerning a noisy signal in Section 4.2). For both perturbations, it was verified that this result is valid for signals of larger ranks (not shown).

4.2. Numerical Validation of Generalisation to Larger Ranks

The theoretical results for constant signals may seem rather limited. In this section, we numerically demonstrate that the shape of the errors for signals of larger ranks is similar to that for signals of rank 1, and that their sizes differ by a multiplier.

Let us start with a perturbation in the form of an outlier. Set the series length to 3999 and locate the outlier

at the point

.

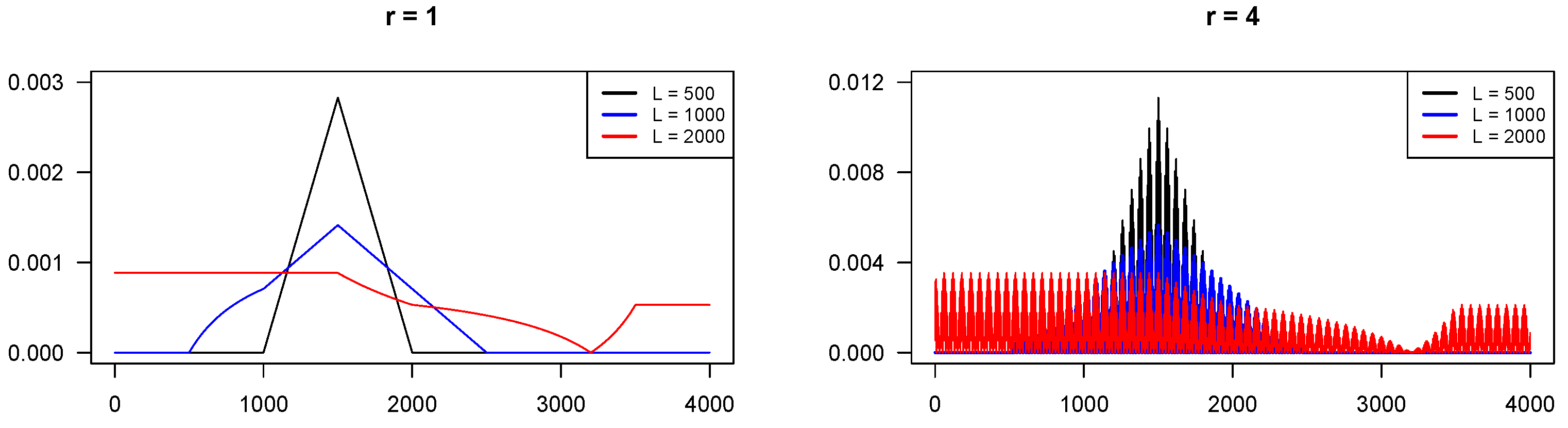

Figure 1 shows the behaviour of the absolute errors as a function of the series point.

Figure 1 (left) corresponds to the case

(constant signal) and is given by the theoretical formulas presented in

Section 3.2.

Figure 1 (right) corresponds to the signal

of rank 4. One can see that the shapes of the errors are similar. The ratio of the summed squared errors is approximately 4 for each of the considered window lengths of 500, 1000, and 2000.

Thus, the theoretical formulas for an elementary case help understand the error behaviour for a general case.

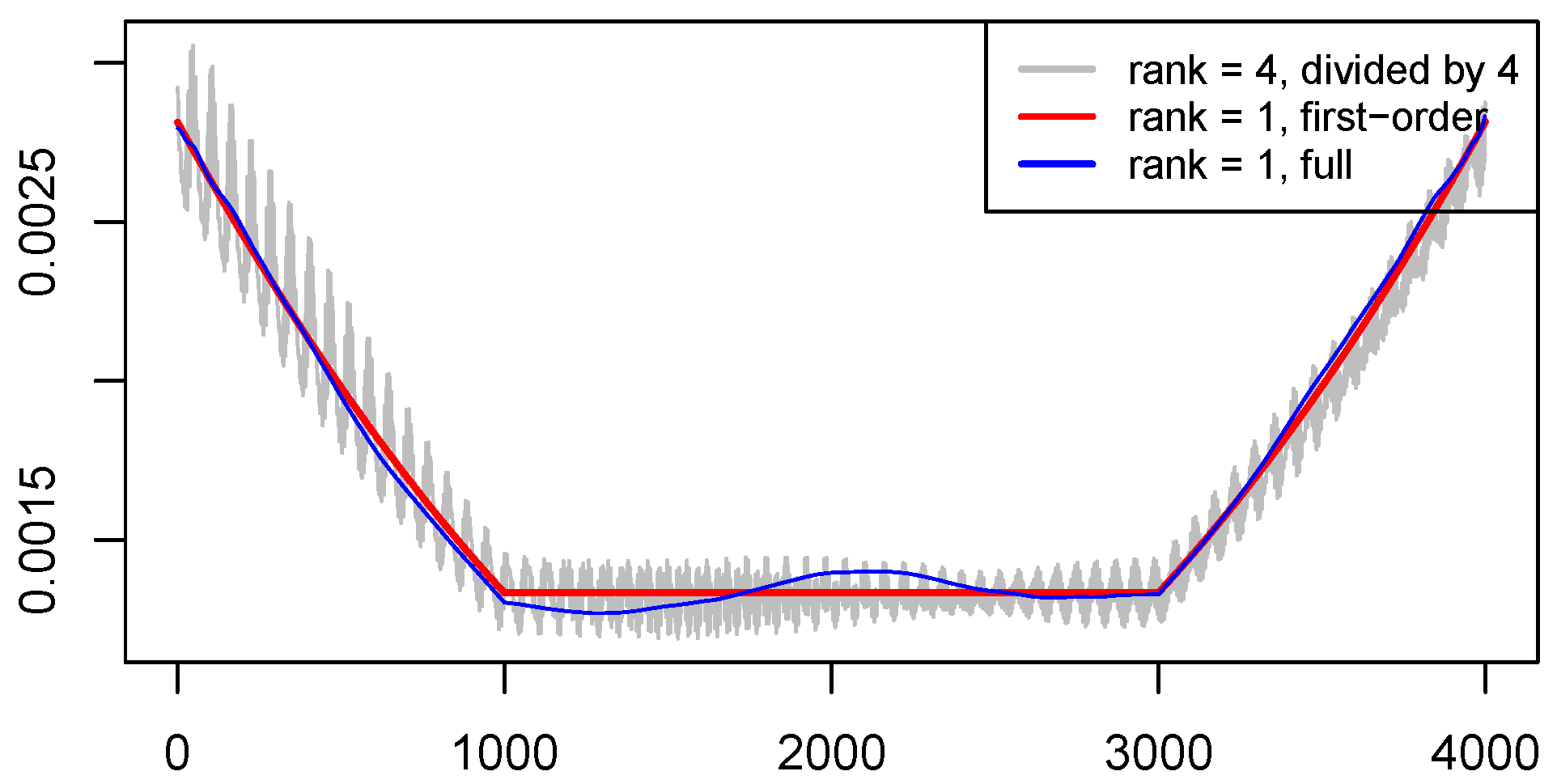

A similar relationship between the error variances holds for random noise perturbations. We consider the same signals and complex independent white Gaussian noise with variance 1 for the real and imaginary parts. The MSE for

estimated by 500 realisations of the noise is shown in

Figure 2. The ratio of the MSEs averaged along the series is about 4; this is confirmed by the proximity of the black and blue lines in Figure 2. We also add the line corresponding to the first-order error calculated by (6). One can see a good agreement between the first-order error and the full error.

All numerical results were obtained using the Rssa package (http://CRAN.R-project.org/package=Rssa, accessed on 3 May 2025), which is described in [8]. In the implementation of CSSA, the fast numerical method PRIMME was used to compute the truncated SVDs.

5. Conclusions

An explicit form of the first-order error of signal estimation at each point was obtained for a complex constant signal and for both outliers and random noise perturbations. For the random noise, the error was measured by the variance. It appears that the error does not depend on the signal scale. For both examples, the proximity between the first-order error and the full error was confirmed numerically.

We have demonstrated numerically how the theoretical results on the accuracy of signal estimation in the simple case of a constant signal can help to explain error behaviour in the more general case of a sum of complex exponentials, . These results allow one to use the obtained error formulas in the general case, and they are promising for obtaining theoretical results for ranks larger than one.

Author Contributions

Conceptualization, N.G.; methodology, N.G.; software, M.S. and A.K.; validation, N.G., M.S., and A.K.; formal analysis, N.G., M.S., and A.K.; investigation, N.G., M.S., and A.K.; writing—original draft preparation, N.G. and M.S.; writing—review and editing, N.G. and A.K.; visualization, N.G. and M.S.; supervision, N.G.; project administration, N.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Pang, B.; Tang, G.; Tian, T. Complex Singular Spectrum Decomposition and its Application to Rotating Machinery Fault Diagnosis. IEEE Access 2019, 7, 143921–143934.
2. Journé, S.; Bihan, N.L.; Chatelain, F.; Flamant, J. Polarized Signal Singular Spectrum Analysis with Complex SSA. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.
3. Trickett, S. F-xy Cadzow noise suppression. In SEG Technical Program Expanded Abstracts 2008; Society of Exploration Geophysicists: Houston, TX, USA, 2008; pp. 2586–2590.
4. Yuan, S.; Wang, S. A local f-x Cadzow method for noise reduction of seismic data obtained in complex formations. Pet. Sci. 2011, 8, 269–277.
5. Broomhead, D.; King, G. Extracting qualitative dynamics from experimental data. Phys. D 1986, 20, 217–236.
6. Vautard, R.; Ghil, M. Singular spectrum analysis in nonlinear dynamics, with applications to paleoclimatic time series. Phys. D 1989, 35, 395–424.
7. Elsner, J.B.; Tsonis, A.A. Singular Spectrum Analysis: A New Tool in Time Series Analysis; Plenum Press: New York, NY, USA, 1996.
8. Golyandina, N.; Korobeynikov, A.; Shlemov, A.; Usevich, K. Multivariate and 2D extensions of singular spectrum analysis with the Rssa package. J. Stat. Softw. 2015, 67, 1–78.
9. Kumaresan, R.; Tufts, D. Estimating the parameters of exponentially damped sinusoids and pole-zero modeling in noise. IEEE Trans. Acoust. 1982, 30, 833–840.
10. Keppenne, C.; Lall, U. Complex Singular Spectrum Analysis and Multivariate Adaptive Regression Splines Applied to Forecasting the Southern Oscillation. Exp. Long-Lead Forcst. Bull. May 1996. Available online: https://www.cpc.ncep.noaa.gov (accessed on 3 May 2025).
11. Kato, T. Perturbation Theory for Linear Operators; Springer: Berlin/Heidelberg, Germany, 1966.
12. Xu, Z. Perturbation analysis for subspace decomposition with applications in subspace-based algorithms. IEEE Trans. Signal Process. 2002, 50, 2820–2830.
13. Nekrutkin, V. Perturbation expansions of signal subspaces for long signals. Stat. Interface 2010, 3, 297–319.
14. Hassani, H.; Xu, Z.; Zhigljavsky, A. Singular spectrum analysis based on the perturbation theory. Nonlinear Anal. Real World Appl. 2011, 12, 2752–2766.
15. Trickett, S.; Burroughs, L.; Milton, A. Robust Rank-Reduction Filtering for Erratic Noise. In SEG Technical Program Expanded Abstracts 2012; Society of Exploration Geophysicists: Houston, TX, USA, 2012; pp. 1–5. Available online: https://geoconvention.com/wp-content/uploads/abstracts/2012/042_GC2012_Robust_Rank-Reduction_Filters_for_Erratic_Noise.pdf (accessed on 3 May 2025).
16. Chen, K.; Sacchi, M. Robust reduced-rank filtering for erratic seismic noise attenuation. Geophysics 2015, 80, V1–V11.
17. Kalantari, M.; Yarmohammadi, M.; Hassani, H. Singular Spectrum Analysis Based on L1-Norm. Fluct. Noise Lett. 2016, 15, 1650009.
18. Bahia, B.; Sacchi, M. Robust singular spectrum analysis via the bifactored gradient descent algorithm. In SEG Technical Program Expanded Abstracts 2019; Society of Exploration Geophysicists: Houston, TX, USA, 2019; pp. 4640–4644.
19. Kazemi, M.; Rodrigues, P.C. Robust singular spectrum analysis: Comparison between classical and robust approaches for model fit and forecasting. Comput. Stat. 2025, 40, 3257–3289.
20. Golyandina, N. On the choice of parameters in Singular Spectrum Analysis and related subspace-based methods. Stat. Interface 2010, 3, 259–279.
21. Khan, M.A.R.; Poskitt, D.S. A note on window length selection in singular spectrum analysis. Aust. N. Z. J. Stat. 2013, 55, 87–108.
22. Khan, M.A.R.; Poskitt, D.S. Signal Identification in Singular Spectrum Analysis. Aust. N. Z. J. Stat. 2016, 58, 71–98.
23. Golyandina, N.; Zvonarev, N. Information Criteria for Signal Extraction Using Singular Spectrum Analysis: White and Red Noise. Algorithms 2024, 17, 395.
24. Nekrutkin, V.V. On the Robustness of Singular Spectrum Analysis for Long Time Series. Vestn. St. Petersburg Univ. Math. 2024, 57, 335–344.
25. Golyandina, N.; Vlassieva, E. First-order SSA-errors for long time series: Model examples of simple noisy signals. In Proceedings of the 6th St.Petersburg Workshop on Simulation, St. Petersburg, Russia, 28 June–4 July 2009; Volume 1, pp. 314–319.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).