1. Introduction

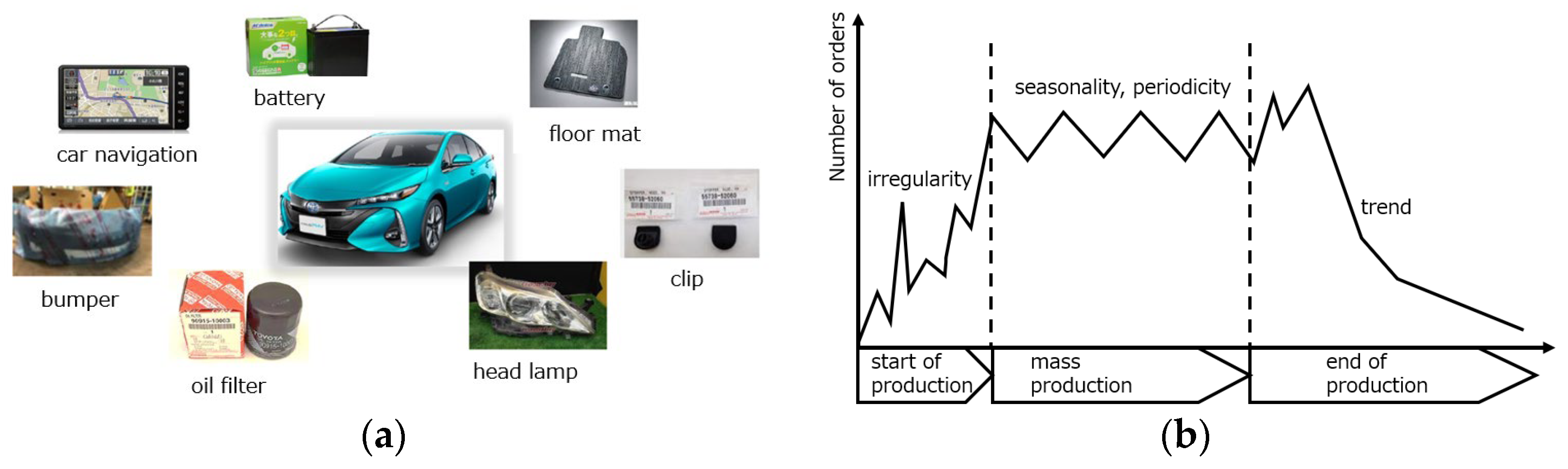

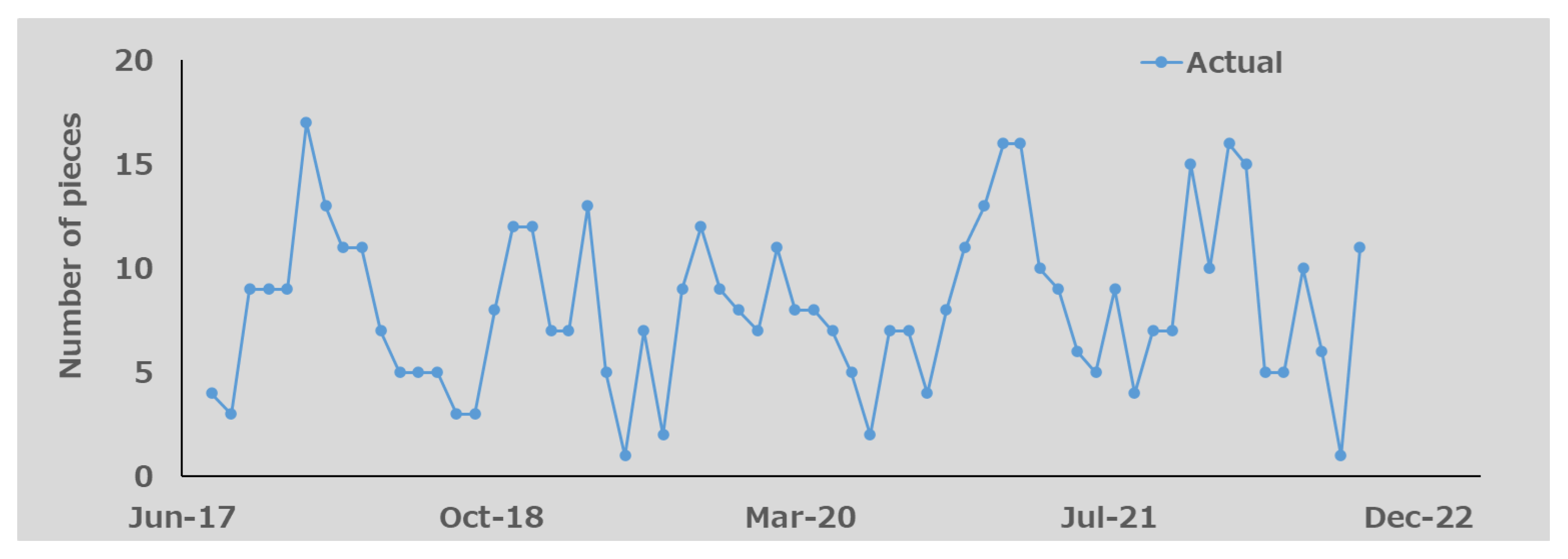

Service parts play an important role in the sustainable life of vehicles. In the automotive industry, the supply of service parts, such as bumpers, batteries, and aerodynamic parts (see Figure 1a), is required even after the end of vehicle production, as customers need them for maintenance and repairs. For manufacturers, it is essential to provide a timely supply of service parts not only for regular maintenance but also for parts that need to be replaced due to accidents [1]. However, if service parts are out of stock when a failure occurs, repairs cannot be conducted until the parts are procured. To gain customer confidence, it is necessary to estimate the adequate quantity of service parts in advance and maintain a safe inventory level. On the other hand, holding too much stock to mitigate the risk of shortages leads to excess inventory, which results in high inventory maintenance costs and puts pressure on warehouse space. Therefore, it is crucial to estimate actual orders through demand forecasting so that appropriate inventory levels can be maintained, avoiding both stockouts and the high costs associated with excess inventory.

Service parts are essential for customers who wish to use their vehicles for a long time. It is therefore necessary to prepare for customer demand not only during vehicle production but also afterward, as maintenance may continue for more than ten years after the product launch. Consequently, long-term changes in demand characteristics must be considered in the demand forecasting of service parts. The demand for these parts varies over their life cycle, with different characteristics in the early, middle, and late stages (see Figure 1b). In addition to these long-term variations, the demand for service parts exhibits short-term periodic fluctuations, such as seasonal changes. Therefore, to accurately predict the demand for a specific part, it is necessary to consider its historical order data.

In traditional inventory management, basic forecasting methods based on the quantity and frequency of historical service part orders are implemented using a simple moving average or exponential smoothing [2,3]. While these methods are straightforward and widely used due to their ease of understanding and operation, they struggle to deliver sufficient accuracy for parts with significant seasonal fluctuations and high order variability. As a result, in practice, logistics staff have to carefully monitor the forecast values for certain parts that have experienced stockouts in the past and adjust them as necessary based on their own know-how. Handling a large number of parts with significant demand variability is therefore a challenge, as traditional methods may incur high costs to secure safe inventory levels.
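As a point of reference for the traditional methods mentioned above, the following is a minimal sketch of one-step-ahead forecasts with a simple moving average and simple exponential smoothing. The function names, the window length, the smoothing factor `alpha`, and the sample order quantities are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def moving_average_forecast(orders, window=3):
    """Forecast the next period as the mean of the last `window` observations."""
    orders = np.asarray(orders, dtype=float)
    return float(orders[-window:].mean())

def exponential_smoothing_forecast(orders, alpha=0.3):
    """Simple exponential smoothing: level = alpha * y + (1 - alpha) * level."""
    orders = np.asarray(orders, dtype=float)
    level = orders[0]
    for y in orders[1:]:
        level = alpha * y + (1.0 - alpha) * level
    return float(level)

# Made-up monthly order quantities with one demand spike (illustrative only).
monthly_orders = [12, 15, 11, 30, 14, 13]
sma = moving_average_forecast(monthly_orders, window=3)
ses = exponential_smoothing_forecast(monthly_orders, alpha=0.3)
print(f"moving average: {sma:.2f}, exponential smoothing: {ses:.2f}")
```

Both forecasts are weighted averages of past orders, so neither can anticipate a seasonal peak or a value outside the range already observed, which is consistent with the accuracy limitations described above.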

To overcome these accuracy issues, an effective forecasting method for various demand patterns is required. There are a number of time series forecasting methods based on statistical approaches, including the previously mentioned simple moving average and exponential smoothing. Autoregressive (AR) models [4] estimate future values based on a linear combination of past observations. In addition, combinations of moving averages, integrated (differencing) processes, and seasonal components have led to the proposal of autoregressive moving average (ARMA), autoregressive integrated moving average (ARIMA), and seasonal autoregressive integrated moving average (SARIMA) models [5]. However, each of these models rests on different assumptions about the nature of the underlying time series data; thus, their effectiveness is limited to specific applications. Recently, neural networks have emerged as a powerful alternative to these statistical approaches in time series forecasting [6]. Moreover, deep learning methods such as recurrent neural networks (RNNs) [7] and long short-term memory (LSTM) networks [8] have been extensively developed, with reports of deep learning models achieving higher accuracy than traditional models. On the other hand, deep learning forecasting models often have a large number of parameters and require substantial amounts of training data. Additionally, as the volume of training data increases, so does the time needed for training, and it becomes difficult to maintain long-term dependencies [9]. Therefore, careful model selection is necessary for time series with such characteristics.

In this paper, a new forecasting method is proposed that can handle small univariate time series data, such as the monthly historical orders of a single service part over several years, and predict a wide range of demand patterns with reliable accuracy. The method uses Ensemble Empirical Mode Decomposition (EEMD) [10] and Dynamic Mode Decomposition (DMD) [11]. EEMD is used to adaptively decompose an arbitrary time series that changes at different time scales into multiple Intrinsic Mode Functions (IMFs), and DMD is used to represent the dynamics of the time series in each mode. In the proposed method, the order history of a single service part is decomposed into modes using EEMD. Subsequently, the corresponding state variables for each mode are computed, and the transition prediction for each mode is conducted using DMD. Finally, the sum of the transition predictions is calculated as the demand forecast value for the individual part.

This paper is organized as follows: In the next section, the theoretical derivations of the proposed method, based on a dynamical approach, are described. The validation method using historical order data of service parts is presented in the third section. The fourth section discusses the validation results. In the last section, the benefits and limitations of the proposed method, as well as future work, are summarized.

2. Theoretical Backgrounds

To accurately forecast various demand patterns of service parts that change in complex ways over time, the forecasting model should ensure accuracy not only within the range of available historical order data (interpolation range) but also in the range where no training data exist (extrapolation range). Existing forecasting methods utilizing common statistical and machine learning techniques often face the challenge of ‘extrapolation’ [12]. In this section, a forecasting method is proposed to deal with the difficult task of ‘extrapolative forecasting’, drawing inspiration from dynamical systems. As shown in Figure 2, in a dynamical system, the future states of the rolling motion of a ball can be determined from its previous position and momentum under a governing equation, such as the Euler–Lagrange equation [13]. Similarly, if state variables corresponding to position and momentum can be defined at each time point from the time series data, and if the laws of transition for such state variables can be simulated, it becomes possible to estimate future states in a manner similar to dynamical systems.

This section describes a new formulation of state variables for time series data. To represent the law of transition for state variables, the theoretical background of the Koopman operator and the algorithm for DMD [14] are introduced, and a new formulation for demand forecasting based on this theory is established. Forecasting trials using test functions are also conducted to confirm the validity of the theories. Finally, EEMD and its applications are utilized to enhance forecast accuracy.

2.1. Formulation of State Variables

This subsection discusses state variables that represent time series dynamics and derives formulations for the position-related and momentum-related components that constitute these state variables from time series information. First, in the formulation of the position-related components, the observation of an analytic function $g$ applied to the signal $x_t$ at time $t$, denoted as $g(x_t)$, can be approximated by a polynomial in $x_t$. Therefore, as shown in Equation (1), the observation can be represented as the inner product of a vector $\mathbf{p}_t$ formed from the powers of the signal $x_t$ and a constant vector $\mathbf{c}$ composed of the coefficients of the polynomial.

$$g(x_t) \approx \mathbf{c} \cdot \mathbf{p}_t \tag{1}$$

where the constant vector $\mathbf{c}$ and the coefficient $N$ denote the inherent information of the observation function $g$ and the truncation order of the approximation in Equation (1), respectively. Since the vector $\mathbf{p}_t$ of powers of $x_t$ up to order $N$ is analogous to the coordinate information of a position $(x, y, z)$ in three-dimensional space, it can be considered as the position-related vector in a multidimensional space generated by the signal $x_t$.

On the other hand, in the formulation of the momentum-related components, the calculation is performed using the difference in position-related vectors between the current time $t$ and the previous time $t-1$, as shown in Equation (2).

$$\Delta\mathbf{p}_t = \mathbf{p}_t - \mathbf{p}_{t-1} \tag{2}$$

After removing the information regarding the powers of the signal $x_t$ that is already included in the position-related vector, the right side of Equation (2) is simplified to Equation (3). Furthermore, using the approximation in Equation (4), the momentum-related components can be expressed as the differences between the current signal $x_t$ and the past signals $x_{t-j}$ ($j \geq 1$).

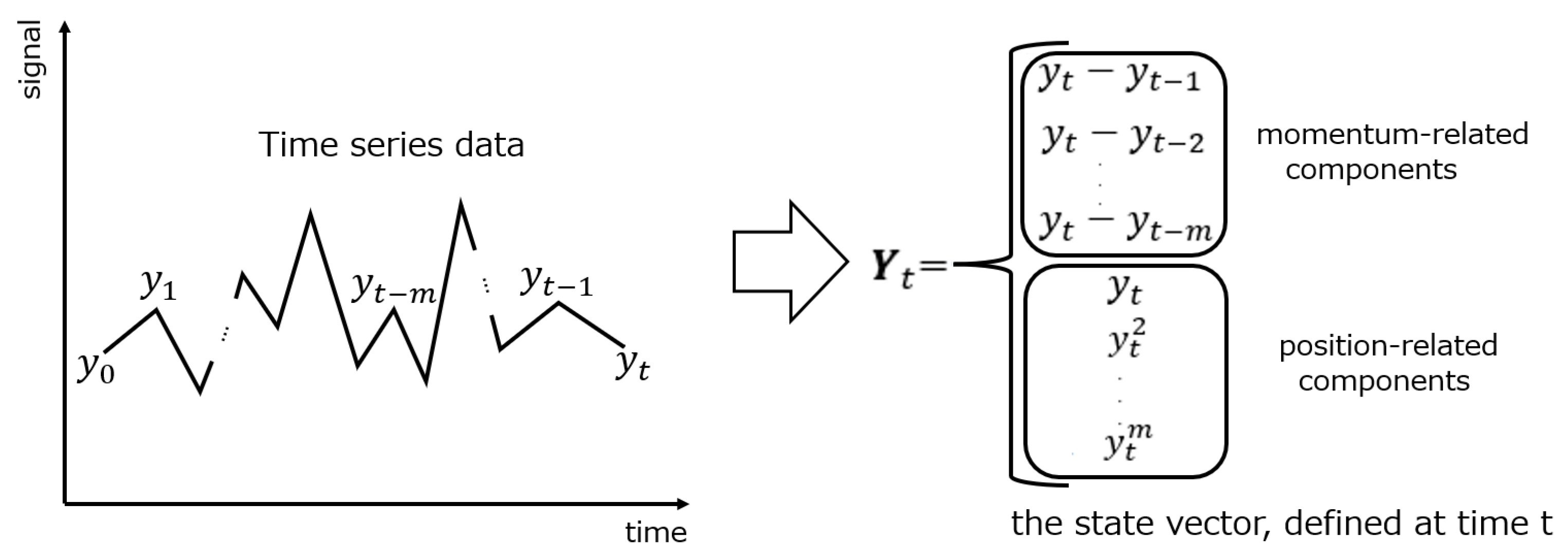

Therefore, as shown in Figure 3, the state variables of the time series at each time $t$ can be represented as a vector consisting of the position-related components derived from the powers of the signal $x_t$ and the momentum-related components formed by the differences between $x_t$ and the past signals $x_{t-j}$ ($j \geq 1$).
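The construction of state variables described above can be sketched as follows. This is a minimal illustration under stated assumptions: the helper name `build_state_vectors`, the choice to start the powers at order 1, and the numbers of powers (`n_powers`) and lagged differences (`n_lags`) are hypothetical, since the text does not fix them.

```python
import numpy as np

def build_state_vectors(series, n_powers=3, n_lags=2):
    """Stack position-like and momentum-like components for each time t.

    position: x_t, x_t^2, ..., x_t^n_powers          (assumed power range)
    momentum: x_t - x_{t-1}, ..., x_t - x_{t-n_lags}  (assumed lag range)
    """
    x = np.asarray(series, dtype=float)
    states = []
    for t in range(n_lags, len(x)):
        position = [x[t] ** k for k in range(1, n_powers + 1)]
        momentum = [x[t] - x[t - j] for j in range(1, n_lags + 1)]
        states.append(position + momentum)
    # Shape: (len(x) - n_lags, n_powers + n_lags)
    return np.array(states)

# Toy signal (illustrative values only).
states = build_state_vectors([1.0, 2.0, 4.0, 7.0], n_powers=2, n_lags=2)
print(states)
```

The first `n_lags` time points are skipped because their lagged differences are not defined, so a series of length T yields T minus `n_lags` state vectors.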

2.2. Koopman Operator and Dynamic Mode Decomposition

To simulate the time evolution of the state variables introduced in the previous subsection, we introduce here the theoretical background of the Koopman operator and its numerical algorithm, Dynamic Mode Decomposition (DMD). The Koopman operator is an operator that describes the time evolution of observables in dynamical systems. It was introduced by Koopman in the 1930s, inspired by the development of quantum mechanics, in relation to the observables of classical Hamiltonian systems [15,16]. In the 21st century, discussions about the applications of the theory have been expanded by Mezić and others to include dissipative dynamical systems, significantly broadening the range of applicable subjects and leading to active research in fields such as applied mathematics and control theory [17,18,19]. Particularly in the field of fluid dynamics, a connection has been reported between a data analysis technique for fluid motion, referred to as Dynamic Mode Decomposition (DMD), and the theory of the Koopman operator [20], and DMD has been widely applied to the analysis of fluid motion. The key point of the theory of dynamical systems using the Koopman operator is the introduction of a function space that fits well with the characteristics of the dynamical system and relates those characteristics to the properties of linear mappings acting on that function space.

This subsection outlines the global linearization of nonlinear dynamical systems using the Koopman operator and demonstrates the potential to characterize the time evolution of state variables based on the spectrum of the Koopman operator. First, we start with a discrete dynamical system described by the following equation.

where

represents discrete time,

is the state vector at time

, and

is a nonlinear mapping in the state space. Due to its nonlinearity, the computation and analysis for

become difficult. In Koopman theory, a function

is introduced as an observable, which maps from the state space

to the complex number field

, and the space formed by the set of functions

is defined as the observable space

. A mapping

, called the Koopman operator, that creates a new observable

from the observable

on the observable space

, is defined as follows.

The operator

is an infinite-dimensional linear operator that maps functions to functions, while the original dynamical system, as shown in Equation (5), is finite-dimensional and nonlinear. In fact, for two observables

,

and scalars

,

, the linearity of the Koopman operator can be demonstrated as follows.

Therefore, it can be considered that the dynamics described in Equation (5) can be analyzed and estimated using the spectrum of

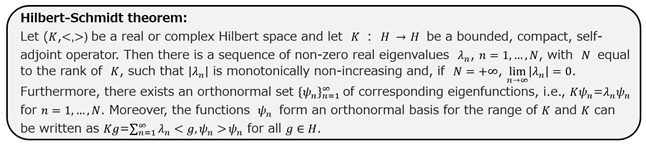

. According to the following Hilbert–Schmidt theorem in functional analysis [

21], if the mapping

is bounded and the observable space is finite-dimensional,

becomes a compact operator, and its spectrum can be represented by discrete eigenvalues. Consequently, any observable can be expanded using the eigenfunctions of

as a basis.
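The statement that the Koopman operator is linear even when the underlying map is nonlinear can be checked numerically. The sketch below takes the standard composition definition, in which the Koopman operator sends an observable g to the function x ↦ g(F(x)). The logistic map used for F, the two observables, and the scalars are arbitrary illustrative choices, not quantities from the paper.

```python
import numpy as np

def koopman(g, F):
    """Return the observable K g, defined by (K g)(x) = g(F(x))."""
    return lambda x: g(F(x))

# A nonlinear map (logistic map), chosen only for illustration.
F = lambda x: 3.7 * x * (1.0 - x)

# Two observables and two scalars (arbitrary choices).
g1 = np.sin
g2 = lambda x: x ** 2
a, b = 2.0, -0.5

x = np.linspace(0.0, 1.0, 11)

# K applied to the linear combination a*g1 + b*g2 ...
lhs = koopman(lambda s: a * g1(s) + b * g2(s), F)(x)
# ... equals the same linear combination of K g1 and K g2.
rhs = a * koopman(g1, F)(x) + b * koopman(g2, F)(x)

print(np.allclose(lhs, rhs))
```

Linearity holds because composition with F is applied to each observable separately, regardless of how nonlinear F itself is.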

![Engproc 101 00003 i001 Engproc 101 00003 i001]()

The observable

in the above Equation (6) can be extended to a multidimensional observable vector

, with

satisfying the following relationships.

Since Equation (9) holds for any observable vector, we can take

as the identity mapping, and the matrix relationship shown in the following Equation (10) is obtained.

where

represents an approximate matrix representation of

, and

on the left side and

on the right side can be constructed from the state variables of the time series. Therefore, by calculating the eigenvalues and eigenvectors of

, we can approximate the spectrum of

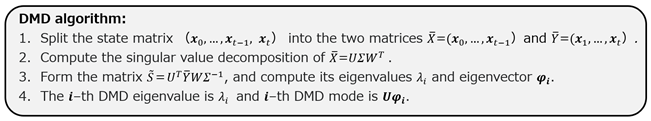

based on finite time series data. To achieve this, we utilize the following Dynamic Mode Decomposition (DMD) algorithm [

10] to determine the eigenvalues and eigenvectors of

.

![Engproc 101 00003 i002 Engproc 101 00003 i002]()
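For orientation, a widely used SVD-based variant of DMD (often called exact DMD) can be sketched as below. This is not necessarily the exact algorithm used in the paper: the function name `dmd`, the optional rank truncation, and the toy linear system are assumptions made for illustration. The snapshot matrices `X` and `Y` hold state vectors as columns, with `Y` shifted one time step ahead of `X`.

```python
import numpy as np

def dmd(X, Y, rank=None):
    """Exact DMD: eigenvalues and modes of the best-fit linear map K with Y ≈ K X."""
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    if rank is not None:
        U, s, Vh = U[:, :rank], s[:rank], Vh[:rank, :]
    S_inv = np.diag(1.0 / s)
    # Projection of K onto the leading left singular vectors of X.
    K_tilde = U.conj().T @ Y @ Vh.conj().T @ S_inv
    eigvals, W = np.linalg.eig(K_tilde)
    # Exact DMD modes, which are eigenvectors of K = Y X^+.
    modes = Y @ Vh.conj().T @ S_inv @ W
    return eigvals, modes

# Toy data generated by a known linear system (illustrative only).
A_true = np.array([[0.9, 0.2],
                   [0.0, 0.5]])
snapshots = [np.array([1.0, 1.0])]
for _ in range(10):
    snapshots.append(A_true @ snapshots[-1])
S = np.column_stack(snapshots)
X, Y = S[:, :-1], S[:, 1:]

eigvals, modes = dmd(X, Y)
print(np.sort(eigvals.real))
```

On this toy system the recovered eigenvalues match those of `A_true` (0.5 and 0.9), because the data are noise-free and generated by an exactly linear map.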

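Furthermore, we introduce the function $\varphi_j(\mathbf{x}) = \mathbf{w}_j^{*}\mathbf{x}$ using the eigenvector $\mathbf{w}_j$ obtained from DMD, which satisfies $\mathbf{K}^{*}\mathbf{w}_j = \bar{\lambda}_j \mathbf{w}_j$ for the DMD matrix $\mathbf{K}$. The function $\varphi_j(\mathbf{x})$ becomes an approximation for the eigenfunction of the Koopman operator $\mathcal{K}$ according to the following equation.

$$(\mathcal{K}\varphi_j)(\mathbf{x}_t) = \varphi_j(\mathbf{x}_{t+1}) = \mathbf{w}_j^{*}\mathbf{x}_{t+1} \approx \mathbf{w}_j^{*}\mathbf{K}\mathbf{x}_t = (\mathbf{K}^{*}\mathbf{w}_j)^{*}\mathbf{x}_t = (\bar{\lambda}_j \mathbf{w}_j)^{*}\mathbf{x}_t = \lambda_j \varphi_j(\mathbf{x}_t) \tag{11}$$

where $\bar{\lambda}_j$ and $\mathbf{K}^{*}$ denote the complex conjugate of $\lambda_j$ and the conjugate matrix of $\mathbf{K}$, respectively. Since $\lambda_j$ and $\bar{\lambda}_j$ are the eigenvalues of the Koopman operator $\mathcal{K}$ as shown in Equation (11), the spectral information of $\mathcal{K}$ can be obtained from the results of DMD calculations using past information of the state variables.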

2.3. Formulation for Demand Forecasting

In the application of demand forecasting, the number of future orders $y_{t+n}$ for an individual service part that we want to forecast for $n$ months ahead at the current time $t$ can be considered as the observed result of the observable $g$ on the current state vector $\mathbf{x}_t$.

$$y_{t+n} = g(\mathbf{x}_t) \tag{12}$$

According to the Hilbert–Schmidt theorem, $g$ can be expanded using the eigenfunctions $\varphi_j$ as the basis, as shown in Equation (13).

$$g(\mathbf{x}_t) \approx \sum_{j=1}^{r} c_j \varphi_j(\mathbf{x}_t) \tag{13}$$

where $r$ indicates the truncation order in the expansion. Additionally, the eigenfunction $\varphi_j$ is the inner product of the state vector $\mathbf{x}_t$ and the DMD eigenvector $\mathbf{w}_j$, which can be calculated using the information from the order history up to the current time $t$. Therefore, if the coefficient $c_j$, which represents the inner product $\langle g, \varphi_j \rangle$, is known, we can determine the future number of orders.

Since the coefficient $c_j$ is independent of time, it can be calculated using the following optimization and machine learning techniques. In optimization techniques, the determination of $c_j$ is reduced to minimizing the sum of the squares of the differences between the number of orders $n$ months ahead and its approximated value based on the expansion using the eigenfunctions $\varphi_j$, as shown in Equation (15).

$$\min_{c_1, \ldots, c_r} \sum_{t} \left| y_{t+n} - \sum_{j=1}^{r} c_j \varphi_j(\mathbf{x}_t) \right|^{2} \tag{15}$$

On the other hand, in the machine learning techniques, the determination of $c_j$ can be reformulated as a regression model that takes the vector of the inner products of the state vector $\mathbf{x}_t$ at the current time $t$ and the eigenvectors $\mathbf{w}_j$ as input, and outputs the number of orders $n$ months ahead based on the data available up to the current time $t$.

In demand forecasting, the best representation of $g$ can be selected from the results of the optimization techniques and the machine learning techniques for making predictions.
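The optimization route described above amounts to an ordinary least-squares problem once the eigenfunction values are available. The following sketch assumes that each eigenfunction value is the inner product of a DMD eigenvector with the state vector, and that the eigenvectors are stored as columns of a matrix. The helper names `fit_coefficients` and `forecast`, the array layout, and the toy data are illustrative assumptions rather than the authors' implementation.

```python
import numpy as np

def fit_coefficients(states, eigvecs, orders, horizon):
    """Least-squares fit of c in  y_{t+n} ≈ sum_j c_j * phi_j(x_t),  phi_j(x) = w_j^* x.

    states : (T, d) array, row t is the state vector x_t
    eigvecs: (d, r) array, column j is the eigenvector w_j
    orders : length-T array, orders[t] is the order quantity at time t
    horizon: forecast horizon n (in time steps)
    """
    phi = states @ eigvecs.conj()                 # (T, r) eigenfunction values
    features = phi[:-horizon].astype(complex)     # states that have a known target
    targets = np.asarray(orders, dtype=float)[horizon:].astype(complex)
    coeffs, *_ = np.linalg.lstsq(features, targets, rcond=None)
    return coeffs

def forecast(state, eigvecs, coeffs):
    """Forecast n steps ahead from the current state vector."""
    return float(np.real((state @ eigvecs.conj()) @ coeffs))

# Toy example: state x_t = (t, 1), orders y_t = 3 t + 1, horizon n = 2.
T = 8
states = np.column_stack([np.arange(T, dtype=float), np.ones(T)])
orders = 3.0 * np.arange(T) + 1.0
W = np.eye(2)  # stand-in for DMD eigenvectors (illustrative only)

coeffs = fit_coefficients(states, W, orders, horizon=2)
pred = forecast(np.array([10.0, 1.0]), W, coeffs)
print(pred)
```

In the toy example the target two steps ahead of time 10 is the order quantity at time 12, which is 37, and the fitted expansion reproduces it because the data are exactly linear in the chosen features.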

2.4. Forecasting Trials Using Test Functions

Forecasting trials using test functions were carried out to verify the adequacy of the dynamical forecasting process discussed in

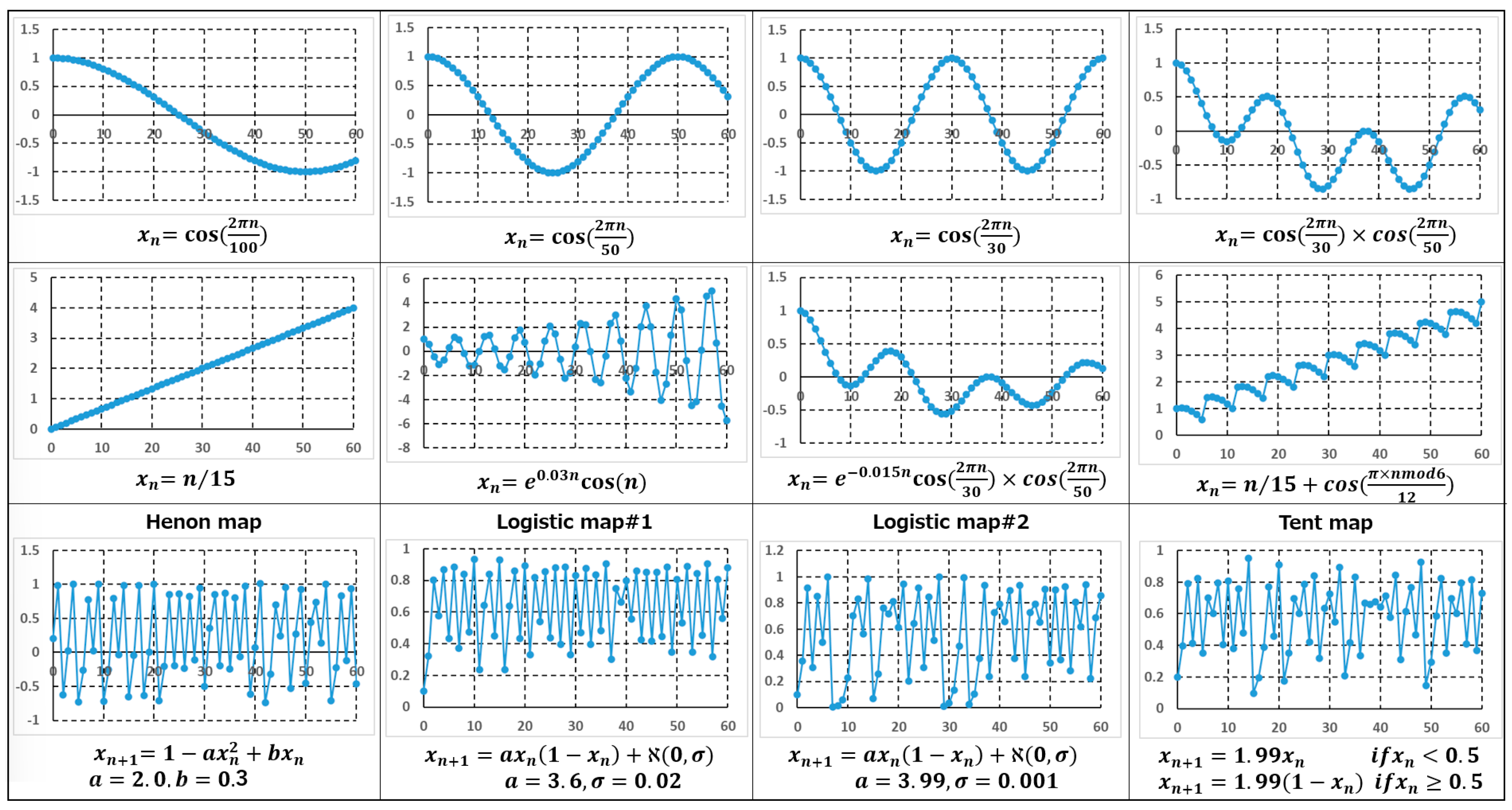

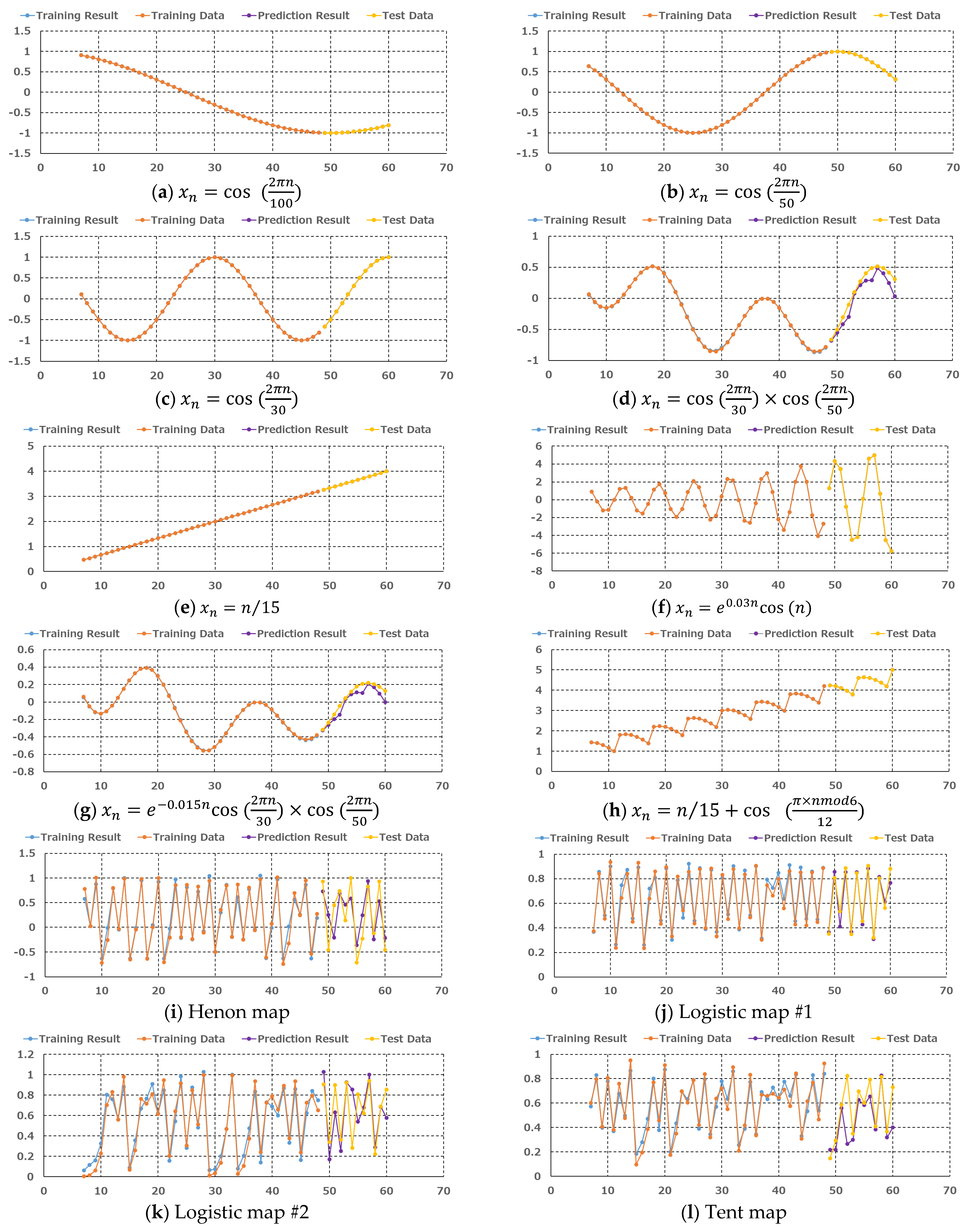

Section 2.3 above. The test functions consisted of twelve functions shown in

Figure 4. In the figure, the four functions in the top row represent periodicity, the four functions in the middle row indicate trends of increase and decrease, and the four functions in the bottom row show irregularity. The predictability of data containing periodicity and trend variations can be evaluated based on the forecasting results using the function sets from the top and middle rows. On the other hand, the functions in the bottom row, including the Henon map [

22], logistic maps [

23], and the tent map [

24], exhibit chaotic behaviors. The data from logistic maps are also added with a small level of random noise. Therefore, the forecasting capability of data characterized by irregularity and randomness can be assessed using the results of those functions.
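For readers who wish to reproduce irregular test series of this kind, the chaotic maps named above can be generated as in the sketch below. The parameter values, initial conditions, noise level, and random seed are assumptions chosen for illustration (only the series length of 61 points follows the text), and they are not necessarily the settings behind Figure 4.

```python
import numpy as np

def logistic_map(n, r=3.9, x0=0.2):
    """x_{i+1} = r * x_i * (1 - x_i)."""
    x = np.empty(n)
    x[0] = x0
    for i in range(1, n):
        x[i] = r * x[i - 1] * (1.0 - x[i - 1])
    return x

def tent_map(n, mu=1.9, x0=0.3):
    """x_{i+1} = mu * min(x_i, 1 - x_i)."""
    x = np.empty(n)
    x[0] = x0
    for i in range(1, n):
        x[i] = mu * min(x[i - 1], 1.0 - x[i - 1])
    return x

def henon_map(n, a=1.4, b=0.3, x0=0.0, y0=0.0):
    """x_{i+1} = 1 - a * x_i^2 + y_i,  y_{i+1} = b * x_i; returns the x series."""
    x = np.empty(n)
    y = np.empty(n)
    x[0], y[0] = x0, y0
    for i in range(1, n):
        x[i] = 1.0 - a * x[i - 1] ** 2 + y[i - 1]
        y[i] = b * x[i - 1]
    return x

n_months = 61
logistic = logistic_map(n_months)
tent = tent_map(n_months)
henon = henon_map(n_months)

# Logistic series with a small amount of additive noise (assumed noise level).
rng = np.random.default_rng(0)
logistic_noisy = logistic + 0.01 * rng.standard_normal(n_months)
```

Because these maps are sensitive to initial conditions, small changes in `x0` or in the noise produce visibly different series after a few dozen steps.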

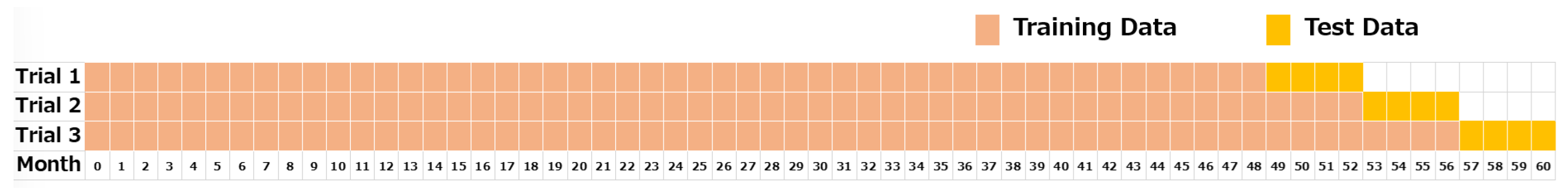

The test functions simulate the order history of service parts, producing time series that correspond to monthly orders over a period of 61 months. In the forecasting trials, as shown in Figure 5, the most recent 12 months of data are split into three equal segments of four months each, and each segment is used in turn as the forecast target, while the data preceding it are used as training data.
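The trial layout described above can be written down as index ranges. The sketch below assumes that the training window for each trial contains all months before the corresponding four-month target segment, so that it grows from one trial to the next. The function name `rolling_splits` and this expanding-window reading are assumptions.

```python
def rolling_splits(n_months=61, test_months=12, n_trials=3):
    """Return (train_indices, test_indices) for each forecasting trial."""
    segment = test_months // n_trials          # four months per trial
    first_test = n_months - test_months        # index of the first test month
    splits = []
    for k in range(n_trials):
        test_start = first_test + k * segment
        train_idx = list(range(0, test_start))                   # all prior months
        test_idx = list(range(test_start, test_start + segment))
        splits.append((train_idx, test_idx))
    return splits

splits = rolling_splits()
for k, (train_idx, test_idx) in enumerate(splits, start=1):
    print(f"trial {k}: train months 0-{train_idx[-1]}, test months {test_idx}")
```

With 61 months of data, the first trial trains on 49 months and the third on 57 months under this reading.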

The results of the forecasting trials are presented in Figure 6. In the figure, the time series data of the test functions are divided into the training data used in trial 1 (see Figure 5) and the full set of test data, and are shown along with the corresponding training results and forecasting results. As shown in Figure 6a–h, the training and forecasting results for data with periodicity and trend variations almost perfectly coincide with the training and test data, indicating very good training and forecasting accuracy. In particular, the forecasting for time series representing trend variations demonstrates high accuracy even outside the range of the training data. This reflects the validity of the dynamical representation for the transition of regular time series data, which makes highly accurate extrapolative forecasting possible. On the other hand, as shown in Figure 6i–l, while the training and forecasting results for irregular time series data generally reproduce the training and test data, the training and forecasting errors tend to be larger than those for the regular time series data. Contributing factors may include the sensitivity to initial conditions in chaotic systems [25] and the influence of random noise. Therefore, in forecasting time series data that mix regular and irregular variations, better forecasting accuracy can be expected by separating these two types of variations from the original data.

2.5. Improvement Using EEMD

The results in the previous subsection show some impacts of mode mixing and noise in the numerical calculations of forecasting values. In forecasting a time series pattern that includes both regular and irregular fluctuations, the influence of irregular components may lead to a decrease in overall forecasting accuracy. As a solution to this issue, decomposing complex time series patterns into regular and irregular components using mode decomposition, and then utilizing the resulting modes for forecasting, is expected to improve forecasting accuracy.

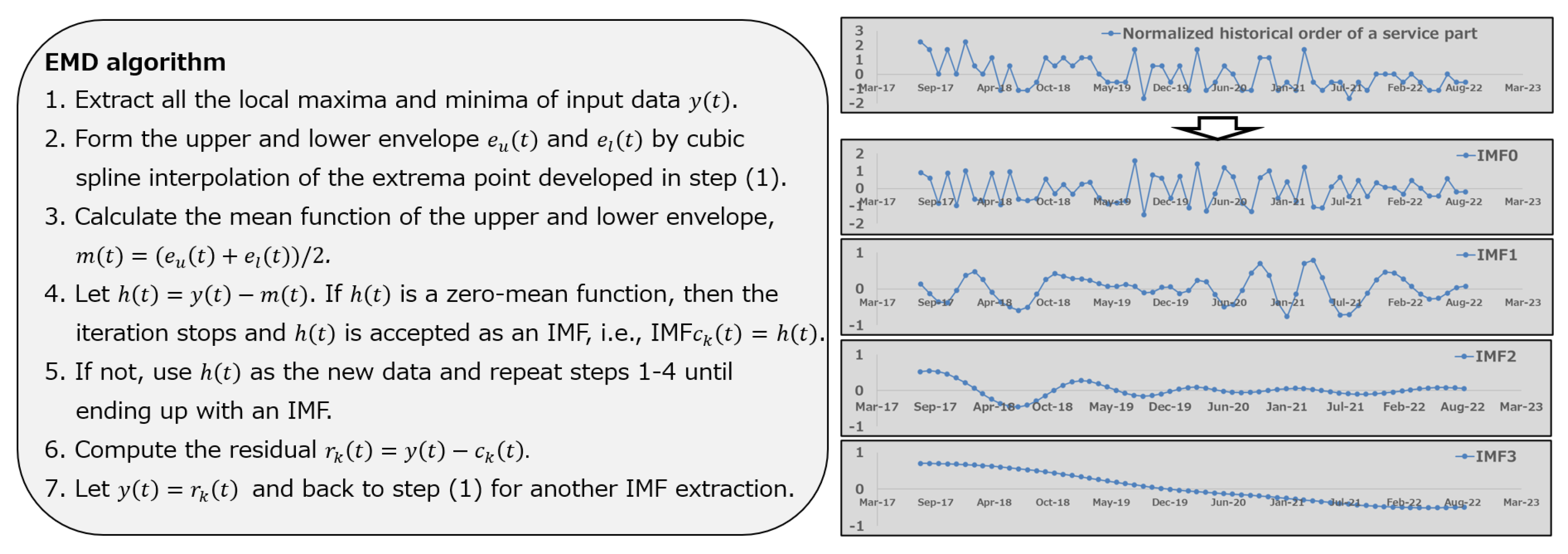

This subsection introduces Ensemble Empirical Mode Decomposition (EEMD) to adaptively decompose any time series into multiple Intrinsic Mode Functions (IMFs) that change at different time scales. EEMD is an improved version of the signal processing algorithm EMD (Empirical Mode Decomposition) proposed by Huang et al. [26]. As shown in Figure 7, the traditional EMD algorithm calculates the upper and lower envelopes of the target signal (time series), determines the average envelope, and then computes the IMF by finding the difference between the average envelope and the target signal, thus decomposing the target signal into multiple intrinsic modes. The characteristic of EMD is that it decomposes the given target signal without assuming basis functions or window functions, allowing for the easy identification of nonlinear oscillation modes at different time scales while preserving the time-frequency characteristics of the target signal. However, since no basis functions are used, the decomposition results may include mixed modes, which presents the challenge of mode mixing [10]. In contrast, the EEMD algorithm, which is an improvement on EMD, adds white noise to the given target signal, then decomposes the signal into various time scales and calculates the decomposition results. This process is repeated multiple times to calculate the ensemble average of the decomposition results, yielding the IMFs. Consequently, EEMD, which enables stable mode decomposition, is considered useful when the data include complex phenomena such as nonlinearity, non-stationarity, and the presence of noise [27,28,29].
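The envelope-averaging and noise-ensemble ideas described above can be illustrated with a heavily simplified sketch. It is not a full EMD or EEMD implementation: envelopes are built by linear interpolation rather than the cubic splines commonly used, a fixed number of sifting steps replaces a stopping criterion, and only the first (fastest) IMF is extracted. All function names, parameters, and the toy signal are assumptions made for illustration.

```python
import numpy as np

def local_extrema(x):
    """Indices of strict interior local maxima and minima."""
    d = np.diff(x)
    maxima = np.where((d[:-1] > 0) & (d[1:] < 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] > 0))[0] + 1
    return maxima, minima

def sift_once(x):
    """One sifting step: subtract the mean of the upper and lower envelopes."""
    t = np.arange(len(x))
    maxima, minima = local_extrema(x)
    # Linear envelopes; np.interp holds the end values flat outside the extrema.
    upper = np.interp(t, maxima, x[maxima])
    lower = np.interp(t, minima, x[minima])
    return x - 0.5 * (upper + lower)

def eemd_first_imf(x, n_ensemble=50, noise_std=0.1, n_sift=5, seed=0):
    """Ensemble average of the first IMF over noise-perturbed copies of x."""
    rng = np.random.default_rng(seed)
    acc = np.zeros(len(x))
    for _ in range(n_ensemble):
        h = x + noise_std * np.std(x) * rng.standard_normal(len(x))
        for _ in range(n_sift):
            h = sift_once(h)
        acc += h
    return acc / n_ensemble

# Toy signal: linear trend plus a six-month oscillation (illustrative only).
t = np.arange(61)
signal = 0.05 * t + np.sin(2.0 * np.pi * t / 6.0)
imf = eemd_first_imf(signal)
print(f"signal mean: {signal.mean():.3f}, first-IMF mean: {imf.mean():.3f}")
```

A complete decomposition would subtract the extracted IMF from the signal and repeat the procedure on the remainder until only a monotonic residual is left.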

In demand forecasting, EEMD is performed using the historical order data of a single service part as input. An example of EEMD results, shown in Figure 7, reveals that as the order of the IMF increases, the waveform of the IMFs changes from high-frequency fluctuations to low-frequency fluctuations, and eventually to a monotonic increase or decrease. This characteristic allows for a reduction in the complexity of forecasting modeling by decomposing into IMF modes, compared to building a regression model that directly uses order history data. As a result, we can expect enhanced forecasting accuracy for various demand patterns.

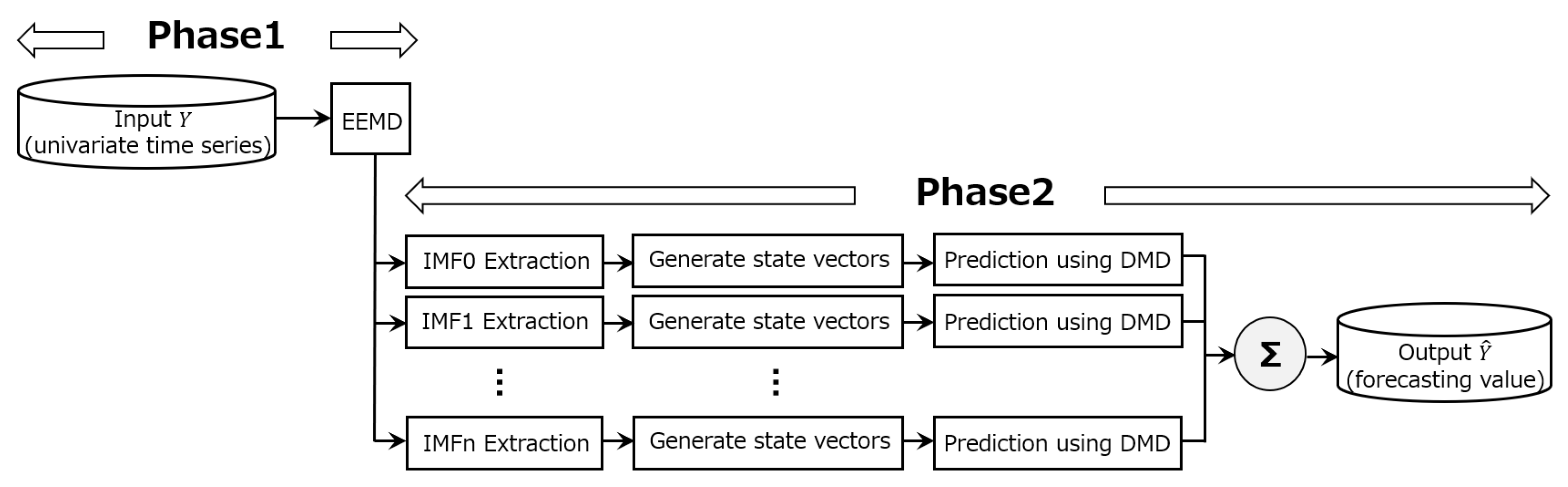

Based on the above considerations, we establish a forecasting process that combines Ensemble Empirical Mode Decomposition (EEMD) and Dynamic Mode Decomposition (DMD). The process, shown in Figure 8, consists of the following two phases.

Phase 1: The order history for each service part, i.e., univariate time series, is decomposed into Intrinsic Mode Functions (IMFs) to separate the long-term fluctuation components (regular components) from the short-term fluctuation components (irregular components) included in the data.

Phase 2: After generating state vectors for each IMF mode, a regression model using Dynamic Mode Decomposition (DMD) based on state vector information as input is trained and used to predict the future values corresponding to each IMF. Finally, the demand forecast is obtained by summing the forecast values for each IMF.

4. Results and Discussion

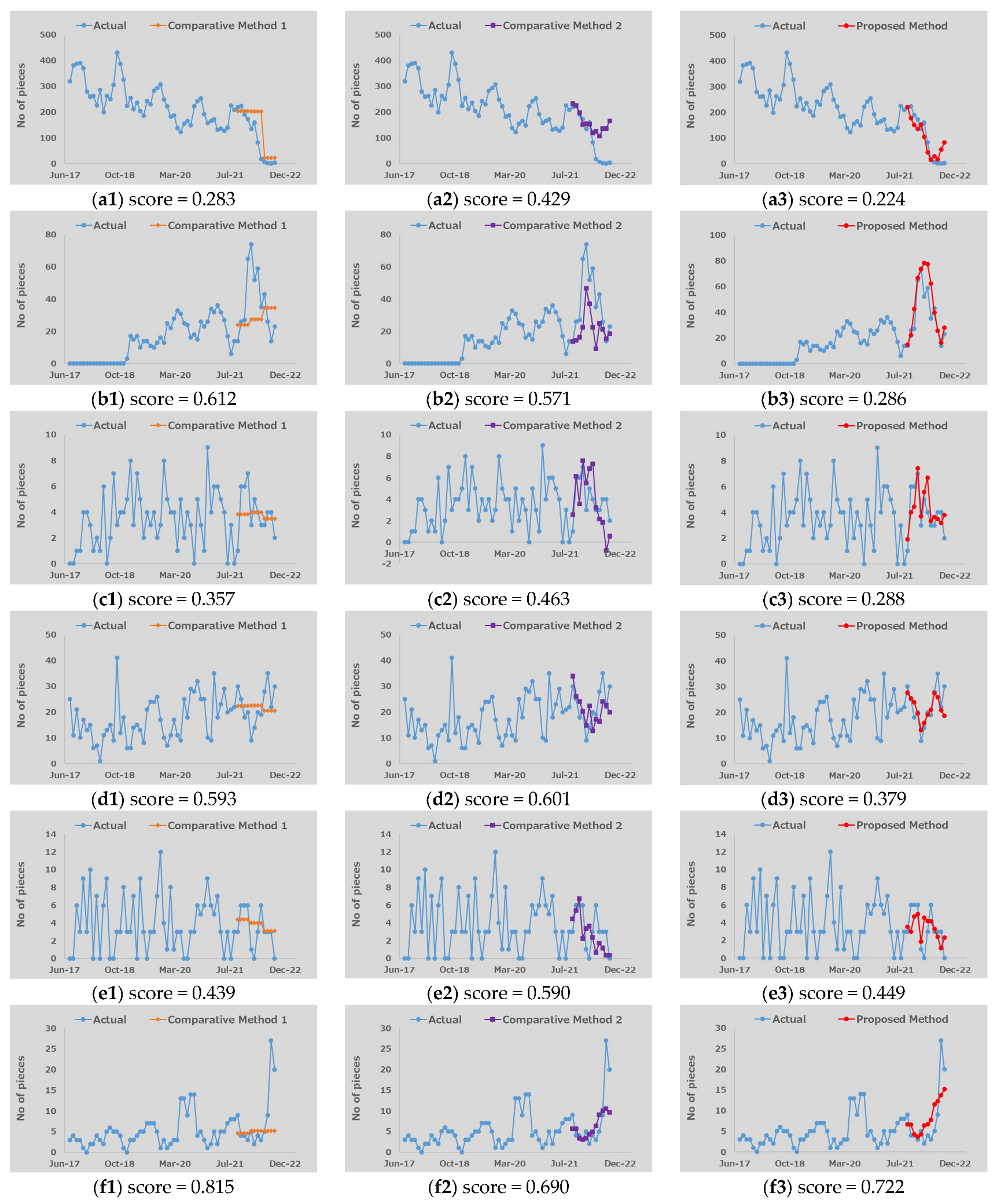

A comparison of score results between the proposed method and two comparative methods is shown in Table 1, where lower scores indicate better forecasting accuracy. In the results of average scores, which represent the overall forecasting accuracy of 8,605 parts, the higher score (0.433) of Comparative Method 2 compared to Comparative Method 1 (0.370) highlights the difficulties of ensuring demand forecasting accuracy across a wide range of service parts even when using sophisticated statistical and machine learning techniques for time series forecasting. On the other hand, the forecast produced by the proposed method reduces the average score to 0.269, a decrease of 27% and 38% compared to Comparative Method 1 and Comparative Method 2, respectively. The results indicate that reliable overall forecasting accuracy can be achieved by utilizing the proposed method. In the analysis of the relationships between the score values and the number of parts, the number of parts with good forecasting accuracy (those with score values under 0.3) in the proposed method greatly exceeds that of the comparative methods, with a significant increase of about 1500 parts. As a result, the number of parts with intermediate forecasting accuracy (those with score values between 0.3 and 0.7) and low forecasting accuracy (those with score values above 0.7) in the proposed method decreases significantly. However, there is still a small percentage of service parts with low forecasting accuracy in the proposed method, indicating a need to consider improvements in accuracy and management of the forecast values for the demand of these service parts.
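The quoted percentage reductions follow directly from the average scores reported above, as the short check below shows. The helper name `relative_reduction` is an illustrative assumption.

```python
def relative_reduction(baseline, proposed):
    """Fractional decrease of `proposed` relative to `baseline`."""
    return (baseline - proposed) / baseline

r1 = relative_reduction(0.370, 0.269)  # versus Comparative Method 1
r2 = relative_reduction(0.433, 0.269)  # versus Comparative Method 2
print(f"{100 * r1:.1f}% and {100 * r2:.1f}%")
```

Rounded to whole percentages, these values agree with the 27% and 38% decreases stated in the text.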

Figure 10 illustrates the comparison results of forecasting accuracy advantages in the dataset between the proposed method and each comparative method. The proposed method shows a greater number of advantageous parts compared to the comparative methods, with a ratio of part numbers of 8:1 between the proposed method and Comparative Method 1, and a ratio of part numbers of 7:2 between the proposed method and Comparative Method 2. The results suggest that the use of DMD and EEMD can enhance forecasting accuracy across various demand patterns for different individual parts. Meanwhile, the number of service parts that achieve better forecasting accuracy using the comparative methods implies that there are some demand patterns in which the proposed method is not superior to the others.

The actual historical orders, forecast waveforms, and corresponding score values for some representative parts with multiple demand patterns are shown in Figure 11 and Figure 12. In each figure, each row shows the forecast results for an individual service part, while the left, middle, and right columns show the forecast results using Comparative Method 1, Comparative Method 2, and the proposed method, respectively. First, the results for demand patterns with strong seasonality (annual periodicity) are presented in Figure 11a. The forecast waveforms of Comparative Method 2 and the proposed method reproduce the annual periodic fluctuations more accurately than Comparative Method 1, with Comparative Method 2 showing the best results due to its assumption of annual demand patterns in the historical order data of service parts. Conversely, for the demand patterns representing weak annual periodicity (where order quantities fluctuate within a trend of annual periodicity) shown in Figure 11b and the two-year periodic demand pattern shown in Figure 11c, the forecast accuracy of the proposed method exceeds that of Comparative Method 2, suggesting the capabilities of DMD and EEMD for forecasting demand patterns with different periodicities.

Figure 11e,f show the forecasting results for demand patterns dominated by increasing and decreasing trends. In such demand patterns, the proposed method shows slight superiority over the comparative methods even without any assumption of trends. Furthermore, in the demand patterns where the range of values in the forecast period differs from that in the training period, as shown in Figure 12a,b, the extrapolation forecasts using DMD in the proposed method demonstrate very good accuracy. This result confirms the capability of DMD in extrapolative forecasting not only for test functions but also for real data.

In demand patterns with high order quantities and weak variability, shown in Figure 11d, all methods yield good accuracy in their forecasting results. On the other hand, in demand patterns characterized by low order quantities and strong variability, the results in Figure 12c,d show the clear superiority of forecasts made by the proposed method. Generally, the proposed method consistently delivers good accuracy across all previously discussed demand patterns, indicating its capability for forecasting both regular and irregular demand patterns where randomness is not a dominant factor.

The qualitative reproducibility of the proposed method in the demand patterns characterized by strong random fluctuations at low order quantity levels is shown in Figure 12e. However, its quantitative forecasting results are still inadequate. In addition, in the demand patterns shown in Figure 12f, where unprecedented demands occur, the current forecasting approaches based on historical order data cannot accurately represent such sudden fluctuations, making reliable forecasting impossible. Therefore, it is necessary to consider improvements to the proposed method so that it not only predicts future demand but also assesses the range of possible outcomes. As a potential solution, we can focus on IMF0, which exhibits the most irregular fluctuations among the modes obtained by EEMD, and consider expressing the time evolution of IMF0 using DMD while taking uncertainty into account. Consequently, a DMD theory that considers probabilities becomes significant, and its development and application can be seen as one of the future prospects of the proposed method.

5. Conclusions

To gain customer satisfaction, manufacturers are required to provide a timely supply of vehicle service parts for accidents and regular consumable replacements. However, while a safe inventory level is needed to avoid delays in part supply due to stock shortages, excess inventory increases warehouse management costs. For these reasons, demand forecasting of service parts is a critical process for maintaining appropriate inventory levels.

In this paper, a demand forecasting method for vehicle service parts, based on a dynamical approach and time series data, has been developed to satisfy requirements in terms of operation costs and forecasting accuracy. An analytical derivation based on Koopman theory and Dynamic Mode Decomposition (DMD) algorithm, under the assumption that demand transitions can be represented by the dynamics of the corresponding state variables in time series, shows that forecasting for future demands can be achieved by using historical orders of a single service part. Due to this fact, the calculation of the proposed method can be easily applied with reasonable computational requirements.

Forecasting trials using test functions were conducted to theoretically confirm the validity of the proposed method. Excellent agreement between the forecasting values and the test function data shows the capability of the proposed method to predict the future values of various time series data with complex characteristics such as periodicities, trends, nonlinear variability, and chaotic behaviors. Based on trial results, an improvement using Ensemble Empirical Mode Decomposition (EEMD) was implemented to enhance the effectiveness of the proposed method in practice. By applying EEMD, univariate time series data can be decomposed into multiple modes with lower complexity, and as a result, the transition prediction of each mode using DMD can be conducted with higher accuracy.

An application of the proposed method to an order dataset of service parts was also conducted to confirm its effectiveness in practice. A significant reduction in forecasting errors obtained by the proposed method, in comparison with two other forecasting methods, shows its superiority in forecasting various demand patterns of service parts.

Based on all of the above results, the proposed method can be used with high confidence to predict and analyze future demands at the individual service part level. The application of the proposed method is not only for the prediction and evaluation of demands for vehicle service parts but can also be extended to other time series forecasting problems. On the other hand, it was also found that the forecasting accuracy of the proposed method is not satisfactory for unprecedented demand patterns or those that change randomly with low order quantities. In demand patterns dominated by unpredictable factors, achieving safe inventory levels and optimal stock management using current forecasts is still challenging. To overcome this problem, improvements and expansions of the proposed method by applying theories [38,39] that account for uncertainty in time series are required, and this will be addressed in future work.
