Abstract

Recent advances in Machine Learning have significantly improved anomaly detection in industrial screw driving operations. However, most existing approaches focus on binary classification of normal versus anomalous operations or employ unsupervised methods to detect novel patterns. This paper introduces a comprehensive dataset of screw driving operations encompassing 25 distinct error types and presents a multi-tiered analysis framework for error-specific classification. Our results demonstrate varying detectability across different error types and establish the feasibility of multi-class error detection in industrial settings. The complete dataset and analysis framework are made publicly available to support future research in manufacturing quality control.

1. Introduction

Threaded fasteners are among the most common joining methods in manufacturing, with countless screw connections made daily across industries. Their quality directly impacts product integrity and safety, with faulty connections potentially causing product failures. Traditional quality control methods often rely on rule-based torque monitoring, which detects major deviations but lacks sensitivity for identifying specific error types [1].

Recent Machine Learning applications in manufacturing quality control have demonstrated success in anomaly detection for screw driving operations. Previous research has employed both supervised and unsupervised methods, with studies reporting competitive accuracies, mostly for binary classification [2,3,4,5,6,7]. However, while distinguishing between normal and anomalous operations has been studied extensively, the classification of specific error types has received limited attention.

This paper addresses this gap by introducing a comprehensive dataset encompassing 25 distinct error types and evaluating the feasibility of multi-class error detection in industrial settings. We contribute (1) a public dataset of labeled screw driving operations covering diverse error types, (2) a multi-tiered analysis framework for evaluating error detection capabilities, and (3) empirical evidence demonstrating varying detectability across different error categories. Our approach moves beyond simply identifying that an error exists to determining which specific error is occurring, enabling more targeted corrective actions in industrial applications.

2. Related Work

Machine learning applications in manufacturing quality control have evolved from statistical process control to data-driven methods that identify complex quality-related patterns. Recent studies have documented this transition, highlighting trends toward end-to-end systems that integrate sensing, feature extraction, and decision-making [2,3]. For time series data specifically, appropriate feature selection can enhance both the accuracy and the efficiency of anomaly detection systems, with methods such as Dynamic Time Warping (DTW) reported to improve classification while reducing computational complexity.
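Because DTW recurs in the related work, a short sketch may help. The classic dynamic-programming formulation aligns two series by allowing a time index to be repeated, so curves that differ mainly by a time shift obtain a small distance. This is a generic textbook version written for illustration (the name `dtw_distance` and the toy values are hypothetical), not the implementation used in any of the cited studies.

```python
import numpy as np


def dtw_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Dynamic time warping distance between two 1-D series.

    Textbook O(len(a) * len(b)) dynamic program with absolute-difference
    cost and no warping-window constraint (illustrative only).
    """
    n, m = len(a), len(b)
    # acc[i, j]: minimal accumulated cost of aligning a[:i] with b[:j]
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # extend the cheapest predecessor: diagonal match, or repeat one index
            acc[i, j] = cost + min(acc[i - 1, j - 1], acc[i - 1, j], acc[i, j - 1])
    return float(acc[n, m])


# Two toy torque ramps (Nm): the second reaches the same values one step later.
ramp = np.array([0.0, 0.5, 1.0, 1.4, 1.4])
delayed = np.array([0.0, 0.0, 0.5, 1.0, 1.4])
print(dtw_distance(ramp, delayed))        # 0.0: warping absorbs the time shift
print(np.abs(ramp - delayed).sum() > 0)   # True: a point-wise comparison does not
```

Practical DTW implementations usually add a warping window (e.g., a Sakoe-Chiba band) to limit both pathological alignments and run time.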

Screw driving monitoring has progressed from basic torque measurement to sophisticated multi-sensor approaches. Traditional methods analyze torque-angle curves but have limited capability for detecting subtle variations [1]. Aronson et al. [5] demonstrated data-driven classification approaches for screwdriving operations, showing the feasibility of supervised learning techniques for distinguishing between different operational characteristics in fastening processes. In an unsupervised setting, Zhou and Paffenroth [4] used robust deep autoencoders to detect anomalies in noisy screw driving data without requiring clean training data, demonstrating superior performance in distinguishing between normal operations and various defects.

Most existing work on screw driving quality control has focused on binary classification. Teixeira et al. [3] applied multivariate statistical process control (MSPC) techniques for monitoring screwing processes in smart manufacturing environments. Ribeiro et al. [2] achieved high accuracy in identifying faulty operations using isolation forests and deep autoencoders but treated all anomalies as a single class. Unsupervised approaches have also shown promise, with West et al. [1] achieving competitive accuracies using k-means clustering with DTW, an approach that proved particularly effective for imbalanced datasets. Sakamoto et al. [7] demonstrated a successful real-time implementation of an isolation forest for detecting bottom-touch defects, achieving 0% false negatives with minimal false positives in an actual production environment.
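The binary, unsupervised setting described above can be illustrated with scikit-learn's `IsolationForest`, fitted on normal runs only. The synthetic curves (a linear ramp for normal runs, a quadratic ramp for anomalous runs) and all parameter values are assumptions for illustration; the sketch does not reproduce the pipelines of the cited studies. Its output is a single inlier/outlier flag, so all error types collapse into one anomaly class.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 50)

# Hypothetical feature vectors: 200 normal runs follow a linear torque ramp,
# 20 anomalous runs follow a distorted (quadratic) ramp; both with small noise.
normal = 1.4 * t + rng.normal(0.0, 0.02, size=(200, 50))
anomalous = 1.4 * t**2 + rng.normal(0.0, 0.02, size=(20, 50))

# Fit on normal operations only; no error labels are used.
detector = IsolationForest(n_estimators=200, random_state=0).fit(normal[:150])

# predict() returns +1 for inliers and -1 for outliers (anomalies).
pred_normal = detector.predict(normal[150:])
pred_anomalous = detector.predict(anomalous)
false_alarms = int((pred_normal == -1).sum())
detected = int((pred_anomalous == -1).sum())
print(f"false alarms: {false_alarms}/50, detected anomalies: {detected}/20")
```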

The classification of specific errors has received little attention in manufacturing contexts. While some research has attempted to classify broad categories of screw driving errors or proposed hierarchical classification approaches for assembly errors, multi-class error detection in screw driving operations remains largely unexplored, particularly with regard to the physical similarity between different error mechanisms, which often leads to classification confusion. Cheng et al. [6] addressed part of this challenge through sensor selection and multi-class stage and result classification for automated miniature screwdriving, demonstrating methods to distinguish between different error types despite this similarity.

This gap underscores the need for a systematic analysis of distinct error types in screw driving operations, which the present study addresses through a comprehensive evaluation of 25 error classes. By examining the separability of these errors in the feature space, we move beyond identifying that an anomaly exists to determining which specific error is occurring, a crucial advancement for targeted quality control measures.

3. Dataset and Experimental Setup

Our experimental data was collected using an automated screw driving station equipped with an electric screwdriver (Bosch Rexroth AG, Germany, with CS351S-D controller) that had previously been used in production and was relocated to a laboratory setting for controlled data collection. The system operated on a multi-step tightening program with a target torque of 1.4 Nm (process window: 1.2–1.6 Nm), using the same settings as in serial production. All experiments were conducted under consistent environmental conditions to minimize external influences, and all data was collected over the course of one week.

The dataset systematically explores 25 experimental conditions organized into 5 error groups that represent different fault mechanisms in screw driving operations, as shown in Table 1. Group 1 (Screw Quality Deviations, classes 101–105) represents alterations to the fastener itself, such as deformed threads and coated screws. Group 2 (Contact Surface Modifications, classes 201–205) encompasses variations affecting the interface between screw and component, including damaged surfaces and inserted rings. Group 3 (Component or Thread Modifications, classes 301–305) features alterations impacting the structural interaction between screw and threaded component, such as enlarged holes and reduced material strength. Group 4 (Environmental Conditions, classes 401–405) includes variations in surface conditions affecting frictional and material properties, such as lubricants and temperature changes. Group 5 (Process Parameter Variations, classes 501–504) represents modified tightening parameters, including velocity and torque adjustments.

Table 1. Error classes organized by logical groups representing different fault mechanisms.

The data was gathered using 2500 workpieces (plastic housing consisting of two halves joined together), with two screws each, resulting in 5000 observations. Since each screw position (left and right) exhibits different characteristics, this analysis focuses exclusively on the left position with 2500 observations. The control group containing normal operations was categorized within Group 5 as the baseline condition to maintain a set of five classes for all groups and enable consistent comparative analysis.
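Because the class codes encode the group in their hundreds digit, the groupings used throughout this paper can be derived programmatically. A minimal sketch, assuming labels of the form `<code>_<name>` as written in this paper (e.g., `103_glued-screw-tip`) and assuming the control group is likewise coded within Group 5; the helper names are hypothetical and not part of PyScrew. The running index (group − 1) · 5 + position matches the class numbers used in the discussion of Figure 4 (e.g., 203 → 8, 501 → 21, 502 → 22).

```python
def parse_code(label: str) -> tuple[int, int]:
    """Split a label such as '103_glued-screw-tip' into (group, position)."""
    code = int(label.split("_", 1)[0])
    group, position = divmod(code, 100)
    if not (1 <= group <= 5 and 1 <= position <= 5):
        raise ValueError(f"unexpected class code: {code}")
    return group, position


def group_label(label: str) -> int:
    """Error group 1-5, as used in the grouped 6-class scenario (0 = normal)."""
    return parse_code(label)[0]


def class_index(label: str) -> int:
    """Running index 1-25 for the full multi-class scenario (0 = normal)."""
    group, position = parse_code(label)
    return (group - 1) * 5 + position


for name in ["103_glued-screw-tip", "203_metal-ring-upper-part", "501_increased-ang-velocity"]:
    print(name, group_label(name), class_index(name))
# 103_glued-screw-tip 1 3
# 203_metal-ring-upper-part 2 8
# 501_increased-ang-velocity 5 21
```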

Each operation’s data includes time series measurements of torque, angle, gradient, and time throughout the tightening process. Recording these complete fastening profiles makes it possible to analyze how different fault conditions manifest in the measured curves.

We published the dataset under an open-access license (West, N.; Deuse, J. Industrial screw driving dataset collection: Time series data for process monitoring and anomaly detection (v1.2.2), 2025. Available online: http://doi.org/10.5281/zenodo.14729547, accessed on 7 August 2025). To facilitate data access, we developed PyScrew, a dedicated Python (3.11) library providing streamlined functions for working with the screw driving time series data (West, N. PyScrew: A Python package designed to simplify access to industrial research data from screw driving experiments, 2025. Available online: http://github.com/nikolaiwest/pyscrew, accessed on 7 August 2025). The library implements a preprocessing pipeline that includes run filtering, handling of quality-related issues such as missing values and duplicates, and length standardization by padding. Its interface provides access to multiple screw driving datasets, facilitating comparative analysis across different experimental conditions and enabling reproducible research in industrial quality control (a more comprehensive introduction to the library is available at http://arxiv.org/pdf/2505.11925, accessed on 7 August 2025).
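The cleaning and padding steps named above can be illustrated on a single run. The sketch below uses plain NumPy and is not the PyScrew API; the function name `clean_and_pad`, the rule of dropping samples with missing values, treating repeated time stamps as duplicates, and the padding value of 0.0 are all assumptions made for illustration.

```python
import numpy as np


def clean_and_pad(time: np.ndarray, torque: np.ndarray, target_len: int,
                  pad_value: float = 0.0) -> np.ndarray:
    """Clean one run and standardize its torque series to target_len samples.

    Hypothetical rules for illustration: samples with a missing (NaN) time or
    torque value are dropped, only the first sample per repeated time stamp is
    kept, and the series is padded at the end with pad_value (or truncated).
    """
    valid = ~(np.isnan(time) | np.isnan(torque))
    time, torque = time[valid], torque[valid]
    # np.unique returns the index of the first occurrence of each time stamp;
    # sorting those indices restores the original sample order
    _, first = np.unique(time, return_index=True)
    torque = torque[np.sort(first)]
    if len(torque) >= target_len:
        return torque[:target_len]
    return np.pad(torque, (0, target_len - len(torque)), constant_values=pad_value)


time = np.array([0.0, 1.0, 1.0, 2.0, np.nan, 3.0])
torque = np.array([0.1, 0.2, 0.2, 0.5, 0.7, 1.0])
# -> 0.1, 0.2, 0.5, 1.0 followed by two padding zeros
print(clean_and_pad(time, torque, target_len=6))
```

Padding every run to the length of the longest run (truncation never triggers in that case) yields the fixed-size arrays that most classifiers require.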

4. Classification Framework and Implementation

4.1. Classification Framework

We implement a progressive classification framework to comprehensively evaluate error detection capabilities in screw driving operations, moving from simpler to more complex scenarios. Firstly, we conduct two binary classification experiments to evaluate the fundamental detectability of the error classes in our dataset:

- Balanced Binary Classification: For each error type, we perform a balanced binary classification distinguishing between normal operations (50 OK samples) and faulty operations of that specific type (50 NOK samples). This provides an idealized baseline for error detectability and establishes which error types are most distinguishable from normal operations under controlled conditions.

- Imbalanced Binary Classification: To simulate real-world manufacturing conditions where errors are rare events, we perform an imbalanced classification comparing each error type (50 NOK samples) against the combined set of all normal operations (1250 OK samples). This scenario, with a 25:1 class imbalance, tests the robustness of our classification approaches when confronted with proportions closer to reality.
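Both binary scenarios can be expressed as index selections over the labeled runs. A minimal sketch, assuming a label array in which normal runs are marked `"OK"` and error runs carry a class name; the placeholder class names `E01`–`E25`, the helper name `binary_subset`, and drawing the 50 balanced OK runs at random are assumptions. With 1250 OK and 50 NOK runs, the imbalance ratio is 1250 / 50 = 25.

```python
import numpy as np


def binary_subset(labels: np.ndarray, error_class: str, balanced: bool, seed: int = 0):
    """Select runs for one binary scenario; returns (indices, y) with y = 1 for NOK."""
    nok_idx = np.flatnonzero(labels == error_class)
    ok_idx = np.flatnonzero(labels == "OK")
    if balanced:
        # Assumption: draw as many OK runs as there are NOK runs, at random.
        rng = np.random.default_rng(seed)
        ok_idx = rng.choice(ok_idx, size=len(nok_idx), replace=False)
    idx = np.concatenate([ok_idx, nok_idx])
    y = np.concatenate([np.zeros(len(ok_idx), dtype=int), np.ones(len(nok_idx), dtype=int)])
    return idx, y


# Toy label array with the paper's counts: 1250 OK runs and 25 classes x 50 NOK runs.
classes = [f"E{k:02d}" for k in range(1, 26)]
labels = np.array(["OK"] * 1250 + [c for c in classes for _ in range(50)])

idx_bal, y_bal = binary_subset(labels, "E11", balanced=True)
idx_imb, y_imb = binary_subset(labels, "E11", balanced=False)
print(len(idx_bal), int(y_bal.sum()))                       # 100 50
print(len(idx_imb), int((y_imb == 0).sum() / y_imb.sum()))  # 1300 25
```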

Secondly, building upon the binary experiments, we implement two additional multi-class classification scenarios with increasing complexity:

- Grouped Multi-class Classification: We perform a 6-class classification to distinguish between the five error groups and normal operations. Each error group contains 250 samples (50 from each of the 5 error types within the group), while the normal class contains 1250 samples. This approach evaluates whether logically grouped errors sharing similar mechanical principles can be effectively identified.

- Full Multi-class Classification: In the most comprehensive scenario, we conduct a 26-class classification across all 25 individual error types plus normal operations. This challenging task represents the ultimate goal of specific error identification, enabling targeted remedial actions in manufacturing environments.

4.2. Implementation and Evaluation

We evaluate five models selected for their demonstrated effectiveness in time series classification: Support Vector Machines (SVM) with an RBF kernel for capturing non-linear relationships; Random Forest for ensemble learning and handling complex feature interactions; the Time Series Forest classifier (TSF), a specialized interval-based ensemble method capturing both local and global patterns; ROCKET (RandOm Convolutional KErnel Transform), which uses random convolutional kernels for efficient feature extraction; and a random classifier as a reference baseline. The models were implemented using scikit-learn and sktime estimators to ensure a standardized implementation and consistent evaluation.
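To make the ROCKET idea concrete, the sketch below implements a heavily simplified random convolutional kernel transform in NumPy. Random kernels of length 7, 9, or 11 with mean-centred normal weights, a random bias, and an exponentially sampled dilation are slid over each series, and each kernel contributes two features: the maximum response and the proportion of positive values (PPV). As in the original ROCKET method, a ridge classifier is trained on these features. The sketch omits details such as random padding and uses few kernels; the function names and toy data are hypothetical, and it is not the sktime estimator used in this study.

```python
import numpy as np
from sklearn.linear_model import RidgeClassifierCV


def make_kernels(n_kernels: int, series_len: int, rng: np.random.Generator):
    """Sample random kernels (weights, bias, dilation) in the spirit of ROCKET."""
    assert series_len >= 11, "series must be at least as long as the longest kernel"
    kernels = []
    for _ in range(n_kernels):
        length = int(rng.choice([7, 9, 11]))
        weights = rng.normal(0.0, 1.0, length)
        weights -= weights.mean()                  # mean-centred weights
        bias = rng.uniform(-1.0, 1.0)
        # exponential dilation, capped so the dilated kernel fits the series
        max_exp = np.log2((series_len - 1) / (length - 1))
        dilation = int(2 ** rng.uniform(0.0, max_exp))
        kernels.append((weights, bias, dilation))
    return kernels


def transform(X: np.ndarray, kernels) -> np.ndarray:
    """Two features per kernel: max response and proportion of positive values (PPV)."""
    feats = np.empty((X.shape[0], 2 * len(kernels)))
    for k, (w, b, d) in enumerate(kernels):
        span = (len(w) - 1) * d
        # idx[t, j] = t + j * d: positions covered by the dilated kernel at offset t
        idx = np.arange(X.shape[1] - span)[:, None] + d * np.arange(len(w))[None, :]
        out = X[:, idx] @ w + b                    # shape (n_series, n_offsets)
        feats[:, 2 * k] = out.max(axis=1)
        feats[:, 2 * k + 1] = (out > 0).mean(axis=1)
    return feats


# Toy two-class problem: noisy sine curves with 2 vs. 4 periods and random phase.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 100)
y = np.repeat([0, 1], 100)
freq = np.where(y == 0, 2.0, 4.0)
phase = rng.uniform(0.0, 2 * np.pi, size=200)
X = np.sin(2 * np.pi * freq[:, None] * t + phase[:, None]) + rng.normal(0.0, 0.1, (200, 100))

kernels = make_kernels(200, X.shape[1], rng)
train = rng.permutation(200)[:140]
test = np.setdiff1d(np.arange(200), train)
clf = RidgeClassifierCV(alphas=np.logspace(-3, 3, 10)).fit(transform(X[train], kernels), y[train])
accuracy = clf.score(transform(X[test], kernels), y[test])
print(f"test accuracy: {accuracy:.2f}")
```

Because the kernels are random rather than learned, the only trained component is the linear classifier, which is what makes ROCKET fast to fit.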

All implementations use our PyScrew library for preprocessing. The time series are first padded to a common maximum length of 2000 time steps, using only the left screw position. We then apply Piecewise Aggregate Approximation (PAA) to reduce the dimensionality to 200 features, followed by normalization. For consistent evaluation, we use stratified 5-fold cross-validation, which preserves the class proportions in every fold; this is particularly important given the class imbalance in several of our scenarios.
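The preprocessing and evaluation protocol described above can be sketched as follows. PAA replaces each block of 2000 / 200 = 10 consecutive time steps by its mean. The type of normalization is not detailed here, so per-series z-normalization is assumed; the function names and the random placeholder data are hypothetical. scikit-learn's `StratifiedKFold` provides the stratified 5-fold split.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold


def paa(X: np.ndarray, n_segments: int) -> np.ndarray:
    """Piecewise Aggregate Approximation: mean of equal-length segments.

    Assumes the series length is divisible by n_segments (2000 / 200 = 10 here).
    """
    n, length = X.shape
    if length % n_segments:
        raise ValueError("series length must be divisible by n_segments")
    return X.reshape(n, n_segments, length // n_segments).mean(axis=2)


def z_normalize(X: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Per-series z-normalization (an assumption; other normalizations are possible)."""
    mu = X.mean(axis=1, keepdims=True)
    sd = X.std(axis=1, keepdims=True)
    return (X - mu) / (sd + eps)


rng = np.random.default_rng(0)
X_raw = rng.normal(size=(100, 2000))   # placeholder for padded torque series
y = np.repeat([0, 1], 50)              # e.g. 50 OK vs. 50 NOK runs
X = z_normalize(paa(X_raw, 200))
print(X.shape)                         # (100, 200)

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
for fold, (train_idx, test_idx) in enumerate(skf.split(X, y)):
    # each test fold keeps the 50/50 class ratio: 10 OK and 10 NOK runs
    print(fold, np.bincount(y[test_idx]))
```

Because this normalization is computed per series, it does not leak information across folds; a dataset-wide scaler would instead have to be fitted inside each training fold.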

Because our sampling scenarios produce imbalanced classes, we report averaged F1-scores, which balance precision and recall. The complete implementation, including preprocessing steps, model configurations, and evaluation procedures, is available in our public repository (West, N. Companion repository for supervised error detection in screw driving operation, 2025. Available online: http://github.com/nikolaiwest/2025-supervised-error-detection-itise, accessed on 7 August 2025), enabling reproducibility and facilitating further research in this domain.
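The effect of averaging can be seen in a small worked example. The sketch assumes macro averaging, i.e., the unweighted mean of the per-class F1-scores (each F1 = 2PR / (P + R)), computed with scikit-learn's `f1_score`; the toy labels are hypothetical. A classifier that misses one of two NOK runs still reaches 90% accuracy, whereas the macro-averaged F1 drops to about 0.80 because the minority class counts as much as the majority class.

```python
from sklearn.metrics import accuracy_score, f1_score

# Toy imbalanced example: 8 OK runs (0) and 2 NOK runs (1); one NOK run is missed.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

per_class = f1_score(y_true, y_pred, average=None)   # F1 for class 0 and class 1
macro = f1_score(y_true, y_pred, average="macro")    # unweighted mean over classes
acc = accuracy_score(y_true, y_pred)

# class 0: P = 8/9, R = 1   -> F1 = 16/17 ≈ 0.941
# class 1: P = 1,   R = 1/2 -> F1 = 2/3   ≈ 0.667
print(per_class.round(3), round(macro, 3), acc)  # [0.941 0.667] 0.804 0.9
```

A weighted average (`average="weighted"`) would instead weight each class by its number of samples and move the score back toward the majority class.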

5. Results

5.1. Results of the Binary Classifications

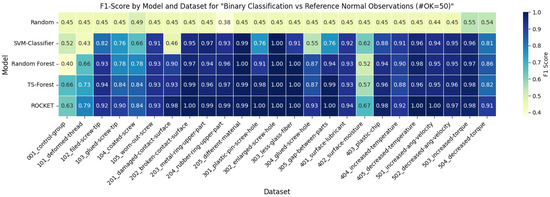

Figure 1 presents the F1-scores of the five models across all 25 error types in the balanced binary classification scenario, i.e., 50 error samples vs. 50 normal samples. This represents an idealized baseline for error detectability under controlled conditions. TSF achieved the highest overall performance, with F1-scores exceeding 0.90 for the majority of error types, demonstrating strong discriminative power even with the relatively small training dataset. The ROCKET classifier showed comparable performance, particularly for error Groups 3 (component/thread modifications) and 4 (environmental conditions). In contrast, the SVM classifier exhibited more variable performance across error types, possibly due to limited feature optimization, although it still substantially outperformed the random baseline.

Figure 1. F1-score comparison across ML models for binary classification of manufacturing defects against the reference (normal) samples.

Error detectability varied across different categories. Notably, error types 103_glued-screw-tip, 203_metal-ring-upper-part, and 301_plastic-pin-screw-hole were consistently identified with F1-scores above 0.95 across multiple classifiers, indicating distinct signatures that are easily distinguishable from normal operations. In contrast, error types such as 205_different-material and some process parameter variations (Group 5) showed more variable detectability, suggesting greater signature similarity to normal operations.

Error group analysis revealed that component/thread modifications (Group 3, classes 301–305) and environmental conditions (Group 4, classes 401–405, with the exception of 402_surface-moisture) were generally the most detectable error categories, with high F1-scores across most classifiers, suggesting more distinct torque-angle signatures.

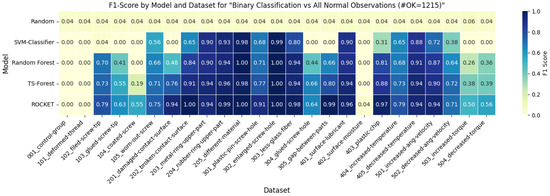

To better approximate real-world conditions, Figure 2 presents F1-scores for the imbalanced scenario, where each error type (50 samples) was classified against all normal operations (1215 samples), representing a substantial class imbalance. The introduction of class imbalance resulted in an expected overall decrease in performance across all classifiers, though the magnitude of this decrease varied substantially by both classifier and error type. TSF maintained the most robust performance under imbalanced conditions, with only moderate decreases in F1-scores for many error classes.

Figure 2. F1-score comparison across ML models for binary classification of manufacturing defects against all reference (normal) samples.

The most notable performance degradation occurred for error types in Group 5 and for 101_deformed-thread, where F1-scores dropped dramatically compared to the balanced scenario. In contrast, error types involving physical modifications like 103_glued-screw-tip, 203_metal-ring-upper-part, and 301_plastic-pin-screw-hole maintained good F1-scores (>0.85) even under imbalanced conditions, reinforcing their distinct detectability.

Groups 3 and 4 demonstrated the highest resilience to class imbalance, with average F1-scores above 0.80 across most classifiers. This finding has significant practical implications: these error categories remain highly detectable even in realistic production scenarios where errors are rare events.

To conclude the binary classification analysis, the results offer several insights for industrial implementation. While most error types show at least moderate detectability in balanced conditions, some, such as 402_surface-moisture, perform poorly across all classifiers in both scenarios, suggesting inherent limitations in detecting them from torque-angle signatures alone. As expected, the control group, which contains only normal operations, shows random-level performance in binary classification. These findings highlight the importance of error-specific detection strategies and suggest that certain error types may require additional sensing modalities beyond torque-angle measurements for reliable identification in manufacturing environments.

5.2. Multi-Class Classification Results

Building upon the binary results, we extend the analysis to multi-class classification, a step towards the detection of specific error types. Rather than only identifying the presence of an anomaly, these experiments evaluate the ability to determine which specific error is occurring, which is crucial for targeted quality control interventions. This progression reflects the industrial need not only to detect that a process has deviated from normal operation but also to diagnose the underlying issue. By identifying specific error types, maintenance personnel can implement focused corrective actions, reducing downtime and improving overall efficiency.

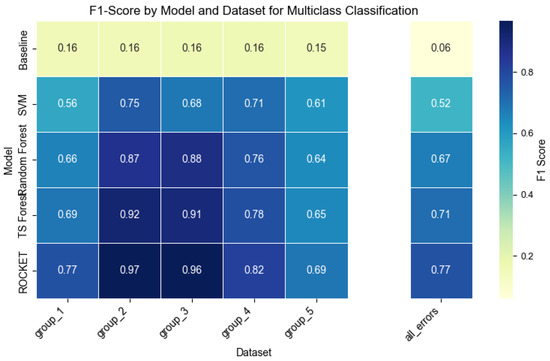

The first multi-class scenario involved a 6-class classification problem distinguishing between the five error groups (each containing 250 samples) and normal operations (1250 samples). This approach evaluates whether logically grouped errors sharing similar mechanical principles can be effectively identified. As shown in Figure 3, the TSF achieved the best overall performance in this grouped classification task, with F1-scores ranging from 0.86 to 0.92 across the five error groups. The ROCKET classifier showed comparable results, particularly excelling at detecting Group 3 (component/thread modifications) with an F1-score of 0.96, the highest observed across all classifiers and error groups.

Figure 3. F1-score comparison across models for grouped multi-class classification (five left columns) and multi-class classification with all available errors (right column).

All classifiers showed their lowest performance for Group 1, suggesting that the various screw quality issues produce more similar signatures that are challenging to distinguish as a cohesive group. In contrast, Group 3 was the most consistently identifiable error category across all classifiers, reinforcing our earlier observation from binary classification that these error types produce particularly distinctive signatures.

The most comprehensive and challenging scenario involved a 26-class classification across all 25 individual error types plus normal operations. As expected, the increased granularity of classification resulted in lower performance than in the grouped classification. ROCKET achieved the highest overall F1-score (0.77), indicating that its random convolutional kernel approach scales effectively to complex multi-class problems, followed by TSF (F1 = 0.71 overall), which also remained robust despite the substantially increased number of classes.

For the full 26-class classification, we observed several important patterns from the detailed class-level metrics. Errors within the same group were more frequently confused with each other than with errors from different groups, validating our logical grouping based on mechanical principles. This was particularly pronounced for error types 101–105 (screw quality deviations) and 501–504 (process parameter variations). Certain error types maintained exceptional distinguishability even in the 26-class scenario, notably 103_glued-screw-tip (F1 > 0.85), 203_metal-ring-upper-part (F1 > 0.83), and 301_plastic-pin-screw-hole (F1 > 0.88). These error types produce such distinctive signatures that they remain identifiable regardless of classification complexity.

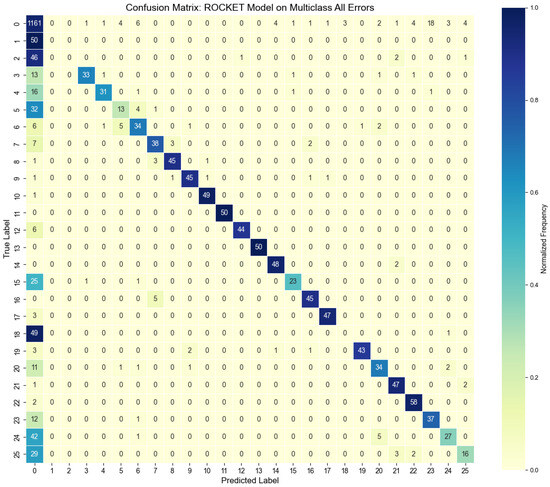

The confusion matrix in Figure 4 provides a detailed view of classification results for the ROCKET model across all 26 classes. The strong diagonal pattern confirms the overall effectiveness of the classifier in distinguishing between different error types, with most errors correctly assigned to their true class. Normal operations (labeled as class 0) show high precision and recall, with minimal misclassification as specific error types.

Figure 4. Confusion matrix for the ROCKET model across all 26 classes (0 = normal, 1–25 = errors).

When examining the confusion patterns by error group, we observe varying degrees of classification performance. Group 1 shows moderate within-group confusion, particularly between classes 1 (deformed thread) and 3 (glued screw tip). Group 2 demonstrates very good separation with minimal within-group confusion, with class 8 (203_metal-ring-upper-part) showing near-perfect classification. Group 5 shows the most within-group confusion, particularly between class 21 (501_increased-ang-velocity) and class 22 (502_decreased-ang-velocity).

Interestingly, there is minimal confusion between different error groups, with most misclassifications occurring within the same group. This validates our logical grouping of errors based on their mechanical principles and suggests that errors affecting similar aspects of the screw driving process produce more similar signatures.
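The within-group versus between-group pattern can be quantified directly from a confusion matrix. The sketch below assumes the class indexing of Figure 4 (class 0 = normal as its own group; class k ≥ 1 belongs to group (k − 1) // 5 + 1) and computes the share of misclassified runs whose predicted class lies in the same group as the true class. The function name and the toy matrix are hypothetical; the toy values are not taken from Figure 4.

```python
import numpy as np


def within_group_share(cm: np.ndarray, class_size: int = 5) -> float:
    """Fraction of misclassifications whose predicted class lies in the true class's group.

    cm[i, j] counts runs of true class i predicted as class j. Class 0 is normal
    operation (its own group); class k >= 1 belongs to group (k - 1) // class_size + 1.
    """
    k = np.arange(cm.shape[0])
    group = np.where(k == 0, 0, (k - 1) // class_size + 1)
    off_diag = cm * (1 - np.eye(cm.shape[0], dtype=cm.dtype))  # keep errors only
    same_group = group[:, None] == group[None, :]
    total = off_diag.sum()
    return float(off_diag[same_group].sum() / total) if total else 0.0


# Toy 11-class matrix (normal + two groups of five), not the values of Figure 4:
cm = np.diag(np.full(11, 50))
cm[1, 3] = 6   # class 1 predicted as class 3 (same group)
cm[3, 1] = 2   # class 3 predicted as class 1 (same group)
cm[2, 7] = 2   # class 2 predicted as class 7 (different group)
print(within_group_share(cm))  # 0.8
```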

The multi-class classification results show that error-specific detection is feasible in industrial screw driving, with TSF and ROCKET reaching overall F1-scores of 0.71 and 0.77, respectively, even in the challenging 26-class scenario. This represents a significant advancement beyond binary anomaly detection, enabling more targeted quality control interventions by identifying not just that an error exists, but which specific error is occurring.

6. Discussion and Conclusions

Our evaluation of multi-class error detection in industrial screw driving operations demonstrates that most error types produce signatures distinct from normal operations, with time series models such as TSF and ROCKET generally outperforming the other algorithms. However, this finding depends strongly on the experimental setup and the examined error types: different manufacturing processes or equipment configurations may yield different detectability patterns. In our setup, errors involving physical modifications generated distinctive signatures, while subtle parameter variations presented greater challenges. This variation in detectability has implications for implementation priorities.

The confusion patterns observed in our multi-class experiments provide empirical support for our error grouping based on mechanical principles. Misclassifications occur primarily within rather than between groups, suggesting errors affecting similar aspects of the screw driving process produce similar signatures. This finding supports potential hierarchical classification approaches, where a model could first identify the error group before determining the specific type, simplifying interpretation for maintenance personnel while potentially improving overall performance.
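A two-stage classifier of the kind suggested above could be organized as in the following sketch: a first model predicts the error group, and a separate model per group then predicts the specific class. It is a hypothetical design that was not evaluated in this study; it uses scikit-learn's `RandomForestClassifier` for both stages only for brevity, and the class name, the group mapping (class 0 = normal, classes 1–25 in consecutive groups of five, following the indexing of Figure 4), and the toy data are assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def group_of(k: int) -> int:
    """Hypothetical mapping: class 0 is normal (group 0), classes 1-25 form groups of five."""
    return 0 if k == 0 else (k - 1) // 5 + 1


class HierarchicalClassifier:
    """Stage 1 predicts the error group; stage 2 predicts the class within that group."""

    def __init__(self, random_state: int = 0):
        self.random_state = random_state

    def _model(self) -> RandomForestClassifier:
        return RandomForestClassifier(n_estimators=100, random_state=self.random_state)

    def fit(self, X: np.ndarray, y: np.ndarray) -> "HierarchicalClassifier":
        groups = np.array([group_of(k) for k in y])
        self.stage1_ = self._model().fit(X, groups)
        self.stage2_ = {}
        for g in np.unique(groups):
            mask = groups == g
            classes = np.unique(y[mask])
            # a group with a single class (e.g. normal) needs no second-stage model
            self.stage2_[g] = classes[0] if len(classes) == 1 else self._model().fit(X[mask], y[mask])
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        groups = self.stage1_.predict(X)
        pred = np.empty(len(X), dtype=int)
        for g in np.unique(groups):
            mask = groups == g
            model = self.stage2_[g]
            pred[mask] = model.predict(X[mask]) if isinstance(model, RandomForestClassifier) else model
        return pred


# Toy data: normal class 0 plus two groups of five classes, as 2-D Gaussian blobs.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(11), 40)
centers = np.array([[10.0 * group_of(k), 3.0 * (k % 5)] for k in range(11)])
X = centers[y] + rng.normal(0.0, 0.5, size=(len(y), 2))
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, stratify=y, random_state=0)

model = HierarchicalClassifier().fit(X_tr, y_tr)
accuracy = float((model.predict(X_te) == y_te).mean())
print(f"test accuracy: {accuracy:.2f}")
```

One consequence of this design is that a wrong group prediction in the first stage cannot be corrected in the second, so overall accuracy is bounded by the group-level accuracy.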

While our experiments show promising results in a controlled laboratory setting, industrial deployment would introduce additional challenges from environmental variability, component tolerances, and equipment wear. Such factors were absent in our controlled setup as data was recorded over the course of a single week. Future validation should incorporate actual production data to identify which errors maintain distinctive signatures under increased environmental noise to prioritize implementation efforts.

Another limitation of our supervised approach is the requirement for substantial labeled data for each error type, a condition rarely met in manufacturing environments where errors are infrequent and labeling requires expert knowledge. Semi-supervised and transfer learning approaches represent particularly promising future directions to address this constraint. Semi-supervised methods could leverage abundant unlabeled normal operation data while requiring fewer labeled examples of each error type, directly addressing practical implementation limitations.

In conclusion, this work establishes the feasibility and potential of multi-class error detection in screw driving data. By moving beyond binary anomaly detection to specific error identification, we enable a new paradigm of targeted quality control interventions. The public release of both our comprehensive dataset and analysis framework provides valuable resources for advancing manufacturing intelligence. Our findings demonstrate that certain error types maintain high detectability even under challenging conditions, offering immediate implementation opportunities while highlighting areas requiring further research. This approach represents an advancement in quality control, promising reduced downtime, improved product reliability, and more efficient maintenance strategies through precise diagnostic capabilities rather than generic anomaly alerts.

Author Contributions

Conceptualization, N.W. and J.D.; methodology, N.W.; software, N.W.; validation, N.W. and J.D.; formal analysis, N.W.; investigation, N.W.; resources, J.D.; data curation, N.W.; writing—original draft preparation, N.W.; writing—review and editing, N.W. and J.D.; visualization, N.W.; supervision, J.D.; project administration, J.D.; funding acquisition, J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the German Ministry of Education and Research (BMBF) and the European Union’s “NextGenerationEU” program that is part of the funding program “Data competencies for early career researchers” (Funding reference: 16DKWN119A).

Data Availability Statement

The complete dataset used in this study is publicly available at https://doi.org/10.5281/zenodo.14729547. This dataset contains all time series data from the screw driving operations described in this paper, including torque, angle, gradient, and time measurements for all 25 error types and normal operations. To facilitate data access and preprocessing, we maintain PyScrew, a Python library providing streamlined functions for working with screw driving time series data, available at http://github.com/nikolaiwest/pyscrew (accessed on 7 August 2025, currently in v1.2.2). Additionally, all code of this paper, including preprocessing steps, model configurations, and evaluation procedures used, is available in our companion repository at http://github.com/nikolaiwest/2025-supervised-error-detection-itise (accessed on 7 August 2025).

Conflicts of Interest

The authors declare no conflict of interest.

References

[1] West, N.; Schlegl, T.; Deuse, J. Unsupervised anomaly detection in unbalanced time series data from screw driving processes using k-means clustering. Procedia CIRP 2023, 120, 1185–1190.

[2] Ribeiro, D.; Matos, L.M.; Moreira, G.; Pilastri, A.; Cortez, P. Isolation Forests and Deep Autoencoders for Industrial Screw Tightening Anomaly Detection. Computers 2022, 11, 54.

[3] Teixeira, H.N.; Lopes, I.; Braga, A.C.; Delgado, P.; Martins, C. Screwing Process Monitoring Using MSPC in Large Scale Smart Manufacturing. In Innovations in Mechatronics Engineering; Machado, J., Soares, F., Trojanowska, J., Yildirim, S., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 1–13. ISBN 978-3-030-79167-4.

[4] Zhou, C.; Paffenroth, R.C. Anomaly detection with robust deep autoencoders. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 665–674.

[5] Aronson, R.M.; Bhatia, A.; Jia, Z.; Guillame-Bert, M.; Bourne, D.; Dubrawski, A.; Mason, M.T. Data-driven classification of screwdriving operations. In Proceedings of the International Symposium on Experimental Robotics, Nagasaki, Japan, 3–8 October 2016; pp. 244–253.

[6] Cheng, X.; Jia, Z.; Bhatia, A.; Aronson, R.M.; Mason, M.T. Sensor selection and stage & result classifications for automated miniature screwdriving. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 6078–6085.

[7] Sakamoto, Y.; Nakamura, Y.; Sugioka, M. A case study of real-time screw tightening anomaly detection by machine learning using real-time processable features. Omron Tech. 2023, 55, 1–11.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).