1. Introduction

Emotions are one of humanity’s most distinguishing characteristics; they influence a person’s behavior [1]. Understanding and studying human emotions is therefore of fundamental importance. Automatic emotion classification using machine learning and artificial intelligence has recently attracted interest because it can be used in a range of human–computer interaction (HCI) applications [2,3,4]. To be effective, an artificial emotional intelligence system must model how humans understand emotions and how affective expressions relate to the underlying emotions [5]. Human–machine interaction and cooperation arise in many fields, including medicine, therapy, and gaming. Researchers continually work to improve the flexibility and efficiency of human–computer interaction, with the goal of achieving high user satisfaction. HCI systems must therefore be capable of recognizing a wide range of human emotions and emotional expressions. Because humans communicate thoughts and emotions both verbally and nonverbally, HCI systems must also be able to recognize, interpret, and evaluate nonverbal expressions.

The rest of the paper is organized as follows. Section 2 discusses related work. Section 3 describes the dataset and experimental methodology, along with the model configuration. Section 4 presents the results of the proposed model and compares them with existing models. Section 5 concludes the paper.

2. Related Work

As brain–computer interfaces (BCIs) advance, researchers are paying increasing attention to emotion recognition from EEG data, typically using either a discrete or a dimensional model of emotion. In the discrete paradigm, Ekman proposed six basic emotions: anger, disgust, fear, happiness, sadness, and surprise [6], whereas Plutchik created a wheel of eight emotions [7]. Because each emotion can be expressed more or less strongly, Russell and Mehrabian proposed dimensional models: a 2D model [8,9] and a 3D model [10] that place emotions in a valence–arousal space and a pleasure–arousal–dominance space, respectively. Classical machine learning methods were proposed first, but as more publicly released datasets with large volumes of experimentally acquired brainwaves have become available, researchers have begun to use deep learning methods [11]. The authors of [1,12,13,14] used the classical ML classifiers SVM, RF, LR, and KNN and achieved accuracies of 93%, 90%, 89.06%, and 94.06%, respectively.

Other authors [15,16] used LSTM architectures and achieved accuracies of 63.6% and 87.25%, respectively, while [17,18] reported accuracies of 89.66% and 84.21%, respectively, using BiLSTM. The authors of [19], who introduced the GAMEEMO dataset also used in this study, reported an accuracy of 80%.

Brain signals such as EEG can reveal a person’s emotional state and may be among the most trustworthy means of understanding it. The most important challenges in designing a machine learning-based model for emotional state classification are feature extraction, feature selection, and choosing an appropriate classifier. To address these concerns, this study presents a technique for recognizing emotions from EEG data recorded while participants play emotional video games.

3. Experimental Methodology

3.1. Selected Data Set and Participants

This study uses the freely available GAMEEMO dataset [19], which contains EEG signals collected from participants while they played emotional computer games. The signals were obtained from 28 different subjects using the Emotiv EPOC+, a 14-channel wearable and portable electroencephalography (EEG) device. Each subject played four emotional computer games (boring, calm, horror, and funny) for five minutes each, giving a total of 20 min of EEG data per subject; these four game sessions allow neurological signals and reactions to be compared across individuals and sessions. The dataset is intended to serve as an alternative source of data for emotion recognition and to allow the performance of wearable EEG devices to be compared with that of standard EEG devices.
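As a quick sanity check on the dataset’s size, the nominal number of samples follows from the figures above: 28 subjects, four 5-min games, 14 channels, and the 128 Hz rate to which the signals were down-sampled (Section 3.2). This sketch computes nominal counts only; the constant names are illustrative, and the distributed recordings may not be exactly this long.

```python
SUBJECTS = 28                                   # participants in GAMEEMO
GAMES = ("boring", "calm", "horror", "funny")   # one recording per game per subject
MINUTES_PER_GAME = 5
CHANNELS = 14                                   # Emotiv EPOC+ electrodes
FS = 128                                        # Hz, after down-sampling (Section 3.2)

# Samples per channel in one 5-min recording
samples_per_recording = MINUTES_PER_GAME * 60 * FS
# One recording per subject per game
recordings = SUBJECTS * len(GAMES)
# Individual EEG values across all channels and recordings
total_values = recordings * samples_per_recording * CHANNELS
# Minutes of EEG per subject (the 20 min stated above)
minutes_per_subject = MINUTES_PER_GAME * len(GAMES)

print(samples_per_recording, recordings, total_values, minutes_per_subject)
# 38400 112 60211200 20
```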

3.2. EEG Signal Preprocessing

Because EEG signals respond more quickly to changes in emotional state than other peripheral physiological markers, we use EEG signals to classify emotions in this work. In the data-collection experiment, raw EEG signals were recorded at a sampling rate of 2048 Hz and subsequently down-sampled to 128 Hz. A 0.16–43 Hz band-pass filter is applied to attenuate EOG (eye-movement) artefacts in the EEG recordings.
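The two preprocessing steps can be sketched in plain Python. The text does not say which filter designs were used, so this sketch assumes block-average decimation by 16 (2048/128) as a crude anti-aliasing step and a cascade of first-order RC high-pass (0.16 Hz) and low-pass (43 Hz) filters; real pipelines would normally use steeper designs such as higher-order Butterworth filters. All function names are hypothetical.

```python
import math

FS_RAW = 2048     # recording rate stated in the text (Hz)
FS_TARGET = 128   # rate after down-sampling (Hz)
LOW_CUT = 0.16    # band-pass lower edge (Hz)
HIGH_CUT = 43.0   # band-pass upper edge (Hz)


def downsample(signal, factor):
    """Keep one value per block of `factor` samples, using the block mean.

    Averaging before decimating is a crude anti-aliasing low-pass (an assumption).
    """
    n_blocks = len(signal) // factor
    return [sum(signal[k * factor:(k + 1) * factor]) / factor for k in range(n_blocks)]


def highpass(signal, cutoff_hz, fs):
    """First-order RC high-pass; treats the signal as constant at signal[0] before the start."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = rc / (rc + 1.0 / fs)
    out, y = [], 0.0
    prev_x = signal[0] if signal else 0.0
    for x in signal:
        y = alpha * (y + x - prev_x)
        prev_x = x
        out.append(y)
    return out


def lowpass(signal, cutoff_hz, fs):
    """First-order RC low-pass (exponential smoothing)."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    beta = (1.0 / fs) / (rc + 1.0 / fs)
    out = []
    y = signal[0] if signal else 0.0
    for x in signal:
        y += beta * (x - y)
        out.append(y)
    return out


def preprocess(raw):
    """Down-sample 2048 Hz -> 128 Hz, then band-pass 0.16-43 Hz (high-pass, then low-pass)."""
    x = downsample(raw, FS_RAW // FS_TARGET)
    return lowpass(highpass(x, LOW_CUT, FS_TARGET), HIGH_CUT, FS_TARGET)


# Usage: 10 s of a 10 Hz sine (inside the pass band) sampled at 2048 Hz
raw = [math.sin(2 * math.pi * 10 * n / FS_RAW) for n in range(FS_RAW * 10)]
clean = preprocess(raw)
print(len(clean))  # 1280
```

In this sketch the 10 Hz test tone keeps an amplitude of roughly 0.9 after filtering, while a constant (DC) offset is removed entirely by the high-pass stage.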

3.3. EEG Signal Classification Method

LSTM networks introduce a new structure called the memory cell. A memory block has four main components: an input gate (i), a forget gate (f), an output gate (o), and a self-recurrent neuron. The gates allow the cell to store and retrieve information over long periods of time. Using the concept of “constant error flow”, the LSTM creates a “constant error carousel” (CEC) for RNNs, which prevents gradients from vanishing. The memory cell is the central component and acts as an accumulator over time (it keeps track of an identity relationship). Instead of computing the new state as a matrix product of the old state, it computes an additive difference between the two. The expressivity is the same, but the gradients are more stable.

The “DEEPHER” deep learning model consists of three LSTM layers with 512, 1024, and 2048 neurons, a fully connected embedding layer, one dropout layer, and a dense layer. The LSTM and dropout layers learn features from the EEG data, while the dropout layer prevents large numbers of units from “co-adapting”, which reduces overfitting. Finally, the dense layer with a softmax activation function performs the classification. Of the EEG data, 70% is used to train the model and the remaining 30% to evaluate it. The model is trained with the Adam optimizer at a learning rate of 0.0001.
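To make the gating and the additive cell update concrete, here is a minimal single-unit LSTM step in plain Python, together with the softmax used by a final dense layer. It is a scalar sketch for illustration, not the DEEPHER implementation; the weight-dictionary layout and the function names are assumptions. Because the cell state is updated additively, c_t = f·c_{t-1} + i·g, the derivative of c_t with respect to c_{t-1} along this path is just f, so a forget gate near 1 lets the error flow through almost unchanged.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a single-unit LSTM cell.

    `w` maps each gate name ("i", "f", "o", "g") to a tuple
    (input weight, recurrent weight, bias); this layout is illustrative.
    """
    def pre(gate):
        wx, wh, b = w[gate]
        return wx * x + wh * h_prev + b

    i = sigmoid(pre("i"))    # input gate: how much new content to write
    f = sigmoid(pre("f"))    # forget gate: how much of the old cell state to keep
    o = sigmoid(pre("o"))    # output gate: how much of the cell state to expose
    g = math.tanh(pre("g"))  # candidate content to write
    c = f * c_prev + i * g   # additive update (the constant error carousel)
    h = o * math.tanh(c)     # hidden state passed to the next step / layer
    return h, c


def softmax(logits):
    """Convert dense-layer outputs into class probabilities (max-shifted for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Usage: with a strongly open forget gate and a closed input gate,
# the cell keeps its value across 100 steps.
w = {"i": (0.0, 0.0, -20.0), "f": (0.0, 0.0, 20.0),
     "o": (0.0, 0.0, 0.0), "g": (0.0, 0.0, 0.0)}
h, c = 0.0, 1.0
for _ in range(100):
    h, c = lstm_step(0.5, h, c, w)
print(round(c, 4))  # 1.0
```

Closing the forget gate instead (a large negative bias) wipes the cell state in a single step, which is how the cell can discard information it no longer needs.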

3.4. Model Complexity

Because LSTM is local in both space and time [20], the network’s storage requirements do not depend on the input length, and the time complexity per weight at each time step is O(1). The LSTM’s total complexity per time step is therefore O(w), where w is the number of weights.
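The O(w) per-step cost can be made concrete by counting w. The sketch below uses the standard LSTM parameter count (four gates, each with an input kernel, a recurrent kernel, and a bias). The input dimension is not given in the text; 14 (one feature per EEG channel) is assumed purely for illustration, and the embedding layer is left out because its size is not stated. The sequence length never appears in the count, which is why storage does not grow with input length.

```python
def lstm_params(input_dim, units):
    """Weights in one LSTM layer: 4 gates x (input kernel + recurrent kernel + bias)."""
    return 4 * (units * input_dim + units * units + units)


def stacked_lstm_params(input_dim, layer_units):
    """Total weights in a stack of LSTM layers, each feeding its hidden state to the next."""
    total, d = 0, input_dim
    for units in layer_units:
        total += lstm_params(d, units)
        d = units
    return total


def dense_params(input_dim, outputs):
    """Weights in a fully connected layer: kernel + bias."""
    return input_dim * outputs + outputs


ASSUMED_INPUT_DIM = 14          # hypothetical: one feature per EEG channel
LSTM_UNITS = [512, 1024, 2048]  # layer sizes stated in Section 3.3
N_CLASSES = 4                   # boring, calm, horror, funny

w = stacked_lstm_params(ASSUMED_INPUT_DIM, LSTM_UNITS) + dense_params(LSTM_UNITS[-1], N_CLASSES)
print(w)  # 32557060
```

At roughly one multiply-add per weight per time step, processing a sequence of T samples costs on the order of T·w operations, while memory stays proportional to w.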

4. Results and Discussion

The performance of the ‘DEEPHER’ approach on the GAMEEMO dataset is shown in Table 1.

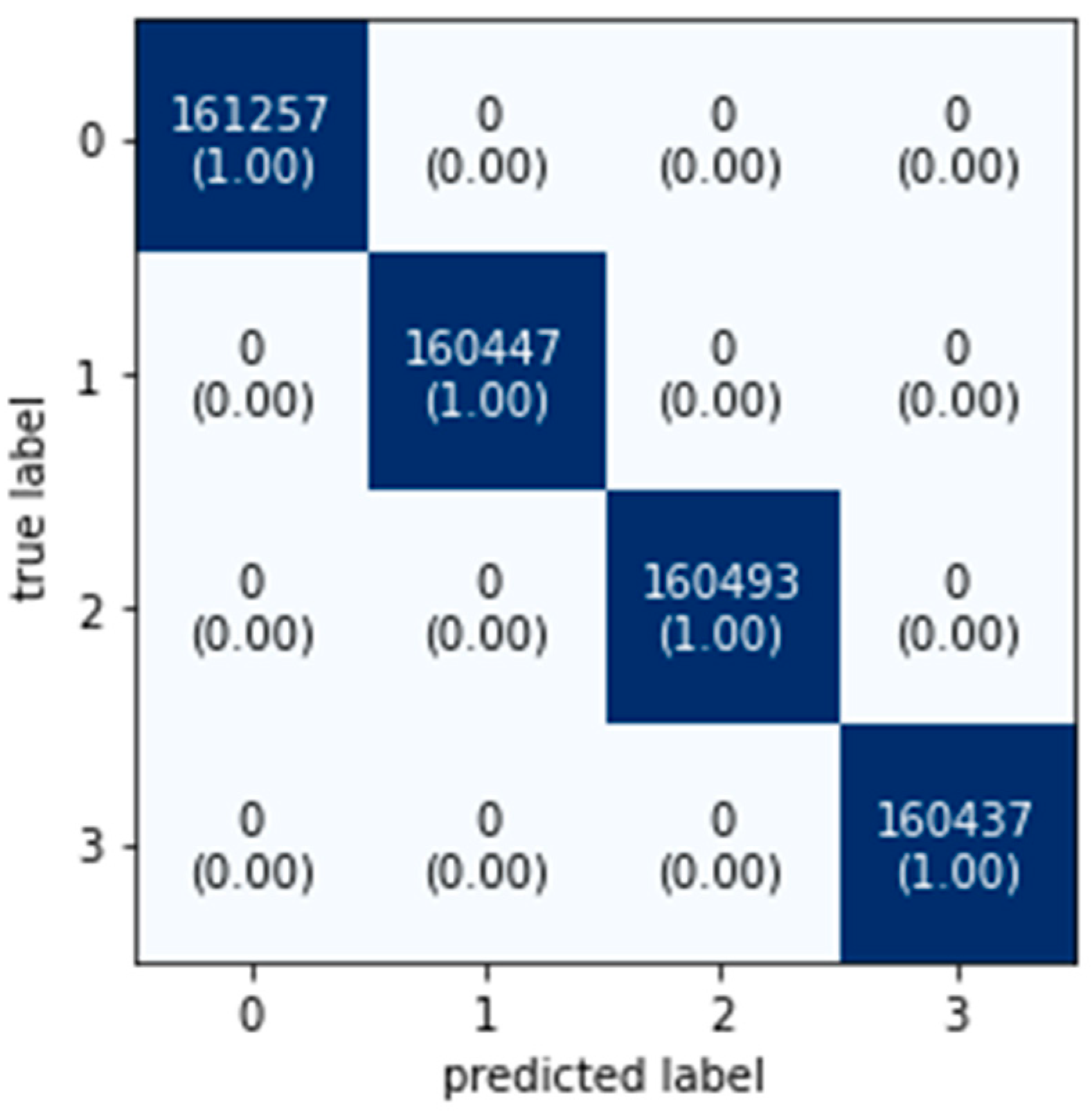

Figure 1 shows the confusion matrix of our results for each emotion class (0: boring, 1: calm, 2: horror, 3: funny). It shows the per-class precision and the proportions of correctly detected true positives and true negatives, so these results can serve as a benchmark for other researchers. Trained on the GAMEEMO dataset, the model achieves an accuracy of 99.99%.
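The quantities read off a confusion matrix such as the one in Figure 1 can be computed as follows. The 4 × 4 matrix below is an invented toy example, not the paper’s results; rows are true classes and columns are predicted classes, using the same class indices as Figure 1. The function name is hypothetical.

```python
LABELS = ["boring", "calm", "horror", "funny"]  # class indices 0-3, as in Figure 1


def confusion_metrics(cm):
    """Overall accuracy plus per-class precision, recall, and true negatives.

    cm[t][p] counts samples whose true class is t and predicted class is p.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[k][k] for k in range(n)) / total
    per_class = {}
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[t][k] for t in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        tn = total - tp - fp - fn
        per_class[LABELS[k]] = {
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "tn": tn,
        }
    return accuracy, per_class


# Toy matrix (invented for illustration; NOT the paper's Figure 1 values)
toy_cm = [
    [9, 1, 0, 0],
    [0, 10, 0, 0],
    [0, 0, 8, 2],
    [0, 0, 0, 10],
]
acc, per_class = confusion_metrics(toy_cm)
print(acc)  # 0.925
```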

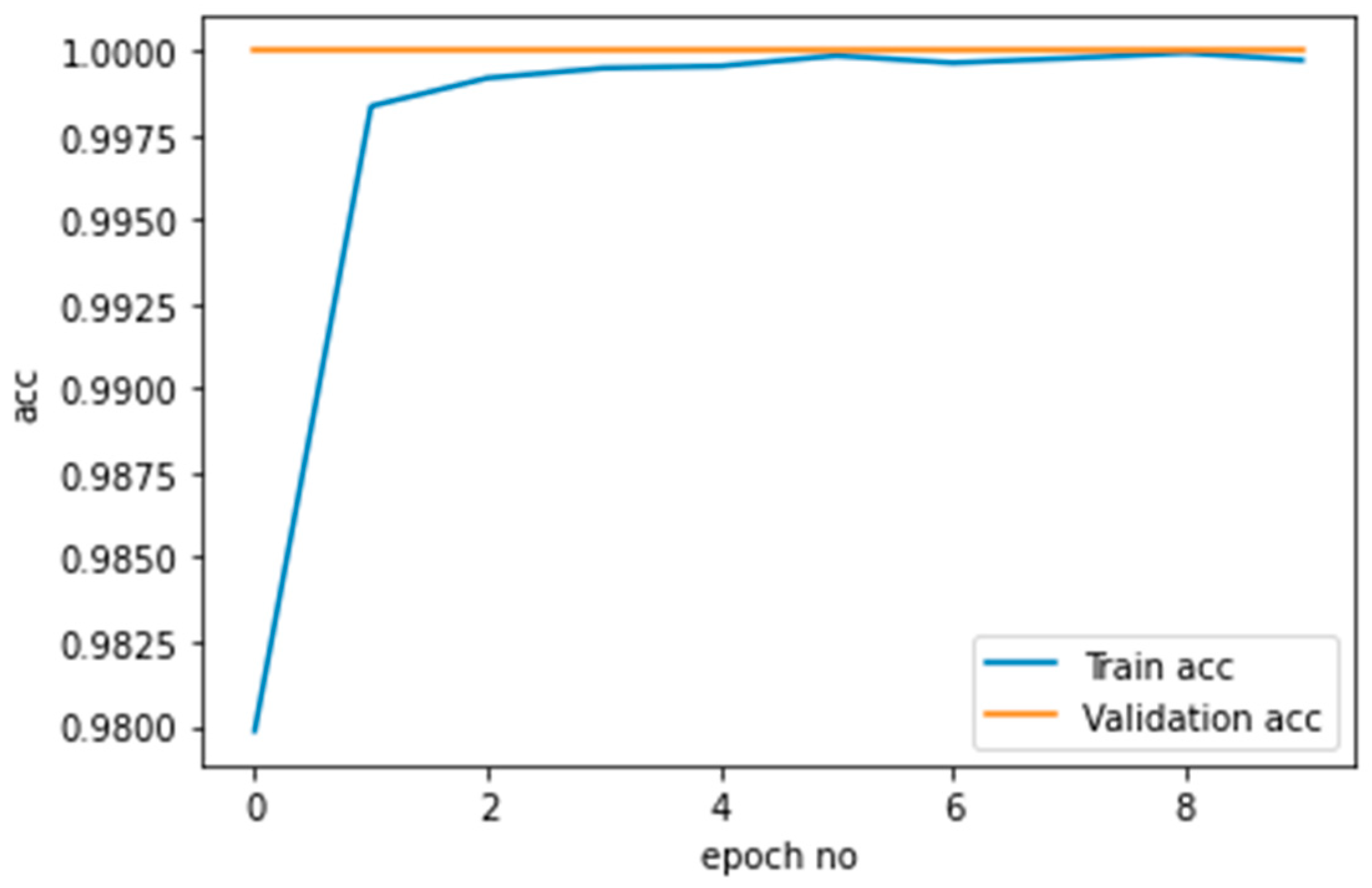

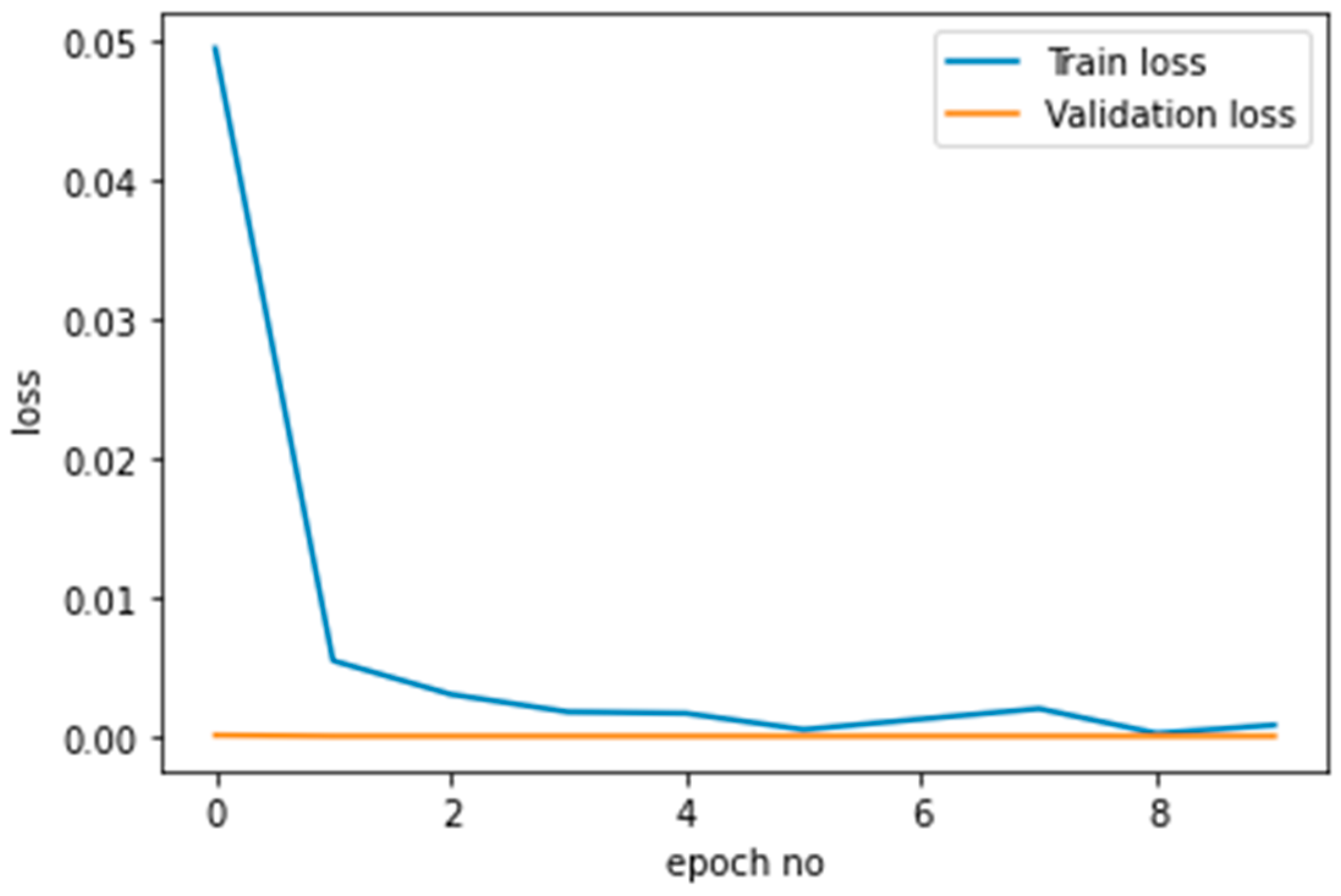

Figure 2 shows the training and validation accuracy, whereas Figure 3 shows the training and validation loss. In this study, the highest recognition rate for EEG-based emotion detection was 99.99%.
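The loss function behind the curves in Figure 3 is not named in the text. Assuming categorical cross-entropy, the usual pairing with a softmax output (an assumption here), the loss for one sample is the negative log of the probability assigned to the true class, averaged over a batch. The function names are hypothetical.

```python
import math


def cross_entropy(probs, label, eps=1e-12):
    """Per-sample categorical cross-entropy; eps guards against log(0)."""
    return -math.log(max(probs[label], eps))


def mean_loss(batch_probs, labels):
    """Average cross-entropy over a batch, as plotted per epoch in a loss curve."""
    return sum(cross_entropy(p, y) for p, y in zip(batch_probs, labels)) / len(labels)


# A uniform guess over 4 classes costs log(4), about 1.386; a confident correct guess costs about 0.
print(round(cross_entropy([0.25, 0.25, 0.25, 0.25], 2), 3))  # 1.386
print(mean_loss([[0.97, 0.01, 0.01, 0.01]], [0]) < 0.05)      # True
```

This also shows why loss and accuracy curves can move differently: accuracy only counts whether the top class is right, whereas the loss keeps penalizing low confidence in the correct class.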

5. Conclusions and Future Work

In this paper, we apply a long short-term memory (LSTM) recurrent neural network architecture, previously used in other work, to the task of emotion recognition from EEG data. The results show that DEEPHER’s classifications are consistent with cognitive perceptions of emotion: the model accurately identifies all four emotions in the EEG data recorded during game play. This allows us to characterize the model’s performance and to show what the network is learning. Visualizing what the network has learned provides a valuable tool for investigating how emotions are identified and for understanding the network’s behavior, and indicates that the LSTM network has learned to recognize emotions. It also allows us to compare our approach with other emotion recognition and classification systems.

During game play, the system detects four emotional states from raw EEG data. It requires users to wear an EPOC headset and stand in front of a camera while the raw EEG data are collected. According to the findings, the system classifies emotions from EEG data with 99.99% accuracy. The proposed model cannot yet detect emotions in real time; addressing this is one of the most significant challenges for future research.

Author Contributions

This research was led by A.K. (Akhilesh Kumar) and A.K. (Awadhesh Kumar) of the Department of Computer Science, Institute of Science, BHU, Varanasi, India, who designed the detailed methodology and documented the research in a structured manuscript. A.K. (Akhilesh Kumar) wrote the article, analyzed the datasets using software tools, and fine-tuned the results. A.K. (Awadhesh Kumar) supervised and validated the results. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- Subasi, A.; Tuncer, T.; Dogan, S.; Tanko, D.; Sakoglu, U. EEG-based emotion recognition using tunable Q wavelet transform and rotation forest ensemble classifier. Biomed. Signal Process. Control 2021, 68, 102648.

- Malete, T.N.; Moruti, K.; Thapelo, T.S.; Jamisola, R.S. EEG-based Control of a 3D Game Using 14-channel EmotivEpoc+. In Proceedings of the 2019 IEEE International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM), Bangkok, Thailand, 18–20 November 2019; pp. 463–468.

- Al-Nafjan, A.; Hosny, M.; Al-Ohali, Y.; Al-Wabil, A. Review and classification of emotion recognition based on EEG brain-computer interface system research: A systematic review. Appl. Sci. 2017, 7, 1239.

- Pan, C.; Shi, C.; Mu, H.; Li, J.; Gao, X. EEG-based emotion recognition using logistic regression with gaussian kernel and laplacian prior and investigation of critical frequency bands. Appl. Sci. 2020, 10, 1619.

- Zhong, P.; Wang, D.; Miao, C. EEG-Based Emotion Recognition Using Regularized Graph Neural Networks. IEEE Trans. Affect. Comput. 2020, 3045, 1–12.

- Ekman, P. An Argument for Basic Emotions. Cogn. Emot. 1992, 6, 169–200.

- Ben-Zeev, A. The nature of emotions. Philos. Stud. 1987, 52, 393–409.

- Li, J.; Zhang, Z.; He, H. Hierarchical Convolutional Neural Networks for EEG-Based Emotion Recognition. Cognit. Comput. 2018, 10, 368–380.

- Asghar, M.A.; Khan, M.J.; Fawad; Amin, Y.; Rizwan, M.; Rahman, M.; Badnava, S.; Mirjavadi, S.S. EEG-based multi-modal emotion recognition using bag of deep features: An optimal feature selection approach. Sensors 2019, 19, 5218.

- Alazrai, R.; Homoud, R.; Alwanni, H.; Daoud, M.I. EEG-based emotion recognition using quadratic time-frequency distribution. Sensors 2018, 18, 2739.

- Rafi, T.H.; Farhan, F.; Hoque, M.Z.; Quayyum, F.M. Electroencephalogram (EEG) brainwave signal-based emotion recognition using extreme gradient boosting algorithm. Ann. Eng. 2020, 1–13.

- Zhang, J.; Chen, P. Selection of optimal EEG electrodes for human emotion recognition. IFAC-PapersOnLine 2020, 53, 10229–10235.

- Rahman, M.; Poddar, A.; Alam, G.R.; Kumar, S. Affective State Recognition through EEG Signals Feature Level Fusion and Ensemble Classifier. arXiv 2021, arXiv:2102.07127.

- Satyanarayana, K.N.V.; Shankar, T.; Raju, P.V.R. An Approach for Finding Emotions Using Seed Dataset with Knn Classifier. Turk. J. Comput. Math. Educ. 2021, 12, 2838–2846.

- Jeevan, R.K.; Venu Madhava Rao, S.P.; Pothunoori, S.K.; Srivikas, M. EEG-based emotion recognition using LSTM-RNN machine learning algorithm. In Proceedings of the 2019 1st International Conference on Innovations in Information and Communication Technology (ICIICT), Chennai, India, 25–26 April 2019; pp. 1–4.

- Hassouneh, A.; Mutawa, A.M.; Murugappan, M. Development of a Real-Time Emotion Recognition System Using Facial Expressions and EEG based on machine learning and deep neural network methods. Inform. Med. Unlocked 2020, 20, 100372.

- Joshi, V.M.; Ghongade, R.B. IDEA: Intellect database for emotion analysis using EEG signal. J. King Saud Univ. Comput. Inf. Sci. 2020.

- Yang, J.; Huang, X.; Wu, H.; Yang, X. EEG-based emotion classification based on Bidirectional Long Short-Term Memory Network. Procedia Comput. Sci. 2020, 174, 491–504.

- Alakus, T.B.; Gonen, M.; Turkoglu, I. Database for an emotion recognition system based on EEG signals and various computer games—GAMEEMO. Biomed. Signal Process. Control 2020, 60, 101951.

- Tsironi, E.; Barros, P.; Weber, C.; Wermter, S. An analysis of Convolutional Long Short-Term Memory Recurrent Neural Networks for gesture recognition. Neurocomputing 2017, 268, 76–86.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).