XplainLungSHAP: Enhancing Lung Cancer Surgery Decision Making with Feature Selection and Explainable AI Insights

Abstract

1. Introduction

2. Materials and Methods

- FEV1 (Forced Expiratory Volume in One Second): A measure of lung function, FEV1 is the volume of air forcefully exhaled in the first second of a forced expiration. Lower values indicate impaired pulmonary function, which increases the risk of complications and can slow postoperative recovery.

- Pain Before Surgery: This binary variable indicates whether the patient experienced significant preoperative pain, which is often associated with advanced disease or tumor involvement of sensitive areas and can negatively affect recovery.

- Age: Older patients face higher surgical risks due to reduced physiological reserves and comorbidities, while younger patients generally experience better outcomes.

- Dyspnea (Shortness of Breath): This symptom signals compromised respiratory function, increasing the likelihood of perioperative complications such as hypoxia or ventilation issues.

- Cough: Chronic cough, a symptom of lung conditions like cancer or bronchitis, can exacerbate discomfort and indicate advanced airway involvement.

- Hemoptysis: Coughing up blood is an important symptom often linked to severe underlying pathologies like advanced lung cancer or airway damage, increasing surgical risks.

- Tumor Size and Extent: Larger tumors, or those invading neighboring structures, require more extensive resections, which increases surgical complexity and can prolong recovery.

- Surgical Approach: The type of procedure (e.g., lobectomy or pneumonectomy) significantly affects postoperative outcomes. Minimally invasive methods such as video-assisted thoracoscopic surgery (VATS) reduce complications and recovery time.

- Air Leak and Pneumothorax: Postoperative complications such as prolonged air leaks or collapsed lungs delay recovery and may require additional interventions.

- Performance Status Scores: These scores evaluate the patient’s overall ability to perform daily activities, predicting their tolerance to surgical stress and recovery prospects.

- Comorbidity Indices: Chronic conditions like diabetes or hypertension are important factors that influence recovery and overall surgical risk.

- Dependent Variable (Survival Outcome): This binary outcome indicates whether the patient survived beyond one year (class 2) or not (class 1). It serves as the central variable for modeling postoperative survival probabilities [2].

2.1. Feature Engineering

- FEV1 (PRE5) represents the volume of air a patient can forcefully exhale in one second.

- FVC (PRE4) represents the total volume of air forcefully exhaled after a maximal inhalation.
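Because both spirometry values are stored as separate columns, the FEV1/FVC ratio used later as an engineered feature is a single division. The minimal sketch below assumes both volumes are recorded in the same unit (litres) and adds a flag at the fixed 0.70 cut-off commonly used in spirometry to screen for airflow obstruction; the helper name `fev1_fvc_ratio`, the cut-off flag, and the example values are illustrative assumptions, not the authors' code or data.

```python
OBSTRUCTION_CUTOFF = 0.70  # conventional fixed-ratio threshold; an assumption, not from the paper


def fev1_fvc_ratio(fev1: float, fvc: float) -> float:
    """Return FEV1/FVC; both volumes must be in the same unit (e.g. litres)."""
    if fvc <= 0:
        raise ValueError("FVC must be positive")
    return fev1 / fvc


# Hypothetical (FEV1 = PRE5, FVC = PRE4) pairs in litres.
for fev1, fvc in [(2.88, 3.40), (1.88, 2.84), (1.40, 2.50)]:
    ratio = fev1_fvc_ratio(fev1, fvc)
    flag = "below cut-off" if ratio < OBSTRUCTION_CUTOFF else "at or above cut-off"
    print(f"FEV1={fev1:.2f} L, FVC={fvc:.2f} L -> FEV1/FVC={ratio:.2f} ({flag})")
```

Since the ratio is dimensionless, it is unaffected by the unit chosen, provided PRE4 and PRE5 share it.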

2.2. SHAP for Feature Relevance Extraction

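The SHAP value of feature $j$ is its Shapley value:

$$\phi_j = \sum_{S \subseteq N \setminus \{j\}} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!}\left[f(S \cup \{j\}) - f(S)\right],$$

where:

- $N$ is the set of all features.

- $S$ is a subset of features excluding $j$.

- $f(S)$ is the model prediction when only features in $S$ are considered.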
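The Shapley value of a feature j averages its marginal contribution f(S ∪ {j}) - f(S) over every subset S of the other features, weighted by |S|!(|N| - |S| - 1)!/|N|!. With only a few features this can be evaluated exactly by brute force, as in the sketch below. The value function `toy_model`, its coefficients, and the convention of representing an absent feature by simply dropping its term are hypothetical; libraries such as shap instead estimate f(S) by averaging over background data, and exact enumeration needs on the order of 2^|N| model evaluations, so it only scales to small feature sets.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence


def shapley_values(features: Sequence[str],
                   value: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Exact Shapley values by enumerating every subset S of N minus {j}.

    phi_j = sum_S |S|! (|N| - |S| - 1)! / |N|! * [f(S + {j}) - f(S)]
    """
    n = len(features)
    phi: Dict[str, float] = {}
    for j in features:
        others = [k for k in features if k != j]
        total = 0.0
        for size in range(n):  # |S| runs from 0 to |N| - 1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {j}) - value(s))
        phi[j] = total
    return phi


# Hypothetical standardized values for one patient.
x = {"age": 0.6, "fev1": -0.4, "dyspnea": 1.0}


def toy_model(present: FrozenSet[str]) -> float:
    """Hypothetical risk score f(S) that uses only the features in S."""
    score = 0.0
    if "age" in present:
        score += 0.5 * x["age"]
    if "fev1" in present:
        score += 0.8 * x["fev1"]
    if "age" in present and "fev1" in present:
        score += 0.3 * x["age"] * x["fev1"]  # age-pulmonary interaction term
    if "dyspnea" in present:
        score += 0.2 * x["dyspnea"]
    return score


features = ["age", "fev1", "dyspnea"]
phi = shapley_values(features, toy_model)
print({k: round(v, 3) for k, v in phi.items()})
# prints {'age': 0.264, 'fev1': -0.356, 'dyspnea': 0.2}
```

The additive terms are credited entirely to their own feature, the interaction term (-0.072) is split equally between `age` and `fev1`, and the three values sum to f(N) - f(∅) = 0.108, which is the efficiency property of Shapley values.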

- The FEV1/FVC Ratio, capturing pulmonary function (a low ratio indicates airflow obstruction).

- The Symptom Index, reflecting the cumulative symptom burden.

- The Comorbidity Score, indicating the impact of chronic conditions.

- The Age–Pulmonary Function Interaction, highlighting the interplay between age and respiratory capacity [7].
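The section does not give formulas for these composite features, so the sketch below is one plausible construction under explicit assumptions: the Symptom Index counts positive binary symptom flags, the comorbidity score counts recorded chronic conditions, and the Age–Pulmonary Function Interaction is the product of age and FEV1. Column names are assumed to follow the coding of the public Thoracic Surgery data set (PRE4 = FVC, PRE5 = FEV1, binary fields coded T/F); the column groupings, the helper names, and the example record are hypothetical.

```python
from typing import Dict, Union

Record = Dict[str, Union[str, float]]

# Assumed column groupings (hypothetical; the section does not list them).
SYMPTOM_COLS = ["PRE7", "PRE8", "PRE9", "PRE10", "PRE11"]  # pain, haemoptysis, dyspnoea, cough, weakness
COMORBIDITY_COLS = ["PRE17", "PRE19", "PRE25", "PRE32"]     # type 2 diabetes, recent MI, PAD, asthma


def is_true(value: Union[str, float]) -> bool:
    """Binary fields are assumed to be coded 'T'/'F'."""
    return value == "T"


def engineer_features(rec: Record) -> Dict[str, float]:
    fev1, fvc, age = float(rec["PRE5"]), float(rec["PRE4"]), float(rec["AGE"])
    return {
        "fev1_fvc_ratio": fev1 / fvc,
        "symptom_index": float(sum(is_true(rec[c]) for c in SYMPTOM_COLS)),
        "comorbidity_score": float(sum(is_true(rec[c]) for c in COMORBIDITY_COLS)),
        "age_x_fev1": age * fev1,  # simple product; standardizing first is a common variant
    }


# Hypothetical patient record.
example: Record = {
    "PRE4": 3.40, "PRE5": 2.50, "AGE": 64,
    "PRE7": "F", "PRE8": "F", "PRE9": "T", "PRE10": "T", "PRE11": "F",
    "PRE17": "T", "PRE19": "F", "PRE25": "F", "PRE32": "F",
}
print(engineer_features(example))
```

Counting flags gives every symptom or condition equal weight; a weighted index (for example, with weights learned from data) would be a natural alternative if some items matter more than others.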

Algorithm 1: SHAP for Feature Relevance Extraction
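The body of Algorithm 1 is not reproduced in this section. A common way to turn per-patient SHAP values into a global relevance ranking, and the one assumed in the sketch below, is to average the absolute SHAP value of each feature over all patients and keep the k highest-ranked features. The SHAP matrix, the feature names, and k = 3 are hypothetical; in practice the matrix would come from a SHAP explainer applied to the trained model.

```python
from typing import List, Sequence, Tuple


def rank_by_mean_abs_shap(shap_matrix: Sequence[Sequence[float]],
                          feature_names: Sequence[str]) -> List[Tuple[str, float]]:
    """Return (feature, mean |SHAP|) pairs sorted from most to least relevant."""
    n_samples = len(shap_matrix)
    if n_samples == 0:
        raise ValueError("need at least one row of SHAP values")
    scores = []
    for j, name in enumerate(feature_names):
        mean_abs = sum(abs(row[j]) for row in shap_matrix) / n_samples
        scores.append((name, mean_abs))
    return sorted(scores, key=lambda item: item[1], reverse=True)


def select_top_k(ranking: List[Tuple[str, float]], k: int) -> List[str]:
    """Keep the names of the k most relevant features."""
    return [name for name, _ in ranking[:k]]


# Hypothetical SHAP values: 4 patients x 5 features.
feature_names = ["fev1_fvc_ratio", "symptom_index", "comorbidity_score", "age_x_fev1", "PRE14"]
shap_matrix = [
    [0.30, -0.10, 0.05, -0.20, 0.01],
    [-0.25, 0.15, 0.02, 0.18, -0.02],
    [0.40, -0.05, -0.04, -0.22, 0.00],
    [-0.35, 0.12, 0.03, 0.16, 0.01],
]
ranking = rank_by_mean_abs_shap(shap_matrix, feature_names)
selected = select_top_k(ranking, k=3)
print(selected)
# prints ['fev1_fvc_ratio', 'age_x_fev1', 'symptom_index']
```

Taking absolute values matters here: a feature that pushes predictions strongly up for some patients and strongly down for others would otherwise average out to near zero.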

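- $e_i$ is the relevance score of feature $x_i$, calculated using a trainable scoring function, where $W$ and $b$ are learnable parameters.

- The softmax normalization $\alpha_i = \exp(e_i) / \sum_k \exp(e_k)$ ensures that the weights are non-negative and sum to 1, making them interpretable as probabilities.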
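The normalization step can be checked numerically. The sketch below applies a softmax to a few hypothetical relevance scores e_i, confirms that the resulting weights α_i are positive and sum to 1, and forms the weighted sum z = Σ α_i x_i over hypothetical standardized feature values. Subtracting the maximum score before exponentiating is a standard numerical-stability step that leaves the weights unchanged.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """alpha_i = exp(e_i) / sum_k exp(e_k), computed stably by subtracting max(e)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [v / total for v in exps]


e = [2.0, 1.0, 0.1, -1.0]   # hypothetical relevance scores e_i
x = [-0.8, 1.2, 0.3, 0.5]   # hypothetical standardized feature values x_i

alpha = softmax(e)
z = sum(a * xi for a, xi in zip(alpha, x))  # weighted sum z = sum_i alpha_i * x_i

print("alpha =", [round(a, 3) for a in alpha])
print("sum(alpha) =", round(sum(alpha), 6), " z =", round(z, 3))
```

Because softmax is invariant to adding a constant to every score, only the differences between the e_i matter, which is why the largest score always receives the largest weight.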

- The input features, including those identified as important by SHAP, were transformed into high-dimensional embeddings through a linear layer.

- Attention scores $e_i$ were calculated for each feature and normalized using the softmax function to generate weights $\alpha_i$.

- A weighted sum z of the features was then computed, and this output was fed into subsequent fully connected layers to perform the binary classification (survival or mortality).
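Put together, the three steps above amount to a small feature-attention classifier. The NumPy sketch below shows one forward pass under stated assumptions that are not taken from the paper: each scalar feature x_i is embedded as h_i = x_i·V_i + C_i (a per-feature linear layer), the score is e_i = tanh(h_i·w + b), and the head is one hidden ReLU layer followed by a sigmoid. Layer sizes, activations, parameter names, and the random initialization are hypothetical, and training is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, d_embed, d_hidden = 6, 8, 16  # hypothetical sizes

# Per-feature linear embedding h_i = x_i * V[i] + C[i]
V = rng.normal(scale=0.5, size=(n_features, d_embed))
C = np.zeros((n_features, d_embed))
# Attention scoring e_i = tanh(h_i . w + b)
w = rng.normal(scale=0.5, size=d_embed)
b = 0.0
# Fully connected head
W1 = rng.normal(scale=0.3, size=(d_embed, d_hidden))
b1 = np.zeros(d_hidden)
W2 = rng.normal(scale=0.3, size=d_hidden)
b2 = 0.0


def softmax(v: np.ndarray) -> np.ndarray:
    """Row-wise softmax over the last axis, shifted by the max for stability."""
    v = v - v.max(axis=-1, keepdims=True)
    ex = np.exp(v)
    return ex / ex.sum(axis=-1, keepdims=True)


def forward(X: np.ndarray):
    """X: (batch, n_features) -> (probabilities (batch,), attention weights (batch, n_features))."""
    H = X[:, :, None] * V[None, :, :] + C[None, :, :]  # embeddings: (batch, n_features, d_embed)
    e = np.tanh(H @ w + b)                               # scores: (batch, n_features)
    alpha = softmax(e)                                   # weights sum to 1 per patient
    z = (alpha[:, :, None] * H).sum(axis=1)              # weighted sum: (batch, d_embed)
    hidden = np.maximum(0.0, z @ W1 + b1)                # ReLU layer: (batch, d_hidden)
    logits = hidden @ W2 + b2                            # (batch,)
    prob = 1.0 / (1.0 + np.exp(-logits))                 # probability of the positive class
    return prob, alpha


X = rng.normal(size=(3, n_features))  # 3 hypothetical standardized patients
prob, alpha = forward(X)
print(prob.shape, alpha.shape)
```

Returning the attention weights alongside the prediction gives a per-patient view of which features the model weighted most; which outcome counts as the positive class (survival or mortality) is a labeling choice left open here.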

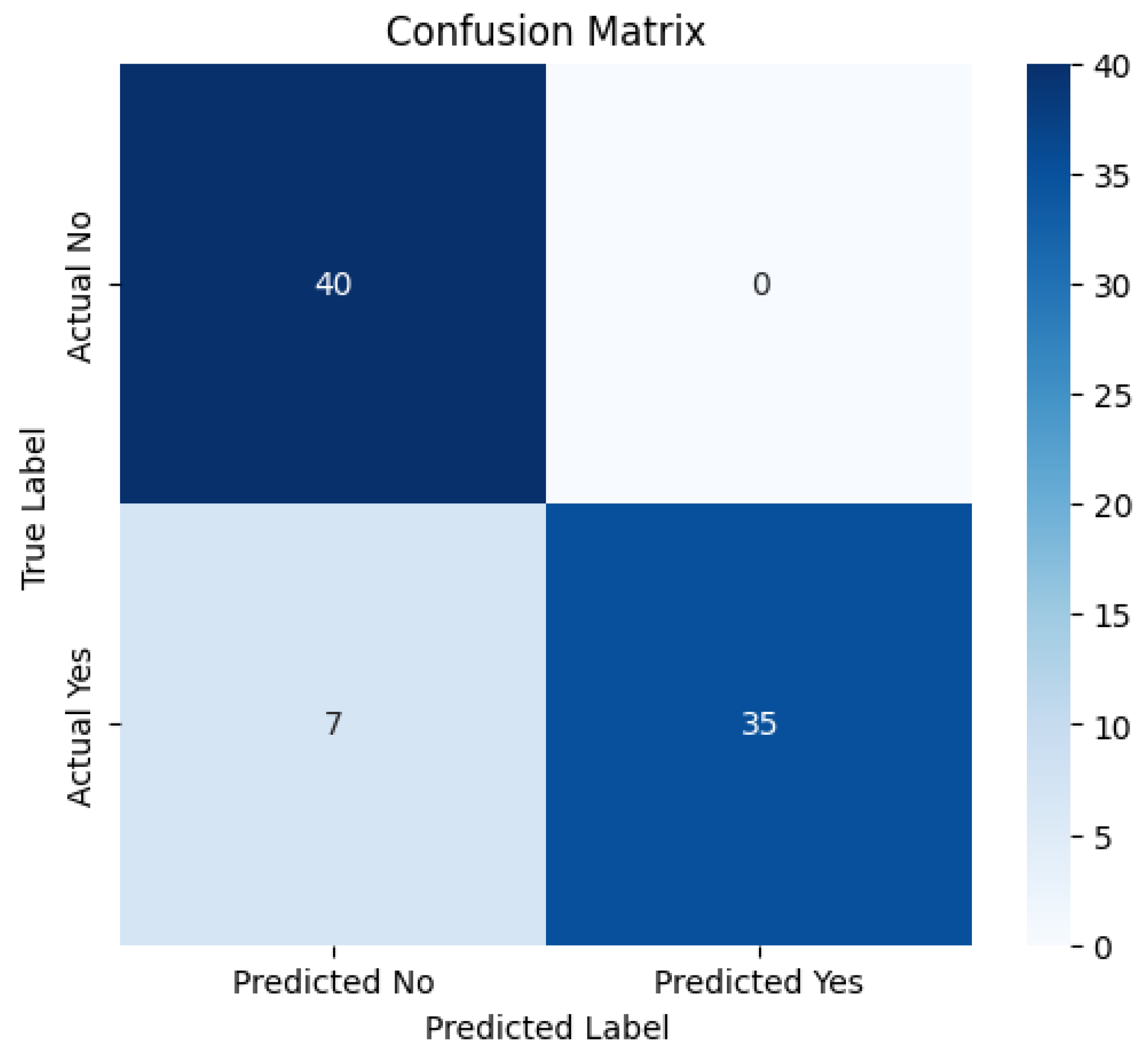

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ancel, J.; Bergantini, L.; Mendogni, P.; Hu, Z. Thoracic Malignancies: From Prevention and Diagnosis to Late Stages. Life 2025, 15, 138.

2. Kaggle. Thoracic Surgery Data Set. Available online: https://www.kaggle.com/datasets/sid321axn/thoraric-surgery (accessed on 21 November 2024).

3. Van den Broeck, G.; Lykov, A.; Schleich, M.; Suciu, D. On the tractability of SHAP explanations. J. Artif. Intell. Res. 2022, 74, 851–886.

4. Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

5. Onchis, D.M.; Costi, F.; Istin, C.; Secasan, C.C.; Cozma, G.V. Method of Improving the Management of Cancer Risk Groups by Coupling a Features-Attention Mechanism to a Deep Neural Network. Appl. Sci. 2024, 14, 447.

6. Rahman, M.M.; Munir, M.; Marculescu, R. EMCAD: Efficient Multi-scale Convolutional Attention Decoding for Medical Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 1–14.

7. Biosymetrics. Feature Engineering of Electronic Medical Records. Available online: https://www.biosymetrics.com/blog/emr-feature-engineering (accessed on 21 November 2024).

8. Liu, Y.; Wang, H.; Zhang, X. A Comparative Study of Normalization Techniques in Deep Learning Models for Medical Image Analysis. Appl. Sci. 2023, 13, 1234.

9. Kor, C.-T.; Li, Y.-R.; Lin, P.-R.; Lin, S.-H.; Wang, B.-Y.; Lin, C.-H. Explainable Machine Learning Model for Predicting First-Time Acute Exacerbation in Patients with Chronic Obstructive Pulmonary Disease. J. Pers. Med. 2022, 12, 228.

10. Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable, 2nd ed.; Leanpub: Victoria, BC, Canada, 2022.

11. Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4765–4774.

12. Costi, F.; Onchis, D.M.; Istin, C.; Cozma, G.V. Explainability-Enhanced Neural Network for Thoracic Diagnosis Improvement. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Limassol, Cyprus, 28–30 September 2023; pp. 35–44.

13. Liu, Y.; Zhang, X.; Wang, H. Application of SHAP Values in Predicting Postoperative Survival in Thoracic Surgery Patients. J. Clin. Med. 2023, 12, 1234.

14. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

15. Livadiotis, G. Linear Regression with Optimal Rotation. Stats 2019, 2, 416–425.

16. Belgiu, M.; Drăguţ, L. Comparison of Random Forest, Support Vector Machines, and Neural Networks for Forest Species Classification Using Airborne Hyperspectral Data. Remote Sens. 2021, 13, 2581.

17. Liu, Y.; Zhang, W.; Chen, J. Advanced Short-Term Load Forecasting with XGBoost-RF Feature Selection and CNN-GRU Neural Network. Processes 2024, 12, 2466.

18. GeeksforGeeks. Binary Cross Entropy/Log Loss for Binary Classification. 2023. Available online: https://www.geeksforgeeks.org/binary-cross-entropy-log-loss-for-binary-classification/ (accessed on 21 November 2024).

19. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015. arXiv:1412.6980.

| Model | Accuracy (%) |
|---|---|
| Logistic Regression | 78.65 |
| Random Forest | 79.12 |
| XGBoost | 80.49 |
| Attention mechanism only | 80.97 |
| XplainLungSHAP model | 91.49 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Costi, F.; Covaci, E.; Onchis, D. XplainLungSHAP: Enhancing Lung Cancer Surgery Decision Making with Feature Selection and Explainable AI Insights. Surgeries 2025, 6, 8. https://doi.org/10.3390/surgeries6010008


Costi, Flavia, Emanuel Covaci, and Darian Onchis. 2025. "XplainLungSHAP: Enhancing Lung Cancer Surgery Decision Making with Feature Selection and Explainable AI Insights" Surgeries 6, no. 1: 8. https://doi.org/10.3390/surgeries6010008
