Integrating a Fast and Reliable Robotic Hooking System for Enhanced Stamping Press Processes in Smart Manufacturing

Abstract

1. Introduction

- (1)

- According to the wheel suspension process, the architecture of smart manufacturing is designed and includes the advantages of both CPMSs and CPPSs. In addition, the concept of the DT is considered, and the database J. Redmon generated by the virtual engine is combined with the database of real scenarios for machine learning training.

- (2)

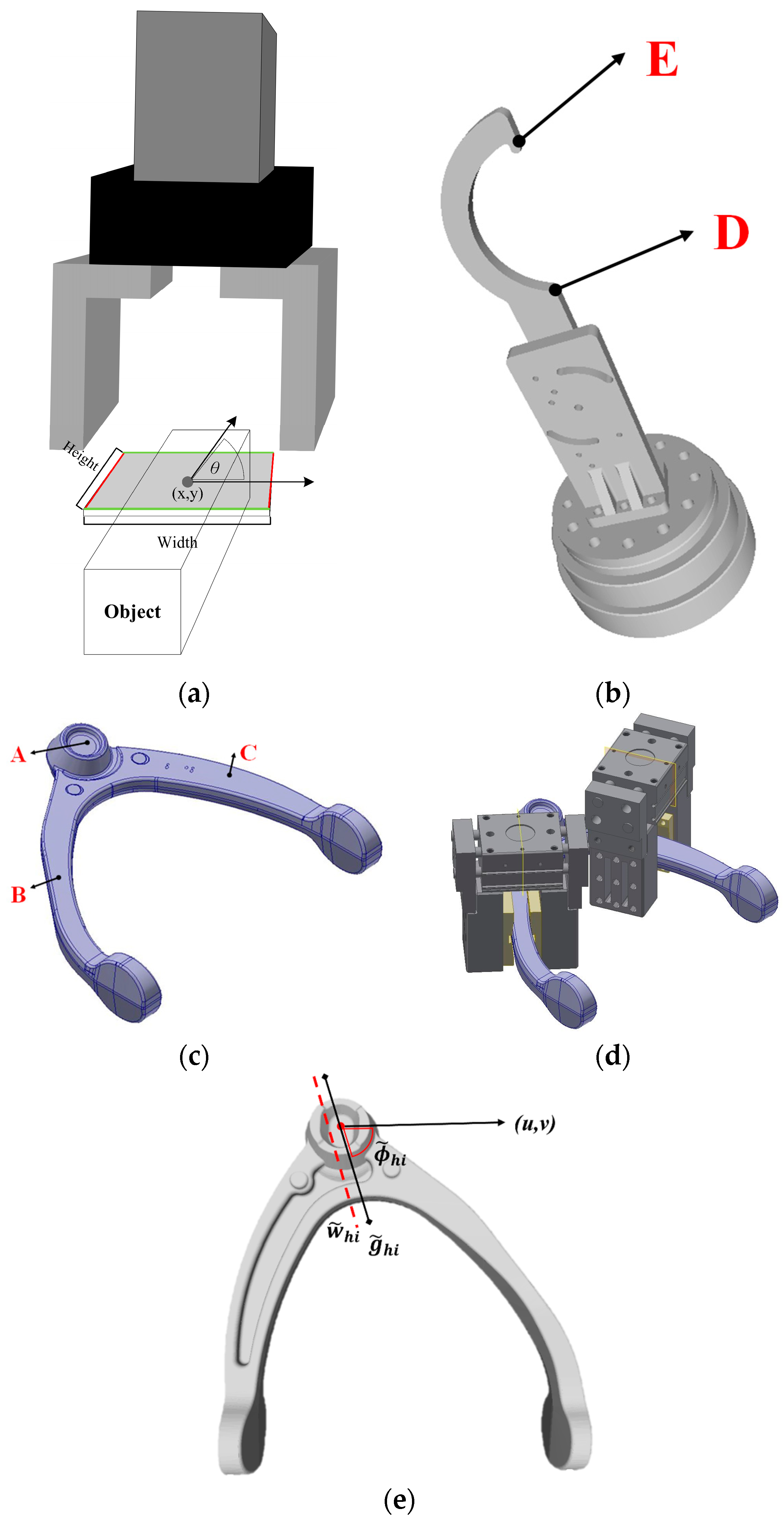

- Development of a hook-type gripper based on the geometric structure of wheel suspension parts.

- (3)

- Design a generative model architecture that can be used universally for a parallel-jaw gripper and hook-type gripper and that can significantly improve performance.
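To illustrate how a single generative output head could serve both gripper types, the following minimal sketch (not the authors' implementation) decodes an end-effector pose from pixel-wise quality, angle, and width maps. It assumes the angle is encoded as a cosine/sine pair, as in common generative grasp networks such as GR-ConvNet [30]; the function name `decode_grasp` and the `symmetric` flag are hypothetical. A parallel-jaw grasp is symmetric under a rotation of π, so it is typically encoded with (cos 2θ, sin 2θ), whereas an asymmetric hook would need (cos θ, sin θ).

```python
import math
from typing import List, Tuple

Map = List[List[float]]  # H x W pixel-wise map produced by the network

def decode_grasp(quality: Map, cos_map: Map, sin_map: Map, width: Map,
                 symmetric: bool = True) -> Tuple[int, int, float, float]:
    """Return (row, col, angle_rad, width) at the pixel with the highest quality.

    symmetric=True assumes the angle is stored as (cos 2θ, sin 2θ), as for a
    parallel-jaw gripper where θ and θ + π are equivalent.
    symmetric=False assumes (cos θ, sin θ), e.g. for an asymmetric hook.
    """
    best_r, best_c, best_q = 0, 0, -math.inf
    for r, row in enumerate(quality):
        for c, q in enumerate(row):
            if q > best_q:
                best_r, best_c, best_q = r, c, q
    # Undo the angle encoding: divide by 2 only for the doubled-angle representation.
    factor = 2.0 if symmetric else 1.0
    angle = math.atan2(sin_map[best_r][best_c], cos_map[best_r][best_c]) / factor
    return best_r, best_c, angle, width[best_r][best_c]
```

Keeping the decoding step outside the network means the same output heads can be reused, and only the angle convention changes between gripper types.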

2. Related Work

2.1. Network Computing Architecture in Smart Manufacturing

2.2. Robotic Grasping in Machine Learning

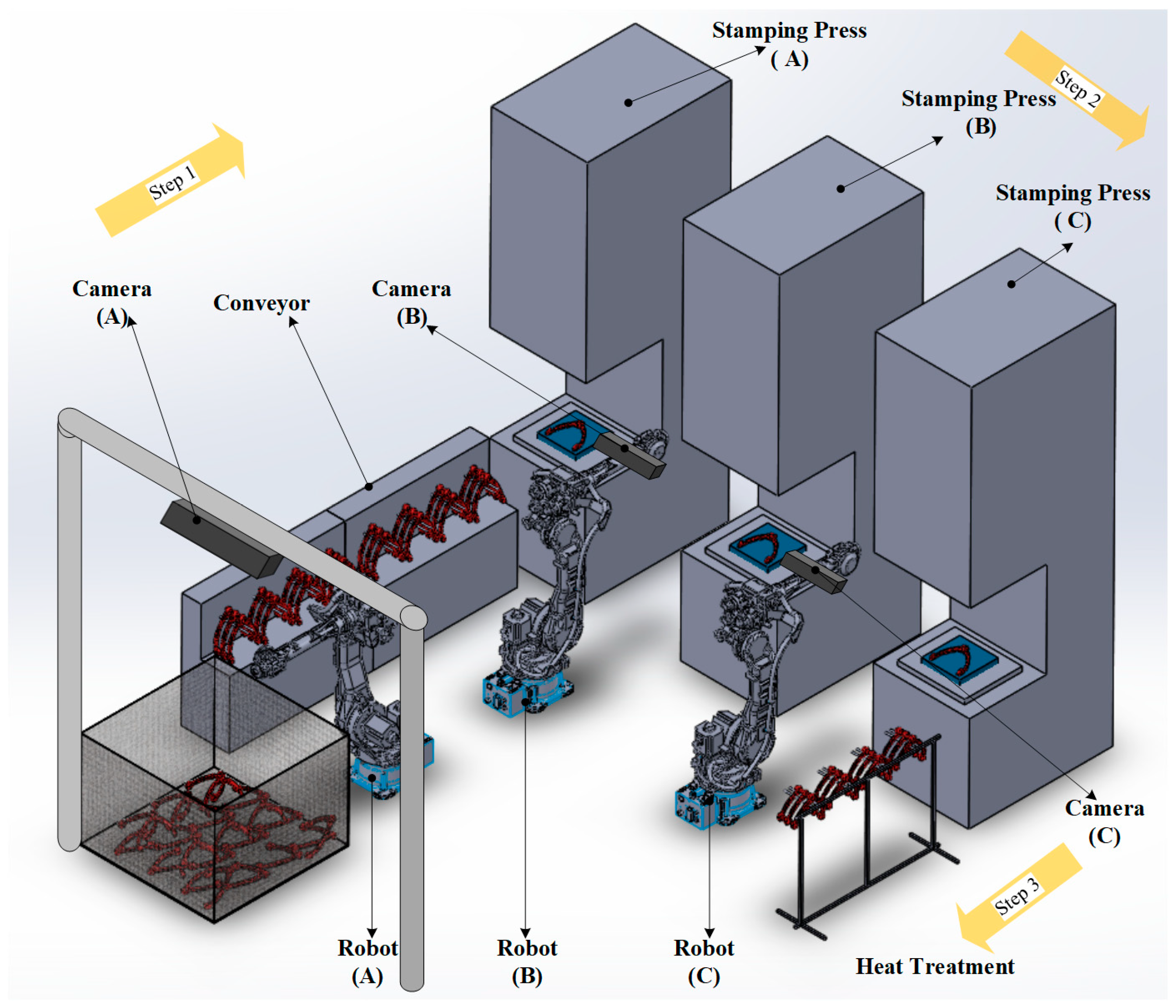

3. Robot Hooking and Network Computing Architecture for the Stamping Press Process

3.1. Motivation

3.2. Hook Structure at the Endpoint of the Robot

3.3. Robot Hooking Method

3.4. Network Computing Architecture for Robot-Based Manufacturing of Wheel Suspension Parts

- Step 1. The system accurately picks the objects out of the iron basket and places them on the conveyor, which transfers them to the stamping press.

- Step 2. Following the transfer sequence of the continuous stamping press line, we use eye-in-hand visual guidance to move the objects between presses fitted with different molds.

- Step 3. Finally, eye-in-hand visual guidance is used to move the object onto a dedicated bracket, after which it is heat-treated.
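The three steps above can be summarized as an ordered transfer plan for a single part. The following sketch is only an illustration under stated assumptions: the station labels, the number of presses, and the function `transfer_plan` are hypothetical, since the text does not specify how many presses the line contains.

```python
from typing import List, Tuple

Transfer = Tuple[str, str, str]  # (source station, destination station, transport/guidance mode)

def transfer_plan(num_presses: int) -> List[Transfer]:
    """Ordered transfers for one wheel suspension part (hypothetical station labels)."""
    if num_presses < 1:
        raise ValueError("at least one stamping press is required")
    # Step 1: hook the part out of the iron basket; the conveyor carries it to the first press.
    plan: List[Transfer] = [
        ("iron_basket", "conveyor", "robot_hooking"),
        ("conveyor", "press_1", "conveyor"),
    ]
    # Step 2: eye-in-hand visual guidance moves the part between presses with different molds.
    for i in range(1, num_presses):
        plan.append((f"press_{i}", f"press_{i + 1}", "eye_in_hand"))
    # Step 3: eye-in-hand guidance places the part on the bracket; heat treatment follows.
    plan.append((f"press_{num_presses}", "bracket", "eye_in_hand"))
    return plan
```

For a line with three presses, the plan contains five transfers: two in Step 1, two press-to-press moves in Step 2, and one placement on the bracket in Step 3.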

4. Experiments

4.1. General Database Validation

4.1.1. Dataset and Evaluation Metric

4.1.2. Learning Network Architecture Difference Analysis

4.1.3. Validation Based on Different Datasets

4.1.4. Computing Performance of Different Devices

4.1.5. Clamping Category Classification Based on Cornell Dataset Regional Features

- Categories are assigned according to similar geometric appearance and hooking position: the 240 objects in the Cornell dataset are grouped into 40 categories.

- As shown in Figure 6, we encode the class of each hooking point.

- We modify the output of the model architecture shown in Figure 6 as follows:

- By embedding the category into the hook area, the model infers the quality, angle, width, and class of the hook area simultaneously. We then count the occurrences of each category within the hook area according to Equation (11) and take the most frequent category as the identified class:

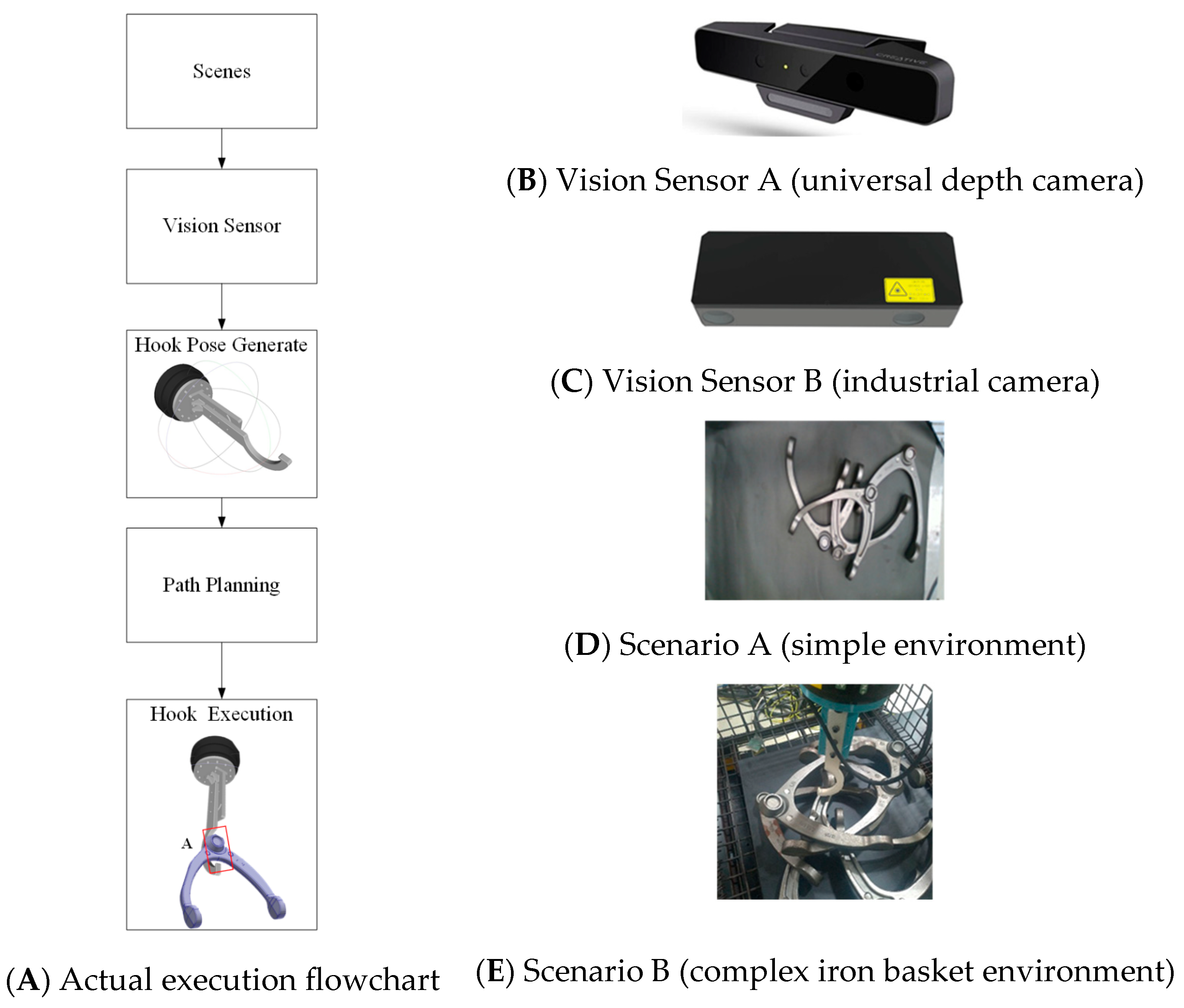
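Equation (11) is not reproduced in this section, so the following sketch assumes a plain majority vote: the hook area is taken to be the pixels whose predicted quality reaches a threshold, and the class label that occurs most often among them is returned. The threshold of 0.5 and the function name `hook_area_category` are hypothetical; class labels are assumed to be integers 0 to 39 for the 40 categories.

```python
from collections import Counter
from typing import List, Optional

def hook_area_category(quality: List[List[float]],
                       classes: List[List[int]],
                       threshold: float = 0.5) -> Optional[int]:
    """Majority vote over per-pixel class labels inside the hook area.

    The hook area is assumed to be all pixels with quality >= threshold.
    Returns None when no pixel qualifies.
    """
    votes = Counter(
        cls
        for q_row, c_row in zip(quality, classes)
        for q, cls in zip(q_row, c_row)
        if q >= threshold
    )
    if not votes:
        return None
    # most_common(1) returns [(label, count)] for the most frequent label.
    return votes.most_common(1)[0][0]
```

Voting over the whole hook area rather than reading the class at a single pixel makes the identified category less sensitive to isolated misclassified pixels.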

4.2. Real-Scenario Verification

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ashton, K. That ‘Internet of Things’ thing. RFID J. 2009, 22, 97–114.

- Chellappa, R. Intermediaries in cloud-computing: A new computing paradigm. In Proceedings of the INFORMS Annual Meeting, Dallas, TX, USA, 26–29 October 1997.

- Lopez, P.G.; Montresor, A.; Epema, D.; Datta, A.; Higashino, T.; Iamnitchi, A.; Barcellos, M.; Felber, P.; Riviere, E. Edge-centric Computing: Vision and Challenges. ACM SIGCOMM Comput. Commun. Rev. 2015, 45, 37–42.

- Xue, Y.; Pan, J.; Geng, Y.; Yang, Z.; Liu, M.; Deng, R. Real-Time Intrusion Detection Based on Decision Fusion in Industrial Control Systems. IEEE Trans. Ind. Cyber Phys. Syst. 2024, 2, 143–153.

- Tao, F.; Cheng, J.; Qi, Q. IIHub: An industrial Internet-of-Things hub toward smart manufacturing based on cyber-physical system. IEEE Trans. Ind. Inform. 2018, 14, 2271–2280.

- Liu, W.; Xu, X.; Qi, L.; Zhou, X.; Yan, H.; Xia, X.; Dou, W. Digital Twin-Assisted Edge Service Caching for Consumer Electronics Manufacturing. IEEE Trans. Consum. Electron. 2024, 70, 3141–3151.

- Zhang, J.; Tian, J.; Luo, H.; Wu, S.; Yin, S.; Kaynak, O. Prognostics for the Sustainability of Industrial Cyber-Physical Systems: From an Artificial Intelligence Perspective. IEEE Trans. Ind. Cyber Phys. Syst. 2024, 2, 495–507.

- Xia, C.; Wang, R.; Jin, X.; Xu, C.; Li, D.; Zeng, P. Deterministic Network–Computation–Manufacturing Interaction Mechanism for AI-Driven Cyber–Physical Production Systems. IEEE Internet Things J. 2024, 11, 18852–18868.

- Zhou, X.; Xu, X.; Liang, W.; Zeng, Z.; Shimizu, S.; Yang, L.T.; Jin, Q. Intelligent small object detection for digital twin in smart manufacturing with industrial cyber-physical systems. IEEE Trans. Ind. Inform. 2022, 18, 1377–1386.

- Fraccaroli, E.; Vinco, S. Modeling Cyber-Physical Production Systems with SystemC-AMS. IEEE Trans. Comput. 2023, 72, 2039–2051.

- Pochyly, A.; Kubela, T.; Singule, V.; Cihak, P. 3D vision systems for industrial bin-picking applications. In Proceedings of the 15th International Conference MECHATRONIKA, Prague, Czech Republic, 5–7 December 2012.

- Zhang, K.; Shi, Y.; Karnouskos, S.; Sauter, T.; Fang, H.; Colombo, A.W. Advancements in Industrial Cyber-Physical Systems: An Overview and Perspectives. IEEE Trans. Ind. Inform. 2023, 19, 716–729.

- Sharma, S.K.; Chaurasia, A.; Sharma, V.S.; Chowdhary, C.L.; Basheer, S. GEMM, a Genetic Engineering-Based Mutual Model for Resource Allocation of Grid Computing. IEEE Access 2023, 11, 128537–128548.

- Gad, A.G.; Houssein, E.H.; Zhou, M.; Suganthan, P.N.; Wazery, Y.M. Damping-Assisted Evolutionary Swarm Intelligence for Industrial IoT Task Scheduling in Cloud Computing. IEEE Internet Things J. 2024, 11, 1698–1710.

- Teoh, Y.K.; Gill, S.S.; Parlikad, A.K. IoT and Fog-Computing-Based Predictive Maintenance Model for Effective Asset Management in Industry 4.0 Using Machine Learning. IEEE Internet Things J. 2023, 10, 2087–2094.

- Sundermeyer, M.; Mousavian, A.; Triebel, R.; Fox, D. Contact-GraspNet: Efficient 6-DoF Grasp Generation in Cluttered Scenes. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi'an, China, 30 May–5 June 2021.

- Duffhauss, F.; Koch, S.; Ziesche, H.; Vien, N.A.; Neumann, G. SyMFM6D: Symmetry-Aware Multi-Directional Fusion for Multi-View 6D Object Pose Estimation. IEEE Robot. Autom. Lett. 2023, 8, 5315–5322.

- Jiang, Y.; Moseson, S.; Saxena, A. Efficient grasping from RGBD images: Learning using a new rectangle representation. In Proceedings of the International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

- Redmon, J.; Angelova, A. Real-time grasp detection using convolutional neural networks. In Proceedings of the International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; Available online: https://www.kaggle.com/datasets/oneoneliu/cornell-grasp (accessed on 1 November 2023).

- Yu, S.; Zhai, D.H.; Xia, Y.; Wu, H.; Liao, J. SE-ResUNet: A Novel Robotic Grasp Detection Method. IEEE Robot. Autom. Lett. 2022, 7, 5238–5245.

- Chen, L.; Niu, M.; Yang, J.; Qian, Y.; Li, Z.; Wang, K. Robotic Grasp Detection Using Structure Prior Attention and Multiscale Features. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 7039–7053.

- Cao, H.; Chen, G.; Li, Z.; Feng, Q.; Lin, J.; Knoll, A. Efficient Grasp Detection Network with Gaussian-Based Grasp Representation for Robotic Manipulation. IEEE/ASME Trans. Mechatron. 2023, 28, 1384–1394.

- Cheng, H.; Wang, Y.; Meng, M.Q.H. Anchor-Based Multi-Scale Deep Grasp Pose Detector with Encoded Angle Regression. IEEE Trans. Autom. Sci. Eng. 2024, 21, 3130–3140.

- Tong, L.; Song, K.; Tian, H.; Man, Y.; Yan, Y.; Meng, Q. A Novel RGB-D Cross-Background Robot Grasp Detection Dataset and Background-Adaptive Grasping Network. IEEE Trans. Instrum. Meas. 2024, 73, 9511615.

- Zuo, G.; Shen, Z.; Yu, S.; Luo, Y.; Zhao, M. HBGNet: Robotic Grasp Detection Using a Hybrid Network. IEEE Trans. Instrum. Meas. 2025, 74, 2503109.

- Xu, R.; Chu, F.J.; Vela, P.A. GKNet: Grasp keypoint network for grasp candidates detection. Int. J. Robot. Res. 2022, 41, 361–389.

- Liu, D.; Feng, X. A Real-Time Grasp Detection Network Based on Multi-scale RGB-D Fusion. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024.

- Kumra, S.; Kanan, C. Robotic grasp detection using deep convolutional neural networks. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017.

- Depierre, A.; Dellandrea, E.; Chen, L. Jacquard: A large scale dataset for robotic grasp detection. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

- Kumra, S.; Joshi, S.; Sahin, F. Antipodal robotic grasping using generative residual convolutional neural network. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Lenz, I.; Lee, H.; Saxena, A. Deep learning for detecting robotic grasps. Int. J. Robot. Res. 2015, 34, 705–724.

- Chu, F.J.; Xu, R.; Vela, P.A. Real-World Multi-object, Multi-grasp Detection. IEEE Robot. Autom. Lett. 2018, 3, 3355–3362.

- Zhou, X.; Lan, X.; Zhang, H.; Tian, Z.; Zhang, Y.; Zheng, N. Fully convolutional grasp detection network with oriented anchor box. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

- Zhou, Z.; Zhu, X.; Cao, Q. AAGDN: Attention-Augmented Grasp Detection Network Based on Coordinate Attention and Effective Feature Fusion Method. IEEE Robot. Autom. Lett. 2023, 8, 3462–3469.

| Dataset | Normalization Method | Acc. (%) |
|---|---|---|
| Jacquard | Batch norm. | 95.60 |
| | Group norm. | 96.13 |
| Cornell | Batch norm. | 97.75 |
| | Group norm. | 98.8 |
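The table above compares batch normalization [31] with group normalization [32]. Batch normalization computes statistics per channel across the mini-batch, whereas group normalization splits the channels of a single sample into groups and normalizes each group over its channels and spatial positions, so its statistics do not depend on batch size. The following is a minimal pure-Python sketch of group normalization for one sample; it is illustrative only and is not the implementation used in this work (deep learning frameworks provide optimized versions).

```python
import math
from typing import List, Optional

def group_norm(x: List[List[float]], num_groups: int, eps: float = 1e-5,
               gamma: Optional[List[float]] = None,
               beta: Optional[List[float]] = None) -> List[List[float]]:
    """Group-normalize one sample.

    x is a list of C channels, each a flattened list of H*W activations.
    gamma/beta are optional per-channel scale and shift (defaults: 1 and 0).
    """
    c = len(x)
    if c % num_groups != 0:
        raise ValueError("number of channels must be divisible by num_groups")
    gamma = [1.0] * c if gamma is None else gamma
    beta = [0.0] * c if beta is None else beta
    per_group = c // num_groups
    out: List[List[float]] = []
    for g in range(num_groups):
        chans = x[g * per_group:(g + 1) * per_group]
        # Mean and (biased) variance over all channels and positions in the group.
        values = [v for ch in chans for v in ch]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        inv_std = 1.0 / math.sqrt(var + eps)
        for i, ch in enumerate(chans):
            k = g * per_group + i  # global channel index for the affine parameters
            out.append([gamma[k] * (v - mean) * inv_std + beta[k] for v in ch])
    return out
```

With `num_groups=1` every channel shares one set of statistics; with `num_groups` equal to the number of channels, each channel is normalized on its own.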

| Method | Image-Wise Acc. (%) | Object-Wise Acc. (%) | Speed (ms) | Year |
|---|---|---|---|---|
| Y. Jiang [18] | 60.5 | 58.3 | 5000 | 2011 |
| I. Lenz [33] | 73.5 | 75.6 | 1350 | 2015 |
| J. Redmon [19] | 88 | 87.1 | 76 | 2015 |
| S. Kumra [28] | 89.2 | 88.9 | 103 | 2017 |
| F. J. Chu [34] | 96 | 89.1 | 132 | 2018 |
| X. Zhou [35] | 97.7 | 96.6 | 117 | 2018 |
| S. Kumra [30] | 97.7 | 96.6 | 20 | 2021 |
| R. Xu [26] | 96.9 | 96.8 | 47.67 | 2021 |
| S. Yu [20] | 98.2 | 97.1 | 25 | 2022 |
| Z. Zhou [36] | 99.3 | 98.8 | 18 | 2023 |
| H. Cao [22] | 97.8 | - | 6 | 2023 |
| L. Chen [21] | 99.2 | 98.4 | 28 | 2024 |
| L. Tong [24] | 97.8 | 96.7 | - | 2024 |
| D. Liu [27] | 95.2 | 94.4 | 6 | 2024 |
| Our Method | 98.8 | 94.38 | 12 | 2025 |

| Method | Acc. (%) | Speed (ms) | Year |
|---|---|---|---|
| A. Depierre [29] | 74.2 | 14.56 | 2018 |
| X. Zhou [35] | 91.8 | 117 | 2018 |
| F. J. Chu [34] | 95.08 | 132 | 2018 |
| S. Kumra [30] | 94.6 | 20 | 2021 |
| R. Xu [26] | 98.39 | 23.26 | 2021 |
| S. Yu [20] | 95.7 | 25 | 2022 |
| Z. Zhou [36] | 96.2 | 18 | 2023 |
| H. Cao [22] | 95.5 | 6 | 2023 |
| L. Chen [21] | 96.1 | 28 | 2024 |
| H. Cheng [23] | 92.3 | - | 2024 |
| L. Tong [24] | 94.6 | 33 | 2024 |
| Our Method | 96.13 | 12 | 2025 |

| Dataset | Input | Method | Accuracy (%) |
|---|---|---|---|
| Jacquard | RGB-D | S. Kumra [28] | 94.6 |
| | | R. Xu [26] | 88.78 |
| | | S. Yu [20] | 95.7 |
| | | Our Method | 92.48 |
| | RGD | S. Kumra [28] | 95.48 |
| | | R. Xu [26] | 98.39 |
| | | S. Yu [20] | 94.5 |
| | | Our Method | 96.13 |
| Cornell | RGB-D | S. Kumra [28] | 97.7 |
| | | R. Xu [26] | 96.63 |
| | | S. Yu [20] | 98.2 |
| | | Our Method | 98.8 |
| | RGD | S. Kumra [28] | 93.26 |
| | | R. Xu [26] | 96.9 |
| | | S. Yu [20] | 94.38 |
| | | Our Method | 96.63 |
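The RGD input compared above packs depth into a three-channel image. A common construction, used by Redmon and Angelova [19], replaces the blue channel of the RGB image with depth. The sketch below assumes min–max scaling of depth to the 0 to 255 range, which this section does not specify; the function name `make_rgd` is hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B) with values in 0..255

def make_rgd(rgb: List[List[Pixel]], depth: List[List[float]]) -> List[List[Pixel]]:
    """Build an RGD image by replacing the blue channel with scaled depth.

    Depth is min-max scaled to 0..255 (an assumption); a constant depth map
    maps to 0 to avoid division by zero.
    """
    flat = [d for row in depth for d in row]
    d_min, d_max = min(flat), max(flat)
    span = d_max - d_min
    out: List[List[Pixel]] = []
    for rgb_row, d_row in zip(rgb, depth):
        new_row: List[Pixel] = []
        for (r, g, _b), d in zip(rgb_row, d_row):
            scaled = 0 if span == 0 else round(255 * (d - d_min) / span)
            new_row.append((r, g, scaled))  # blue channel discarded, depth stored instead
        out.append(new_row)
    return out
```

Keeping a three-channel layout lets RGD input reuse backbones designed for RGB images, whereas RGB-D input requires a fourth input channel.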

| Device (CUDA cores) | Origin | S. Kumra [34] (22 GFLOPs) | S. Yu [20] (18 GFLOPs) | R. Xu [26] (11.9 GFLOPs) | Our Method (10.1 GFLOPs) |
|---|---|---|---|---|---|
| Intel Core i7-9700F CPU (0) | USA | 176 | 165 | 61 | 375 |
| AVerMedia Jetson Xavier NX (384) | Taiwan | 47 | 92 | 53 | 48 |
| Dell GTX 1050 Ti (768) | Taiwan | 38 | 70 | 39 | 45 |
| NVIDIA TITAN V (5120) | USA | 9 | 22 | 32 | 18 |
| ASUS RTX 2080 Ti (4352) | Taiwan | 8 | 15 | 22 | 12 |
| GIGABYTE RTX 3070 Ti (6144) | Taiwan | 6 | 11 | 18 | 9 |
| ZOTAC RTX 4070 Ti (7680) | Hong Kong | 3 | 7 | 11 | 4 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.-C.; Chang, F.-Y.; Lai, C.-F. Integrating a Fast and Reliable Robotic Hooking System for Enhanced Stamping Press Processes in Smart Manufacturing. Automation 2025, 6, 55. https://doi.org/10.3390/automation6040055
