Robust Object Detection Under Adversarial Patch Attacks in Vision-Based Navigation

Abstract

1. Introduction

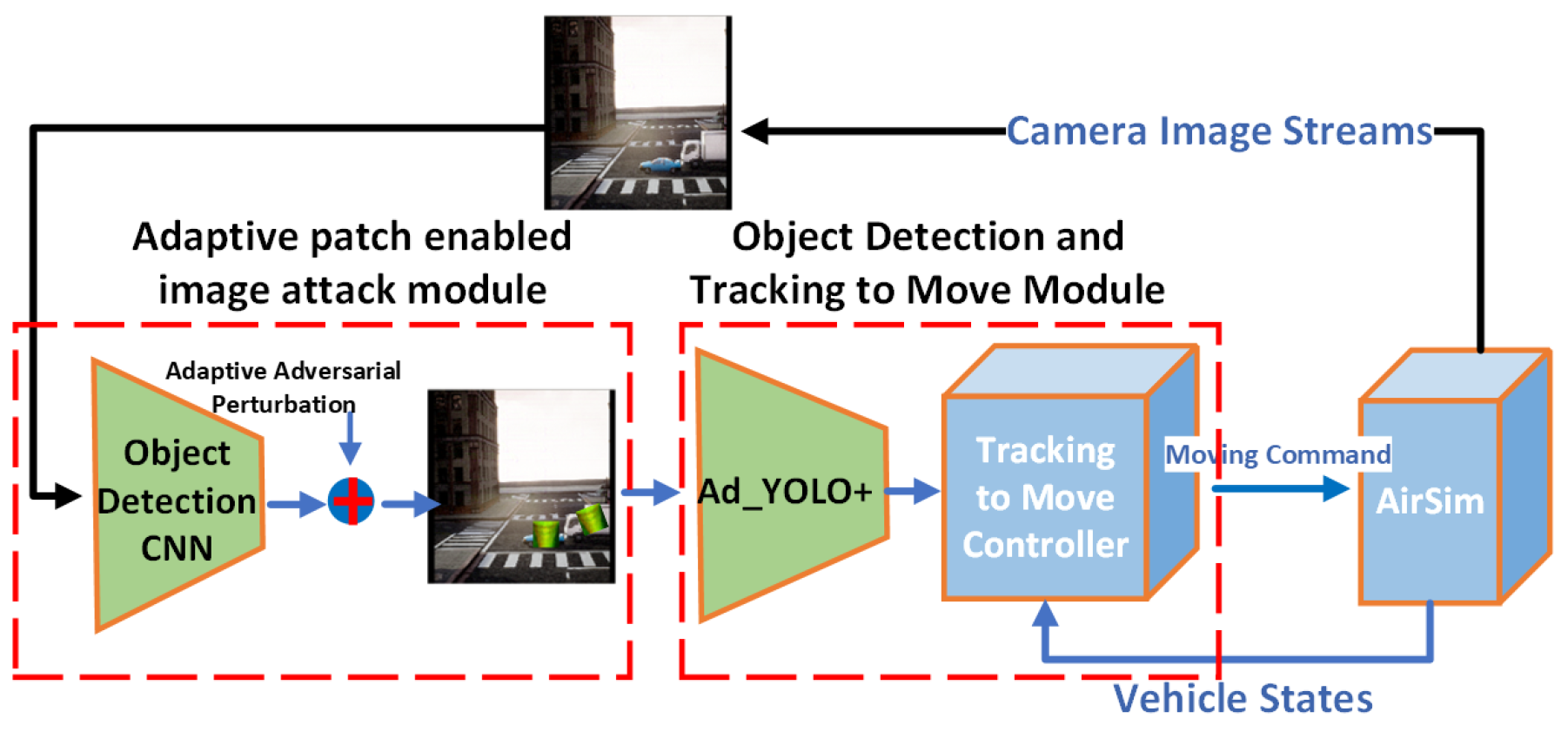

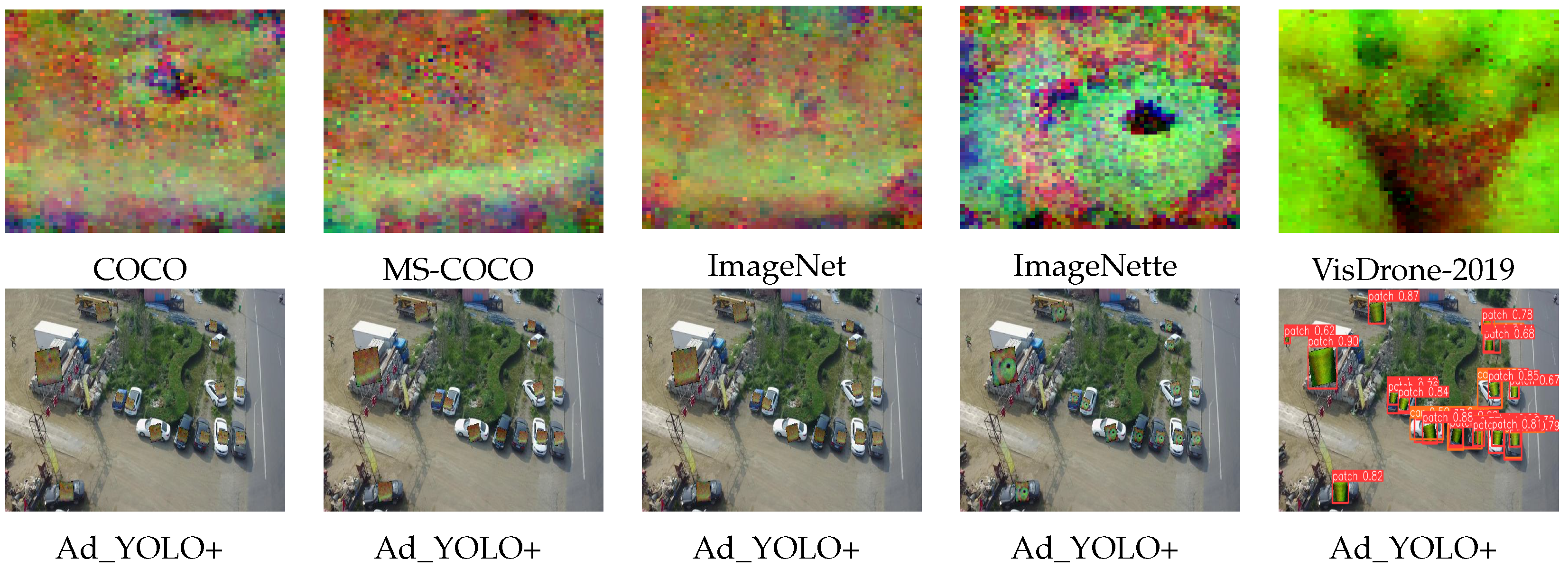

- Step 1: Adversarial Training Dataset Generation. To enable Ad_YOLO+ to detect both adversarial patches and target objects simultaneously, we constructed a specialized adversarial training dataset comprising patch-attacked images and the COCO dataset [31]. Using pretrained YOLOv5s weights, we first detect objects in each image frame and then adaptively apply adversarial patches to the detected bounding boxes, generating adversarial samples. The patch dataset is derived from VisDrone-2019 [32] and includes 80 non-targeted image attacks, ensuring diverse and challenging adversarial scenarios.

- Step 2: Patch Category Preparation. Despite its robustness, Ad_YOLO+ must still detect adversarial patches as a distinct category (e.g., both the adversarial patch and the attacked target in Table 1 are identified by Ad_YOLO+). To accomplish this, we introduced a new patch category in the training YAML file, enabling precise detection of adversarial patches.

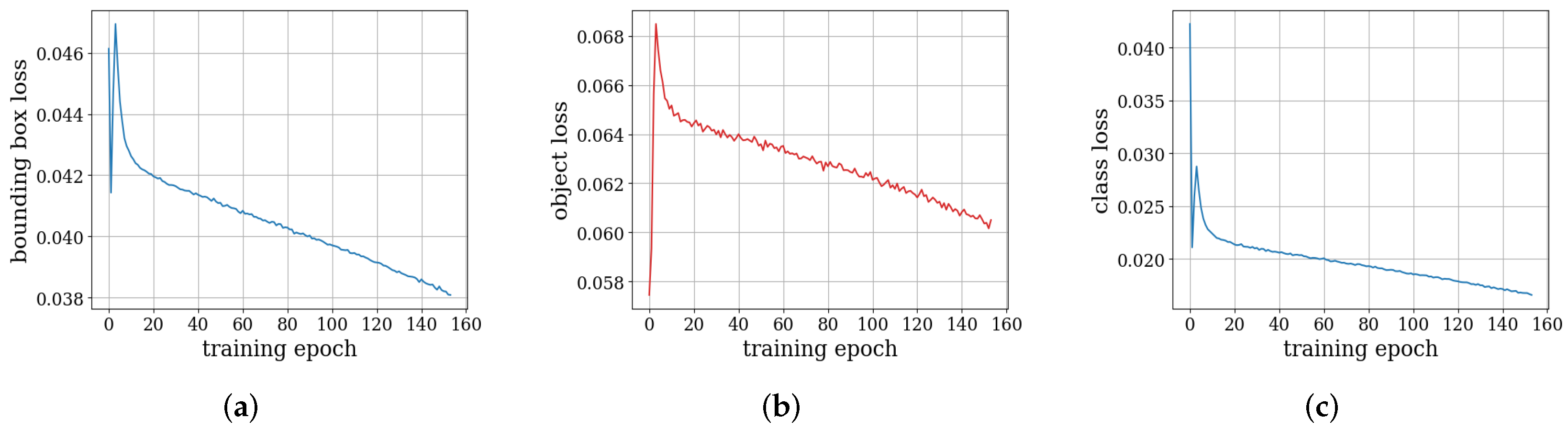

- Step 3: Training the Ad_YOLO+ Model. We use YOLOv5x as the backbone model and incorporate an additional patch detection layer as the final layer. The overall Ad_YOLO+ architecture includes a detection head and neck structure. The training schematic is illustrated in Figure 1. The network is trained for 300 epochs on a single GPU with a batch size of 16, using a specially designed adversarial training and validation dataset. The optimization process combines objectness loss, localization loss, and classification loss to enhance detection performance.
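As an illustration of Step 2, the sketch below appends a patch class to an existing class list and renders a small dataset description as YAML text. It is a minimal sketch under stated assumptions, not the authors' configuration: the helper names `add_patch_class` and `render_dataset_yaml`, the directory paths, and the three-class example list are hypothetical, and the key names (`train`, `val`, `nc`, `names`) are assumed to follow the layout commonly used by YOLOv5 dataset files.

```python
def add_patch_class(base_names, patch_name="patch"):
    """Return a new class list with the patch category appended last."""
    if patch_name in base_names:
        raise ValueError(f"{patch_name!r} is already a class name")
    return list(base_names) + [patch_name]


def render_dataset_yaml(train_dir, val_dir, names):
    """Render a minimal dataset description as YAML text (assumed key names)."""
    lines = [
        f"train: {train_dir}",
        f"val: {val_dir}",
        f"nc: {len(names)}",  # number of classes, including the patch class
        "names:",
    ]
    lines += [f"  {i}: {name}" for i, name in enumerate(names)]
    return "\n".join(lines) + "\n"


# Hypothetical usage with a shortened class list and placeholder paths.
names = add_patch_class(["person", "bicycle", "car"])
yaml_text = render_dataset_yaml("images/train", "images/val", names)
```

Appending the patch class last keeps the indices of the original object classes unchanged, so existing labels remain valid.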

- We propose Ad_YOLO+, an object detection model designed to be robust against adversarial patches of varying sizes and locations in a vision-based tracking task. By treating adversarial patches as a distinct category during training, Ad_YOLO+ effectively identifies both adversarial patches and target objects simultaneously.

- We evaluate Ad_YOLO+ in the AirSim virtual simulation environment. Our results show that Ad_YOLO+ significantly outperforms YOLOv5s at tracking targets while also detecting adversarial patches on the specially generated adversarial training dataset. Performance is reported as average precision at a fixed intersection over union (IoU) threshold.

- We implement a verification procedure to assess whether Ad_YOLO+ provides provable robustness against adaptive adversarial patches in real time under a defined threat model. Additionally, our comparison results demonstrate that Ad_YOLO+ outperforms the evaluated state-of-the-art approaches in defense against patch attacks, particularly in terms of object detection accuracy.
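The average precision metric mentioned in the second contribution depends on deciding whether a predicted box matches a ground-truth box, which is done by comparing their IoU against a threshold. The following is a minimal sketch of that check for axis-aligned boxes given as `(x1, y1, x2, y2)`. The function names and the default threshold of 0.5 are assumptions for illustration; the threshold used in the paper is not preserved in this text.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred, truth, threshold=0.5):
    """A detection counts as correct when its IoU meets the threshold."""
    return iou(pred, truth) >= threshold
```

For example, two 10 × 10 boxes offset horizontally by 5 pixels overlap in a 5 × 10 region, giving an IoU of 50 / 150, which falls below a threshold of 0.5.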

2. Relevant Work

2.1. Detection-Based Defense Methods for Adversarial Patches

2.2. Adversarial Training Method

2.3. Gradient Masking

3. The Problem Statement and Proposed Method

3.1. Adversarial Adaptive Patch Generation

3.2. Robust Object Detection Model: Ad_YOLO+

3.2.1. Ad_YOLO+ Framework

3.2.2. VisDrone-2019 Dataset for Adversarial Training

Algorithm 1. Adversarial training dataset generation.
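Since the body of Algorithm 1 is not reproduced here, the following is only a rough sketch of the idea described in Step 1: size a patch relative to a detected bounding box, paste it into the image, and emit a YOLO-format label for the patch. It is not the authors' algorithm. The centred placement, the `scale` parameter, the nearest-neighbour resize, the helper names, and the patch class index of 80 (one past the 80 COCO classes) are all assumptions made for illustration.

```python
import numpy as np

PATCH_CLASS_ID = 80  # assumption: index appended after the 80 COCO classes


def resize_nearest(img: np.ndarray, h: int, w: int) -> np.ndarray:
    """Nearest-neighbour resize using only NumPy indexing."""
    rows = np.arange(h) * img.shape[0] // h
    cols = np.arange(w) * img.shape[1] // w
    return img[rows[:, None], cols]


def apply_patch(image, box, patch, scale=0.3):
    """Paste `patch` at the centre of `box`, sized as `scale` of the box.

    `box` is (x1, y1, x2, y2) in pixels and is assumed to lie inside the image.
    Returns the attacked image and a YOLO-format label tuple
    (class, x_center, y_center, width, height) normalised to [0, 1].
    """
    H, W = image.shape[:2]
    x1, y1, x2, y2 = box
    pw = max(1, int(round((x2 - x1) * scale)))
    ph = max(1, int(round((y2 - y1) * scale)))
    cx, cy = (x1 + x2) // 2, (y1 + y2) // 2
    # Clamp the top-left corner so the patch stays inside the image.
    px = int(np.clip(cx - pw // 2, 0, W - pw))
    py = int(np.clip(cy - ph // 2, 0, H - ph))
    out = image.copy()
    out[py:py + ph, px:px + pw] = resize_nearest(patch, ph, pw)
    label = (PATCH_CLASS_ID, (px + pw / 2) / W, (py + ph / 2) / H, pw / W, ph / H)
    return out, label


# Hypothetical usage: a blank frame, one detected box, and a white patch.
frame = np.zeros((100, 200, 3), dtype=np.uint8)
white_patch = np.full((8, 8, 3), 255, dtype=np.uint8)
attacked, patch_label = apply_patch(frame, (50, 20, 150, 80), white_patch, scale=0.5)
```

In a full pipeline, the boxes would come from the pretrained detector and the returned label would be written alongside the labels of the original objects, so that both the patch and the attacked target are annotated in each sample.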

3.2.3. Adversarial Training Process

Algorithm 2. Adversarial training of Ad_YOLO+.
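The training objective is described as a combination of objectness, localization, and classification losses. One common way to combine such terms is a weighted sum, sketched below. This is an illustrative assumption rather than the paper's formulation: the `LossWeights` class, the `total_loss` function, and the gain values are placeholders, not values reported by the authors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossWeights:
    # Illustrative placeholder gains, not values reported in the paper.
    box: float = 0.05
    obj: float = 1.0
    cls: float = 0.5


def total_loss(box_loss: float, obj_loss: float, cls_loss: float,
               w: LossWeights = LossWeights()) -> float:
    """Weighted sum of localization, objectness, and classification losses."""
    return w.box * box_loss + w.obj * obj_loss + w.cls * cls_loss
```

Under this reading, the classification term covers the patch category as well as the ordinary object classes, so mislabelling a patch is penalised in the same way as mislabelling any other class.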

4. Evaluation of Effectiveness of Adversarial Patch Attacks

4.1. Adaptive Adversarial Patch Attack Evaluation

4.1.1. Adversarial Patches for Static Images

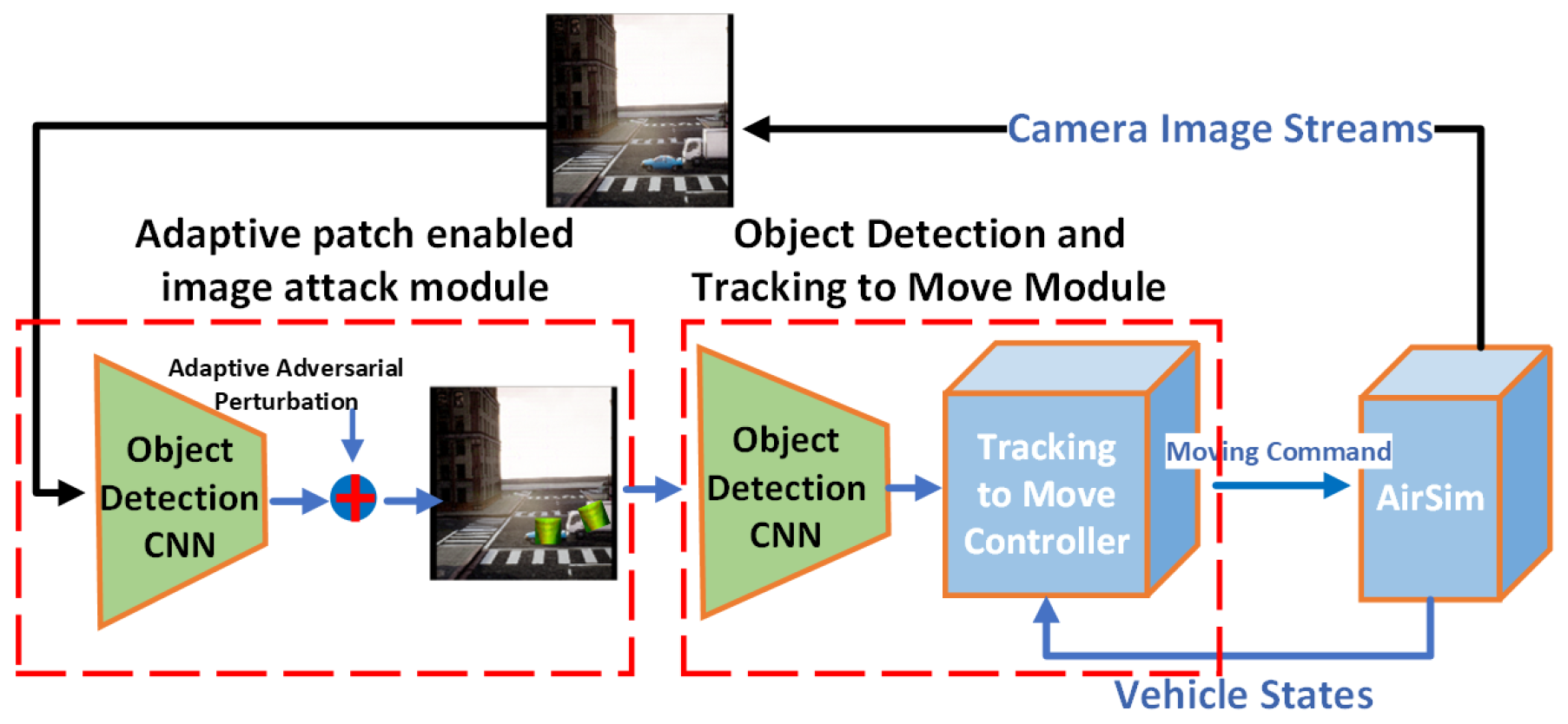

4.1.2. Simulation Environment for Patch Attack in Dynamic Setting

4.1.3. Attack Efficiency

5. Evaluation Results

5.1. Evaluation Settings

5.1.1. Dataset

5.1.2. Simulation Framework

5.1.3. Evaluation Metrics

5.1.4. Computation Setup

5.2. Performance Results for Ad_YOLO+

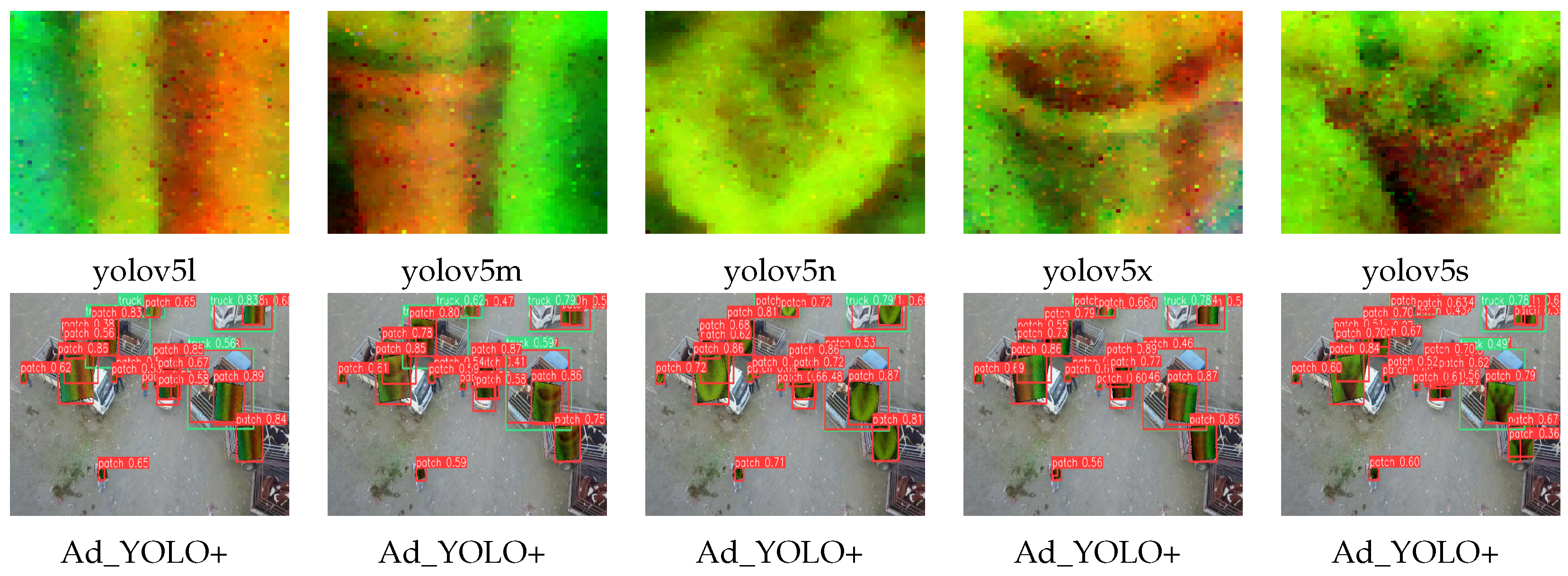

5.3. Ablation Study

6. Limitations

7. Discussion and Concluding Remarks

Author Contributions

Funding

Conflicts of Interest

References

1. Chakraborty, A.; Alam, M.; Dey, V.; Chattopadhyay, A.; Mukhopadhyay, D. A survey on adversarial attacks and defences. CAAI Trans. Intell. Technol. 2021, 6, 25–45.

2. Zhai, C.; Wu, W.; Xiao, Y.; Zhang, J.; Zhai, M. Jam traffic pattern of a multi-phase lattice hydrodynamic model integrating a continuous self-stabilizing control protocol to boycott the malicious cyber-attacks. Chaos Solitons Fractals 2025, 197, 116531.

3. Hayes, J. On visible adversarial perturbations & digital watermarking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1597–1604.

4. Chou, E.; Tramer, F.; Pellegrino, G. Sentinet: Detecting localized universal attacks against deep learning systems. In Proceedings of the IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 21 May 2020; pp. 48–54.

5. Liu, J.; Levine, A.; Lau, C.P.; Chellappa, R.; Feizi, S. Segment and complete: Defending object detectors against adversarial patch attacks with robust patch detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14973–14982.

6. Jing, L.; Wang, R.; Ren, W.; Dong, X.; Zou, C. PAD: Patch-agnostic defense against adversarial patch attacks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 24472–24481.

7. Xu, K.; Xiao, Y.; Zheng, Z.; Cai, K.; Nevatia, R. Patchzero: Defending against adversarial patch attacks by detecting and zeroing the patch. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 4632–4641.

8. Xiang, C.; Valtchanov, A.; Mahloujifar, S.; Mittal, P. Objectseeker: Certifiably robust object detection against patch hiding attacks via patch-agnostic masking. In Proceedings of the 2023 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 21–25 May 2023; pp. 1329–1347.

9. McCoyd, M.; Park, W.; Chen, S.; Shah, N.; Roggenkemper, R.; Hwang, M.; Liu, J.X.; Wagner, D. Minority reports defense: Defending against adversarial patches. In Proceedings of the International Conference on Applied Cryptography and Network Security, Rome, Italy, 19–22 October 2020; pp. 564–582.

10. Naseer, M.; Khan, S.; Porikli, F. Local gradients smoothing: Defense against localized adversarial attacks. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1300–1307.

11. Chen, Z.; Dash, P.; Pattabiraman, K. Jujutsu: A two-stage defense against adversarial patch attacks on deep neural networks. In Proceedings of the ACM Asia Conference on Computer and Communications Security, Melbourne, VIC, Australia, 10–14 July 2023; pp. 689–703.

12. Chattopadhyay, N.; Guesmi, A.; Shafique, M. Anomaly unveiled: Securing image classification against adversarial patch attacks. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 27–30 October 2024; pp. 929–935.

13. Bunzel, N.; Frick, R.A.; Klause, G.; Schwarte, A.; Honermann, J. Signals are all you need: Detecting and mitigating digital and real-world adversarial patches using signal-based features. In Proceedings of the 2nd ACM Workshop on Secure and Trustworthy Deep Learning Systems, Singapore, 2–20 July 2024; pp. 24–34.

14. Mao, Z.; Chen, S.; Miao, Z.; Li, H.; Xia, B.; Cai, J.; Yuan, W.; You, X. Enhancing robustness of person detection: A universal defense filter against adversarial patch attacks. Comput. Secur. 2024, 146, 104066.

15. Kang, C.; Dong, Y.; Wang, Z.; Ruan, S.; Chen, Y.; Su, H.; Wei, X. DIFFender: Diffusion-Based Adversarial Defense against Patch Attacks. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 130–147.

16. Xiang, C.; Mahloujifar, S.; Mittal, P. PatchCleanser: Certifiably robust defense against adversarial patches for any image classifier. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 2065–2082.

17. Xiang, C.; Wu, T.; Dai, S.; Petit, J.; Jana, S.; Mittal, P. PatchCURE: Improving certifiable robustness, model utility, and computation efficiency of adversarial patch defenses. In Proceedings of the 33rd USENIX Security Symposium (USENIX Security 24), Philadelphia, PA, USA, 14–16 August 2024; pp. 3675–3692.

18. Tarchoun, B.; Ben Khalifa, A.; Mahjoub, M.A.; Abu-Ghazaleh, N.; Alouani, I. Jedi: Entropy-based localization and removal of adversarial patches. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 4087–4095.

19. Li, Y.; Duan, M.; Xiao, B. Adv-Inpainting: Generating natural and transferable adversarial patch via attention-guided feature fusion. arXiv 2023, arXiv:2308.05320.

20. Zhang, Y.; Zhao, S.; Wei, X.; Wei, S. Defending adversarial patches via joint region localizing and inpainting. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Urumqi, China, 18–20 October 2024; pp. 236–250.

21. Huang, Y.; Li, Y. Zero-shot certified defense against adversarial patches with vision transformers. arXiv 2021, arXiv:2111.10481.

22. Metzen, J.H.; Yatsura, M. Efficient certified defenses against patch attacks on image classifiers. arXiv 2021, arXiv:2102.04154.

23. Brendel, W.; Bethge, M. Approximating CNNs with bag-of-local-features models works surprisingly well on imagenet. arXiv 2019, arXiv:1904.00760.

24. Zhang, Z.; Yuan, B.; McCoyd, M.; Wagner, D. Clipped bagnet: Defending against sticker attacks with clipped bag-of-features. In Proceedings of the IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 21 May 2020; pp. 55–61.

25. Xiang, C.; Mittal, P. Patchguard++: Efficient provable attack detection against adversarial patches. arXiv 2021, arXiv:2104.12609.

26. Xiang, C.; Mittal, P. Detectorguard: Provably securing object detectors against localized patch hiding attacks. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, Virtual, 15–19 November 2021; pp. 3177–3196.

27. Levine, A.; Feizi, S. (De) Randomized smoothing for certifiable defense against patch attacks. Adv. Neural Inf. Process. Syst. 2020, 33, 6465–6475.

28. Lecuyer, M.; Atlidakis, V.; Geambasu, R.; Hsu, D.; Jana, S. Certified robustness to adversarial examples with differential privacy. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 656–672.

29. Xiang, C.; Bhagoji, A.N.; Sehwag, V.; Mittal, P. PatchGuard: A provably robust defense against adversarial patches via small receptive fields and masking. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Online, 11–13 August 2021; pp. 2237–2254.

30. Ji, N.; Feng, Y.; Xie, H.; Xiang, X.; Liu, N. Adversarial yolo: Defense human detection patch attacks via detecting adversarial patches. arXiv 2021, arXiv:2103.08860.

31. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

32. Du, D.; Zhu, P.; Wen, L.; Bian, X.; Lin, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The vision meets drone object detection in image challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

33. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

34. Liu, A.; Wang, J.; Liu, X.; Cao, B.; Zhang, C.; Yu, H. Bias-based universal adversarial patch attack for automatic check-out. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIII 16. pp. 395–410.

35. Akhtar, N.; Mian, A.; Kardan, N.; Shah, M. Advances in adversarial attacks and defenses in computer vision: A survey. IEEE Access 2021, 9, 155161–155196.

36. Thys, S.; Van Ranst, W.; Goedemé, T. Fooling automated surveillance cameras: Adversarial patches to attack person detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

37. Brown, T.B.; Mané, D.; Roy, A.; Abadi, M.; Gilmer, J. Adversarial patch. arXiv 2017, arXiv:1712.09665.

38. Chakraborty, A.; Alam, M.; Dey, V.; Chattopadhyay, A.; Mukhopadhyay, D. Adversarial attacks and defences: A survey. arXiv 2018, arXiv:1810.00069.

39. Athalye, A.; Carlini, N.; Wagner, D. Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 274–283.

40. Lei, X.; Cai, X.; Lu, C.; Jiang, Z.; Gong, Z.; Lu, L. Using frequency attention to make adversarial patch powerful against person detector. IEEE Access 2022, 11, 27217–27225.

41. Shrestha, S.; Pathak, S.; Viegas, E.K. Towards a robust adversarial patch attack against unmanned aerial vehicles object detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 3256–3263.

42. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

43. Howard, J. A Smaller Subset of 10 Easily Classified Classes from Imagenet, and a Little More French. 2020. Available online: https://github.com/fastai/imagenette (accessed on 1 February 2024).

| Attacked Image | PatchZero [7] | DIFFender [15] | Jedi [18] |
|---|---|---|---|
| Ad_YOLO+ | PatchZero [7] + YOLOv5x [33] | DIFFender [15] + YOLOv5x [33] | Jedi [18] + YOLOv5x [33] |

| Detector | Yolov5n | Yolov5s | Yolov5m | Yolov5l | Yolov5x | |
|---|---|---|---|---|---|---|
| Weights | | | | | | |
| Yolov5n | 90.8% | | | | | |
| Yolov5s | 79.8% | | | | | |
| Yolov5m | 59.13% | | | | | |
| Yolov5l | 78.91% | | | | | |
| Yolov5x | 85.4% | | | | | |
| Yolov5n | 83.90% | | | | | |
| Yolov5s | 83.60% | | | | | |
| Yolov5m | 87.63% | | | | | |
| Yolov5l | 84.57% | | | | | |
| Yolov5x | 85.89% | | | | | |

| Weights | Yolov5n | Yolov5s | Yolov5m | Yolov5l | Yolov5x | |
|---|---|---|---|---|---|---|
| Evaluation | | | | | | |
| Clean Accuracy | | | | | | |
| Robust Accuracy | | | | | | |
| Lost Prediction | | | | | | |

| Class | Giraffe | Zebra | Bird | Boat | Bottle | Bus | Dog | Horse | Bike | Person | Plant |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5x | | | | | | | | | | | |
| Ad_YOLO | | | | | | | | | | | |
| Ad_YOLO+ | 83.67 | 92.22 | 59.22 | 55.72 | 62.60 | 84.74 | 79.59 | 89.42 | 64.94 | 81.29 | 56.77 |

| Class | Bus | Car | Cat | Chair | Cow | Table | No Sofa | Train | tv | Sheep | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5x | | | | | | | | | | | |
| Ad_YOLO | | | | | | | | | | | |
| Ad_YOLO+ | 84.74 | 71.44 | 90.02 | 57.43 | 85.51 | 45.85 | 73.94 | 90.72 | 82.85 | 80.50 | 74.63 |

| Backbone Model | Model Convergence Efficiency | Image Processing Speed | mAP | Clean Accuracy |
|---|---|---|---|---|
| Ad_YOLOv5n | h/Epoch | 60 fps | | |
| Ad_YOLOv5s | h/Epoch | 48 fps | | |
| Ad_YOLOv5m | h/Epoch | 35 fps | | |
| Ad_YOLOv5l | h/Epoch | 28 fps | | |
| Ad_YOLOv5x | 6.23 h/Epoch | 18 fps | 74.63% | 85.4% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gu, H.; Yoon, H.J.; Jafarnejadsani, H. Robust Object Detection Under Adversarial Patch Attacks in Vision-Based Navigation. Automation 2025, 6, 44. https://doi.org/10.3390/automation6030044
