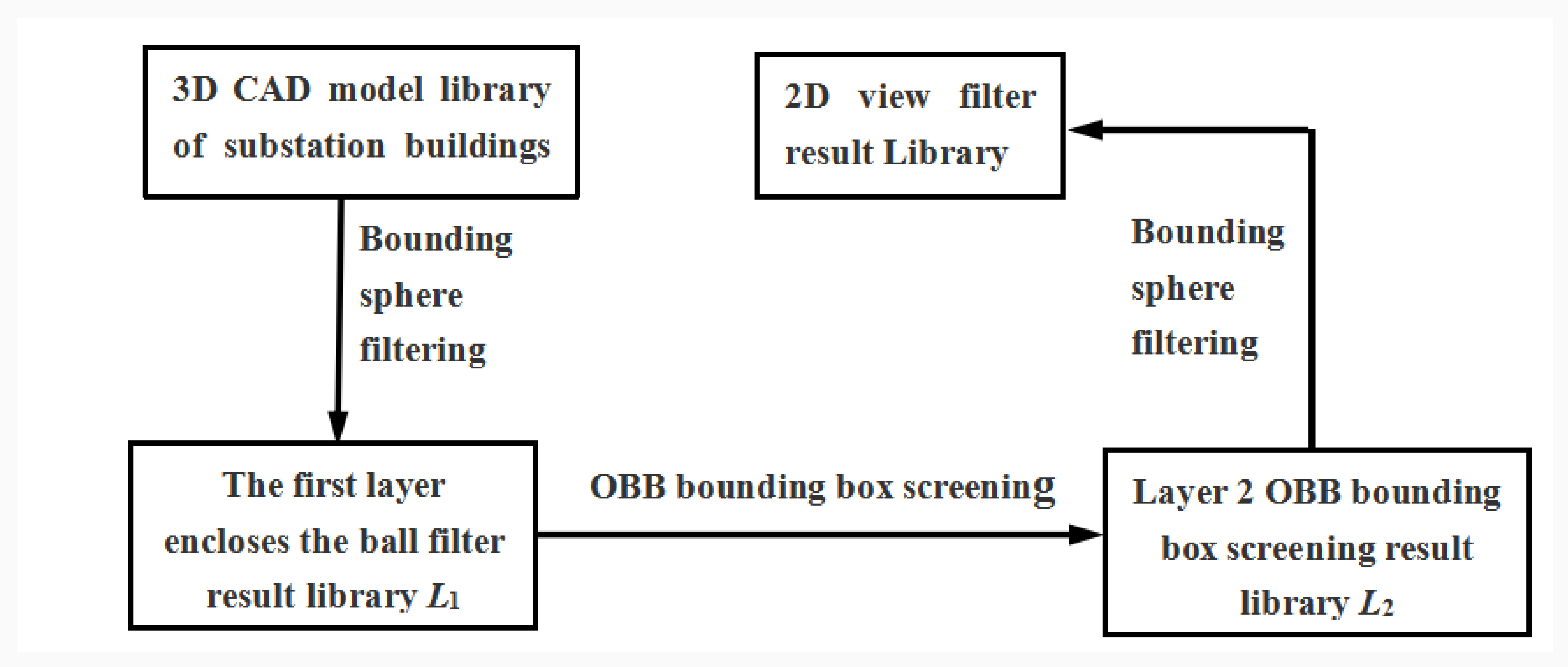

2.1. Screening of the Double-Layer Bounding Box Model Based on the Bounding Sphere and OBB Bounding Box

The bounding box algorithm solves for an optimal closed space that encloses a set of discrete points. Commonly used bounding box algorithms include the bounding sphere, the axis-aligned bounding box (AABB bounding box), the oriented bounding box (OBB bounding box), and so on.

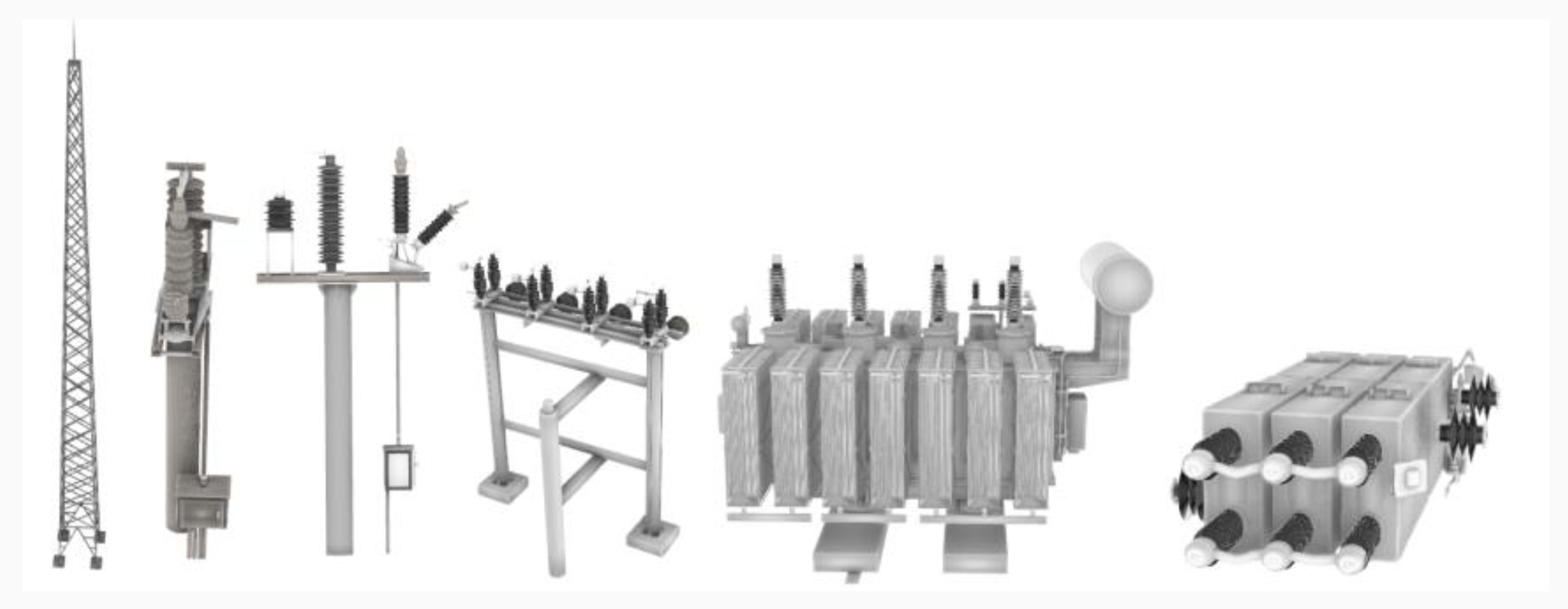

The bounding sphere is the smallest sphere that contains the object. It is rotationally invariant: when the object rotates, the bounding sphere does not need to be updated. The AABB bounding box is the smallest axis-aligned cuboid that contains the object. Because its faces stay aligned with the coordinate axes, it cannot rotate with the object and is not rotationally invariant. The OBB bounding box can take an arbitrary orientation, so it can enclose the object as tightly as possible according to the object's shape, and it is rotationally invariant. Because the attitudes of the CAD models in the transformer substation building 3D model library differ from that of the input point cloud data, the bounding boxes of the models and of the point cloud data must be rotationally invariant during 3D CAD model screening. Bounding spheres and OBB bounding boxes are therefore the best choices, as shown in Figure 2.

The 3D CAD model retrieval technology studied in this paper searches the model library for a model similar to the input 3D point cloud data. The advantage of using the OBB bounding box as the feature descriptor for 3D model retrieval is that it can retrieve a model of the same size as the existing point cloud from the model library and provide data support for the later refined retrieval. However, creating the OBB bounding box is complex, and retrieval takes a long time. By comparison, the bounding sphere is fast to create and simple in structure. Using the bounding sphere as the feature descriptor retrieves the CAD models whose bounding sphere radius is consistent with that of the current point cloud, but obtaining a 3D CAD model of the same size as the current point cloud data requires further retrieval. To control the retrieval time while ensuring accuracy, combining the bounding sphere and the OBB bounding box is the most sensible choice. The first layer of rough screening is performed with the bounding sphere to reduce the number of CAD models retrieved from the library. Then, the OBB bounding box is used for further filtering to obtain a CAD model that is consistent with the size of the current point cloud.

- (1)

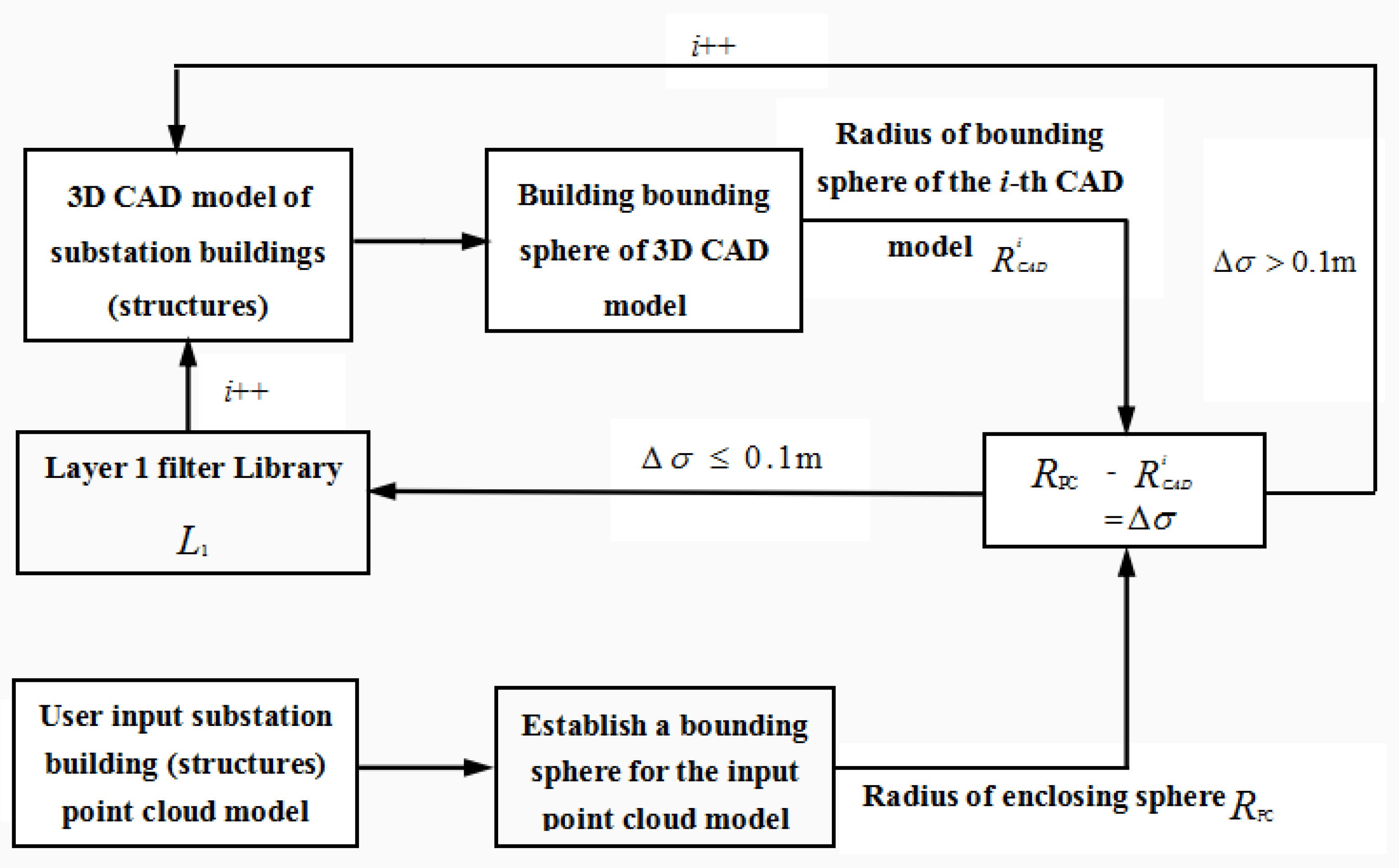

The first-layer model screening (model screening based on the bounding sphere).

Before the model screening, bounding spheres are first built separately for each 3D CAD model in the substation building model library and for the current point cloud data. Then, the 3D CAD models whose bounding sphere radius is consistent with that of the point cloud data are selected, which provides data support for the second-layer model screening. To construct the bounding sphere of a 3D CAD model or of point cloud data, we only need to determine the center and radius of the sphere. We calculate the maximum and minimum values of the X, Y, and Z coordinates of the vertices of all elements in the set of basic geometric elements that make up the model (the vertices of the triangular patches of the 3D CAD model or the points of the point cloud data model), and take the mean of the maximum and minimum values as the center of the sphere. Then, we take the distance between the maximum and the minimum of X, Y, and Z as the diameter of the sphere [8].

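The equation of the bounding sphere:

$$(x - O_x)^2 + (y - O_y)^2 + (z - O_z)^2 = R^2$$

$$O_x = \frac{x_{\max} + x_{\min}}{2}, \quad O_y = \frac{y_{\max} + y_{\min}}{2}, \quad O_z = \frac{z_{\max} + z_{\min}}{2}$$

$$R = \frac{1}{2}\sqrt{(x_{\max} - x_{\min})^2 + (y_{\max} - y_{\min})^2 + (z_{\max} - z_{\min})^2}$$

where $x_{\max}$, $x_{\min}$, $y_{\max}$, $y_{\min}$, $z_{\max}$, and $z_{\min}$ represent the maximum and minimum values of the model's vertex projection on the x, y, and z coordinates. O is the center of the bounding sphere. $O_x$, $O_y$, and $O_z$ are the components of the center coordinates of the bounding sphere. R is the radius of the bounding sphere. The steps of using the bounding sphere for the model screening are as follows: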

When the radius of the bounding sphere of the current CAD model in the 3D model library is the same as that of the point cloud data, the two are considered similar, and the model is added to the first-layer screening result library; otherwise, the current model is not similar to the point cloud data. The 3D CAD models similar to the 3D point cloud data are found sequentially from the 3D model library, which provides data support for the second-layer OBB bounding box screening. This greatly reduces the amount of data in the OBB bounding box screening and improves the screening speed. The model screening process based on the bounding sphere is shown in Figure 3.
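As a minimal sketch of the first-layer screening described above, the following Python code builds a bounding sphere from the coordinate extremes and keeps the library models whose radius agrees with that of the point cloud. The function names, the dictionary-based library, and the tolerance `tol` are assumptions made for illustration; they are not taken from the paper.

```python
import math

def bounding_sphere(points):
    """Return (center, radius) computed from the axis-wise extremes of the points."""
    xs, ys, zs = zip(*points)
    lo = (min(xs), min(ys), min(zs))
    hi = (max(xs), max(ys), max(zs))
    # Center: mean of the maximum and minimum on each axis.
    center = tuple((a + b) / 2.0 for a, b in zip(lo, hi))
    # Diameter: distance between the minimum and maximum corners.
    radius = math.dist(lo, hi) / 2.0
    return center, radius

def first_layer_screen(library, cloud_points, tol=0.1):
    """Keep the names of models whose sphere radius is within tol of the cloud's.

    tol is a hypothetical tolerance, not a value from the paper.
    """
    _, r_cloud = bounding_sphere(cloud_points)
    return [name for name, verts in library.items()
            if abs(bounding_sphere(verts)[1] - r_cloud) <= tol]

cloud = [(0.0, 0.0, 0.0), (2.0, 2.0, 1.0)]
library = {
    "model_a": [(1.0, 1.0, 1.0), (3.0, 3.0, 2.0)],   # radius 1.5
    "model_b": [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)],  # radius 5.0
}
print(first_layer_screen(library, cloud))  # ['model_a']
```

Because only six extreme values are needed per model, this pass is linear in the number of vertices, which is what makes it suitable as a coarse filter before the OBB stage.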

- (2)

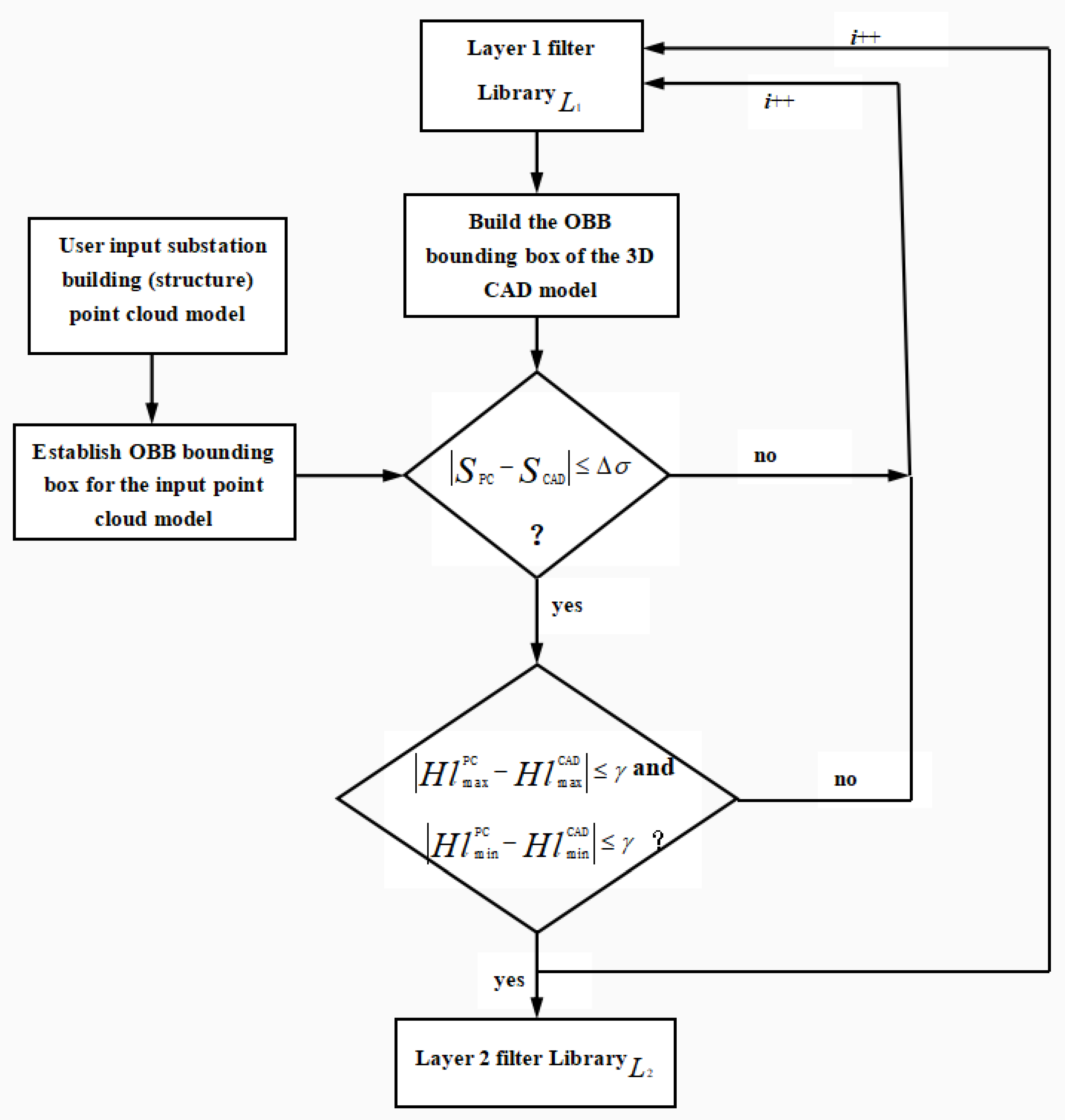

The second layer of the model screening (model screening based on the OBB bounding box).

After the first-layer model screening, the 3D CAD models whose bounding sphere radius equals that of the point cloud model of the substation building have been selected. However, a large number of 3D CAD models in the model library share the same bounding sphere radius as the point cloud model of the substation building, which is not conducive to the later comparison of model details. Therefore, a second model screening is carried out on the first-layer result to select the 3D CAD models that are similar in size to the point cloud model, which reduces the amount of data for the later refined comparison and improves retrieval efficiency.

When constructing the OBB bounding box for a 3D CAD model in the first-layer screening result, the 3D CAD model is regarded as a complex structure composed of many triangular patches, so the vertices of the triangular patches provide the data for creating the OBB bounding box of the 3D CAD model. There are many algorithms for constructing the OBB bounding box. In this paper, principal component analysis (PCA) was used to obtain the three main directions of the triangle vertices and create the OBB bounding box [9]. The specific method is as follows: obtain the centroid of the vertices of the triangular patches, calculate the covariance, construct the covariance matrix, and compute the eigenvalues and eigenvectors of the covariance matrix. The eigenvectors are the main directions of the OBB bounding box.

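First, the mean value of the vertices of the triangular patches is calculated, where n is the number of vertices and $p_i$ is the i-th vertex:

$$\bar{p} = \frac{1}{n}\sum_{i=1}^{n} p_i$$

After obtaining the mean value of the vertices of the triangular patches, the covariance matrix C of the vertices can be obtained:

$$C = \frac{1}{n}\sum_{i=1}^{n} (p_i - \bar{p})(p_i - \bar{p})^{T}$$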
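The two quantities above can be sketched in plain Python as follows. The function names are hypothetical, and the 1/n normalization of the covariance is an assumption; a different normalization only rescales the eigenvalues and leaves the principal directions unchanged.

```python
def vertex_mean(points):
    """Mean of the vertices, component by component."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))

def covariance_matrix(points):
    """3x3 covariance matrix of the vertices (1/n normalization assumed)."""
    n = len(points)
    m = vertex_mean(points)
    c = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[k] - m[k] for k in range(3)]  # offset from the mean
        for i in range(3):
            for j in range(3):
                c[i][j] += d[i] * d[j] / n
    return c

# Four corners of a 2 x 4 rectangle in the z = 0 plane.
rect = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 4.0, 0.0), (2.0, 4.0, 0.0)]
print(vertex_mean(rect))        # (1.0, 2.0, 0.0)
print(covariance_matrix(rect))  # [[1.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 0.0]]
```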

The Jacobi iterative algorithm is used to solve for the eigenvectors of the covariance matrix C [10,11], and then the Schmidt orthogonalization algorithm is used to orthogonalize the eigenvectors, yielding the orthogonalized eigenvectors b1, b2, and b3 [12].
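To illustrate this step, the sketch below applies a classical Jacobi rotation scheme to a symmetric 3 × 3 matrix and then orthonormalizes the resulting eigenvectors with Gram-Schmidt. It is one possible realization under stated assumptions, not the implementation used in the paper: the function names, the pivot rule (largest off-diagonal element), the rotation limit, and the ordering of the axes by decreasing eigenvalue are all choices made for this example.

```python
import math

def jacobi_eigen(a, max_rotations=50, eps=1e-12):
    """Eigenvalues and eigenvectors (columns of v) of a symmetric 3x3 matrix."""
    a = [row[:] for row in a]
    v = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    pairs = [(0, 1), (0, 2), (1, 2)]
    for _ in range(max_rotations):
        # Pivot on the largest off-diagonal element.
        p, q = max(pairs, key=lambda ij: abs(a[ij[0]][ij[1]]))
        if abs(a[p][q]) < eps:
            break
        theta = 0.5 * math.atan2(2.0 * a[p][q], a[q][q] - a[p][p])
        c, s = math.cos(theta), math.sin(theta)
        for k in range(3):  # column update: A <- A J, V <- V J
            a[k][p], a[k][q] = c * a[k][p] - s * a[k][q], s * a[k][p] + c * a[k][q]
            v[k][p], v[k][q] = c * v[k][p] - s * v[k][q], s * v[k][p] + c * v[k][q]
        for k in range(3):  # row update: A <- J^T A
            a[p][k], a[q][k] = c * a[p][k] - s * a[q][k], s * a[p][k] + c * a[q][k]
    return [a[i][i] for i in range(3)], v

def gram_schmidt(vectors):
    """Orthonormalize linearly independent vectors in the given order."""
    basis = []
    for vec in vectors:
        w = list(vec)
        for b in basis:
            proj = sum(x * y for x, y in zip(w, b))
            w = [x - proj * y for x, y in zip(w, b)]
        norm = math.sqrt(sum(x * x for x in w))
        basis.append([x / norm for x in w])
    return basis

def obb_axes(cov):
    """Return b1, b2, b3 ordered by decreasing eigenvalue (ordering is an assumption)."""
    vals, vecs = jacobi_eigen(cov)
    order = sorted(range(3), key=lambda i: vals[i], reverse=True)
    columns = [[vecs[k][i] for k in range(3)] for i in order]
    return gram_schmidt(columns)
```

For a symmetric matrix the Jacobi eigenvectors are already orthogonal up to rounding, so the Gram-Schmidt pass mainly guards against numerical drift.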

b1, b2, and b3 are taken as the coordinate axes of the OBB bounding box. The X, Y, and Z coordinates of the triangular patch vertices of the current 3D CAD model are projected onto the calculated coordinate axes b1, b2, and b3, respectively, to obtain the triangular patch vertex data in the new coordinate system.

The projected values are the vertex coordinates of the 3D CAD model in the b1, b2, b3 coordinate system, and the origin of the b1, b2, b3 coordinate system is o.

We obtained the maximum absolute values of the vertex coordinates along b1, b2, and b3 in the b1, b2, b3 coordinate system, respectively, and took these three maxima as the half-lengths of the OBB bounding box, thereby obtaining the OBB bounding box of the 3D CAD model.

The construction method of the OBB bounding box of the 3D point cloud model is the same as that of the 3D CAD model, which will not be described in detail here.
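The projection and half-length computation can be sketched as below. This is an illustrative example only: the function name is hypothetical, the axes are assumed to be unit vectors, and the origin o is passed in explicitly because the text does not state here how it is chosen.

```python
def obb_half_lengths(points, axes, origin):
    """Half-lengths of the OBB along each axis.

    Each point is expressed relative to the origin, projected onto the
    unit axes b1, b2, b3, and the largest absolute coordinate per axis
    is kept as the half-length.
    """
    half = [0.0, 0.0, 0.0]
    for p in points:
        d = [p[k] - origin[k] for k in range(3)]
        for i, b in enumerate(axes):
            coord = sum(d[k] * b[k] for k in range(3))  # dot product
            half[i] = max(half[i], abs(coord))
    return half

# Axis-aligned example: the axes coincide with x, y, z.
axes = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
corners = [(2.0, 1.0, 0.5), (-2.0, -1.0, -0.5), (2.0, -1.0, 0.5)]
print(obb_half_lengths(corners, axes, (0.0, 0.0, 0.0)))  # [2.0, 1.0, 0.5]
```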

After building the OBB bounding boxes for the point cloud data model and the 3D CAD models, the 3D CAD models of the same size as the OBB bounding box of the current 3D point cloud data are selected from the first-layer screening result library (L1). The process is shown in Figure 4, where $S_{PC}$ represents the area of the OBB bounding box of the 3D point cloud of the substation building, $S_{CAD}$ represents the area of the OBB bounding box of the CAD model in L1, and the threshold is 0.1 m. Figure 4 also uses the maximum and minimum half-lengths of the OBB bounding box of the current 3D point cloud data and the maximum and minimum half-lengths of the OBB bounding box of the CAD model in L1.
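The exact decision rule of Figure 4 is not reproduced in the text, so the following is only a sketch of how the listed quantities might be compared. It assumes that a model passes when its maximum and minimum half-lengths each differ from those of the point cloud by at most 0.1 m and its OBB surface area differs by at most `area_tol`. The function names, the dictionary layout, and `area_tol` are hypothetical.

```python
def obb_area(half):
    """Surface area of a box given its three half-lengths."""
    a, b, c = (2.0 * h for h in half)
    return 2.0 * (a * b + b * c + c * a)

def second_layer_screen(l1, cloud_half, eps=0.1, area_tol=5.0):
    """Filter the first-layer result library l1 (name -> half-lengths).

    eps is the 0.1 m threshold from the text; area_tol is a hypothetical
    tolerance on the surface area, introduced only for this sketch.
    """
    s_pc = obb_area(cloud_half)
    kept = []
    for name, half in l1.items():
        same_max = abs(max(half) - max(cloud_half)) <= eps
        same_min = abs(min(half) - min(cloud_half)) <= eps
        same_area = abs(obb_area(half) - s_pc) <= area_tol
        if same_max and same_min and same_area:
            kept.append(name)
    return kept

l1 = {"model_a": (5.05, 3.0, 1.95), "model_b": (5.0, 3.0, 1.0)}
print(second_layer_screen(l1, (5.0, 3.0, 2.0)))  # ['model_a']
```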

2.2. Detailed Retrieval of the 3D CAD Model

The double-layer bounding box model screening process, based on the bounding sphere and OBB bounding box, belongs to the similarity screening stage of the global features of the 3D CAD models in the model library (using the point cloud data model). This method ignores the local features of the model and lacks the ability to distinguish the local details of the model. The bounding box screening method can only filter out 3D CAD models with similar appearances to the point cloud data model from the model library. Some of these models may only have similar external sizes to the point cloud data, while the local details are very different from the point cloud data. Therefore, after the preliminary screening of the bounding box, it is necessary to refine the local details of the model to screen out a 3D CAD model that is more similar to the current point cloud data. Thus, this paper adopted a view-based 3D model retrieval method on the basis of previous research results.

A view-based 3D model retrieval algorithm is a content-based 3D retrieval method that can significantly reduce the complexity of the algorithm [13]. The core concept is to transform the existing model into a set of two-dimensional images through a group of virtual cameras and then obtain the model similarity by calculating the distance between the two-dimensional images [14].

- (1)

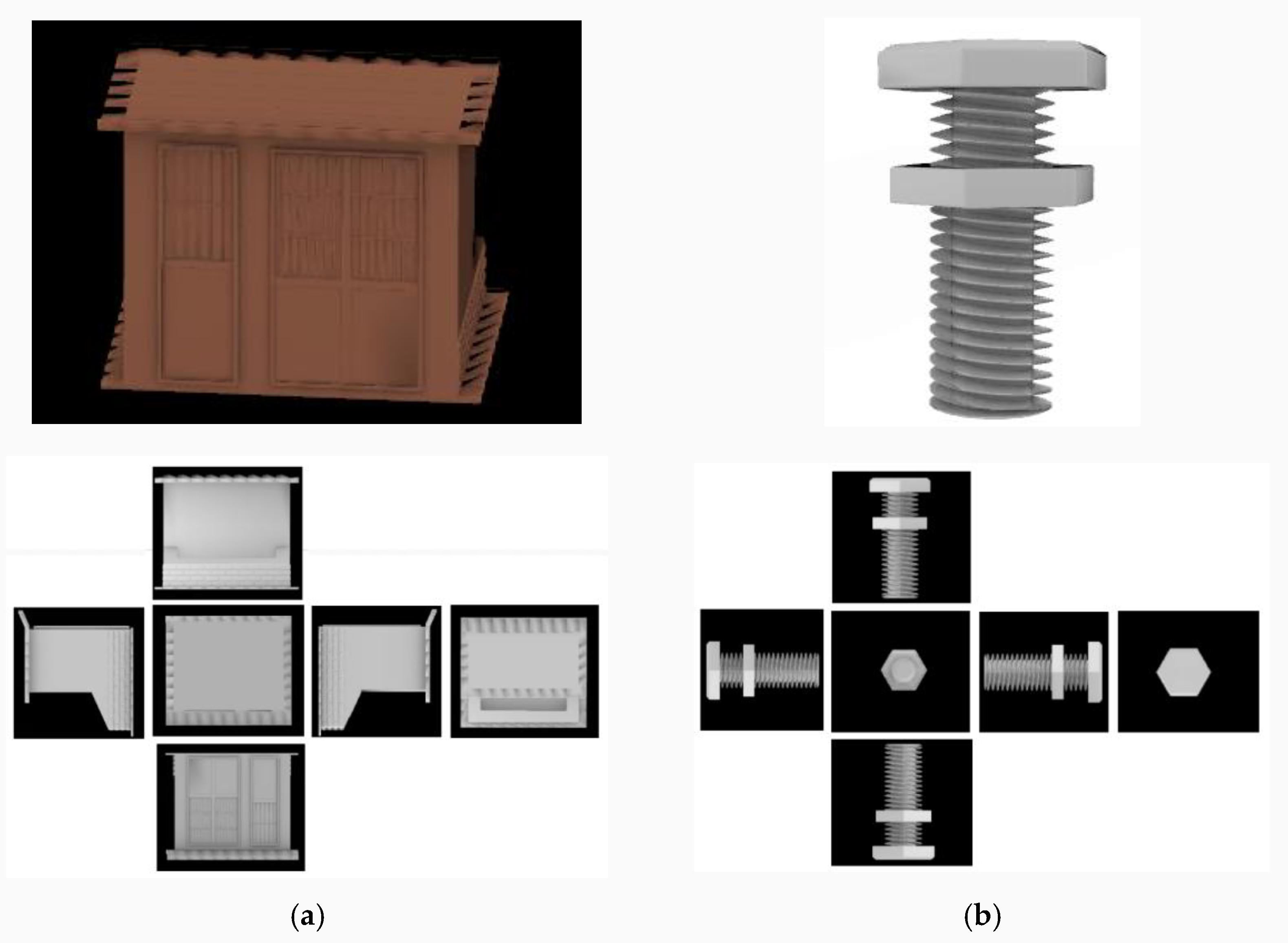

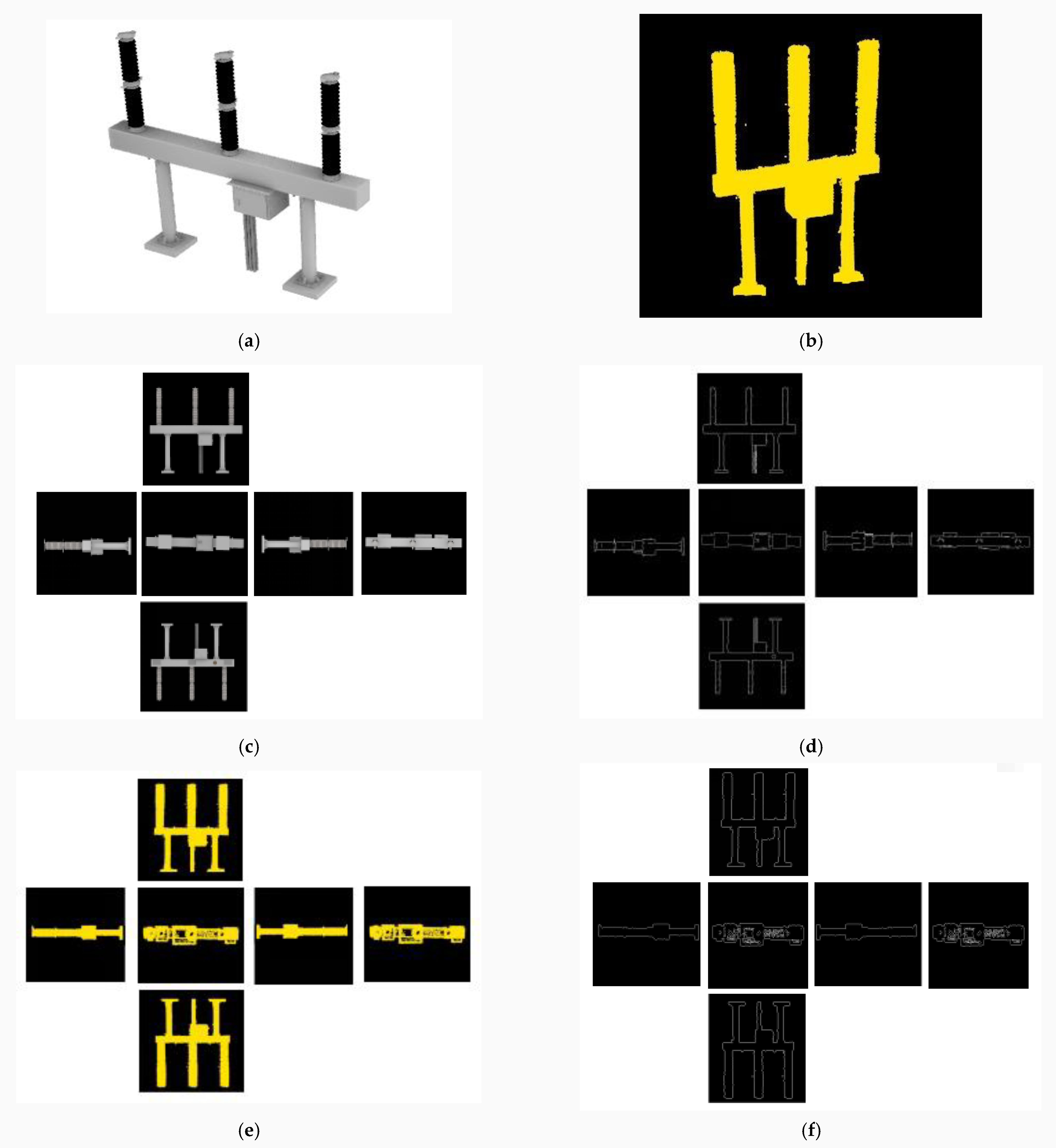

Generate a 2D image of the model.

View-based 3D model retrieval algorithms find it difficult to balance the efficiency of the retrieval process against the accuracy of the retrieval results. The accuracy of the retrieval results increases with the level of detail in the description of the 3D model features, i.e., with the number of 2D images. However, storing 2D images requires a large amount of space, and comparing images incurs computational costs, which reduces the retrieval efficiency. Therefore, when collecting the 2D images of 3D models, we should use algorithms that are relatively simple and can still fully describe the details of the 3D models [15]. Considering retrieval time and efficiency, the hexahedron-based view acquisition method proposed by Shih et al. [16] is appropriate for acquiring 2D views of 3D models. First, a regular hexahedron with the same centroid as the model and a side length of L is constructed. Then, the model is mapped onto the six faces of the hexahedron. Finally, the corresponding features are extracted to measure the similarity between the models. The two-dimensional images of the model obtained by the method of Shih et al. are shown in Figure 5.
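As a rough illustration of mapping a model onto the six faces of a cube, the sketch below projects points orthographically onto each face and rasterizes them into small binary images. It is a simplified silhouette projection written for this example, not the procedure of Shih et al.: occlusion is ignored, and the function name, the image resolution `res`, and the view labels are assumptions.

```python
def six_views(points, side, res=8):
    """Binary silhouettes of a point set on the six faces of a cube.

    The cube is centered at the centroid of the points and has the given
    side length. Views are labeled '+x', '-x', '+y', '-y', '+z', '-z'.
    """
    n = len(points)
    c = [sum(p[k] for p in points) / n for k in range(3)]
    views = {}
    for axis in range(3):
        u, v = [k for k in range(3) if k != axis]  # in-plane axes
        for sign, label in ((1, "+"), (-1, "-")):
            img = [[0] * res for _ in range(res)]
            for p in points:
                s = (p[u] - c[u]) / side + 0.5  # normalized to [0, 1]
                t = (p[v] - c[v]) / side + 0.5
                if sign < 0:
                    s = 1.0 - s  # mirror when viewed from the opposite face
                col = min(res - 1, max(0, int(s * res)))
                row = min(res - 1, max(0, int(t * res)))
                img[row][col] = 1
            views[label + "xyz"[axis]] = img
    return views

views = six_views([(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)], side=4.0, res=4)
print(views["+y"][2])  # [0, 1, 0, 1]
```

Seen along the x axis the two points coincide, so the '+x' view contains a single pixel, while the '+y' view shows both.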

- (2) Extraction of image features

After obtaining 2D views of the point cloud model and 3D CAD model, extracting 3D model features from these views is an important step in the view-based 3D model retrieval algorithm. In this paper, we adopted the Canny operator to extract the edge features of different view models. The algorithm for using the Canny operator to extract the edge features is as follows:

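① We used Gaussian filtering to smooth the image. Let $f(x, y)$ be the original image, $g(x, y)$ the smoothed image, and $G(x, y)$ the Gaussian kernel function; then:

$$g(x, y) = G(x, y) * f(x, y), \qquad G(x, y) = \frac{1}{2\pi\sigma^{2}}\exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)$$

where $*$ denotes convolution and $\sigma$ is the standard deviation of the Gaussian kernel.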
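The smoothing step can be sketched in plain Python as a discrete convolution with a normalized Gaussian kernel. The kernel size, the value of sigma, and the replicated-border handling are assumptions made for this example, and the function names are hypothetical.

```python
import math

def gaussian_kernel(size=5, sigma=1.0):
    """Normalized size x size Gaussian kernel (weights sum to 1)."""
    half = size // 2
    k = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
          for x in range(-half, half + 1)]
         for y in range(-half, half + 1)]
    total = sum(map(sum, k))
    return [[w / total for w in row] for row in k]

def smooth(image, kernel):
    """Convolve a 2D list of gray values with the kernel, replicating borders."""
    h, w = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for dr in range(-half, half + 1):
                for dc in range(-half, half + 1):
                    rr = min(h - 1, max(0, r + dr))  # clamp to the image
                    cc = min(w - 1, max(0, c + dc))
                    acc += kernel[dr + half][dc + half] * image[rr][cc]
            out[r][c] = acc
    return out
```

Because the kernel is symmetric, correlation and convolution give the same result here, so no kernel flip is needed.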

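② The gradient value and gradient direction are calculated. The core concept of the Canny operator is to find the position where the gray intensity changes the most in the image. The so-called maximum change is the gradient direction. We used the finite difference of the first-order partial derivatives to calculate the magnitude and direction of the gradient of $f(x, y)$:

$$M = \sqrt{f_x^{2} + f_y^{2}}, \qquad \theta = \arctan\left(\frac{f_y}{f_x}\right)$$

where $f_x$ and $f_y$ are the finite-difference approximations of the first-order partial derivatives in the x and y directions, M is the intensity of the image edge, and $\theta$ is the direction of the image edge.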
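A minimal sketch of this step, assuming central differences with replicated borders, is given below. The function name is hypothetical, and `math.atan2` is used in place of a plain arctangent so that a zero horizontal derivative does not cause a division by zero.

```python
import math

def gradient(image):
    """Return (magnitude, direction) maps of a 2D list of gray values."""
    h, w = len(image), len(image[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            # Central differences; indices are clamped at the borders.
            fx = (image[r][min(w - 1, c + 1)] - image[r][max(0, c - 1)]) / 2.0
            fy = (image[min(h - 1, r + 1)][c] - image[max(0, r - 1)][c]) / 2.0
            mag[r][c] = math.hypot(fx, fy)
            ang[r][c] = math.atan2(fy, fx)  # radians, in (-pi, pi]
    return mag, ang

# A vertical step edge between columns 1 and 2.
step = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
mag, ang = gradient(step)
print(mag[1])  # [0.0, 0.5, 0.5, 0.0]
```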

③ Filter non-maxima. The gradient intensity value of the current pixel is compared with two pixels in the positive and negative directions. If the current pixel value is greater than these two pixel values, the current pixel is the edge point; otherwise, the current pixel is suppressed, i.e., the current pixel is assigned a value of 0.
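The comparison along the gradient direction can be sketched as follows. The direction is quantized to four sectors (0°, 45°, 90°, 135°), which is a common simplification and an assumption of this example; pixels outside the image are treated as zero, and the function name is hypothetical.

```python
import math

def non_max_suppression(mag, ang):
    """Keep a pixel only if it is strictly larger than both neighbours
    along its (quantized) gradient direction; otherwise set it to 0."""
    h, w = len(mag), len(mag[0])

    def at(r, c):
        return mag[r][c] if 0 <= r < h and 0 <= c < w else 0.0

    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            deg = math.degrees(ang[r][c]) % 180.0
            if deg < 22.5 or deg >= 157.5:
                dr, dc = 0, 1    # horizontal gradient
            elif deg < 67.5:
                dr, dc = 1, 1    # diagonal (down-right)
            elif deg < 112.5:
                dr, dc = 1, 0    # vertical gradient
            else:
                dr, dc = 1, -1   # diagonal (down-left)
            m = mag[r][c]
            if m > at(r + dr, c + dc) and m > at(r - dr, c - dc):
                out[r][c] = m
    return out

ridge = [[0.0, 1.0, 3.0, 1.0, 0.0] for _ in range(3)]
flat_dir = [[0.0] * 5 for _ in range(3)]
print(non_max_suppression(ridge, flat_dir)[1])  # [0.0, 0.0, 3.0, 0.0, 0.0]
```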

④ After the non-maximum values are filtered out, the remaining pixels represent the image edge more accurately. However, some edge pixels are inevitably caused by noise and color changes. To solve this problem, edge pixels with weak gradient values are filtered out, while edge pixels with high gradient values are retained. If the gradient value of an edge pixel is greater than the high threshold, it is marked as a strong edge pixel; if it lies between the low and high thresholds, it is marked as a weak edge pixel; if it is less than the low threshold, it is suppressed. The specific implementation is shown in Algorithm 1:

| Algorithm 1: Filtering the Edge Pixels |
| --- |
| 1: if fp ≥ High Threshold |
| 2: fp is a strong edge |
| 3: else if fp > Low Threshold && fp < High Threshold |
| 4: fp is a weak edge |
| 5: else |
| 6: fp should be suppressed |
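The double-threshold classification of Algorithm 1 can be written compactly as below. The label constants and the function name are hypothetical, and treating a value exactly equal to the high threshold as strong is an assumption of this sketch.

```python
STRONG, WEAK, SUPPRESSED = 2, 1, 0

def classify_edges(mag, low, high):
    """Label every pixel of the gradient-magnitude map as strong, weak,
    or suppressed using a low and a high threshold."""
    return [[STRONG if v >= high else WEAK if v > low else SUPPRESSED
             for v in row]
            for row in mag]

print(classify_edges([[0.05, 0.2, 0.5, 0.9]], low=0.1, high=0.5))
# [[0, 1, 2, 2]]
```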

⑤ Suppress isolated low threshold points.

So far, the pixels extracted from the real edge of the image have been identified as edges. However, for some weak edge pixels, there are still some disputes, because it is uncertain whether these pixels are extracted from the real edge, or caused by noise or color changes. By suppressing the interference of weak edge pixels caused by noise or color changes, the accuracy of the obtained results can be guaranteed. Generally, there is a certain connection between the weak edge pixels and the strong edge pixels caused by real edges, but there is no connection between the two caused by the noise points. Therefore, we can check the eight adjacent pixels of the weak edge pixels. If at least one of them is a strong edge pixel, the weak edge pixels can be retained as the real edge. Otherwise, the weak edge pixels are suppressed. The specific implementation is shown in Algorithm 2:

| Algorithm 2: Suppress Isolated Low Threshold Points |
| --- |
| 1: if fp is a weak edge && fp is connected to a strong edge pixel |
| 2: fp is a strong edge |
| 3: else |
| 4: fp should be suppressed |
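A minimal sketch of Algorithm 2 is shown below, assuming the label values 2 (strong), 1 (weak), and 0 (suppressed), which are hypothetical. It checks the eight neighbours of each weak pixel once, as described in the text, and does not propagate along chains of weak pixels.

```python
STRONG, WEAK = 2, 1

def suppress_isolated(labels):
    """Return a binary edge map: strong pixels are kept, and a weak pixel
    is kept only if at least one of its eight neighbours is strong."""
    h, w = len(labels), len(labels[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if labels[r][c] == STRONG:
                out[r][c] = 1
            elif labels[r][c] == WEAK:
                neighbours = (labels[rr][cc]
                              for rr in range(max(0, r - 1), min(h, r + 2))
                              for cc in range(max(0, c - 1), min(w, c + 2))
                              if (rr, cc) != (r, c))
                if any(n == STRONG for n in neighbours):
                    out[r][c] = 1
    return out

print(suppress_isolated([[2, 1, 0, 1]]))  # [[1, 1, 0, 0]]
```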

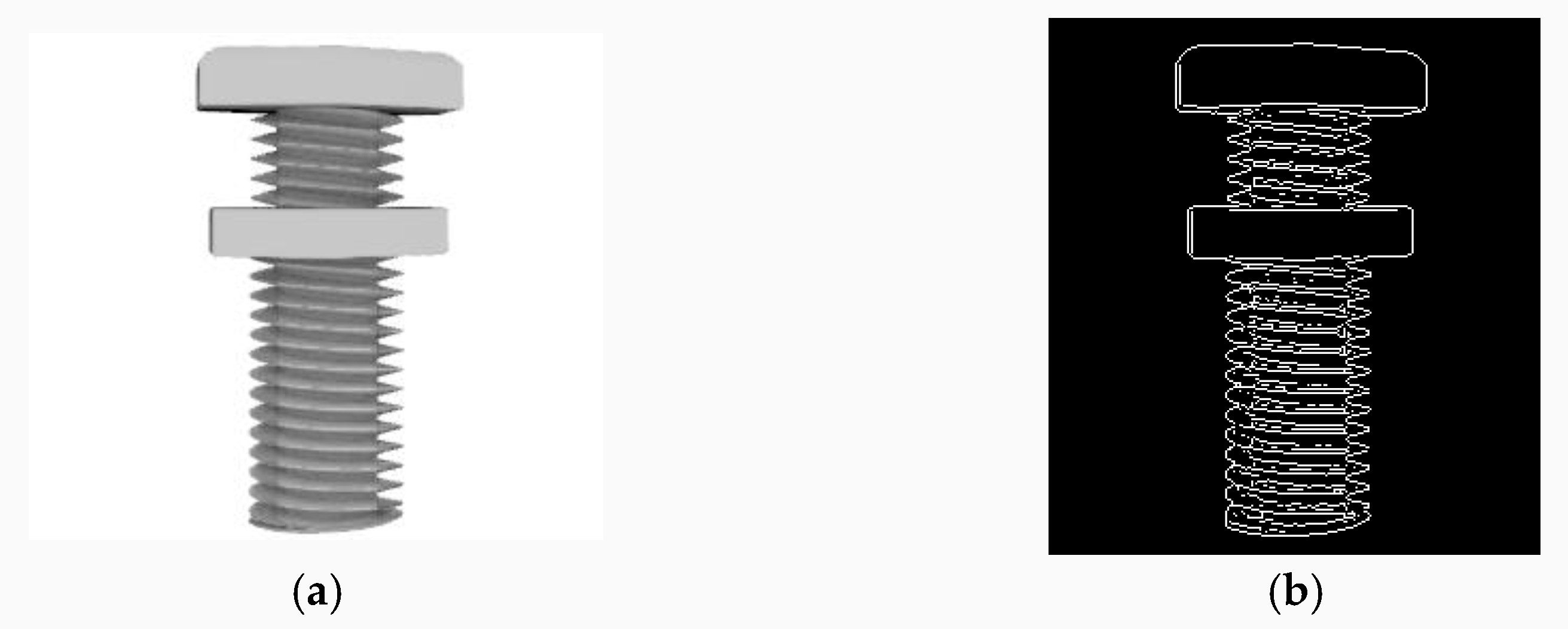

The Canny edge feature is shown in Figure 6. Canny edge descriptors are extracted from the six images of the model to acquire the edge information from six viewing angles of the model. Suppose Kc represents the edge information of each view and KC represents the features of the 3D model [17,18,19], namely: