Game-Based Simulation and Study of Pedestrian-Automated Vehicle Interactions

Abstract

1. Introduction

2. Background and Motivation

2.1. Embodiment in Virtual Environments and Video Games

2.2. Existing AV Simulators

- Availability: Whether a simulator is publicly available for further research, and whether it is free and/or open source. Broad availability increases the likelihood of public use and therefore the research utility of the tool.

- Engines and Technical Development: The underlying technical elements that enable the simulator. Most simulators are built on game engines, which provide realistic physics and graphics with relatively straightforward development techniques. The dominant game engines are Unity and Unreal, although other options exist. The choice of engine determines the programming knowledge needed to develop or modify a tool: Unity scripting uses C# (older releases also supported UnityScript, a JavaScript-like language), and Unreal uses C++. The engine also determines the platforms on which the tool can run. Broader platform support tends to make the tool available for research use among more diverse audiences.

- Usability, Type of Application, and Target Audience: Whether the simulator is a desktop or a virtual reality application, which dictates the cost and complexity of the requisite software and hardware. We also rate, in coarse and subjective terms, the complexity of setting up and running the simulator. Because this research combines social and technical elements, it is not reasonable to assume a high level of technical familiarity from all application users, even survey facilitators. We therefore also identify the target audience that can readily use the simulator to conduct research.

- Realistic Graphics Environment: The subjective visual quality of the 3D environment and of the models or prefabs used in the simulation. Graphics are important for increasing a simulator's engagement and a user's perceived embodiment. The more plausible the graphics, the more realistic the experience the tool offers. Increased realism is often seen as essential to generating plausible and repeatable research data from game-based tools.

- Pedestrian Point of View: Whether a tool is well suited to pedestrian-centric research and development applications. Since we explore human interaction with AVs, and not the other way around, it is important to identify which simulators allow players to "become" a pedestrian.

2.3. Unmet Opportunity

- What are typical pedestrian–vehicle interaction patterns for varying degrees of autonomy?

- Can goal-driven humans effectively “coexist” with goal-driven automated vehicles, e.g., in a social context?

- Does the degree to which a pedestrian is familiar with autonomy change these interactions? How?

- Does the knowledge that a vehicle is automated change a pedestrian’s interaction patterns? How?

- Does exposure to automated vehicles increase or decrease pedestrian trust in those vehicles?

3. Materials and Methods

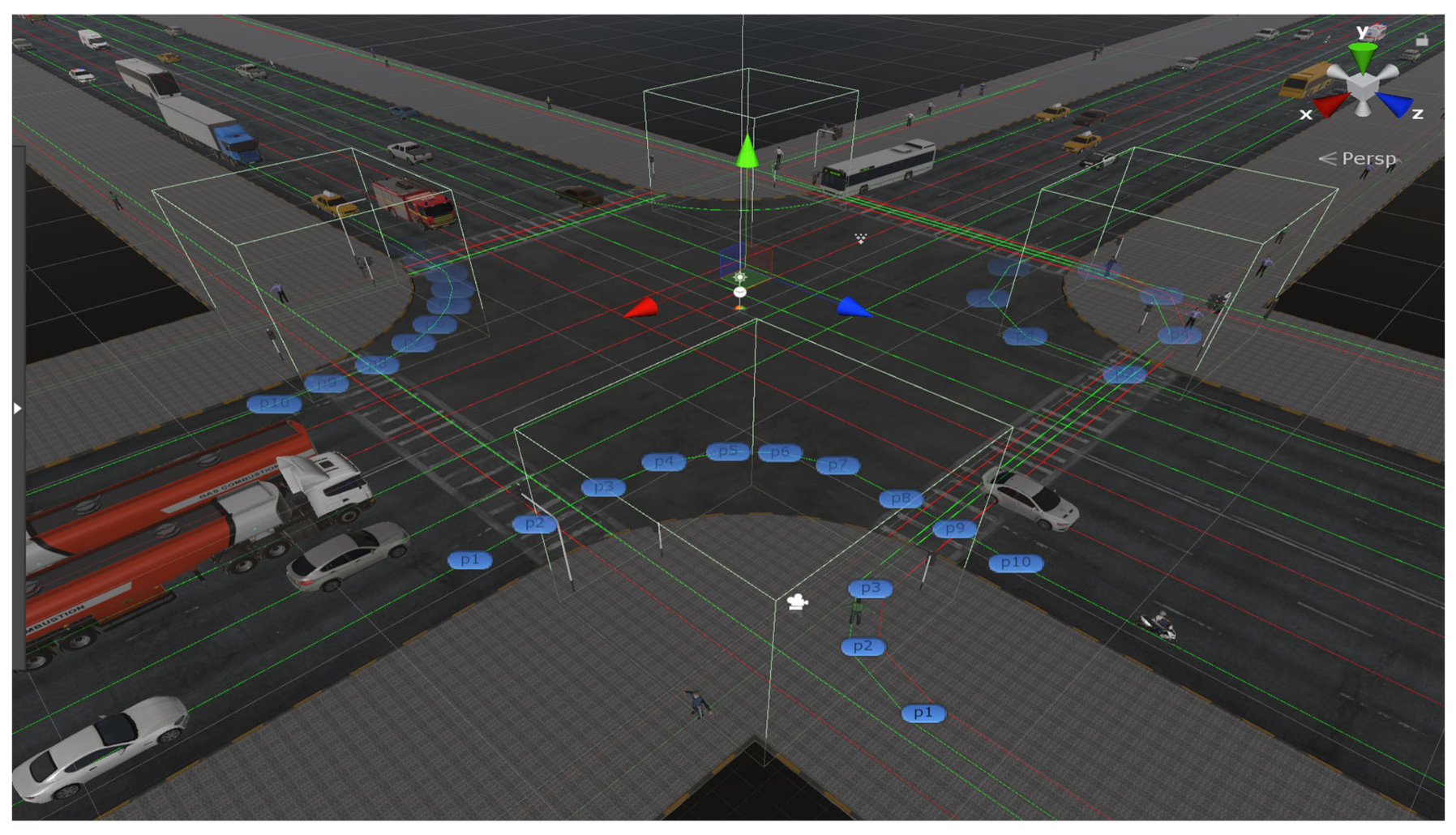

3.1. Initial Build and Play Testing

3.2. Second Build and Play Testing

3.3. Third Build and Play Testing

3.4. Final Build and Play Testing

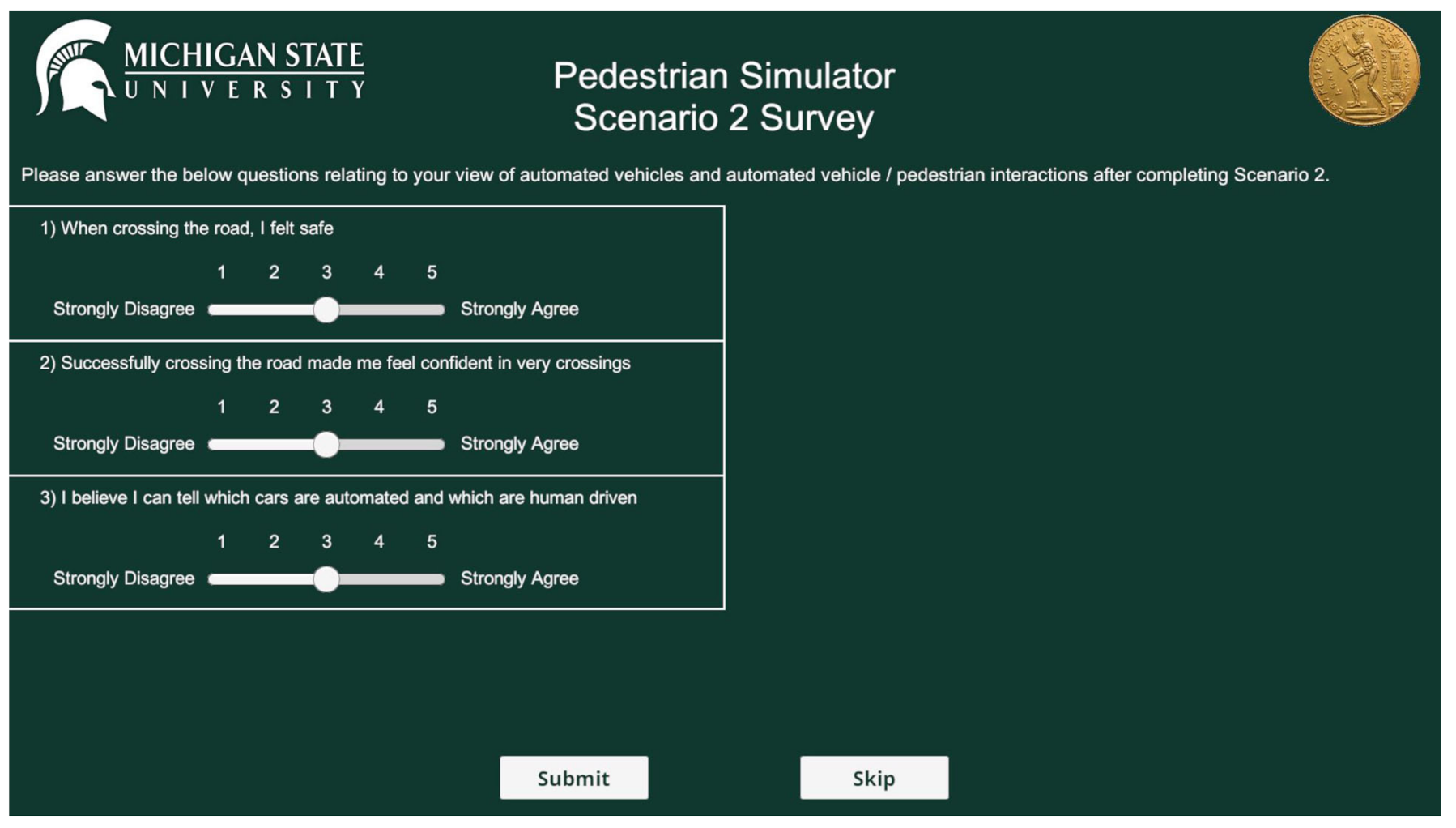

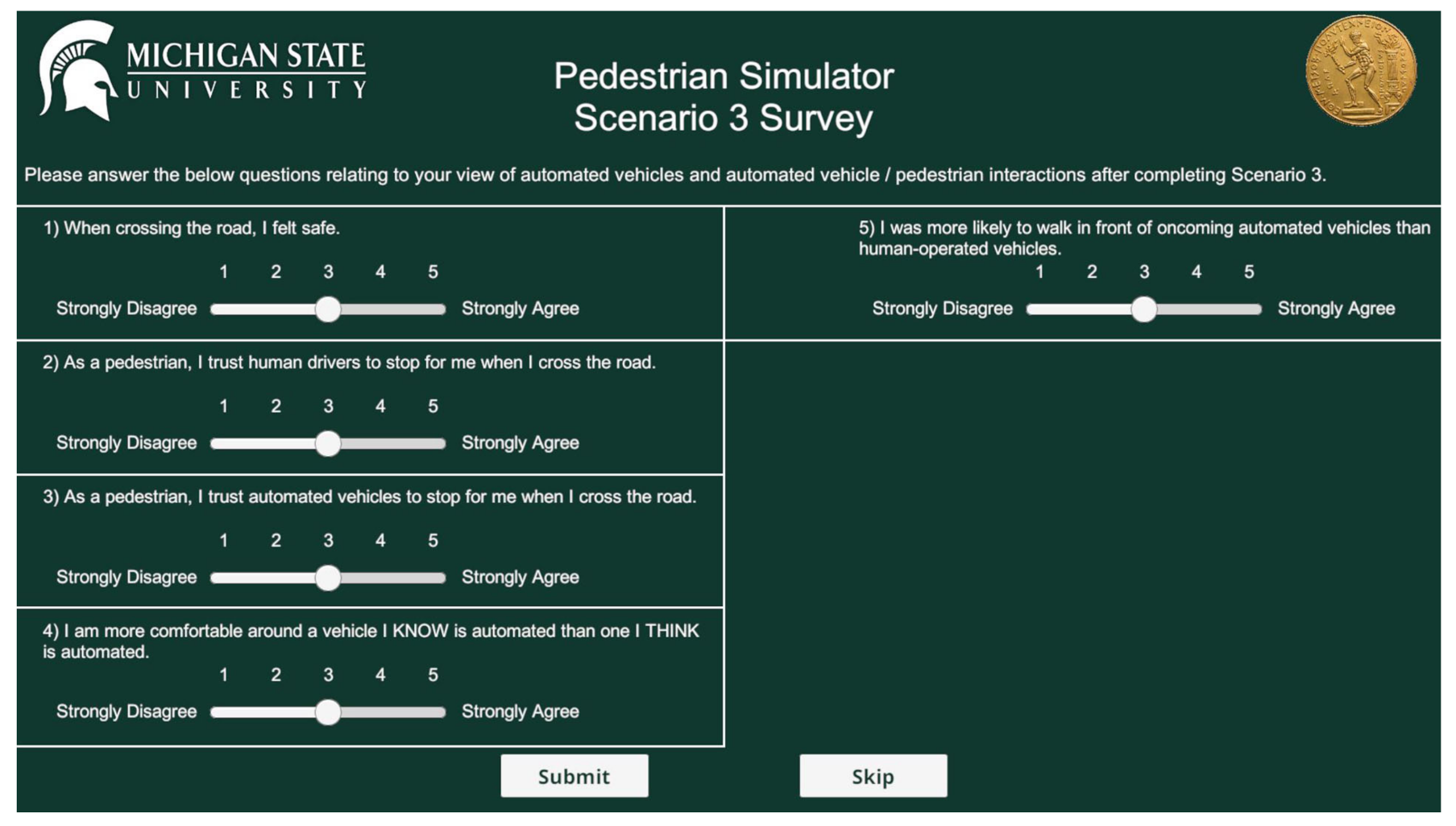

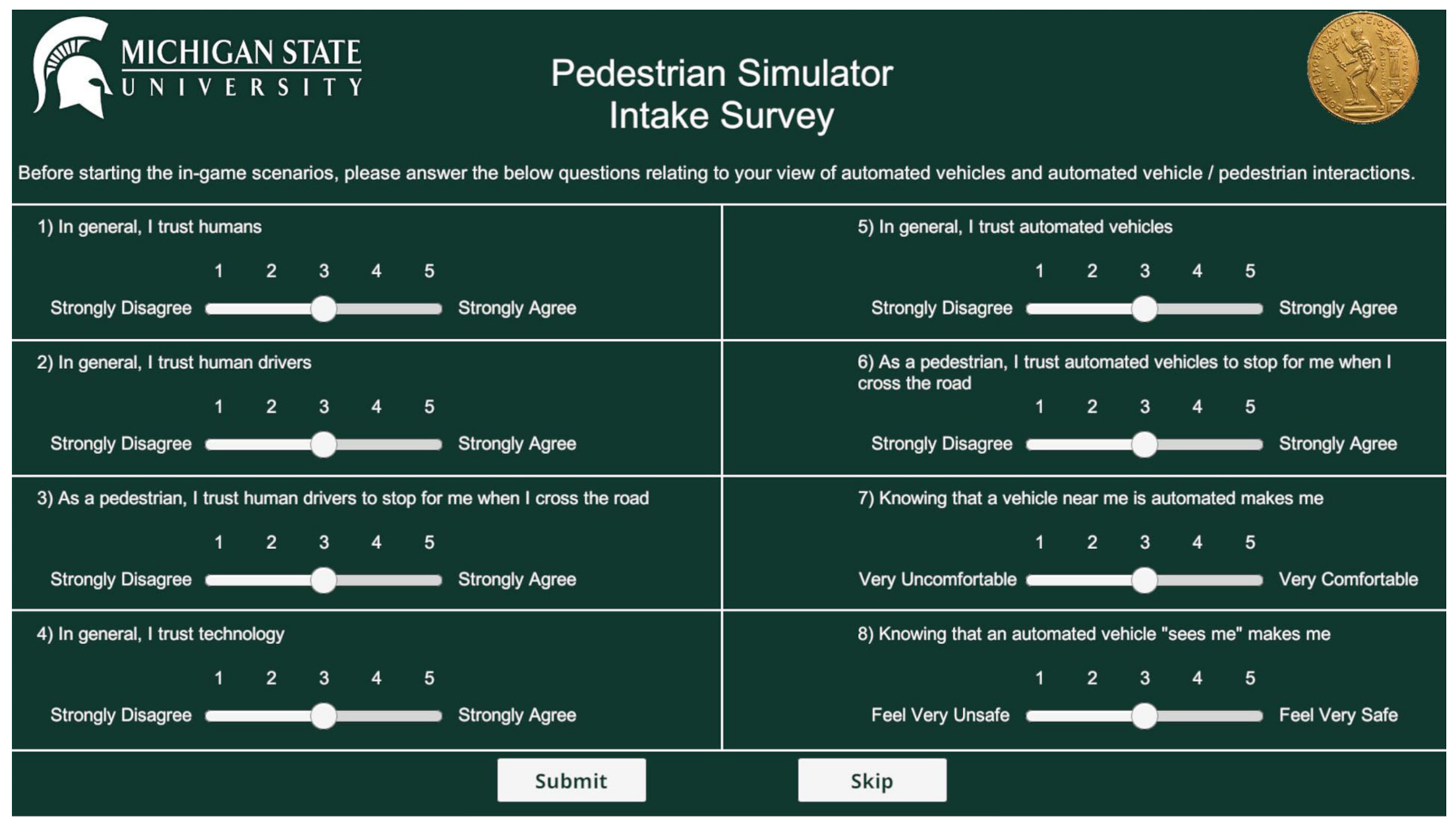

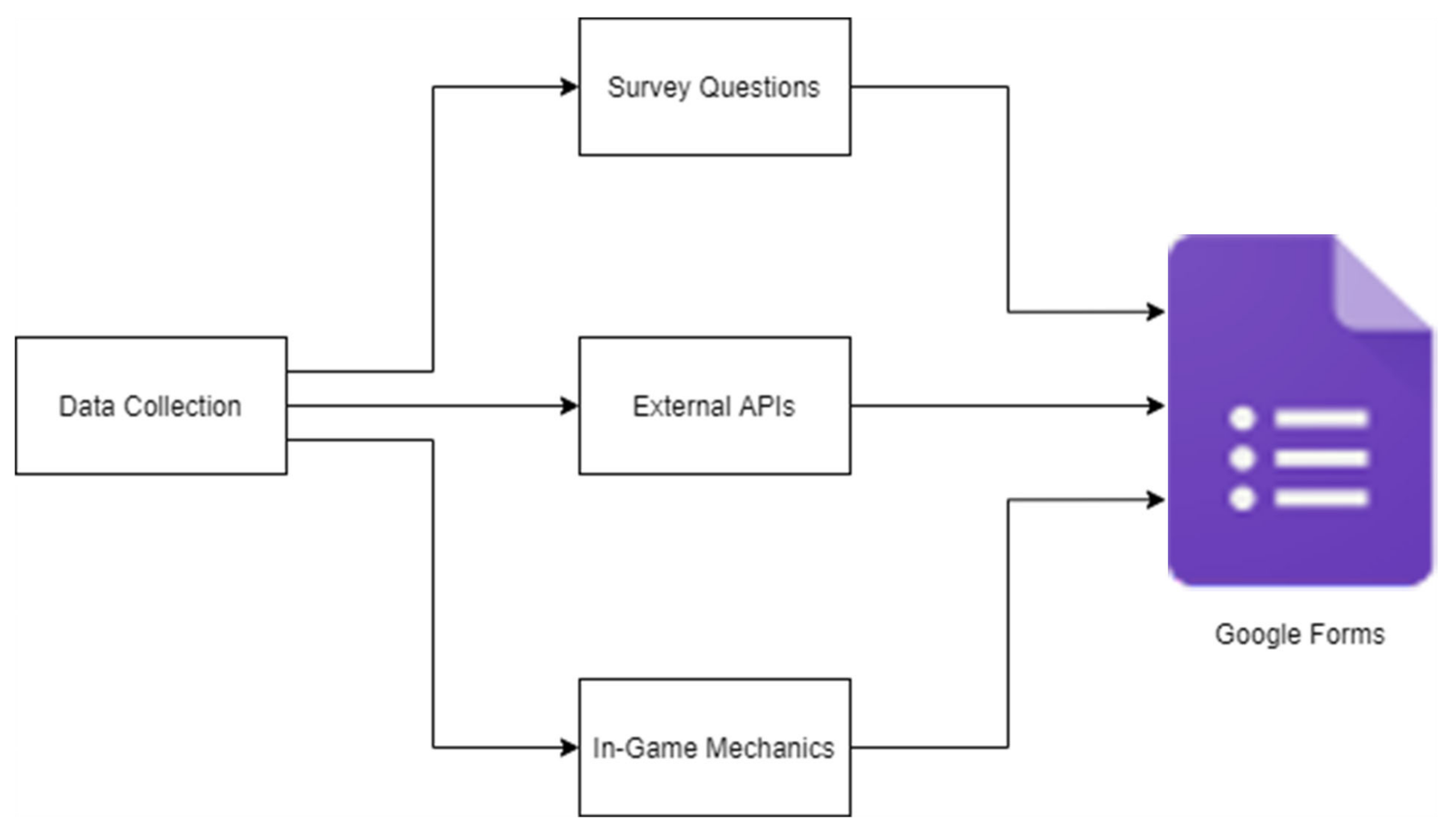

- the survey answers to the questions,

- the location data (country/city), extracted automatically from the users' IP addresses using a free API service, and

- in-game information captured from events during the scenarios (score, being "hit" by a car, and time of "hit").
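The three kinds of collected data listed above could be held in a single per-participant record. The following is a minimal sketch only: the class names, field names, and the unit of the hit time are assumptions made for illustration, not the layout used by the actual tool, and the values in the usage lines are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class HitEvent:
    """One in-game collision (hypothetical structure)."""
    scenario: int      # 1, 2, or 3
    time_s: float      # assumed unit: seconds since the scenario started


@dataclass
class SessionRecord:
    """Everything logged for one participant (hypothetical layout)."""
    answers: Dict[str, int]                  # survey answers, e.g. {"Q1": 4}
    country: Optional[str] = None            # from the IP lookup; may be missing
    city: Optional[str] = None
    scores: Dict[int, int] = field(default_factory=dict)   # scenario -> score
    hits: List[HitEvent] = field(default_factory=list)

    def was_hit(self, scenario: int) -> bool:
        """True if at least one hit was logged in the given scenario."""
        return any(h.scenario == scenario for h in self.hits)


# Made-up values, for illustration only (not study data).
record = SessionRecord(answers={"Q1": 4, "Q5": 3}, country="Exampleland")
record.scores[1] = 1200
record.hits.append(HitEvent(scenario=2, time_s=17.5))
print(record.was_hit(1), record.was_hit(2))
```

Keeping the location fields optional reflects that an IP-based lookup may not always return a result.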

- Scenario 1: All cars, whether human- or AI-operated, stop safely before hitting the user-pedestrian if physically possible. The possibility of being "hit" is low.

- Scenario 2: Some cars may stop before colliding with the user-pedestrian. Users do not know whether any given car (AV or human-driven) will stop. The possibility of being "hit" is increased.

- Scenario 3: Some cars may stop before the user-pedestrian while others will not. In this case, the AVs are identified by a green light indicator that appears under the vehicle. The indicator turns on when the pedestrian–AV distance is less than 15 m. The AVs will always stop before hitting the pedestrian if physically possible (Figure 12).
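The scenario rules above can be sketched as a small decision function. This is an illustrative sketch rather than the game's actual logic: only the 15 m indicator range comes from the scenario description, while the constant-deceleration braking model, the deceleration value, and the 50% chance that an unpredictable car attempts to stop are assumptions, and all function names are hypothetical.

```python
import random

AV_INDICATOR_RANGE_M = 15.0   # from the Scenario 3 description
ASSUMED_DECEL_MPS2 = 7.0      # assumption: hard braking deceleration
ASSUMED_STOP_PROB = 0.5       # assumption: chance an unpredictable car tries to stop


def can_stop(speed_mps, distance_m, decel=ASSUMED_DECEL_MPS2):
    """True if constant braking stops the car within distance_m (d = v^2 / (2a))."""
    return speed_mps ** 2 / (2.0 * decel) <= distance_m


def shows_indicator(scenario, is_av, distance_m):
    """The green light appears only in Scenario 3, for AVs closer than 15 m."""
    return scenario == 3 and is_av and distance_m < AV_INDICATOR_RANGE_M


def will_yield(scenario, is_av, speed_mps, distance_m, rng=random):
    """Whether a car both attempts to stop and is physically able to."""
    if scenario == 1 or (scenario == 3 and is_av):
        wants_to_stop = True                      # always tries to stop
    else:
        wants_to_stop = rng.random() < ASSUMED_STOP_PROB
    return wants_to_stop and can_stop(speed_mps, distance_m)


print(will_yield(1, False, 10.0, 10.0))   # Scenario 1, enough room to brake
print(will_yield(3, True, 20.0, 10.0))    # Scenario 3 AV, too fast to stop in time
print(shows_indicator(3, True, 14.9))     # inside the 15 m indicator range
```

Separating "wants to stop" from "can stop" mirrors the wording "if physically possible": even a car that always yields may still reach the pedestrian when the remaining distance is shorter than its braking distance.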

4. Results

5. Discussion

6. Conclusions

7. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1

- BRIEF SUMMARY

- PURPOSE OF RESEARCH

- WHAT YOU WILL BE ASKED TO DO

- POTENTIAL BENEFITS

- POTENTIAL RISKS

- PRIVACY AND CONFIDENTIALITY

- YOUR RIGHTS TO PARTICIPATE, SAY NO, OR WITHDRAW

- CONTACT INFORMATION

- DOCUMENTATION OF INFORMED CONSENT

Appendix A.2

| | CARLA Simulator | AirSim | Mississippi State University Tool |
|---|---|---|---|
| Availability | Free and Open Source | Free and Open Source | Not on Public Repository |
| Unity | No Compatibility | Experimental Compatibility | Compatible |
| Unreal Engine | Compatible | Compatible | No Compatibility |
| Technical Development | C++, Python | C++, Python, C# | No Compatibility |
| Ease of Use | Hard to Use | Hard to Use | Easy to Use |
| Type of Application | Desktop | Desktop | Virtual Reality |
| Target Audience | Mainly Programmers or Engineers | Mainly Programmers or Engineers | Academia Audience |
| Graphics | High | High | Average |
| Pedestrian Point of View | No Pedestrian POV | No Pedestrian POV | Pedestrian POV |

| Scenario | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | Q11 | Q12 | Q13 | S1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intake (n = 89) | | | | | | | | | | | | | | |
| 1 (n = 78) | | | | | | | | | | | | | | |
| 2 (n = 67) | | | | | | | | | | | | | | |
| 3 (n = 62) | | | | | | | | | | | | | | |
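The sample sizes in the table above shrink from the intake survey to Scenario 3. As a small worked example, the sketch below expresses each stage as a share of the intake count. It assumes that each n is the number of responses recorded at that stage; the variable names are illustrative.

```python
# Response counts per stage, taken from the row labels of the table above.
respondents = {"Intake": 89, "Scenario 1": 78, "Scenario 2": 67, "Scenario 3": 62}

intake = respondents["Intake"]
retention = {stage: n / intake for stage, n in respondents.items()}

for stage, share in retention.items():
    print(f"{stage}: {respondents[stage]} responses ({share:.1%} of intake)")
```

Under that assumption, roughly 88% of intake respondents are represented in Scenario 1, 75% in Scenario 2, and 70% in Scenario 3.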

| ID | Question | Low (Text) | High (Text) |
|---|---|---|---|
| Q1 | In general, I trust humans | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q2 | In general, I trust human drivers | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q3 | As a pedestrian, I trust human drivers to stop for me when I cross the road | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q4 | In general, I trust technology | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q5 | In general, I trust automated vehicles | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q6 | As a pedestrian, I trust automated vehicles to stop for me when I cross the road | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q7 | Knowing that a vehicle near me is automated makes me | 1 (Very Uncomfortable) | 5 (Very Comfortable) |
| Q8 | Knowing that an automated vehicle “sees me” makes me | 1 (Feel Very Unsafe) | 5 (Feel Very Safe) |
| Q9 | When crossing the road, I felt safe | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q10 | Successfully crossing the road made me feel very confident | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q11 | I believe I can tell which cars are automated and which are human driven | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q12 | I was more likely to walk in front of oncoming automated vehicles than human-operated vehicles | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| Q13 | I am more comfortable around a vehicle I KNOW is automated than one I THINK is automated | 1 (Strongly Disagree) | 5 (Strongly Agree) |
| S1 | Game Score (points) | 0 | ≈2000 |
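Because Q1 through Q13 share a 1 to 5 scale, responses can be validated and summarized uniformly. The following is a minimal sketch of one way to do so; the function names and the choice of the arithmetic mean as the summary are assumptions for illustration, not the analysis performed in the study, and the demo responses are made up.

```python
from statistics import mean

LIKERT_ITEMS = [f"Q{i}" for i in range(1, 14)]   # Q1..Q13 from the table
LIKERT_MIN, LIKERT_MAX = 1, 5


def validate(response):
    """Keep only well-formed answers: a known item with an integer in 1..5."""
    return {
        q: v for q, v in response.items()
        if q in LIKERT_ITEMS and isinstance(v, int) and LIKERT_MIN <= v <= LIKERT_MAX
    }


def item_means(responses):
    """Mean score per item across participants, skipping unanswered items."""
    cleaned = [validate(r) for r in responses]
    means = {}
    for q in LIKERT_ITEMS:
        values = [r[q] for r in cleaned if q in r]
        if values:
            means[q] = mean(values)
    return means


# Made-up responses, for illustration only (not study data).
demo = [{"Q5": 2, "Q6": 3}, {"Q5": 4, "Q6": 5, "Q9": 7}]
print(item_means(demo))
```

The out-of-range answer to Q9 is discarded rather than clipped, so an invalid entry never shifts an item's mean. S1 is left out because the game score is measured in points, not on the Likert scale.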

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pappas, G.; Siegel, J.E.; Kassens-Noor, E.; Rutkowski, J.; Politopoulos, K.; Zorpas, A.A. Game-Based Simulation and Study of Pedestrian-Automated Vehicle Interactions. Automation 2022, 3, 315-336. https://doi.org/10.3390/automation3030017
