5.1. Experiment 1: Eight Queues

In this section, we describe an experiment with eight queues fed by an arrival flow with arrival rates λi = (0.03, 0.16, 0.2, 0.1, 0.08, 0.15, 0.06, 0.05) packets/s, where each arriving packet is classified and placed into its respective queue. The WFQ continual-DRL algorithm serves the queues according to their assigned weights. The interarrival times and service times were generated from a gamma distribution with a squared coefficient of variation (SCV) of 0.8. The average packet size was 1 byte/packet, the buffers of queues were packets, and the total finite bandwidth was 1 byte per second. The control policy allocated bandwidth weights satisfying . The number of episodes was 1000, each lasting 2000 s, and the agent took an action every 20 s (i.e., 100 actions per episode).
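As a sketch of how such traffic can be generated, assume the interarrival times of queue i are gamma distributed with mean 1/λi and SCV 0.8. For a gamma distribution the SCV equals 1/shape, so shape = 1/SCV and scale = mean × SCV. The function names below are hypothetical; the simulator used in the paper is not shown.

```python
import random
import statistics

# Arrival rates (packets/s) and SCV taken from the experiment description.
ARRIVAL_RATES = [0.03, 0.16, 0.2, 0.1, 0.08, 0.15, 0.06, 0.05]
SCV = 0.8


def gamma_params(mean: float, scv: float) -> tuple:
    """Return (shape, scale) of a gamma distribution with the given mean and SCV.

    For a gamma distribution SCV = 1 / shape, so shape = 1 / scv and
    scale = mean / shape = mean * scv.
    """
    return 1.0 / scv, mean * scv


def sample_interarrivals(rate: float, scv: float, n: int, rng: random.Random) -> list:
    """Draw n gamma-distributed interarrival times with mean 1 / rate (seconds)."""
    shape, scale = gamma_params(1.0 / rate, scv)
    # random.gammavariate(alpha, beta) uses alpha = shape and beta = scale.
    return [rng.gammavariate(shape, scale) for _ in range(n)]


rng = random.Random(42)
samples = [sample_interarrivals(lam, SCV, 50_000, rng) for lam in ARRIVAL_RATES]

for i, xs in enumerate(samples):
    mean = statistics.fmean(xs)
    scv_hat = statistics.pvariance(xs, mean) / mean ** 2
    print(f"queue {i + 1}: mean = {mean:.2f} s (target {1 / ARRIVAL_RATES[i]:.2f} s), SCV = {scv_hat:.3f}")
```

Service times can be drawn with the same function by passing the reciprocal of the mean service time in place of λi.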

After the random function had been implemented,  was obtained as explained in Section 2, based on six ranges, each of which was run for 1000 episodes. Next, we placed the six resulting values in a grid and searched among them for the optimal value. The optimal value of  was then obtained from the grid search; the FIM was computed every 30 episodes, and the parameters  and  were stored.
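A minimal sketch of the grid-search step, assuming the symbol missing from the text is the EWC regularization strength λ and that each candidate is scored by the mean reward of a training run. The six candidate values and the `evaluate` callback are hypothetical stand-ins; the actual ranges come from Section 2.

```python
import math
from typing import Callable, Dict, Sequence, Tuple


def grid_search(
    candidates: Sequence[float],
    evaluate: Callable[[float], float],
) -> Tuple[float, Dict[float, float]]:
    """Score every candidate and return the one with the highest score.

    `evaluate` is a hypothetical callback that trains the agent with the given
    regularization strength and returns a score to maximize (e.g. mean reward).
    """
    scores = {value: evaluate(value) for value in candidates}
    best = max(scores, key=lambda v: scores[v])
    return best, scores


# Hypothetical grid of six values (one per range) and a toy scoring function
# standing in for a full 1000-episode training run.
CANDIDATES = [0.1, 1.0, 10.0, 100.0, 1000.0, 10000.0]


def toy_evaluate(lam: float) -> float:
    # Peaks at lam = 100; purely illustrative.
    return -((math.log10(lam) - 2.0) ** 2)


best_lam, all_scores = grid_search(CANDIDATES, toy_evaluate)
print(best_lam)  # 100.0
```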

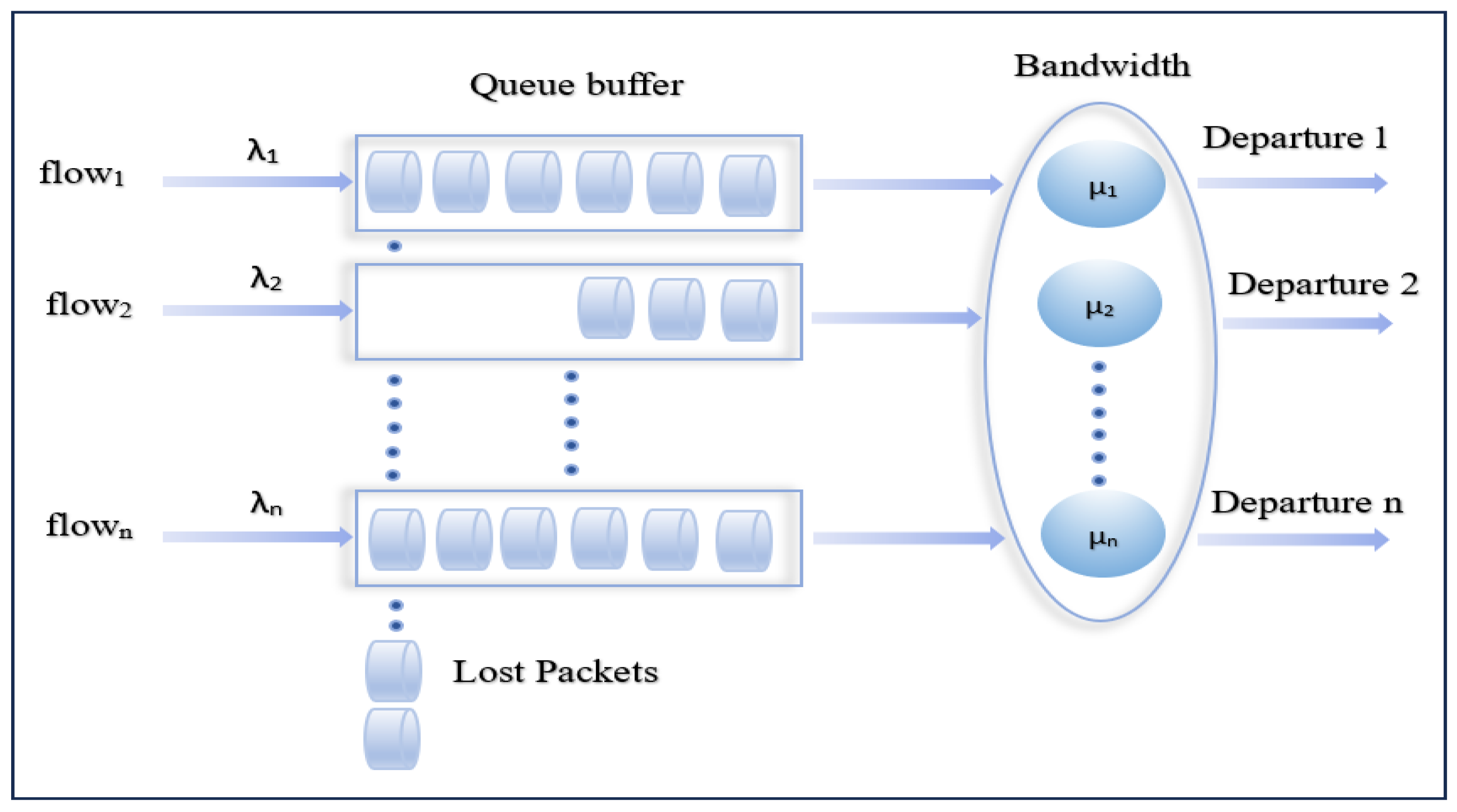

At the initial implementation step, a multi-queueing system consisting of eight queues was experimentally designed to evaluate the WFQ-DRL algorithm, as presented in [

22]. This research aims to employ deep reinforcement learning for the allocation of dynamic bandwidth in router environments using a WFQ mechanism; each queue was modeled with identical arrival rates (

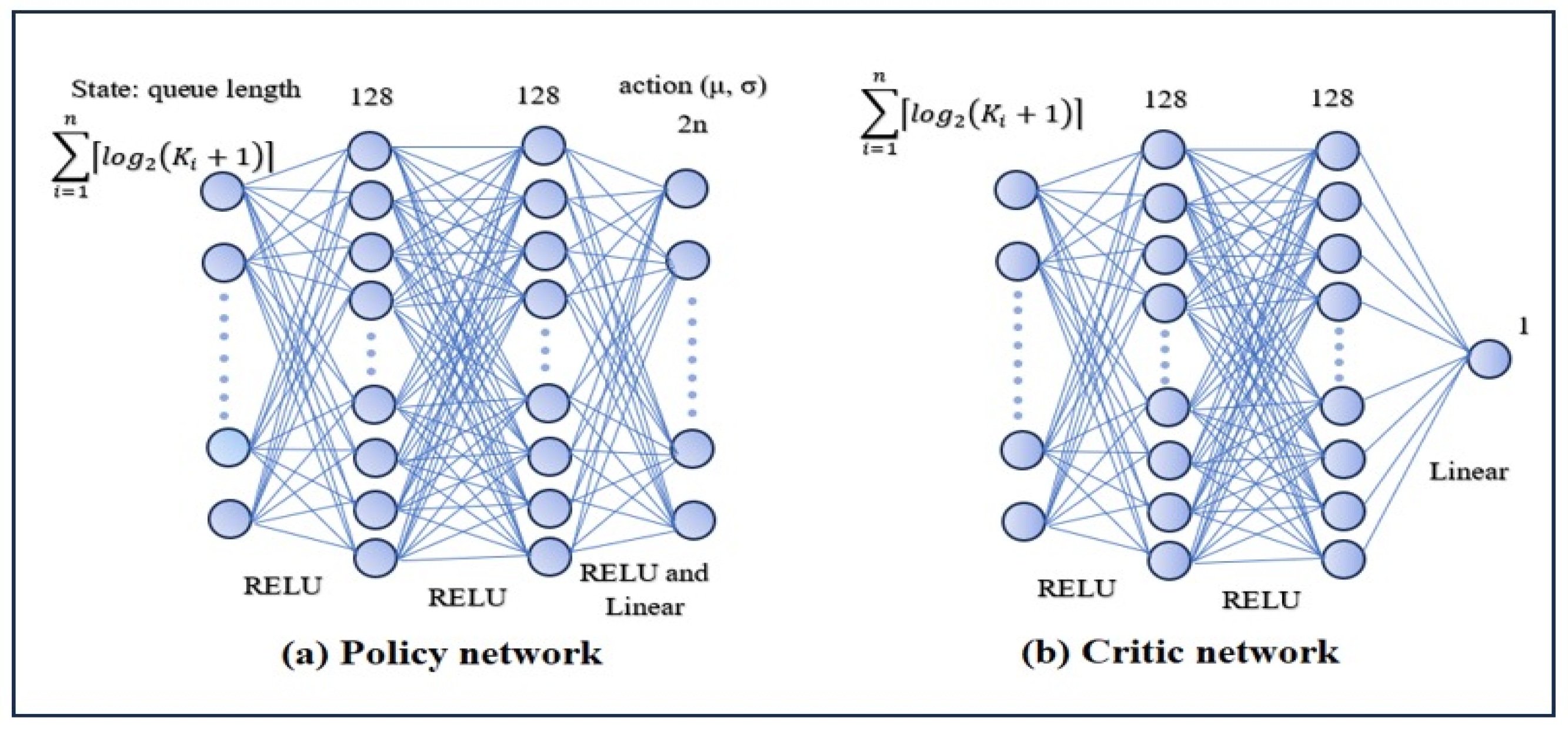

λ) and buffer sizes, as detailed in the previous section. The implementation structure adopts a neural network containing policy and critic networks, as illustrated in

Figure 3. The key hyperparameters of the network are summarized in

Table 1.
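The policy output layer given in the complexity analysis has 2 × 8 units, which is consistent with a standard SAC Gaussian policy that outputs a mean and a log standard deviation per queue. The sketch below assumes that layout and, because the weight constraint is not legible in the text, assumes the squashed action is normalized with a softmax so that the weights are positive and sum to 1. Both the mapping and the function names are illustrative assumptions, not the paper's implementation.

```python
import math
import random
from typing import List, Sequence


def sample_action(means: Sequence[float], log_stds: Sequence[float], rng: random.Random) -> List[float]:
    """SAC-style reparameterized sample: a = tanh(mu + sigma * eps), eps ~ N(0, 1)."""
    return [math.tanh(mu + math.exp(ls) * rng.gauss(0.0, 1.0)) for mu, ls in zip(means, log_stds)]


def action_to_weights(action: Sequence[float]) -> List[float]:
    """Assumed mapping: softmax, so every weight is positive and they sum to 1."""
    peak = max(action)  # subtract the max for numerical stability
    exps = [math.exp(a - peak) for a in action]
    total = sum(exps)
    return [e / total for e in exps]


def bandwidth_shares(weights: Sequence[float], total_bandwidth: float) -> List[float]:
    """WFQ serves queue i at a rate proportional to its weight."""
    return [w * total_bandwidth for w in weights]


N_QUEUES = 8
rng = random.Random(0)
action = sample_action([0.0] * N_QUEUES, [-1.0] * N_QUEUES, rng)
weights = action_to_weights(action)
shares = bandwidth_shares(weights, total_bandwidth=1.0)  # 1 byte/s, as in the experiment
print([round(s, 3) for s in shares])
```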

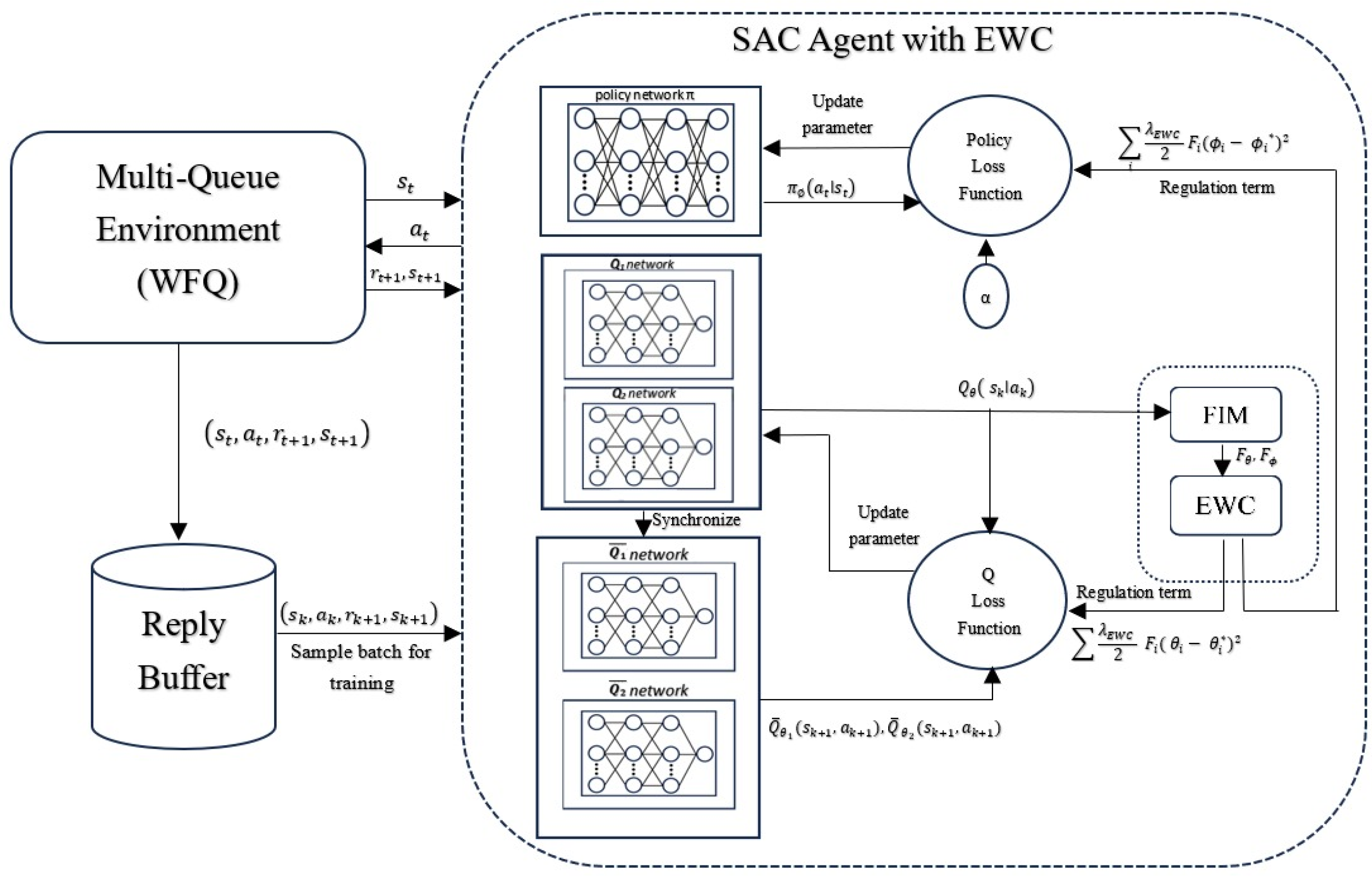

Next, the WFQ-DRL algorithm was improved by integrating continual learning, which allows the model to adapt over time without forgetting previously acquired knowledge. The enhancement relies primarily on the EWC mechanism, which preserves important network parameters as the model learns new tasks. The resulting WFQ continual-DRL algorithm supports ongoing learning and adaptation to varying network conditions and objectives while keeping the same structural configuration and parameter settings across the eight queues. Its objective is to maximize the cumulative reward over time while maintaining robust performance in dynamic networking environments.
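EWC is usually written as an added quadratic penalty: the loss on the new task plus (λ/2) Σi Fi (θi − θ*i)², where θ* are the parameters stored after the previous task and F is the diagonal of the FIM, estimated as the mean squared gradient of the log-likelihood. The pure-Python sketch below uses flat lists in place of network tensors; in practice the gradients would come from an autodiff framework, and the function names are illustrative.

```python
from typing import List, Sequence


def diagonal_fisher(per_sample_grads: Sequence[Sequence[float]]) -> List[float]:
    """Diagonal FIM estimate: mean of squared per-sample log-likelihood gradients."""
    n = len(per_sample_grads)
    dim = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dim)]


def ewc_penalty(theta: Sequence[float], theta_star: Sequence[float],
                fisher: Sequence[float], lam: float) -> float:
    """(lam / 2) * sum_i F_i * (theta_i - theta_star_i) ** 2."""
    return 0.5 * lam * sum(f * (t - ts) ** 2 for t, ts, f in zip(theta, theta_star, fisher))


def ewc_loss(task_loss: float, theta: Sequence[float], theta_star: Sequence[float],
             fisher: Sequence[float], lam: float) -> float:
    """Total loss: new-task loss plus the EWC anchor toward the stored parameters."""
    return task_loss + ewc_penalty(theta, theta_star, fisher, lam)


# Toy example with two parameters.
fisher = diagonal_fisher([[1.0, 2.0], [3.0, 0.0]])
loss = ewc_loss(1.5, theta=[1.0, 1.0], theta_star=[0.0, 2.0], fisher=fisher, lam=2.0)
print(fisher, loss)  # [5.0, 2.0] 8.5
```

Parameters with a large Fi are pulled strongly back toward θ*, while unimportant ones remain free to change; this is how EWC slows learning selectively.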

The average reward curves are shown in Figure 7. The SAC curve converges after about 200 episodes, and its average reward fluctuates between −10 and −6.5. The SAC with EWC curve of the enhanced algorithm converges faster, after about 100 episodes, and then stays in a steady range of −2 to −1.5, indicating that the model is stable and performs consistently. At the beginning of training, the controller typically explores different action strategies (bandwidth weight assignments) across the queues, which increases fluctuation; at this stage, the controller can explore the range of possible actions to find one that yields benefits. As learning proceeds, the controller increasingly exploits its actions, the reward curve rises, and the policy gradually converges toward the optimal bandwidth allocation, reducing the variability of the reward curve. This rise indicates that the policy used in the WFQ continual-DRL algorithm improves performance and captures the environment dynamics well. Eventually, the reward stabilizes, indicating that the controller consistently achieves high rewards, balancing exploration with exploitation while preserving past knowledge.
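The text does not state how the plotted average reward is computed. One common choice, shown here as an assumption, is a trailing moving average of per-episode rewards; the fluctuation range can then be read off the smoothed curve from the convergence episode onwards. The window size and function names are hypothetical.

```python
from collections import deque
from typing import List, Sequence, Tuple


def running_average(rewards: Sequence[float], window: int = 50) -> List[float]:
    """Trailing moving average over the last `window` episodes (window is assumed)."""
    buf: deque = deque(maxlen=window)
    total = 0.0
    out = []
    for r in rewards:
        if len(buf) == window:
            total -= buf[0]  # this value is evicted by the append below
        buf.append(r)
        total += r
        out.append(total / len(buf))
    return out


def fluctuation_range(curve: Sequence[float], converged_at: int) -> Tuple[float, float]:
    """(min, max) of the curve from the convergence episode onwards."""
    tail = curve[converged_at:]
    return min(tail), max(tail)


smoothed = running_average([1.0, 2.0, 3.0, 4.0], window=2)
print(smoothed)                                     # [1.0, 1.5, 2.5, 3.5]
print(fluctuation_range(smoothed, converged_at=2))  # (2.5, 3.5)
```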

As the comparison of SAC and SAC with EWC rewards in Table 2 shows, the standard SAC algorithm exhibits unstable, fluctuating performance over training episodes 50 to 1000. Rewards initially decline from −8.891 to −10.699 by episode 150, with only slight and inconsistent improvement through episode 300. In mid-training, rewards improve, reaching −7.381 at episode 650, but remain erratic. SAC achieves its best reward of −6.999 at episode 800; overall, however, SAC struggles to maintain stable, high rewards over time, reflecting difficulty with long-term policy retention. SAC with EWC consistently outperforms standard SAC at all training stages: whereas SAC shows unstable and suboptimal reward trends, SAC with EWC maintains stable and significantly higher rewards, ranging from about −2.266 to −2. The EWC-enhanced model learns better and converges more reliably, especially from middle to late training, where SAC rewards remain inconsistent while SAC with EWC approaches near-optimal values. This highlights the effectiveness of EWC in supporting stable, high-quality policy learning in DRL for the WFQ system over time.

Table 3 presents the MSE and MAE recorded every 50 episodes during training of SAC with EWC in the WFQ system using the WFQ continual-DRL algorithm, which allows an assessment of how well the environment supports adaptive training and learning. The MSE is 0.612 at episode 50, rises slightly to 0.639, and fluctuates more. At the start of training, the agent typically explores different assignments of actions (bandwidth weights) to the queues, which increases fluctuations in the system; at this stage, the agent explores the possible actions in search of one that yields benefits. The MSE then decreases to 0.597 at episode 150, indicating that the model adjusted successfully and improved its predictions. Such fluctuations are common in reinforcement learning and reflect the balance between exploration and convergence.

The MSE decreases steadily from 0.547 at episode 200 to 0.445 at episode 600, indicating steady learning and improving predictions. Between episodes 650 and 1000, the MSE remains low and stable, and the model achieves its best performance in this phase. The slight increase in the MSE after episode 900 may reflect more complex or bursty traffic patterns appearing during training, but the values remain within an acceptable range (near 0.47), meaning that the model has reached stability and effectively minimizes prediction error, albeit with somewhat higher variability. Overall, the training process achieves low and stable prediction error.

In the MAE column, the MAE is 0.583208 at episode 50, fluctuates slightly, and then improves to 0.535 at episode 300. Between episodes 400 and 700, the error remains controlled and stable with a slight decline, suggesting that the model is learning effectively and reducing prediction errors over time. The lowest MAE (0.510144) is reached at episode 1000, indicating that the model performs best towards the end of training. The learning process of the WFQ continual-DRL algorithm was efficient: the estimation error decreased over time, yielding a well-optimized algorithm for dynamic traffic management.
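For reference, the error metrics reported in Tables 3 and 4 have the standard definitions sketched below. Following the description of Figure 10, the error is assumed to be taken between the predicted action (bandwidth weights) and the optimal allocation from the fairness function; the two vectors in the example are made-up values for illustration.

```python
import math
from typing import Sequence


def _check(pred: Sequence[float], target: Sequence[float]) -> None:
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and of equal length")


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error."""
    _check(pred, target)
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def mae(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error."""
    _check(pred, target)
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def rmse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(mse(pred, target))


predicted = [0.20, 0.30, 0.50]  # hypothetical policy weights
optimal = [0.25, 0.25, 0.50]    # hypothetical fair allocation
print(mse(predicted, optimal), mae(predicted, optimal), rmse(predicted, optimal))
```

Because MSE squares each deviation, it reacts more strongly than MAE to occasional large errors, which is why the two columns need not move in lockstep.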

In Figure 8, the MSE curve of the eight-queue WFQ model initially rises to 0.7 during training, reflecting the model's adaptation to changing queue dynamics. It then decreases and fluctuates within the range of 0.35 to 0.45, indicating a transition to a steady state. These bounded fluctuations confirm robust error minimization and model stability in a dynamic environment.

According to Figure 9, the MAE rises above 0.6 early in training, reflecting initial prediction error, and then gradually declines and stabilizes, fluctuating within roughly 0.5 to 0.55. These steady fluctuations demonstrate the model's consistent performance in a dynamic environment.

Table 4 presents a comparison of the RMSE and Jain's index of the WFQ-DRL algorithm and the enhanced WFQ continual-DRL algorithm. Integrating EWC into SAC yields lower average and minimum RMSE, indicating better prediction accuracy. The SAC with EWC model also achieves higher fairness in resource allocation than the baseline SAC, indicating that the enhanced model allocates bandwidth more evenly among network flows. The Jain's index results confirm that incorporating EWC leads to more stable and fair decision-making during training in a dynamic environment.

Figure 10 compares the RMSE curves of SAC and SAC with EWC. After 200 episodes, the SAC curve decreases to a stable range of 0.07 to 0.09, whereas the SAC with EWC curve declines after 200 episodes and stabilizes between 0.05 and 0.07. The enhanced SAC with EWC thus achieves a lower and more stable RMSE than SAC, suggesting that EWC helps mitigate catastrophic forgetting, especially in the complex and dynamic environment of the WFQ system. The lower RMSE of the enhanced algorithm reflects improved stability and adaptability in decision-making, since a lower RMSE means that the predicted actions in dynamic queue management (resource allocation) are closer to the optimal resource allocation computed by the fairness function.

As shown in Figure 11, which compares the Jain's index of SAC and SAC with EWC, the SAC curve rises over the first 200 episodes and then stays steady between 0.6 and 0.7, while the SAC with EWC curve rises over the same period and then stays steady between 0.7 and 0.8. A Jain's index close to 1 indicates excellent fairness, that is, a more equitable use of resources.
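Jain's fairness index for n flows with allocations x1, ..., xn is (Σ xi)² / (n Σ xi²); it equals 1 when all flows receive the same share and 1/n when a single flow receives everything. The sketch assumes the index is applied to per-queue allocated bandwidth; the text does not state whether allocations are first normalized by demand.

```python
from typing import Sequence


def jain_index(x: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2), in [1/n, 1] for x >= 0."""
    n = len(x)
    sum_sq = sum(v * v for v in x)
    if n == 0 or sum_sq == 0:
        raise ValueError("need at least one non-zero allocation")
    return sum(x) ** 2 / (n * sum_sq)


print(jain_index([0.25, 0.25, 0.25, 0.25]))  # 1.0
print(jain_index([1.0, 0.0, 0.0, 0.0]))      # 0.25
```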

Complexity Analysis

We compute and compare the time complexity of SAC, as implemented in the WFQ-DRL algorithm [22], and of SAC with EWC, as implemented in the WFQ continual-DRL algorithm in this paper. Analyzing the execution time of an average step and the overhead of the periodic FIM computation in continual DRL provides insight into the trade-off between learning stability and computational cost.

For the policy network (π) structure:

Number of parameters (P) in the policy network (π), counting weight matrices only (biases excluded):

First fully connected layer: O (8 × 64) → O (512)

Second fully connected layer: O (64 × 64) → O (4096)

Output fully connected layer: O (2 × 64 × 8) → O (1024)

Total P for the policy network (π): O (512 + 4096 + 1024) = O (5632)

Complexity model:

For the policy network (π), the per-step complexity is O (1) because the network dimensions are constant.

For the critic network (Q) structure:

Input layer: (8 + 8) → 16

Hidden layer: 64 → 64

Output layer: 64 → 1

Number of parameters (P) in the critic network (Q):

First fully connected layer: O (16 × 64) → O (1024)

Second fully connected layer: O (64 × 64) → O (4096)

Output fully connected layer: O (64 × 1) → O (64)

Total P for one critic network (Q): O (1024 + 4096 + 64) = O (5184)

Total P for both critic networks (Q1, Q2) and the policy network (π):

P = 5632 + 2 × 5184 = 16,000
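The parameter counts above can be reproduced with a few lines, assuming (as the arithmetic implies) that only weight matrices are counted. The layer sizes are taken from the structures listed above; including bias terms gives a slightly larger total, shown for comparison.

```python
from typing import Sequence


def mlp_params(layer_sizes: Sequence[int], bias: bool = False) -> int:
    """Number of parameters in a fully connected network with the given layer widths."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + (n_out if bias else 0)
    return total


N_QUEUES = 8
HIDDEN = 64

policy = mlp_params([N_QUEUES, HIDDEN, HIDDEN, 2 * N_QUEUES])   # output 2 x 8
critic = mlp_params([N_QUEUES + N_QUEUES, HIDDEN, HIDDEN, 1])   # input 8 + 8
total = policy + 2 * critic                                     # pi, Q1 and Q2

print(policy, critic, total)  # 5632 5184 16000
print(mlp_params([8, 64, 64, 16], bias=True) + 2 * mlp_params([16, 64, 64, 1], bias=True))  # 16402
```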

Since the network dimensions remain constant, the per-step computational complexity is O (1). Over N training steps (100 steps per episode), the total complexity is therefore linear, O(N). For WFQ continual-DRL using SAC with EWC, the per-step cost also remains O (1), but computing the FIM adds an overhead of O(P) once every 30 episodes; this is the computational trade-off that continual learning introduces relative to the standard DRL algorithm.

For the complexity model:

For the critic network (Q), the per-step complexity is O (1) because the network dimensions are constant.

Overall complexity for N training steps:

Total time complexity: O (N × 1) = O(N).

One episode has 100 steps, i.e., O (100) with N = 100.

For 1000 episodes of 100 steps each, N = 100,000.

The FIM is computed every 30 episodes (3000 steps) over P = 16,000 parameters.

Table 5 compares the time complexity of the SAC and SAC with EWC algorithms.

For the SAC algorithm, one step takes 0.004 s, so 100 steps (one episode) take 0.4 s.

For SAC with EWC, one FIM computation takes 0.00294 s and is performed every 30 episodes, so 30 episodes take (30 × 0.4) + 0.00294 s = 12.00294 s. The experimental time comparison between the SAC and SAC with EWC algorithms is shown in Table 6.

Because the FIM is updated only every 30 episodes (3000 steps), it adds just 0.00294 s per 3000 steps, which makes the overhead negligible. Over 1000 episodes, the FIM is computed about 33 times, adding 33 × 0.00294 s = 0.09702 s in total, compared with about 1000 × 0.4 s = 400 s of step time. The FIM overhead in the WFQ continual-DRL algorithm is therefore negligible relative to the performance gain, indicating that WFQ continual-DRL improves performance at a negligible computational cost.
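The timing arithmetic can be checked from the per-step time (0.004 s) and per-FIM time (0.00294 s) reported above, assuming 100 steps per episode, 1000 episodes, and one FIM computation at the end of every 30th episode. The constants are taken from the text; the script only repeats the arithmetic.

```python
STEP_TIME_S = 0.004      # SAC time per step
FIM_TIME_S = 0.00294     # one FIM computation
STEPS_PER_EPISODE = 100  # 2000 s episode / 20 s per action
FIM_EVERY = 30           # episodes between FIM computations


def training_time(episodes: int, with_ewc: bool) -> dict:
    """Step time, number of FIM computations and FIM overhead for a run."""
    step_total = episodes * STEPS_PER_EPISODE * STEP_TIME_S
    fim_calls = episodes // FIM_EVERY if with_ewc else 0
    overhead = fim_calls * FIM_TIME_S
    return {"steps_s": step_total, "fim_calls": fim_calls,
            "overhead_s": overhead, "total_s": step_total + overhead}


block = training_time(30, with_ewc=True)
run = training_time(1000, with_ewc=True)
print(block["total_s"])                     # about 12.00294
print(run["fim_calls"], run["overhead_s"])  # 33 calls, about 0.097 s
print(f"{100 * run['overhead_s'] / run['steps_s']:.3f}% of step time")
```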

5.3. Training Real Traffic

In this experiment, twelve arrival flows, each belonging to a different traffic category, arrive at a WFQ system of twelve queues. Traffic data from twelve category groups were used to train the agent. The average arrival rates of the twelve sources in these categories are shown in Table 7.

A second set of twelve arrival flows, from the remaining twelve categories, likewise arrives at the twelve-queue WFQ system and was also used to train the agent. The average arrival rates of these twelve sources are shown in Table 8.

The reward function is calculated as follows:

The episode length, buffer size, and total bandwidth are T = 2000 s, , and 1 byte/s, respectively. The agent was trained for 1000 episodes on the twelve-queue system using the SAC with EWC algorithm.

Figure 13 compares the running average reward curves for the twelve-queue simulation and the two real traffic datasets, obtained with SAC with EWC in the WFQ continual-DRL algorithm. The simulation curve converges after 200 episodes, and the reward then fluctuates within a stable range of −2.5 to −2, indicating consistent performance. At the beginning of training, the agent typically explores different assignment strategies (bandwidth weights) for the queues, which causes more fluctuation: the controller explores the possible actions in search of one that yields benefits. As learning progresses, the agent increasingly exploits the learned action probabilities, the reward curve rises, and the policy gradually converges toward the optimal bandwidth allocation, reducing the variability of the reward curve.

For the first twelve categories of the real traffic data, the average reward curve converges after around 200 episodes; it then gradually rises and fluctuates steadily between −2.5 and −1.5, indicating stable performance. For the second twelve categories, the average reward curve converges before 200 episodes and fluctuates between −1.8 and −1, with reduced fluctuation.

Table 9 presents the MSE and MAE values for the twelve-queue simulation scenario, which are used to measure how well the environment supports adaptive training. The MSE is initially 0.8680; it then fluctuates and rises to 1.0482 as the environment is explored, before gradually improving during training. These variations suggest that the model is still learning, adjusting parameters, and refining its predictions. From episode 300 to 700, the error is more stable than in the earlier phase, indicating better convergence. Notably, the MSE drops to 0.5302 at episode 400 and remains low, at approximately 0.5263, through episode 450. Between episodes 400 and 700, the MSE values are highly consistent, indicating that the model's predictions are consistent and accurate; small fluctuations are expected owing to the dynamic nature of the environment. The lowest MSE, 0.4909, is observed at episode 1000, the best value in the run. Over the last 200 episodes, the model maintains high accuracy and stability, as the variation between episodes 750 and 950 remains controlled.

In the MAE column, the MAE is 0.6437 at episode 50 and increases slightly to 0.6765 at episode 100. Such a slight increase is expected while the model adjusts; by episode 300 the MAE stabilizes, indicating that the model has started to learn effectively. The MAE decreases from 0.5754 at episode 400 to 0.5640 at episode 750, indicating that the model is learning to make more accurate predictions, and the fluctuation between episodes 600 and 700 (between about 0.5994 and 0.6057) reflects normal, healthy training behavior. From episode 850 onwards the MAE changes very little (from 0.5543 to 0.5528), reaching its lowest value of 0.5528 at episode 1000.

For the first twelve categories of real traffic on the twelve-queue system, the MSE and MAE metrics show that model stability improves over time. The MSE declines across training, indicating improving predictive accuracy: starting at 0.533 at episode 50, it falls to 0.317 at episode 350, reflecting effective early learning. Between episodes 400 and 700, the MSE fluctuates between 0.322 and 0.356, suggesting a stabilization phase in which the model fine-tunes its parameters. In the final phase, from episode 750 to 1000, the MSE remains close to this level, ending at 0.331, which shows that the model keeps errors low over time. The MAE follows a similar pattern: it starts at 0.574 at episode 50 and decreases to 0.441 at episode 350, indicating successful initial learning. Between episodes 400 and 700, the MAE fluctuates slightly, from 0.445 to 0.468, as the model refines its predictions and handles more nuanced patterns in the data. From episode 750 to 1000, the MAE settles at 0.453, reflecting steady control of the average absolute error.

For the second twelve categories of real traffic on the twelve-queue system, the MSE decreases over training, showing improving predictive accuracy. Starting at 0.788 at episode 50, the MSE declines to 0.511 by episode 350, reflecting effective early-stage learning. Between episodes 400 and 700, the MSE fluctuates within roughly 0.400 to 0.525, suggesting a stabilization stage in which the model fine-tunes its parameters. In the last phase, from episode 750 to 1000, the MSE ends at 0.412, indicating the model's capacity to keep errors low over time. The MAE for the second twelve categories also decreases throughout training: starting at 0.696 at episode 50, it falls to 0.560 at episode 350, indicating successful initial learning. Between episodes 400 and 700, the MAE fluctuates slightly, from 0.499 to 0.571, and from episode 750 to 1000 it ends at 0.505, which highlights the model's steady improvement in minimizing the average absolute error.

In conclusion, the comparison table shows that the twelve-queue simulation has higher MSE and MAE than both real traffic datasets (real traffic 1 and real traffic 2), and that the model generalized well to real-world conditions. The simulation used a G/G/1/K WFQ model of parallel queues with gamma-distributed interarrival and service times to represent complex network traffic; this indicates that the simulation captured realistic traffic variability and system conditions. Thus, the WFQ scheduler implemented in the continual DRL framework demonstrated robust performance, validating its effectiveness.

According to Figure 14, the MSE curve initially rises to 0.8, reflecting prediction error during early adaptation. In the later episodes of training, it decreases and fluctuates steadily within a range of roughly 0.5 to 0.7. This bounded variability indicates that the model has stabilized and maintains reliable performance under dynamic multi-queue conditions, even with the larger number of queues.

As shown in Figure 15, the MAE curve rises to 0.7 early in training, indicating high error. In the later episodes, it drops and stabilizes within a fluctuating range of roughly 0.55 to 0.65. This behavior suggests that the model generalizes while maintaining consistent performance in a dynamic environment.

According to Figure 16, the MSE curve for the twelve-queue system trained on real traffic starts with a high error owing to the complexity of the traffic patterns. It then gradually decreases and stabilizes between 3.0 and 3.5, indicating that the model has effectively learned and adapted to real-world traffic.

In Figure 17, the MAE curve begins with a high error, reflecting large early prediction deviations. As training advances, the error steadily declines and stabilizes between roughly 4.0 and 4.5 with bounded fluctuations, indicating that the model has adapted well to real traffic dynamics and performs robustly.

Regarding Figure 18, the MSE curve for the second real traffic dataset starts high, reflecting initial prediction instability, then gradually decreases and stabilizes within the range of 4.5 to 5.5, showing improved model learning. This trend suggests that the model adapts to more diverse traffic patterns over time; despite the dynamic challenges, the bounded error range suggests reliable convergence and robust performance.

Referring to Figure 19, the MAE curve for the second real traffic dataset initially increases sharply, reflecting poor early prediction accuracy. As training progresses, the error decreases and stabilizes within a narrow range of 0.5 to 0.6. This steady, bounded fluctuation suggests that the model has adapted to the traffic dynamics and performs consistently and robustly under real-world conditions.

Statistical analysis was applied to the bursty real traffic of all categories; after processing, the dataset size is 1,393,777 [33].

The squared coefficient of variation (SCV), $c^2$, of the real traffic data is computed as in [33]:

$$
c^2 = \frac{\sigma^2}{\mu^2}
$$

where $\sigma$ represents the standard deviation and $\mu$ represents the mean. As illustrated in Figure 20, the higher $c^2$ indicates that the arrival patterns of the real traffic data are more unpredictable and random. This high variability reflects the bursty and irregular nature of real traffic.
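A small sketch of the SCV computation, c² = σ²/μ², applied to a list of interarrival times. It uses the population variance, since the text does not say which estimator was used. For reference, exponential interarrivals (a Poisson process) have c² = 1, so values above 1 point to traffic burstier than Poisson. The function name and sample values are illustrative.

```python
import statistics
from typing import Sequence


def scv(values: Sequence[float]) -> float:
    """Squared coefficient of variation: population variance / mean^2."""
    mu = statistics.fmean(values)
    if mu == 0:
        raise ValueError("mean must be non-zero")
    return statistics.pvariance(values, mu) / mu ** 2


regular = [1.0, 1.0, 1.0, 1.0]      # perfectly periodic arrivals
bursty = [0.1, 0.1, 0.1, 0.1, 5.0]  # hypothetical burst followed by a long gap
print(scv(regular), scv(bursty))
```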

To compute the correlation, a copy of the interarrival-time series is shifted by one position, and the correlation coefficient between the original and shifted series is computed for the real traffic using the correlation coefficient equation in [34]. The results in Figure 21 show that the real traffic data have a more dynamic correlation pattern, and the decline of the correlation curves indicates a stronger influence between successive interarrival times. These statistics clearly show the patterns of bursty traffic.
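The shift-and-correlate procedure described above is the lag-k sample autocorrelation. The sketch computes the Pearson correlation between the series and a copy shifted by `lag` positions; evaluating several lags gives a correlation curve. The function names are illustrative, and the exact estimator in [34] may differ in normalization.

```python
import math
import statistics
from typing import List, Sequence


def lag_correlation(x: Sequence[float], lag: int = 1) -> float:
    """Pearson correlation between x[t] and x[t + lag] (original vs shifted copy)."""
    if lag < 1 or lag > len(x) - 2:
        raise ValueError("need 1 <= lag <= len(x) - 2")
    a, b = x[:-lag], x[lag:]
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("series is constant; correlation is undefined")
    return cov / math.sqrt(var_a * var_b)


def correlation_curve(x: Sequence[float], max_lag: int) -> List[float]:
    """Lag-1 ... lag-max_lag correlations, e.g. for plotting."""
    return [lag_correlation(x, k) for k in range(1, max_lag + 1)]


print(lag_correlation([1.0, 2.0, 3.0, 4.0, 5.0]))       # 1.0 (up to rounding)
print(lag_correlation([1.0, 3.0, 1.0, 3.0, 1.0, 3.0]))  # -1.0 (up to rounding)
```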

This paper improved the WFQ-DRL algorithm by combining a continual learning technique with DRL for the WFQ system. The WFQ continual-DRL algorithm was developed by introducing a continual learning method, the EWC technique, which helps overcome catastrophic forgetting by remembering old tasks and selectively slowing down learning on the parameters important for those tasks, thereby improving performance over time; it is combined with an advanced deep reinforcement learning algorithm, SAC. In addition, the evaluation was extended by increasing the number of queues to twelve, to assess the scalability and robustness of the model under higher traffic loads and more complex network conditions. With the aim of maximizing the cumulative reward, this approach achieved an improvement of approximately 76% in performance compared with the baseline WFQ-DRL reward curve in the eight-queue experiment. This demonstrates the effectiveness of our approach in maintaining stable learning and adapting to new tasks while retaining previously learned knowledge. In the second experiment, with twelve queues, the average reward curve shows improved performance, with higher reward and smoother fluctuation, indicating that the approach remains effective and robust in an expanded environment and suits the nature of the queueing system, as it performs dynamic bandwidth allocation control among parallel WFQ queues. The model also achieves a higher reward on real traffic than in the twelve-queue simulation, suggesting that it adapts well to real-world traffic and highlighting its effectiveness under realistic traffic conditions.