Multiple-Parallel Morphological Anti-Aliasing Algorithm Implemented in FPGA

Abstract

1. Introduction

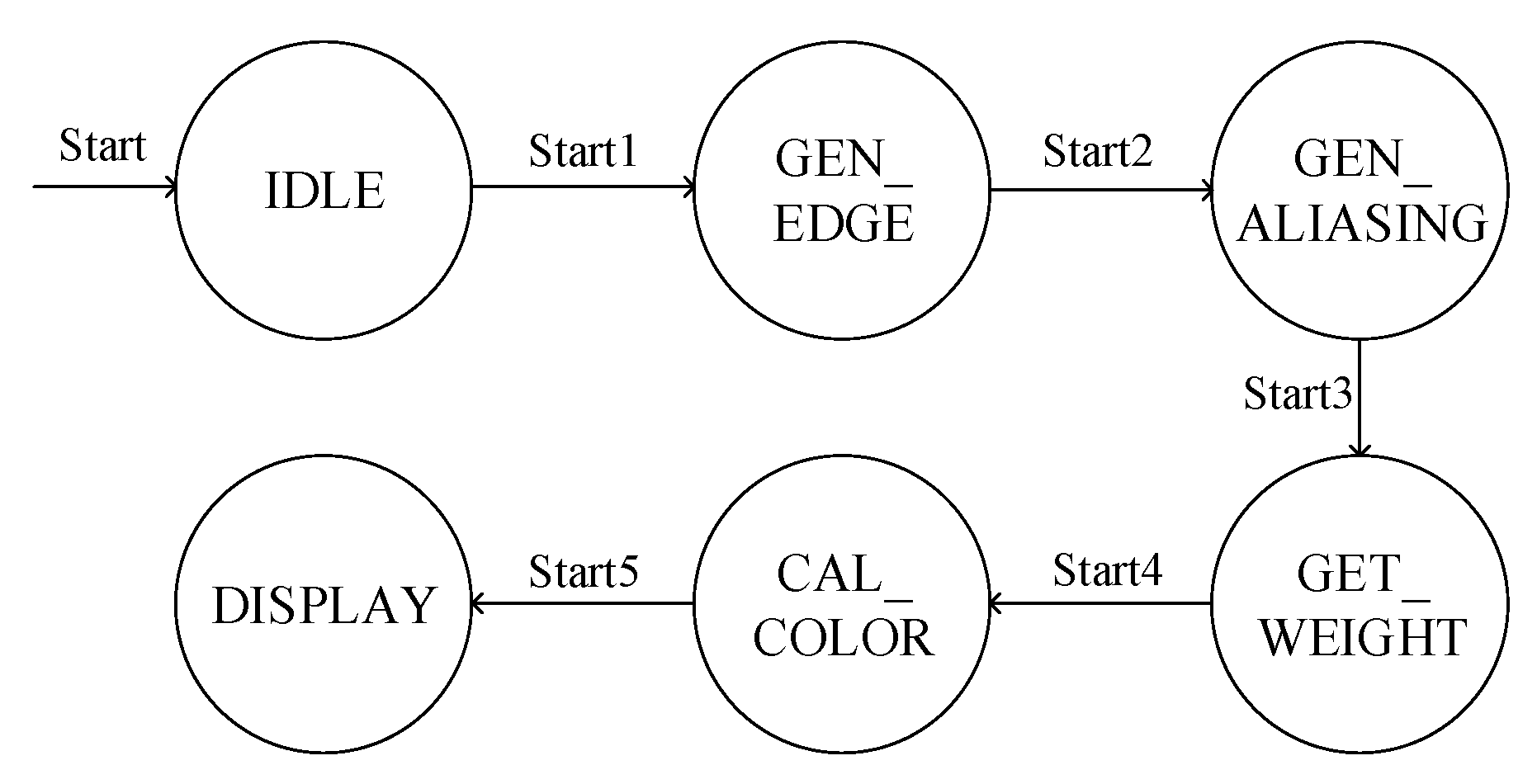

2. Multiple-Parallel Morphological Anti-Aliasing Algorithm

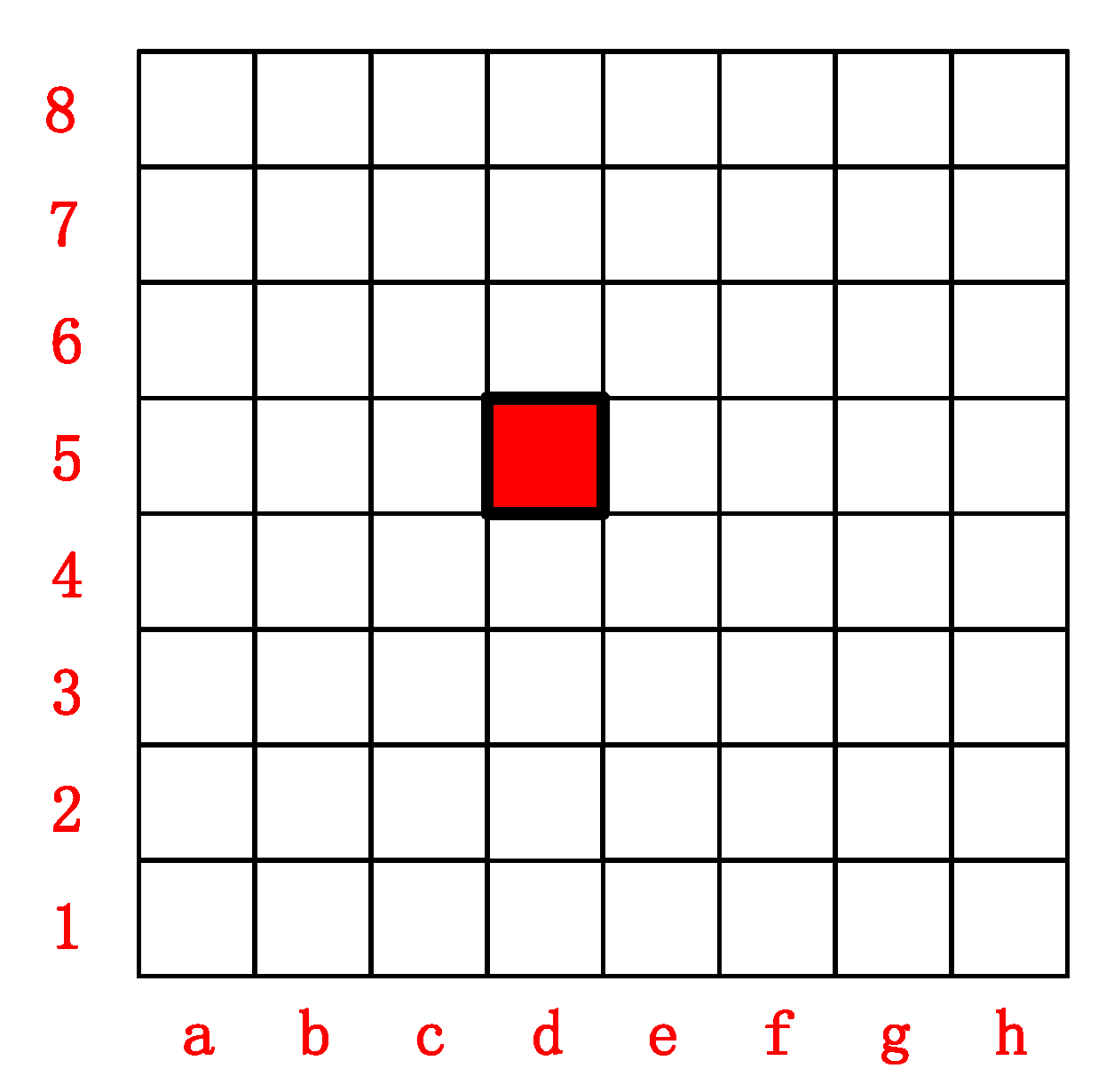

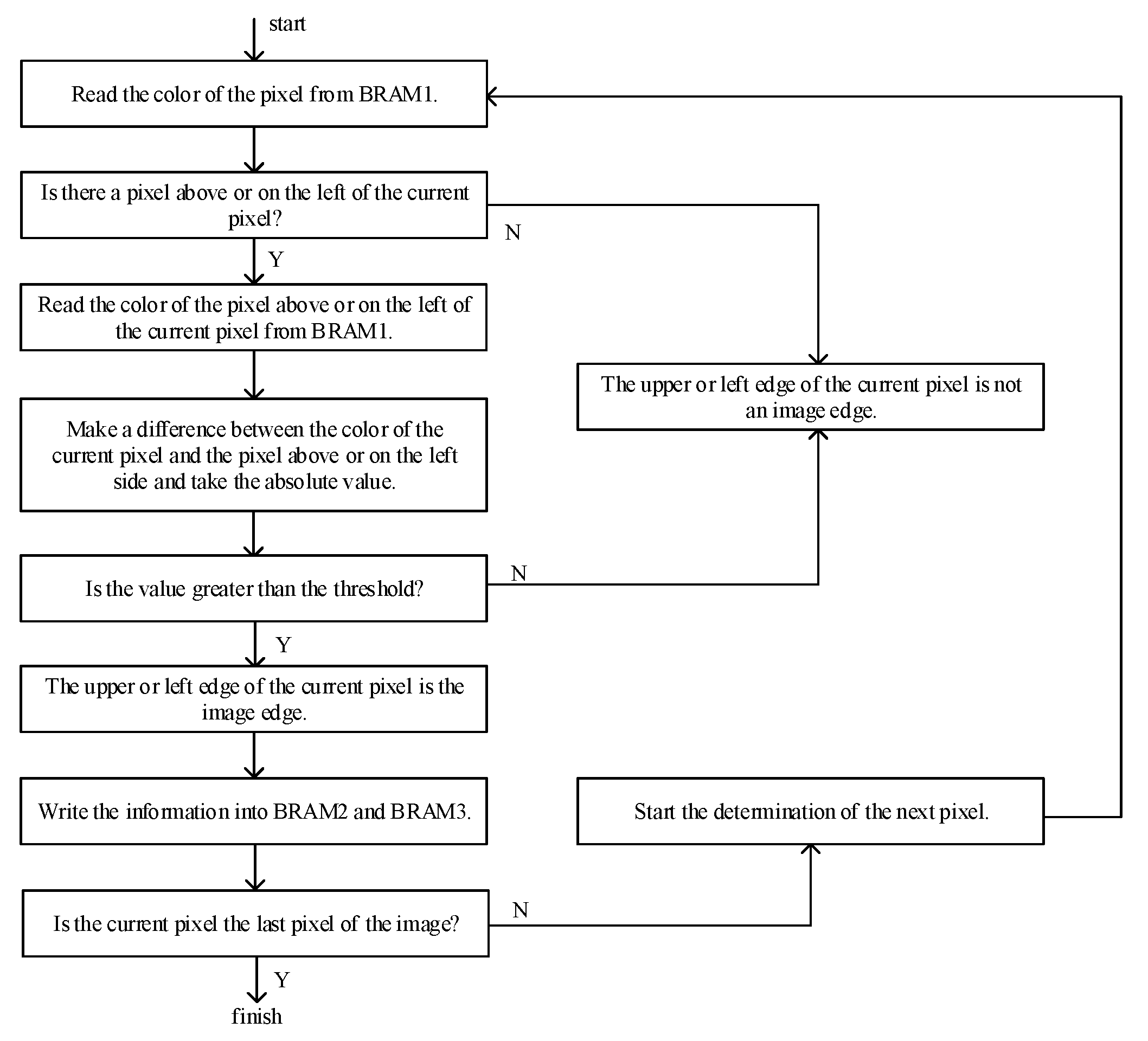

2.1. Edge Detection of the Image

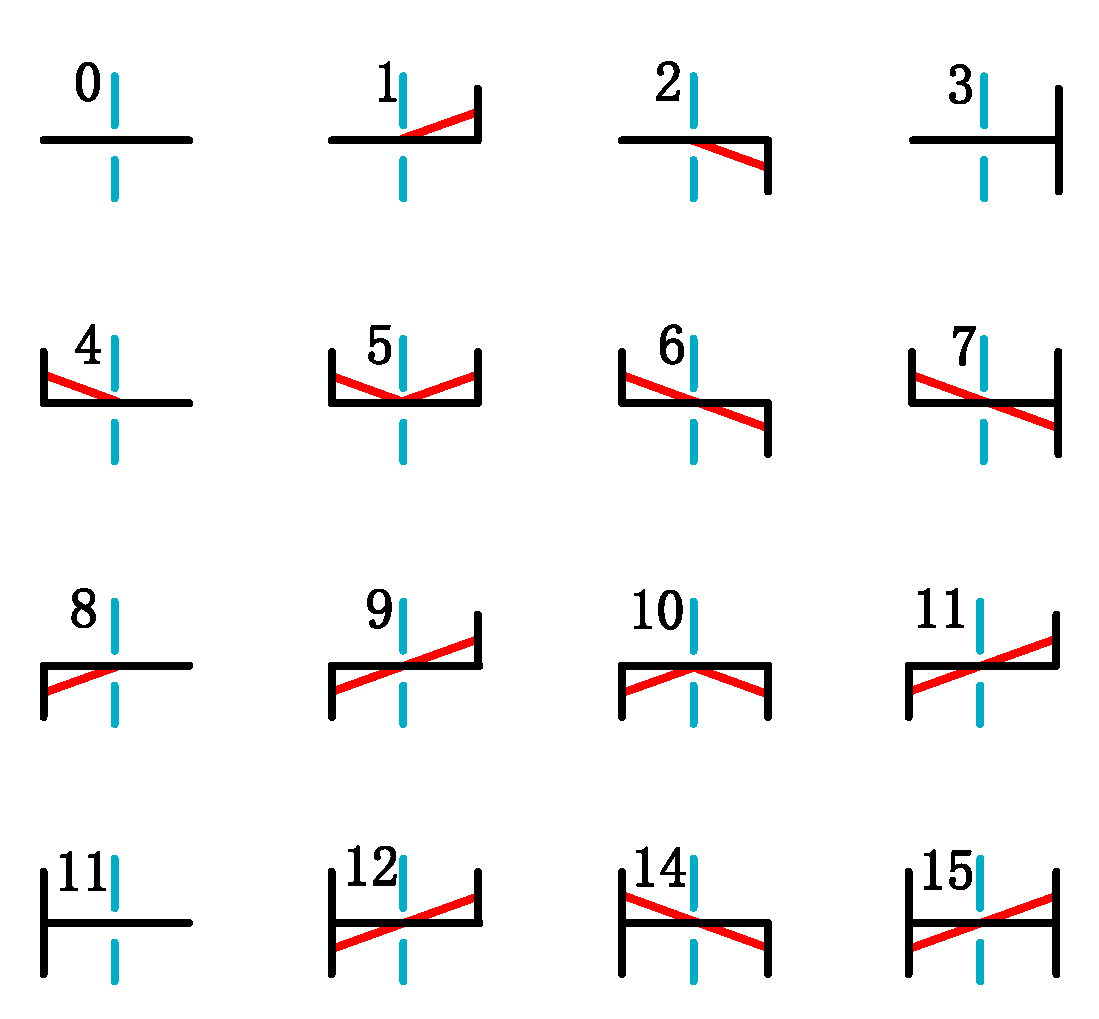

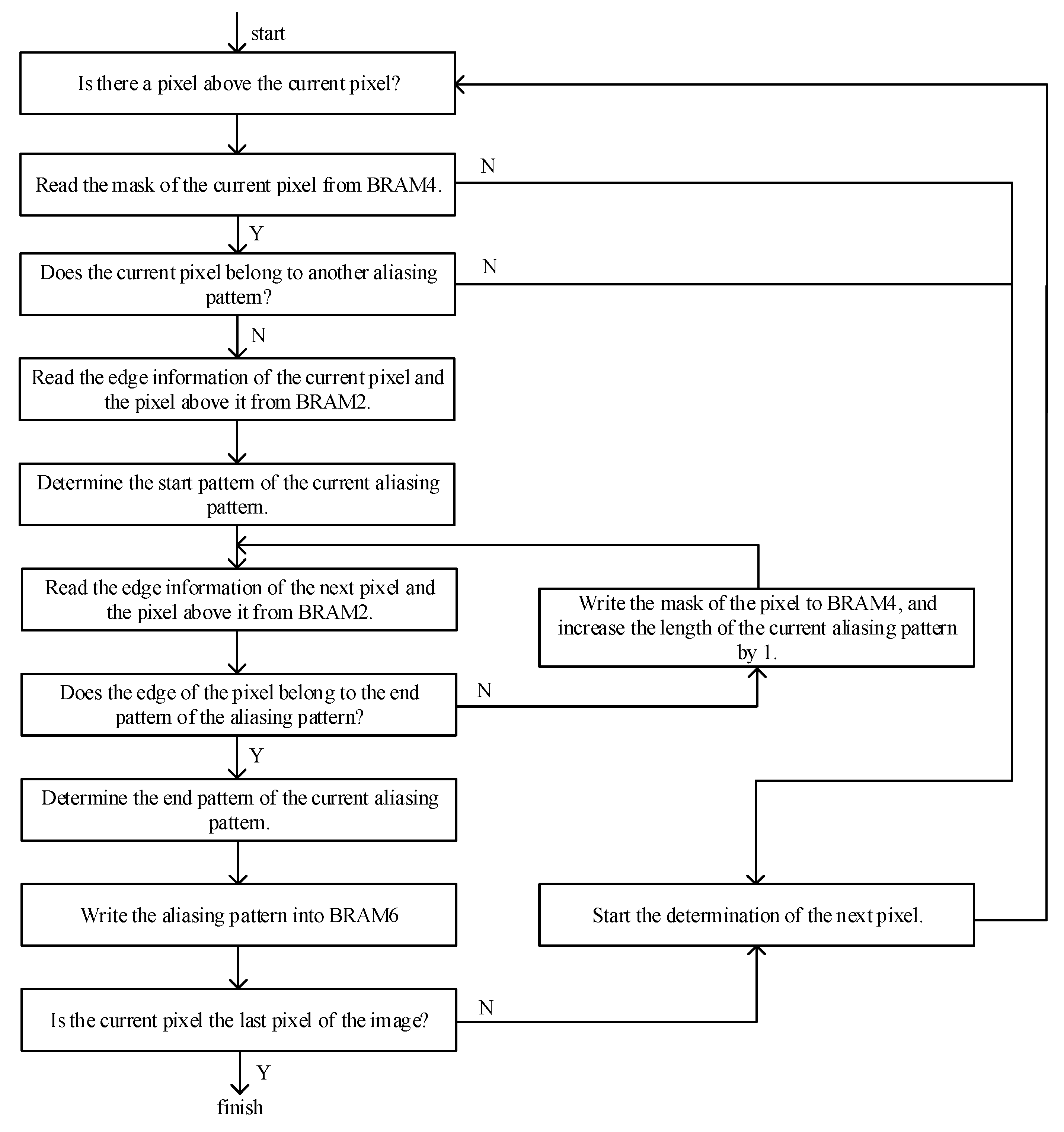

2.2. Classification of the Aliasing Patterns

2.3. Acquisition of the Blend Weight Coefficients

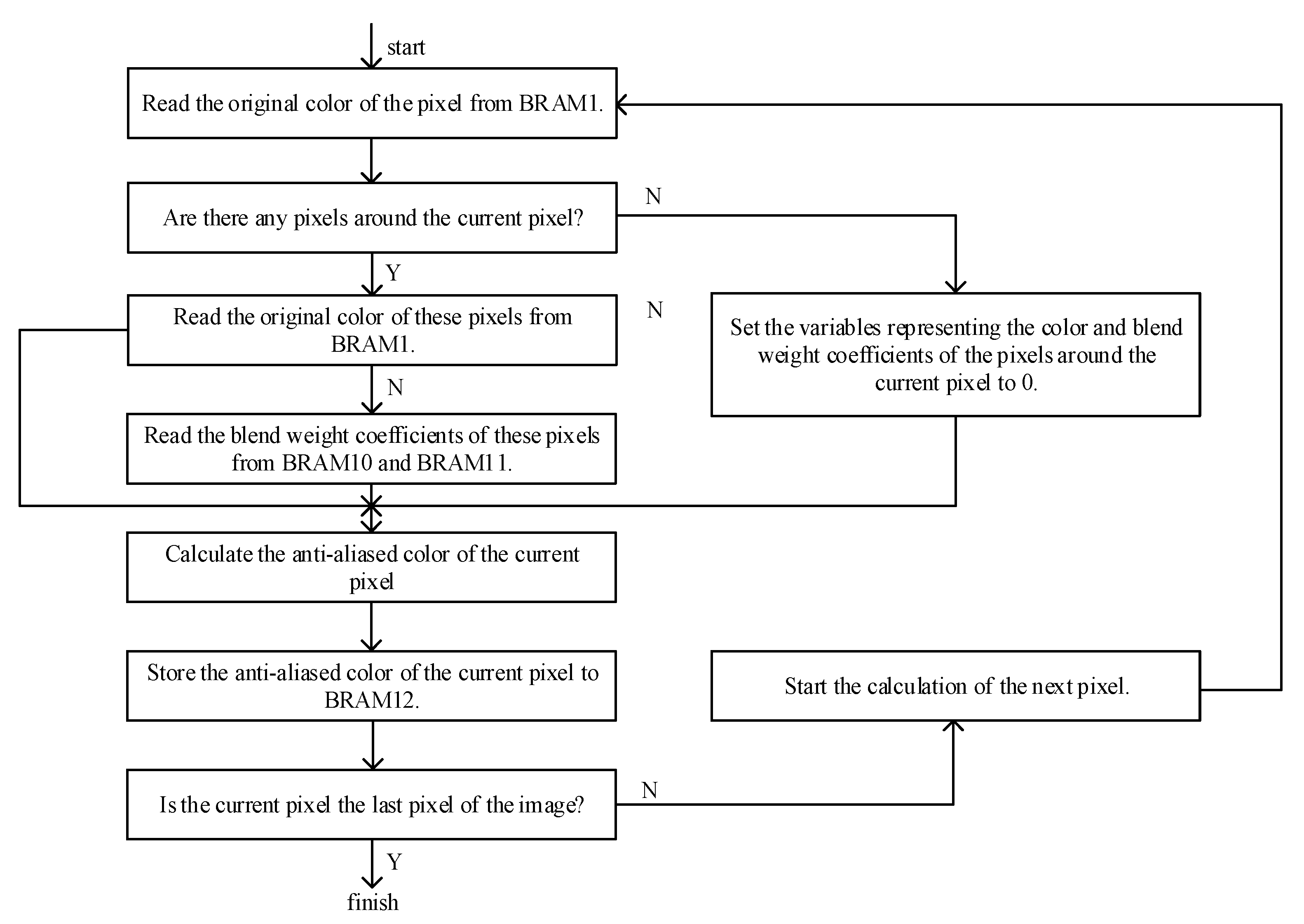

2.4. Calculation of the Anti-Aliased Color

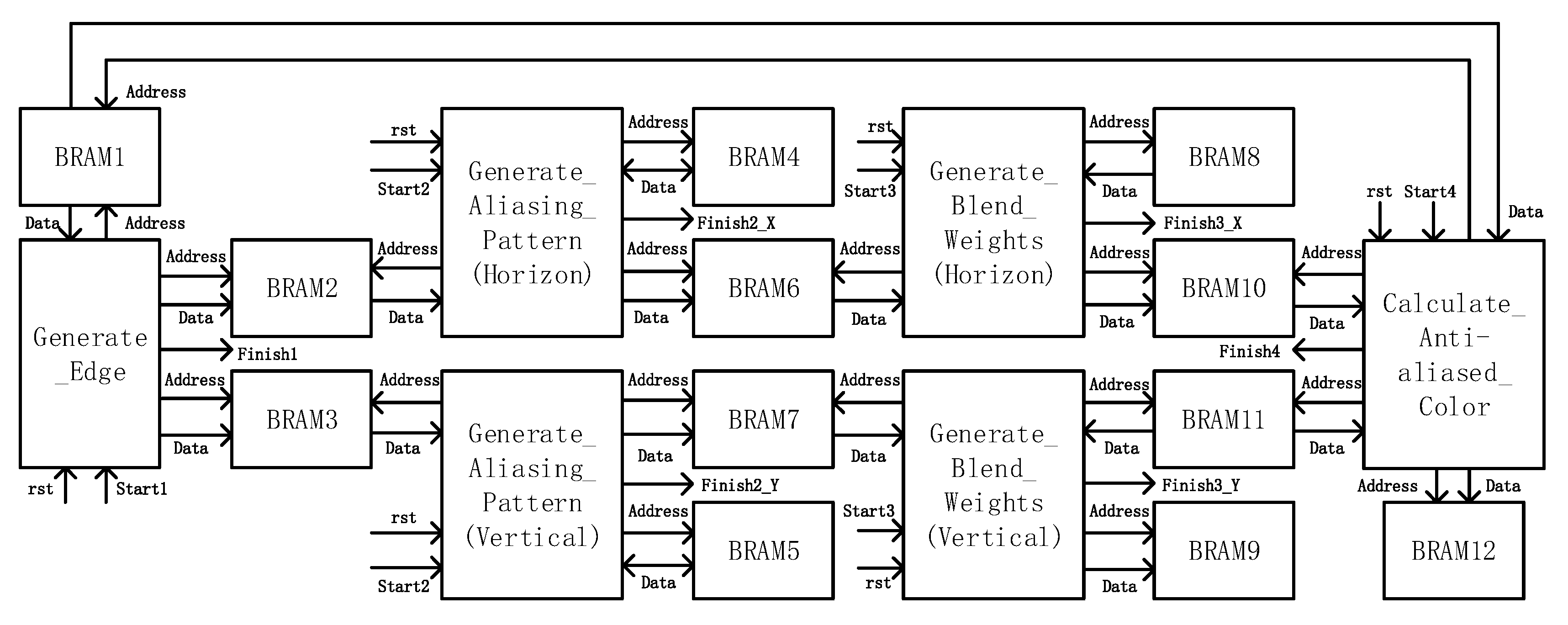

3. Implementation of the Algorithm in FPGA

3.1. Implementation of the Edge Detection of the Image

3.2. Implementation of the Classification of the Aliasing Patterns

3.3. Implementation of the Acquisition of the Blend Weight Coefficients

3.4. Implementation of the Calculation of the Anti-Aliased Color

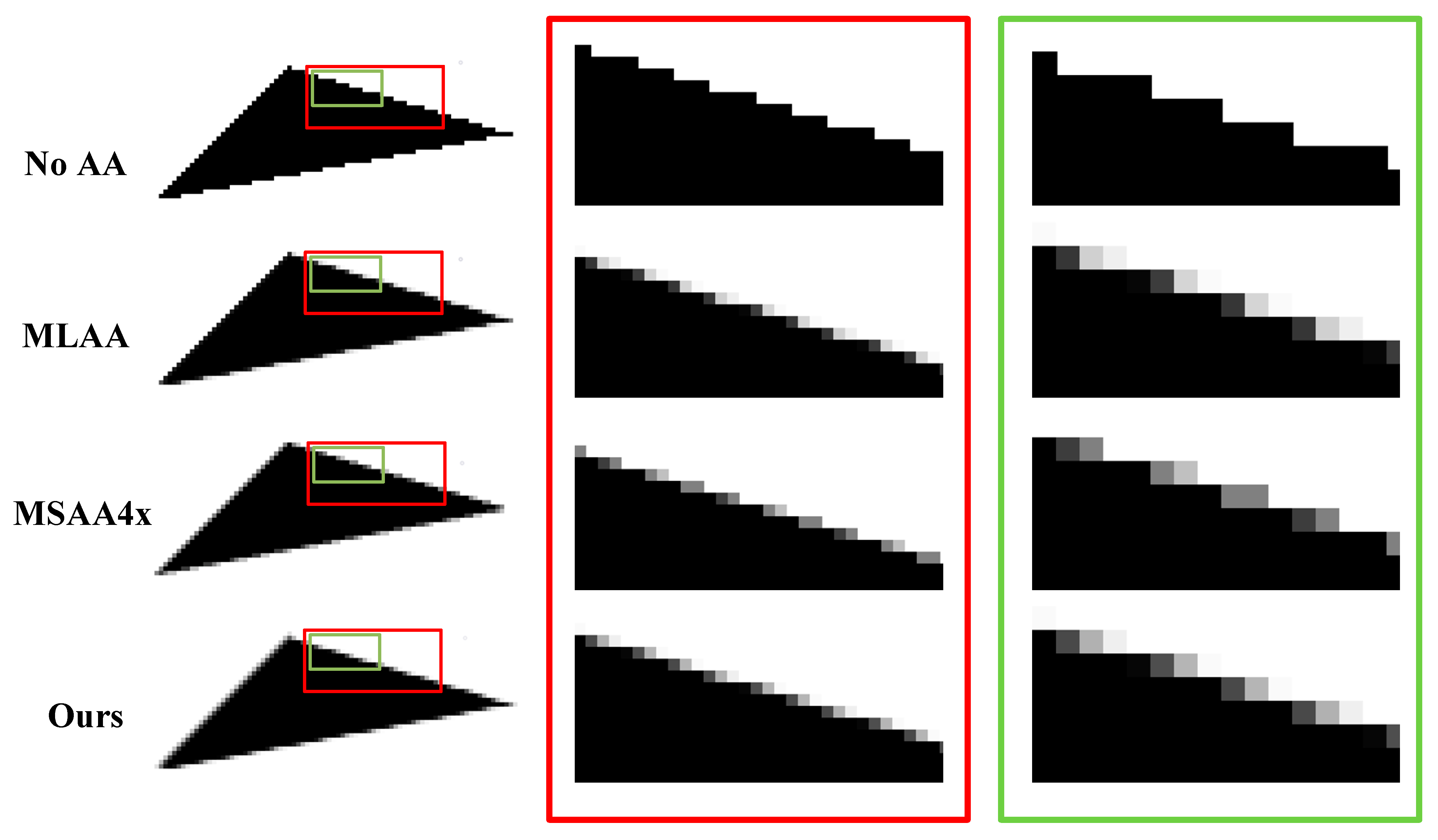

4. Results and Comparisons

4.1. Anti-Aliasing Performance

4.2. Timing Performance

4.3. Memory Size

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Size of the Image | Ours | MLAA |
|---|---|---|
| Height = 100, Width = 100 | 3216.3 µs | 11,951.2 µs |
| Height = 150, Width = 150 | 7214.8 µs | 26,817.2 µs |
| Height = 200, Width = 200 | 12,813.3 µs | 47,633.3 µs |

| Size of the Image | Ours | MLAA |
|---|---|---|
| Height = 100, Width = 100 | 100 Kbit | 1600 Kbit |
| Height = 150, Width = 150 | 121 Kbit | 5625 Kbit |
| Height = 200, Width = 200 | 144 Kbit | 10,000 Kbit |
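The two tables above can be compared more directly by taking the ratio of the MLAA column to the "Ours" column for each image size. The following is a minimal sketch of that arithmetic, assuming (as the units suggest) that the first table reports processing time and the second reports memory size. The values are copied from the tables; the names `timing_us`, `memory_kbit`, and `ratios` are illustrative and do not come from the article.

```python
# Values copied from the two tables above, keyed by (height, width).
# Each entry is (ours, mlaa). All names here are illustrative assumptions.
timing_us = {
    (100, 100): (3216.3, 11951.2),
    (150, 150): (7214.8, 26817.2),
    (200, 200): (12813.3, 47633.3),
}
memory_kbit = {
    (100, 100): (100, 1600),
    (150, 150): (121, 5625),
    (200, 200): (144, 10000),
}


def ratios(table):
    """Return the MLAA / Ours ratio for each image size in the table."""
    return {size: mlaa / ours for size, (ours, mlaa) in table.items()}


time_ratio = ratios(timing_us)
memory_ratio = ratios(memory_kbit)

for (height, width) in timing_us:
    size = (height, width)
    print(
        f"{height}x{width}: time ratio {time_ratio[size]:.2f}, "
        f"memory ratio {memory_ratio[size]:.2f}"
    )
```

With the tabulated values, the time ratio stays close to 3.7 for all three image sizes, while the memory ratio grows with image size, from 16 at 100 × 100 to roughly 69 at 200 × 200.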

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ai, D.; Xue, J.; Wang, M. Multiple-Parallel Morphological Anti-Aliasing Algorithm Implemented in FPGA. Telecom 2022, 3, 526-540. https://doi.org/10.3390/telecom3030029
